
ELK

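1. Introduction to ELK

Background: as the business grows, the number of servers grows with it, and so does the volume of logs (access logs, application logs, error logs and so on). Developers have to log in to individual servers to read logs when troubleshooting, which is inconvenient, and operations staff who need statistics also have to analyze logs on each server, which is tedious.

The most common requirements for logs are collection, storage, search and display. The open source community has a matching project for each: Logstash (collection), Elasticsearch (storage and search) and Kibana (display). The combination of the three is called the ELK Stack, that is, the Elasticsearch, Logstash and Kibana technology stack.

From version 5.0 on, the ELK Stack became the Elastic Stack: Elastic Stack == ELK Stack + Beats.

The ELK Stack consists of Elasticsearch, Logstash and Kibana:

Elasticsearch is a search engine used to store, search and analyze logs. It is distributed: it scales horizontally, nodes discover each other automatically, and indices are sharded automatically.

Logstash collects logs, parses them into JSON and hands them to Elasticsearch.

Kibana is a data visualization component that presents the processed results in a web interface.

Beats is a family of lightweight data shippers; here it is used as a log collector. The family had five members at the time of writing and keeps growing. Early ELK architectures used Logstash to both collect and parse logs, but Logstash is comparatively heavy on memory, CPU and I/O. Compared with Logstash, the CPU and memory used by Beats are almost negligible.

X-Pack is an extension pack for the Elastic Stack that bundles security, alerting, monitoring, reporting and graph features. It is a paid product.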

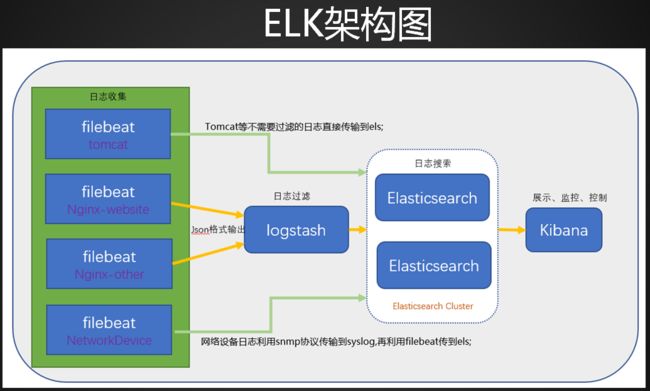

ELK architecture diagram

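2. Preparing for the ELK installation

Roles assigned to the machines in the cluster:

| IP | hostname | node role | openjdk | elasticsearch | kibana | logstash | beats |
|---|---|---|---|---|---|---|---|
| 192.168.112.150 | ying04 | master node | install | install | install | - | - |
| 192.168.112.151 | ying05 | data node | install | install | - | install | - |
| 192.168.112.152 | ying06 | data node | install | install | - | - | install |

Add the IP address and hostname of all three machines to /etc/hosts: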

[root@ying04 ~]# vim /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.112.150 ying04

192.168.112.151 ying05

192.168.112.152 ying06
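A quick way to confirm that every node name made it into the hosts file is a small shell check. This is only a sketch: `missing_hosts` is a made-up helper name, not part of any package, and it assumes the three hostnames used in this article.

```shell
# missing_hosts FILE NAME... : print each NAME that has no entry in FILE.
# (Hypothetical helper for illustration.)
missing_hosts() {
    file=$1; shift
    for name in "$@"; do
        # Look for the name as a whole field after the IP column,
        # skipping comment lines; print the name when it is not found.
        awk -v n="$name" '
            /^[ \t]*#/ { next }
            { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
            END { exit (found ? 0 : 1) }
        ' "$file" || echo "$name"
    done
}

# Usage on each node (no output means all three names are present):
#   missing_hosts /etc/hosts ying04 ying05 ying06
```

Running it on all three machines before starting elasticsearch catches a forgotten entry early, since the nodes later find each other by these names.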

All three machines need openjdk installed:

[root@ying04 ~]# java -version        # openjdk is not installed yet

-bash: java: command not found

[root@ying06 ~]# yum install -y java-1.8.0-openjdk        # install openjdk

[root@ying04 ~]# java -version

openjdk version "1.8.0_181"

OpenJDK Runtime Environment (build 1.8.0_181-b13)

OpenJDK 64-Bit Server VM (build 25.181-b13, mixed mode)

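3. Configuring elasticsearch

Install elasticsearch on all three machines.

Following the official documentation, create the elastic yum repository file; elasticsearch can then be installed with yum.

[root@ying04 ~]# rpm --import https://artifacts.elastic.co/GPG-KEY-elasticsearch        # import the official signing key

[root@ying04 ~]# vim /etc/yum.repos.d/elastic.repo        # create the elastic yum repository file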

[elasticsearch-6.x]

name=Elasticsearch repository for 6.x packages

baseurl=https://artifacts.elastic.co/packages/6.x/yum

gpgcheck=1

gpgkey=https://artifacts.elastic.co/GPG-KEY-elasticsearch

enabled=1

autorefresh=1

type=rpm-md

[root@ying04 ~]# yum install -y elasticsearch

### NOT starting on installation, please execute the following statements to configure elasticsearch service to start automatically using systemd

sudo systemctl daemon-reload

sudo systemctl enable elasticsearch.service

### You can start elasticsearch service by executing

sudo systemctl start elasticsearch.service

Created elasticsearch keystore in /etc/elasticsearch

Verifying : elasticsearch-6.4.2-1.noarch 1/1

Installed:

elasticsearch.noarch 0:6.4.2-1

Complete!

[root@ying04 ~]# echo $?

0

Edit the configuration file on ying04 as follows:

[root@ying04 ~]# vim /etc/elasticsearch/elasticsearch.yml

# ---------------------------------- Cluster -----------------------------------

#cluster.name: my-application

cluster.name: fengstory        # name of the cluster

# ------------------------------------ Node ------------------------------------

#node.name: node-1

node.name: ying04        # name of this node

#node.attr.rack: r1

node.master: true        # this machine is the master node

node.data: false        # this machine is not a data node

# ---------------------------------- Network -----------------------------------

#network.host: 192.168.0.1

network.host: 192.168.112.150        # IP address to bind to, that is, the address to listen on

#http.port: 9200

http.port: 9200        # HTTP port

# --------------------------------- Discovery ----------------------------------

#discovery.zen.ping.unicast.hosts: ["host1", "host2"]

discovery.zen.ping.unicast.hosts: ["ying04", "ying05", "ying06"]        # hosts that take part in the cluster

Edit the configuration file on ying05 as follows:

[root@ying05 ~]# vim /etc/elasticsearch/elasticsearch.yml

# ---------------------------------- Cluster -----------------------------------

#cluster.name: my-application

cluster.name: fengstory        # name of the cluster

# ------------------------------------ Node ------------------------------------

#node.name: node-1

node.name: ying05        # name of this node

#node.attr.rack: r1

node.master: false        # ying05 is not a master node

node.data: true        # ying05 is a data node, so this is true

# ---------------------------------- Network -----------------------------------

#network.host: 192.168.0.1

network.host: 192.168.112.151        # IP address to bind to, that is, the address to listen on

#http.port: 9200

http.port: 9200        # HTTP port

# --------------------------------- Discovery ----------------------------------

#discovery.zen.ping.unicast.hosts: ["host1", "host2"]

discovery.zen.ping.unicast.hosts: ["ying04", "ying05", "ying06"]        # hosts that take part in the cluster

Edit the configuration file on ying06 as follows:

[root@ying06 ~]# vim /etc/elasticsearch/elasticsearch.yml

# ---------------------------------- Cluster -----------------------------------

#cluster.name: my-application

cluster.name: fengstory        # name of the cluster

# ------------------------------------ Node ------------------------------------

#node.name: node-1

node.name: ying06        # name of this node

#node.attr.rack: r1

node.master: false        # ying06 is not a master node

node.data: true        # ying06 is a data node, so this is true

# ---------------------------------- Network -----------------------------------

#network.host: 192.168.0.1

network.host: 192.168.112.152        # IP address to bind to, that is, the address to listen on

#http.port: 9200

http.port: 9200        # HTTP port

# --------------------------------- Discovery ----------------------------------

#discovery.zen.ping.unicast.hosts: ["host1", "host2"]

discovery.zen.ping.unicast.hosts: ["ying04", "ying05", "ying06"]        # hosts that take part in the cluster
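Since the three files differ only in a few values, it can help to read the effective settings back before starting the service. The following is a rough sketch; `es_setting` is a hypothetical helper, and it only understands the flat `key: value` lines used in this article, not nested YAML.

```shell
# es_setting FILE KEY : print the value of an uncommented "KEY: value" line.
# (Hypothetical helper; handles only flat top-level keys.)
es_setting() {
    awk -v k="$2" '
        index($0, k ":") == 1 {               # line must start with "KEY:"
            v = substr($0, length(k) + 2)     # text after the colon
            sub(/[ \t]+#.*$/, "", v)          # drop a trailing "# comment"
            gsub(/^[ \t]+|[ \t]+$/, "", v)    # trim surrounding blanks
            print v
        }
    ' "$1"
}

# Usage on a node:
#   es_setting /etc/elasticsearch/elasticsearch.yml node.master
#   es_setting /etc/elasticsearch/elasticsearch.yml node.data
```

An empty result means the key is missing or misspelled in the file, which is worth fixing before the first start.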

Start the elasticsearch service on all three machines:

[root@ying04 ~]# systemctl start elasticsearch

If installation and configuration are correct, each machine now listens on ports 9200 and 9300:

[root@ying04 ~]# ps aux |grep elastic

elastic+ 1163 79.7 28.6 1550360 538184 ? Ssl 17:05 0:03 /bin/java -Xms1g -Xmx1g -XX:+UseConcMarkSweepGC -XX:CMSInitiatingOccupancyFraction=75 -XX:+UseCMSInitiatingOccupancyOnly -XX:+AlwaysPreTouch -Xss1m -Djava.awt.headless=true -Dfile.encoding=UTF-8 -Djna.nosys=true -XX:-OmitStackTraceInFastThrow -Dio.netty.noUnsafe=true -Dio.netty.noKeySetOptimization=true -Dio.netty.recycler.maxCapacityPerThread=0 -Dlog4j.shutdownHookEnabled=false -Dlog4j2.disable.jmx=true -Djava.io.tmpdir=/tmp/elasticsearch.uXgx3jDC -XX:+HeapDumpOnOutOfMemoryError -XX:HeapDumpPath=/var/lib/elasticsearch -XX:ErrorFile=/var/log/elasticsearch/hs_err_pid%p.log -XX:+PrintGCDetails -XX:+PrintGCDateStamps -XX:+PrintTenuringDistribution -XX:+PrintGCApplicationStoppedTime -Xloggc:/var/log/elasticsearch/gc.log -XX:+UseGCLogFileRotation -XX:NumberOfGCLogFiles=32 -XX:GCLogFileSize=64m -Des.path.home=/usr/share/elasticsearch -Des.path.conf=/etc/elasticsearch -Des.distribution.flavor=default -Des.distribution.type=rpm -cp /usr/share/elasticsearch/lib/* org.elasticsearch.bootstrap.Elasticsearch -p /var/run/elasticsearch/elasticsearch.pid --quiet

root 1207 0.0 0.0 112720 984 pts/0 R+ 17:05 0:00 grep --color=auto elastic

[root@ying04 ~]# netstat -lnpt |grep java

tcp6 0 0 192.168.112.150:9200 :::* LISTEN 1163/java

tcp6 0 0 192.168.112.150:9300 :::* LISTEN 1163/java
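The same check can be scripted so it is easy to repeat on every node. This is a sketch only: the function names are invented for illustration, and the parsing assumes the `netstat -lnpt` column layout shown above. Port 9200 serves the HTTP API and 9300 is used for communication between nodes.

```shell
# listening_ports : read `netstat -lnpt` output on stdin and print the
# listening TCP ports, one per line, sorted and without duplicates.
listening_ports() {
    awk '$1 ~ /^tcp/ && $6 == "LISTEN" { n = split($4, a, ":"); print a[n] }' | sort -n -u
}

# es_ports_ok : succeed only if both 9200 and 9300 are listening.
es_ports_ok() {
    ports=$(listening_ports)
    printf '%s\n' "$ports" | grep -qx 9200 &&
        printf '%s\n' "$ports" | grep -qx 9300
}

# Usage:
#   netstat -lnpt | es_ports_ok && echo "elasticsearch is listening"
```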

4. Inspecting elasticsearch with curl

Cluster health check:

[root@ying04 ~]# curl '192.168.112.150:9200/_cluster/health?pretty'

{

"cluster_name" : "fengstory",

"status" : "green", //green means the cluster is healthy

"timed_out" : false, //the request did not time out

"number_of_nodes" : 3, //3 nodes

"number_of_data_nodes" : 2, //2 data nodes

"active_primary_shards" : 0,

"active_shards" : 0,

"relocating_shards" : 0,

"initializing_shards" : 0,

"unassigned_shards" : 0,

"delayed_unassigned_shards" : 0,

"number_of_pending_tasks" : 0,

"number_of_in_flight_fetch" : 0,

"task_max_waiting_in_queue_millis" : 0,

"active_shards_percent_as_number" : 100.0

}
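For scripted checks only the status field usually matters: green means every shard is allocated, yellow means all primary shards are allocated but at least one replica is not, and red means at least one primary shard is unallocated. A minimal sketch for pulling that field out follows; `health_status` is a made-up name, and plain sed is used only to avoid extra dependencies (a JSON-aware tool would be more robust).

```shell
# health_status : read the /_cluster/health JSON on stdin and print the
# value of its "status" field (green, yellow or red).
health_status() {
    sed -n 's/.*"status"[ ]*:[ ]*"\([a-z]*\)".*/\1/p' | head -n 1
}

# Usage:
#   curl -s '192.168.112.150:9200/_cluster/health?pretty' | health_status
```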

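View the detailed cluster state. Piping the output to head keeps only the first ten lines; the curl error (23) at the end appears because head closes the pipe before curl has finished writing, and it is harmless here.

[root@ying04 ~]# curl '192.168.112.150:9200/_cluster/state?pretty' |head        # detailed cluster state, first 10 lines only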

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

0 0 0 0 0 0 0 0 --:--:-- --:--:-- --:--:-- 0{

"cluster_name" : "fengstory", //cluster name

"compressed_size_in_bytes" : 9577, //the full output is large, several thousand lines

"cluster_uuid" : "5pI8vvn0RXWBmGmj7Lj54A",

"version" : 5,

"state_uuid" : "Mc803-QnRQ-pkw4UWC7Gqw",

"master_node" : "0nBvsj3DTTmYSdGyiI1obg",

"blocks" : { },

"nodes" : {

"-gLGPb6tTEecUPPhlUlUuA" : {

62 126k 62 81830 0 0 498k 0 --:--:-- --:--:-- --:--:-- 502k

curl: (23) Failed writing body (90 != 16384)

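5. Installing kibana

Note: kibana only needs to be installed on ying04 (192.168.112.150).

The yum repository is already set up, so kibana can be installed directly with yum:

[root@ying04 ~]# yum install -y kibana

Edit the kibana configuration file, /etc/kibana/kibana.yml:

[root@ying04 ~]# vim /etc/kibana/kibana.yml        # add the settings below; lines starting with # are the shipped examples, and each setting is written right under its example to keep the file tidy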

#server.port: 5601

server.port: 5601

#server.host: "localhost"

server.host: 192.168.112.150        # listen only on 192.168.112.150

#elasticsearch.url: "http://localhost:9200"

elasticsearch.url: "http://192.168.112.150:9200"

#logging.dest: stdout

logging.dest: /var/log/kibana.log        # path of the log file

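Because the kibana configuration points logging at a file, that file has to exist and be writable by kibana. Here it is created and given mode 777: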

[root@ying04 ~]# touch /var/log/kibana.log; chmod 777 /var/log/kibana.log

[root@ying04 ~]# ls -l /var/log/kibana.log

-rwxrwxrwx 1 root root 10075 10月 13 18:25 /var/log/kibana.log
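Mode 777 works but leaves the log writable by every user on the machine. A narrower alternative, sketched here as an assumption rather than what the article ran, is to hand the file to the account the service runs under. `make_log_file` is a hypothetical helper name.

```shell
# make_log_file PATH OWNER MODE : create PATH if needed, then set its
# owner and permission bits. (Hypothetical helper for illustration.)
make_log_file() {
    touch "$1" && chown "$2" "$1" && chmod "$3" "$1"
}

# Usage (as root), assuming the service account is named "kibana":
#   make_log_file /var/log/kibana.log kibana 640
```

Whether the account is really called kibana can be confirmed from the first column of the `ps aux |grep kibana` output once the service is running.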

Start the kibana service, then check for its process and for port 5601:

[root@ying04 ~]# systemctl start kibana

[root@ying04 ~]# ps aux |grep kibana

kibana 1968 25.2 6.8 1076360 128712 ? Rsl 18:24 0:06 /usr/share/kibana/bin/../node/bin/node --no-warnings /usr/share/kibana/bin/../src/cli -c /etc/kibana/kibana.yml

root 1980 5.0 0.0 112720 984 pts/0 R+ 18:24 0:00 grep --color=auto kibana

[root@ying04 ~]# netstat -lnpt

Active Internet connections (only servers)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

tcp 0 0 0.0.0.0:111 0.0.0.0:* LISTEN 536/rpcbind

tcp 0 0 0.0.0.0:80 0.0.0.0:* LISTEN 966/nginx: master p

tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN 820/sshd

tcp 0 0 127.0.0.1:25 0.0.0.0:* LISTEN 1031/master

tcp 0 0 192.168.112.150:5601 0.0.0.0:* LISTEN 1968/node //port 5601 is listening

tcp6 0 0 :::111 :::* LISTEN 536/rpcbind

tcp6 0 0 192.168.112.150:9200 :::* LISTEN 1870/java

tcp6 0 0 192.168.112.150:9300 :::* LISTEN 1870/java

tcp6 0 0 :::22 :::* LISTEN 820/sshd

tcp6 0 0 ::1:25 :::* LISTEN 1031/master

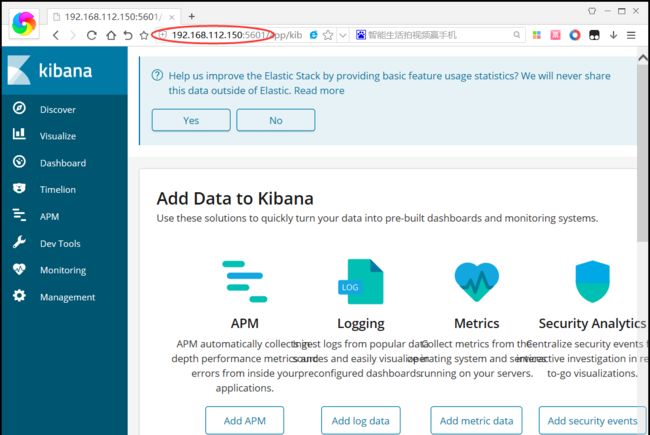

Now open http://192.168.112.150:5601 in a browser.

If the page shown above is displayed, the configuration works.

6. Installing logstash

logstash only needs to be installed on ying05 (192.168.112.151):

[root@ying05 ~]# yum install -y logstash

The /etc/logstash/conf.d/ directory holds the pipeline configuration files that define which logs to collect:

[root@ying05 ~]# ls /etc/logstash/

conf.d jvm.options log4j2.properties logstash-sample.conf logstash.yml pipelines.yml startup.options

[root@ying05 ~]# ls /etc/logstash/conf.d/        # custom configuration files must be placed here to be loaded

First create syslog.conf to collect the system logs:

[root@ying05 ~]# vim /etc/logstash/conf.d/syslog.conf

input {        # input section

syslog {

type => "system-syslog"        # log type tag

port => 10514        # port to listen on

}

}

output {        # output section

stdout {        # print events to standard output

codec => rubydebug

}

}

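Check that the configuration is valid; it is correct if the output says OK:

./logstash --path.settings /etc/logstash/ -f /etc/logstash/conf.d/syslog.conf --config.test_and_exit

Explanation:

- --path.settings /etc/logstash/ : the directory holding the logstash settings files; logstash looks for them there;

- -f /etc/logstash/conf.d/syslog.conf : the pipeline configuration file to load;

- --config.test_and_exit : check the configuration and exit; without this flag logstash does not exit but starts up with the given configuration;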
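Once conf.d holds more than one file, the same check can be looped over all of them. The sketch below uses only the three options explained above; `check_pipelines` is a hypothetical wrapper, and it relies on logstash returning a non-zero exit status when validation fails. Each run starts a JVM, so expect a few seconds per file.

```shell
# check_pipelines BIN DIR : run the syntax check on every *.conf file in
# DIR using the logstash binary BIN. Returns non-zero if any file fails.
check_pipelines() {
    bin=$1 dir=$2 rc=0
    for conf in "$dir"/*.conf; do
        [ -e "$conf" ] || continue        # DIR contains no .conf files
        if "$bin" --path.settings /etc/logstash/ -f "$conf" --config.test_and_exit >/dev/null 2>&1; then
            echo "OK   $conf"
        else
            echo "FAIL $conf"
            rc=1
        fi
    done
    return "$rc"
}

# Usage:
#   check_pipelines /usr/share/logstash/bin/logstash /etc/logstash/conf.d
```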

[root@ying05 ~]# cd /usr/share/logstash/bin

[root@ying05 bin]# ls

benchmark.sh dependencies-report logstash logstash-keystore logstash.lib.sh logstash-plugin.bat pqrepair setup.bat

cpdump ingest-convert.sh logstash.bat logstash-keystore.bat logstash-plugin pqcheck ruby system-install

[root@ying05 bin]# ./logstash --path.settings /etc/logstash/ -f /etc/logstash/conf.d/syslog.conf --config.test_and_exit

Sending Logstash logs to /var/log/logstash which is now configured via log4j2.properties

[2018-10-13T19:06:58,327][INFO ][logstash.setting.writabledirectory] Creating directory {:setting=>"path.queue", :path=>"/var/lib/logstash/queue"}

[2018-10-13T19:06:58,337][INFO ][logstash.setting.writabledirectory] Creating directory {:setting=>"path.dead_letter_queue", :path=>"/var/lib/logstash/dead_letter_queue"}

[2018-10-13T19:06:58,942][WARN ][logstash.config.source.multilocal] Ignoring the 'pipelines.yml' file because modules or command line options are specified

Configuration OK

[2018-10-13T19:07:01,595][INFO ][logstash.runner ] Using config.test_and_exit mode. Config Validation Result: OK. Exiting Logstash

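Edit the rsyslog configuration file:

[root@ying05 bin]# vim /etc/rsyslog.conf

#### RULES ####

# *.* selects all logs; @@ forwards them over TCP to the logstash syslog input on ying05

*.* @@192.168.112.151:10514

Restart rsyslog: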

[root@ying05 bin]# systemctl restart rsyslog
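In an rsyslog forwarding rule the prefix before the host selects the transport: `@@` sends over TCP and a single `@` sends over UDP. The sketch below just prints such a rule; `forward_rule` is a made-up helper, and `LOGSTASH_HOST` in the usage lines is a placeholder for whichever machine runs the logstash syslog input.

```shell
# forward_rule PROTO HOST PORT : print an rsyslog rule that forwards every
# facility and priority (*.*) to HOST:PORT over the given protocol.
forward_rule() {
    case $1 in
        tcp) printf '*.* @@%s:%s\n' "$2" "$3" ;;   # "@@" selects TCP
        udp) printf '*.* @%s:%s\n'  "$2" "$3" ;;   # "@" selects UDP
        *)   echo "unknown protocol: $1" >&2; return 1 ;;
    esac
}

# Usage sketch (LOGSTASH_HOST is a placeholder):
#   forward_rule tcp "$LOGSTASH_HOST" 10514
#   logger "test message for logstash"   # writes one syslog entry to forward
```

The logstash syslog input opens both a TCP and a UDP listener on its port, so either form of the rule can reach it.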

Now start logstash in the foreground. Every collected log event is printed to the screen and the process does not exit, so this terminal stays occupied:

[root@ying05 bin]# ./logstash --path.settings /etc/logstash/ -f

Sending Logstash logs to /var/log/logstash which is now configured via log4j2.properties

[2018-10-13T22:41:00,835][INFO ][logstash.agent ] No persistent UUID file found. Generating new UUID {:uuid=>"5039884c-a106-4370-8bb3-fcab8227a8d6", :path=>"/var/lib/logstash/uuid"}

[2018-10-13T22:41:01,662][INFO ][logstash.runner ] Starting Logstash {"logstash.version"=>"6.4.2"}

[2018-10-13T22:41:05,042][INFO ][logstash.pipeline ] Starting pipeline {:pipeline_id=>"main", "pipeline.workers"=>2, "pipeline.batch.size"=>125, "pipeline.batch.delay"=>50}

[2018-10-13T22:41:05,838][INFO ][logstash.pipeline ] Pipeline started successfully {:pipeline_id=>"main", :thread=>"#"}

[2018-10-13T22:41:06,101][INFO ][logstash.agent ] Pipelines running {:count=>1, :running_pipelines=>[:main], :non_running_pipelines=>[]}

[2018-10-13T22:41:06,179][INFO ][logstash.inputs.syslog ] Starting syslog udp listener {:address=>"0.0.0.0:10514"}

[2018-10-13T22:41:06,209][INFO ][logstash.inputs.syslog ] Starting syslog tcp listener {:address=>"0.0.0.0:10514"}

[2018-10-13T22:41:06,757][INFO ][logstash.agent ] Successfully started Logstash API endpoint {:port=>9600}

[2018-10-13T22:41:17,106][INFO ][logstash.inputs.syslog ] new connection {:client=>"192.168.112.151:60140"}

{

"logsource" => "ying05",

"message" => "DHCPDISCOVER on ens37 to 255.255.255.255 port 67 interval 19 (xid=0x3a663c52)\n",

"@timestamp" => 2018-10-13T14:41:16.000Z,

"severity_label" => "Informational",

"priority" => 30,

"severity" => 6,

"host" => "192.168.112.151",

"pid" => "2163",

"facility" => 3,

"program" => "dhclient",

"type" => "system-syslog",

"timestamp" => "Oct 13 22:41:16",

"facility_label" => "system",

"@version" => "1"

}

{

"logsource" => "ying05",

"message" => " [1539441739.5305] device (ens37): state change: failed -> disconnected (reason 'none') [120 30 0]\n",

"@timestamp" => 2018-10-13T14:42:19.000Z,

"severity_label" => "Informational",

"priority" => 30,

"severity" => 6,

"host" => "192.168.112.151",

"pid" => "559",

"facility" => 3,

"program" => "NetworkManager",

"type" => "system-syslog",

"timestamp" => "Oct 13 22:42:19",

"facility_label" => "system",

"@version" => "1"

}

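Because the events are printed in this terminal and keep scrolling, it cannot be used for anything else. To inspect the host, open a second terminal (terminal B).

In terminal B, check the listening ports; 10514 is now present: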

[root@ying05 ~]# netstat -lnpt

Active Internet connections (only servers)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

tcp 0 0 0.0.0.0:111 0.0.0.0:* LISTEN 550/rpcbind

tcp 0 0 0.0.0.0:80 0.0.0.0:* LISTEN 930/nginx: master p

tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN 821/sshd

tcp 0 0 127.0.0.1:25 0.0.0.0:* LISTEN 1041/master

tcp6 0 0 :::111 :::* LISTEN 550/rpcbind

tcp6 0 0 192.168.112.151:9200 :::* LISTEN 1391/java

tcp6 0 0 :::10514 :::* LISTEN 2137/java

tcp6 0 0 192.168.112.151:9300 :::* LISTEN 1391/java

tcp6 0 0 :::22 :::* LISTEN 821/sshd

tcp6 0 0 ::1:25 :::* LISTEN 1041/master

tcp6 0 0 127.0.0.1:9600 :::* LISTEN 2137/java

Back in the first terminal (terminal A), press Ctrl + C to stop logstash. Checking the listening ports again shows that 10514 is gone:

^C[2018-10-13T23:52:23,187][WARN ][logstash.runner ] SIGINT received. Shutting down.

[2018-10-13T23:52:23,498][INFO ][logstash.inputs.syslog ] connection error: stream closed

[2018-10-13T23:52:23,651][INFO ][logstash.pipeline ] Pipeline has terminated {:pipeline_id=>"main", :thread=>"#"}

[root@ying05 bin]# netstat -lnpt

Active Internet connections (only servers)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

tcp 0 0 0.0.0.0:111 0.0.0.0:* LISTEN 550/rpcbind

tcp 0 0 0.0.0.0:80 0.0.0.0:* LISTEN 930/nginx: master p

tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN 821/sshd

tcp 0 0 127.0.0.1:25 0.0.0.0:* LISTEN 1041/master

tcp6 0 0 :::111 :::* LISTEN 550/rpcbind

tcp6 0 0 192.168.112.151:9200 :::* LISTEN 1391/java

tcp6 0 0 192.168.112.151:9300 :::* LISTEN 1391/java

tcp6 0 0 :::22 :::* LISTEN 821/sshd

tcp6 0 0 ::1:25 :::* LISTEN 1041/master

Start logstash again in terminal A:

[root@ying05 bin]# ./logstash --path.settings /etc/logstash/ -f        # this starts logstash in the foreground

Sending Logstash logs to /var/log/logstash which is now configured via log4j2.properties

[2018-10-13T23:54:27,377][INFO ][logstash.runner ] Starting Logstash {"logstash.version"=>"6.4.2"}

[2018-10-13T23:54:30,556][INFO ][logstash.pipeline ] Starting pipeline {:pipeline_id=>"main", "pipeline.workers"=>2, "pipeline.batch.size"=>125, "pipeline.batch.delay"=>50}

[2018-10-13T23:54:31,118][INFO ][logstash.pipeline ] Pipeline started successfully {:pipeline_id=>"main", :thread=>"#"}

[2018-10-13T23:54:31,182][INFO ][logstash.agent ] Pipelines running {:count=>1, :running_pipelines=>[:main], :non_running_pipelines=>[]}

[2018-10-13T23:54:31,217][INFO ][logstash.inputs.syslog ] Starting syslog udp listener {:address=>"0.0.0.0:10514"}

[2018-10-13T23:54:31,243][INFO ][logstash.inputs.syslog ] Starting syslog tcp listener {:address=>"0.0.0.0:10514"}

[2018-10-13T23:54:31,525][INFO ][logstash.agent ] Successfully started Logstash API endpoint {:port=>9600}

[2018-10-13T23:58:47,450][INFO ][logstash.inputs.syslog ] new connection {:client=>"192.168.112.151:60152"}

[2018-10-13T23:58:47,785][INFO ][logstash.inputs.syslog ] new connection {:client=>"192.168.112.151:60154"}

{

"facility" => 3,

"severity_label" => "Informational",

"program" => "systemd",

"timestamp" => "Oct 13 23:58:47",

"@timestamp" => 2018-10-13T15:58:47.000Z,

"type" => "system-syslog",

"logsource" => "ying05",

"message" => "Stopping System Logging Service...\n",

"severity" => 6,

"facility_label" => "system",

"priority" => 30,

"host" => "192.168.112.151",

"@version" => "1"

}

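In terminal B, keep checking for port 10514. It is absent at first and appears later: logstash takes a while to load, and it only starts listening on 10514 once startup has finished.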

[root@ying05 ~]# netstat -lnpt |grep 10514

[root@ying05 ~]# netstat -lnpt |grep 10514

[root@ying05 ~]# netstat -lnpt |grep 10514

[root@ying05 ~]# netstat -lnpt |grep 10514

tcp6 0 0 :::10514 :::* LISTEN 2535/java

[root@ying05 ~]#

7. Configuring logstash

So far the logs were only printed to the screen for testing. Now they need to be sent to elasticsearch.

Edit the configuration file syslog.conf:

[root@ying05 bin]# vim /etc/logstash/conf.d/syslog.conf

input {

syslog {

type => "system-syslog"

port => 10514

}

}

output {

elasticsearch {

hosts => ["192.168.112.150:9200"]        # points at the master ying04; since the cluster is distributed, 151 or 152 would also work

index => "system-syslog-%{+YYYY.MM}"        # index name pattern

}

}
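The `%{+YYYY.MM}` part of the index name is filled in from each event's @timestamp, which logstash keeps in UTC. The sketch below imitates that expansion with date(1) to show which index a given event time lands in. `index_for` is a hypothetical helper, and it assumes GNU date (for the `-d` option).

```shell
# index_for PREFIX FORMAT WHEN : print PREFIX followed by WHEN rendered in
# UTC with the date(1) format FORMAT. %Y.%m plays the role of YYYY.MM.
index_for() {
    printf '%s%s\n' "$1" "$(date -u -d "$3" "+$2")"
}

# Usage:
#   index_for system-syslog- %Y.%m now
#   index_for system-syslog- %Y.%m '2018-11-01 07:30:00 +0800'
```

The second usage line prints system-syslog-2018.10: 07:30 on 1 November at +0800 is still 31 October in UTC, so events from the first hours of a local month end up in the previous month's index.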

Check the configuration file; OK in the output means it is valid:

[root@ying05 bin]# ./logstash --path.settings /etc/logstash/ -f /etc/logstash/conf.d/syslog.conf --config.test_and_exit

Sending Logstash logs to /var/log/logstash which is now configured via log4j2.properties

[2018-10-14T00:16:21,163][WARN ][logstash.config.source.multilocal] Ignoring the 'pipelines.yml' file because modules or command line options are specified

Configuration OK

[2018-10-14T00:16:23,242][INFO ][logstash.runner ] Using config.test_and_exit mode. Config Validation Result: OK. Exiting Logstash

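Now start the service:

[root@ying05 bin]# systemctl start logstash

However, the logstash log is not written to for a long time. This is a permissions problem: the log file and the data directory are owned by root (presumably created by the earlier foreground runs as root), so the logstash service account cannot write to them: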

[root@ying05 bin]# ls -l /var/log/logstash/logstash-plain.log

-rw-r--r-- 1 root root 624 Oct 14 00:16 /var/log/logstash/logstash-plain.log

[root@ying05 bin]# chown logstash /var/log/logstash/logstash-plain.log

[root@ying05 bin]# ls -l /var/log/logstash/logstash-plain.log

-rw-r--r-- 1 logstash root 624 Oct 14 00:16 /var/log/logstash/logstash-plain.log

[root@ying05 bin]# ls -l /var/lib/logstash/        # ownership here needs changing too

total 4

drwxr-xr-x 2 root root 6 Oct 13 19:06 dead_letter_queue

drwxr-xr-x 2 root root 6 Oct 13 19:06 queue

-rw-r--r-- 1 root root 36 Oct 13 22:41 uuid

[root@ying05 bin]# chown -R logstash /var/lib/logstash/

[root@ying05 bin]# systemctl restart logstash
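To see at a glance whether anything is still owned by the wrong account, find can list the leftovers. This is a small sketch with a made-up function name; the two directories in the usage line are the ones touched above.

```shell
# not_owned_by USER PATH... : list every file and directory under the
# given paths that is not owned by USER.
not_owned_by() {
    owner=$1; shift
    find "$@" ! -user "$owner" -print
}

# Usage (no output means everything belongs to logstash):
#   not_owned_by logstash /var/log/logstash /var/lib/logstash
```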

Also change the address that port 9600 listens on to 192.168.112.151:

[root@ying05 bin]# vim /etc/logstash/logstash.yml

# ------------ Metrics Settings --------------

#

# Bind address for the metrics REST endpoint

#

# http.host: "127.0.0.1"

http.host: "192.168.112.151"        # set to the host IP

#

After a restart, ports 10514 and 9600 are both listening, which shows that logstash started successfully:

[root@ying05 bin]# systemctl restart logstash        # restart the logstash service

[root@ying05 bin]# netstat -lnpt

Active Internet connections (only servers)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

tcp 0 0 0.0.0.0:111 0.0.0.0:* LISTEN 550/rpcbind

tcp 0 0 0.0.0.0:80 0.0.0.0:* LISTEN 930/nginx: master p

tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN 821/sshd

tcp 0 0 127.0.0.1:25 0.0.0.0:* LISTEN 1041/master

tcp6 0 0 :::111 :::* LISTEN 550/rpcbind

tcp6 0 0 192.168.112.151:9200 :::* LISTEN 1391/java

tcp6 0 0 :::10514 :::* LISTEN 4828/java

tcp6 0 0 192.168.112.151:9300 :::* LISTEN 1391/java

tcp6 0 0 :::22 :::* LISTEN 821/sshd

tcp6 0 0 ::1:25 :::* LISTEN 1041/master

tcp6 0 0 192.168.112.151:9600 :::* LISTEN 4828/java

On the master (ying04), check whether the logs collected by logstash reach elasticsearch. The index list now contains system-syslog-2018.10:

[root@ying04 ~]# curl '192.168.112.150:9200/_cat/indices?v'        # the index is present, so logstash and elasticsearch are communicating

health status index uuid pri rep docs.count docs.deleted store.size pri.store.size

green open system-syslog-2018.10 uP2TM4UFTdSx7fbvLD1IsQ 5 1 82 0 773.8kb 361.9kb

Now log in from ying06 (192.168.112.152) to ying05 (192.168.112.151) over ssh; this generates log entries:

[root@ying06 ~]# ssh 192.168.112.151

The authenticity of host '192.168.112.151 (192.168.112.151)' can't be established.

ECDSA key fingerprint is SHA256:ZQlXi+kieRwi2t64Yc5vUhPPWkMub8f0CBjnYRlX2Iw.

ECDSA key fingerprint is MD5:ff:9f:37:87:81:89:fc:ed:af:c6:62:c6:32:53:7a:ad.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added '192.168.112.151' (ECDSA) to the list of known hosts.

root@192.168.112.151's password:

Last login: Sun Oct 14 13:55:30 2018 from 192.168.112.1

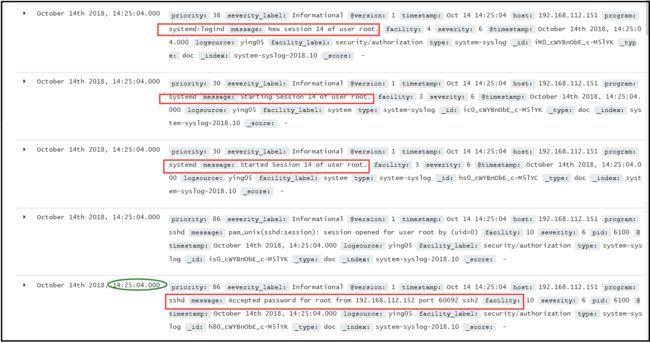

On ying05, look at the log entries from this period:

[root@ying05 ~]# less /var/log/messages

......excerpt around 14:25:04

Oct 14 14:25:04 ying05 systemd: Started Session 14 of user root.

Oct 14 14:25:04 ying05 systemd-logind: New session 14 of user root.

Oct 14 14:25:04 ying05 systemd: Starting Session 14 of user root.

Refresh kibana in the browser. The same entries that appear on the virtual machine show up there; compare the timestamps.

8. Collecting nginx logs

Create a configuration file for collecting nginx logs in /etc/logstash/conf.d/:

[root@ying05 ~]# cd /etc/logstash/conf.d/

[root@ying05 conf.d]# ls

syslog.conf

[root@ying05 conf.d]# vim nginx.conf        # create the configuration file for collecting nginx logs

input {

file {

path => "/tmp/elk_access.log"        # path of the log file to read

start_position => "beginning"        # where to start reading

type => "nginx"        # type tag

}

}

filter {        # the grok filter parses the message into fields

grok {

match => { "message" => "%{IPORHOST:http_host} %{IPORHOST:clientip} - %{USERNAME:remote_user} \[%{HTTPDATE:timestamp}\] \"(?:%{WORD:http_verb} %{NOTSPACE:http_request}(?: HTTP/%{NUMBER:http_version})?|%{DATA:raw_http_request})\" %{NUMBER:response} (?:%{NUMBER:bytes_read}|-) %{QS:referrer} %{QS:agent} %{QS:xforwardedfor} %{NUMBER:request_time:float}"}

}

geoip {

source => "clientip"

}

}

output {

stdout { codec => rubydebug }

elasticsearch {

hosts => ["192.168.112.151:9200"]        # host ying05

index => "nginx-test-%{+YYYY.MM.dd}"

}

}

Check this configuration; OK in the output means it is valid:

[root@ying05 conf]# cd /usr/share/logstash/bin

[root@ying05 bin]# ./logstash --path.settings /etc/logstash/ -f /etc/logstash/conf.d/nginx.conf --config.test_and_exit

Sending Logstash logs to /var/log/logstash which is now configured via log4j2.properties

[2018-10-15T08:31:42,427][WARN ][logstash.config.source.multilocal] Ignoring the 'pipelines.yml' file because modules or command line options are specified

Configuration OK

[2018-10-15T08:31:47,080][INFO ][logstash.runner ] Using config.test_and_exit mode. Config Validation Result: OK. Exiting Logstash

nginx must be installed on the machine. The output below shows that nginx is already running and listening on port 80:

[root@ying05 ~]# netstat -lnpt

Active Internet connections (only servers)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

tcp 0 0 0.0.0.0:111 0.0.0.0:* LISTEN 550/rpcbind

tcp 0 0 0.0.0.0:80 0.0.0.0:* LISTEN 930/nginx: master p

tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN 821/sshd

tcp 0 0 127.0.0.1:25 0.0.0.0:* LISTEN 1041/master

tcp6 0 0 :::111 :::* LISTEN 550/rpcbind

tcp6 0 0 192.168.112.151:9200 :::* LISTEN 1391/java

tcp6 0 0 :::10514 :::* LISTEN 4828/java

tcp6 0 0 192.168.112.151:9300 :::* LISTEN 1391/java

tcp6 0 0 :::22 :::* LISTEN 821/sshd

tcp6 0 0 ::1:25 :::* LISTEN 1041/master

tcp6 0 0 192.168.112.151:9600 :::* LISTEN 4828/java

In the nginx configuration, add a virtual host that proxies kibana (installed on ying04):

[root@ying05 ~]# cd /usr/local/nginx/conf/

[root@ying05 conf]# vim nginx.conf

server {

listen 80;

server_name elk.ying.com;

location / {

proxy_pass http://192.168.112.150:5601;        # the real backend server

proxy_set_header Host $host;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

}

access_log /tmp/elk_access.log main2;        # write the access log in the main2 format

}

Because the access log uses the main2 format, that format also has to be defined in the nginx configuration:

[root@ying05 conf]# vim nginx.conf        # the same file as above

...... (defaults)

log_format combined_realip '$remote_addr $http_x_forwarded_for [$time_local]'

' $host "$request_uri" $status'

' "$http_referer" "$http_user_agent"';        # existing format

...... (defaults)

log_format main2 '$http_host $remote_addr - $remote_user [$time_local] "$request" '

'$status $body_bytes_sent "$http_referer" '

'"$http_user_agent" "$upstream_addr" $request_time';        # definition of the main2 format

Test the configuration and reload nginx:

[root@ying05 conf]# /usr/local/nginx/sbin/nginx -t

nginx: the configuration file /usr/local/nginx/conf/nginx.conf syntax is ok

nginx: configuration file /usr/local/nginx/conf/nginx.conf test is successful

[root@ying05 conf]# /usr/local/nginx/sbin/nginx -s reload

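Next, on the Windows client, add the domain name to the hosts file in C:\Windows\System32\drivers\etc:

192.168.112.151 elk.ying.com

Back on ying05, restart the logstash service:

[root@ying05 conf]# systemctl restart logstash

After a short wait, check on ying04 for the newly created index nginx-test-2018.10.15: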

[root@ying04 ~]# curl '192.168.112.150:9200/_cat/indices?v'

health status index uuid pri rep docs.count docs.deleted store.size pri.store.size

green open .kibana aO3JiaT_TKWt3OJhDjPOvg 1 0 3 0 17.8kb 17.8kb

yellow open nginx-test-2018.10.15 taXOvQTyTFely-_oiU_Y2w 5 1 60572 0 6mb 6mb

yellow open system-syslog-2018.10 uP2TM4UFTdSx7fbvLD1IsQ 5 1 69286 0 10.7mb 10.7mb
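In this listing two indices are yellow, which means at least one of their replica shards is currently unassigned. A short filter makes such indices easy to spot in longer listings. It is a sketch with a made-up function name and assumes the column order printed by `_cat/indices?v`.

```shell
# non_green_indices : read `_cat/indices?v` output on stdin and print
# "health index" for every index whose health is not green.
non_green_indices() {
    awk 'NR > 1 && $1 != "green" { print $1, $3 }'
}

# Usage:
#   curl -s '192.168.112.150:9200/_cat/indices?v' | non_green_indices
```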

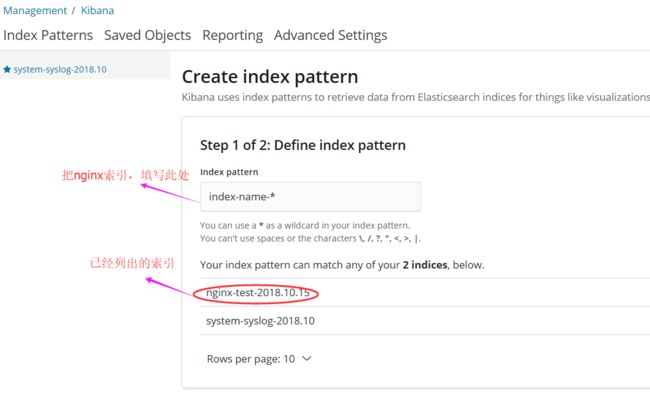

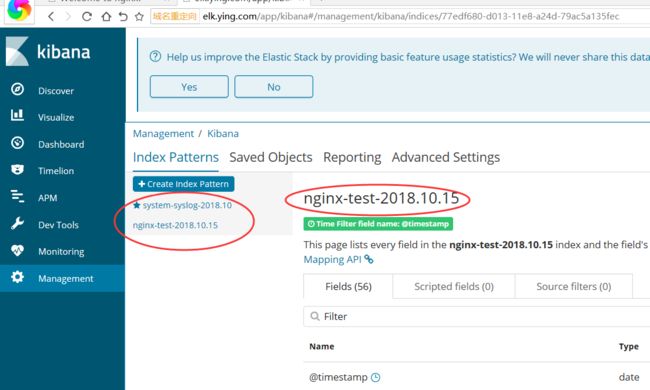

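In the browser, open elk.ying.com and click Index Patterns.

Enter the index nginx-test-2018.10.15 in the field shown in the screenshot.

Then click Create; a success message confirms that the index pattern was added. Continue to the next step.

Clicking Management on the left now lists two index patterns, one of which is the newly added nginx-test-2018.10.15.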

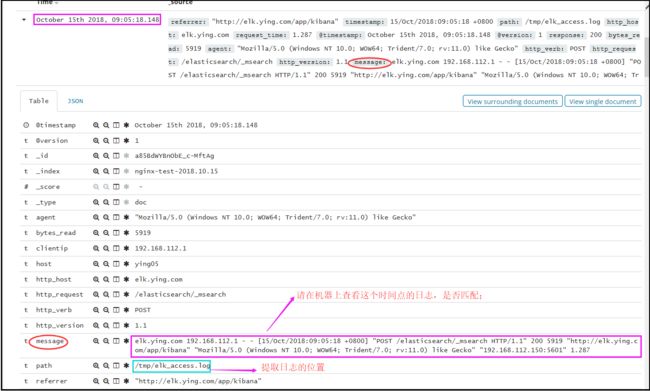

On ying05, look at /tmp/elk_access.log and compare an entry (for example the 09:05:18 request below) with what kibana displays; the two should be identical:

[root@ying05 bin]# less /tmp/elk_access.log

elk.ying.com 192.168.112.1 - - [15/Oct/2018:09:05:18 +0800] "POST /elasticsearch/_msearch HTTP/1.1" 200 5919 "http://elk.ying.com/app/kibana" "Mozilla/5.0 (Windows NT 10.0; WOW64; Trident/7.0; rv:11.0) like Gecko" "192.168.112.150:5601" 1.287
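To get a feel for what the grok filter extracts from such a line, the main2 format can be split by hand with awk. This is only an approximation for illustration, not the grok pattern itself: `parse_main2` is a hypothetical helper, it prints just four of the fields (the ones grok names http_host, clientip, response and request_time), and it breaks if a quoted value itself contains a double quote.

```shell
# parse_main2 : read main2-format access log lines on stdin and print
# "http_host clientip status request_time" for each line.
parse_main2() {
    awk -F'"' '{
        split($1, h, " ")            # http_host, client IP, "-", user, [time zone]
        split($3, m, " ")            # status code, bytes sent
        rt = $9; gsub(/ /, "", rt)   # request time follows the last quoted field
        print h[1], h[2], m[1], rt
    }'
}

# Usage:
#   tail -n 5 /tmp/elk_access.log | parse_main2
```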

Compare it with the message field shown in kibana and check that the timestamps agree. The test is successful.

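9. Collecting logs with beats

Beats is another kind of log collector:

- beats is a lightweight log shipper that uses few resources and is extensible;

- logstash is resource hungry;

First install filebeat with yum:

[root@ying06 ~]# yum list |grep filebeat

filebeat.x86_64 6.4.2-1 @elasticsearch-6.x

filebeat.i686 6.4.2-1 elasticsearch-6.x

[root@ying06 ~]# yum install -y filebeat

Edit filebeat.yml as shown below. This first configuration is only for testing and prints the events to the console: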

[root@ying06 ~]# vim /etc/filebeat/filebeat.yml

#=========================== Filebeat inputs =============================

# Change to true to enable this input configuration.

# enabled: false        (default example)

enabled: true

# Paths that should be crawled and fetched. Glob based paths.

paths:

# - /var/log/*.log        (default example)

- /var/log/messages        # log file to collect

#-------------------------- Elasticsearch output ------------------------------

#output.elasticsearch:

# Array of hosts to connect to.

# hosts: ["localhost:9200"]        (example)

output.console:

enabled: true

Run filebeat in the foreground. A large number of events scroll past continuously; their "source" field is "/var/log/messages":

[root@ying06 ~]# /usr/share/filebeat/bin/filebeat -c /etc/filebeat/filebeat.yml

{"@timestamp":"2018-10-15T07:32:06.322Z","@metadata":{"beat":"filebeat","type":"doc","version":"6.4.2"},"beat":{"version":"6.4.2","name":"ying06","hostname":"ying06"},"host":{"name":"ying06"},"source":"/var/log/messages","offset":1253647,"message":"Oct 15 15:32:04 ying06 NetworkManager[558]: \u003cwarn\u003e [1539588724.3946] device (ens37): Activation: failed for connection '有线连接 1'","prospector":{"type":"log"},"input":{"type":"log"}}

{"@timestamp":"2018-10-15T07:32:06.322Z","@metadata":{"beat":"filebeat","type":"doc","version":"6.4.2"},"host":{"name":"ying06"},"source":"/var/log/messages","offset":1253784,"message":"Oct 15 15:32:04 ying06 NetworkManager[558]: \u003cinfo\u003e [1539588724.3958] device (ens37): state change: failed -\u003e disconnected (reason 'none') [120 30 0]","prospector":{"type":"log"},"input":{"type":"log"},"beat":{"name":"ying06","hostname":"ying06","version":"6.4.2"}}

^C[root@ying06 ~]#

These two messages correspond to the following lines in the source log:

[root@ying06 ~]# less /var/log/messages

Oct 15 15:32:04 ying06 NetworkManager[558]: <warn> [1539588724.3946] device (ens37): Activation: failed for connection '有线连接 1'

Oct 15 15:32:04 ying06 NetworkManager[558]: <info> [1539588724.3958] device (ens37): state change: failed -> disconnected (reason 'none') [120 30 0]

So far the logs were only printed to the screen. Now send them to elasticsearch and view them in kibana:

[root@ying06 ~]# vim /etc/filebeat/filebeat.yml

#=========================== Filebeat inputs =============================

# Paths that should be crawled and fetched. Glob based paths.

paths:

# - /var/log/*.log

- /var/log/elasticsearch/fengstory.log        # the log file collected now

#-------------------------- Elasticsearch output ------------------------------

output.elasticsearch:        # send events to elasticsearch

# Array of hosts to connect to.

hosts: ["192.168.112.150:9200"]        # points at host ying04

#output.console:        (used for the earlier test, now disabled)

# enabled: true
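Before starting the service it can be worth validating the edited file. Filebeat 6.x ships `test config` and `test output` subcommands for this; the wrapper below is a sketch with a made-up name that runs them in order and stops at the first failure.

```shell
# filebeat_preflight BIN CONFIG : check the configuration syntax first,
# then the connection to the configured output.
filebeat_preflight() {
    "$1" test config -c "$2" && "$1" test output -c "$2"
}

# Usage:
#   filebeat_preflight filebeat /etc/filebeat/filebeat.yml
```

The output test only makes sense once `output.elasticsearch` points at a reachable node, so a failure there usually indicates a wrong host, a wrong port or a firewall in between.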

Start the filebeat service and check its process:

[root@ying06 ~]# systemctl start filebeat

[root@ying06 ~]# ps aux|grep filebeat

root 1599 0.0 0.8 309872 16528 ? Ssl 16:20 0:00 /usr/share/filebeat/bin/filebeat -c /etc/filebeat/filebeat.yml -path.home /usr/share/filebeat -path.config /etc/filebeat -path.data /var/lib/filebeat -path.logs /var/log/filebeat

root 1704 0.0 0.0 112720 980 pts/0 R+ 16:47 0:00 grep --color=auto filebeat

[root@ying06 ~]# netstat -lnpt

Active Internet connections (only servers)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

tcp 0 0 0.0.0.0:111 0.0.0.0:* LISTEN 556/rpcbind

tcp 0 0 0.0.0.0:80 0.0.0.0:* LISTEN 964/nginx: master p

tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN 827/sshd

tcp 0 0 127.0.0.1:25 0.0.0.0:* LISTEN 1034/master

tcp6 0 0 :::111 :::* LISTEN 556/rpcbind

tcp6 0 0 192.168.112.152:9200 :::* LISTEN 1711/java

tcp6 0 0 192.168.112.152:9300 :::* LISTEN 1711/java

tcp6 0 0 :::22 :::* LISTEN 827/sshd

tcp6 0 0 ::1:25 :::* LISTEN 1034/master

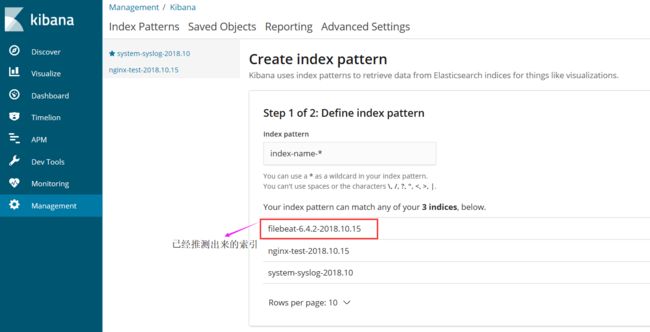

On ying04 (192.168.112.150), check whether the logs have reached elasticsearch; an index whose name contains filebeat shows that they have:

[root@ying04 ~]# curl '192.168.112.150:9200/_cat/indices?v'

health status index uuid pri rep docs.count docs.deleted store.size pri.store.size

green open nginx-test-2018.10.15 taXOvQTyTFely-_oiU_Y2w 5 1 202961 0 36.1mb 18.5mb

green open .kibana aO3JiaT_TKWt3OJhDjPOvg 1 1 4 0 80.3kb 40.1kb

green open filebeat-6.4.2-2018.10.15 m7Biv3QMTXmRR5u-cxIAoQ 3 1 73 0 153.3kb 95.4kb

green open system-syslog-2018.10 uP2TM4UFTdSx7fbvLD1IsQ 5 1 211675 0 41.9mb 21.4mb

Port 5601 is also listening on ying04, so the result can be viewed in the browser:

[root@ying04 ~]# netstat -lnpt

Active Internet connections (only servers)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

tcp 0 0 0.0.0.0:111 0.0.0.0:* LISTEN 543/rpcbind

tcp 0 0 0.0.0.0:80 0.0.0.0:* LISTEN 907/nginx: master p

tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN 820/sshd

tcp 0 0 127.0.0.1:25 0.0.0.0:* LISTEN 1042/master

tcp 0 0 192.168.112.150:5601 0.0.0.0:* LISTEN 1420/node

tcp6 0 0 :::111 :::* LISTEN 543/rpcbind

tcp6 0 0 192.168.112.150:9200 :::* LISTEN 1255/java

tcp6 0 0 192.168.112.150:9300 :::* LISTEN 1255/java

tcp6 0 0 :::22 :::* LISTEN 820/sshd

tcp6 0 0 ::1:25 :::* LISTEN 1042/master

Now open elk.ying.com in the browser:

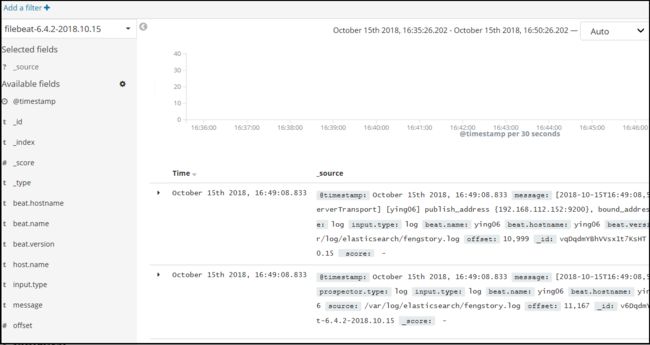

Once the index pattern has been created, the entries from /var/log/elasticsearch/fengstory.log are visible.

Compare them with the source log; the two match:

[root@ying06 ~]# less /var/log/elasticsearch/fengstory.log

[2018-10-15T16:49:08,548][INFO ][o.e.x.s.t.n.SecurityNetty4HttpServerTransport] [ying06] publish_address {192.168.112.152:9200}, bound_addresses {192.168.112.152:9200}

[2018-10-15T16:49:08,548][INFO ][o.e.n.Node ] [ying06] started