YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors

来源:https://arxiv.org/abs/2207.02696

代码:https://github.com/WongKinYiu/yolov7

0. Abstract

YOLOv7 surpasses all known object detectors in both speed and accuracy in the range from 5 FPS to 160 FPS and has the highest accuracy 56.8% AP among all known realtime object detectors with 30 FPS or higher on GPU V100.

YOLOv7在5 FPS到160 FPS的范围内,在速度和精度方面都超过了所有已知的物体检测器,在GPU V100上以30 FPS或更高的速度在所有已知的实时物体检测器中具有最高的精度56.8% AP。

YOLOv7-E6 object detector (56 FPS V100, 55.9% AP) outperforms both transformer-based detector SWINL Cascade-Mask R-CNN (9.2 FPS A100, 53.9% AP) by 509% in speed and 2% in accuracy, and convolutional based detector ConvNeXt-XL Cascade-Mask R-CNN (8.6 FPS A100, 55.2% AP) by 551% in speed and 0.7% AP in accuracy, as well as YOLOv7 outperforms: YOLOR, YOLOX, Scaled-YOLOv4, YOLOv5, DETR, Deformable DETR, DINO-5scale-R50, ViT-Adapter-B and many other object detectors in speed and accuracy. Moreover, we train YOLOv7 only on MS COCO dataset from scratch without using any other datasets or pre-trained weights.

YOLOv7-E6对象检测器(56 FPS V100,55.9% AP)的速度和精度分别比基于transformer的检测器SWINL Cascade-Mask R-CNN(9.2 FPS A100,53.9% AP)快509%和2%,比基于卷积的检测器conv next-XL Cascade-Mask R-CNN(8.6 FPS A100,55.2% AP)快551%和0.7% AP,YOLOv7的性能也优于此外,我们仅在MS COCO数据集上从头开始训练YOLOv7,而不使用任何其他数据集或预训练的权重。

1. Introduction

Real-time object detection is a very important topic in computer vision, as it is often a necessary component in computer vision systems. For example, multi-object tracking[94, 93], autonomous driving [40, 18], robotics [35, 58], medical image analysis [34, 46], etc. The computing devices that execute real-time object detection is usually some mobile CPU or GPU, as well as various neural processing units (NPU) developed by major manufacturers.

实时目标检测是计算机视觉中一个非常重要的课题,因为它通常是计算机视觉系统中一个必要的组成部分。比如多目标跟踪[94,93],自动驾驶[40,18],机器人学[35,58],医学图像分析[34,46]等。执行实时物体检测的计算设备通常是一些移动CPU或GPU,以及各大厂商开发的各种神经处理单元(NPU)。

For example, the Apple neural engine (Apple), the neural compute stick (Intel), Jetson AI edge devices (Nvidia), the edge TPU (Google), the neural processing engine (Qualcomm), the AI processing unit (MediaTek), and the AI SoCs (Kneron), are all NPUs. Some of the above mentioned edge devices focus on speeding up different operations such as vanilla convolution, depth-wise convolution, or MLP operations.

例如,苹果神经引擎(Apple)、神经计算棒(Intel)、杰特森人工智能边缘设备(Nvidia)、边缘TPU (Google)、神经处理引擎(高通)、人工智能处理单元(联发科)和人工智能SOC(耐能)都是npu。上面提到的一些边缘设备致力于加速不同的运算,例如普通卷积、深度卷积或MLP运算。

In this paper, the real-time object detector we proposed mainly hopes that it can support both mobile GPU and GPU devices from the edge to the cloud.

在本文中,我们提出的实时对象检测器主要是希望它能够从边缘到云端同时支持移动GPU和GPU设备。

In recent years, the real-time object detector is still developed for different edge device. For example, the development of MCUNet [49, 48] and NanoDet [54] focused on producing low-power single-chip and improving the inference speed on edge CPU.

近年来,实时目标检测器仍在针对不同的边缘设备进行开发。例如,MCUNet [49, 48] 和 NanoDet [54] 的开发专注于生产低功耗单芯片并提高边缘 CPU 的推理速度。

As for methods such as YOLOX [21] and YOLOR [81], they focus on improving the inference speed of various GPUs. More recently, the development of real-time object detector has focused on the design of efficient architecture. As for real-time object detectors that can be used on CPU [54, 88, 84, 83], their design is mostly based on MobileNet [28, 66, 27], ShuffleNet [92, 55], or GhostNet [25].

至于YOLOX [21] 和YOLOR [81] 等方法,他们专注于提高各种GPU的推理速度。最近,实时目标检测器的发展集中在高效架构的设计上。至于可以在CPU [54, 88, 84, 83]上使用的实时目标检测器,他们的设计主要基于MobileNet [28, 66, 27]、ShuffleNet [92, 55] 或 GhostNet [25] .

Another mainstream real-time object detectors are developed for GPU [81, 21, 97], they mostly use ResNet [26], DarkNet [63], or DLA [87], and then use the CSPNet [80] strategy to optimize the architecture. The development direction of the proposed methods in this paper are different from that of the current mainstream real-time object detectors. In addition to architecture optimization, our proposed methods will focus on the optimization of the training process.

另一个主流的实时目标检测器是为 GPU [81, 21, 97] 开发的,它们大多使用 ResNet [26]、DarkNet [63] 或 DLA [87],然后使用 CSPNet [80] 策略来优化建筑学。本文提出的方法的发展方向与当前主流的实时目标检测器不同。除了架构优化之外,我们提出的方法将专注于训练过程的优化。

Our focus will be on some optimized modules and optimization methods which may strengthen the training cost for improving the accuracy of object detection, but without increasing the inference cost.We call the proposed modules and optimization methods trainable bag-of-freebies.

我们的重点将放在一些优化的模块和优化方法上,这些优化模块和优化方法可能会增加训练成本以提高目标检测的准确性,但不会增加推理成本。我们将提出的模块和优化方法称为可训练的bag-of-freebies。

Recently, model re-parameterization [13, 12, 29] and dynamic label assignment [20, 17, 42] have become important topics in network training and object detection. Mainly after the above new concepts are proposed, the training of object detector evolves many new issues.

最近,模型重新参数化[13,12,29]和动态标签分配[20,17,42]已成为网络训练和目标检测的重要课题。主要是在上述新概念提出之后,物体检测器的训练演变出了很多新的问题。

In this paper, we will present some of the new issues we have discovered and devise effective methods to address them. For model reparameterization, we analyze the model re-parameterization strategies applicable to layers in different networks with the concept of gradient propagation path, and propose planned re-parameterized model.

在本文中,我们将介绍我们发现的一些新问题,并设计解决这些问题的有效方法。对于模型重参数化,我们用梯度传播路径的概念分析了适用于不同网络层的模型重参数化策略,并提出了有计划的重参数化模型。

In addition, when we discover that with dynamic label assignment technology, the training of model with multiple output layers will generate new issues.That is: “How to assign dynamic targets for the outputs of different branches?” For this problem, we propose a new label assignment method called coarse-to-fine lead guided label assignment.

此外,当我们发现使用动态标签分配技术时,对具有多个输出层的模型的训练会产生新的问题。即:“如何为不同分支的输出分配动态目标?”针对这个问题,我们提出了一种新的标签分配方法,称为从粗到细的引导式标签分配。

The contributions of this paper are summarized as follows:

(1) we design several trainable bag-of-freebies methods, so that real-time object detection can greatly improve the detection accuracy without increasing the inference cost;

(1)我们设计了几种可训练的bag-of-freebies方法,使得实时目标检测可以在不增加推理成本的情况下大大提高检测精度;

(2) for the evolution of object detection methods, we found two new issues, namely how re-parameterized module replaces original module, and how dynamic label assignment strategy deals with assignment to different output layers. In addition, we also propose methods to address the difficulties arising from these issues;

(2) 对于目标检测方法的演进,我们发现了两个新问题,即重新参数化的模块如何替换原始模块,以及动态标签分配策略如何处理分配给不同输出层的问题。此外,我们还提出了解决这些问题所带来的困难的方法;

(3) we propose “extend” and “compound scaling” methods for the real-time object detector that can effectively utilize parameters and computation;

(3) 我们提出了实时目标检测器的“扩展”和“复合缩放”方法,可以有效地利用参数和计算;

(4) the method we proposed can effectively reduce about 40% parameters and 50% computation of state-of-the-art real-time object detector, and has faster inference speed and higher detection accuracy.

(4) 我们提出的方法可以有效减少最先进的实时目标检测器40%左右的参数和50%的计算量,并且具有更快的推理速度和更高的检测精度。

2. Related work

2.1. Real-time object detectors

Currently state-of-the-art real-time object detectors are mainly based on YOLO [61, 62, 63] and FCOS [76, 77], which are [3, 79, 81, 21, 54, 85, 23]. Being able to become a state-of-the-art real-time object detector usually requires the following characteristics: (1) a faster and stronger network architecture; (2) a more effective feature integration method [22, 97, 37, 74, 59, 30, 9, 45]; (3) a more accurate detection method [76, 77, 69]; (4) a more robust loss function [96, 64, 6, 56, 95, 57]; (5) a more efficient label assignment method [99, 20, 17, 82, 42]; and (6) a more efficient training method.

目前最先进的实时目标检测器主要基于 YOLO [61, 62, 63] 和 FCOS [76, 77],分别是 [3, 79, 81, 21, 54, 85, 23] .成为最先进的实时目标检测器通常需要以下特性:(1)更快更强的网络架构; (2) 更有效的特征整合方法[22, 97, 37, 74, 59, 30, 9, 45]; (3) 更准确的检测方法 [76, 77, 69]; (4) 更稳健的损失函数 [96, 64, 6, 56, 95, 57]; (5) 一种更有效的标签分配方法 [99, 20, 17, 82, 42]; (6) 更有效的训练方法

In this paper, we do not intend to explore self-supervised learning or knowledge distillation methods that require additional data or large model. Instead, we will design new trainable bag-of-freebies method for the issues derived from the state-of-the-art methods associated with (4), (5), and (6) mentioned above.

在本文中,我们不打算探索需要额外数据或大型模型的自我监督学习或知识蒸馏方法。相反,我们将针对与上述 (4)、(5) 和 (6) 相关的最先进方法衍生的问题设计新的可训练trainable bag-of-freebies 方法。

2.2. Model re-parameterization

Model re-parametrization techniques [71, 31, 75, 19, 33, 11, 4, 24, 13, 12, 10, 29, 14, 78] merge multiple computational modules into one at inference stage. The model re-parameterization technique can be regarded as an ensemble technique, and we can divide it into two categories, i.e., module-level ensemble and model-level ensemble.

**模型重新参数化技术 [71、31、75、19、33、11、4、24、13、12、10、29、14、78] 在推理阶段将多个计算模块合并为一个。**模型重参数化技术可以看作是一种集成技术,我们可以将其分为两类,即模块级集成和模型级集成。

There are two common practices for model-level reparameterization to obtain the final inference model. One is to train multiple identical models with different training data, and then average the weights of multiple trained models. The other is to perform a weighted average of the weights of models at different iteration number.

模型级别的重新参数化有两种常见的做法来获得最终的推理模型。一种是用不同的训练数据训练多个相同的模型,然后对多个训练模型的权重进行平均。另一种是对不同迭代次数的模型权重进行加权平均。

Module-level re-parameterization is a more popular research issue recently. This type of method splits a module into multiple identical or different module branches during training and integrates multiple branched modules into a completely equivalent module during inference.

模块级重新参数化是最近比较热门的研究问题。这种方法在训练时将一个模块拆分为多个相同或不同的模块分支,在推理时将多个分支模块整合为一个完全等效的模块。

However, not all proposed re-parameterized module can be perfectly applied to different architectures. With this in mind, we have developed new re-parameterization module and designed related application strategies for various architectures.

然而,并非所有提出的重新参数化模块都可以完美地应用于不同的架构。考虑到这一点,我们开发了新的重新参数化模块,并为各种架构设计了相关的应用策略。

2.3. Model scaling

Model scaling [72, 60, 74, 73, 15, 16, 2, 51] is a way to scale up or down an already designed model and make it fit in different computing devices. The model scaling method usually uses different scaling factors, such as resolution (size of input image), depth (number of layer), width (number of channel), and stage (number of feature pyramid), so as to achieve a good trade-off for the amount of network parameters, computation, inference speed, and accuracy.

模型缩放[72,60,74,73,15,16,2,51]是一种放大或缩小已经设计的模型并使其适合不同计算设备的方法。模型缩放方法通常使用不同的缩放因子,如分辨率(输入图像的大小)、深度(层数)、宽度(通道数)和阶段(特征金字塔数),以便在网络参数的数量、计算、推理速度和精度之间取得良好的折衷。

Network architecture search (NAS) is one of the commonly used model scaling methods. NAS can automatically search for suitable scaling factors from search space without defining too complicated rules. The disadvantage of NAS is that it requires very expensive computation to complete the search for model scaling factors. In [15], the researcher analyzes the relationship between scaling factors and the amount of parameters and operations, trying to directly estimate some rules, and thereby obtain the scaling factors required by model scaling. Checking the literature, we found that almost all model scaling methods analyze individual scaling factor independently, and even the methods in the compound scaling category also optimized scaling factor independently. The reason for this is because most popular NAS architectures deal with scaling factors that are not very correlated. We observed that all concatenationbased models, such as DenseNet [32] or VoVNet [39], will change the input width of some layers when the depth of such models is scaled. Since the proposed architecture is concatenation-based, we have to design a new compound scaling method for this model.

网络架构搜索(NAS)是常用的模型缩放方法之一。 **NAS 可以自动从搜索空间中搜索到合适的缩放因子,而无需定义过于复杂的规则。 NAS 的缺点是需要非常昂贵的计算来完成对模型缩放因子的搜索。**在[15]中,研究人员分析了缩放因子与参数数量和操作量之间的关系,试图直接估计一些规则,从而获得模型缩放所需的缩放因子。查阅文献,我们发现几乎所有的模型缩放方法都独立地分析了单个缩放因子,甚至复合缩放类别中的方法也独立地优化了缩放因子。这样做的原因是因为大多数流行的 NAS 架构处理的比例因子不是很相关。我们观察到,所有基于连接的模型,例如 DenseNet [32] 或 VoVNet [39],都会在缩放此类模型的深度时改变某些层的输入宽度。由于所提出的架构是基于串联的,我们必须为此模型设计一种新的复合缩放方法。

3. Architecture

3.1. Extended efficient layer aggregation networks

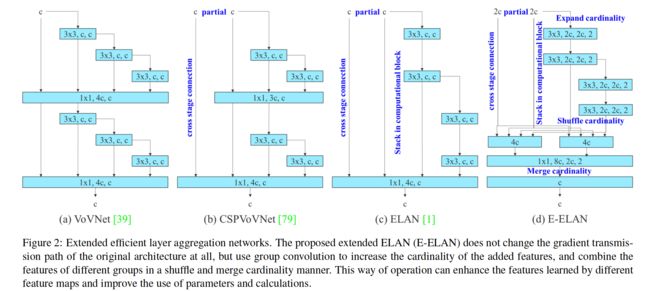

In most of the literature on designing the efficient architectures, the main considerations are no more than the number of parameters, the amount of computation, and the computational density. Starting from the characteristics of memory access cost, Ma et al.[55] also analyzed the influence of the input/output channel ratio, the number of branches of the architecture, and the element-wise operation on the network inference speed. Dolla´r et al. [15] additionally considered activation when performing model scaling, that is, to put more consideration on the number of elements in the output tensors of convolutional layers. The design of CSPVoVNet [79] in Figure 2 (b) is a variation of VoVNet [39].

在大多数关于设计高效架构的文献中,主要考虑因素不超过参数的数量、计算量和计算密度。 Ma et al.[55]从内存访问成本的特点出发还分析了输入/输出通道比、架构的分支数量以及逐元素操作对网络推理速度的影响。美元等。 [15] 在执行模型缩放时还考虑了激活,即更多地考虑卷积层输出张量中的元素数量。图 2(b)中 CSPVoVNet [79] 的设计是 VoVNet [39] 的变体。

In addition to considering the aforementioned basic designing concerns, the architecture of CSPVoVNet [79] also analyzes the gradient path, in order to enable the weights of different layers to learn more diverse features.The gradient analysis approach described above makes inferences faster and more accurate. ELAN [1] in Figure 2 © considers the following design strategy – “How to design an efficient network?.” They came out with a conclusion: By controlling the shortest longest gradient path, a deeper network can learn and converge effectively. In this paper, we propose Extended-ELAN (E-ELAN) based on ELAN and its main architecture is shown in Figure 2 (d).

除了考虑上述基本设计问题外,CSPVoVNet [79] 的架构还分析了梯度路径,以使不同层的权重能够学习更多样化的特征。上述梯度分析方法使推理更快、更准确.图 2 © 中的 ELAN [1] 考虑了以下设计策略——“如何设计一个高效的网络?”。他们得出了一个结论:通过控制最短最长的梯度路径,更深的网络可以有效地学习和收敛。在本文中,我们提出了基于 ELAN 的扩展 ELAN(E-ELAN),其主要架构如图 2(d)所示。

Figure 2: Extended efficient layer aggregation networks. The proposed extended ELAN (E-ELAN) does not change the gradient transmission path of the original architecture at all, but use group convolution to increase the cardinality of the added features, and combine the features of different groups in a shuffle and merge cardinality manner. This way of operation can enhance the features learned by different feature maps and improve the use of parameters and calculations.

Figure 2: 扩展的高效层聚合网络。提出的扩展ELAN(E-ELAN)完全没有改变原有架构的梯度传输路径,而是使用组卷积来增加添加特征的基数,并以shuffle和merge cardinality的方式组合不同组的特征.这种操作方式可以增强不同特征图学习到的特征,提高参数的使用和计算。

Regardless of the gradient path length and the stacking number of computational blocks in large-scale ELAN, it has reached a stable state. If more computational blocks are stacked unlimitedly, this stable state may be destroyed, and the parameter utilization rate will decrease. The proposed E-ELAN uses expand, shuffle, merge cardinality to achieve the ability to continuously enhance the learning ability of the network without destroying the original gradient path.In terms of architecture, E-ELAN only changes the architecture in computational block, while the architecture of transition layer is completely unchanged.

无论梯度路径长度和大规模 ELAN 中计算块的堆叠数量如何,它都达到了稳定状态。如果无限堆叠更多的计算块,可能会破坏这种稳定状态,参数利用率会降低。提出的E-ELAN通过expand、shuffle、merge cardinality来实现在不破坏原有梯度路径的情况下不断增强网络学习能力的能力。在架构方面,E-ELAN 只改变了计算块的架构,而过渡层的架构完全没有改变。

Our strategy is to use group convolution to expand the channel and cardinality of computational blocks. We will apply the same group parameter and channel multiplier to all the computational blocks of a computational layer. Then, the feature map calculated by each computational block will be shuffled into g groups according to the set group parameter g, and then concatenate them together. At this time, the number of channels in each group of feature map will be the same as the number of channels in the original architecture. Finally, we add g groups of feature maps to perform merge cardinality. In addition to maintaining the original ELAN design architecture, E-ELAN can also guide different groups of computational blocks to learn more diverse features.

我们的策略是使用组卷积来扩展计算块的通道和基数。我们将对计算层的所有计算块应用相同的组参数和通道乘数。然后,每个计算块计算出的特征图会根据设置的组参数g被打乱成g个组,然后将它们连接在一起。此时,每组特征图的通道数将与原始架构中的通道数相同。最后,我们添加 g 组特征图来执行合并基数。除了保持原有的 ELAN 设计架构,E-ELAN 还可以引导不同组的计算块学习更多样化的特征。

3.2. Model scaling for concatenation-based models

The main purpose of model scaling is to adjust some attributes of the model and generate models of different scales to meet the needs of different inference speeds. For example the scaling model of EfficientNet [72] considers the width, depth, and resolution. As for the scaled-YOLOv4 [79], its scaling model is to adjust the number of stages. In [15], Dolla´r et al. analyzed the influence of vanilla convolution and group convolution on the amount of parameter and computation when performing width and depth scaling, and used this to design the corresponding model scaling method.

模型缩放的主要目的是调整模型的一些属性,生成不同尺度的模型,以满足不同推理速度的需求。例如,EfficientNet [72] 的缩放模型考虑了宽度、深度和分辨率。至于 scaled-YOLOv4 [79],其缩放模型是调整阶段数。在 [15] 中,Dolla´r 等人分析在进行宽度和深度缩放的情况下vanilla卷积和组卷积对参数量和计算量的影响,并以此设计了相应的模型缩放方法。

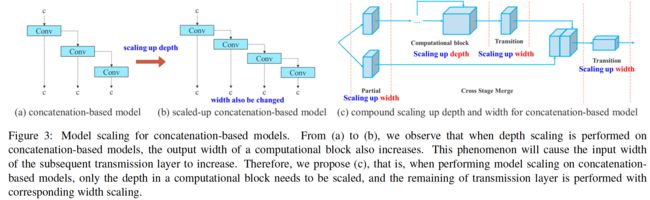

The above methods are mainly used in architectures such as PlainNet or ResNet. When these architectures are in executing scaling up or scaling down, the in-degree and out-degree of each layer will not change, so we can independently analyze the impact of each scaling factor on the amount of parameters and computation. However, if these methods are applied to the concatenation-based architecture, we will find that when scaling up or scaling down is performed on depth, the in-degree of a translation layer which is immediately after a concatenation-based computational block will decrease or increase, as shown in Figure 3 (a) and (b).

以上方法主要用在PlainNet或ResNet等架构中。这些架构在执行扩容或缩容时,每一层的入度和出度都不会发生变化,因此我们可以独立分析每个缩放因子对参数量和计算量的影响。但是,如果将这些方法应用于基于连接的架构,我们会发现,当对深度进行放大或缩小时,紧邻基于连接的计算块的平移层的入度会减小或增加,如图3(a)和(b)所示。

It can be inferred from the above phenomenon that we cannot analyze different scaling factors separately for a concatenation-based model but must be considered together. Take scaling-up depth as an example, such an action will cause a ratio change between the input channel and output channel of a transition layer, which may lead to a decrease in the hardware usage of the model.

从上述现象可以推断,对于一个基于串联的模型,我们不能单独分析不同的比例因子,而必须一起考虑。以放大深度为例,这种行为会导致过渡层的输入通道和输出通道之间的比例发生变化,这可能会导致模型的硬件使用率降低。

Therefore, we must propose the corresponding compound model scaling method for a concatenation-based model. When we scale the depth factor of a computational block, we must also calculate the change of the output channel of that block. Then, we will perform width factor scaling with the same amount of change on the transition layers, and the result is shown in Figure 3 ©. Our proposed compound scaling method can maintain the properties that the model had at the initial design and maintains the optimal structure.

因此,对于基于拼接的模型,必须提出相应的复合模型缩放方法。当我们缩放计算块的深度因子时,我们还必须计算该块的输出通道的变化。然后,我们将在过渡层上执行宽度因子缩放,并进行相同程度的更改,结果如图3©所示。我们提出的复合尺度方法既能保持模型在初始设计时的性质,又能保持最优结构。

Figure 3: Model scaling for concatenation-based models. From (a) to (b), we observe that when depth scaling is performed on concatenation-based models, the output width of a computational block also increases. This phenomenon will cause the input width of the subsequent transmission layer to increase. Therefore, we propose ©, that is, when performing model scaling on concatenationbased models, only the depth in a computational block needs to be scaled, and the remaining of transmission layer is performed with corresponding width scaling.

Figure 3: 基于串联的模型的模型缩放。从(A)到(B),我们观察到,当对基于级联的模型执行深度缩放时,计算块的输出宽度也增加。这种现象会导致后续传输层的输入宽度增加。因此,我们提出©,即在对级联模型进行模型缩放时,只需要对计算块中的深度进行缩放,而传输层的其余部分则进行相应的宽度缩放。

4. Trainable bag-of-freebies

4.1. Planned re-parameterized convolution

Although RepConv [13] has achieved excellent performance on the VGG [68], when we directly apply it to ResNet [26] and DenseNet [32] and other architectures, its accuracy will be significantly reduced. We use gradient flow propagation paths to analyze how re-parameterized convolution should be combined with different network.We also designed planned re-parameterized convolution accordingly.

虽然RepConv[13]在VGG[68]上取得了很好的性能,但当我们将其直接应用到ResNet[26]和DenseNet[32]等体系结构上时,其准确性会显著降低。我们利用梯度流传播路径分析了不同网络如何结合再参数卷积,并设计了相应的再参数卷积方案。

RepConv actually combines 3×3 convolution, 1×1 convolution, and identity connection in one convolutional layer. After analyzing the combination and corresponding performance of RepConv and different architectures, we find that the identity connection in RepConv destroys the residual in ResNet and the concatenation in DenseNet, which provides more diversity of gradients for different feature maps.

**RepConv 实际上在一个卷积层中结合了 3×3 卷积、1×1 卷积和identity connection。**在分析 RepConv 和不同架构的组合和对应性能后,我们发现 RepConv 中的identity connection破坏了 ResNet 中的残差和 DenseNet 中的连接,为不同的特征图提供了更多的梯度多样性。

For the above reasons, we use RepConv without identity connection (RepConvN) to design the architecture of planned re-parameterized convolution. In our thinking, when a convolutional layer with residual or concatenation is replaced by re-parameterized convolution, there should be no identity connection.

基于以上原因,我们使用无identity connection的 RepConv (RepConvN) 来设计Planned re-parameterized model的架构。在我们的想法中,当一个带有残差或连接的卷积层被re-parameterized model的卷积代替时,应该没有identity connection。

Figure 4 shows an example of our designed “planned re-parameterized convolution” used in PlainNet and ResNet. As for the complete planned re-parameterized convolution experiment in residual-based model and concatenation-based model, it will be presented in the ablation study session.

图 4 显示了我们设计的用于 PlainNet 和 ResNet 的“计划重新参数化卷积”的示例。关于残差模型和级联模型中完整计划的重新参数化卷积实验,将在消融研究会议上介绍。

Figure 4: Planned re-parameterized model. In the proposed planned re-parameterized model, we found that a layer with residual or concatenation connections, its RepConv should not have identity connection. Under these circumstances, it can be replaced by RepConvN that contains no identity connections.

Figure 4:Planned re-parameterized model。在拟议的重新参数化模型中,我们发现,一个具有剩余连接或串联连接的层,其RepConv不应该具有标识连接。在这些情况下,它可以被不包含 identity connections的RepConvN替代。

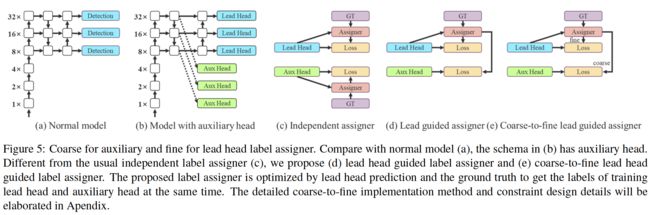

figure 5: Coarse for auxiliary and fine for lead head label assigner. Compare with normal model (a), the schema in (b) has auxiliary head.Different from the usual independent label assigner ©, we propose (d) lead head guided label assigner and (e) coarse-to-fine lead head guided label assigner. The proposed label assigner is optimized by lead head prediction and the ground truth to get the labels of training lead head and auxiliary head at the same time. The detailed coarse-to-fine implementation method and constraint design details will be elaborated in Apendix.

图 5:粗略表示辅助,精细表示铅头标签分配器。与普通模型(a)相比,(b)中的模式具有辅助头部。与通常的独立标签分配器(c)不同,我们提出(d)引导头部引导标签分配器和(e)粗到细引导头部引导标签分配器。所提出的标签分配器通过前

4.2. Coarse for auxiliary and fine for lead loss

Deep supervision [38] is a technique that is often used in training deep networks. Its main concept is to add extra auxiliary head in the middle layers of the network, and the shallow network weights with assistant loss as the guide. Even for architectures such as ResNet [26] and DenseNet [32] which usually converge well, deep supervision [70, 98, 67, 47, 82, 65, 86, 50] can still significantly improve the performance of the model on many tasks. Figure 5 (a) and (b) show, respectively, the object detector architecture “without” and “with” deep supervision.

深度监督 [38] 是一种经常用于训练深度网络的技术。它的**主要概念是在网络的中间层添加额外的辅助头,以辅助损失为指导的浅层网络权重。**即使对于 ResNet [26] 和 DenseNet [32] 等通常收敛良好的架构,深度监督 [70, 98, 67, 47, 82, 65, 86, 50] 仍然可以显着提高模型在许多任务上的性能.图 5 (a) 和 (b) 分别显示了“没有”和“有”深度监督的目标检测器架构。

In this paper, we call the head responsible for the final output as the lead head, and the head used to assist training is called auxiliary head.

在本文中,我们将负责最终输出的head称为lead head,用于辅助训练的head称为辅助head。

Next we want to discuss the issue of label assignment. In the past, in the training of deep network, label assignment usually refers directly to the ground truth and generate hard label according to the given rules. However, in recent years, if we take object detection as an example, researchers often use the quality and distribution of prediction output by the network, and then consider together with the ground truth to use some calculation and optimization methods to generate a reliable soft label [61, 8, 36, 99, 91, 44, 43, 90, 20, 17, 42].For example, YOLO [61] use IoU of prediction of bounding box regression and ground truth as the soft label of objectness.

接下来我们要讨论标签分配的问题。在过去,深度网络的训练中的标签分配通常直接指的是ground truth,并根据给定的规则生成硬标签。然而,近年来,如果我们以物体检测为例,研究人员往往会利用网络预测输出的质量和分布,然后与ground truth一起考虑使用一些计算和优化方法来生成可靠的软标签[61, 8, 36, 99, 91, 44, 43, 90, 20, 17, 42]。例如,YOLO [61]使用预测边界框回归和ground truth的IoU作为objectness的软标签。

In this paper, we call the mechanism that considers the network prediction results together with the ground truth and then assigns soft labels as “label assigner.”

在本文中,我们将网络预测结果与基本事实一起考虑,然后分配软标签的机制称为“标签分配器”。

Deep supervision needs to be trained on the target objectives regardless of the circumstances of auxiliary head or lead head. During the development of soft label assigner related techniques, we accidentally discovered a new derivative issue, i.e., “How to assign soft label to auxiliary head and lead head ?” To the best of our knowledge, the relevant literature has not explored this issue so far.

**无论auxiliary head或lead head的情况如何,都需要对目标目标进行深度监督。**在开发软标签分配器相关技术的过程中,我们无意中发现了一个新的衍生问题,即“如何将软标签分配给辅助头和前导头?”据我们所知,到目前为止,相关文献还没有探讨过这个问题。

The results of the most popular method at present is as shown in Figure 5 ©, which is to separate auxiliary head and lead head, and then use their own prediction results and the ground truth to execute label assignment. The method proposed in this paper is a new label assignment method that guides both auxiliary head and lead head by the lead head prediction. In other words, we use lead head prediction as guidance to generate coarse-to-fine hierarchical labels, which are used for auxiliary head and lead head learning, respectively. The two proposed deep supervision label assignment strategies are shown in Figure 5 (d) and (e).

目前最流行的方法的结果如图5(c)所示,就是将辅助head和lead head分离出来,然后使用各自的预测结果和ground truth来执行标签分配。本文提出的方法是一种新的标签分配方法,通过lead head预测来指导auxiliary head和lead head。换句话说,我们使用lead head预测作为指导来生成从粗到细的分层标签,这些标签分别用于auxiliary head and lead head 学习。提出的两种深度监督标签分配策略如图 5 (d) 和 (e) 所示。

Lead head guided label assigner is mainly calculated based on the prediction result of the lead head and the ground truth, and generate soft label through the optimization process. This set of soft labels will be used as the target training model for both auxiliary head and lead head.The reason to do this is because lead head has a relatively strong learning capability, so the soft label generated from it should be more representative of the distribution and correlation between the source data and the target.

Lead headguided label assignmenter主要根据lead head的预测结果和ground truth计算,通过优化过程生成软标签。这组软标签将作为辅助头和前导头的目标训练模型。这样做的原因是因为 lead head具有比较强的学习能力,因此从中生成的软标签应该更能代表源数据和目标数据之间的分布和相关性。

Furthermore, we can view such learning as a kind of generalized residual learning. By letting the shallower auxiliary head directly learn the information that lead head has learned, lead head will be more able to focus on learning residual information that has not yet been learned.

此外,我们可以将这种学习视为一种广义残差学习。通过让较浅的辅助头直接学习领导头已经学习的信息,lead head将更能专注于学习尚未学习的残差信息。

Coarse-to-fine lead head guided label assigner also used the predicted result of the lead head and the ground truth to generate soft label. However, in the process we generate two different sets of soft label, i.e., coarse label and fine label, where fine label is the same as the soft label generated by lead head guided label assigner, and coarse label is generated by allowing more grids to be treated as positive target by relaxing the constraints of the positive sample assignment process.

Coarse-to-fine lead head guided label assigner 还使用了lead head和ground truth的预测结果来生成软标签。然而,在这个过程中,我们生成了两组不同的软标签,即粗标签和细标签,其中细标签与lead head引导标签分配器生成的软标签相同,而粗标签是通过允许更多的网格来生成的。通过放宽正样本分配过程的约束,将其视为正目标。

The reason for this is that the learning ability of an auxiliary head is not as strong as that of a lead head, and in order to avoid losing the information that needs to be learned, we will focus on optimizing the recall of auxiliary head in the object detection task. As for the output of lead head, we can filter the high precision results from the high recall results as the final output.

原因是auxiliary head的学习能力不如lead head强,为了避免丢失需要学习的信息,我们将重点优化auxiliary head的在物体检测任务召回率。至于lead head的输出,我们可以从高recall结果中过滤出高精度结果作为最终输出。

However, we must note that if the additional weight of coarse label is close to that of fine label, it may produce bad prior at final prediction. Therefore, in order to make those extra coarse positive grids have less impact, we put restrictions in the decoder, so that the extra coarse positive grids cannot produce soft label perfectly. The mechanism mentioned above allows the importance of fine label and coarse label to be dynamically adjusted during the learning process, and makes the optimizable upper bound of fine label always higher than coarse label.

但是,我们必须注意,如果粗标签的附加权重接近细标签的附加权重,则可能会在最终预测时产生不良先验。因此,为了使那些超粗的正网格影响更小,我们在解码器中设置了限制,使超粗的正网格不能完美地产生软标签。上述机制允许在学习过程中动态调整细标签和粗标签的重要性,使细标签的可优化上界始终高于粗标签。

4.3. Other trainable bag-of-freebies

In this section we will list some trainable bag-offreebies. These freebies are some of the tricks we used in training, but the original concepts were not proposed by us. The training details of these freebies will be elaborated in the Appendix, including

在本节中,我们将列出一些可训练的 bag-offreebies。这些 bag-offreebies是我们在训练中使用的一些技巧,但最初的概念并不是我们提出的。这些bag-offreebies的培训细节将在附录中详细说明,包括

(1) Batch normalization in conv-bn-activation topology: This part mainly connects batch normalization layer directly to convolutional layer.The purpose of this is to integrate the mean and variance of batch normalization into the bias and weight of convolutional layer at the inference stage.

(1) conv-bn-activation topology中的batch normalization:这部分主要是将批归一化层直接连接到卷积层,其目的是在推理阶段将批归一化的均值和方差合并到卷积层的偏差和权重中。

(2) Implicit knowledge in YOLOR [81] combined with convolution feature map in addition and multiplication manner: Implicit knowledge in YOLOR can be simplified to a vector by pre-computing at the inference stage. This vector can be combined with the bias and weight of the previous or subsequent convolutional layer.

(2)将YOLOR[81]中的隐含知识与卷积特征图采用加法和乘法相结合的方式相结合:在推理阶段通过预计算将YOLOR中的隐含知识简化为向量。该向量可与前一卷积层或后卷积层的偏置和权重组合。

(3) EMA model: EMA is a technique used in mean teacher [75], and in our system we use EMA model purely as the final inference model.

(3) EMA 模型:EMA 是一种在 mean teacher [75] 中使用的技术,在我们的系统中,我们使用 EMA 模型作为最终的推理模型。

5. Experiments

5.1. Experimental setup

We use Microsoft COCO dataset to conduct experiments and validate our object detection method. All our experiments did not use pre-trained models. That is, all models were trained from scratch. During the development process, we used train 2017 set for training, and then used val 2017 set for verification and choosing hyperparameters. Finally, we show the performance of object detection on the test 2017 set and compare it with the state-of-the-art object detection algorithms. Detailed training parameter settings are described in Appendix.

我们使用 Microsoft COCO 数据集进行实验并验证我们的对象检测方法。我们所有的实验都没有使用预训练模型。也就是说,所有模型都是从头开始训练的。在开发过程中,我们使用 train 2017 set 进行训练,然后使用 val 2017 set 进行验证和选择超参数。最后,我们展示了目标检测在 2017 年测试集上的性能,并将其与最先进的目标检测算法进行了比较。详细的训练参数设置在附录中描述。

We designed basic model for edge GPU, normal GPU, and cloud GPU, and they are respectively called YOLOv7tiny, YOLOv7, and YOLOv7-W6. At the same time, we also use basic model for model scaling for different service requirements and get different types of models. For YOLOv7, we do stack scaling on neck, and use the proposed compound scaling method to perform scaling-up of the depth and width of the entire model, and use this to obtain YOLOv7-X. As for YOLOv7-W6, we use the newly proposed compound scaling method to obtain YOLOv7-E6 and YOLOv7-D6. In addition, we use the proposed EELAN for YOLOv7-E6, and thereby complete YOLOv7E6E. Since YOLOv7-tiny is an edge GPU-oriented architecture, it will use leaky ReLU as activation function. As for other models we use SiLU as activation function. We will describe the scaling factor of each model in detail in Appendix.

**对于YOLOv7,我们对颈部进行stack scaling,并使用提出的复合缩放方法对整个模型的深度和宽度进行缩放,并以此获得YOLOv7-X。对于 YOLOv7-W6,我们使用新提出的复合缩放方法得到 YOLOv7-E6 和 YOLOv7-D6。此外,我们将提议的 EELAN 用于 YOLOv7-E6,从而完成了 YOLOv7E6E。**由于 YOLOv7-tiny 是一个面向边缘 GPU 的架构,它会使用leaky ReLU 作为激活函数。至于其他模型,我们使用 SiLU 作为激活函数。我们将在附录中详细描述每个模型的比例因子。

5.2. Baselines

We choose previous version of YOLO [3, 79] and stateof-the-art object detector YOLOR [81] as our baselines. Table 1 shows the comparison of our proposed YOLOv7 models and those baseline that are trained with the same settings.From the results we see that if compared with YOLOv4, YOLOv7 has 75% less parameters, 36% less computation, and brings 1.5% higher AP. If compared with state-of-theart YOLOR-CSP, YOLOv7 has 43% fewer parameters, 15% less computation, and 0.4% higher AP. In the performance of tiny model, compared with YOLOv4-tiny-31, YOLOv7tiny reduces the number of parameters by 39% and the amount of computation by 49%, but maintains the same AP.On the cloud GPU model, our model can still have a higher AP while reducing the number of parameters by 19% and the amount of computation by 33%.

我们选择以前版本的 YOLO [3, 79] 和最先进的目标检测器 YOLOR [81] 作为我们的基线。表 1 显示了我们提出的 YOLOv7 模型与使用相同设置训练的基线模型的比较。从结果中我们看到,如果与 YOLOv4 相比,YOLOv7 的参数减少了 75%,计算量减少了 36%,AP 提高了 1.5% .如果与 state-of-theart YOLOR-CSP 相比,YOLOv7 的参数减少了 43%,计算量减少了 15%,AP 提高了 0.4%。在tiny模型的性能上,与YOLOv4-tiny-31相比,YOLOv7tiny参数数量减少了39%,计算量减少了49%,但AP保持不变。在云GPU模型上,我们的模型仍然可以有更高的 AP,同时减少 19% 的参数数量和 33% 的计算量。

5.3. Comparison with state-of-the-arts

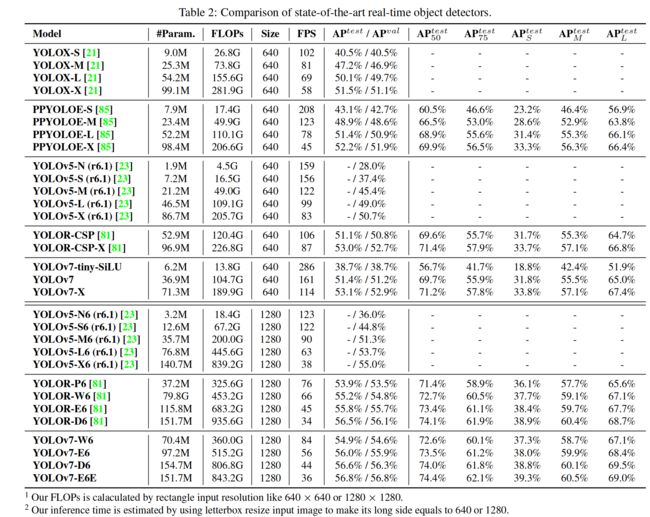

We compare the proposed method with state-of-the-art object detectors for general GPUs and Mobile GPUs, and the results are shown in Table 2. From the results in Table 2 we know that the proposed method has the best speed-accuracy trade-off comprehensively.

我们将所提出的方法与用于通用 GPU 和移动 GPU 的最先进的目标检测器进行比较,结果如表 2 所示。从表 2 的结果中,我们知道所提出的方法具有最佳的速度-准确度权衡全面关闭。

If we compare YOLOv7-tiny-SiLU with YOLOv5-N (r6.1), our method is 127 fps faster and 10.7% more accurate on AP. In addition, YOLOv7 has 51.4% AP at frame rate of 161 fps, while PPYOLOE-L with the same AP has only 78 fps frame rate. In terms of parameter usage, YOLOv7 is 41% less than PPYOLOE-L. If we compare YOLOv7-X with 114 fps inference speed to YOLOv5-L (r6.1) with 99 fps inference speed, YOLOv7-X can improve AP by 3.9%. If YOLOv7X is compared with YOLOv5-X (r6.1) of similar scale, the inference speed of YOLOv7-X is 31 fps faster. In addition, in terms of the amount of parameters and computation, YOLOv7-X reduces 22% of parameters and 8% of computation compared to YOLOv5-X (r6.1), but improves AP by 2.2%.

如果我们将YOLOv7-Tiny-Silu与YOLOv5-N(r6.1)进行比较,我们的方法在AP上的速度快127fps,准确率高10.7%。此外,YOLOv7在161fps的帧速率下有51.4%的AP,而具有相同AP的PPYOLOE-L只有78fps的帧速率。在参数使用方面,YOLOv7比PPYOLOE-L少41%。如果我们比较YOLOv7-X 114fps的推理速度和YOLOv5-L(r6.1)99fps的推理速度,YOLOv7-X可以提高3.9%的AP。如果将YOLOv7X与类似规模的YOLOv5-X(r6.1)进行比较,则YOLOv7-X的推理速度快31fps。此外,在参数和计算量方面,与YOLOv5-X(r6.1)相比,YOLOv7-X减少了22%的参数和8%的计算量,而AP提高了2.2%。

If we compare YOLOv7 with YOLOR using the input resolution 1280, the inference speed of YOLOv7-W6 is 8 fps faster than that of YOLOR-P6, and the detection rate is also increased by 1% AP. As for the comparison between YOLOv7-E6 and YOLOv5-X6 (r6.1), the former has 0.9% AP gain than the latter, 45% less parameters and 63% less computation, and the inference speed is increased by 47%.YOLOv7-D6 has close inference speed to YOLOR-E6, but improves AP by 0.8%. YOLOv7-E6E has close inference speed to YOLOR-D6, but improves AP by 0.3%.

如果使用输入1280的分辨率,将YOLOv7与YOLOR进行比较,则YOLOv7-W6的推理速度比YOLOR-P6快8fps,检测率也提高了1%AP。YOLOv7-E6与YOLOv5-X6(r6.1)相比,前者AP增益0.9%,参数减少45%,计算量减少63%,推理速度提高47%;YOLOv7-D6推理速度接近YOLOR-E6,AP提高0.8%。YOLOv7-E6E的推断速度与YOLOR-D6相近,但AP提高了0.3%。

5.4. Ablation study

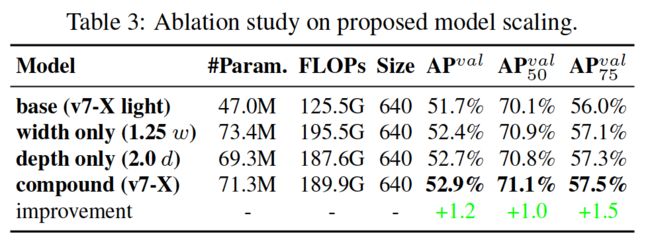

5.4.1 Proposed compound scaling method

Table 3 shows the results obtained when using different model scaling strategies for scaling up. Among them, our proposed compound scaling method is to scale up the depth of computational block by 1.5 times and the width of transition block by 1.25 times. If our method is compared with the method that only scaled up the width, our method can improve the AP by 0.5% with less parameters and amount of computation. If our method is compared with the method that only scales up the depth, our method only needs to increase the number of parameters by 2.9% and the amount of computation by 1.2%, which can improve the AP by 0.2%.It can be seen from the results of Table 3 that our proposed compound scaling strategy can utilize parameters and computation more efficiently.

表3显示了使用不同的模型缩放策略进行放大时所获得的结果。其中,我们提出的复合尺度方法是将计算块的深度放大1.5倍,将过渡块的宽度放大1.25倍。如果与仅放大宽度的方法相比,我们的方法可以以更少的参数和计算量提高AP 0.5%。如果我们的方法与只放大深度的方法相比,我们的方法只需要增加2.9%的参数和1.2%的计算量,可以提高AP 0.2%。从表3的结果可以看出,我们提出的复合缩放策略可以更有效地利用参数和计算量。

5.4.2 Proposed planned re-parameterized model

In order to verify the generality of our proposed planed re-parameterized model, we use it on concatenation-based model and residual-based model respectively for verification. The concatenation-based model and residual-based model we chose for verification are 3-stacked ELAN and CSPDarknet, respectively.

为了验证我们提出的re-parameterized model的通用性,我们分别对基于串联的模型和基于残差的模型进行了验证。我们选择了基于级联的模型和基于残差的模型进行验证,分别是3层ELAN模型和CSPDarknet模型。

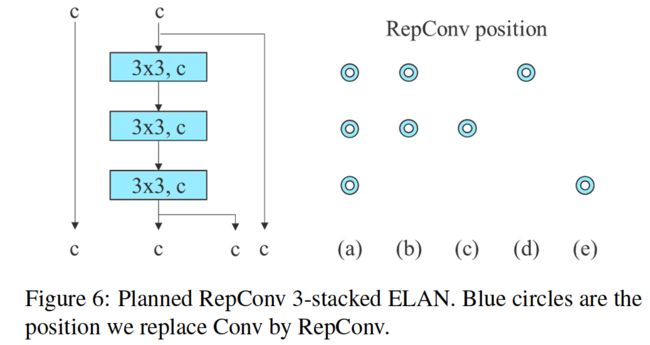

In the experiment of concatenation-based model, we replace the 3×3 convolutional layers in different positions in 3-stacked ELAN with RepConv, and the detailed configuration is shown in Figure 6.

在concatenation-based model的实验中,我们用RepConv替换了3层ELAN中不同位置的3×3卷积层,具体配置如图6所示。

Figure 6: Planned RepConv 3-stacked ELAN. Blue circles are the position we replace Conv by RepConv.

Figure 6: 规划的RepConv 3-堆叠的ELAN。蓝色圆圈是我们用RepConv替换Conv的位置。

From the results shown in Table 4 we see that all higher AP values are present on our proposed planned re-parameterized model.

从表4所示的结果可以看出,我们提出的计划的重新参数化模型上存在所有较高的AP值。

In the experiment dealing with residual-based model, since the original dark block does not have a 3×3 con-volution block that conforms to our design strategy, we additionally design a reversed dark block for the experiment, whose architecture is shown in Figure 7. Since the CSPDarknet with dark block and reversed dark block has exactly the same amount of parameters and operations, it is fair to compare. The experiment results illustrated in Table 5 fully confirm that the proposed planned re-parameterized model is equally effective on residual-based model. We find that the design of RepCSPResNet [85] also fit our design pattern.

在处理residual-based model的实验中,由于 the original dark block没有符合我们的设计策略的3×3卷积块,我们还为实验设计了a reversed dark block,其结构如图7所示。由于具有dark block和reversed dark block的CSPDarknet具有完全相同的参数和运算量,因此可以进行比较。表5所示的实验结果充分证实了所提出的计划再参数化模型与基于残差的模型同样有效。我们发现RepCSPResNet[85]的设计也符合我们的设计模式。

5.4.3 Proposed assistant loss for auxiliary head

In the assistant loss for auxiliary head experiments, we compare the general independent label assignment for lead head and auxiliary head methods, and we also compare the two proposed lead guided label assignment methods. We show all comparison results in Table 6. From the results listed in Table 6, it is clear that any model that increases assistant loss can significantly improve the overall performance.

在auxiliary head实验的辅助损失中,我们比较了lead head和auxiliary head方法的一般独立标签分配,并且我们还比较了两种提出的引导引导标签分配方法。我们在表 6 中展示了所有比较结果。从表 6 中列出的结果可以看出,任何增加辅助损失的模型都可以显着提高整体性能。

In addition, our proposed lead guided label assignment strategy receives better performance than the general independent label assignment strategy in AP, AP50, and AP75. As for our proposed coarse for assistant and fine for lead label assignment strategy, it results in best results in all cases. In Figure 8 we show the objectness map predicted by different methods at auxiliary head and lead head. From Figure 8 we find that if auxiliary head learns lead guided soft label, it will indeed help lead head to extract the residual information from the consistant targets.

此外,我们提出的引导式标签分配策略比 AP、AP50 和 AP75 中的一般独立标签分配策略具有更好的性能。至于我们提出的粗略辅助和精细引导标签分配策略,它在所有情况下都会产生最佳结果。在图 8 中,我们展示了在auxiliary head和lead head上通过不同方法预测的对象图。从图 8 中我们发现,如果auxiliary head学习引导引导软标签,它确实会帮助引导头部从一致目标中提取残差信息。

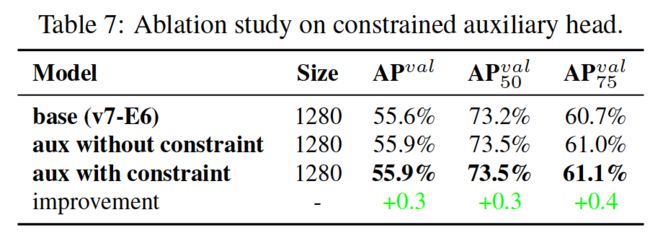

In Table 7 we further analyze the effect of the proposed coarse-to-fine lead guided label assignment method on the decoder of auxiliary head. That is, we compared the results of with/without the introduction of upper bound constraint.

Judging from the numbers in the Table, the method of constraining the upper bound of objectness by the distance from the center of the object can achieve better performance.

在表 7 中,我们进一步分析了所提出的从粗到细的引导标签分配方法对辅auxiliary head解码器的影响。也就是说,我们比较了引入/不引入上限约束的结果。从表中的数字来看,通过到物体中心的距离来约束objectness的上界的方法可以获得更好的性能。从表中的数字来看,通过到物体中心的距离来约束objectness的上界的方法可以获得更好的性能。

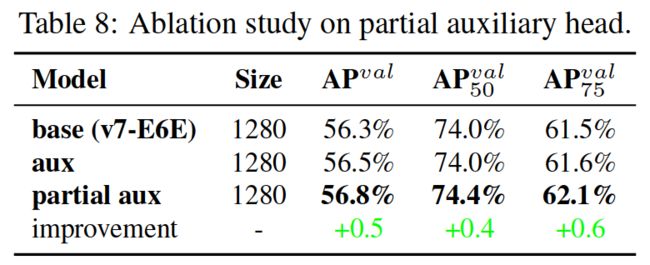

Since the proposed YOLOv7 uses multiple pyramids to jointly predict object detection results, we can directly connect auxiliary head to the pyramid in the middle layer for training. This type of training can make up for information that may be lost in the next level pyramid prediction.

由于提出的 YOLOv7 使用多个金字塔来联合预测目标检测结果,我们可以直接将auxiliary head连接到中间层的金字塔进行训练。这种训练可以弥补下一层金字塔预测中可能丢失的信息。

For the above reasons, we designed partial auxiliary head in the proposed E-ELAN architecture. Our approach is to connect auxiliary head after one of the sets of feature map before merging cardinality, and this connection can make the weight of the newly generated set of feature map not directly updated by assistant loss. Our design allows each pyramid of lead head to still get information from objects with different sizes. Table 8 shows the results obtained using two different methods, i.e., coarse-to-fine lead guided and partial coarse-to-fine lead guided methods. Obviously, the partial coarse-to-fine lead guided method has a better auxiliary effect.

由于上述原因,我们在提议的 E-ELAN 架构中设计了部分auxiliary head。我们的方法是在合并基数之前在其中一组特征图之后连接auxiliary head,这种连接可以使新生成的一组特征图的权重不会被辅助损失直接更新。我们的设计允许pyramid of lead head仍然从不同大小的物体中获取信息。表 8 显示了使用两种不同方法获得的结果,即粗到细导联方法和部分粗到细导联方法。显然,部分由粗到细的导引法具有更好的辅助效果。

6.Conclusions

In this paper we propose a new architecture of realtime object detector and the corresponding model scaling method.

本文提出了一种新的实时目标检测器结构和相应的模型缩放方法。

Furthermore, we find that the evolving process of object detection methods generates new research topics.During the research process, we found the replacement problem of re-parameterized module and the allocation problem of dynamic label assignment.

此外,我们发现目标检测方法的发展过程产生了新的研究课题。在研究过程中,我们发现了重新参数化模块的替换问题和动态标签分配的分配问题。

To solve the problem, we propose the trainable bag-of-freebies method to enhance the accuracy of object detection. Based on the above, we have developed the YOLOv7 series of object detection systems, which receives the state-of-the-art results.

为了解决这个问题,我们提出了可训练的bag-of-freebies方法来提高目标检测的准确性。在此基础上,我们开发了YOLOv7系列物体探测系统,获得了最先进的结果。

7.More comparison

The authors wish to thank National Center for High-performance Computing (NCHC) for providing computational and storage resources.

作者希望感谢国家高性能计算中心(NCHC)提供计算和存储资源。

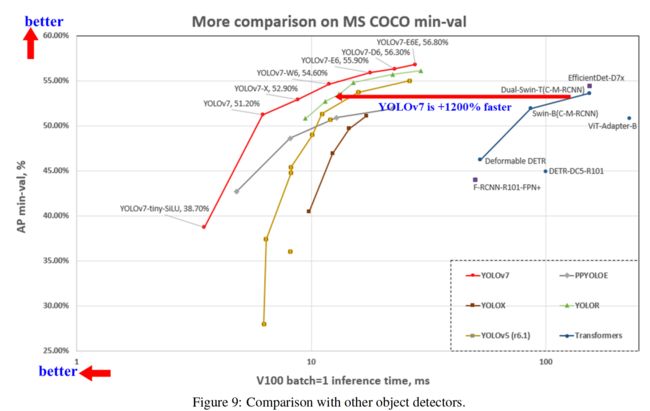

8. More comparison

YOLOv7 surpasses all known object detectors in both speed and accuracy in the range from 5 FPS to 160 FPS and has the highest accuracy 56.8% AP test-dev / 56.8% AP min-val among all known real-time object detectors with 30 FPS or higher on GPU V100. YOLOv7-E6 object detector (56 FPS V100, 55.9% AP) outperforms both transformerbased detector SWIN-L Cascade-Mask R-CNN (9.2 FPS A100, 53.9% AP) by 509% in speed and 2% in accuracy,

and convolutional-based detector ConvNeXt-XL CascadeMask R-CNN (8.6 FPS A100, 55.2% AP) by 551% in speed and 0.7% AP in accuracy, as well as YOLOv7 outperforms: YOLOR, YOLOX, Scaled-YOLOv4, YOLOv5, DETR, Deformable DETR, DINO-5scale-R50, ViT-Adapter-B and many other object detectors in speed and accuracy. More over, we train YOLOv7 only on MS COCO dataset from scratch without using any other datasets or pre-trained weights.

YOLOv7在速度和精度上都超过了所有已知的物体探测器,在5FPS到160FPS的范围内,并且在所有已知的GPU V100上30 FPS或更高的实时物体探测器中,具有最高的准确率56.8%AP TEST-DEV/56.8%AP MIN-VAL。YOLOv7-E6目标探测器(56FPS V100,55.9%AP)比基于变压器的探测器Swin-L Cascade-MASK R-CNN(9.2 FPS A100,53.9%AP)的速度快509%,准确率高2%;基于卷积的探测器ConvNeXt-XL Cascade-MASK R-CNN(8.6 FPS A100,55.2%AP)的速度快551%,准确率0.7%;YOLOv7的性能优于:YOLOR,YOLOX,Scaled-YOLOv4,YOLOv5,DETR,DeForm DETR,Dino-5Scale-R50,R50。VIT-Adapter-B和许多其他物体探测器的速度和精度。此外,我们仅在MS Coco数据集上从头开始训练YOLOv7,而不使用任何其他数据集或预先训练的权重。