Chapter 6: Building Secure and Efficient Enterprise Services (Part 2)

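Task Execution and Scheduling (Spring Quartz)

Why do the JDK thread pool and the Spring thread pool cause problems in a distributed environment?

- The JDK and Spring thread pools are memory-based: each one lives in the local memory of its own server, and nothing is shared between servers.

- In a distributed deployment, an ordinary scheduled task (on a JDK or Spring thread pool) runs on every server. If the task is to clean up temporary files every 10 minutes, each server performs the same cleanup, so the work is duplicated and the servers may even conflict with one another.

- With Quartz, the parameters a job depends on are stored in a database, so however many application servers are deployed, they all access the same database.

- Suppose a job must run every 10 minutes. Both servers access the database at about the same time; whichever arrives first obtains the parameters needed to run the job and marks them in the database.

- When the other server arrives, it finds that the parameters are already marked as running, which means another server is executing the job, so it does nothing.

- This lock-like mechanism lets multiple servers share job data.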
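The claim-then-skip behaviour described above can be sketched in plain Java. This is only an in-memory illustration, not how Quartz itself is implemented: the `ConcurrentHashMap` stands in for the shared database table, and the names `SharedJobStore`, `tryClaim`, `owner`, and the server names are hypothetical.

```java
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ConcurrentMap;

class ClusterClaimSketch {
    public static void main(String[] args) {
        SharedJobStore store = new SharedJobStore();
        long fireTime = 600_000L; // the same scheduled instant on both servers

        for (String node : new String[] {"server-A", "server-B"}) {
            if (store.tryClaim("cleanTempFiles", fireTime, node)) {
                System.out.println(node + " runs the job");
            } else {
                System.out.println(node + " skips: already claimed by "
                        + store.owner("cleanTempFiles", fireTime));
            }
        }
    }
}

// Hypothetical stand-in for the shared database that every server talks to.
class SharedJobStore {
    // key: "jobName@fireTime", value: the node that claimed this firing
    private final ConcurrentMap<String, String> claims = new ConcurrentHashMap<>();

    // Atomically marks the firing as taken; only the first caller gets true.
    boolean tryClaim(String jobName, long fireTime, String node) {
        return claims.putIfAbsent(jobName + "@" + fireTime, node) == null;
    }

    // Returns the node that claimed the firing, or null if nobody has.
    String owner(String jobName, long fireTime) {
        return claims.get(jobName + "@" + fireTime);
    }
}
```

The important property is that the check and the mark happen as one atomic step (`putIfAbsent` here, a row lock in a real database); two separate "read, then write" steps would let both servers claim the same firing.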

Test

@SpringBootTest

@ContextConfiguration(classes = CommunityApplication.class)

public class ThreadPoolTests {

private static final Logger logger = LoggerFactory.getLogger(ThreadPoolTests.class);

// Plain JDK thread pool

private ExecutorService executorService = Executors.newFixedThreadPool(5);

// JDK thread pool that can run scheduled tasks

private ScheduledExecutorService scheduledExecutorService = Executors.newScheduledThreadPool(5);

// Plain Spring thread pool

@Autowired

private ThreadPoolTaskExecutor taskExecutor;

// Spring thread pool that can run scheduled tasks

@Autowired

private ThreadPoolTaskScheduler taskScheduler;

@Autowired

private AlphaService alphaService;

private void sleep(long m) {

try {

Thread.sleep(m);

} catch (InterruptedException e) {

e.printStackTrace();

}

}

// 1. Plain JDK thread pool

@Test

public void testExecutorService() {

Runnable task = new Runnable() {

@Override

public void run() {

logger.debug("Hello ExecutorService");

}

};

for (int i = 0; i < 10; i++) {

executorService.submit(task);

}

sleep(10000);

}

// 2. JDK scheduled thread pool

@Test

public void testScheduledExecutorService() {

Runnable task = new Runnable() {

@Override

public void run() {

logger.debug("Hello ScheduledExecutorService");

}

};

// Run at a fixed rate: wait 10000 ms before the first run, then run every 1000 ms

scheduledExecutorService.scheduleAtFixedRate(task, 10000, 1000, TimeUnit.MILLISECONDS);

sleep(30000);

}

// 3. Plain Spring thread pool

@Test

public void testThreadPoolTaskExecutor() {

Runnable task = new Runnable() {

@Override

public void run() {

logger.debug("Hello ThreadPoolTaskExecutor");

}

};

for (int i = 0; i < 10; i++) {

taskExecutor.submit(task);

}

sleep(10000);

}

// 4. Spring scheduled thread pool

@Test

public void testThreadPoolTaskScheduler() {

Runnable task = new Runnable() {

@Override

public void run() {

logger.debug("Hello ThreadPoolTaskScheduler");

}

};

Date startTime = new Date(System.currentTimeMillis() + 10000);

// Run at a fixed rate: wait 10000 ms before the first run, then run every 1000 ms

taskScheduler.scheduleAtFixedRate(task, startTime, 1000);

sleep(30000);

}

// 5. Plain Spring thread pool (simplified)

@Test

public void testThreadPoolTaskExecutorSimple() {

for (int i = 0; i < 10; i++) {

alphaService.execute1();

}

sleep(10000);

}

// 6. Spring scheduled thread pool (simplified)

@Test

public void testThreadPoolTaskSchedulerSimple() {

sleep(30000);

}

}

Add two methods to the AlphaService class

/**

* Lets this method be called asynchronously in a multithreaded environment.

* The method is effectively a task.

*/

@Async

public void execute1() {

logger.debug("execute1");

}

/**

* Runs this method on a schedule: wait 10000 ms before the first run, then run every 1000 ms.

* Once the application starts, the method is invoked automatically.

*/

@Scheduled(initialDelay = 10000, fixedRate = 1000)

public void execute2() {

logger.debug("execute2");

}

Note: to use the Spring thread pools, the following configuration is required

spring:
  # Plain Spring thread pool
  task:
    execution:
      pool:
        # Core pool size
        core-size: 5
        # Maximum pool size
        max-size: 15
        # Capacity of the blocking queue
        queue-capacity: 100
    # Spring scheduled-task thread pool
    scheduling:
      pool:
        size: 5

- ThreadPoolConfig.java

/**

* Thread pool configuration class

*/

@Configuration

@EnableScheduling // Enable scheduled tasks

@EnableAsync // Enable asynchronous method execution

public class ThreadPoolConfig {

}

Add the dependency

<dependency>
    <groupId>org.springframework.boot</groupId>
    <artifactId>spring-boot-starter-quartz</artifactId>
</dependency>

What is Quartz?

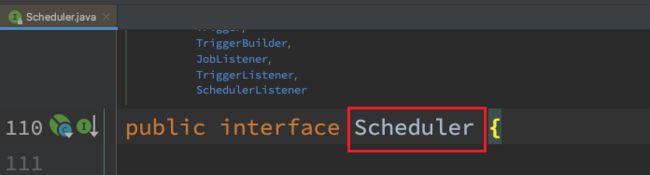

- Scheduler is Quartz's core scheduling interface; every job that Quartz schedules is invoked through it.

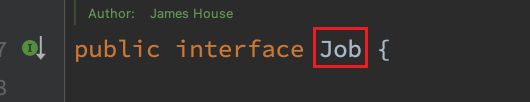

Job declares a task: implementing the Job interface defines what the task does.

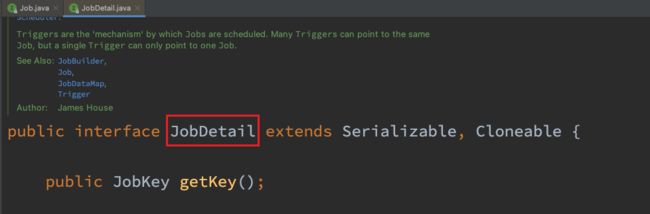

JobDetail configures the details of a Job.

Trigger configures when a Job runs and how often it repeats.

Execution flow

Using Quartz mainly involves the following:

- Define a task through the Job interface

- Configure the Job through the JobDetail and Trigger interfaces

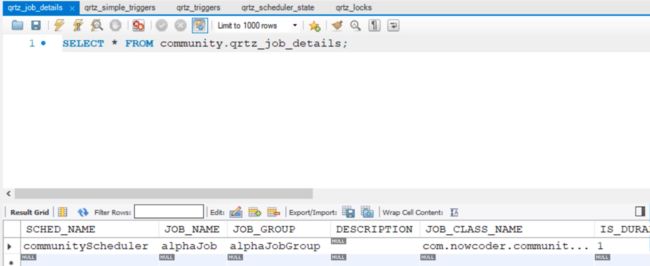

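- Once this is configured, Quartz reads the configuration automatically when the application starts and immediately stores what it read in the database (in the Quartz tables).

- From then on, jobs are run from those tables. The original configuration has no further effect; its only purpose is to initialize the data in the database tables.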

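New database tables (required by Quartz)

The tables that deserve the most attention are QRTZ_JOB_DETAILS, QRTZ_SIMPLE_TRIGGERS, QRTZ_TRIGGERS, QRTZ_SCHEDULER_STATE, and QRTZ_LOCKS:

- QRTZ_JOB_DETAILS: stores the details of each Job

- QRTZ_SIMPLE_TRIGGERS and QRTZ_TRIGGERS: store trigger information

- QRTZ_SCHEDULER_STATE: stores the state of the scheduler instances

- QRTZ_LOCKS: stores lock data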

DROP TABLE IF EXISTS QRTZ_FIRED_TRIGGERS;

DROP TABLE IF EXISTS QRTZ_PAUSED_TRIGGER_GRPS;

DROP TABLE IF EXISTS QRTZ_SCHEDULER_STATE;

DROP TABLE IF EXISTS QRTZ_LOCKS;

DROP TABLE IF EXISTS QRTZ_SIMPLE_TRIGGERS;

DROP TABLE IF EXISTS QRTZ_SIMPROP_TRIGGERS;

DROP TABLE IF EXISTS QRTZ_CRON_TRIGGERS;

DROP TABLE IF EXISTS QRTZ_BLOB_TRIGGERS;

DROP TABLE IF EXISTS QRTZ_TRIGGERS;

DROP TABLE IF EXISTS QRTZ_JOB_DETAILS;

DROP TABLE IF EXISTS QRTZ_CALENDARS;

CREATE TABLE QRTZ_JOB_DETAILS(

SCHED_NAME VARCHAR(120) NOT NULL, # Name of the scheduler

JOB_NAME VARCHAR(190) NOT NULL, # Name of the Job

JOB_GROUP VARCHAR(190) NOT NULL, # Group the Job belongs to

DESCRIPTION VARCHAR(250) NULL, # Description of the Job

JOB_CLASS_NAME VARCHAR(250) NOT NULL, # Class that implements the Job

IS_DURABLE VARCHAR(1) NOT NULL,

IS_NONCONCURRENT VARCHAR(1) NOT NULL,

IS_UPDATE_DATA VARCHAR(1) NOT NULL,

REQUESTS_RECOVERY VARCHAR(1) NOT NULL,

JOB_DATA BLOB NULL,

PRIMARY KEY (SCHED_NAME,JOB_NAME,JOB_GROUP))

ENGINE=InnoDB;

CREATE TABLE QRTZ_TRIGGERS (

SCHED_NAME VARCHAR(120) NOT NULL,

TRIGGER_NAME VARCHAR(190) NOT NULL,

TRIGGER_GROUP VARCHAR(190) NOT NULL,

JOB_NAME VARCHAR(190) NOT NULL,

JOB_GROUP VARCHAR(190) NOT NULL,

DESCRIPTION VARCHAR(250) NULL,

NEXT_FIRE_TIME BIGINT(13) NULL,

PREV_FIRE_TIME BIGINT(13) NULL,

PRIORITY INTEGER NULL,

TRIGGER_STATE VARCHAR(16) NOT NULL,

TRIGGER_TYPE VARCHAR(8) NOT NULL,

START_TIME BIGINT(13) NOT NULL,

END_TIME BIGINT(13) NULL,

CALENDAR_NAME VARCHAR(190) NULL,

MISFIRE_INSTR SMALLINT(2) NULL,

JOB_DATA BLOB NULL,

PRIMARY KEY (SCHED_NAME,TRIGGER_NAME,TRIGGER_GROUP),

FOREIGN KEY (SCHED_NAME,JOB_NAME,JOB_GROUP)

REFERENCES QRTZ_JOB_DETAILS(SCHED_NAME,JOB_NAME,JOB_GROUP))

ENGINE=InnoDB;

CREATE TABLE QRTZ_SIMPLE_TRIGGERS (

SCHED_NAME VARCHAR(120) NOT NULL,

TRIGGER_NAME VARCHAR(190) NOT NULL,

TRIGGER_GROUP VARCHAR(190) NOT NULL,

REPEAT_COUNT BIGINT(7) NOT NULL,

REPEAT_INTERVAL BIGINT(12) NOT NULL,

TIMES_TRIGGERED BIGINT(10) NOT NULL,

PRIMARY KEY (SCHED_NAME,TRIGGER_NAME,TRIGGER_GROUP),

FOREIGN KEY (SCHED_NAME,TRIGGER_NAME,TRIGGER_GROUP)

REFERENCES QRTZ_TRIGGERS(SCHED_NAME,TRIGGER_NAME,TRIGGER_GROUP))

ENGINE=InnoDB;

CREATE TABLE QRTZ_CRON_TRIGGERS (

SCHED_NAME VARCHAR(120) NOT NULL,

TRIGGER_NAME VARCHAR(190) NOT NULL,

TRIGGER_GROUP VARCHAR(190) NOT NULL,

CRON_EXPRESSION VARCHAR(120) NOT NULL,

TIME_ZONE_ID VARCHAR(80),

PRIMARY KEY (SCHED_NAME,TRIGGER_NAME,TRIGGER_GROUP),

FOREIGN KEY (SCHED_NAME,TRIGGER_NAME,TRIGGER_GROUP)

REFERENCES QRTZ_TRIGGERS(SCHED_NAME,TRIGGER_NAME,TRIGGER_GROUP))

ENGINE=InnoDB;

CREATE TABLE QRTZ_SIMPROP_TRIGGERS

(

SCHED_NAME VARCHAR(120) NOT NULL,

TRIGGER_NAME VARCHAR(190) NOT NULL,

TRIGGER_GROUP VARCHAR(190) NOT NULL,

STR_PROP_1 VARCHAR(512) NULL,

STR_PROP_2 VARCHAR(512) NULL,

STR_PROP_3 VARCHAR(512) NULL,

INT_PROP_1 INT NULL,

INT_PROP_2 INT NULL,

LONG_PROP_1 BIGINT NULL,

LONG_PROP_2 BIGINT NULL,

DEC_PROP_1 NUMERIC(13,4) NULL,

DEC_PROP_2 NUMERIC(13,4) NULL,

BOOL_PROP_1 VARCHAR(1) NULL,

BOOL_PROP_2 VARCHAR(1) NULL,

PRIMARY KEY (SCHED_NAME,TRIGGER_NAME,TRIGGER_GROUP),

FOREIGN KEY (SCHED_NAME,TRIGGER_NAME,TRIGGER_GROUP)

REFERENCES QRTZ_TRIGGERS(SCHED_NAME,TRIGGER_NAME,TRIGGER_GROUP))

ENGINE=InnoDB;

CREATE TABLE QRTZ_BLOB_TRIGGERS (

SCHED_NAME VARCHAR(120) NOT NULL,

TRIGGER_NAME VARCHAR(190) NOT NULL,

TRIGGER_GROUP VARCHAR(190) NOT NULL,

BLOB_DATA BLOB NULL,

PRIMARY KEY (SCHED_NAME,TRIGGER_NAME,TRIGGER_GROUP),

INDEX (SCHED_NAME,TRIGGER_NAME, TRIGGER_GROUP),

FOREIGN KEY (SCHED_NAME,TRIGGER_NAME,TRIGGER_GROUP)

REFERENCES QRTZ_TRIGGERS(SCHED_NAME,TRIGGER_NAME,TRIGGER_GROUP))

ENGINE=InnoDB;

CREATE TABLE QRTZ_CALENDARS (

SCHED_NAME VARCHAR(120) NOT NULL,

CALENDAR_NAME VARCHAR(190) NOT NULL,

CALENDAR BLOB NOT NULL,

PRIMARY KEY (SCHED_NAME,CALENDAR_NAME))

ENGINE=InnoDB;

CREATE TABLE QRTZ_PAUSED_TRIGGER_GRPS (

SCHED_NAME VARCHAR(120) NOT NULL,

TRIGGER_GROUP VARCHAR(190) NOT NULL,

PRIMARY KEY (SCHED_NAME,TRIGGER_GROUP))

ENGINE=InnoDB;

CREATE TABLE QRTZ_FIRED_TRIGGERS (

SCHED_NAME VARCHAR(120) NOT NULL,

ENTRY_ID VARCHAR(95) NOT NULL,

TRIGGER_NAME VARCHAR(190) NOT NULL,

TRIGGER_GROUP VARCHAR(190) NOT NULL,

INSTANCE_NAME VARCHAR(190) NOT NULL,

FIRED_TIME BIGINT(13) NOT NULL,

SCHED_TIME BIGINT(13) NOT NULL,

PRIORITY INTEGER NOT NULL,

STATE VARCHAR(16) NOT NULL,

JOB_NAME VARCHAR(190) NULL,

JOB_GROUP VARCHAR(190) NULL,

IS_NONCONCURRENT VARCHAR(1) NULL,

REQUESTS_RECOVERY VARCHAR(1) NULL,

PRIMARY KEY (SCHED_NAME,ENTRY_ID))

ENGINE=InnoDB;

CREATE TABLE QRTZ_SCHEDULER_STATE (

SCHED_NAME VARCHAR(120) NOT NULL,

INSTANCE_NAME VARCHAR(190) NOT NULL,

LAST_CHECKIN_TIME BIGINT(13) NOT NULL,

CHECKIN_INTERVAL BIGINT(13) NOT NULL,

PRIMARY KEY (SCHED_NAME,INSTANCE_NAME))

ENGINE=InnoDB;

CREATE TABLE QRTZ_LOCKS (

SCHED_NAME VARCHAR(120) NOT NULL,

LOCK_NAME VARCHAR(40) NOT NULL,

PRIMARY KEY (SCHED_NAME,LOCK_NAME))

ENGINE=InnoDB;

CREATE INDEX IDX_QRTZ_J_REQ_RECOVERY ON QRTZ_JOB_DETAILS(SCHED_NAME,REQUESTS_RECOVERY);

CREATE INDEX IDX_QRTZ_J_GRP ON QRTZ_JOB_DETAILS(SCHED_NAME,JOB_GROUP);

CREATE INDEX IDX_QRTZ_T_J ON QRTZ_TRIGGERS(SCHED_NAME,JOB_NAME,JOB_GROUP);

CREATE INDEX IDX_QRTZ_T_JG ON QRTZ_TRIGGERS(SCHED_NAME,JOB_GROUP);

CREATE INDEX IDX_QRTZ_T_C ON QRTZ_TRIGGERS(SCHED_NAME,CALENDAR_NAME);

CREATE INDEX IDX_QRTZ_T_G ON QRTZ_TRIGGERS(SCHED_NAME,TRIGGER_GROUP);

CREATE INDEX IDX_QRTZ_T_STATE ON QRTZ_TRIGGERS(SCHED_NAME,TRIGGER_STATE);

CREATE INDEX IDX_QRTZ_T_N_STATE ON QRTZ_TRIGGERS(SCHED_NAME,TRIGGER_NAME,TRIGGER_GROUP,TRIGGER_STATE);

CREATE INDEX IDX_QRTZ_T_N_G_STATE ON QRTZ_TRIGGERS(SCHED_NAME,TRIGGER_GROUP,TRIGGER_STATE);

CREATE INDEX IDX_QRTZ_T_NEXT_FIRE_TIME ON QRTZ_TRIGGERS(SCHED_NAME,NEXT_FIRE_TIME);

CREATE INDEX IDX_QRTZ_T_NFT_ST ON QRTZ_TRIGGERS(SCHED_NAME,TRIGGER_STATE,NEXT_FIRE_TIME);

CREATE INDEX IDX_QRTZ_T_NFT_MISFIRE ON QRTZ_TRIGGERS(SCHED_NAME,MISFIRE_INSTR,NEXT_FIRE_TIME);

CREATE INDEX IDX_QRTZ_T_NFT_ST_MISFIRE ON QRTZ_TRIGGERS(SCHED_NAME,MISFIRE_INSTR,NEXT_FIRE_TIME,TRIGGER_STATE);

CREATE INDEX IDX_QRTZ_T_NFT_ST_MISFIRE_GRP ON QRTZ_TRIGGERS(SCHED_NAME,MISFIRE_INSTR,NEXT_FIRE_TIME,TRIGGER_GROUP,TRIGGER_STATE);

CREATE INDEX IDX_QRTZ_FT_TRIG_INST_NAME ON QRTZ_FIRED_TRIGGERS(SCHED_NAME,INSTANCE_NAME);

CREATE INDEX IDX_QRTZ_FT_INST_JOB_REQ_RCVRY ON QRTZ_FIRED_TRIGGERS(SCHED_NAME,INSTANCE_NAME,REQUESTS_RECOVERY);

CREATE INDEX IDX_QRTZ_FT_J_G ON QRTZ_FIRED_TRIGGERS(SCHED_NAME,JOB_NAME,JOB_GROUP);

CREATE INDEX IDX_QRTZ_FT_JG ON QRTZ_FIRED_TRIGGERS(SCHED_NAME,JOB_GROUP);

CREATE INDEX IDX_QRTZ_FT_T_G ON QRTZ_FIRED_TRIGGERS(SCHED_NAME,TRIGGER_NAME,TRIGGER_GROUP);

CREATE INDEX IDX_QRTZ_FT_TG ON QRTZ_FIRED_TRIGGERS(SCHED_NAME,TRIGGER_GROUP);

commit;

Configure Quartz

spring:
  # Quartz configuration
  quartz:
    # Store jobs via JDBC
    job-store-type: jdbc
    # Name of the scheduler
    scheduler-name: communityScheduler
    properties:
      org:
        quartz:
          scheduler:
            # Generate the scheduler instance ID automatically
            instanceId: AUTO
          jobStore:
            # Class that stores jobs in the database
            class: org.springframework.scheduling.quartz.LocalDataSourceJobStore
            # JDBC driver delegate used to talk to the database
            driverDelegateClass: org.quartz.impl.jdbcjobstore.StdJDBCDelegate
            # Whether to run in clustered mode
            isClustered: true
          threadPool:
            # Thread pool implementation
            class: org.quartz.simpl.SimpleThreadPool
            # Number of threads
            threadCount: 5

Job class

/**

* Defines the job

*

* @author xiexu

* @create 2022-06-11 09:43

*/

public class AlphaJob implements Job {

@Override

public void execute(JobExecutionContext context) throws JobExecutionException {

System.out.println(Thread.currentThread().getName() + ": execute a quartz job");

}

}

Configuration class

/**

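* This configuration class is only read the first time, to initialize the data in the database.

* After that, Quartz reads jobs from the database and no longer reads this class.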

*

* @author xiexu

* @create 2022-06-11 09:44

*/

@Configuration

public class QuartzConfig {

/**

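* FactoryBean: simplifies how a bean is instantiated

* 1. Spring uses a FactoryBean to encapsulate the instantiation of a bean

* 2. The FactoryBean is registered in the Spring container

* 3. The FactoryBean is injected into other beans

* 4. Those beans receive the object instance that the FactoryBean manages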

*/

// Configure the JobDetail

@Bean

public JobDetailFactoryBean alphaJobDetail() {

JobDetailFactoryBean factoryBean = new JobDetailFactoryBean();

// Which Job class this detail manages

factoryBean.setJobClass(AlphaJob.class);

// Name of the job

factoryBean.setName("alphaJob");

// Group of the job; several jobs can belong to the same group

factoryBean.setGroup("alphaJobGroup");

// Make the job durable (it stays stored even without a trigger)

factoryBean.setDurability(true);

// Mark the job as recoverable

factoryBean.setRequestsRecovery(true);

return factoryBean;

}

/**

* Configure the Trigger, which depends on the JobDetail data.

* alphaTrigger(JobDetail alphaJobDetail): the parameter is the JobDetail object managed by the JobDetailFactoryBean

*

* @param alphaJobDetail

* @return

*/

@Bean

public SimpleTriggerFactoryBean alphaTrigger(JobDetail alphaJobDetail) {

SimpleTriggerFactoryBean factoryBean = new SimpleTriggerFactoryBean();

factoryBean.setJobDetail(alphaJobDetail);

// Name of the trigger

factoryBean.setName("alphaTrigger");

// Group of the trigger

factoryBean.setGroup("alphaTriggerGroup");

// Run the job every 3 seconds

factoryBean.setRepeatInterval(3000);

factoryBean.setJobDataMap(new JobDataMap());

return factoryBean;

}

}

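- Once the job (AlphaJob.java), the job detail (alphaJobDetail()), and the trigger (alphaTrigger()) are configured, starting the service loads the configuration automatically, and Quartz inserts rows into the database based on the job detail and the trigger.

- As soon as the database holds this data, Quartz's underlying Scheduler schedules jobs from it.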

Delete a job

@SpringBootTest

@ContextConfiguration(classes = CommunityApplication.class)

public class QuartzTests {

@Autowired

private Scheduler scheduler; // The Quartz scheduler

@Test

public void testDeleteJob() {

try {

// The first argument is the job name, the second is the group name

boolean result = scheduler.deleteJob(new JobKey("alphaJob", "alphaJobGroup"));

System.out.println(result);

} catch (SchedulerException e) {

e.printStackTrace();

}

}

}

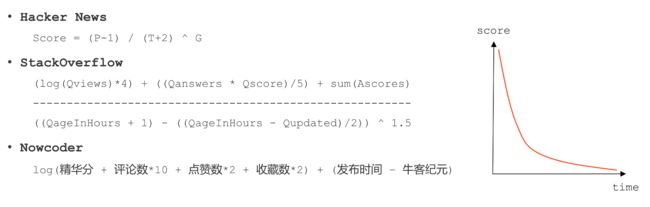

Hot Post Ranking

Define the job and configure its job detail and trigger

- PostScoreRefreshJob.java

/**

* Job that refreshes post scores

*

* @author xiexu

* @create 2022-06-11 11:49

*/

public class PostScoreRefreshJob implements Job, CommunityConstant {

private static final Logger logger = LoggerFactory.getLogger(PostScoreRefreshJob.class);

@Autowired

private RedisTemplate redisTemplate;

@Autowired

private DiscussPostService discussPostService;

@Autowired

private LikeService likeService;

// Nowcoder epoch

private static final Date epoch;

static {

try {

epoch = new SimpleDateFormat("yyyy-MM-dd HH:mm:ss").parse("2014-08-01 00:00:00");

} catch (ParseException e) {

throw new RuntimeException("初始化牛客纪元失败!");

}

}

@Override

public void execute(JobExecutionContext jobExecutionContext) throws JobExecutionException {

String redisKey = RedisKeyUtil.getPostScoreKey();

BoundSetOperations operations = redisTemplate.boundSetOps(redisKey);

if (operations.size() == 0) {

logger.info("[任务取消] 没有需要刷新的帖子!");

return;

}

logger.info("[任务开始] 正在刷新帖子分数:" + operations.size());

while (operations.size() > 0) {

this.refresh((Integer) operations.pop());

}

logger.info("[任务结束] 帖子分数刷新完毕!");

}

private void refresh(int postId) {

DiscussPost post = discussPostService.findDiscusspostById(postId);

if (post == null) {

logger.error("该帖子不存在:id = " + postId);

return;

}

// Whether the post is featured

boolean wonderful = post.getStatus() == 1;

// Number of comments

int commentCount = post.getCommentCount();

// Number of likes

long likeCount = likeService.findEntityLikeCount(ENTITY_TYPE_POST, postId);

// Compute the post's weight

// wonderful ? 75 : 0 --> bonus for a featured post

// commentCount * 10 --> number of comments * 10

// likeCount * 2 --> number of likes * 2

double w = (wonderful ? 75 : 0) + commentCount * 10 + likeCount * 2;

// score = log10(weight) + days since the epoch; w is clamped to at least 1 because log10(w) is negative for w < 1

// 1000 * 3600 * 24 is the number of milliseconds in one day

double score = Math.log10(Math.max(w, 1)) + (post.getCreateTime().getTime() - epoch.getTime()) / (1000 * 3600 * 24);

// Update the post's score

discussPostService.updateScore(postId, score);

}

}
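The scoring formula used in `refresh` can be tried in isolation. The following is a minimal sketch that assumes the same constants as the job above (75 for a featured post, 10 per comment, 2 per like); the class name `PostScoreSketch`, the helper names, and the epoch value of 0 used in `main` are made up for the example.

```java
class PostScoreSketch {
    private static final long MILLIS_PER_DAY = 1000L * 3600 * 24;

    // weight = featured bonus + comments * 10 + likes * 2
    static double weight(boolean wonderful, int commentCount, long likeCount) {
        return (wonderful ? 75 : 0) + commentCount * 10 + likeCount * 2;
    }

    // score = log10(max(weight, 1)) + whole days between the epoch and the creation time
    static double score(double weight, long createMillis, long epochMillis) {
        long days = (createMillis - epochMillis) / MILLIS_PER_DAY; // integer division truncates
        return Math.log10(Math.max(weight, 1)) + days;
    }

    public static void main(String[] args) {
        long epoch = 0L;                     // assumed epoch, for illustration only
        long created = 10 * MILLIS_PER_DAY;  // a post created 10 days after the epoch

        double w = weight(true, 2, 5);       // 75 + 2 * 10 + 5 * 2
        System.out.println(w);               // prints 105.0
        System.out.println(score(w, created, epoch));
        System.out.println(score(0, created, epoch)); // prints 10.0: log10(1) is 0
    }
}
```

Because the weight enters through a logarithm while the age enters linearly in days, a post needs ten times more interaction to make up for being one day older.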

- QuartzConfig.java

/**

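* This configuration class is only read the first time, to initialize the data in the database.

* After that, Quartz reads jobs from the database and no longer reads this class.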

*

* @author xiexu

* @create 2022-06-11 09:44

*/

@Configuration

public class QuartzConfig {

/**

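* FactoryBean: simplifies how a bean is instantiated

* 1. Spring uses a FactoryBean to encapsulate the instantiation of a bean

* 2. The FactoryBean is registered in the Spring container

* 3. The FactoryBean is injected into other beans

* 4. Those beans receive the object instance that the FactoryBean manages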

*/

// Job detail for the post-score refresh job

@Bean

public JobDetailFactoryBean postScoreRefreshJobDetail() {

JobDetailFactoryBean factoryBean = new JobDetailFactoryBean();

factoryBean.setJobClass(PostScoreRefreshJob.class);

factoryBean.setName("postScoreRefreshJob");

factoryBean.setGroup("communityJobGroup");

// Make the job durable (it stays stored even without a trigger)

factoryBean.setDurability(true);

// Mark the job as recoverable

factoryBean.setRequestsRecovery(true);

return factoryBean;

}

// Trigger

@Bean

public SimpleTriggerFactoryBean postScoreRefreshTrigger(JobDetail postScoreRefreshJobDetail) {

SimpleTriggerFactoryBean factoryBean = new SimpleTriggerFactoryBean();

factoryBean.setJobDetail(postScoreRefreshJobDetail);

factoryBean.setName("postScoreRefreshTrigger");

factoryBean.setGroup("communityTriggerGroup");

// Run every 5 minutes

factoryBean.setRepeatInterval(1000 * 60 * 5);

factoryBean.setJobDataMap(new JobDataMap());

return factoryBean;

}

}

Changes to the operations that affect post popularity

public class RedisKeyUtil {

private static final String PREFIX_POST = "post";

// Post score

public static String getPostScoreKey() {

return PREFIX_POST + SPLIT + "score";

}

}

Service layer (change how the post list is displayed; add the sort-mode parameter orderMode)

- DiscussPostService.java

/**

* Updates the score of a post

*

* @param id

* @param score

* @return

*/

public int updateScore(int id, double score) {

return discussPostMapper.updateScore(id, score);

}

Controller layer

- HomeController.java

@RequestMapping(path = "/index", method = RequestMethod.GET)

public String getIndexPage(Model model, Page page, @RequestParam(name = "orderMode", defaultValue = "0") int orderMode) {

// Before the method is called, Spring MVC instantiates Model and Page and injects Page into Model,

// so Thymeleaf can access the data in the Page object directly

page.setRows(discussPostService.findDiscussPostRows(0));

page.setPath("/index?orderMode=" + orderMode);

List<DiscussPost> list = discussPostService.findDiscussPosts(0, page.getOffset(), page.getLimit(), orderMode);

List<Map<String, Object>> discussPosts = new ArrayList<>();

if (list != null) {

for (DiscussPost post : list) {

Map<String, Object> map = new HashMap<>();

map.put("post", post);

User user = userService.findUserById(post.getUserId());

map.put("user", user);

// Show the number of likes on each post

long likeCount = likeService.findEntityLikeCount(ENTITY_TYPE_POST, post.getId());

map.put("likeCount", likeCount);

discussPosts.add(map);

}

}

model.addAttribute("discussPosts", discussPosts);

model.addAttribute("orderMode", orderMode);

return "/index";

}

- LikeController.java

@RequestMapping(path = "/like", method = RequestMethod.POST)

@ResponseBody

public String like(int entityType, int entityId, int entityUserId, int postId) {

User user = hostHolder.getUser();

// Like

likeService.like(user.getId(), entityType, entityId, entityUserId);

// Like count

long likeCount = likeService.findEntityLikeCount(entityType, entityId);

// Like status

int likeStatus = likeService.findEntityLikeStatus(user.getId(), entityType, entityId);

// Result to return

HashMap<String, Object> map = new HashMap<>();

map.put("likeCount", likeCount);

map.put("likeStatus", likeStatus);

// Fire a like event after liking

if (likeStatus == 1) { // Fire the event only on a like; removing a like does not fire one

Event event = new Event().setTopic(TOPIC_LIKE)

// The current user is the one who liked

.setUserId(hostHolder.getUser().getId()).setEntityType(entityType).setEntityId(entityId).setEntityUserId(entityUserId)

// Post ID

.setData("postId", postId);

// Publish the event

eventProducer.fireEvent(event);

}

// Mark the post for score recalculation

if (entityType == ENTITY_TYPE_POST) { // Only when the liked entity is a post

String redisKey = RedisKeyUtil.getPostScoreKey();

redisTemplate.opsForSet().add(redisKey, postId);

}

return CommunityUtil.getJSONString(0, null, map);

}

- DiscussPostController.java

@RequestMapping(path = "/add", method = RequestMethod.POST)

@ResponseBody

public String addDiscussPost(String title, String content) {

User user = hostHolder.getUser();

if (user == null) {

return CommunityUtil.getJSONString(403, "您还没有登录哦!");

}

DiscussPost post = new DiscussPost();

post.setUserId(user.getId());

post.setTitle(title);

post.setContent(content);

post.setCreateTime(new Date());

discussPostService.addDiscussPost(post);

// Mark the post for score recalculation

String redisKey = RedisKeyUtil.getPostScoreKey();

redisTemplate.opsForSet().add(redisKey, post.getId());

// Error cases will be handled uniformly later

return CommunityUtil.getJSONString(0, "发布成功!");

}

// Feature a post, or remove the featured flag

@RequestMapping(path = "/wonderful", method = RequestMethod.POST)

@ResponseBody

public String setWonderful(int id) {

DiscussPost discusspostById = discussPostService.findDiscusspostById(id);

// 1 = featured, 0 = normal; XOR with 1 toggles the flag: 1^1=0, 0^1=1

int status = discusspostById.getStatus() ^ 1;

discussPostService.updateStatus(id, status);

// Result to return

Map<String, Object> map = new HashMap<>();

map.put("status", status);

// Mark the post for score recalculation

String redisKey = RedisKeyUtil.getPostScoreKey();

redisTemplate.opsForSet().add(redisKey, id);

return CommunityUtil.getJSONString(0, null, map);

}

- CommentController.java

// Add a comment

@RequestMapping(path = "/add/{discussPostId}", method = RequestMethod.POST)

public String addComment(@PathVariable("discussPostId") int discussPostId, Comment comment) {

comment.setUserId(hostHolder.getUser().getId());

comment.setStatus(0);

comment.setCreateTime(new Date());

commentService.addComment(comment);

// Fire a comment event after the comment is added

Event event = new Event().setTopic(TOPIC_COMMENT)

// The current user is the one who commented

.setUserId(hostHolder.getUser().getId()).setEntityType(comment.getEntityType()).setEntityId(comment.getEntityId())

// Post ID

.setData("postId", discussPostId);

if (comment.getEntityType() == ENTITY_TYPE_POST) { // The comment targets a post

DiscussPost target = discussPostService.findDiscusspostById(comment.getEntityId());

event.setEntityUserId(target.getUserId());

// Mark the post for score recalculation

String redisKey = RedisKeyUtil.getPostScoreKey();

redisTemplate.opsForSet().add(redisKey, discussPostId);

} else if (comment.getEntityType() == ENTITY_TYPE_COMMENT) { // The comment targets another comment

Comment target = commentService.findCommentById(comment.getEntityId());

event.setEntityUserId(target.getUserId());

}

// Publish the event

eventProducer.fireEvent(event);

// Redirect to the post detail page

return "redirect:/discuss/detail/" + discussPostId;

}
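The controllers above all follow the same pattern: whenever a post is created, liked, commented on, or featured, its ID is added to a Redis set, and PostScoreRefreshJob later pops IDs from that set until it is empty. A minimal in-memory sketch of that pattern follows; a `LinkedHashSet` stands in for the Redis set (so the order is predictable here, whereas a Redis pop returns a random member), and the names `DirtyPostSetSketch`, `markDirty`, and `drain` are hypothetical.

```java
import java.util.ArrayList;
import java.util.Iterator;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Set;

class DirtyPostSetSketch {
    // Stand-in for the Redis set stored under the post-score key.
    private final Set<Integer> dirtyPostIds = new LinkedHashSet<>();

    // Called whenever something happens that changes a post's score.
    void markDirty(int postId) {
        dirtyPostIds.add(postId); // a set ignores duplicates
    }

    // Called by the scheduled job: remove IDs one by one until the set is empty.
    List<Integer> drain() {
        List<Integer> refreshed = new ArrayList<>();
        Iterator<Integer> it = dirtyPostIds.iterator();
        while (it.hasNext()) {
            refreshed.add(it.next()); // the real job would recompute the score here
            it.remove();
        }
        return refreshed;
    }

    public static void main(String[] args) {
        DirtyPostSetSketch sketch = new DirtyPostSetSketch();
        sketch.markDirty(7); // liked
        sketch.markDirty(7); // commented on: same post, still one entry
        sketch.markDirty(9); // newly created post

        System.out.println(sketch.drain()); // prints [7, 9]
        System.out.println(sketch.drain()); // prints []
    }
}
```

Using a set rather than a queue means a post that receives many likes between two runs is refreshed only once per run.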

Uploading Avatars to a Cloud Server

Add the dependency

<dependency>
    <groupId>com.qiniu</groupId>
    <artifactId>qiniu-java-sdk</artifactId>
    <version>7.10.4</version>
</dependency>

Add the configuration

# Qiniu Cloud configuration
qiniu:
  key:
    access: HbdikY5tejCMoTDKOmzgaTg3y5F-etLyRVa6F-fk
    secret: wbk5BmXve6sGoQYLJ5xLZFObt8-YEGxbsMg7LNvh
  bucket:
    header:
      name: community-xheader
      url: http://rdayh554v.hn-bkt.clouddn.com

Controller layer

@Controller

@RequestMapping("/user")

public class UserController implements CommunityConstant {

private static final Logger logger = LoggerFactory.getLogger(UserController.class);

@Value("${community.path.upload}")

private String uploadPath;

@Value("${community.path.domain}")

private String domain; // Domain name

@Value("${server.servlet.context-path}")

private String contextPath; // Application context path

@Autowired

private UserService userService;

@Autowired

private HostHolder hostHolder;

@Autowired

private LikeService likeService;

@Autowired

private FollowService followService;

@Value("${qiniu.key.access}")

private String accessKey;

@Value("${qiniu.key.secret}")

private String secretKey;

@Value("${qiniu.bucket.header.name}")

private String headerBucketName;

@Value("${qiniu.bucket.header.url}")

private String headerBucketUrl;

@LoginRequired

@RequestMapping(path = "/setting", method = RequestMethod.GET)

public String getSettingPage(Model model) {

// Generate the name of the file to upload

String fileName = CommunityUtil.generateUUID();

// Set the response body returned after the upload

StringMap policy = new StringMap();

policy.put("returnBody", CommunityUtil.getJSONString(0));

// Generate the upload token

Auth auth = Auth.create(accessKey, secretKey);

String uploadToken = auth.uploadToken(headerBucketName, fileName, 3600, policy);

model.addAttribute("uploadToken", uploadToken);

model.addAttribute("fileName", fileName);

// Go to the account settings page

return "/site/setting";

}

// Update the avatar URL stored for the user

@RequestMapping(path = "/header/url", method = RequestMethod.POST)

@ResponseBody

public String updateHeaderUrl(String fileName) {

if (StringUtils.isBlank(fileName)) {

return CommunityUtil.getJSONString(1, "文件名不能为空!");

}

// URL at which the avatar can be accessed

String url = headerBucketUrl + "/" + fileName;

userService.updateHeader(hostHolder.getUser().getId(), url);

return CommunityUtil.getJSONString(0, "成功!");

}

}

Optimizing Site Performance

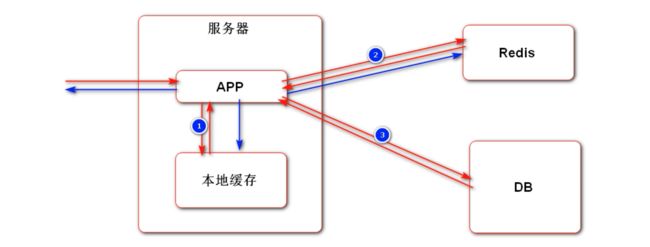

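- When a request reaches the server, the application first checks the local cache; if the data is there, it is returned to the application directly.

- If the local cache does not have the data, the application checks the second-level cache in Redis; if the data is there, it is returned directly.

- If Redis does not have the data either, the application has to query the database (DB), which looks up the data and returns it to the application.

- The application then writes the data to both the Redis cache and the local cache before returning it to the user.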
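The lookup order described above (local cache, then Redis, then the database, filling the caches on the way back) can be sketched with plain maps. This is an illustration only: the two `HashMap`s stand in for Caffeine and Redis, the `Function` stands in for a database query, and the names `TwoLevelCacheSketch` and `dbHits` are invented for the example. It is not thread-safe.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.Function;

class TwoLevelCacheSketch {
    // Plain maps stand in for the local cache and for Redis.
    private final Map<String, String> localCache = new HashMap<>();
    private final Map<String, String> redisCache = new HashMap<>();
    private final Function<String, String> database; // hypothetical database lookup
    int dbHits = 0; // counts how often the database was actually queried

    TwoLevelCacheSketch(Function<String, String> database) {
        this.database = database;
    }

    String get(String key) {
        String value = localCache.get(key);   // 1. local cache
        if (value != null) {
            return value;
        }
        value = redisCache.get(key);          // 2. second-level cache
        if (value == null) {
            value = database.apply(key);      // 3. database
            dbHits++;
            if (value != null) {
                redisCache.put(key, value);   // fill the second-level cache
            }
        }
        if (value != null) {
            localCache.put(key, value);       // fill the local cache
        }
        return value;
    }

    public static void main(String[] args) {
        TwoLevelCacheSketch cache = new TwoLevelCacheSketch(key -> "posts for " + key);
        System.out.println(cache.get("0:10"));                   // loaded from the "database"
        System.out.println(cache.get("0:10"));                   // served from the local cache
        System.out.println("database lookups: " + cache.dbHits); // prints database lookups: 1
    }
}
```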

Add the dependency

<dependency>
    <groupId>com.github.ben-manes.caffeine</groupId>
    <artifactId>caffeine</artifactId>
    <version>3.1.1</version>
</dependency>

Add the configuration

# Caffeine configuration
caffeine:
  # Name of the cache
  posts:
    # Cache at most 15 entries
    max-size: 15
    # Entries expire after 3 minutes (180 seconds)
    expire-seconds: 180

Service layer

@Service

public class DiscussPostService {

private static final Logger logger = LoggerFactory.getLogger(DiscussPostService.class);

@Autowired

private DiscussPostMapper discussPostMapper;

@Autowired

private SensitiveFilter sensitiveFilter;

@Value("${caffeine.posts.max-size}")

private int maxSize;

@Value("${caffeine.posts.expire-seconds}")

private int expireSeconds;

/**

* Caffeine's core interface is Cache.

* The two loading variants used most often are LoadingCache (synchronous) and AsyncLoadingCache (asynchronous).

*/

// Cache of post lists (value looked up by key)

private LoadingCache<String, List<DiscussPost>> postListCache;

// Cache of the total number of posts (value looked up by key)

private LoadingCache<Integer, Integer> postRowsCache;

/**

* A method annotated with @PostConstruct runs once, after Spring has constructed the bean and injected its dependencies

*/

@PostConstruct

public void init() {

// Initialize the post-list cache

postListCache = Caffeine.newBuilder()

// Maximum number of cached entries

.maximumSize(maxSize)

// Expiry time of cached entries

.expireAfterWrite(expireSeconds, TimeUnit.SECONDS)

// Loader that tells the cache how to fetch a missing entry from the database

.build(new CacheLoader<String, List<DiscussPost>>() {

@Override

public @Nullable List<DiscussPost> load(String key) throws Exception {

if (key == null || key.length() == 0) {

throw new IllegalArgumentException("参数错误!");

}

// Split the key into its two parameters

String[] params = key.split(":");

// The key must split into exactly two parameters; otherwise throw

if (params == null || params.length != 2) {

throw new IllegalArgumentException("参数错误!");

}

int offset = Integer.valueOf(params[0]);

int limit = Integer.valueOf(params[1]);

// Extension: a second-level cache could be added here (Redis -> MySQL)

// Load from the database; the result is then placed in the local cache

logger.debug("load post list from DB...");

return discussPostMapper.selectDiscussPosts(0, offset, limit, 1);

}

});

// Initialize the post-count cache

postRowsCache = Caffeine.newBuilder()

// Maximum number of cached entries

.maximumSize(maxSize)

// Expiry time of cached entries

.expireAfterWrite(expireSeconds, TimeUnit.SECONDS)

// Loader that tells the cache how to fetch a missing entry from the database

.build(new CacheLoader<Integer, Integer>() {

@Override

public @Nullable Integer load(Integer key) throws Exception {

// Load from the database; the result is then placed in the local cache

logger.debug("load post rows from DB...");

return discussPostMapper.selectDiscussPostRows(key);

}

});

}

/**

* Queries a list of posts

*

* @param userId 0 means the home-page query

* @param offset

* @param limit

* @param orderMode 1 means query the hot posts

* @return

*/

public List<DiscussPost> findDiscussPosts(int userId, int offset, int limit, int orderMode) {

// Serve from the cache only for the hot-post list on the home page

if (userId == 0 && orderMode == 1) {

return postListCache.get(offset + ":" + limit);

}

// Everything else is read directly from the database

logger.debug("load post list from DB...");

return discussPostMapper.selectDiscussPosts(userId, offset, limit, orderMode);

}

/**

* Queries the total number of rows in the post list

*

* @param userId 0 means the total for the home page

* @return

*/

public int findDiscussPostRows(int userId) {

// Serve from the cache only for the home-page query

if (userId == 0) {

return postRowsCache.get(userId);

}

// Everything else is read directly from the database

logger.debug("load post rows from DB...");

return discussPostMapper.selectDiscussPostRows(userId);

}

}
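The post-list cache identifies each page by a composite key of the form `offset + ":" + limit`, which the loader splits back into two integers. A small standalone sketch of building and validating such a key is shown here; the class `PageKeySketch` and its methods `toKey` and `parseKey` are hypothetical helpers, not part of the service above.

```java
class PageKeySketch {
    // Builds the composite cache key for one page of results.
    static String toKey(int offset, int limit) {
        return offset + ":" + limit;
    }

    // Splits a key back into {offset, limit}, rejecting anything malformed.
    static int[] parseKey(String key) {
        if (key == null || key.isEmpty()) {
            throw new IllegalArgumentException("key must not be empty");
        }
        String[] params = key.split(":");
        if (params.length != 2) {
            throw new IllegalArgumentException("expected offset:limit but got " + key);
        }
        // Integer.parseInt throws NumberFormatException (a subclass of
        // IllegalArgumentException) when a part is not a number.
        return new int[] {Integer.parseInt(params[0]), Integer.parseInt(params[1])};
    }

    public static void main(String[] args) {
        String key = toKey(20, 10);
        int[] parts = parseKey(key);
        System.out.println(key);                       // prints 20:10
        System.out.println(parts[0] + " " + parts[1]); // prints 20 10
    }
}
```

Note that the null and length checks are joined with `||`: with `&&`, a null key would reach `key.isEmpty()` and fail with a NullPointerException instead of the intended IllegalArgumentException.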