LinkedIn DataHub --- Lessons Learned

- ⚽⚽Passion begets persistence⚽⚽
- 1. Docker command
- 1.1 docker quickstart
- 1.2 python3 -m datahub docker nuke --keep-data
- 1.3 docker data volumes
- 2. Error
- 2.1 DPI-1047: Cannot locate a 64-bit Oracle Client library
- 2.2 Cannot cancel in the UI
- 3. Delete metadata
- 4. Oracle permission
- 5. Neo4j or Elasticsearch
- 6. Ingest metadata by json
- 6.1 Json template
- 6.2 Json yaml
- 7. Create Lineage
- 7.1 Yml template
- 7.2 Run
- 8. Ingest CSV
- 8.1 Csv Template
- 8.2 Run
- 9. Transformers
- 9.1 Simple Demo
- 10. Actions
- 10.1 Install plugin
- 10.2 Config Action
- 10.3 Run
- 10.4 Kafka topic
- 11. Data Quality
- 11.1 initial
- 11.2 connect DB
- 11.3 create expectation

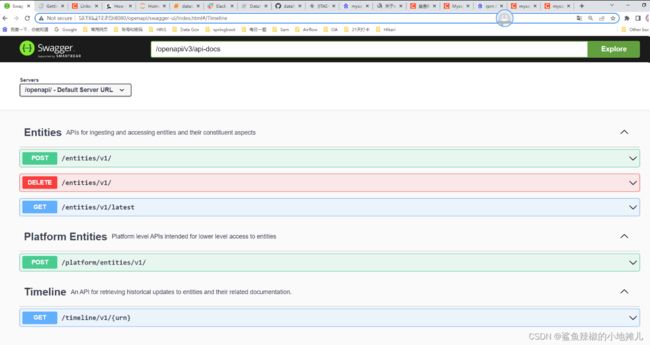

- 12. Openapi
- 12.1 Swagger
- 12.2 api test
- 13. Pending

⚽⚽Passion begets persistence⚽⚽

DataHub official docs: https://datahubproject.io/docs/.

GitHub repository: https://github.com/datahub-project/datahub.

Online demo: https://demo.datahubproject.io.

Recipe examples: https://github.com/datahub-project/datahub/tree/master/metadata-ingestion/examples/recipes.

1. Docker command

1.1 docker quickstart

1.2 python3 -m datahub docker nuke --keep-data

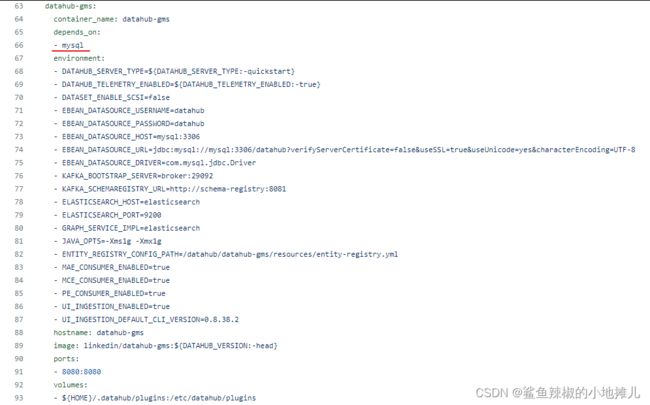

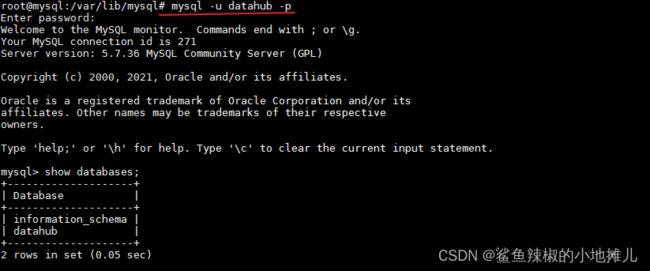

1.3 docker data volumes

2. Error

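2.1 DPI-1047: Cannot locate a 64-bit Oracle Client library

- First make sure that both the Oracle client and cx_Oracle are installed

Installation guide: https://blog.csdn.net/weixin_43916074/article/details/124827554.

2.2 Cannot cancel in the UI

- The ingestion run has already finished, but the UI keeps showing the loading spinner

No fix found so far.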

3. Delete metadata

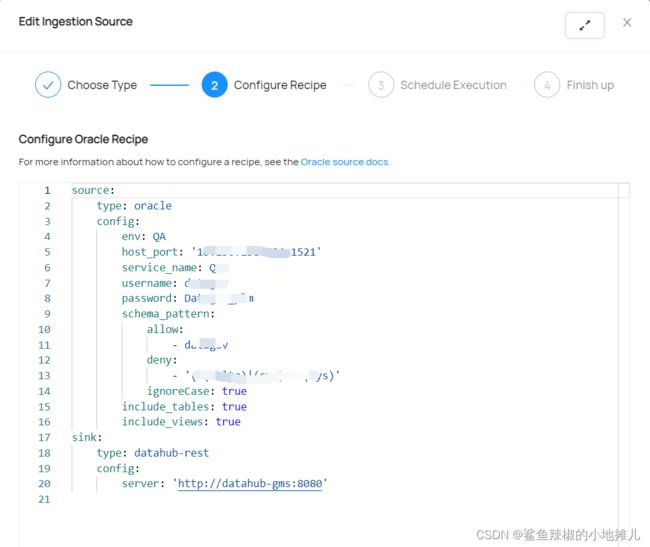

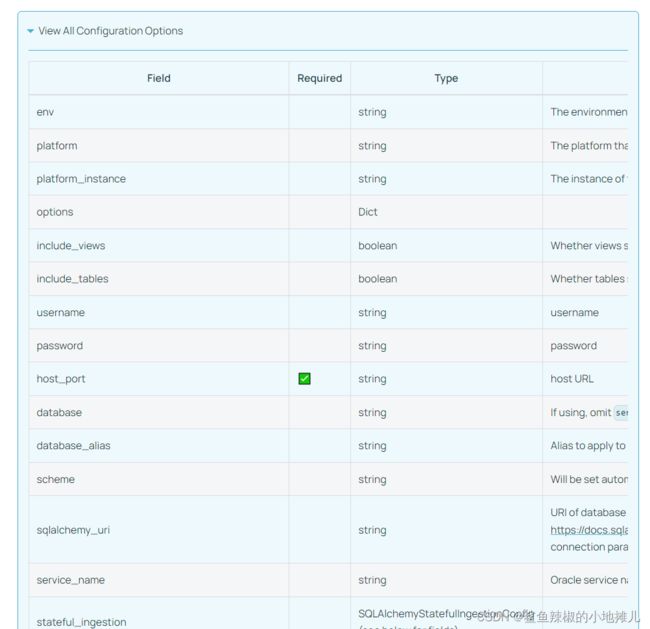

4. Oracle permission

- Restrict the Oracle account's privileges so that it can see only the tables/views under a single schema

5. Neo4j or Elasticsearch

6. Ingest metadata by json

Official reference: https://github.com/datahub-project/datahub/blob/master/metadata-ingestion/examples/demo_data/demo_data.json.

6.1 Json template

[

{

"auditHeader": null,

"proposedSnapshot": {

"com.linkedin.pegasus2avro.metadata.snapshot.DatasetSnapshot": {

"urn": "urn:li:dataset:(urn:li:dataPlatform:bigquery,bigquery-schema-data.covid19,QA)",

"aspects": [

{

"com.linkedin.pegasus2avro.schema.SchemaMetadata": {

"schemaName": "bigquery-schema-data.covid19",

"platform": "urn:li:dataPlatform:bigquery",

"version": 0,

"created": {

"time": 1621882982738,

"actor": "urn:li:corpuser:etl",

"impersonator": null

},

"lastModified": {

"time": 1621882982738,

"actor": "urn:li:corpuser:etl",

"impersonator": null

},

"deleted": null,

"dataset": null,

"cluster": null,

"hash": "",

"platformSchema": {

"com.linkedin.pegasus2avro.schema.MySqlDDL": {

"tableSchema": ""

}

},

"fields": [

{

"fieldPath": "county_code",

"jsonPath": null,

"nullable": true,

"description": null,

"type": {

"type": {

"com.linkedin.pegasus2avro.schema.StringType": {}

}

},

"nativeDataType": "String()",

"recursive": false,

"globalTags": null,

"glossaryTerms": null

},

{

"fieldPath": "county_name",

"jsonPath": null,

"nullable": true,

"description": null,

"type": {

"type": {

"com.linkedin.pegasus2avro.schema.StringType": {}

}

},

"nativeDataType": "String()",

"recursive": false,

"globalTags": null,

"glossaryTerms": null

},

{

"fieldPath": "county_number",

"jsonPath": null,

"nullable": true,

"description": null,

"type": {

"type": {

"com.linkedin.pegasus2avro.schema.NumberType": {}

}

},

"nativeDataType": "Integer()",

"recursive": false,

"globalTags": null,

"glossaryTerms": null

},

{

"fieldPath": "hospital_bed_number",

"jsonPath": null,

"nullable": true,

"description": null,

"type": {

"type": {

"com.linkedin.pegasus2avro.schema.NumberType": {}

}

},

"nativeDataType": "Integer()",

"recursive": false,

"globalTags": null,

"glossaryTerms": null

}

],

"primaryKeys": null,

"foreignKeysSpecs": null

}

}

]

}

},

"proposedDelta": null

},

{

"auditHeader": null,

"proposedSnapshot": {

"com.linkedin.pegasus2avro.metadata.snapshot.DatasetSnapshot": {

"urn": "urn:li:dataset:(urn:li:dataPlatform:bigquery,bigquery-schema-nan.covid19,QA)",

"aspects": [

{

"com.linkedin.pegasus2avro.schema.SchemaMetadata": {

"schemaName": "bigquery-schema-nan.covid19",

"platform": "urn:li:dataPlatform:bigquery",

"version": 0,

"created": {

"time": 1621882983026,

"actor": "urn:li:corpuser:etl",

"impersonator": null

},

"lastModified": {

"time": 1621882983026,

"actor": "urn:li:corpuser:etl",

"impersonator": null

},

"deleted": null,

"dataset": null,

"cluster": null,

"hash": "",

"platformSchema": {

"com.linkedin.pegasus2avro.schema.MySqlDDL": {

"tableSchema": ""

}

},

"fields": [

{

"fieldPath": "county_code",

"jsonPath": null,

"nullable": true,

"description": null,

"type": {

"type": {

"com.linkedin.pegasus2avro.schema.StringType": {}

}

},

"nativeDataType": "String()",

"recursive": false,

"globalTags": null,

"glossaryTerms": null

},

{

"fieldPath": "county_name",

"jsonPath": null,

"nullable": true,

"description": null,

"type": {

"type": {

"com.linkedin.pegasus2avro.schema.StringType": {}

}

},

"nativeDataType": "String()",

"recursive": false,

"globalTags": null,

"glossaryTerms": null

},

{

"fieldPath": "total_personnel_number",

"jsonPath": null,

"nullable": true,

"description": null,

"type": {

"type": {

"com.linkedin.pegasus2avro.schema.NumberType": {}

}

},

"nativeDataType": "Integer()",

"recursive": false,

"globalTags": null,

"glossaryTerms": null

},

{

"fieldPath": "total_hospital_number",

"jsonPath": null,

"nullable": true,

"description": null,

"type": {

"type": {

"com.linkedin.pegasus2avro.schema.NumberType": {}

}

},

"nativeDataType": "Integer()",

"recursive": false,

"globalTags": null,

"glossaryTerms": null

}

],

"primaryKeys": null,

"foreignKeysSpecs": null

}

}

]

}

},

"proposedDelta": null

}

]
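In a file this long, a typo inside a URN is easy to miss. Below is a minimal sketch (not part of DataHub) that loads events in the layout shown above and reports every snapshot whose `schemaName` does not appear inside its dataset `urn`. The function name `find_urn_mismatches` and the trimmed-down sample data are hypothetical, and "schemaName must appear in the urn" is only the convention this template follows, not a rule enforced by DataHub.

```python
import json

SNAPSHOT_KEY = "com.linkedin.pegasus2avro.metadata.snapshot.DatasetSnapshot"
SCHEMA_KEY = "com.linkedin.pegasus2avro.schema.SchemaMetadata"


def find_urn_mismatches(events):
    """Return (urn, schemaName) pairs where schemaName is not part of the urn."""
    mismatches = []
    for event in events:
        snapshot = event["proposedSnapshot"][SNAPSHOT_KEY]
        urn = snapshot["urn"]
        for aspect in snapshot["aspects"]:
            schema = aspect.get(SCHEMA_KEY)
            if schema is not None and schema["schemaName"] not in urn:
                mismatches.append((urn, schema["schemaName"]))
    return mismatches


# Hypothetical sample in the same layout as the template (most fields omitted).
sample = json.loads("""
[
  {"proposedSnapshot": {"com.linkedin.pegasus2avro.metadata.snapshot.DatasetSnapshot": {
     "urn": "urn:li:dataset:(urn:li:dataPlatform:bigquery,demo.good_table,QA)",
     "aspects": [{"com.linkedin.pegasus2avro.schema.SchemaMetadata":
                  {"schemaName": "demo.good_table"}}]}}},
  {"proposedSnapshot": {"com.linkedin.pegasus2avro.metadata.snapshot.DatasetSnapshot": {
     "urn": "urn:li:dataset:(urn:li:dataPlatform:bigquery,demo.bad_tabel,QA)",
     "aspects": [{"com.linkedin.pegasus2avro.schema.SchemaMetadata":
                  {"schemaName": "demo.bad_table"}}]}}}
]
""")

if __name__ == "__main__":
    for urn, schema_name in find_urn_mismatches(sample):
        print(f"schemaName {schema_name!r} not found in {urn}")
```

To check a real file, replace `sample` with `json.load(open("file.json"))` before ingesting it.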

6.2 Json yaml

sudo python3 -m datahub ingest -c xxx.yaml

source:
  type: file
  config:
    # Coordinates
    filename: ./xxxx/file.json

# sink configs
sink:
  type: 'datahub-rest'
  config:
    server: 'http://localhost:8080'

7. Create Lineage

Official reference: https://github.com/datahub-project/datahub/blob/master/metadata-ingestion/examples/bootstrap_data/file_lineage.yml.

7.1 Yml template

---
version: 1
lineage:
  - entity:
      name: topic3
      type: dataset
      env: DEV
      platform: kafka
    upstream:
      - entity:
          name: topic2
          type: dataset
          env: DEV
          platform: kafka
      - entity:
          name: topic1
          type: dataset
          env: DEV
          platform: kafka
  - entity:
      name: topic2
      type: dataset
      env: DEV
      platform: kafka
    upstream:
      - entity:
          name: kafka.topic2
          env: PROD
          platform: snowflake
          platform_instance: test
          type: dataset
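Each `entity` in the template ends up identified by a dataset URN of the same shape as the ones in section 6.1. The sketch below shows how the fields map onto that string. `build_dataset_urn` is a hypothetical helper written for this post, and the way `platform_instance` is prefixed to the name is my assumption about how DataHub builds these URNs, so verify it against your installed version.

```python
def build_dataset_urn(platform, name, env, platform_instance=None):
    """Build a dataset URN string from the fields of a lineage `entity`.

    Assumption: when platform_instance is set, it is prefixed to the
    dataset name with a dot. Verify this against your DataHub version.
    """
    full_name = f"{platform_instance}.{name}" if platform_instance else name
    return f"urn:li:dataset:(urn:li:dataPlatform:{platform},{full_name},{env})"


if __name__ == "__main__":
    # The downstream entity of the first lineage block.
    print(build_dataset_urn("kafka", "topic3", "DEV"))
    # The upstream entity of the second block, which has a platform_instance.
    print(build_dataset_urn("snowflake", "kafka.topic2", "PROD",
                            platform_instance="test"))
```

Searching the UI for the printed URNs after ingestion is a quick way to confirm that the lineage was attached to the datasets you intended.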

7.2 Run

sudo python3 -m datahub ingest -c xxx.yaml

source:
  type: datahub-lineage-file
  config:
    file: /path/to/file_lineage.yml
    preserve_upstream: False

# sink configs
sink:
  type: 'datahub-rest'
  config:
    server: 'http://localhost:8080'

8. Ingest CSV

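Official reference: https://datahubproject.io/docs/generated/ingestion/sources/csv.

8.1 Csv Template

Official reference: https://github.com/datahub-project/datahub/blob/master/metadata-ingestion/examples/demo_data/csv_enricher_demo_data.csv.

Notes:

- A `domain` attribute has been added

- The current version creates a new glossaryTerm, which conflicts with the term you actually want to attach; the workaround is to reference the term by its id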
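As a rough illustration of the glossaryTerm note, the sketch below builds one CSV row that references glossary terms by id (as a `urn:li:glossaryTerm:<id>` URN) instead of by display name. The column names are my assumption based on the demo CSV linked above and may differ between versions; `build_row`, `format_array`, `to_csv`, and the ids used are hypothetical.

```python
import csv
import io

# Column names assumed from the demo CSV; check them against your version.
COLUMNS = ["resource", "subresource", "glossary_terms", "tags",
           "owners", "ownership_type", "description", "domain"]


def format_array(values, array_delimiter="|"):
    """Render a list as a bracketed, delimiter-separated cell, e.g. [a|b]."""
    return "[" + array_delimiter.join(values) + "]" if values else ""


def build_row(resource, glossary_term_ids=(), domain=""):
    """Build one row that references glossary terms by id, not by name."""
    row = dict.fromkeys(COLUMNS, "")
    row["resource"] = resource
    row["glossary_terms"] = format_array(
        [f"urn:li:glossaryTerm:{term_id}" for term_id in glossary_term_ids])
    row["domain"] = domain
    return row


def to_csv(rows):
    """Serialize rows to CSV text; cells containing commas are quoted."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=COLUMNS, lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


if __name__ == "__main__":
    row = build_row(
        "urn:li:dataset:(urn:li:dataPlatform:kafka,topic1,DEV)",
        glossary_term_ids=["term-id-1", "term-id-2"],  # hypothetical ids
        domain="urn:li:domain:demo",                    # hypothetical domain
    )
    print(to_csv([row]))
```

The `|` inside the brackets matches the `array_delimiter` setting of the recipe, and the comma-containing dataset URN is quoted automatically by the `csv` module.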

8.2 Run

sudo python3 -m datahub ingest -c xxx.yaml

source:
  type: csv-enricher
  config:
    filename: /xxxx/csv_xxxx.csv
    delimiter: ','
    array_delimiter: '|'

# sink configs
sink:
  type: 'datahub-rest'
  config:
    server: 'http://localhost:8080'

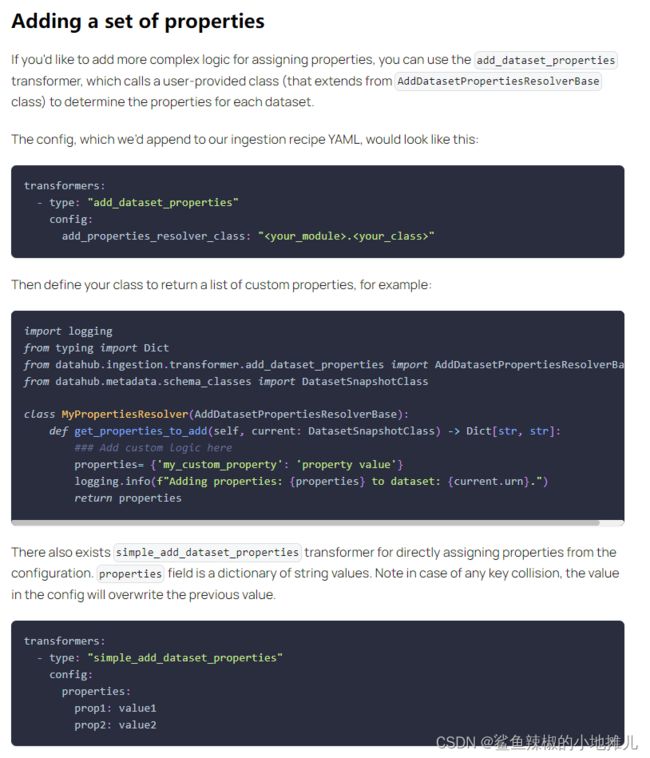

9. Transformers

Official reference: https://datahubproject.io/docs/metadata-ingestion/transformers.

9.1 Simple Demo

sudo python3 -m datahub ingest -c xxx.yaml

Use this recipe together with the JSON file from section 6:

source:
  type: file
  config:
    # Coordinates
    filename: ./xxxx/file.json

transformers:
  - type: "simple_add_dataset_properties"
    config:
      properties:
        prop1: value1

# sink configs
sink:
  type: 'datahub-rest'
  config:
    server: 'http://localhost:8080'

10. Actions

Official reference: https://datahubproject.io/docs/actions.

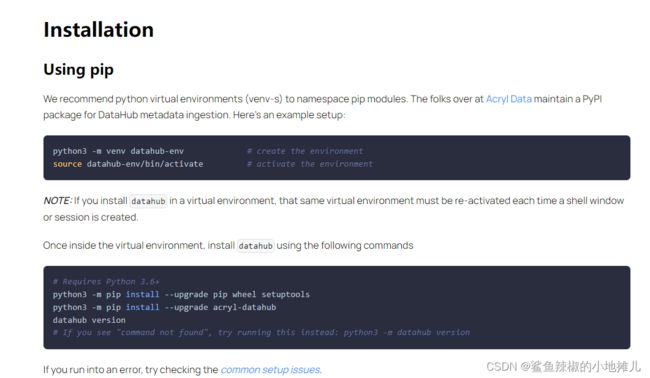

10.1 Install plugin

- Install the CLI

sudo python3 -m pip install --upgrade pip wheel setuptools

sudo python3 -m pip install --upgrade acryl-datahub

sudo datahub --version

- Install Actions

sudo python3 -m pip install --upgrade pip wheel setuptools

sudo python3 -m pip install --upgrade acryl-datahub-actions

sudo datahub actions version

10.2 Config Action

Official reference: https://datahubproject.io/docs/actions.

- Action Pipeline Name (Should be unique and static)

- Source Configurations

- Transform + Filter Configurations

- Action Configuration

- Pipeline Options (Optional)

- DataHub API configs (Optional - required for select actions)

# 1. Required: Action Pipeline Name
name: <action-pipeline-name>

# 2. Required: Event Source - Where to source event from.
source:
  type: <source-type>
  config:
    # Event Source specific configs (map)

# 3a. Optional: Filter to run on events (map)
filter:
  event_type: <filtered-event-type>
  event:
    # Filter event fields by exact-match
    <filtered-event-fields>

# 3b. Optional: Custom Transformers to run on events (array)
transform:
  - type: <transformer-type>
    config:
      # Transformer-specific configs (map)

# 4. Required: Action - What action to take on events.
action:
  type: <action-type>
  config:
    # Action-specific configs (map)

# 5. Optional: Additional pipeline options (error handling, etc)
options:
  retry_count: 0 # The number of times to retry an Action with the same event. (If an exception is thrown). 0 by default.
  failure_mode: "CONTINUE" # What to do when an event fails to be processed. Either 'CONTINUE' to make progress or 'THROW' to stop the pipeline. Either way, the failed event will be logged to a failed_events.log file.
  failed_events_dir: "/tmp/datahub/actions" # The directory in which to write a failed_events.log file that tracks events which fail to be processed. Defaults to "/tmp/logs/datahub/actions".

# 6. Optional: DataHub API configuration
datahub:
  server: "http://localhost:8080" # Location of DataHub API
  # token: <your-access-token> # Required if Metadata Service Auth enabled
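The `retry_count` and `failure_mode` comments are easier to picture as code. The sketch below is only how I read those comments, not the actual acryl-datahub-actions implementation: `process_event` is a hypothetical function, and the in-memory `failed_events` list stands in for the failed_events.log file.

```python
def process_event(event, action, retry_count=0, failure_mode="CONTINUE",
                  failed_events=None):
    """Call action(event), retrying up to retry_count extra times on exceptions.

    Returns True on success. After the final failure the event is recorded in
    failed_events; the error is then re-raised for "THROW", or swallowed
    (returning False) for "CONTINUE".
    """
    failed_events = failed_events if failed_events is not None else []
    last_error = None
    for _attempt in range(retry_count + 1):  # first try plus the retries
        try:
            action(event)
            return True
        except Exception as error:  # broad on purpose: any failure counts
            last_error = error
    failed_events.append(event)  # stand-in for writing failed_events.log
    if failure_mode == "THROW":
        raise last_error
    return False


if __name__ == "__main__":
    def always_fail(event):
        raise RuntimeError(f"cannot handle {event}")

    failed = []
    ok = process_event("event-1", always_fail, retry_count=2,
                       failure_mode="CONTINUE", failed_events=failed)
    print(ok, failed)
```

With "CONTINUE" the pipeline moves on to the next event after recording the failure; with "THROW" the same failure stops the pipeline.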

Demo provided in the official docs:

# 1. Action Pipeline Name
name: "hello_world"

# 2. Event Source: Where to source event from.
source:
  type: "kafka"
  config:
    connection:
      bootstrap: ${KAFKA_BOOTSTRAP_SERVER:-localhost:9092}
      schema_registry_url: ${SCHEMA_REGISTRY_URL:-http://localhost:8081}

# 3. Action: What action to take on events.
action:
  type: "hello_world"
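The `${NAME:-default}` placeholders in the demo follow the shell convention: use the environment variable if it is set and non-empty, otherwise fall back to the default after `:-`. Here is a minimal sketch of that convention. The `expand` function is hypothetical, and DataHub's own expansion logic may differ in edge cases.

```python
import os
import re

# Matches ${NAME} and ${NAME:-default}
_PATTERN = re.compile(
    r"\$\{(?P<name>[A-Za-z_][A-Za-z0-9_]*)(?::-(?P<default>[^}]*))?\}")


def expand(value, env=None):
    """Expand ${NAME:-default} placeholders the way a POSIX shell would."""
    env = os.environ if env is None else env

    def _replace(match):
        name, default = match.group("name"), match.group("default")
        current = env.get(name)
        if current:  # variable is set and non-empty
            return current
        return default if default is not None else ""

    return _PATTERN.sub(_replace, value)


if __name__ == "__main__":
    # Without the variable set, the default after ":-" is used.
    print(expand("${KAFKA_BOOTSTRAP_SERVER:-localhost:9092}", env={}))
    # With the variable set, its value wins.
    print(expand("${KAFKA_BOOTSTRAP_SERVER:-localhost:9092}",
                 env={"KAFKA_BOOTSTRAP_SERVER": "broker:29092"}))
```

So on a default quickstart install the demo connects to localhost:9092 and http://localhost:8081 unless the two environment variables are exported first.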

10.3 Run

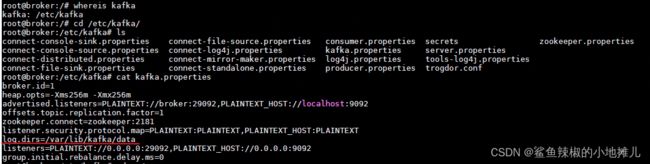

10.4 Kafka topic

- This one was really embarrassing

- Find the Kafka container

docker ps

- Enter the Kafka container

docker exec -it 58 /bin/bash

cd /etc/kafka

cat kafka.properties

- The log directory is where the data is stored

cd /var/lib/kafka/data

- It contains the topics and offsets

- List the consumer groups

cd /usr/bin

./kafka-consumer-groups --bootstrap-server localhost:9092 --list

- View the messages of a topic

./kafka-console-consumer --bootstrap-server localhost:9092 --from-beginning --topic PlatformEvent_v1

11. Data Quality

Official reference: https://datahubproject.io/docs/metadata-ingestion/integration_docs/great-expectations/.

11.1 initial

- Install GE

sudo pip3 install 'acryl-datahub[great-expectations]'

- Initialize

/usr/local/python3/bin/great_expectations init

- Check the version

/usr/local/python3/bin/great_expectations --version

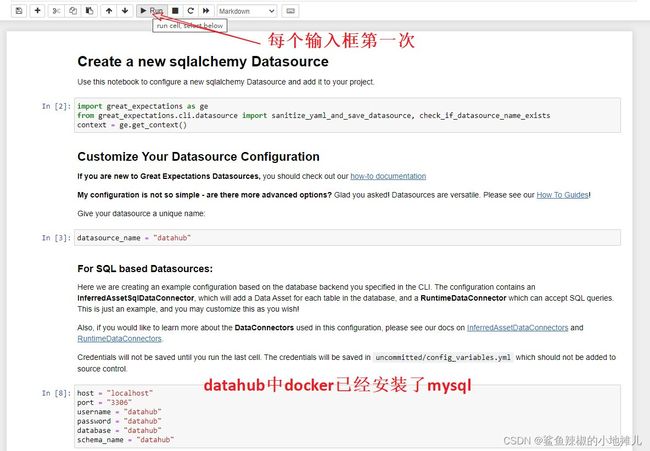

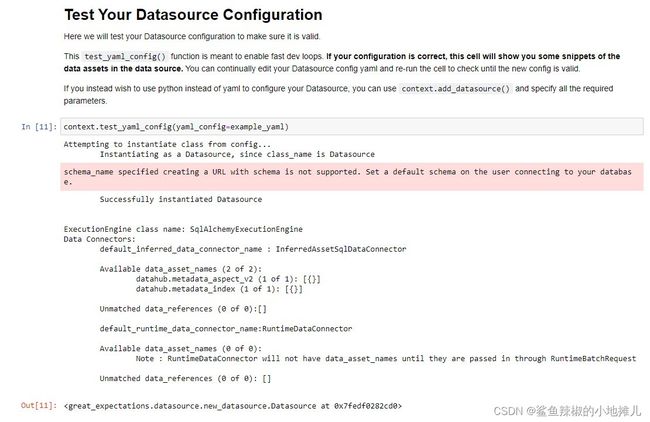

11.2 connect DB

Following the official docs, create a checkpoint and run it:

/usr/local/python3/bin/great_expectations -v checkpoint run demo_checkpoint.yaml

Unsurprisingly, it raised an error:

Could not find Checkpoint 'demo_checkpoint.yaml' (or its configuration is invalid)

/usr/local/python3/bin/great_expectations datasource new --no-jupyter

enter option 2 => I am using MySQL

enter option 2

Re-run the previous step:

/usr/local/python3/bin/great_expectations datasource new --no-jupyter

Continue as prompted:

jupyter notebook /home/os-nan.zhao/great_expectations/uncommitted/datasource_new.ipynb --allow-root --ip 0.0.0.0

Find the MySQL config in the Git repository:

docker mysql config: https://github.com/datahub-project/datahub/blob/master/docker/quickstart/docker-compose-without-neo4j.quickstart.yml.

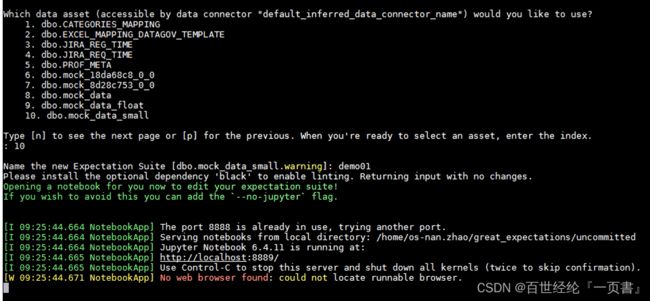

11.3 create expectation

- Open another window and continue

/usr/local/python3/bin/great_expectations suite new

- select 2

enter option 2

- Index of the table of which you want to create the suite

enter option 10

- Enter the file name

demo01

- Argh, port 8889 was not open

With DataHub it is really hard to open every required port in advance.

- Edit

/usr/local/python3/bin/great_expectations suite edit --no-jupyter

jupyter notebook /great_expectations/uncommitted/edit_.ipynb --allow-root --ip 0.0.0.0

- Run

/usr/local/python3/bin/great_expectations checkpoint new --no-jupyter

- next

jupyter notebook /great_expectations/uncommitted/edit_checkpoint_.ipynb --allow-root --ip 0.0.0.0

Great Expectations documentation: https://docs.greatexpectations.io/docs/guides/connecting_to_your_data/database/mysql.

12. Openapi

12.1 Swagger

12.2 api test

13. Pending

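Learning DataHub was both joyful and painful. The joy was picking Docker back up and going from unfamiliar, to familiar, to proficient. It was my first time installing, learning, and using from scratch a piece of software with almost no resources online. Every question had to be asked on Slack, so thanks again to the helpful community members. They really do work hard, shipping roughly three releases a month. Pity about the feature request I submitted, which still has not been implemented. Haha.

For open-source software it is really impressive: the UI is stylish and the performance is strong. But in many places the granularity is not fine enough. It supports HANA but not SAP, so in the end I went back to SAP's Information Steward.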

SAP IS website: https://www.sap.com/products/technology-platform/data-profiling-steward.html.

SAP IS document website: https://help.sap.com/docs/SAP_INFORMATION_STEWARD.