GhostNet: Network Principles and a TensorFlow 2.0 Implementation

Table of Contents

- Introduction

- Ghost Module

- Ghost Bottleneck

- GhostNet

- Code Implementation

  - 1. Implementation with classes

  - 2. Implementation with functions

Introduction

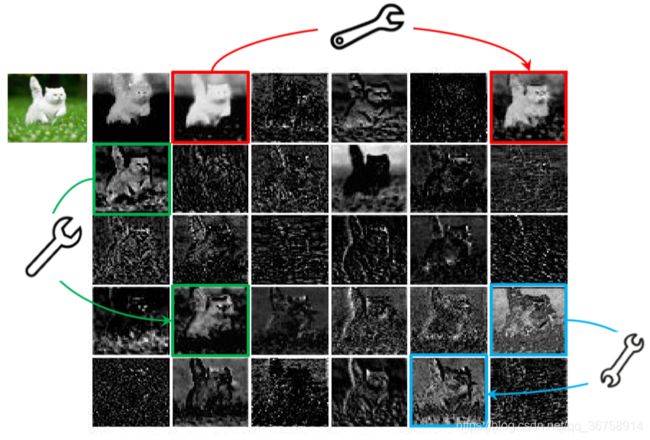

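In the paper GhostNet: More Features from Cheap Operations, the authors observe that a well-trained deep neural network usually contains many similar (redundant) feature maps, which help ensure a comprehensive understanding of the input data. The figure below visualizes feature maps output by the first residual block of ResNet-50; three example pairs of similar feature maps are annotated with boxes of the same color. Based on this observation, the authors propose the following: halve the number of convolution kernels used to produce a feature map (which halves its number of channels), apply cheap linear operations (represented by the wrench in the figure below) to the resulting feature maps to generate the missing half, and finally concatenate the two parts to obtain a feature map of the same size as the original.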
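The idea can be sketched in a few lines of NumPy. This is a minimal illustration, not the paper's code: it assumes a 1x1 primary convolution (a per-pixel matrix multiply), an odd-sized depthwise kernel as the cheap linear operation, and a ratio of 2, so half of the output channels come from the primary convolution and the other half are "ghosts". The helper names `depthwise_conv_same` and `ghost_features` are hypothetical.

```python
import numpy as np


def depthwise_conv_same(x, kernels):
    """Stride-1 depthwise convolution with zero 'same' padding.

    x: (H, W, C) feature maps; kernels: (d, d, C) with odd d, one kernel per channel.
    Like TensorFlow's convolutions, this computes a cross-correlation.
    """
    h, w, c = x.shape
    d = kernels.shape[0]
    p = d // 2
    xp = np.pad(x, ((p, p), (p, p), (0, 0)))  # zero-pad height and width only
    out = np.zeros((h, w, c), dtype=x.dtype)
    for i in range(h):
        for j in range(w):
            # Each channel is filtered only by its own kernel: no mixing across channels
            out[i, j, :] = np.sum(xp[i:i + d, j:j + d, :] * kernels, axis=(0, 1))
    return out


def ghost_features(x, primary_w, cheap_k):
    """Hypothetical Ghost-style block with ratio 2.

    primary_w: (C_in, m) weights of a 1x1 primary convolution (half the kernels).
    cheap_k:   (d, d, m) depthwise kernels, i.e. the cheap linear operation.
    Returns (H, W, 2m): the intrinsic maps followed by their ghost maps.
    """
    intrinsic = x @ primary_w                         # (H, W, m)
    ghosts = depthwise_conv_same(intrinsic, cheap_k)  # (H, W, m)
    return np.concatenate([intrinsic, ghosts], axis=-1)


rng = np.random.default_rng(0)
x = rng.standard_normal((8, 8, 16))
y = ghost_features(x, rng.standard_normal((16, 8)), rng.standard_normal((3, 3, 8)))
print(y.shape)  # (8, 8, 16)
```

With a kernel that is 1 at the center and 0 elsewhere, the ghosts would simply copy the intrinsic maps; learned kernels turn them into cheaply transformed variants instead.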

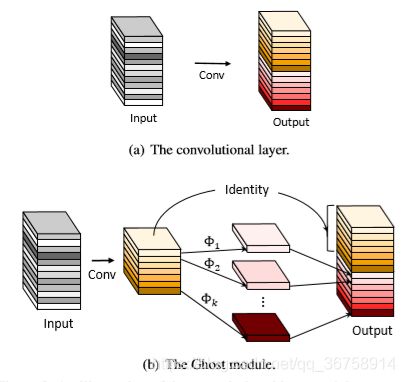

Ghost Module

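In the figure below, the first diagram shows an ordinary convolution and the second shows the Ghost module. The Ghost module first extracts feature maps with an ordinary convolution that uses half as many kernels, then passes these feature maps through a linear operation to generate new feature maps, and finally concatenates the two parts. Here, the linear operation is a 3x3 or 5x5 depthwise convolution.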
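To see why this is cheaper, one can simply count weights. The sketch below assumes a layer with `c_in` input and `c_out` output channels, a k x k primary kernel, a d x d depthwise kernel and a ratio `s` (the primary convolution produces `c_out / s` intrinsic maps, and each one yields `s - 1` ghost maps); biases are ignored and the function names are hypothetical. Since both paths keep the same spatial size, the ratio of multiply-adds per output pixel equals the ratio of weights. For d = k the saving works out to s * c_in / (s + c_in - 1), which approaches s when `c_in` is large.

```python
def conv_weights(c_in, c_out, k):
    """Weights of an ordinary k x k convolution (no bias)."""
    return k * k * c_in * c_out


def ghost_weights(c_in, c_out, k, d, s):
    """Weights of a Ghost module (no bias), assuming c_out is divisible by s."""
    m = c_out // s                # intrinsic maps from the primary conv
    primary = k * k * c_in * m    # ordinary conv with 1/s of the kernels
    cheap = d * d * m * (s - 1)   # depthwise: each intrinsic map -> s - 1 ghosts
    return primary + cheap


standard = conv_weights(64, 64, 3)
ghost = ghost_weights(64, 64, 3, 3, 2)
print(standard, ghost, round(standard / ghost, 3))  # 36864 18720 1.969
```

Almost all of the remaining cost sits in the primary convolution; the depthwise "cheap operation" adds only 288 weights here.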

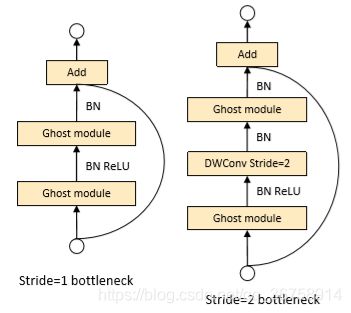

Ghost Bottleneck

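The Ghost bottleneck is similar to the basic residual block in ResNet and consists mainly of two stacked Ghost modules. The first Ghost module acts as an expansion layer that increases the number of channels; the ratio of its output channels to its input channels is called the expansion ratio. The second Ghost module reduces the number of channels to match the shortcut path. A shortcut then connects the input and the output of these two Ghost modules. Batch normalization follows each Ghost module, but ReLU is applied only after the first one. For bottlenecks with stride 2, a stride-2 depthwise convolution is inserted between the two Ghost modules. For efficiency, the primary convolution in each Ghost module is a pointwise (1x1) convolution.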
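Using the `exps` and `outs` lists from the GhostNet code in this article, the expansion ratio of every bottleneck can be computed directly. This sketch assumes that each bottleneck's input width is the previous bottleneck's output width, starting from the 16 channels of the stem convolution; `expansion_ratios` is a hypothetical helper name.

```python
# Per-bottleneck settings copied from the GhostNet code in this article
exps = [16, 48, 72, 72, 120, 240, 200, 184, 184, 480, 672, 672, 960, 960, 960, 960]
outs = [16, 24, 24, 40, 40, 80, 80, 80, 80, 112, 112, 160, 160, 160, 160, 160]


def expansion_ratios(exps, outs, stem_channels=16):
    """Return (in_channels, exp, out, exp / in_channels) for each bottleneck."""
    rows = []
    in_ch = stem_channels
    for exp, out in zip(exps, outs):
        # First Ghost module: in_ch -> exp; second Ghost module: exp -> out
        rows.append((in_ch, exp, out, exp / in_ch))
        in_ch = out  # the next bottleneck consumes this one's output
    return rows


for row in expansion_ratios(exps, outs):
    print(row)
```

Twelve of the sixteen bottlenecks expand by a factor of 3 or 6 before the second Ghost module projects the channels back down.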

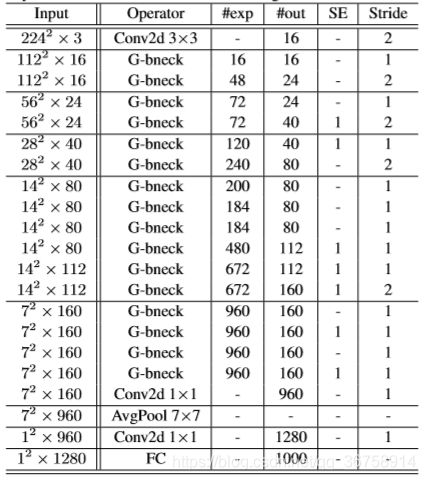

GhostNet

Code Implementation

1. Implementation with classes

```python
from math import ceil

import numpy as np
import tensorflow as tf
from tensorflow.keras.layers import (GlobalAveragePooling2D, Conv2D, Concatenate,
                                     BatchNormalization, DepthwiseConv2D, Reshape,
                                     Layer, Activation, add)


class SEModule(Layer):
    """Squeeze-and-Excitation: reweights channels using global context."""

    def __init__(self, filters, ratio):
        super(SEModule, self).__init__()
        self.pooling = GlobalAveragePooling2D()
        # (batch, C) -> (batch, 1, 1, C) so that 1x1 convolutions can be applied
        self.reshape = Reshape((1, 1, int(filters)))
        self.conv1 = Conv2D(int(filters / ratio), (1, 1), strides=(1, 1), padding='same',
                            use_bias=False, activation=None)
        self.conv2 = Conv2D(int(filters), (1, 1), strides=(1, 1), padding='same',
                            use_bias=False, activation=None)
        self.relu = Activation('relu')
        self.hard_sigmoid = Activation('hard_sigmoid')

    def call(self, inputs):
        x = self.reshape(self.pooling(inputs))
        x = self.relu(self.conv1(x))
        excitation = self.hard_sigmoid(self.conv2(x))
        # Per-channel weights broadcast over height and width
        return inputs * excitation


class GhostModule(Layer):
    """Primary conv -> ceil(out / ratio) intrinsic maps; cheap depthwise conv -> ghost maps."""

    def __init__(self, out, ratio, convkernel, dwkernel):
        super(GhostModule, self).__init__()
        self.ratio = ratio
        self.out = out
        self.conv_out_channel = ceil(self.out * 1.0 / ratio)
        self.conv = Conv2D(int(self.conv_out_channel), (convkernel, convkernel), use_bias=False,
                           strides=(1, 1), padding='same', activation=None)
        # Cheap linear operation: each intrinsic map yields (ratio - 1) ghost maps
        self.depthconv = DepthwiseConv2D(dwkernel, 1, padding='same', use_bias=False,
                                         depth_multiplier=ratio - 1, activation=None)
        self.concat = Concatenate()

    def call(self, inputs):
        x = self.conv(inputs)
        if self.ratio == 1:
            return x
        dw = self.depthconv(x)
        # Keep only as many ghost maps as needed to reach `out` channels
        dw = dw[:, :, :, :int(self.out - self.conv_out_channel)]
        return self.concat([x, dw])


class GBNeck(Layer):
    """Ghost bottleneck: Ghost (expand) -> [stride-2 depthwise] -> [SE] -> Ghost (project)."""

    def __init__(self, dwkernel, strides, exp, out, ratio, use_se):
        super(GBNeck, self).__init__()
        self.strides = strides
        self.use_se = use_se
        # Shortcut branch: depthwise conv (applies the stride) + 1x1 conv (matches channels).
        # This version always projects; the official GhostNet uses an identity shortcut
        # when strides == 1 and the input and output channel counts are equal.
        self.depthconv1 = DepthwiseConv2D(dwkernel, strides, padding='same', depth_multiplier=1,
                                          activation=None, use_bias=False)
        self.conv = Conv2D(out, (1, 1), strides=(1, 1), padding='same',
                           activation=None, use_bias=False)
        # Main branch
        self.ghost1 = GhostModule(exp, ratio, 1, 3)
        self.depthconv2 = DepthwiseConv2D(dwkernel, strides, padding='same', depth_multiplier=1,
                                          activation=None, use_bias=False)
        self.se = SEModule(exp, ratio)
        self.ghost2 = GhostModule(out, ratio, 1, 3)
        self.relu = Activation('relu')
        for i in range(5):
            setattr(self, f"batchnorm{i+1}", BatchNormalization())

    def call(self, inputs):
        x = self.batchnorm1(self.depthconv1(inputs))
        x = self.batchnorm2(self.conv(x))
        y = self.relu(self.batchnorm3(self.ghost1(inputs)))
        if self.strides > 1:
            y = self.relu(self.batchnorm4(self.depthconv2(y)))
        if self.use_se:
            y = self.se(y)
        # No ReLU after the second Ghost module
        y = self.batchnorm5(self.ghost2(y))
        return add([x, y])


class GhostNet(tf.keras.Model):
    def __init__(self, classes):
        super(GhostNet, self).__init__()
        self.classes = classes
        self.conv1 = Conv2D(16, (3, 3), strides=(2, 2), padding='same',
                            activation=None, use_bias=False)
        self.conv2 = Conv2D(960, (1, 1), strides=(1, 1), padding='same',
                            activation=None, use_bias=False)
        self.conv3 = Conv2D(1280, (1, 1), strides=(1, 1), padding='same',
                            activation=None, use_bias=False)
        self.conv4 = Conv2D(self.classes, (1, 1), strides=(1, 1), padding='same',
                            activation=None, use_bias=False)
        for i in range(3):
            setattr(self, f"batchnorm{i+1}", BatchNormalization())
        self.relu = Activation('relu')
        self.softmax = Activation('softmax')
        self.pooling = GlobalAveragePooling2D()
        self.reshape = Reshape((1, 1, 960))
        # Per-bottleneck configuration: kernel, stride, expansion size, output channels, ratio, SE
        self.dwkernels = [3, 3, 3, 5, 5, 3, 3, 3, 3, 3, 3, 5, 5, 5, 5, 5]
        self.strides = [1, 2, 1, 2, 1, 2, 1, 1, 1, 1, 1, 2, 1, 1, 1, 1]
        self.exps = [16, 48, 72, 72, 120, 240, 200, 184, 184, 480, 672, 672, 960, 960, 960, 960]
        self.outs = [16, 24, 24, 40, 40, 80, 80, 80, 80, 112, 112, 160, 160, 160, 160, 160]
        self.ratios = [2] * 16
        self.use_ses = [False, False, False, True, True, False, False, False,
                        False, True, True, True, False, True, False, True]
        for i, args in enumerate(zip(self.dwkernels, self.strides, self.exps,
                                     self.outs, self.ratios, self.use_ses)):
            setattr(self, f"gbneck{i}", GBNeck(*args))

    def call(self, inputs):
        x = self.relu(self.batchnorm1(self.conv1(inputs)))
        # Iterate through the 16 Ghost bottlenecks
        for i in range(16):
            x = getattr(self, f"gbneck{i}")(x)
        x = self.relu(self.batchnorm2(self.conv2(x)))
        x = self.reshape(self.pooling(x))
        x = self.relu(self.batchnorm3(self.conv3(x)))
        x = self.conv4(x)
        # (batch, 1, 1, classes) -> (batch, classes)
        x = tf.squeeze(x, axis=[1, 2])
        return self.softmax(x)


inputs = np.zeros((1, 224, 224, 3), np.float32)
model = GhostNet(10)
print(model(inputs).shape)  # (1, 10)
```
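The `ceil` and the slice in `GhostModule` keep the output width at exactly `out`, even when `out` is not a multiple of `ratio`. The pure-Python sketch below mirrors that bookkeeping (the name `ghost_channels` is hypothetical): the primary convolution produces `ceil(out / ratio)` maps, the depthwise convolution with `depth_multiplier = ratio - 1` produces `ratio - 1` times as many, and the slice keeps only `out - ceil(out / ratio)` of them.

```python
from math import ceil


def ghost_channels(out, ratio):
    """Mirror the channel arithmetic of GhostModule.call (for ratio > 1)."""
    primary = ceil(out / ratio)        # Conv2D filters (intrinsic maps)
    generated = primary * (ratio - 1)  # DepthwiseConv2D output channels
    kept = out - primary               # channels kept by dw[:, :, :, :out - primary]
    assert 0 <= kept <= generated      # the slice never asks for more than was generated
    return primary, generated, kept, primary + kept


print(ghost_channels(40, 2))  # (20, 20, 20, 40)
print(ghost_channels(25, 2))  # (13, 13, 12, 25)
print(ghost_channels(40, 3))  # (14, 28, 26, 40)
```

With `ratio = 2` and the even channel counts used by GhostNet, the slice is a no-op; it only matters for odd widths or larger ratios.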

2. Implementation with functions

```python
from math import ceil

import numpy as np
import tensorflow as tf
from tensorflow.keras.layers import (GlobalAveragePooling2D, Conv2D, Concatenate,
                                     BatchNormalization, DepthwiseConv2D, Reshape,
                                     Activation, Multiply, add)

inputs = np.zeros((1, 224, 224, 3), dtype=np.float32)


def SEModule(inputs, filters, ratio):
    x = GlobalAveragePooling2D()(inputs)
    x = Reshape((1, 1, int(filters)))(x)
    x = Conv2D(int(filters / ratio), (1, 1), strides=(1, 1), padding='same', use_bias=False, activation=None)(x)
    x = Activation('relu')(x)
    x = Conv2D(int(filters), (1, 1), strides=(1, 1), padding='same', use_bias=False, activation=None)(x)
    excitation = Activation('hard_sigmoid')(x)
    # Channel-wise reweighting, broadcast over height and width
    return Multiply()([inputs, excitation])


def GhostModule(inputs, out, ratio, convkernel, dwkernel):
    conv_out_channel = ceil(out * 1.0 / ratio)
    # Primary convolution: produces the intrinsic feature maps
    x = Conv2D(int(conv_out_channel), (convkernel, convkernel), use_bias=False,
               strides=(1, 1), padding='same', activation=None)(inputs)
    if ratio == 1:
        return x
    # Cheap linear operation: (ratio - 1) ghost maps per intrinsic map
    dw = DepthwiseConv2D(dwkernel, 1, padding='same', use_bias=False,
                         depth_multiplier=ratio - 1, activation=None)(x)
    # Keep only as many ghost maps as needed to reach `out` channels
    dw = dw[:, :, :, :int(out - conv_out_channel)]
    return Concatenate()([x, dw])


def GBNeck(inputs, dwkernel, strides, exp, out, ratio, use_se):
    # Shortcut branch: depthwise conv (applies the stride) + 1x1 conv (matches channels)
    x = DepthwiseConv2D(dwkernel, strides, padding='same', depth_multiplier=1,
                        activation=None, use_bias=False)(inputs)
    x = BatchNormalization()(x)
    x = Conv2D(out, (1, 1), strides=(1, 1), padding='same',
               activation=None, use_bias=False)(x)
    x = BatchNormalization()(x)
    # Main branch: expand with the first Ghost module
    y = GhostModule(inputs, exp, ratio, 1, 3)
    y = BatchNormalization()(y)
    y = Activation('relu')(y)
    if strides > 1:
        y = DepthwiseConv2D(dwkernel, strides, padding='same', depth_multiplier=1,
                            activation=None, use_bias=False)(y)
        y = BatchNormalization()(y)
        y = Activation('relu')(y)
    if use_se:
        y = SEModule(y, exp, ratio)
    # Project back down with the second Ghost module (BN, no ReLU)
    y = GhostModule(y, out, ratio, 1, 3)
    y = BatchNormalization()(y)
    return add([x, y])


dwkernels = [3, 3, 3, 5, 5, 3, 3, 3, 3, 3, 3, 5, 5, 5, 5, 5]
strides = [1, 2, 1, 2, 1, 2, 1, 1, 1, 1, 1, 2, 1, 1, 1, 1]
exps = [16, 48, 72, 72, 120, 240, 200, 184, 184, 480, 672, 672, 960, 960, 960, 960]
outs = [16, 24, 24, 40, 40, 80, 80, 80, 80, 112, 112, 160, 160, 160, 160, 160]
ratios = [2] * 16
use_ses = [False, False, False, True, True, False, False, False,
           False, True, True, True, False, True, False, True]


def GhostNet(classes):
    img_input = tf.keras.layers.Input(shape=(224, 224, 3))
    # Stem: 3x3 conv with stride 2
    x = Conv2D(16, (3, 3), strides=(2, 2), padding='same', activation=None, use_bias=False)(img_input)
    x = BatchNormalization()(x)
    x = Activation('relu')(x)
    for i in range(16):
        x = GBNeck(x, dwkernels[i], strides[i], exps[i], outs[i], ratios[i], use_ses[i])
    x = Conv2D(960, (1, 1), strides=(1, 1), padding='same', activation=None, use_bias=False)(x)
    x = BatchNormalization()(x)
    x = Activation('relu')(x)
    x = GlobalAveragePooling2D()(x)
    x = Reshape((1, 1, 960))(x)
    x = Conv2D(1280, (1, 1), strides=(1, 1), padding='same', activation=None, use_bias=False)(x)
    x = BatchNormalization()(x)
    x = Activation('relu')(x)
    x = Conv2D(classes, (1, 1), strides=(1, 1), padding='same', activation=None, use_bias=False)(x)
    # (batch, 1, 1, classes) -> (batch, classes)
    x = Reshape((classes,))(x)
    output = Activation('softmax')(x)
    return tf.keras.Model(img_input, output)


model = GhostNet(classes=10)
print(model(inputs).shape)  # (1, 10)
```
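As a quick sanity check on the `strides` list, the sketch below traces the feature-map height and width through the network for a 224x224 input. It assumes `padding='same'`, for which a stride-`s` layer maps a size `n` to `ceil(n / s)`; `trace_sizes` is a hypothetical helper.

```python
from math import ceil

# Bottleneck strides copied from the GhostNet code in this article
strides = [1, 2, 1, 2, 1, 2, 1, 1, 1, 1, 1, 2, 1, 1, 1, 1]


def trace_sizes(size=224, stem_stride=2):
    """Spatial size after the stem conv and after each Ghost bottleneck."""
    sizes = [ceil(size / stem_stride)]  # stem: 3x3 conv, stride 2
    for s in strides:
        # Only the stride-s depthwise convs change the size; 'same' padding gives ceil(n / s)
        sizes.append(ceil(sizes[-1] / s))
    return sizes


print(trace_sizes())  # [112, 112, 56, 56, 28, 28, 14, 14, 14, 14, 14, 14, 7, 7, 7, 7, 7]
```

The final 7x7x960 map is what `GlobalAveragePooling2D` reduces to a single 960-dimensional vector before the 1280-channel 1x1 convolution.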