重读《Deep Residual Learning for Image Recognition》之进一步理解残差网络的神秘(附Pytorch代码)

残差网络

- 前言:

- 一、理论相关

-

- 1.摘要(Abstract)

- 2.介绍(Introduction)

- 3.深度残差学习

-

- 3.1残差学习

- 3.2网络结构

- 4.实验结果

- 二、代码实现

-

- 1.Pytorch内置

- 2.手动实现

-

- 2.1残差块实现

- 2.2残差网实现

- 2.3代码编写注意点

- 参考:

前言:

残差网络是由来自Microsoft Research的4位学者提出的卷积神经网络,在2015年的ImageNet大规模视觉识别竞赛(ImageNet Large Scale Visual Recognition Challenge, ILSVRC)中获得了图像分类和物体识别的优胜。 残差网络的特点是容易优化,并且能够通过增加相当的深度来提高准确率。其内部的残差块使用了跳跃连接,缓解了在深度神经网络中增加深度带来的梯度消失问题。

一、理论相关

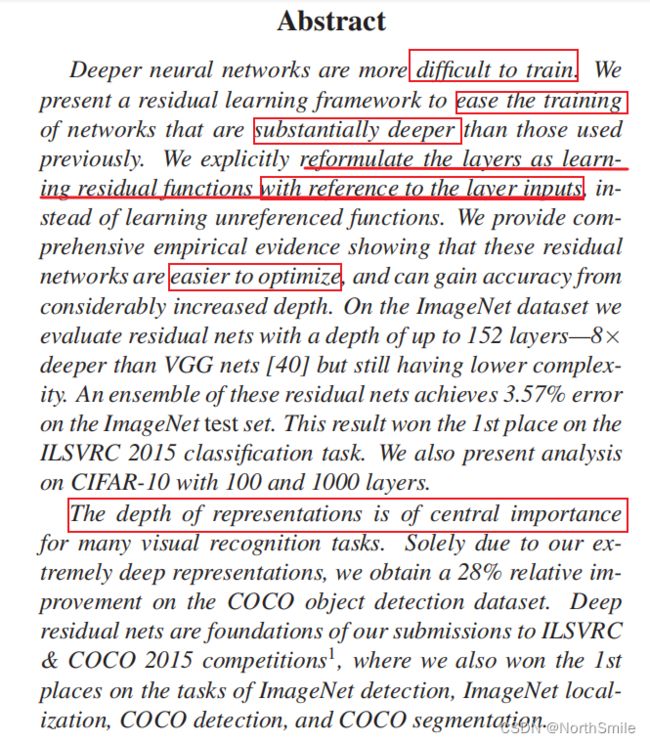

1.摘要(Abstract)

- 作者提出残差学习框架用于简化网络训练,有助于构建更深更大的神经网络;

- 将网络层视为参考层输入的残差学习函数;

- 残差网络更容易进行优化;

- 对于许多视觉识别任务,特征表示的深度是非常重要的,换句话说较深的网络可能获取到更好的性能;

2.介绍(Introduction)

图像分类任务中,分类器以一种“端到端”的方式进行网络的训练学习,通常可以很自然地将一些低级、中级以及高级视觉特征进行融合,且随着网络层的堆叠加深,其提取的特征就愈加丰富,特征级别也就更高。很多现有工作已经证明神经网络的深度对于网络性能的提升具有严重的影响。

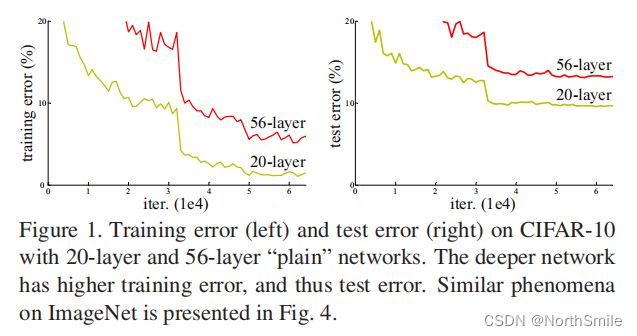

既然”网络深度“如此重要,而我们又是通过顺序堆叠网络层的方式扩展网络深度,那么很自然地会得到一个问题:学习一个更优越的网络是否像堆叠网络层一样容易呢?(Is learning better networks as easy as stacking more layers?)答案是否定的,因为不合适的网络深度将会带来梯度消失或者梯度爆炸的问题,进而影响网络的最后性能。

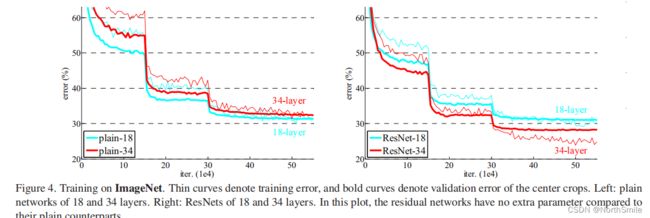

图中清晰地看到,对于两个不同深度的普通神经网络,较深网络的性能并没有得到提升,反而发生了一定的退化:左图中56层网络的训练误差更大,导致网络的分类准确率降低。这说明虽然合适的网络深度可以提升性能,但盲目堆叠网络层以扩展网络深度的方式并不可取。

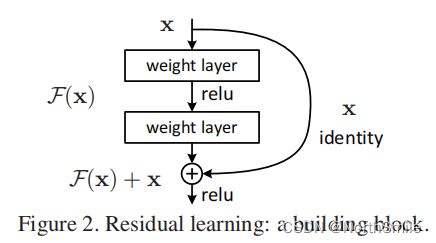

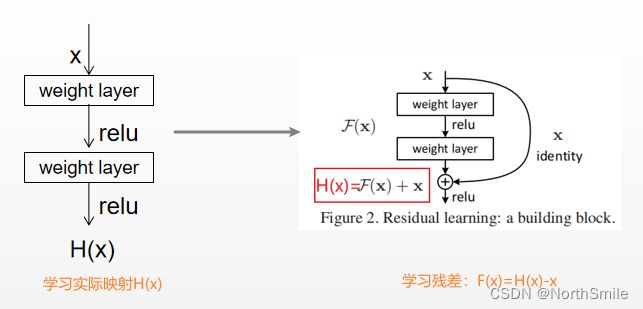

为了解决加深网络带来的性能退化问题,作者提出了残差学习框架:

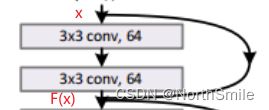

假设我们期望网络层对于输入x可以得到输出Η(x),普通网络是这么做的;现在残差学习框架转而期望学习H(x)-x的之间的差,即学习残差映射F(x),很显然地有H(x)=F(x)+x。这种学习目标之间的变化类似下图:

此时其实网络学习的残差映射是更简单更快捷的,残差块学习残差F(x),然后通过跳跃连接将输入x直接与F(x)进行相加构造实际映射H(x)。下面引用原文中的一句话:

To the extreme, if an identity mapping were optimal, it would be easier to push the residual to zero than to fit an identity mapping by a stack of nonlinear layers.

翻译:极端情况下,如果恒等映射是最优的,将残差推到0比通过堆叠一串非线性层拟合此一致性映射容易的多。

怎么理解呢?假设普通网络学习的实际映射为输入x的一个恒等映射,即H(x)=x,很明显残差F(x)=H(x)-x=0。F(x)=0难道不比弄一堆网络层学一个函数映射简单吗?

需要注意此处的残差块中通过跳跃连接传递输入x的方式为恒等映射,即不对输入做任何运算操作。这样的跳跃连接既不会引入任何额外的参数也不会增加网络的计算复杂度,其实近几年残差块已经有了很多变种。

总之,作者通过大量的实验证明了以下两点:

(1)残差网的结构更利于优化;

(2)残差网可以在扩展网络深度的同时,提高网络性能;

3.深度残差学习

3.1残差学习

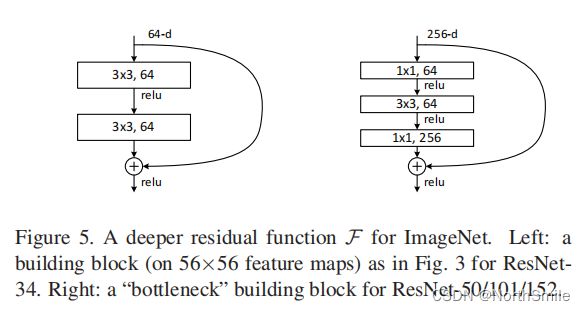

作者提出两种不同的残差块结构,完整的残差网络由数个这样的残差块堆叠构成,残差块结构如下:

(1)需要知道残差学习是借助“跳跃连接”实现的,输入x在通过残差块学习得到残差映射F(x)后,输入x通过跳跃连接直接传递到残差块的输出点,然后x与F(x)通过“元素级加法”得到实现映射H(x),用公式可表示为如下形式:

![]()

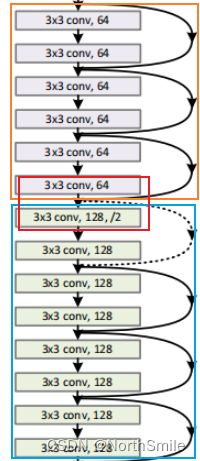

这种方式通过跳跃连接直接传递输入x,不会引入额外的参数也不会增加模块的计算复杂性,在后面的网络结构中可看到使用“实线跳跃连接”的残差块即为这种方式,此时输入x与残差F(x)具有相同的维度:

(2)通常我们不会在一整个网络中每个卷积块都使用相同数目的输出通道数,即filters的数目,但是需要注意如果我们仅增大卷积层的输出通道数则会引入成倍增加的计算复杂度,为了保持计算复杂度,我们则需要在残差块的输入层降低输入特征的维度,一般可通过设置残差块的第一个卷积层合适的卷积步长实现。但是这样还没结束,有没有发现此时残差F(x)的维度改变了呢?是的,相对于输入特征x,F(x)的所有维度都进行了改变,我们知道x与F(x)之间要进行“元素级加法”,而“元素级加法”需要x与F(x)之间维度必须一致,所以我们需要通过一定的方法让他们的维度保持一致,在本文中,作者通过在跳跃连接传递x的时候使用1×1卷积对他的维度进行改变,以使x与F(x)维度保持一致。

公式描述如下:

![]()

这种方式的目的仅仅是为了保持x与F(x)之间的维度一致,所以通常只在相邻残差块之间通道数改变时使用,绝大多数情况下仅使用第一种方式。

如上图中橙色框中所有卷积层的输出通道均为64,且输入x_orange的维度保持不变假设为(C,H,W),所以橙色框的最终输出out_orange维度为(C,H,W);蓝色框中对卷积层的通道数进行了扩展,为橙色框的二倍,所以要在蓝色块最开始的输出层处使用步长为2的卷积进行降维,得到残差F(x)维度为(C/2,H,W),此时蓝色框输入x_blue为与F(x)维度保持一致,需在虚线跳跃连接中使用1×1卷积对x_blue维度进行改变,在这里其实是使用步长为2,输出通道为128的1×1卷积实现。

3.2网络结构

1)34层残差网,网络结构如下图中右子图所示,共包含34个具有学习参数的网络层(33个卷积层+1个全连接层):

这里要说一下有两种不同的跳跃连接:

2)34层残差网的网络结构参数细节及残差网的变种,其中类似18、34这样的数字表示该网络中共有这么些需要学习参数的网络层,且将整个网络结构中的特征提取模块划分为5个子模块conv1、conv_2x等,每个子模块中的卷积层的输出拥有相同的通道数。此外,需要注意在conv_3x、conv_4x、conv_5x的第一个卷积层中需要设置卷积步长为2,进行特征下采样。

需要注意只有18层、34层残差网中使用的是两层残差块,其他的几个变种使用的是三层“瓶颈结构”残差块。

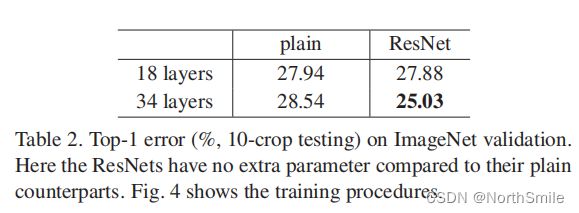

4.实验结果

这里仅展示Res-Net34在ImageNet图像分类任务中得到的实验结果:

上图呈现了普通网络以及对应的残差网络的训练、验证误差对比情况:

- 1)以普通网络为例展示了简单堆叠卷积层以扩展网络深度可能会造成性能退化,更深的网络其误差越大;

- 2)对应的残差网网络更深,误差越小;

说明本文提出的残差学习框架很好地解决了扩展网络深度带来的性能退化问题,且网络性能随着深度的增加获得了一定的提高。

二、代码实现

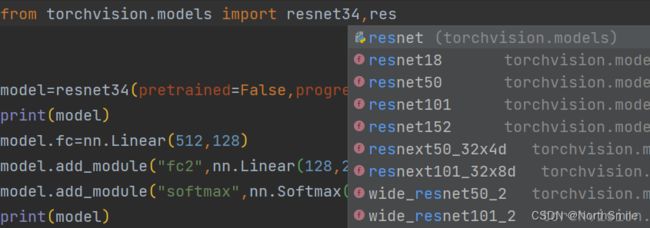

1.Pytorch内置

如果大家只是想使用resnet,或者对其进行微调,或者想利用其进行特征提取,我们可以直接通过Pytorch内置的model加载合适的resnet,比如resnet34:

model=resnet34(pretrained=False,progress=True)

在加载模型时,需要注意pretrained参数,该参数决定了是否使用已经在ImageNet数据集上预训练好的resnet:

- pretrained=False,表示只加载残差网络的网络结构;

- pretrained=True,表示加载预训练好的残差网络;

2.手动实现

2.1残差块实现

首先进行两种残差块的代码编写,这是完成残差网的基础:

1)二层残差块

'''

手动搭建残差网

'''

# 两层残差块

class ResBlock_2(nn.Module):

def __init__(self,in_channels=64,out_channels=64,stride=1,is_downsample=False):

super(ResBlock_2, self).__init__()

self.in_channels=in_channels

self.out_channels=out_channels

self.stride=stride

self.BasicBlock=nn.Sequential(

nn.Conv2d(in_channels=self.in_channels,out_channels=self.out_channels,kernel_size=3,stride=self.stride,padding=1),

nn.BatchNorm2d(self.out_channels),

nn.ReLU(),

nn.Conv2d(in_channels=self.out_channels, out_channels=self.out_channels, kernel_size=3, stride=1, padding=1),

nn.BatchNorm2d(self.out_channels),

)

self.is_downsample=is_downsample # 表示跳跃连接是否对输入x进行维度改变

self.downsample=nn.Sequential(

nn.Conv2d(in_channels=self.in_channels, out_channels=self.out_channels, kernel_size=1, stride=self.stride, padding=0),

nn.BatchNorm2d(self.out_channels),

)

def forward(self,x):

input=x

residual_x=self.BasicBlock(input)

# print(residual_x.shape)

if self.is_downsample:

x=self.downsample(input)

# print(x.shape)

return nn.ReLU()(residual_x+x) # 元素级加法

2)三层残差块

# 三层残差块

class ResBlock_3(nn.Module):

def __init__(self,in_channels=64,out_channels=[64,256],stride=1,is_downsample=False):

super(ResBlock_3, self).__init__()

self.in_channels = in_channels

self.channels1 = out_channels[0]

self.channels2 = out_channels[1]

self.stride = stride

self.BasicBlock=nn.Sequential(

nn.Conv2d(in_channels=self.in_channels,out_channels=self.channels1,kernel_size=1,stride=self.stride,padding=0),

nn.BatchNorm2d(self.channels1),

nn.ReLU(),

nn.Conv2d(in_channels=self.channels1, out_channels=self.channels1, kernel_size=3, stride=1, padding=1),

nn.BatchNorm2d(self.channels1),

nn.ReLU(),

nn.Conv2d(in_channels=self.channels1, out_channels=self.channels2, kernel_size=1, stride=1, padding=0),

nn.BatchNorm2d(self.channels2),

)

self.is_downsample=is_downsample # 表示跳跃连接是否对输入x进行维度改变

self.downsample=nn.Sequential(

nn.Conv2d(in_channels=self.in_channels, out_channels=self.channels2, kernel_size=1, stride=self.stride, padding=0),

nn.BatchNorm2d(self.channels2),

)

def forward(self, x):

input=x

residual_x=self.BasicBlock(input)

if self.is_downsample:

x=self.downsample(input)

return nn.ReLU()(residual_x+x) # 元素级加法

2.2残差网实现

1)此处以实现ResNet18和ResNet34为例,进行代码编写(因为他们使用的残差块都是二层残差块,通过设置不同的参数即可实现):

'''

搭建残差网时需要注意的几个点:

0、残差网结构:conv1->conv2_x->conv3_x->conv4_x->conv5_x->global_avg_pooling->fc(softmax);

1、说明每个block需要多少残差块组成,比如34层网络中conv2_x由3个残差块组成;

2、需要说明每个block中卷积层的输出通道数;

3、在通道数进行扩展的残差块输入层需要设置卷积步长为2;

'''

class ResNet(nn.Module):

def __init__(self,blocks_of_layers=[2,2,2,2],basic_channels=64):

super(ResNet, self).__init__()

# conv1

self.conv1=nn.Conv2d(in_channels=3,out_channels=64,kernel_size=7,stride=2,padding=3)

self.bn1=nn.BatchNorm2d(64)

self.relu1=nn.ReLU()

self.max_pool1=nn.MaxPool2d(kernel_size=3,stride=2,padding=1)

# 堆叠残差块conv2_x->conv3_x->conv4_x->conv5_x

self.blocks_of_layers=blocks_of_layers

self.channels = basic_channels

self.layers_name=['layer1','layer2','layer3','layer4',]

self.blocks_group=nn.Sequential()

for i in range(len(self.blocks_of_layers)):

if i==0:

layer=self.get_layer(self.blocks_of_layers[i], self.channels,self.channels,stride=1)

else:

layer = self.get_layer(blocks_of_layers[i], int(self.channels * math.pow(2, i - 1)),

int(self.channels * math.pow(2, i)), stride=2, is_downsample=True)

self.blocks_group.add_module(name=self.layers_name[i],module=layer)

# global_avg_pooling->fc(softmax)

self.fc_infeatures=int(self.channels*math.pow(2,i))

self.avg_pool=nn.AvgPool2d(kernel_size=7)

self.flatten=nn.Flatten()

self.fc=nn.Linear(self.fc_infeatures,1000)

def forward(self,x):

x=self.conv1(x)

x = self.bn1(x)

x = self.relu1(x)

x = self.max_pool1(x)

x=self.blocks_group(x)

x=self.avg_pool(x)

x=self.flatten(x)

x=self.fc(x)

return nn.Softmax()(x)

def get_layer(self,nums,in_channels,out_channels,stride,is_downsample=False):

layers=nn.Sequential()

for i in range(nums):

if i==0:

layers.add_module("BasicBlock{}".format(i),

ResBlock_2(in_channels, out_channels, stride, is_downsample=is_downsample))

else:

layers.add_module("BasicBlock{}".format(i), ResBlock_2(out_channels, out_channels, 1))

return layers

打印的网络结构:

ResNet(

(conv1): Conv2d(3, 64, kernel_size=(7, 7), stride=(2, 2), padding=(3, 3))

(bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu1): ReLU()

(max_pool1): MaxPool2d(kernel_size=3, stride=2, padding=1, dilation=1, ceil_mode=False)

(blocks_group): Sequential(

(layer1): Sequential(

(BasicBlock0): ResBlock_2(

(BasicBlock): Sequential(

(0): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU()

(3): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(4): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

(downsample): Sequential(

(0): Conv2d(64, 64, kernel_size=(1, 1), stride=(1, 1))

(1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

(BasicBlock1): ResBlock_2(

(BasicBlock): Sequential(

(0): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU()

(3): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(4): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

(downsample): Sequential(

(0): Conv2d(64, 64, kernel_size=(1, 1), stride=(1, 1))

(1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

(BasicBlock2): ResBlock_2(

(BasicBlock): Sequential(

(0): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU()

(3): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(4): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

(downsample): Sequential(

(0): Conv2d(64, 64, kernel_size=(1, 1), stride=(1, 1))

(1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

)

(layer2): Sequential(

(BasicBlock0): ResBlock_2(

(BasicBlock): Sequential(

(0): Conv2d(64, 128, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1))

(1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU()

(3): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(4): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

(downsample): Sequential(

(0): Conv2d(64, 128, kernel_size=(1, 1), stride=(2, 2))

(1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

(BasicBlock1): ResBlock_2(

(BasicBlock): Sequential(

(0): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU()

(3): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(4): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

(downsample): Sequential(

(0): Conv2d(128, 128, kernel_size=(1, 1), stride=(1, 1))

(1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

(BasicBlock2): ResBlock_2(

(BasicBlock): Sequential(

(0): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU()

(3): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(4): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

(downsample): Sequential(

(0): Conv2d(128, 128, kernel_size=(1, 1), stride=(1, 1))

(1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

(BasicBlock3): ResBlock_2(

(BasicBlock): Sequential(

(0): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU()

(3): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(4): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

(downsample): Sequential(

(0): Conv2d(128, 128, kernel_size=(1, 1), stride=(1, 1))

(1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

)

(layer3): Sequential(

(BasicBlock0): ResBlock_2(

(BasicBlock): Sequential(

(0): Conv2d(128, 256, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1))

(1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU()

(3): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(4): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

(downsample): Sequential(

(0): Conv2d(128, 256, kernel_size=(1, 1), stride=(2, 2))

(1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

(BasicBlock1): ResBlock_2(

(BasicBlock): Sequential(

(0): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU()

(3): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(4): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

(downsample): Sequential(

(0): Conv2d(256, 256, kernel_size=(1, 1), stride=(1, 1))

(1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

(BasicBlock2): ResBlock_2(

(BasicBlock): Sequential(

(0): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU()

(3): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(4): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

(downsample): Sequential(

(0): Conv2d(256, 256, kernel_size=(1, 1), stride=(1, 1))

(1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

(BasicBlock3): ResBlock_2(

(BasicBlock): Sequential(

(0): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU()

(3): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(4): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

(downsample): Sequential(

(0): Conv2d(256, 256, kernel_size=(1, 1), stride=(1, 1))

(1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

(BasicBlock4): ResBlock_2(

(BasicBlock): Sequential(

(0): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU()

(3): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(4): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

(downsample): Sequential(

(0): Conv2d(256, 256, kernel_size=(1, 1), stride=(1, 1))

(1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

(BasicBlock5): ResBlock_2(

(BasicBlock): Sequential(

(0): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU()

(3): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(4): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

(downsample): Sequential(

(0): Conv2d(256, 256, kernel_size=(1, 1), stride=(1, 1))

(1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

)

(layer4): Sequential(

(BasicBlock0): ResBlock_2(

(BasicBlock): Sequential(

(0): Conv2d(256, 512, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1))

(1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU()

(3): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(4): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

(downsample): Sequential(

(0): Conv2d(256, 512, kernel_size=(1, 1), stride=(2, 2))

(1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

(BasicBlock1): ResBlock_2(

(BasicBlock): Sequential(

(0): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU()

(3): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(4): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

(downsample): Sequential(

(0): Conv2d(512, 512, kernel_size=(1, 1), stride=(1, 1))

(1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

(BasicBlock2): ResBlock_2(

(BasicBlock): Sequential(

(0): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU()

(3): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(4): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

(downsample): Sequential(

(0): Conv2d(512, 512, kernel_size=(1, 1), stride=(1, 1))

(1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

)

)

(avg_pool): AvgPool2d(kernel_size=7, stride=7, padding=0)

(flatten): Flatten(start_dim=1, end_dim=-1)

(fc): Linear(in_features=512, out_features=1000, bias=True)

)

2)测试案例

x=torch.randint(10,size=(1,3,224,224),dtype=torch.float32)

# net18=ResNet(blocks_of_layers=[2,2,2,2],basic_channels=64)

# out=net18(x)

net34=ResNet(blocks_of_layers=[3,4,6,3],basic_channels=64)

out=net34(x)

print(out.shape)

torch.Size([1, 1000])

可看到输出特征的形状是符合我们的预期的。

2.3代码编写注意点

1)残差网络最后是接一个全局平均池化层:global average pooling和全连接层:Linear,Pytorch内部是没有可直接调用的global average pooling函数,如何实现请参考https://blog.csdn.net/qq_43665602/article/details/126656713;

2)编写ResNet50等变种时,只需要简单的改变一下残差块的组合方式即可,且此时使用的残差块为三层“瓶颈结构”残差块;

3)需要注意在Conv_3x开始的残差组中的第一个残差块的第一个卷积层的卷积步长需要设置为2,且在“元素加法”之前需先对输入x进行下采样操作,以保证残差F(x)与输入x的维度一致;

参考:

1.《Deep Residual Learning for Image Recognition》

2.百度百科《残差网络》

声明:代码没有进行太多的注释,如有理解上的问题,可私信或者评论留言,我定会知无不言!