&3_神经网络基本操作(快速搭建 保存提取 批训练)

神经网络基本操作

- 快速搭建

- 保存提取

-

- 保存

- 提取

-

- 提取网络

- 提取参数

- 批训练

-

- BATCH_SIZE

- Optimizer优化器

快速搭建

# method 1 第一种搭建方式

class Net(torch.nn.Module):

def __init__(self, n_feature, n_hidden, n_output):

super(Net, self).__init__()

self.hidden = torch.nn.Linear(n_feature, n_hidden) # hidden layer

self.out = torch.nn.Linear(n_hidden, n_output) # output layer

def forward(self, x):

x = F.relu(self.hidden(x)) # activation function for hidden layer

x = self.out(x)

return x

net1 = Net(n_feature=2, n_hidden=10, n_output=2) # define the network

# method 2 快速搭建方式 在括号里一层一层地垒神经层 similar as method 1

net2 = torch.nn.Sequential(

torch.nn.Linear(2, 10),

torch.nn.ReLU(), # 如果有激励函数,添加在中间

torch.nn.Linear(10, 2),

)

net2 多显示了一些内容,把激励函数也一同纳入进去了, 但是 net1 中, 激励函数实际上是在 forward() 功能中才被调用的. 这也就说明了, 相比 net2, net1 的好处就是, 可以根据你的个人需要更加个性化前向传播过程, 比如(RNN).

保存提取

训练模型后,需要对模型进行保存,留到下次要用的时候直接提取直接用

保存

保存有两种方法:保存整个神经网络,只保存神经网络中的参数

def save():

# save net1 快速搭建法

net1 = torch.nn.Sequential(

torch.nn.Linear(1, 10),

torch.nn.ReLU(),

torch.nn.Linear(10, 1)

)

# 训练所有参数

optimizer = torch.optim.SGD(net1.parameters(), lr=0.5)

loss_func = torch.nn.MSELoss()

# 训练100步

for t in range(100):

prediction = net1(x)

loss = loss_func(prediction, y)

optimizer.zero_grad()

loss.backward()

optimizer.step()

# 2 ways to save the net 训练结束后保存训练参数

torch.save(net1, 'net.pkl') # A: save entire net (参数, 保存名字) 保存整个神经网络 保存计算图

torch.save(net1.state_dict(), 'net_params.pkl') # B: save only the parameters 不保留整个神经网络,只保留图里面的节点参数,速度更快

提取

两种方法恢复保存的神经网络

提取网络

通过保存的整个神经网络直接load

def restore_net():

# restore entire net1 to net2

net2 = torch.load('net.pkl')

prediction = net2(x)

提取参数

这种方式将会提取所有的参数, 然后再放到新建网络中。该方法适用于只保存了参数

def restore_params():

# restore only the parameters in net1 to net3 首先要建立和之前一样的神经网络

net3 = torch.nn.Sequential(

torch.nn.Linear(1, 10),

torch.nn.ReLU(),

torch.nn.Linear(10, 1)

)

# copy net1's parameters into net3 再把参数load进来

net3.load_state_dict(torch.load('net_params.pkl'))

prediction = net3(x)

批训练

当需要训练的数据量很大时,无法一次训练完,需要分批进行训练。

torch中数据结构DataLoader,使用它来包装自己的数据, 进行批训练

将 (numpy array 或其他) 数据形式装换成 Tensor, 然后再放进这个包装器中.

import torch

# Data是进行小批训练的途径

import torch.utils.data as Data

torch.manual_seed(1) # reproducible

# 批训练的大小,从一大批数据里抽取5个5个

BATCH_SIZE = 5

# BATCH_SIZE = 8

x = torch.linspace(1, 10, 10) # this is x data (torch tensor) 从1-10的10个点

y = torch.linspace(10, 1, 10) # this is y data (torch tensor) 从10-1的10个点

# 定义数据库 Data.TensorDataset(data_tensor = x, target_tensor = y) data_tensor训练的数据;target_tenso算误差的数据

torch_dataset = Data.TensorDataset(x, y)

# 使用loader将训练变成一小批一小批的

loader = Data.DataLoader(

dataset=torch_dataset, # torch TensorDataset format 将数据放入loader

batch_size=BATCH_SIZE, # mini batch size 定义批大小

shuffle=True, # random shuffle for training 要不要在训练的时候随机打乱数据顺序 false不打乱,true打乱

num_workers=2, # subprocesses for loading data 用几个进程提取,提高效率

)

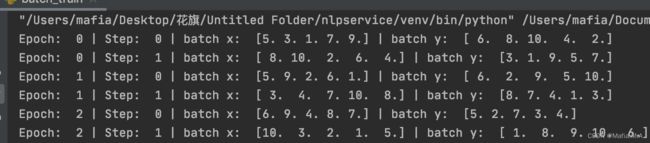

def show_batch():

# 这批数据一共有10个, 将这批数据整体训练3次

for epoch in range(3): # train entire dataset 3 times

# 每一次整体训练需要训练完所有数据,每次整体训练需要分批训练step,比如此处共10个数据,批大小为5,所以需要step=2两步才能完成一次训练

# enumerate 是loader在每一次提取的时候,施加一个step索引,第一个就是第一步,第二个就是第二步

for step, (batch_x, batch_y) in enumerate(loader): # for each training step

# train your data...

# 打印信息

print('Epoch: ', epoch, '| Step: ', step, '| batch x: ',

batch_x.numpy(), '| batch y: ', batch_y.numpy())

if __name__ == '__main__':

show_batch()

运行结果:

每步都导出了5个数据进行学习. 然后每个 epoch 的导出数据都是先打乱了以后再导出.

BATCH_SIZE

BATCH_SIZE = 8时, step=0 会导出8个数据, 但是, step=1 时数据库中的数据不够 8个,则会导出剩余的

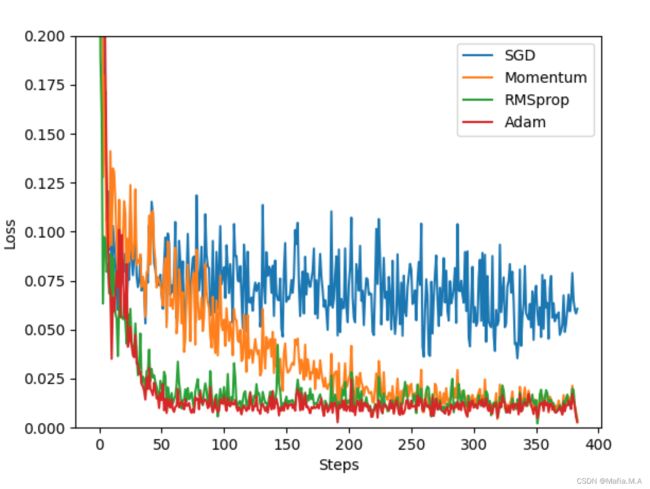

Optimizer优化器

优化器用于加快神经网络的训练

几种常见的优化器, SGD, Momentum, RMSprop, Adam.

"""

View more, visit my tutorial page: https://mofanpy.com/tutorials/

My Youtube Channel: https://www.youtube.com/user/MorvanZhou

Dependencies:

torch: 0.4

matplotlib

"""

import torch

import torch.utils.data as Data

import torch.nn.functional as F

import matplotlib

matplotlib.use('TkAgg')

import matplotlib.pyplot as plt

# torch.manual_seed(1) # reproducible

LR = 0.01

BATCH_SIZE = 32

EPOCH = 12

# fake dataset

x = torch.unsqueeze(torch.linspace(-1, 1, 1000), dim=1)

y = x.pow(2) + 0.1*torch.normal(torch.zeros(*x.size()))

# put dateset into torch dataset

torch_dataset = Data.TensorDataset(x, y)

loader = Data.DataLoader(dataset=torch_dataset, batch_size=BATCH_SIZE, shuffle=True, num_workers=2,)

# default network

class Net(torch.nn.Module):

def __init__(self):

super(Net, self).__init__()

self.hidden = torch.nn.Linear(1, 20) # hidden layer

self.predict = torch.nn.Linear(20, 1) # output layer

def forward(self, x):

x = F.relu(self.hidden(x)) # activation function for hidden layer

x = self.predict(x) # linear output

return x

if __name__ == '__main__':

# different nets

net_SGD = Net()

net_Momentum = Net()

net_RMSprop = Net()

net_Adam = Net()

# 全部放在list中

nets = [net_SGD, net_Momentum, net_RMSprop, net_Adam]

# different optimizers

opt_SGD = torch.optim.SGD(net_SGD.parameters(), lr=LR)

opt_Momentum = torch.optim.SGD(net_Momentum.parameters(), lr=LR, momentum=0.8)

opt_RMSprop = torch.optim.RMSprop(net_RMSprop.parameters(), lr=LR, alpha=0.9)

opt_Adam = torch.optim.Adam(net_Adam.parameters(), lr=LR, betas=(0.9, 0.99))

# 将优化器也放在list中

optimizers = [opt_SGD, opt_Momentum, opt_RMSprop, opt_Adam]

loss_func = torch.nn.MSELoss() # 回归误差计算公式

losses_his = [[], [], [], []] # record loss 将误差记录下来,四个项,分别记录这四个误差曲线

# training

for epoch in range(EPOCH):

print('Epoch: ', epoch)

for step, (b_x, b_y) in enumerate(loader): # for each training step

for net, opt, l_his in zip(nets, optimizers, losses_his):

output = net(b_x) # get output for every net

loss = loss_func(output, b_y) # compute loss for every net

opt.zero_grad() # clear gradients for next train

loss.backward() # backpropagation, compute gradients

opt.step() # apply gradients

l_his.append(loss.data.numpy()) # loss recoder

labels = ['SGD', 'Momentum', 'RMSprop', 'Adam']

for i, l_his in enumerate(losses_his):

plt.plot(l_his, label=labels[i])

plt.legend(loc='best')

plt.xlabel('Steps')

plt.ylabel('Loss')

plt.ylim((0, 0.2))

plt.show()