1012 Implementing a Recurrent Neural Network (RNN)

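The previous article implemented an RNN that takes a string of characters as input and predicts the text that follows, which is itself a time-series prediction problem.

Unlike a time series of numerical data, a string must first be split into tokens line by line, and each token must then be assigned an index according to its frequency. This yields the encoded text and the vocabulary (vocab) used for training or prediction. In addition, before the data is passed to the network, each token is one-hot encoded, so that every token becomes a vector.

When predicting a numerical time series, the data is already numeric, so neither token indexing nor one-hot encoding is needed. In other words, the vocab_size from the previous article equals 1. Since there is no vocab any more, the name vocab_size no longer fits, so the code below uses input_size instead.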
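To make the contrast concrete, here is a minimal sketch in plain Python (no PyTorch) of the two preprocessing paths described above. The helper names `build_vocab` and `one_hot` are illustrative and do not come from the article's code, and the alphabetical tie-break between equally frequent tokens is an assumption made only to keep the example deterministic.

```python
from collections import Counter


def build_vocab(tokens):
    """Map each token to an index, most frequent token first.

    Ties are broken alphabetically (an assumption of this sketch).
    """
    counts = Counter(tokens)
    ordered = sorted(counts, key=lambda tok: (-counts[tok], tok))
    return {tok: idx for idx, tok in enumerate(ordered)}


def one_hot(index, size):
    """Return a list of length `size` with a single 1.0 at `index`."""
    vec = [0.0] * size
    vec[index] = 1.0
    return vec


# Text path: tokens -> indices -> one-hot vectors of length len(vocab)
tokens = list("hello")
vocab = build_vocab(tokens)
encoded = [vocab[tok] for tok in tokens]
text_features = [one_hot(i, len(vocab)) for i in encoded]

# Numeric path: every value is already a feature vector of length 1
values = [0.0, 0.04, 0.08]
numeric_features = [[v] for v in values]

print(encoded)                    # indices of h, e, l, l, o
print(len(text_features[0]))      # feature size for text = vocabulary size
print(len(numeric_features[0]))   # feature size for numbers = 1
```

The per-step feature size is the vocabulary size for text and 1 for a scalar series, which is why the model below takes input_size = 1.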

First, the imports:

import math
import random
import time

import numpy as np
import torch
from IPython import display
from matplotlib import pyplot as plt
from torch import nn

from d2l import torch as d2l

Defining the data iterator

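- The functions that generate mini-batches of subsequences, seq_data_iter_random() and seq_data_iter_sequential(), are unchanged.

- SeqDataLoader now receives the data corpus (a list) from the caller at initialization, and vocab has been removed.

- load_data() was modified to match the new SeqDataLoader definition.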
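As a rough illustration of what the sequential iterator produces, the sketch below re-implements the same partitioning with plain lists on a tiny corpus. The function name `sequential_batches` is hypothetical, and the offset is fixed at 0 here (the real function draws it at random) so that the result is reproducible.

```python
def sequential_batches(corpus, batch_size, num_steps, offset=0):
    """List-based sketch of sequential partitioning (offset fixed for clarity)."""
    num_tokens = ((len(corpus) - offset - 1) // batch_size) * batch_size
    xs = corpus[offset: offset + num_tokens]
    ys = corpus[offset + 1: offset + 1 + num_tokens]  # labels: inputs shifted by one step
    row_len = num_tokens // batch_size
    # Split the flat sequence into batch_size contiguous rows
    x_rows = [xs[r * row_len:(r + 1) * row_len] for r in range(batch_size)]
    y_rows = [ys[r * row_len:(r + 1) * row_len] for r in range(batch_size)]
    num_batches = row_len // num_steps
    for i in range(0, num_steps * num_batches, num_steps):
        yield ([row[i:i + num_steps] for row in x_rows],
               [row[i:i + num_steps] for row in y_rows])


toy_corpus = [0.0, 0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8]
batches = list(sequential_batches(toy_corpus, batch_size=2, num_steps=2))
for X, Y in batches:
    print('X:', X, 'Y:', Y)
```

Each row of Y is the corresponding row of X shifted one step ahead, and consecutive batches continue where the previous one stopped. That continuity is what allows the hidden state to be carried from one batch to the next.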

# # Random sampling: yield mini-batches of subsequences, each of shape (batch_size, num_steps)
def seq_data_iter_random(corpus, batch_size, num_steps):
    """Generate mini-batches of subsequences using random sampling."""
    # Start from a random offset in [0, num_steps - 1] (randint includes both ends)
    # and drop the elements of corpus that come before it
    corpus = corpus[random.randint(0, num_steps - 1):]
    # Subtract 1 because the labels are shifted one step ahead
    num_subseqs = (len(corpus) - 1) // num_steps  # number of subsequences
    # Starting indices of the subsequences of length num_steps
    initial_indices = list(range(0, num_subseqs * num_steps, num_steps))
    # With random sampling, subsequences from two adjacent mini-batches
    # are not necessarily adjacent in the original sequence
    random.shuffle(initial_indices)

    def data(pos):
        # Return the subsequence of length num_steps starting at pos
        return corpus[pos: pos + num_steps]

    num_batches = num_subseqs // batch_size  # number of batches
    for i in range(0, batch_size * num_batches, batch_size):
        # initial_indices holds the shuffled starting indices of the subsequences
        initial_indices_per_batch = initial_indices[i: i + batch_size]  # starting indices for one batch
        X = [data(j) for j in initial_indices_per_batch]
        Y = [data(j + 1) for j in initial_indices_per_batch]
        yield torch.tensor(X), torch.tensor(Y)  # generator: one batch per iteration

# # Sequential partitioning: yield mini-batches of subsequences, each of shape (batch_size, num_steps)
def seq_data_iter_sequential(corpus, batch_size, num_steps):  # @save
    """Generate mini-batches of subsequences using sequential partitioning."""
    # Start partitioning the sequence from a random offset
    offset = random.randint(0, num_steps)
    num_tokens = ((len(corpus) - offset - 1) // batch_size) * batch_size
    Xs = torch.tensor(corpus[offset: offset + num_tokens])
    Ys = torch.tensor(corpus[offset + 1: offset + 1 + num_tokens])
    Xs, Ys = Xs.reshape(batch_size, -1), Ys.reshape(batch_size, -1)
    num_batches = Xs.shape[1] // num_steps
    for i in range(0, num_steps * num_batches, num_steps):
        X = Xs[:, i: i + num_steps]
        Y = Ys[:, i: i + num_steps]
        yield X, Y

# # SeqDataLoader: an iterator that loads sequence data
class SeqDataLoader:
    """Iterator that loads sequence data."""

    def __init__(self, corpus, batch_size, num_steps, use_random_iter):
        if use_random_iter:
            self.data_iter_fn = seq_data_iter_random
        else:
            self.data_iter_fn = seq_data_iter_sequential
        self.corpus = corpus
        self.batch_size, self.num_steps = batch_size, num_steps

    def __iter__(self):
        return self.data_iter_fn(self.corpus, self.batch_size, self.num_steps)


# # Wrap the corpus in an iterable data loader (no vocab is returned any more)
def load_data(corpus, batch_size, num_steps, use_random_iter=False):
    """Return an iterator over the dataset."""
    data_iter = SeqDataLoader(corpus, batch_size, num_steps, use_random_iter)
    return data_iter

Defining the model

- One-hot encoding has been removed, and the reshaping of X now happens outside the model.
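The reshaping in question turns a batch of shape (batch_size, num_steps) into the time-major shape (num_steps, batch_size, 1) that nn.RNN expects by default, and flattens the labels in the same time-major order. The sketch below mimics `X.T.reshape(X.shape[1], X.shape[0], -1)` and `Y.T.reshape(-1)` from train_epoch() using nested lists only. The helper names are hypothetical, and this is an illustration of the index order rather than a replacement for the tensor operations.

```python
def to_time_major(batch):
    """(batch_size, num_steps) nested list -> (num_steps, batch_size, 1)."""
    batch_size, num_steps = len(batch), len(batch[0])
    return [[[batch[b][t]] for b in range(batch_size)] for t in range(num_steps)]


def flatten_time_major(batch):
    """(batch_size, num_steps) nested list -> flat list ordered by time step first."""
    batch_size, num_steps = len(batch), len(batch[0])
    return [batch[b][t] for t in range(num_steps) for b in range(batch_size)]


X = [[1.0, 2.0, 3.0],
     [4.0, 5.0, 6.0]]   # batch_size = 2, num_steps = 3
Y = [[2.0, 3.0, 4.0],
     [5.0, 6.0, 7.0]]   # labels: X shifted one step ahead

X_tm = to_time_major(X)
y_flat = flatten_time_major(Y)
print(X_tm)
print(y_flat)
```

Because the model's output is flattened from (num_steps, batch_size, input_size), ordering the labels by time step first keeps each prediction aligned with its target.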

# # Complete RNN model; both the input and the output are tensors
class RNNModel(nn.Module):
    """Recurrent neural network model."""

    def __init__(self, num_hiddens, input_size, **kwargs):
        super(RNNModel, self).__init__(**kwargs)
        # # Define the RNN layer
        # Input shape: (num_steps, batch_size, input_size); input_size plays the role of vocab_size
        # Output shape: (num_steps, batch_size, num_hiddens)
        self.rnn = nn.RNN(input_size, num_hiddens)
        self.input_size = self.rnn.input_size
        self.num_hiddens = self.rnn.hidden_size
        # If the RNN is bidirectional (introduced later), num_directions should be 2, otherwise 1
        if not self.rnn.bidirectional:
            self.num_directions = 1
            self.linear = nn.Linear(self.num_hiddens, self.input_size)
        else:
            self.num_directions = 2
            self.linear = nn.Linear(self.num_hiddens * 2, self.input_size)

    def forward(self, inputs, state):
        # inputs has shape (num_steps, batch_size, input_size)
        # Y holds the hidden states of all time steps; state is the hidden state of the last time step
        # Y has shape (num_steps, batch_size, hidden_size); state has shape (1, batch_size, hidden_size)
        Y, state = self.rnn(inputs, state)
        # The fully connected layer first reshapes Y to (num_steps*batch_size, hidden_size);
        # its output has shape (num_steps*batch_size, input_size)
        output = self.linear(Y.reshape((-1, Y.shape[-1])))
        return output, state

    def begin_state(self, device, batch_size=1):
        if not isinstance(self.rnn, nn.LSTM):
            # nn.RNN and nn.GRU use a tensor as the hidden state
            return torch.zeros((self.num_directions * self.rnn.num_layers, batch_size, self.num_hiddens),
                               device=device)
        else:
            # nn.LSTM uses a tuple as the hidden state
            return (torch.zeros((self.num_directions * self.rnn.num_layers, batch_size, self.num_hiddens),
                                device=device),
                    torch.zeros((self.num_directions * self.rnn.num_layers, batch_size, self.num_hiddens),
                                device=device))

Prediction

- Because the data type has changed, outputs is stored differently.

# Prediction function: prefix is a list, and the returned outputs is also a list
def predict(prefix, num_preds, net, device):
    """Generate new values after prefix."""
    state = net.begin_state(batch_size=1, device=device)  # initial hidden state; batch_size=1 when predicting
    outputs = [prefix[0]]  # start with the first value of prefix
    # Use the most recent entry of outputs (the previous prediction) as the next input
    get_input = lambda: torch.tensor(outputs[-1], device=device).reshape((1, 1, 1))
    for y in prefix[1:]:  # warm-up period
        _, state = net(get_input(), state)  # feed input and hidden state to the network; update the hidden state
        outputs.append(y)  # append the true value to outputs
    for _ in range(num_preds):  # predict num_preds steps
        y, state = net(get_input(), state)  # update both the prediction and the hidden state
        outputs = outputs + y.reshape(-1).to('cpu').detach().numpy().tolist()  # append the prediction as a list
    return outputs
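The warm-up and feed-back pattern used by predict() can be sketched without a trained network. In the example below, `toy_step` is a hypothetical stand-in for `net(x, state)`: instead of learned weights it uses the exact sine recurrence x[t+1] = 2·cos(w)·x[t] − x[t−1], with the previous input as its "hidden state". Only the control flow mirrors predict(); everything else is an assumption for illustration.

```python
import math

W = 0.04  # same step as the sine series used in this article


def toy_step(x, state):
    """Hypothetical stand-in for net(x, state): returns (prediction, new_state).

    The state is simply the previous input; a sine wave satisfies
    x[t+1] = 2*cos(W)*x[t] - x[t-1], so one remembered value is enough.
    """
    prev = x if state is None else state
    return 2 * math.cos(W) * x - prev, x


def toy_predict(prefix, num_preds, step):
    state = None
    outputs = [prefix[0]]
    # Warm-up: feed the true values, discard the predictions, keep the state
    for y in prefix[1:]:
        _, state = step(outputs[-1], state)
        outputs.append(y)
    # Prediction: feed each prediction back in as the next input
    for _ in range(num_preds):
        y, state = step(outputs[-1], state)
        outputs.append(y)
    return outputs


series = [math.sin(t * W) for t in range(100)]
generated = toy_predict(series[:10], 90, toy_step)
max_err = max(abs(a - b) for a, b in zip(series, generated))
print(len(generated), max_err)
```

During warm-up the state is built from real data, so a longer prefix gives the model more context before it has to rely on its own outputs.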

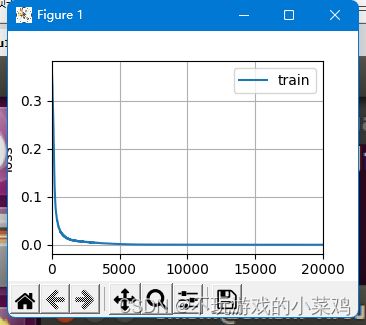

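Training

- In train_epoch(), X is reshaped before it is passed to net. Training was stable, so gradient clipping is not used.

- The loss is now computed with MSELoss(), and torch.optim.Adam is used as the optimizer.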
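train_epoch() accumulates `l * y.numel()` and `y.numel()` for each batch and divides the two sums at the end. The plain-Python sketch below, with made-up numbers, shows why this weighting recovers the mean squared error over all elements seen in the epoch, even if batches differ in size. The `mse` helper is illustrative and is not the PyTorch implementation.

```python
def mse(pred, target):
    """Mean squared error of two equal-length lists."""
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(target)


# Two made-up batches of (predictions, targets) with different sizes
batches = [
    ([0.1, 0.2, 0.3, 0.4], [0.0, 0.2, 0.5, 0.4]),
    ([1.0, 0.9], [0.8, 1.0]),
]

total_loss, total_count = 0.0, 0
for pred, target in batches:
    l = mse(pred, target)              # per-batch mean, like nn.MSELoss()
    total_loss += l * len(target)      # undo the mean before accumulating
    total_count += len(target)
epoch_loss = total_loss / total_count

# Reference: MSE computed over all elements at once
all_pred = [p for pred, _ in batches for p in pred]
all_target = [t for _, target in batches for t in target]
print(epoch_loss, mse(all_pred, all_target))
```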

# # Train the model for one epoch
def train_epoch(net, train_iter, loss, updater, device, use_random_iter):
    """Train the network for one epoch."""
    state, timer = None, Timer()
    metric = Accumulator(2)  # sum of training loss, num_steps*batch_size
    # Loop over the batches
    for X, Y in train_iter:
        # Here X and Y have shape (batch_size, num_steps)
        if state is None or use_random_iter:
            # Initialize state on the first iteration or when using random sampling
            state = net.begin_state(batch_size=X.shape[0], device=device)
        else:
            if isinstance(net, nn.Module) and not isinstance(state, tuple):
                # state is a tensor for nn.RNN and nn.GRU
                state.detach_()
            else:
                # state is a tuple of tensors for nn.LSTM or for a model implemented from scratch
                for s in state:
                    s.detach_()
        y = Y.T.reshape(-1)
        X = X.T.reshape(X.shape[1], X.shape[0], -1)  # (num_steps, batch_size, input_size)
        X, y = X.to(device), y.to(device)
        y_hat, state = net(X, state)
        l = loss(y_hat.reshape(-1), y).mean()
        if isinstance(updater, torch.optim.Optimizer):
            updater.zero_grad()
            l.backward()
            # grad_clipping(net, 1)
            updater.step()
        else:
            l.backward()
            # grad_clipping(net, 1)
            # batch_size=1 because the mean has already been taken
            updater(batch_size=1)
        metric.add(l * y.numel(), y.numel())
    return metric[0] / metric[1], metric[1] / timer.stop()

# # Train the model
def train(net, train_iter, lr, num_epochs, device, use_random_iter=False):
    """Train the model."""
    loss = nn.MSELoss()
    animator = Animator(xlabel='epoch', ylabel='loss',
                        legend=['train'], xlim=[0, num_epochs])
    # Optimizer
    if isinstance(net, nn.Module):
        # updater = torch.optim.SGD(net.parameters(), lr)
        updater = torch.optim.Adam(net.parameters(), lr)
    else:
        # For a model implemented from scratch; uses the sgd helper from d2l
        updater = lambda batch_size: d2l.sgd(net.params, lr, batch_size)
    # Train and report
    for epoch in range(num_epochs):
        print(f"\nEpoch {epoch + 1}\n-------------------------------")
        # The value returned is the mean MSE loss (not a perplexity, as in the text model)
        epoch_loss, speed = train_epoch(net, train_iter, loss, updater, device, use_random_iter)
        if (epoch + 1) % 10 == 0:
            animator.add(epoch + 1, [epoch_loss])
        print(f'loss {epoch_loss}, {speed} tokens/sec {str(device)}')

Helper utilities from the d2l library

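# # Accumulator class: sums running statistics over batches (here, the loss sum and the element count)
class Accumulator:
    """Accumulate sums over n variables."""

    def __init__(self, n):  # n is the number of columns in the accumulator
        self.data = [0.0] * n  # list * int repeats the list int times and concatenates the copies

    def add(self, *args):  # add args to data column by column
        self.data = [a + float(b) for a, b in zip(self.data, args)]

    def reset(self):  # reset all columns to zero
        self.data = [0.0] * len(self.data)

    def __getitem__(self, idx):  # index access
        return self.data[idx]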

# # Timer class: records multiple running times
class Timer:
    """Record multiple running times."""

    def __init__(self):
        """Initialize and start the timer."""
        self.tik = None
        self.times = []
        self.start()

    def start(self):
        """Start the timer."""
        self.tik = time.time()

    def stop(self):
        """Stop the timer and record the elapsed time in a list."""
        self.times.append(time.time() - self.tik)
        return self.times[-1]

    def avg(self):
        """Return the average time."""
        return sum(self.times) / len(self.times)

    def sum(self):
        """Return the total time."""
        return sum(self.times)

    def cumsum(self):
        """Return the cumulative times."""
        return np.array(self.times).cumsum().tolist()

# # Animator class: plots data incrementally as an animation
class Animator:
    """For plotting data in animation."""

    def __init__(self, xlabel=None, ylabel=None, legend=None, xlim=None,
                 ylim=None, xscale='linear', yscale='linear',
                 fmts=('-', 'm--', 'g-.', 'r:'), nrows=1, ncols=1,
                 figsize=(3.5, 2.5)):
        """Defined in :numref:`sec_softmax_scratch`"""
        # Incrementally plot multiple lines
        if legend is None:
            legend = []
        d2l.use_svg_display()
        self.fig, self.axes = d2l.plt.subplots(nrows, ncols, figsize=figsize)
        if nrows * ncols == 1:
            self.axes = [self.axes, ]
        # Use a lambda function to capture arguments
        self.config_axes = lambda: d2l.set_axes(
            self.axes[0], xlabel, ylabel, xlim, ylim, xscale, yscale, legend)
        self.X, self.Y, self.fmts = None, None, fmts

    def add(self, x, y):
        # Add multiple data points into the figure
        if not hasattr(y, "__len__"):
            y = [y]
        n = len(y)
        if not hasattr(x, "__len__"):
            x = [x] * n
        if not self.X:
            self.X = [[] for _ in range(n)]
        if not self.Y:
            self.Y = [[] for _ in range(n)]
        for i, (a, b) in enumerate(zip(x, y)):
            if a is not None and b is not None:
                self.X[i].append(a)
                self.Y[i].append(b)
        self.axes[0].cla()
        for x, y, fmt in zip(self.X, self.Y, self.fmts):
            self.axes[0].plot(x, y, fmt)
        self.config_axes()
        display.display(self.fig)
        plt.draw()
        plt.pause(0.001)
        display.clear_output(wait=True)

Generating data, training, and predicting

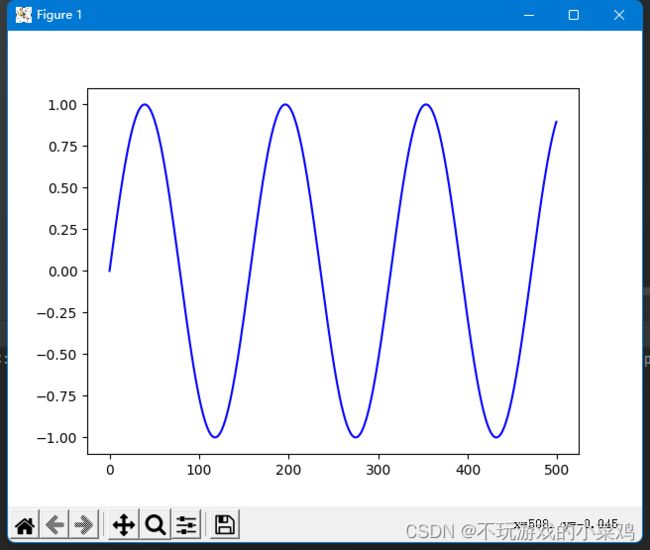

# # Generate the data
Time = list(range(0, 500))
corpus = [math.sin(x * 0.04) for x in Time]
input_size = 1
# Plot the generated data
plt.plot(corpus, 'b-')
print(len(Time))
print(len(corpus))
plt.show()

# # Define the hyperparameters
batch_size = 8
num_steps = 50
num_hiddens = 16
num_epochs = 20000
learning_rate = 0.0001
train_iter = load_data(corpus, batch_size, num_steps)
# Optional check: print the shape and dtype of every batch
# i = 0
# for X, Y in train_iter:
#     i = i + 1
#     print(i)
#     print('X: ', X.shape)
#     print(X.dtype)
#     print('Y: ', Y.shape)
#     print(Y.dtype)
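With the hyperparameters above (a 500-point corpus, batch_size = 8, num_steps = 50), it is worth checking how many batches the sequential iterator yields per epoch. The sketch below repeats only the integer arithmetic of seq_data_iter_sequential() for every possible random offset. The function name `batches_per_epoch` is hypothetical.

```python
def batches_per_epoch(corpus_len, batch_size, num_steps, offset):
    """Number of batches seq_data_iter_sequential would yield for a given offset."""
    num_tokens = ((corpus_len - offset - 1) // batch_size) * batch_size
    row_len = num_tokens // batch_size  # columns after reshape(batch_size, -1)
    return row_len // num_steps


# random.randint(0, num_steps) can return any offset from 0 to 50 inclusive
counts = {batches_per_epoch(500, 8, 50, off) for off in range(0, 51)}
tokens_per_batch = 8 * 50
print(counts, tokens_per_batch)
```

Under these settings every offset gives exactly one batch of 8 × 50 = 400 values, so one epoch amounts to a single optimizer step.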

# # Use CUDA if torch.cuda is available; otherwise fall back to the CPU
device = 'cuda' if torch.cuda.is_available() else 'cpu'
print(f'-------------------------------\n'
      f'Using {device} device\n'
      f'-------------------------------')
net = RNNModel(num_hiddens=num_hiddens, input_size=input_size)
net = net.to(device)
print(f'-------------------------------\n'
      f'Train Model\n'
      f'-------------------------------')
train(net, train_iter, learning_rate, num_epochs, device)
plt.show()

print(f'-------------------------------\n'
      f'Predict\n'
      f'-------------------------------')
print('1')
output = predict(corpus[:1], 500-1, net, device)
# Plot the prediction against the real data
plt.plot(corpus, 'b-', label='real')
plt.plot(output, 'm-', label='predicted')
plt.xlabel('time')
plt.ylabel('value')  # the y-axis shows the series values, not a loss
plt.title('prefix=1')
plt.legend()  # show the 'real' / 'predicted' labels
plt.show()
print('10')
output = predict(corpus[:10], 500-10, net, device)
# Plot the prediction against the real data
plt.plot(corpus, 'b-', label='real')
plt.plot(output, 'm-', label='predicted')
plt.xlabel('time')
plt.ylabel('value')
plt.title('prefix=10')
plt.legend()
plt.show()

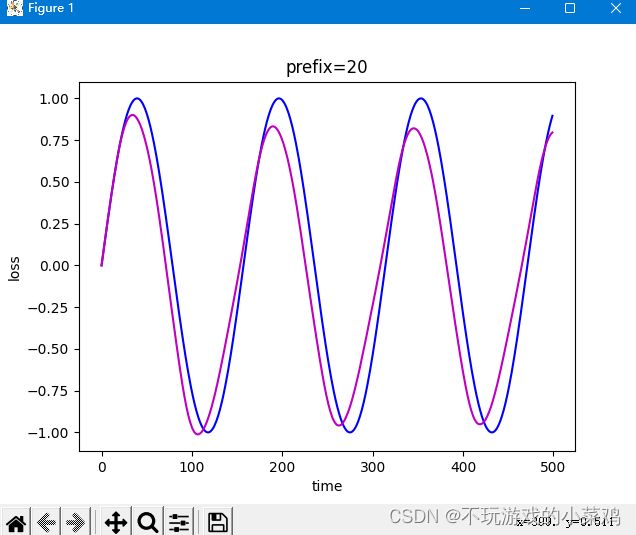

print('20')
output = predict(corpus[:20], 500-20, net, device)
# Plot the prediction against the real data
plt.plot(corpus, 'b-', label='real')
plt.plot(output, 'm-', label='predicted')
plt.xlabel('time')
plt.ylabel('value')  # the y-axis shows the series values, not a loss
plt.title('prefix=20')
plt.legend()  # show the 'real' / 'predicted' labels
plt.show()
print('50')
output = predict(corpus[:50], 500-50, net, device)
# Plot the prediction against the real data
plt.plot(corpus, 'b-', label='real')
plt.plot(output, 'm-', label='predicted')
plt.xlabel('time')
plt.ylabel('value')
plt.title('prefix=50')
plt.legend()
plt.show()
print('200')
output = predict(corpus[:200], 500-200, net, device)
# Plot the prediction against the real data
plt.plot(corpus, 'b-', label='real')
plt.plot(output, 'm-', label='predicted')
plt.xlabel('time')
plt.ylabel('value')
plt.title('prefix=200')
plt.legend()
plt.show()
print('400')
output = predict(corpus[:400], 500-400, net, device)
# Plot the prediction against the real data
plt.plot(corpus, 'b-', label='real')
plt.plot(output, 'm-', label='predicted')
plt.xlabel('time')
plt.ylabel('value')
plt.title('prefix=400')
plt.legend()
plt.show()