Opencv4.5.5 + Opencv4.5.5_contrib Image Stitching


1. Building Opencv4.5.5 + Opencv4.5.5_contrib

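For the build procedure, see the post "opencv-4.5.3 + opencv_contrib-4.5.3 + vtk-9.0.3 build (full walkthrough)". Only the parts about building OpenCV + opencv_contrib matter here. The finished build looks like this: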

Note: during the CMake step, make sure to check OPENCV_ENABLE_NONFREE. Otherwise you will not be able to use the SURF feature detector. (Why? Read on.)

2. Image stitching

Environment: VS2017 + OpenCV 4.5.5

With OpenCV configured, try stitching images with SURF:

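Cause of the error: the algorithm is patented and is excluded from this configuration. The fix is to set the OPENCV_ENABLE_NONFREE CMake option and rebuild the library.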

Solution:

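(1) Open CMake and select the paths shown below. Note: this fix is for readers who have already built OpenCV + opencv_contrib without checking OPENCV_ENABLE_NONFREE. If you have not built it yet, build it first and then come back. (Just kidding: if you check this option when you build, this error never appears.)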

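(2) Find the OPENCV_ENABLE_NONFREE option, check it, and click Configure and then Generate. After that, repeat the remaining steps in the same order as the first build. (In other words, if you forgot this option, fixing it is effectively a full rebuild.)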

(3) Add the test code and stitch the images:

```cpp
#include <algorithm>
#include <iostream>
#include <vector>
#include <opencv2/opencv.hpp>
#include <opencv2/xfeatures2d.hpp>  // SURF (opencv_contrib, needs OPENCV_ENABLE_NONFREE)

// Compute the corner coordinates of rightImage after the perspective transform homo.
// Output order: [0] top-left, [1] bottom-left, [2] top-right, [3] bottom-right
void calcCorners(cv::Mat homo, cv::Mat rightImage, std::vector<cv::Point2f> &sceneCorners)
{
    // [x', y', w']^T = homo * [u, v, 1]^T
    // x = x' / w'; y = y' / w'
    // homo must be CV_64F (cv::findHomography returns CV_64F)
    const double w = rightImage.cols, h = rightImage.rows;
    const double corners[4][2] = { { 0, 0 }, { 0, h }, { w, 0 }, { w, h } };
    sceneCorners.resize(4);
    for (int k = 0; k < 4; k++)
    {
        cv::Mat V1 = (cv::Mat_<double>(3, 1) << corners[k][0], corners[k][1], 1.0);
        // Read the result from V2 itself: homo * V1 allocates a new matrix
        cv::Mat V2 = homo * V1;
        sceneCorners[k].x = static_cast<float>(V2.at<double>(0) / V2.at<double>(2));
        sceneCorners[k].y = static_cast<float>(V2.at<double>(1) / V2.at<double>(2));
    }
}
```
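You can check the homogeneous-coordinate step in calcCorners without OpenCV. Below is a minimal standard-library sketch. `Homography` and `applyHomography` are hypothetical helpers for illustration, and the matrix is assumed to be a row-major 3×3 array, like the CV_64F matrix returned by cv::findHomography.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <utility>

// Hypothetical helper type: a row-major 3x3 homography H.
using Homography = std::array<std::array<double, 3>, 3>;

// Map (u, v) through H: [x', y', w']^T = H * [u, v, 1]^T, then divide by w'.
std::pair<double, double> applyHomography(const Homography &H, double u, double v)
{
    double xp = H[0][0] * u + H[0][1] * v + H[0][2];
    double yp = H[1][0] * u + H[1][1] * v + H[1][2];
    double wp = H[2][0] * u + H[2][1] * v + H[2][2];
    assert(std::fabs(wp) > 1e-12 && "point maps to infinity");
    return { xp / wp, yp / wp };
}
```

For a pure translation H = [[1, 0, tx], [0, 1, ty], [0, 0, 1]], the corner (0, 0) maps to (tx, ty). When the third row of H is not (0, 0, 1), w' differs from 1, and that is why the division is needed.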

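```cpp
// Image blending: smooth the seam across the overlap region
void optimizeSeam(cv::Mat leftImage, cv::Mat leftImageTransform, cv::Mat rightImageTransform, cv::Mat &dstImage, std::vector<cv::Point2f> sceneCorners)
{
    // Start of the overlap = left edge of the warped right image. Taking the smallest x of
    // all four corners works with both corner orders used in this post
    // (calcCorners and cv::perspectiveTransform)
    float minX = sceneCorners[0].x;
    for (const cv::Point2f &p : sceneCorners)
        minX = std::min(minX, p.x);
    int startPosition = std::max(0, cvFloor(minX));
    // Width of the overlap region
    double processWidth = leftImage.cols - startPosition;
    if (processWidth <= 0)
        return;  // no overlap to blend
    int rows = dstImage.rows;
    int cols = leftImage.cols;
    // alpha: weight of the left-image pixel; (1 - alpha): weight of the right-image pixel
    double alpha = 1;
    for (int i = 0; i < rows; i++)
    {
        cv::Vec3b *l = leftImageTransform.ptr<cv::Vec3b>(i);
        cv::Vec3b *r = rightImageTransform.ptr<cv::Vec3b>(i);
        cv::Vec3b *d = dstImage.ptr<cv::Vec3b>(i);
        for (int j = startPosition; j < cols; j++)
        {
            if (r[j][0] == 0 && r[j][1] == 0 && r[j][2] == 0)
            {
                // Black pixel (no data) in rightImageTransform: copy the left pixel unchanged
                alpha = 1;
            }
            else if (l[j][0] == 0 && l[j][1] == 0 && l[j][2] == 0)
            {
                // Black pixel (no data) in leftImageTransform: copy the right pixel unchanged
                alpha = 0;
            }
            else
            {
                // The left weight falls linearly from 1 at the left edge of the overlap to 0 at
                // its right edge, so the right image's weight grows with the distance from that
                // edge; in practice this gives a smooth transition
                alpha = (processWidth - (j - startPosition)) / processWidth;
            }
            for (int c = 0; c < 3; c++)
                d[j][c] = cv::saturate_cast<uchar>(l[j][c] * alpha + r[j][c] * (1 - alpha));
        }
    }
}
```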
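The weighting in optimizeSeam is a linear cross-fade across the overlap. The sketch below isolates it for one channel of one row. It assumes the overlap spans the columns [start, leftCols), as in the function above. `blendRow` is a hypothetical helper, and like optimizeSeam it treats a value of 0 in the right row as "no data".

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>
#include <vector>

// One channel of one row: left/right hold the two warped images, the overlap is
// [start, leftCols), and 0 in `right` means "no data" (as in optimizeSeam).
std::vector<std::uint8_t> blendRow(const std::vector<std::uint8_t> &left,
                                   const std::vector<std::uint8_t> &right,
                                   int start, int leftCols)
{
    assert(left.size() == right.size());
    assert(0 <= start && start < leftCols && leftCols <= static_cast<int>(left.size()));
    const double width = leftCols - start;  // overlap width
    std::vector<std::uint8_t> out(left.size());
    for (int j = 0; j < static_cast<int>(left.size()); j++)
    {
        if (j < start)
            out[j] = left[j];   // left image only
        else if (j >= leftCols)
            out[j] = right[j];  // right image only
        else
        {
            // alpha = weight of the left pixel: 1 at the left edge of the overlap
            double alpha = (right[j] == 0) ? 1.0 : (width - (j - start)) / width;
            out[j] = static_cast<std::uint8_t>(std::lround(left[j] * alpha + right[j] * (1.0 - alpha)));
        }
    }
    return out;
}
```

Halfway across a two-column overlap, alpha is 0.5, so a left value of 100 and a right value of 200 blend to 150.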

```cpp
// Progressive blending: build two weight masks (0-255) that ramp linearly
// across the columns where both warped images have content
void overLappedofImg(cv::Mat parmImg1, cv::Mat parmImg2, cv::Mat& dstImg1, cv::Mat &dstImg2)
{
    int cols = parmImg1.cols;
    int rows = parmImg1.rows;
    cv::Mat tempImg1(rows, cols, CV_8UC3, cv::Scalar::all(0));
    cv::Mat tempImg2(rows, cols, CV_8UC3, cv::Scalar::all(0));
    dstImg1 = cv::Mat(rows, cols, CV_8UC3, cv::Scalar(255, 255, 255));
    dstImg2 = cv::Mat(rows, cols, CV_8UC3, cv::Scalar(255, 255, 255));
    // Binary masks: white wherever an image has a non-black pixel
    for (int i = 0; i < rows; i++)
    {
        cv::Vec3b *rgb1 = parmImg1.ptr<cv::Vec3b>(i);
        cv::Vec3b *temRgb1 = tempImg1.ptr<cv::Vec3b>(i);
        cv::Vec3b *rgb2 = parmImg2.ptr<cv::Vec3b>(i);
        cv::Vec3b *temRgb2 = tempImg2.ptr<cv::Vec3b>(i);
        for (int j = 0; j < cols; j++)
        {
            bool has1 = rgb1[j][0] > 0 || rgb1[j][1] > 0 || rgb1[j][2] > 0;
            bool has2 = rgb2[j][0] > 0 || rgb2[j][1] > 0 || rgb2[j][2] > 0;
            temRgb1[j] = has1 ? cv::Vec3b(255, 255, 255) : cv::Vec3b(0, 0, 0);
            temRgb2[j] = has2 ? cv::Vec3b(255, 255, 255) : cv::Vec3b(0, 0, 0);
        }
    }
    cv::Mat tempImg3;
    // Intersection of the masks = overlap region
    cv::bitwise_and(tempImg1, tempImg2, tempImg3);
    // Column range [minCol, maxCol] of the overlap
    int minCol = cols;
    int maxCol = 0;
    for (int i = 0; i < rows; i++)
    {
        cv::Vec3b *rgb = tempImg3.ptr<cv::Vec3b>(i);
        for (int j = 0; j < cols; j++)
        {
            if (rgb[j][0] == 255 && rgb[j][1] == 255 && rgb[j][2] == 255)
            {
                if (j < minCol)
                    minCol = j;
                if (j > maxCol)
                    maxCol = j;
            }
        }
    }
    // No overlap, or only one column: keep the all-white masks (avoids dividing by zero)
    if (maxCol <= minCol)
        return;
    // Weight ramps: image 1 fades from 255 to 0, image 2 from 0 to 255
    for (int i = 0; i < rows; i++)
    {
        cv::Vec3b *rgb1 = dstImg1.ptr<cv::Vec3b>(i);
        cv::Vec3b *rgb2 = dstImg2.ptr<cv::Vec3b>(i);
        for (int j = minCol; j <= maxCol; j++)
        {
            uchar val = cv::saturate_cast<uchar>(float(maxCol - j) / float(maxCol - minCol) * 255);
            rgb1[j] = cv::Vec3b(val, val, val);
            val = cv::saturate_cast<uchar>(float(j - minCol) / float(maxCol - minCol) * 255);
            rgb2[j] = cv::Vec3b(val, val, val);
        }
    }
}
```
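overLappedofImg does two things. First it finds the columns where both masks are non-zero, then it builds complementary 0-255 ramps over that range. The sketch below works on 1-D masks with one flag per column, a simplification of the per-pixel scan above; `overlapRamps` is a hypothetical name. It shows that inside the overlap the two weights always add up to about 255.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>
#include <utility>
#include <vector>

// Given per-column "has content" flags for two warped images, return the weight
// ramps (0-255) for image 1 and image 2. Outside the overlap both weights stay 255,
// mirroring the all-white initialisation in overLappedofImg.
std::pair<std::vector<std::uint8_t>, std::vector<std::uint8_t>>
overlapRamps(const std::vector<bool> &has1, const std::vector<bool> &has2)
{
    assert(has1.size() == has2.size());
    const int cols = static_cast<int>(has1.size());
    int minCol = cols, maxCol = 0;
    for (int j = 0; j < cols; j++)
        if (has1[j] && has2[j])  // intersection = overlap
        {
            if (j < minCol) minCol = j;
            if (j > maxCol) maxCol = j;
        }
    std::vector<std::uint8_t> w1(cols, 255), w2(cols, 255);
    if (maxCol <= minCol)  // no overlap (or a single column): nothing to ramp
        return { w1, w2 };
    const float span = static_cast<float>(maxCol - minCol);
    for (int j = minCol; j <= maxCol; j++)
    {
        w1[j] = static_cast<std::uint8_t>(std::lround((maxCol - j) / span * 255.0f));
        w2[j] = static_cast<std::uint8_t>(std::lround((j - minCol) / span * 255.0f));
    }
    return { w1, w2 };
}
```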

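```cpp
// Image stitching with OpenCV's high-level Stitcher
// Pros: tolerates some tilt, scale change and distortion between images; good results; easy to use
// Cons: needs enough shared feature regions to match, and is very slow. It can be
//       GPU-accelerated, but that requires a CUDA-capable machine
bool opencvStitchFun(cv::Mat leftImage, cv::Mat rightImage, cv::Mat &dstImage)
{
    // Inputs must be 8-bit, 3-channel images
    if (leftImage.type() != CV_8UC3 || rightImage.type() != CV_8UC3)
    {
        return false;
    }
    // Panorama stitching
    // cv::Stitcher::PANORAMA -> mode for creating photo panoramas: expects images related by
    //                           perspective transforms and projects the resulting pano onto a sphere
    // cv::Stitcher::SCANS    -> mode for composing scans: expects images related by affine
    //                           transforms and, by default, does not compensate exposure
    cv::Ptr<cv::Stitcher> stitcher = cv::Stitcher::create(cv::Stitcher::PANORAMA);
    std::vector<cv::Mat> imgSet;
    imgSet.push_back(leftImage);
    imgSet.push_back(rightImage);
    cv::Stitcher::Status status = stitcher->stitch(imgSet, dstImage);
    if (status != cv::Stitcher::OK)
    {
        std::cout << "Cannot stitch images" << std::endl;
        return false;
    }
    return true;
}
```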

```cpp
// Image stitching with OpenCV's SIFT
// Pros: invariant to rotation, scale and brightness changes; fairly robust to viewpoint
//       change, affine distortion and noise
// Cons: large descriptors, relatively long computation time
bool opencvSiftFun(cv::Mat leftImage, cv::Mat rightImage, cv::Mat &dstImage)
{
    // Detect keypoints. The first argument is nfeatures, not a Hessian threshold:
    // keep at most the 5000 strongest keypoints
    cv::Ptr<cv::SIFT> SiftDetector = cv::SIFT::create(5000);
    // Keypoints + descriptors
    std::vector<cv::KeyPoint> keyPoints1, keyPoints2;
    cv::Mat features1, features2;
    SiftDetector->detectAndCompute(leftImage, cv::Mat(), keyPoints1, features1);
    SiftDetector->detectAndCompute(rightImage, cv::Mat(), keyPoints2, features2);
    // FLANN-based k-nearest-neighbour matching (k = 2)
    cv::FlannBasedMatcher matcher;
    std::vector<std::vector<cv::DMatch>> matchePoints;
    std::vector<cv::DMatch> GoodMatchePoints;
    std::vector<cv::Mat> train_desc(1, features2);
    matcher.add(train_desc);
    matcher.train();
    matcher.knnMatch(features1, matchePoints, 2);
    // Lowe's ratio test: keep a match only if it is clearly better than the second-best one
    for (size_t i = 0; i < matchePoints.size(); i++)
    {
        if (matchePoints[i].size() >= 2 &&
            matchePoints[i][0].distance < 0.4 * matchePoints[i][1].distance)
        {
            GoodMatchePoints.push_back(matchePoints[i][0]);
        }
    }
    // Visualise the good matches (debug image, not used further)
    cv::Mat matchFocusGraph;
    cv::drawMatches(leftImage, keyPoints1, rightImage, keyPoints2, GoodMatchePoints, matchFocusGraph,
                    cv::Scalar::all(-1), cv::Scalar::all(-1), std::vector<char>(), cv::DrawMatchesFlags::DEFAULT);
    // Corresponding points: right image (source) -> left image (destination)
    std::vector<cv::Point2f> imagePoints1, imagePoints2;
    for (size_t i = 0; i < GoodMatchePoints.size(); i++)
    {
        imagePoints1.push_back(keyPoints2[GoodMatchePoints[i].trainIdx].pt);
        imagePoints2.push_back(keyPoints1[GoodMatchePoints[i].queryIdx].pt);
    }
    // cv::findHomography returns the transform H between the source and destination planes
    // that minimises the reprojection error; it needs at least 4 correspondences
    if (imagePoints1.size() < 4)
    {
        std::cout << "Cannot stitch images" << std::endl;
        return false;
    }
    cv::Mat homo = cv::findHomography(imagePoints1, imagePoints2, cv::RANSAC);
    if (homo.empty())
    {
        std::cout << "Cannot stitch images" << std::endl;
        return false;
    }
    // Corners of the registered (right) image, in the order TL, TR, BR, BL
    std::vector<cv::Point2f> obj_corners(4);
    obj_corners[0] = cv::Point(0, 0);
    obj_corners[1] = cv::Point(rightImage.cols, 0);
    obj_corners[2] = cv::Point(rightImage.cols, rightImage.rows);
    obj_corners[3] = cv::Point(0, rightImage.rows);
    std::vector<cv::Point2f> sceneCorners(4);
    cv::perspectiveTransform(obj_corners, sceneCorners, homo);
    // Perspective warp onto a canvas large enough for both images
    int Iwidth = MAX(MAX(sceneCorners[1].x, sceneCorners[2].x), leftImage.cols);
    int Iheight = MAX(MAX(sceneCorners[2].y, sceneCorners[3].y), leftImage.rows);
    cv::Mat imageTransform1, imageTransform2;
    cv::Mat h = cv::Mat::eye(3, 3, CV_64F);  // identity: the left image stays in place
    cv::warpPerspective(leftImage, imageTransform1, h, cv::Size(Iwidth, Iheight));
    cv::warpPerspective(rightImage, imageTransform2, homo, cv::Size(Iwidth, Iheight));
    // Compose the stitched image, then smooth the seam
    cv::addWeighted(imageTransform1, 1, imageTransform2, 1, 0, dstImage);
    optimizeSeam(leftImage, imageTransform1, imageTransform2, dstImage, sceneCorners);
    return true;
}
```
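The filtering loop in opencvSiftFun, and in the ORB, FAST and SURF variants, is Lowe's ratio test. A nearest-neighbour match is kept only when its distance is clearly smaller than the distance to the second-nearest candidate. The sketch below restates the test without OpenCV. `NeighbourPair` and `ratioTest` are hypothetical names, and each entry is assumed to hold the best and second-best distances for one query descriptor, as `knnMatch(..., 2)` produces.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical record: distances to the two nearest training descriptors of one query.
struct NeighbourPair
{
    float bestDist;
    float secondDist;
};

// Return the query indices whose best match passes the ratio test.
std::vector<std::size_t> ratioTest(const std::vector<NeighbourPair> &pairs, float ratio)
{
    std::vector<std::size_t> kept;
    for (std::size_t i = 0; i < pairs.size(); i++)
        if (pairs[i].bestDist < ratio * pairs[i].secondDist)
            kept.push_back(i);
    return kept;
}
```

A smaller ratio keeps fewer but more distinctive matches. This post uses 0.4 for SIFT, ORB and SURF and 0.1 for FAST, whereas Lowe's original paper rejects matches whose ratio exceeds 0.8.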

```cpp
// Image stitching with OpenCV's ORB
// Pros: ORB is reported to be about 100x faster than SIFT and 10x faster than SURF
// Cons: ORB handles scale changes less well than SIFT/SURF
bool opencvOrpFun(cv::Mat leftImage, cv::Mat rightImage, cv::Mat &dstImage)
{
    // Detect keypoints. nfeatures = 500 is the maximum number of keypoints to keep;
    // smaller values keep fewer, stronger keypoints
    cv::Ptr<cv::ORB> OrpDetector = cv::ORB::create(500);
    // Keypoints + descriptors
    std::vector<cv::KeyPoint> keyPoints1, keyPoints2;
    cv::Mat features1, features2;
    OrpDetector->detectAndCompute(leftImage, cv::Mat(), keyPoints1, features1);
    OrpDetector->detectAndCompute(rightImage, cv::Mat(), keyPoints2, features2);
    // FLANN LSH index for binary descriptors (12 hash tables, 20-bit keys, multi-probe level 2),
    // searched with the Hamming distance (k = 2)
    cv::flann::Index flannIndex(features2, cv::flann::LshIndexParams(12, 20, 2), cvflann::FLANN_DIST_HAMMING);
    std::vector<cv::DMatch> GoodMatchePoints;
    cv::Mat macthIndex(features1.rows, 2, CV_32SC1), matchDistance(features1.rows, 2, CV_32FC1);
    flannIndex.knnSearch(features1, macthIndex, matchDistance, 2, cv::flann::SearchParams());
    // Hamming distances are returned as integers (CV_32S); convert so at<float> below is valid
    matchDistance.convertTo(matchDistance, CV_32F);
    // Lowe's ratio test: keep only clearly unambiguous matches
    for (int i = 0; i < matchDistance.rows; i++)
    {
        if (macthIndex.at<int>(i, 0) >= 0 && macthIndex.at<int>(i, 1) >= 0 &&
            matchDistance.at<float>(i, 0) < 0.4 * matchDistance.at<float>(i, 1))
        {
            cv::DMatch dmatches(i, macthIndex.at<int>(i, 0), matchDistance.at<float>(i, 0));
            GoodMatchePoints.push_back(dmatches);
        }
    }
    // Visualise the good matches (debug image, not used further)
    cv::Mat matchFocusGraph;
    cv::drawMatches(leftImage, keyPoints1, rightImage, keyPoints2, GoodMatchePoints, matchFocusGraph,
                    cv::Scalar::all(-1), cv::Scalar::all(-1), std::vector<char>(), cv::DrawMatchesFlags::DEFAULT);
    // Corresponding points: right image (source) -> left image (destination)
    std::vector<cv::Point2f> imagePoints1, imagePoints2;
    for (size_t i = 0; i < GoodMatchePoints.size(); i++)
    {
        imagePoints1.push_back(keyPoints2[GoodMatchePoints[i].trainIdx].pt);
        imagePoints2.push_back(keyPoints1[GoodMatchePoints[i].queryIdx].pt);
    }
    // cv::findHomography returns the transform H between the source and destination planes
    // that minimises the reprojection error; it needs at least 4 correspondences
    if (imagePoints1.size() < 4)
    {
        std::cout << "Cannot stitch images" << std::endl;
        return false;
    }
    cv::Mat homo = cv::findHomography(imagePoints1, imagePoints2, cv::RANSAC);
    if (homo.empty())
    {
        std::cout << "Cannot stitch images" << std::endl;
        return false;
    }
    // Corners of the registered (right) image, in the order TL, TR, BR, BL
    std::vector<cv::Point2f> obj_corners(4);
    obj_corners[0] = cv::Point(0, 0);
    obj_corners[1] = cv::Point(rightImage.cols, 0);
    obj_corners[2] = cv::Point(rightImage.cols, rightImage.rows);
    obj_corners[3] = cv::Point(0, rightImage.rows);
    std::vector<cv::Point2f> sceneCorners(4);
    cv::perspectiveTransform(obj_corners, sceneCorners, homo);
    // Perspective warp onto a canvas large enough for both images
    int Iwidth = MAX(MAX(sceneCorners[1].x, sceneCorners[2].x), leftImage.cols);
    int Iheight = MAX(MAX(sceneCorners[2].y, sceneCorners[3].y), leftImage.rows);
    cv::Mat imageTransform1, imageTransform2;
    cv::Mat h = cv::Mat::eye(3, 3, CV_64F);  // identity: the left image stays in place
    cv::warpPerspective(leftImage, imageTransform1, h, cv::Size(Iwidth, Iheight));
    cv::warpPerspective(rightImage, imageTransform2, homo, cv::Size(Iwidth, Iheight));
    // Compose the stitched image, then smooth the seam
    cv::addWeighted(imageTransform1, 1, imageTransform2, 1, 0, dstImage);
    optimizeSeam(leftImage, imageTransform1, imageTransform2, dstImage, sceneCorners);
    return true;
}
```
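ORB produces binary descriptors (by default 32 bytes, or 256 bits, per keypoint). That is why opencvOrpFun builds its FLANN index with cvflann::FLANN_DIST_HAMMING instead of the Euclidean distance used for SIFT and SURF. The Hamming distance is simply the number of differing bits. Below is a minimal sketch, assuming descriptors are stored as byte arrays; `hammingDistance` is a hypothetical helper.

```cpp
#include <bitset>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Number of bit positions in which two equally long binary descriptors differ.
int hammingDistance(const std::vector<std::uint8_t> &a, const std::vector<std::uint8_t> &b)
{
    assert(a.size() == b.size());
    int dist = 0;
    for (std::size_t i = 0; i < a.size(); i++)
        dist += static_cast<int>(std::bitset<8>(a[i] ^ b[i]).count());  // popcount of the XOR
    return dist;
}
```

For example, the bytes {0xFF, 0x00, 0x0F} and {0xFF, 0x01, 0xF0} differ in 0 + 1 + 8 = 9 bits. The ratio test then compares these integer distances.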

```cpp
// Image stitching with OpenCV's FAST detector
// Pros: very fast; can run in real time
// Cons: not robust when the images contain a lot of noise
bool opencvFastFun(cv::Mat leftImage, cv::Mat rightImage, cv::Mat &dstImage)
{
    // Detect keypoints. threshold = 30 is the minimum intensity difference between the
    // centre pixel and the pixels on the surrounding circle
    cv::Ptr<cv::FastFeatureDetector> FastDetector = cv::FastFeatureDetector::create(30);
    // Keypoints
    std::vector<cv::KeyPoint> keyPoints1, keyPoints2;
    cv::Mat features1, features2;
    FastDetector->detect(leftImage, keyPoints1);
    FastDetector->detect(rightImage, keyPoints2);
    // Descriptors: FAST is only a detector and OpenCV has no FAST-specific descriptor,
    // so SIFT computes the descriptors for the FAST keypoints
    cv::Ptr<cv::SIFT> SiftDetector = cv::SIFT::create();
    SiftDetector->compute(leftImage, keyPoints1, features1);
    SiftDetector->compute(rightImage, keyPoints2, features2);
    // Brute-force matching (BFMatcher, L2 norm by default), k = 2
    cv::BFMatcher matcher;
    std::vector<std::vector<cv::DMatch>> matchePoints;
    std::vector<cv::DMatch> GoodMatchePoints;
    std::vector<cv::Mat> train_desc(1, features2);
    matcher.add(train_desc);
    matcher.train();
    matcher.knnMatch(features1, matchePoints, 2);
    // Lowe's ratio test; 0.1 is very strict, so loosen it if fewer than 4 matches survive
    for (size_t i = 0; i < matchePoints.size(); i++)
    {
        if (matchePoints[i].size() >= 2 &&
            matchePoints[i][0].distance < 0.1 * matchePoints[i][1].distance)
        {
            GoodMatchePoints.push_back(matchePoints[i][0]);
        }
    }
    // Visualise the good matches (debug image, not used further)
    cv::Mat matchFocusGraph;
    cv::drawMatches(leftImage, keyPoints1, rightImage, keyPoints2, GoodMatchePoints, matchFocusGraph,
                    cv::Scalar::all(-1), cv::Scalar::all(-1), std::vector<char>(), cv::DrawMatchesFlags::DEFAULT);
    // Corresponding points: right image (source) -> left image (destination)
    std::vector<cv::Point2f> imagePoints1, imagePoints2;
    for (size_t i = 0; i < GoodMatchePoints.size(); i++)
    {
        imagePoints1.push_back(keyPoints2[GoodMatchePoints[i].trainIdx].pt);
        imagePoints2.push_back(keyPoints1[GoodMatchePoints[i].queryIdx].pt);
    }
    // cv::findHomography returns the transform H between the source and destination planes
    // that minimises the reprojection error; it needs at least 4 correspondences
    if (imagePoints1.size() < 4)
    {
        std::cout << "Cannot stitch images" << std::endl;
        return false;
    }
    cv::Mat homo = cv::findHomography(imagePoints1, imagePoints2, cv::RANSAC);
    if (homo.empty())
    {
        std::cout << "Cannot stitch images" << std::endl;
        return false;
    }
    // Corners of the registered (right) image, in the order TL, TR, BR, BL
    std::vector<cv::Point2f> obj_corners(4);
    obj_corners[0] = cv::Point(0, 0);
    obj_corners[1] = cv::Point(rightImage.cols, 0);
    obj_corners[2] = cv::Point(rightImage.cols, rightImage.rows);
    obj_corners[3] = cv::Point(0, rightImage.rows);
    std::vector<cv::Point2f> sceneCorners(4);
    cv::perspectiveTransform(obj_corners, sceneCorners, homo);
    // Perspective warp onto a canvas large enough for both images
    int Iwidth = MAX(MAX(sceneCorners[1].x, sceneCorners[2].x), leftImage.cols);
    int Iheight = MAX(MAX(sceneCorners[2].y, sceneCorners[3].y), leftImage.rows);
    cv::Mat imageTransform1, imageTransform2;
    cv::Mat h = cv::Mat::eye(3, 3, CV_64F);  // identity: the left image stays in place
    cv::warpPerspective(leftImage, imageTransform1, h, cv::Size(Iwidth, Iheight));
    cv::warpPerspective(rightImage, imageTransform2, homo, cv::Size(Iwidth, Iheight));
    // Compose the stitched image, then smooth the seam
    cv::addWeighted(imageTransform1, 1, imageTransform2, 1, 0, dstImage);
    optimizeSeam(leftImage, imageTransform1, imageTransform2, dstImage, sceneCorners);
    return true;
}
```
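FAST's segment test uses the `threshold` passed to cv::FastFeatureDetector::create. A pixel p is a corner candidate when at least n contiguous pixels on the 16-pixel circle of radius 3 around it are all brighter than I(p) + t, or all darker than I(p) - t. OpenCV's default detector type, FastFeatureDetector::TYPE_9_16, uses n = 9. The sketch below applies that test to a ring of 16 intensities that is assumed to be already sampled from the image. `isFastCorner` is a hypothetical helper, and it leaves out the high-speed pre-test and non-maximum suppression of the real detector.

```cpp
#include <array>
#include <cassert>
#include <initializer_list>

// Segment test on the 16 circle pixels around a candidate, listed in circular order.
// Returns true if at least `n` contiguous pixels are all brighter than center + t
// or all darker than center - t (a run may wrap past the end of the array).
bool isFastCorner(const std::array<int, 16> &ring, int center, int t, int n = 9)
{
    for (int sign : {+1, -1})
    {
        int run = 0;
        // Walk the ring twice so that runs wrapping past index 15 are counted.
        for (int k = 0; k < 32; k++)
        {
            int v = ring[k % 16];
            bool passes = (sign > 0) ? (v > center + t) : (v < center - t);
            run = passes ? run + 1 : 0;
            if (run >= n)
                return true;
        }
    }
    return false;
}
```

A larger threshold means a pixel must differ more strongly from its ring to count, so fewer keypoints are detected. This is also why noise, which creates spurious intensity differences, hurts FAST.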

```cpp
// Image stitching with SURF (cv::xfeatures2d from opencv_contrib; requires OPENCV_ENABLE_NONFREE)
// Pros: fairly fast, real-time under lab conditions; SURF matches best under brightness changes
// Cons: less accurate than SIFT, which hardly matters for stitching
bool opencvSurfFun(cv::Mat leftImage, cv::Mat rightImage, cv::Mat &dstImage)
{
    // Detect keypoints
    cv::Ptr<cv::xfeatures2d::SURF> SurfDetector;
    // hessianThreshold: threshold on the Hessian-determinant response;
    // larger values keep fewer, stronger keypoints
    SurfDetector = cv::xfeatures2d::SURF::create(5000);
    // Keypoints + descriptors
    std::vector<cv::KeyPoint> keyPoints1, keyPoints2;
    cv::Mat features1, features2;
    SurfDetector->detectAndCompute(leftImage, cv::Mat(), keyPoints1, features1);
    SurfDetector->detectAndCompute(rightImage, cv::Mat(), keyPoints2, features2);
    // Draw the keypoints (for debugging)
    //cv::Mat leftKeyPointImage, rightKeyPointImage;
    //cv::drawKeypoints(leftImage, keyPoints1, leftKeyPointImage, cv::Scalar::all(-1), cv::DrawMatchesFlags::DEFAULT);
    //cv::drawKeypoints(rightImage, keyPoints2, rightKeyPointImage, cv::Scalar::all(-1), cv::DrawMatchesFlags::DEFAULT);
    // FLANN-based k-nearest-neighbour matching (k = 2)
    cv::FlannBasedMatcher matcher;
    std::vector<std::vector<cv::DMatch>> matchePoints;
    std::vector<cv::DMatch> GoodMatchePoints;
    std::vector<cv::Mat> train_desc(1, features2);
    matcher.add(train_desc);
    matcher.train();
    matcher.knnMatch(features1, matchePoints, 2);
    // Lowe's ratio test: keep only clearly unambiguous matches
    for (size_t i = 0; i < matchePoints.size(); i++)
    {
        if (matchePoints[i].size() >= 2 &&
            matchePoints[i][0].distance < 0.4 * matchePoints[i][1].distance)
        {
            GoodMatchePoints.push_back(matchePoints[i][0]);
        }
    }
    // Visualise the good matches (debug image, not used further)
    cv::Mat matchFocusGraph;
    cv::drawMatches(leftImage, keyPoints1, rightImage, keyPoints2, GoodMatchePoints, matchFocusGraph,
                    cv::Scalar::all(-1), cv::Scalar::all(-1), std::vector<char>(), cv::DrawMatchesFlags::DEFAULT);
    // Corresponding points: right image (source) -> left image (destination)
    std::vector<cv::Point2f> imagePoints1, imagePoints2;
    for (size_t i = 0; i < GoodMatchePoints.size(); i++)
    {
        imagePoints1.push_back(keyPoints2[GoodMatchePoints[i].trainIdx].pt);
        imagePoints2.push_back(keyPoints1[GoodMatchePoints[i].queryIdx].pt);
    }
    // cv::findHomography returns the transform H between the source and destination planes
    // that minimises the reprojection error; it needs at least 4 correspondences
    if (imagePoints1.size() < 4)
    {
        std::cout << "Cannot stitch images" << std::endl;
        return false;
    }
    cv::Mat homo = cv::findHomography(imagePoints1, imagePoints2, cv::RANSAC);
    if (homo.empty())
    {
        std::cout << "Cannot stitch images" << std::endl;
        return false;
    }
    // Alternative (disabled): corners via calcCorners and a canvas as tall as the left image
    // (this approach can lose pixel information)
    /*
    std::vector<cv::Point2f> sceneCorners(4);
    calcCorners(homo, rightImage, sceneCorners);
    // Perspective warp
    cv::Mat rightImageTransform;
    cv::warpPerspective(rightImage, rightImageTransform, homo,
                        cv::Size(cvCeil(std::max(sceneCorners[2].x, sceneCorners[3].x)), leftImage.rows));
    // Build the stitched image
    int stitchWidth = rightImageTransform.cols;
    int stitchHeight = rightImageTransform.rows;
    dstImage = cv::Mat(cv::Size(stitchWidth, stitchHeight), CV_8UC3, cv::Scalar(0, 0, 0));
    rightImageTransform.copyTo(dstImage);
    leftImage.copyTo(dstImage(cv::Rect(0, 0, leftImage.cols, leftImage.rows)));
    // Blend the seam (leftImage is not warped here, so it also serves as leftImageTransform)
    optimizeSeam(leftImage, leftImage, rightImageTransform, dstImage, sceneCorners);
    */
    // Corners of the registered (right) image, in the order TL, TR, BR, BL
    std::vector<cv::Point2f> obj_corners(4);
    obj_corners[0] = cv::Point(0, 0);
    obj_corners[1] = cv::Point(rightImage.cols, 0);
    obj_corners[2] = cv::Point(rightImage.cols, rightImage.rows);
    obj_corners[3] = cv::Point(0, rightImage.rows);
    std::vector<cv::Point2f> sceneCorners(4);
    cv::perspectiveTransform(obj_corners, sceneCorners, homo);
    // Perspective warp onto a canvas large enough for both images
    int Iwidth = MAX(MAX(sceneCorners[1].x, sceneCorners[2].x), leftImage.cols);
    int Iheight = MAX(MAX(sceneCorners[2].y, sceneCorners[3].y), leftImage.rows);
    cv::Mat imageTransform1, imageTransform2;
    cv::Mat h = cv::Mat::eye(3, 3, CV_64F);  // identity: the left image stays in place
    cv::warpPerspective(leftImage, imageTransform1, h, cv::Size(Iwidth, Iheight));
    cv::warpPerspective(rightImage, imageTransform2, homo, cv::Size(Iwidth, Iheight));
    // Alternative blending (disabled): progressive blending with overLappedofImg
    // (high time complexity, and the result is worse)
    /*
    cv::Mat midImg1, midImg2;
    overLappedofImg(imageTransform1, imageTransform2, midImg1, midImg2);
    cv::Mat tempImg1, tempImg2;
    tempImg1 = imageTransform1.mul(midImg1) / 255;
    tempImg2 = imageTransform2.mul(midImg2) / 255;
    // Compose the final image
    cv::addWeighted(tempImg1, 1, tempImg2, 1, 0, dstImage);
    */
    // Compose the stitched image, then smooth the seam
    cv::addWeighted(imageTransform1, 1, imageTransform2, 1, 0, dstImage);
    optimizeSeam(leftImage, imageTransform1, imageTransform2, dstImage, sceneCorners);
    return true;
}
```
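SURF's `hessianThreshold` is applied to the determinant of an approximated Hessian matrix. The SURF paper (Bay et al.) approximates the second derivatives with box filters Dxx, Dyy and Dxy and uses the response det(H_approx) = Dxx*Dyy - (0.9*Dxy)^2. Points whose response does not exceed the threshold are discarded, which is why a large value such as 5000 yields fewer keypoints. Below is a minimal sketch of that decision, assuming the three box-filter responses are already computed. `surfResponse` and `passesHessianThreshold` are hypothetical helpers; the real detector also normalises by filter size and performs non-maximum suppression across scales.

```cpp
#include <cassert>

// Approximated Hessian determinant from box-filter responses, as in the SURF paper:
// det(H_approx) = Dxx * Dyy - (0.9 * Dxy)^2
double surfResponse(double dxx, double dyy, double dxy)
{
    const double w = 0.9;  // relative weight compensating for the box-filter approximation
    return dxx * dyy - (w * dxy) * (w * dxy);
}

// A candidate survives only if its response exceeds hessianThreshold.
bool passesHessianThreshold(double dxx, double dyy, double dxy, double hessianThreshold)
{
    return surfResponse(dxx, dyy, dxy) > hessianThreshold;
}
```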

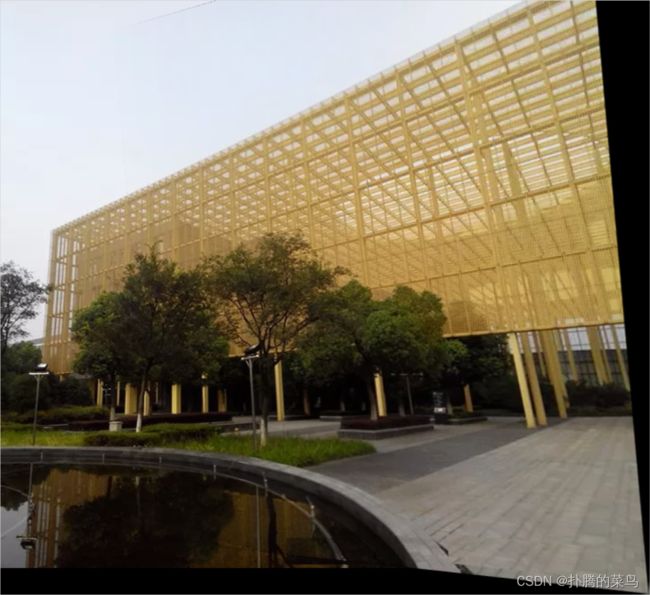

(4) Results:

The code is adapted from the following blog posts (updated and modified):

https://www.cnblogs.com/skyfsm/p/7401523.html

https://www.cnblogs.com/skyfsm/p/7411961.html

If you are interested in the underlying theory, see these posts:

https://www.cnblogs.com/wangguchangqing/p/4853263.html

https://www.cnblogs.com/ronny/p/4045979.html