[Cloud Native | Kubernetes Series] Envoy Metrics Monitoring

1. Envoy metrics and logs

1.1 Envoy statistics

- Envoy stats

  - Stats sinks

  - Configuration examples

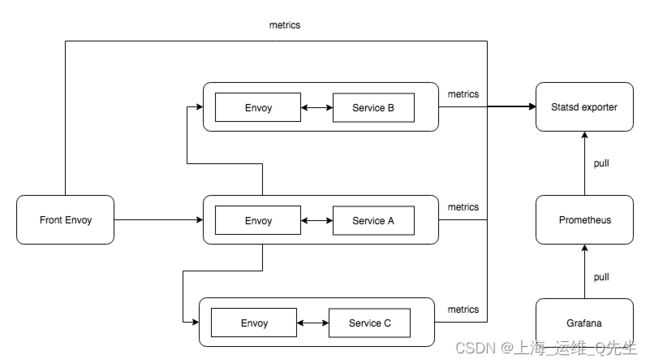

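  - Feeding metrics into a monitoring system: statsd_exporter + Prometheus + Grafana

- Access logs

  - Format rules and command operators

  - Configuration syntax and examples

  - Log storage and search: Filebeat + Elasticsearch + Kibana

- Distributed tracing

  - Basic concepts of distributed tracing

  - How distributed tracing works

  - Distributed tracing in Envoy

  - Example: tracing with Zipkin

  - Example: tracing with Jaeger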

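1.2 Applying observability

Logs, metrics, and traces are the three pillars of application observability. The first two belong mostly to the traditional host-centric model, whereas tracing is process-centric, that is, centered on the request path.

- Logs: discrete events that occur over time, recorded as immutable, timestamped entries. They work well for monolithic applications, but failures in distributed systems are usually triggered by interrelated events across several components.

  - Examples: Elastic Stack, Splunk, Fluentd…

- Metrics: aggregatable data of fixed types, collected and recorded as time series by a monitoring system. They are also effective for monolithic applications, but they do not provide enough information to understand the lifecycle of a call (RPC) in a distributed system.

  - Examples: StatsD, Prometheus

- Traces: tracing follows a transaction or request through its whole lifecycle, from start to finish, including the components it passes through.

  - A trace is a series of causally ordered events that helps explain the lifecycle of related requests in a distributed system.

  - It shows what happened before a given event, so that the complete transaction flow can be reconstructed.

Distributed tracing

- Distributed tracing is the process of tracking and analyzing what happens to a request or transaction across all the services of a distributed system.

- Tracking means capturing the raw data generated in each service.

- Analysis means using search, aggregation, visualization, and other analysis tools to extract value from the raw trace data.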

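1.3 Envoy stats

Envoy statistics fall broadly into three categories:

- Downstream: generated mainly by listeners, the HTTP connection manager, the TCP proxy filter, and so on

- Upstream: generated mainly by connection pools, the router filter, the TCP proxy filter, and so on

- Server: describes how the Envoy server instance itself is working

Envoy stats come in three types, all of which are unsigned integers:

- Counter: a cumulative count that only ever increases

- Gauge: an ordinary value that can both increase and decrease

- Histogram: a stream of values that is aggregated into a distribution

Almost all stats can be retrieved from the /stats endpoint of the admin interface.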
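As a minimal sketch of reading stats from the admin interface: the helper names `envoy_stats` and `sum_stat` below are hypothetical, and the address `localhost:9901` is an assumption that matches the admin port used by the configurations later in this post. The `filter` query parameter of `/stats` takes a regular expression.

```shell
# Hypothetical helper: print the plain-text stats whose names match a regex.
# ADMIN is assumed to point at an Envoy admin listener (default: localhost:9901).
envoy_stats() {
  curl -s -G "http://${ADMIN:-localhost:9901}/stats" --data-urlencode "filter=$1"
}

# Read "name: value" lines on stdin and sum the values of every stat
# whose name ends with the given suffix.
sum_stat() {
  awk -F': ' -v suffix="$1" '
    substr($1, length($1) - length(suffix) + 1) == suffix { total += $2 }
    END { print total + 0 }'
}

# Example (needs a running Envoy):
#   envoy_stats 'upstream_rq_total$' | sum_stat upstream_rq_total
```

Keeping the fetch and the aggregation in separate functions means the aggregation can be tried on saved `/stats` output without a live proxy.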

1.4 Stats configuration

The stats parameters are top-level fields of the Bootstrap configuration.

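{
  ...
  "stats_sinks": [],                # list of stats sinks
  "stats_config": "{...}",          # how stats are processed internally
  "stats_flush_interval": "{...}",  # how often stats are flushed to the sinks; for performance reasons Envoy flushes counters and gauges only periodically; the default is 5000ms
  "stats_flush_on_admin": "..."     # flush stats only when a query arrives on the admin interface
  ...
}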

stats_sinks is optional. By default no export mechanism is configured for stats, so if metrics need to be kept long-term, a sink has to be configured explicitly.

stats_sinks:
- name: ...               # name of the sink to instantiate; it must match one of Envoy's built-in sinks: envoy.stat_sinks.dog_statsd, envoy.stat_sinks.graphite_statsd, envoy.stat_sinks.hystrix,
                          # envoy.stat_sinks.metrics_service, envoy.stat_sinks.statsd, or envoy.stat_sinks.wasm; they play a role similar to Prometheus exporters
  typed_config: {...}     # sink configuration; it differs per sink, and the parameters below are specific to statsd
    address: {...}        # endpoint of the StatsD service; alternatively use tcp_cluster_name below to point at a cluster of sink servers defined in Envoy
    tcp_cluster_name: ... # name of the StatsD sink cluster; mutually exclusive with address
    prefix: ...           # custom prefix for the StatsD sink; optional
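To get a feel for what a StatsD sink puts on the wire, the sketch below tallies metric lines by type. It assumes the plain StatsD line format `name:value|type`, with `c` for counters, `g` for gauges, and `ms` for timers (which is how histogram samples are typically reported). `statsd_type_counts` is a hypothetical helper, and the metric names in the example are illustrative only.

```shell
# Read StatsD lines ("name:value|type") on stdin and count them per type.
statsd_type_counts() {
  awk -F'|' '
    $2 == "c"  { c++ }
    $2 == "g"  { g++ }
    $2 == "ms" { ms++ }
    END { printf "counters=%d gauges=%d timers=%d\n", c, g, ms }'
}

# Example with hand-written sample lines:
#   printf 'front-envoy.server.uptime:42|g\n' | statsd_type_counts
```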

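Envoy stats are identified by a canonical string representation. The dynamic portions of these strings can be stripped out into tags, and users can configure this through tag specifiers:

- config.metrics.v3.StatsConfig

- config.metrics.v3.TagSpecifier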
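One way to see extracted tags is the admin interface's Prometheus-format endpoint, `/stats/prometheus`, where tags show up as labels. The sketch below assumes an admin listener at `localhost:9901` and that the default tag extraction is in effect, which exposes the cluster name as the `envoy_cluster_name` label. `envoy_prom_stats` and `cluster_names` are hypothetical helper names.

```shell
# Hypothetical helper: fetch stats in Prometheus text format from the admin interface.
envoy_prom_stats() {
  curl -s "http://${ADMIN:-localhost:9901}/stats/prometheus"
}

# Read Prometheus-format lines on stdin and list the distinct
# values of the envoy_cluster_name label (the extracted tag).
cluster_names() {
  grep -o 'envoy_cluster_name="[^"]*"' | cut -d'"' -f2 | sort -u
}

# Example (needs a running Envoy):
#   envoy_prom_stats | cluster_names
```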

1.5 Configuration example: storing stats in Prometheus

stats_sinks and cluster definition

...

stats_sinks:
- name: envoy.statsd
  typed_config:
    "@type": type.googleapis.com/envoy.config.metrics.v3.StatsdSink
    tcp_cluster_name: statsd_exporter
    prefix: front-envoy
...
static_resources:
  clusters:
  - name: colord
    connect_timeout: 0.25s
    type: strict_dns
    lb_policy: ROUND_ROBIN
    load_assignment:
      cluster_name: colord
      endpoints:
      - lb_endpoints:
        - endpoint:
            address: { socket_address: { address: myservice, port_value: 80 } }

Prometheus configuration

global:
  scrape_interval: 15s
scrape_configs:
  - job_name: 'statsd'
    scrape_interval: 5s
    static_configs:
      - targets: ['statsd_exporter:9102'] # statsd_exporter must be resolvable through DNS or /etc/hosts
        labels:
          group: 'services'

2. Monitoring an Envoy mesh with Prometheus and Grafana

2.1 docker-compose

10 services:

- front-envoy: the front proxy, at 172.31.70.10

- 6 backend services

  - service_a_envoy and service_a: the service_a cluster in Envoy; it calls service_b and service_c

  - service_b_envoy and service_b: the service_b cluster in Envoy

  - service_c_envoy and service_c: the service_c cluster in Envoy

- 1 statsd_exporter service

- 1 prometheus service

- 1 grafana service

version: '3.3'

services:
  front-envoy:
    image: envoyproxy/envoy-alpine:v1.21-latest
    environment:
      - ENVOY_UID=0
      - ENVOY_GID=0
    volumes:
      - "./front_envoy/envoy-config.yaml:/etc/envoy/envoy.yaml"
    networks:
      envoymesh:
        ipv4_address: 172.31.70.10
        aliases:
          - front-envoy
          - front
    ports:
      - 8088:80
      - 9901:9901

  service_a_envoy:
    image: envoyproxy/envoy-alpine:v1.20.0
    volumes:
      - "./service_a/envoy-config.yaml:/etc/envoy/envoy.yaml"
    networks:
      envoymesh:
        aliases:
          - service_a_envoy
          - service-a-envoy
    ports:
      - 8786:8786
      - 8788:8788

  service_a:
    build: service_a/
    networks:
      envoymesh:
        aliases:
          - service_a
          - service-a
    ports:
      - 8081:8081

  service_b_envoy:
    image: envoyproxy/envoy-alpine:v1.20.0
    volumes:
      - "./service_b/envoy-config.yaml:/etc/envoy/envoy.yaml"
    networks:
      envoymesh:
        aliases:
          - service_b_envoy
          - service-b-envoy
    ports:
      - 8789:8789

  service_b:
    build: service_b/
    networks:
      envoymesh:
        aliases:
          - service_b
          - service-b
    ports:
      - 8082:8082

  service_c_envoy:
    image: envoyproxy/envoy-alpine:v1.20.0
    volumes:
      - "./service_c/envoy-config.yaml:/etc/envoy/envoy.yaml"
    networks:
      envoymesh:
        aliases:
          - service_c_envoy
          - service-c-envoy
    ports:
      - 8790:8790

  service_c:
    build: service_c/
    networks:
      envoymesh:
        aliases:
          - service_c
          - service-c
    ports:
      - 8083:8083

  statsd_exporter:
    image: prom/statsd-exporter:v0.22.3
    networks:
      envoymesh:
        ipv4_address: 172.31.70.66
        aliases:
          - statsd_exporter
    ports:
      - 9125:9125
      - 9102:9102

  prometheus:
    image: prom/prometheus:v2.30.3
    volumes:
      - "./prometheus/config.yaml:/etc/prometheus.yaml"
    networks:
      envoymesh:
        ipv4_address: 172.31.70.67
        aliases:
          - prometheus
    ports:
      - 9090:9090
    command: "--config.file=/etc/prometheus.yaml"

  grafana:
    image: grafana/grafana:8.2.2
    volumes:
      - "./grafana/grafana.ini:/etc/grafana/grafana.ini"
      - "./grafana/datasource.yaml:/etc/grafana/provisioning/datasources/datasource.yaml"
      - "./grafana/dashboard.yaml:/etc/grafana/provisioning/dashboards/dashboard.yaml"
      - "./grafana/dashboard.json:/etc/grafana/provisioning/dashboards/dashboard.json"
    networks:
      envoymesh:
        ipv4_address: 172.31.70.68
        aliases:
          - grafana
    ports:
      - 3000:3000

networks:
  envoymesh:
    driver: bridge
    ipam:
      config:
        - subnet: 172.31.70.0/24

2.2 envoy.yaml

2.2.1 front envoy

Stats are sent to statsd_exporter through stats_sinks, with the prefix front-envoy.

All traffic arriving on port 80 is routed to service_a.

node:
  id: front-envoy
  cluster: mycluster

admin:
  profile_path: /tmp/envoy.prof
  access_log_path: /tmp/admin_access.log
  address:
    socket_address:
      address: 0.0.0.0
      port_value: 9901

layered_runtime:
  layers:
  - name: admin
    admin_layer: {}

stats_sinks:
- name: envoy.statsd
  typed_config:
    "@type": type.googleapis.com/envoy.config.metrics.v3.StatsdSink
    tcp_cluster_name: statsd_exporter
    prefix: front-envoy

static_resources:
  listeners:
  - name: http_listener
    address:
      socket_address:
        address: 0.0.0.0
        port_value: 80
    filter_chains:
    - filters:
      - name: envoy.filters.network.http_connection_manager
        typed_config:
          "@type": type.googleapis.com/envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager
          use_remote_address: true
          add_user_agent: true
          stat_prefix: ingress_80
          codec_type: AUTO
          generate_request_id: true
          route_config:
            name: local_route
            virtual_hosts:
            - name: http-route
              domains:
              - "*"
              routes:
              - match:
                  prefix: "/"
                route:
                  cluster: service_a
          http_filters:
          - name: envoy.filters.http.router

  clusters:
  - name: statsd_exporter
    connect_timeout: 0.25s
    type: strict_dns
    lb_policy: ROUND_ROBIN
    load_assignment:
      cluster_name: statsd_exporter
      endpoints:
      - lb_endpoints:
        - endpoint:
            address:
              socket_address:
                address: statsd_exporter
                port_value: 9125

  - name: service_a
    connect_timeout: 0.25s
    type: strict_dns
    lb_policy: ROUND_ROBIN
    load_assignment:
      cluster_name: service_a
      endpoints:
      - lb_endpoints:
        - endpoint:
            address:
              socket_address:
                address: service_a_envoy
                port_value: 8786

2.2.2 service_a

Stats are sent to statsd_exporter through stats_sinks, with the prefix service-a.

Ingress: inbound traffic is handed to cluster service_a.

Egress: outbound traffic is directed to service_b and service_c.

node:
  id: service-a
  cluster: mycluster

admin:
  profile_path: /tmp/envoy.prof
  access_log_path: /tmp/admin_access.log
  address:
    socket_address:
      address: 0.0.0.0
      port_value: 9901

layered_runtime:
  layers:
  - name: admin
    admin_layer: {}

stats_sinks:
- name: envoy.statsd
  typed_config:
    "@type": type.googleapis.com/envoy.config.metrics.v3.StatsdSink
    tcp_cluster_name: statsd_exporter
    prefix: service-a

static_resources:
  listeners:
  - name: service-a-svc-http-listener
    address:
      socket_address:
        address: 0.0.0.0
        port_value: 8786
    filter_chains:
    - filters:
      - name: envoy.filters.network.http_connection_manager
        typed_config:
          "@type": type.googleapis.com/envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager
          stat_prefix: ingress_http
          codec_type: AUTO
          route_config:
            name: service-a-svc-http-route
            virtual_hosts:
            - name: service-a-svc-http-route
              domains:
              - "*"
              routes:
              - match:
                  prefix: "/"
                route:
                  cluster: service_a
          http_filters:
          - name: envoy.filters.http.router

  - name: service-b-svc-http-listener
    address:
      socket_address:
        address: 0.0.0.0
        port_value: 8788
    filter_chains:
    - filters:
      - name: envoy.filters.network.http_connection_manager
        typed_config:
          "@type": type.googleapis.com/envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager
          stat_prefix: ingress_http
          codec_type: AUTO
          route_config:
            name: service-b-svc-http-route
            virtual_hosts:
            - name: service-b-svc-http-route
              domains:
              - "*"
              routes:
              - match:
                  prefix: "/"
                route:
                  cluster: service_b
          http_filters:
          - name: envoy.filters.http.router

  - name: service-c-svc-http-listener
    address:
      socket_address:
        address: 0.0.0.0
        port_value: 8791
    filter_chains:
    - filters:
      - name: envoy.filters.network.http_connection_manager
        typed_config:
          "@type": type.googleapis.com/envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager
          stat_prefix: ingress_http
          codec_type: AUTO
          route_config:
            name: service-c-svc-http-route
            virtual_hosts:
            - name: service-c-svc-http-route
              domains:
              - "*"
              routes:
              - match:
                  prefix: "/"
                route:
                  cluster: service_c
          http_filters:
          - name: envoy.filters.http.router

  clusters:
  - name: statsd_exporter
    connect_timeout: 0.25s
    type: strict_dns
    lb_policy: ROUND_ROBIN
    load_assignment:
      cluster_name: statsd_exporter
      endpoints:
      - lb_endpoints:
        - endpoint:
            address:
              socket_address:
                address: statsd_exporter
                port_value: 9125

  - name: service_a
    connect_timeout: 0.25s
    type: strict_dns
    lb_policy: ROUND_ROBIN
    load_assignment:
      cluster_name: service_a
      endpoints:
      - lb_endpoints:
        - endpoint:
            address:
              socket_address:
                address: service_a
                port_value: 8081

  - name: service_b
    connect_timeout: 0.25s
    type: strict_dns
    lb_policy: ROUND_ROBIN
    load_assignment:
      cluster_name: service_b
      endpoints:
      - lb_endpoints:
        - endpoint:
            address:
              socket_address:
                address: service_b_envoy
                port_value: 8789

  - name: service_c
    connect_timeout: 0.25s
    type: strict_dns
    lb_policy: ROUND_ROBIN
    load_assignment:
      cluster_name: service_c
      endpoints:
      - lb_endpoints:
        - endpoint:
            address:
              socket_address:
                address: service_c_envoy
                port_value: 8790

2.2.3 service_b

Stats are sent to statsd_exporter through stats_sinks, with the prefix service-b.

Ingress: inbound traffic is handed to cluster service_b.

A 503 error is injected into 15% of requests.

node:
  id: service-b
  cluster: mycluster

admin:
  profile_path: /tmp/envoy.prof
  access_log_path: /tmp/admin_access.log
  address:
    socket_address:
      address: 0.0.0.0
      port_value: 9901

layered_runtime:
  layers:
  - name: admin
    admin_layer: {}

stats_sinks:
- name: envoy.statsd
  typed_config:
    "@type": type.googleapis.com/envoy.config.metrics.v3.StatsdSink
    tcp_cluster_name: statsd_exporter
    prefix: service-b

static_resources:
  listeners:
  - name: service-b-svc-http-listener
    address:
      socket_address:
        address: 0.0.0.0
        port_value: 8789
    filter_chains:
    - filters:
      - name: envoy.filters.network.http_connection_manager
        typed_config:
          "@type": type.googleapis.com/envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager
          stat_prefix: ingress_http
          codec_type: AUTO
          route_config:
            name: service-b-svc-http-route
            virtual_hosts:
            - name: service-b-svc-http-route
              domains:
              - "*"
              routes:
              - match:
                  prefix: "/"
                route:
                  cluster: service_b
          http_filters:
          - name: envoy.filters.http.fault
            typed_config:
              "@type": type.googleapis.com/envoy.extensions.filters.http.fault.v3.HTTPFault
              max_active_faults: 100
              abort:
                http_status: 503
                percentage:
                  numerator: 15
                  denominator: HUNDRED
          - name: envoy.filters.http.router
            typed_config: {}

  clusters:
  - name: statsd_exporter
    connect_timeout: 0.25s
    type: strict_dns
    lb_policy: ROUND_ROBIN
    load_assignment:
      cluster_name: statsd_exporter
      endpoints:
      - lb_endpoints:
        - endpoint:
            address:
              socket_address:
                address: statsd_exporter
                port_value: 9125

  - name: service_b
    connect_timeout: 0.25s
    type: strict_dns
    lb_policy: ROUND_ROBIN
    load_assignment:
      cluster_name: service_b
      endpoints:
      - lb_endpoints:
        - endpoint:
            address:
              socket_address:
                address: service_b
                port_value: 8082
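To check the injected abort rate empirically, one option is to hit service_b's sidecar directly and tally the status codes. A minimal sketch: `error_rate` is a hypothetical helper, and the example assumes the compose stack is running with port 8789 published to the host, as in the docker-compose file above. With a 15% abort the observed share of 5xx responses should only be roughly 15%, since each request is sampled independently.

```shell
# Read one HTTP status code per line on stdin and report the share of 5xx responses.
error_rate() {
  awk '
    { total++ }
    /^5/ { errors++ }
    END {
      if (total)
        printf "%d/%d requests returned 5xx (%.1f%%)\n", errors, total, 100 * errors / total
    }'
}

# Example (needs the running stack):
#   for i in $(seq 1 200); do
#     curl -s -o /dev/null -w '%{http_code}\n' http://localhost:8789/
#   done | error_rate
```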

2.2.4 service_c

Stats are sent to statsd_exporter through stats_sinks, with the prefix service-c.

Ingress: inbound traffic is handed to cluster service_c.

A 1s delay is injected into 10% of requests.

node:
  id: service-c
  cluster: mycluster

admin:
  profile_path: /tmp/envoy.prof
  access_log_path: /tmp/admin_access.log
  address:
    socket_address:
      address: 0.0.0.0
      port_value: 9901

layered_runtime:
  layers:
  - name: admin
    admin_layer: {}

stats_sinks:
- name: envoy.statsd
  typed_config:
    "@type": type.googleapis.com/envoy.config.metrics.v3.StatsdSink
    tcp_cluster_name: statsd_exporter
    prefix: service-c

static_resources:
  listeners:
  - name: service-c-svc-http-listener
    address:
      socket_address:
        address: 0.0.0.0
        port_value: 8790
    filter_chains:
    - filters:
      - name: envoy.filters.network.http_connection_manager
        typed_config:
          "@type": type.googleapis.com/envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager
          stat_prefix: ingress_http
          codec_type: AUTO
          route_config:
            name: service-c-svc-http-route
            virtual_hosts:
            - name: service-c-svc-http-route
              domains:
              - "*"
              routes:
              - match:
                  prefix: "/"
                route:
                  cluster: service_c
          http_filters:
          - name: envoy.filters.http.fault
            typed_config:
              "@type": type.googleapis.com/envoy.extensions.filters.http.fault.v3.HTTPFault
              max_active_faults: 100
              delay:
                fixed_delay: 1s
                percentage:
                  numerator: 10
                  denominator: HUNDRED
          - name: envoy.filters.http.router
            typed_config: {}

  clusters:
  - name: statsd_exporter
    connect_timeout: 0.25s
    type: strict_dns
    lb_policy: ROUND_ROBIN
    load_assignment:
      cluster_name: statsd_exporter
      endpoints:
      - lb_endpoints:
        - endpoint:
            address:
              socket_address:
                address: statsd_exporter
                port_value: 9125

  - name: service_c
    connect_timeout: 0.25s
    type: strict_dns
    lb_policy: ROUND_ROBIN
    load_assignment:
      cluster_name: service_c
      endpoints:
      - lb_endpoints:
        - endpoint:
            address:
              socket_address:
                address: service_c
                port_value: 8083
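The injected delay can be observed by timing requests against service_c's sidecar. A minimal sketch: `slow_requests` is a hypothetical helper, and the example assumes the compose stack is running with port 8790 published to the host. It relies on curl's `%{time_total}` write-out variable, which reports the total request time in seconds.

```shell
# Read one request duration (in seconds) per line on stdin and count
# how many reached the given threshold (default: 1 second).
slow_requests() {
  awk -v limit="${1:-1}" '
    { total++ }
    $1 + 0 >= limit + 0 { slow++ }
    END { printf "%d of %d requests took at least %ss\n", slow, total, limit }'
}

# Example (needs the running stack):
#   for i in $(seq 1 100); do
#     curl -s -o /dev/null -w '%{time_total}\n' http://localhost:8790/
#   done | slow_requests 1
```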

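2.4 Testing

2.4.1 Traffic flow

Send a continuous stream of requests to front envoy at 172.31.70.10.

front envoy forwards the requests to service_a and sends its stats to statsd_exporter.

service_a takes traffic in through its ingress listener and sends traffic bound for service_b and service_c out through its egress listeners.

It sends its stats to statsd_exporter.

service_b takes traffic in through its ingress listener and responds, with a 15% chance of returning a 503 error.

It sends its stats to statsd_exporter.

service_c takes traffic in through its ingress listener and responds, with a 10% chance of delaying the response by 1s.

It sends its stats to statsd_exporter.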

# while true; do curl 172.31.70.10; sleep 0.$RANDOM; done

Calling Service B: Hello from service B.

Hello from service A.

Hello from service C.

Calling Service B: Hello from service B.

Hello from service A.

Hello from service C.

Calling Service B: Hello from service B.

Hello from service A.

Hello from service C.

Calling Service B: Hello from service B.

Hello from service A.

Hello from service C.

Calling Service B: Hello from service B.

Hello from service A.

Hello from service C.

Calling Service B: Hello from service B.

Hello from service A.

Hello from service C.

Calling Service B: Hello from service B.

Hello from service A.

Hello from service C.

Calling Service B: Hello from service B.

Hello from service A.

2.4.2 Prometheus data collection

The following file is mounted into the Prometheus container as /etc/prometheus.yaml; it makes Prometheus scrape statsd_exporter:9102.

global:
  scrape_interval: 15s
scrape_configs:
  - job_name: 'statsd'
    scrape_interval: 5s
    static_configs:
      - targets: ['statsd_exporter:9102']
        labels:
          group: 'services'

The metrics collected from the containers can be read in the Prometheus console through port 9090 on the host.
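When looking for a particular Envoy stat in Prometheus, it helps to know how its name is rewritten on the way. The sketch below assumes statsd_exporter runs without a mapping file, in which case characters that are not valid in a Prometheus metric name (such as `.` and `-`) are expected to be replaced with `_`. `statsd_to_prom_name` is a hypothetical helper that imitates that rule, so treat its output as a starting point for a search, not a guarantee.

```shell
# Approximate the default StatsD-to-Prometheus name conversion:
# every character outside [A-Za-z0-9_] becomes an underscore.
statsd_to_prom_name() {
  printf '%s\n' "$1" | sed 's/[^A-Za-z0-9_]/_/g'
}

# Example (needs the running stack; port 9102 is published to the host):
#   curl -s http://localhost:9102/metrics |
#     grep "^$(statsd_to_prom_name front-envoy.cluster.service_a.upstream_rq_total)"
```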

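2.4.3 Grafana dashboards

front envoy forwards all of its traffic to service_a, so there is no data for front envoy to service_b or service_c.

15% of the traffic from service_a to service_b has a 503 injected, so Grafana shows both 2xx and 5xx responses.

service_c has no 503 injection and therefore no 5xx errors, but while the while true loop is running on the command line, some responses noticeably stall for about 1s.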
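The 5xx rate can also be checked directly against the Prometheus HTTP API. A minimal sketch, assuming Prometheus is reachable on `localhost:9090` (the port published by the compose file) and that the counter is exported as `service_a_cluster_service_b_upstream_rq_5xx`. That metric name is an assumption derived from the `service-a` prefix and Envoy's `cluster.<name>.upstream_rq_5xx` stat, so confirm it in the Prometheus console first. `rate_query` and `prom_query` are hypothetical helpers.

```shell
# Build a PromQL rate() expression for a counter over a time window (default: 1m).
rate_query() {
  printf 'rate(%s[%s])\n' "$1" "${2:-1m}"
}

# Run an instant query against the Prometheus HTTP API and print the JSON response.
prom_query() {
  curl -s -G "http://${PROM:-localhost:9090}/api/v1/query" --data-urlencode "query=$1"
}

# Example (needs the running stack):
#   prom_query "$(rate_query service_a_cluster_service_b_upstream_rq_5xx)"
```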