Deep Learning 10: Convolutional Neural Networks

Contents

1. Review of fully connected networks

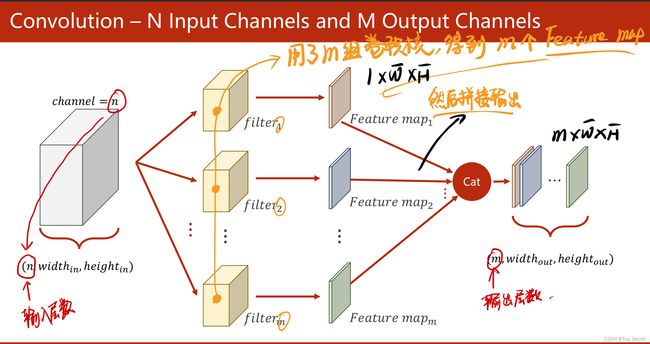

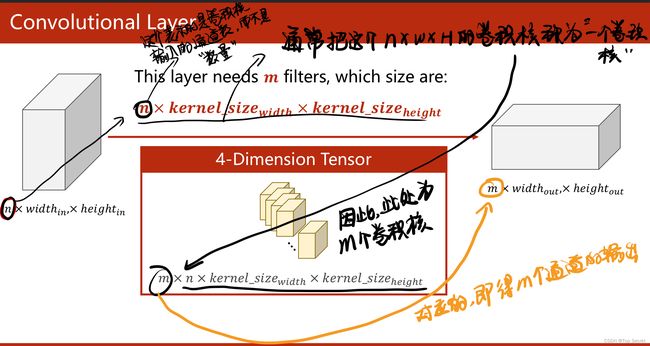

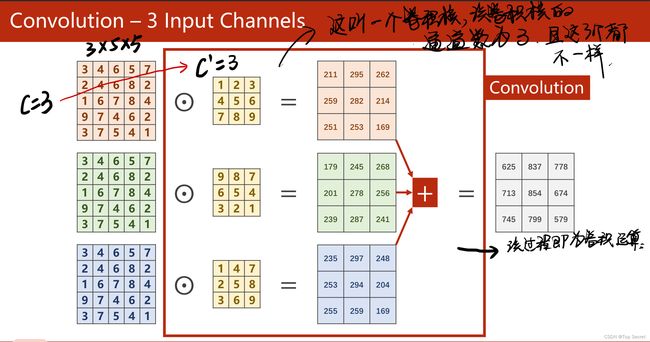

2. Convolution

2.1 Convolution kernels

2.2 Basic implementation of a convolutional layer

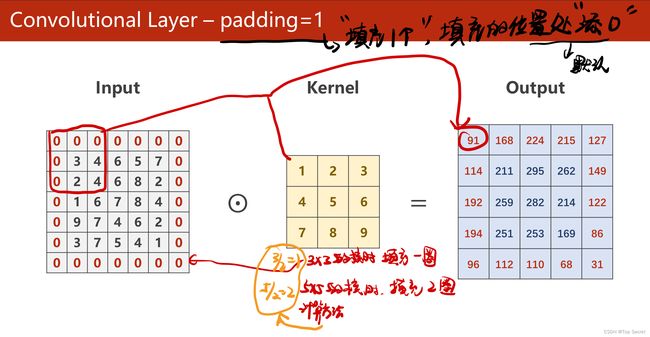

2.3 Padding

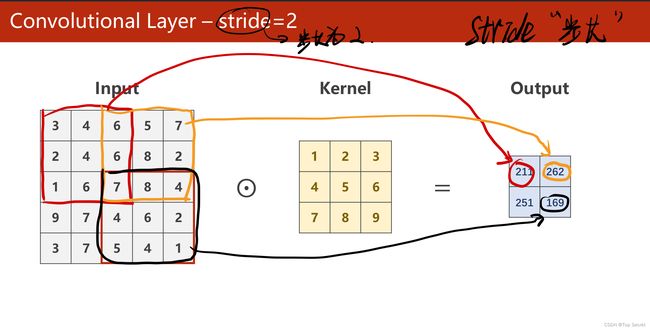

2.4 Stride

2.5 Pooling layers

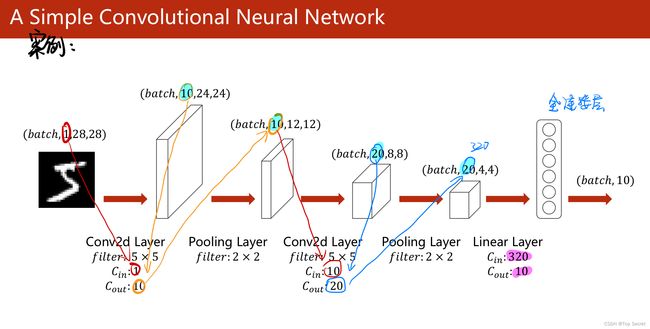

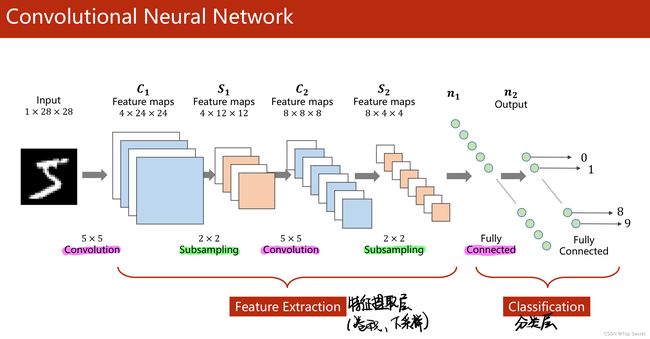

3. A CNN example

3.1 Complete code

3.1.1 Training on the CPU

3.1.2 Training on the GPU

4. Advanced convolutional neural networks

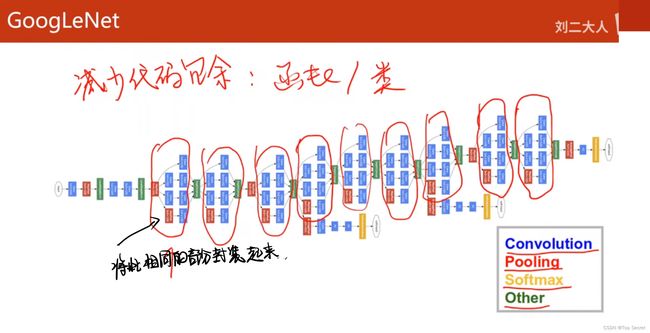

4.1 GoogLeNet

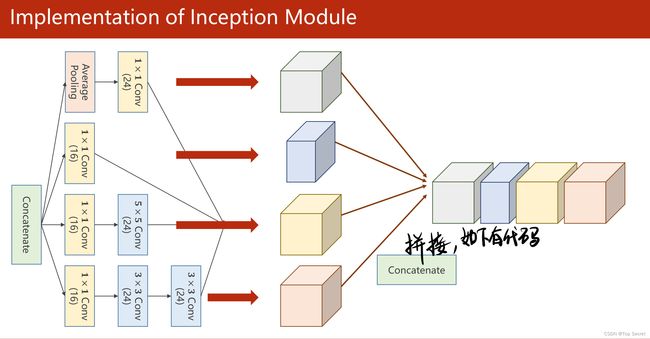

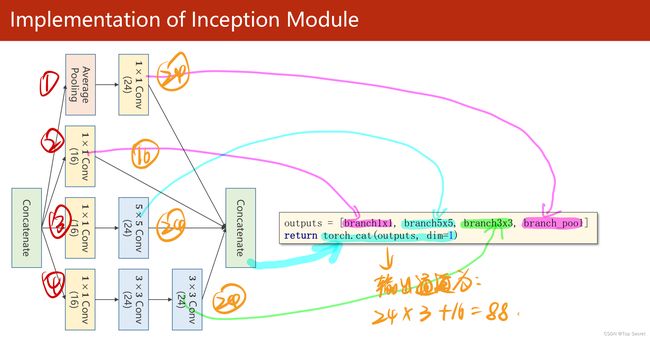

4.2 Inception Module

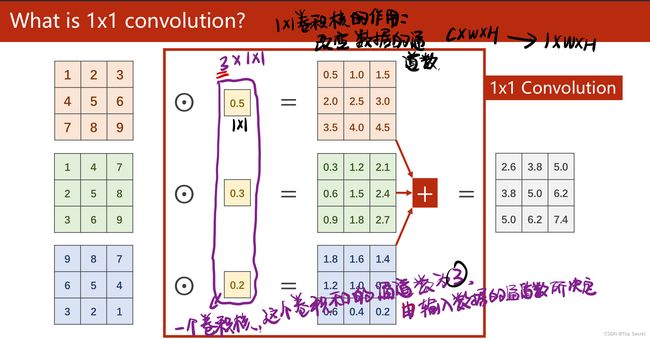

4.2.1 1×1 convolutions and their purpose

4.2.2 Implementation of the Inception Module

4.2.3 Code for the Inception Module and the model

Complete code

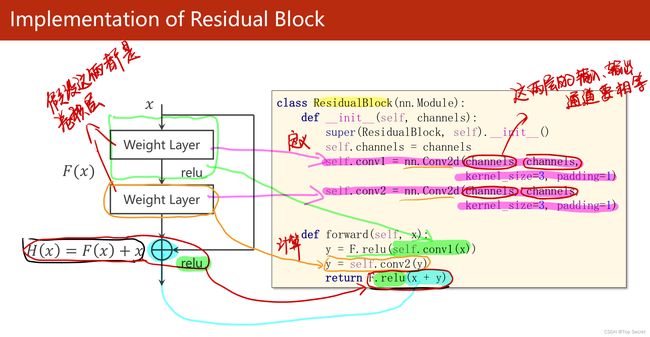

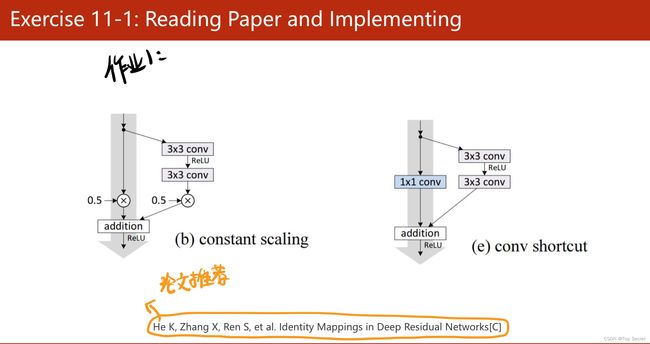

4.3 Residual Network

4.3.1 Implementing the Residual Block

Reference article:

PyTorch 深度学习实践 第10讲 ("PyTorch Deep Learning Practice, Lecture 10"), blog of 错错莫, CSDN

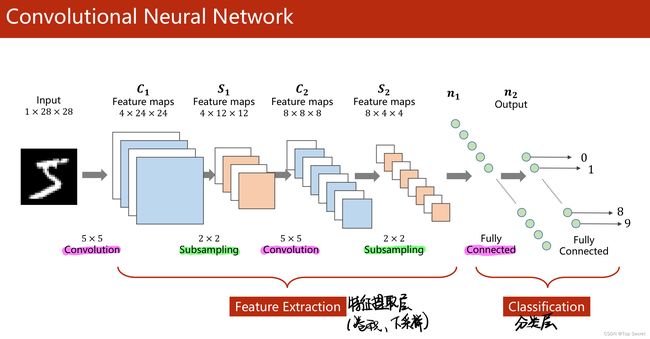

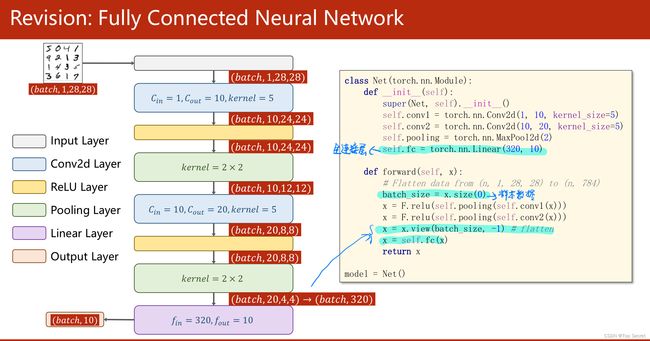

1. Review of fully connected networks

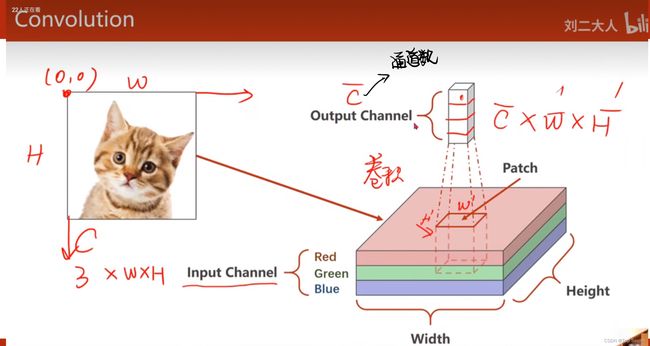

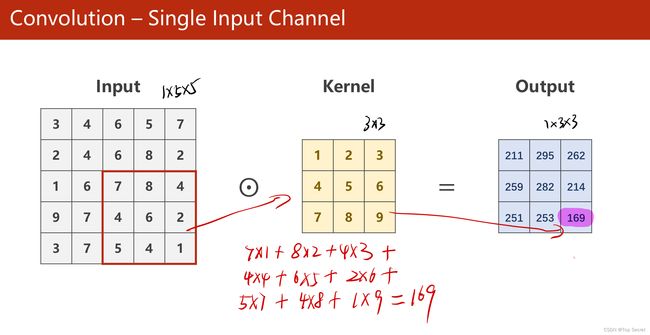

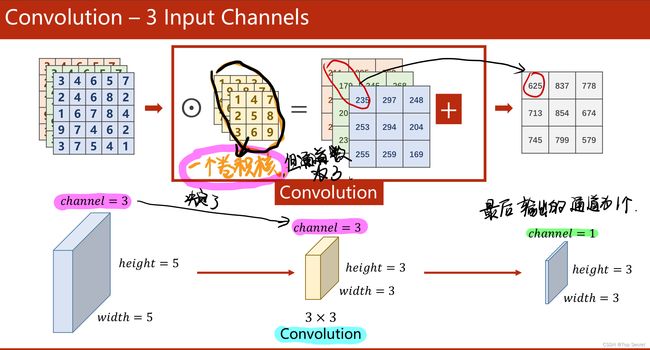

2. Convolution

2.1 Convolution kernels

2.2 Basic implementation of a convolutional layer

```python
import torch

in_channels, out_channels = 5, 10  # number of input and output channels
width, height = 100, 100           # image size
kernel_size = 3                    # a 3x3 convolution kernel
batch_size = 1

# Input tensor, laid out as (B, C, H, W)
input = torch.randn(batch_size, in_channels, height, width)

# Convolutional layer
conv_layer = torch.nn.Conv2d(in_channels, out_channels, kernel_size=kernel_size)

# Output
output = conv_layer(input)

print(input.shape)
print(output.shape)
print(conv_layer.weight.shape)
```

Output:

```
torch.Size([1, 5, 100, 100])
torch.Size([1, 10, 98, 98])
torch.Size([10, 5, 3, 3])
```

2.3 Padding
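Padding exists because an unpadded convolution shrinks the image. The shapes printed in 2.2 show this: 100 × 100 became 98 × 98. For `Conv2d` and `MaxPool2d` with the default dilation of 1, each spatial side follows `floor((n + 2p - k) / s) + 1`, where n is the input size, k the kernel size, p the padding and s the stride. The weight shape `[10, 5, 3, 3]` is simply `(out_channels, in_channels, k, k)`. The plain-Python helper below is a small sketch of that rule. `conv_output_size` is an illustrative name, not a PyTorch function.

```python
def conv_output_size(n, kernel_size, padding=0, stride=1):
    """Illustrative helper (not a PyTorch API): output length along one
    spatial axis of Conv2d / MaxPool2d with dilation 1."""
    return (n + 2 * padding - kernel_size) // stride + 1


print(conv_output_size(100, 3))             # 2.2: 100 -> 98
print(conv_output_size(5, 3, padding=1))    # 2.3: padding=1 keeps 5
print(conv_output_size(5, 3, stride=2))     # 2.4: stride=2 gives 2
print(conv_output_size(5, 2, stride=2))     # 2.5: MaxPool2d(2) (stride defaults to 2) gives 2
```

For an odd kernel size, choosing `padding = (k - 1) / 2` (1 for a 3 × 3 kernel) keeps the output the same size as the input. The padding example uses exactly this choice.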

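```python
import torch

# The following data stands in for the pixels of an input image
input = [3, 4, 6, 5, 7,
         2, 4, 6, 8, 2,
         1, 6, 7, 8, 4,
         9, 7, 4, 6, 2,
         3, 7, 5, 4, 1]
input = torch.Tensor(input).view(1, 1, 5, 5)  # convert to a tensor of shape (B, C, H, W) = (1, 1, 5, 5)

# padding=1 surrounds the input with one ring of zeros
conv_layer = torch.nn.Conv2d(1, 1, kernel_size=3, padding=1, bias=False)  # first two arguments: input and output channels

# Build the kernel: view(out_channels (number of kernels), in_channels (channels per kernel), 3, 3)
kernel = torch.Tensor([1, 2, 3, 4, 5, 6, 7, 8, 9]).view(1, 1, 3, 3)
conv_layer.weight.data = kernel.data  # use this kernel as the layer's weight

output = conv_layer(input)  # run the convolution
print(output)
```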

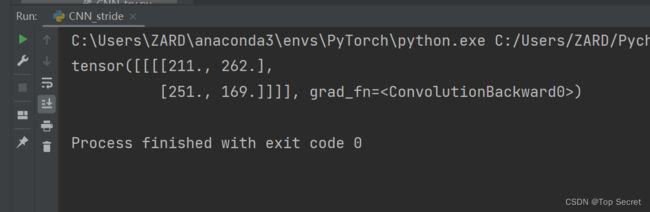
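`Conv2d` computes a cross-correlation: each output pixel is the sum of the element-wise products between the kernel and the 3 × 3 window beneath it, with no kernel flip. With `padding=1`, the 5 × 5 input first gets a border of zeros, so the output stays 5 × 5. The plain-Python sketch below reproduces the layer above using the same input and kernel. The helper names `zero_pad` and `cross_correlate` are illustrative and not PyTorch APIs.

```python
def zero_pad(image, p):
    """Illustrative helper: surround a 2-D list with p rows/columns of zeros."""
    width = len(image[0]) + 2 * p
    padded = [[0] * width for _ in range(p)]
    for row in image:
        padded.append([0] * p + list(row) + [0] * p)
    padded.extend([0] * width for _ in range(p))
    return padded


def cross_correlate(image, kernel):
    """Illustrative helper: stride-1, no-padding 2-D cross-correlation."""
    k = len(kernel)
    out_h = len(image) - k + 1
    out_w = len(image[0]) - k + 1
    return [
        [sum(image[i + a][j + b] * kernel[a][b] for a in range(k) for b in range(k))
         for j in range(out_w)]
        for i in range(out_h)
    ]


image = [[3, 4, 6, 5, 7],
         [2, 4, 6, 8, 2],
         [1, 6, 7, 8, 4],
         [9, 7, 4, 6, 2],
         [3, 7, 5, 4, 1]]
kernel = [[1, 2, 3],
          [4, 5, 6],
          [7, 8, 9]]

out = cross_correlate(zero_pad(image, 1), kernel)
print(len(out), len(out[0]))  # 5 5
print(out[0][0], out[1][1])   # 91 211
```

The layer has no bias and its weight is exactly this kernel, so the PyTorch snippet above should print the same values at the same positions.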

2.4 Stride

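```python
import torch

# The following data stands in for the pixels of an input image
input = [3, 4, 6, 5, 7,
         2, 4, 6, 8, 2,
         1, 6, 7, 8, 4,
         9, 7, 4, 6, 2,
         3, 7, 5, 4, 1]
input = torch.Tensor(input).view(1, 1, 5, 5)  # convert to a tensor of shape (B, C, H, W) = (1, 1, 5, 5)

# stride=2 moves the kernel two pixels at a time
conv_layer = torch.nn.Conv2d(1, 1, kernel_size=3, stride=2, bias=False)

# Build the kernel: view(out_channels (number of kernels), in_channels (channels per kernel), 3, 3)
kernel = torch.Tensor([1, 2, 3, 4, 5, 6, 7, 8, 9]).view(1, 1, 3, 3)
conv_layer.weight.data = kernel.data  # use this kernel as the layer's weight

output = conv_layer(input)  # run the convolution
print(output)
```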
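With `stride=2` the window jumps two pixels at a time. Without padding, the result is therefore simply every second row and column of the stride-1 output. The sketch below is again plain Python with an illustrative helper name (`cross_correlate` is not a PyTorch API). It computes the 2 × 2 strided result directly and checks it against that subsampling view.

```python
def cross_correlate(image, kernel, stride=1):
    """Illustrative helper: no-padding 2-D cross-correlation with a given stride."""
    k = len(kernel)
    out_h = (len(image) - k) // stride + 1
    out_w = (len(image[0]) - k) // stride + 1
    return [
        [sum(image[i * stride + a][j * stride + b] * kernel[a][b]
             for a in range(k) for b in range(k))
         for j in range(out_w)]
        for i in range(out_h)
    ]


image = [[3, 4, 6, 5, 7],
         [2, 4, 6, 8, 2],
         [1, 6, 7, 8, 4],
         [9, 7, 4, 6, 2],
         [3, 7, 5, 4, 1]]
kernel = [[1, 2, 3],
          [4, 5, 6],
          [7, 8, 9]]

strided = cross_correlate(image, kernel, stride=2)      # 2x2
dense = cross_correlate(image, kernel, stride=1)        # 3x3
subsampled = [row[::2] for row in dense[::2]]           # keep rows/columns 0 and 2
print(strided)                # [[211, 262], [251, 169]]
print(strided == subsampled)  # True
```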

2.5 Pooling layers

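```python
import torch

# The following data stands in for the pixels of an input image
input = [3, 4, 6, 5, 7,
         2, 4, 6, 8, 2,
         1, 6, 7, 8, 4,
         9, 7, 4, 6, 2,
         3, 7, 5, 4, 1]
input = torch.Tensor(input).view(1, 1, 5, 5)  # convert to a tensor of shape (B, C, H, W) = (1, 1, 5, 5)

# Max-pooling layer with a 2x2 window (the stride defaults to the window size)
maxpooling_layer = torch.nn.MaxPool2d(kernel_size=2)

output = maxpooling_layer(input)  # run the max pooling
print(output)
```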

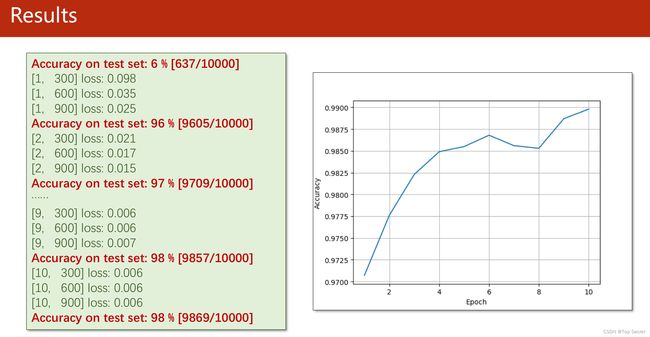
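By default, `MaxPool2d(kernel_size=2)` uses a stride equal to its kernel size. It slides non-overlapping 2 × 2 windows over each channel and keeps the largest value in each. A trailing row or column that cannot fill a whole window is dropped, which is why the 5 × 5 input yields a 2 × 2 output. Unlike a convolution, the layer has no learnable weights. Here is a plain-Python sketch of that rule. `max_pool2d` is an illustrative helper, not `torch.nn.functional.max_pool2d`.

```python
def max_pool2d(image, k):
    """Illustrative helper: non-overlapping k x k max pooling (stride = k, no padding)."""
    out_h = len(image) // k      # leftover rows are dropped
    out_w = len(image[0]) // k   # leftover columns are dropped
    return [
        [max(image[i * k + a][j * k + b] for a in range(k) for b in range(k))
         for j in range(out_w)]
        for i in range(out_h)
    ]


image = [[3, 4, 6, 5, 7],
         [2, 4, 6, 8, 2],
         [1, 6, 7, 8, 4],
         [9, 7, 4, 6, 2],
         [3, 7, 5, 4, 1]]

print(max_pool2d(image, 2))  # [[4, 8], [9, 8]]
```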

3. A CNN example

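3.1 Complete code

Notes on the code:

1. `torch.nn.Conv2d(1, 10, kernel_size=3, stride=2, bias=False)`: 1 is the number of input channels, since a grayscale image has a single channel. 10 is the number of output channels; equivalently, the first convolutional layer uses 10 kernels. `kernel_size=3` means a 3×3 kernel. `stride=2` is the step the kernel moves during the convolution; the default is 1. `bias=False` disables the additive bias, which is enabled by default (`bias=True`). `padding` (default 0) is the number of zeros added on each side of the input.

2. `self.fc = torch.nn.Linear(320, 10)`: to find the 320, add `print(x.shape)` right after `x = x.view(batch_size, -1)`. This prints `(64, 320)`, where 64 is the batch size and 320 is the number of input features for the fully connected layer (20 channels × 4 × 4).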
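You can also work out the 320 by hand instead of printing it. Each unpadded 5 × 5 convolution reduces the side length by 4, and each `MaxPool2d(2)` halves it, rounding down. The plain-Python trace below follows a 28 × 28 MNIST image through `Net`. The helper names are illustrative, not part of PyTorch.

```python
def conv_out(n, k, p=0, s=1):
    """Illustrative helper: output side length, floor((n + 2p - k) / s) + 1."""
    return (n + 2 * p - k) // s + 1


def trace_mnist_net(size=28):
    """Illustrative trace of the spatial size through Net for one input image."""
    size = conv_out(size, 5)        # conv1, 5x5 kernel: 28 -> 24
    size = conv_out(size, 2, s=2)   # MaxPool2d(2): 24 -> 12
    size = conv_out(size, 5)        # conv2, 5x5 kernel: 12 -> 8
    size = conv_out(size, 2, s=2)   # MaxPool2d(2): 8 -> 4
    channels = 20                   # conv2 has 20 output channels
    return channels, size, channels * size * size


print(trace_mnist_net())  # (20, 4, 320)
```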

3.1.1 Training on the CPU

import torch

from torchvision import transforms

from torchvision import datasets

from torch.utils.data import DataLoader

import torch.nn.functional as F

import torch.optim as optim

# prepare dataset

batch_size = 64

transform = transforms.Compose([transforms.ToTensor(), transforms.Normalize((0.1307,), (0.3081,))])

train_dataset = datasets.MNIST(root='../dataset/mnist/', train=True, download=True, transform=transform)

train_loader = DataLoader(train_dataset, shuffle=True, batch_size=batch_size)

test_dataset = datasets.MNIST(root='../dataset/mnist/', train=False, download=True, transform=transform)

test_loader = DataLoader(test_dataset, shuffle=False, batch_size=batch_size)

# design model using class 构建网络模型

class Net(torch.nn.Module):

def __init__(self):

super(Net, self).__init__()

self.conv1 = torch.nn.Conv2d(1, 10, kernel_size=5) #卷积核为5*5

self.conv2 = torch.nn.Conv2d(10, 20, kernel_size=5)

self.pooling = torch.nn.MaxPool2d(2)

self.fc = torch.nn.Linear(320, 10) #全连接层,320 = 20*4*4

def forward(self, x):

# flatten data from (n,1,28,28) to (n, 784)

batch_size = x.size(0) #样本的数据量

x = F.relu(self.pooling(self.conv1(x))) #执行第一层卷积运算

x = F.relu(self.pooling(self.conv2(x)))

# 以下两步用于全连接运算

x = x.view(batch_size, -1) # -1 此处自动算出的是320

x = self.fc(x)

return x

model = Net()

# construct loss and optimizer

criterion = torch.nn.CrossEntropyLoss()

optimizer = optim.SGD(model.parameters(), lr=0.01, momentum=0.5)

# training cycle forward, backward, update

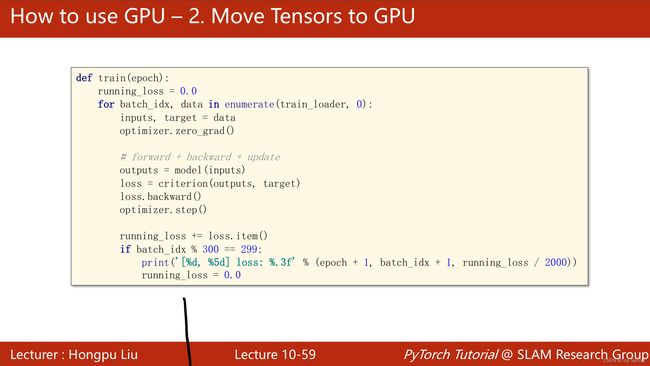

def train(epoch):

running_loss = 0.0

for batch_idx, data in enumerate(train_loader, 0):

inputs, target = data

optimizer.zero_grad()

outputs = model(inputs)

loss = criterion(outputs, target)

loss.backward()

optimizer.step()

running_loss += loss.item()

if batch_idx % 300 == 299:

print('[%d, %5d] loss: %.3f' % (epoch + 1, batch_idx + 1, running_loss / 300))

running_loss = 0.0

def test():

correct = 0

total = 0

with torch.no_grad():

for data in test_loader:

images, labels = data

outputs = model(images)

_, predicted = torch.max(outputs.data, dim=1)

total += labels.size(0)

correct += (predicted == labels).sum().item()

print('accuracy on test set: %d %% ' % (100 * correct / total))

if __name__ == '__main__':

for epoch in range(10):

train(epoch)

test()C:\Users\ZARD\anaconda3\envs\PyTorch\python.exe C:/Users/ZARD/PycharmProjects/pythonProject/机器学习库/PyTorch实战课程内容/lecture10/CNN01.py

Downloading http://yann.lecun.com/exdb/mnist/train-images-idx3-ubyte.gz

Using downloaded and verified file: ../dataset/mnist/MNIST\raw\train-images-idx3-ubyte.gz

Extracting ../dataset/mnist/MNIST\raw\train-images-idx3-ubyte.gz to ../dataset/mnist/MNIST\raw

Downloading http://yann.lecun.com/exdb/mnist/train-labels-idx1-ubyte.gz

Using downloaded and verified file: ../dataset/mnist/MNIST\raw\train-labels-idx1-ubyte.gz

Extracting ../dataset/mnist/MNIST\raw\train-labels-idx1-ubyte.gz to ../dataset/mnist/MNIST\raw

Downloading http://yann.lecun.com/exdb/mnist/t10k-images-idx3-ubyte.gz

Downloading http://yann.lecun.com/exdb/mnist/t10k-images-idx3-ubyte.gz to ../dataset/mnist/MNIST\raw\t10k-images-idx3-ubyte.gz

100%|██████████| 1648877/1648877 [00:30<00:00, 53892.68it/s]

Extracting ../dataset/mnist/MNIST\raw\t10k-images-idx3-ubyte.gz to ../dataset/mnist/MNIST\raw

Downloading http://yann.lecun.com/exdb/mnist/t10k-labels-idx1-ubyte.gz

Downloading http://yann.lecun.com/exdb/mnist/t10k-labels-idx1-ubyte.gz to ../dataset/mnist/MNIST\raw\t10k-labels-idx1-ubyte.gz

100%|██████████| 4542/4542 [00:03<00:00, 1153.06it/s]

Extracting ../dataset/mnist/MNIST\raw\t10k-labels-idx1-ubyte.gz to ../dataset/mnist/MNIST\raw

[1, 300] loss: 0.737

[1, 600] loss: 0.213

[1, 900] loss: 0.147

accuracy on test set: 96 %

[2, 300] loss: 0.118

[2, 600] loss: 0.105

[2, 900] loss: 0.084

accuracy on test set: 97 %

[3, 300] loss: 0.080

[3, 600] loss: 0.071

[3, 900] loss: 0.075

accuracy on test set: 98 %

[4, 300] loss: 0.065

[4, 600] loss: 0.060

[4, 900] loss: 0.064

accuracy on test set: 98 %

[5, 300] loss: 0.058

[5, 600] loss: 0.054

[5, 900] loss: 0.050

accuracy on test set: 98 %

[6, 300] loss: 0.046

[6, 600] loss: 0.051

[6, 900] loss: 0.049

accuracy on test set: 98 %

[7, 300] loss: 0.044

[7, 600] loss: 0.046

[7, 900] loss: 0.043

accuracy on test set: 98 %

[8, 300] loss: 0.040

[8, 600] loss: 0.044

[8, 900] loss: 0.038

accuracy on test set: 98 %

[9, 300] loss: 0.039

[9, 600] loss: 0.037

[9, 900] loss: 0.038

accuracy on test set: 98 %

[10, 300] loss: 0.038

[10, 600] loss: 0.035

[10, 900] loss: 0.034

accuracy on test set: 98 %

Process finished with exit code 0

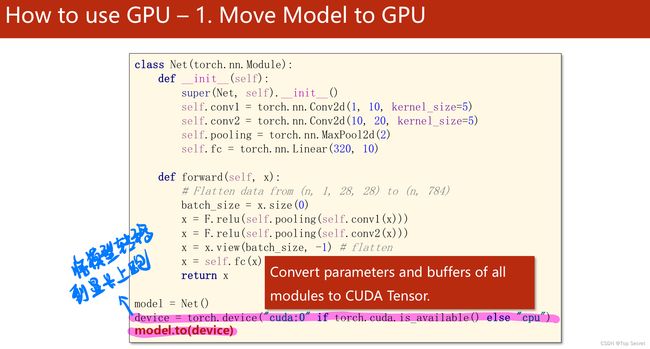

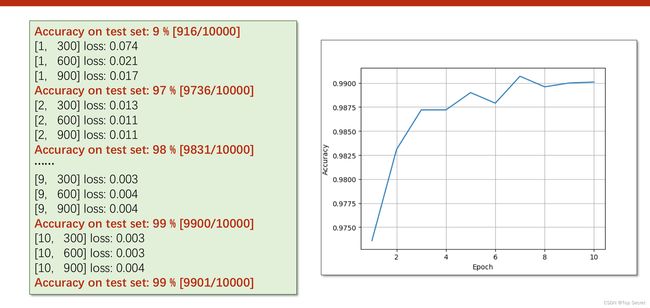

3.1.2 Training on the GPU

import torch

from torchvision import transforms

from torchvision import datasets

from torch.utils.data import DataLoader

import torch.nn.functional as F

import torch.optim as optim

import matplotlib.pyplot as plt

# prepare dataset

batch_size = 64

transform = transforms.Compose([transforms.ToTensor(), transforms.Normalize((0.1307,), (0.3081,))])

train_dataset = datasets.MNIST(root='../dataset/mnist/', train=True, download=True, transform=transform)

train_loader = DataLoader(train_dataset, shuffle=True, batch_size=batch_size)

test_dataset = datasets.MNIST(root='../dataset/mnist/', train=False, download=True, transform=transform)

test_loader = DataLoader(test_dataset, shuffle=False, batch_size=batch_size)

# design model using class

class Net(torch.nn.Module):

def __init__(self):

super(Net, self).__init__()

self.conv1 = torch.nn.Conv2d(1, 10, kernel_size=5)

self.conv2 = torch.nn.Conv2d(10, 20, kernel_size=5)

self.pooling = torch.nn.MaxPool2d(2)

self.fc = torch.nn.Linear(320, 10)

def forward(self, x):

# flatten data from (n,1,28,28) to (n, 784)

batch_size = x.size(0)

x = F.relu(self.pooling(self.conv1(x)))

x = F.relu(self.pooling(self.conv2(x)))

x = x.view(batch_size, -1) # -1 此处自动算出的是320

# print("x.shape",x.shape)

x = self.fc(x)

return x

model = Net()

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

model.to(device)

# construct loss and optimizer

criterion = torch.nn.CrossEntropyLoss()

optimizer = optim.SGD(model.parameters(), lr=0.01, momentum=0.5)

# training cycle forward, backward, update

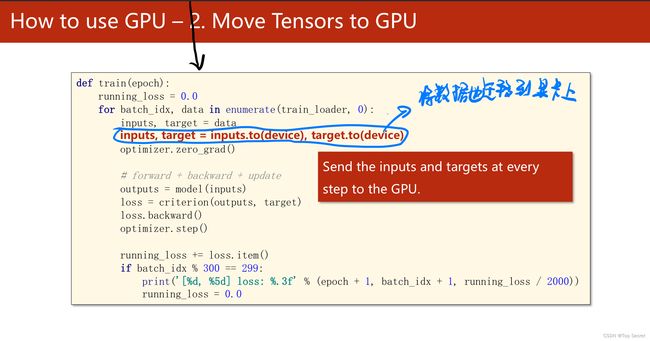

def train(epoch):

running_loss = 0.0

for batch_idx, data in enumerate(train_loader, 0):

inputs, target = data

inputs, target = inputs.to(device), target.to(device)

optimizer.zero_grad()

outputs = model(inputs)

loss = criterion(outputs, target)

loss.backward()

optimizer.step()

running_loss += loss.item()

if batch_idx % 300 == 299:

print('[%d, %5d] loss: %.3f' % (epoch+1, batch_idx+1, running_loss/300))

running_loss = 0.0

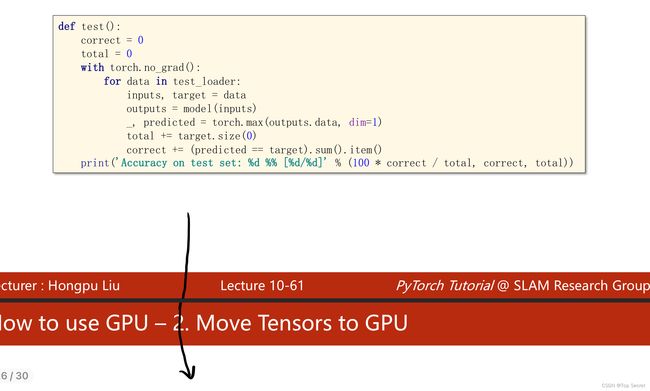

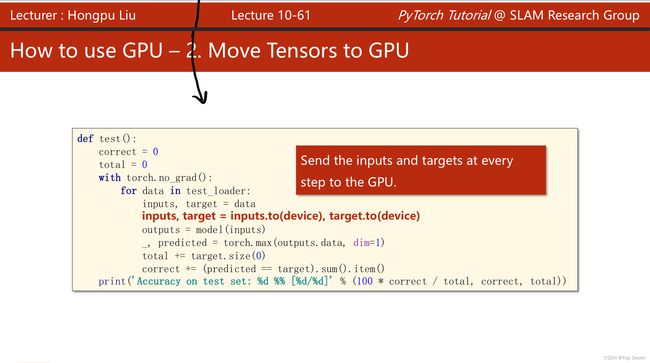

def test():

correct = 0

total = 0

with torch.no_grad():

for data in test_loader:

images, labels = data

images, labels = images.to(device), labels.to(device)

outputs = model(images)

_, predicted = torch.max(outputs.data, dim=1)

total += labels.size(0)

correct += (predicted == labels).sum().item()

print('accuracy on test set: %d %% ' % (100*correct/total))

return correct/total

if __name__ == '__main__':

epoch_list = []

acc_list = []

for epoch in range(10):

train(epoch)

acc = test()

epoch_list.append(epoch)

acc_list.append(acc)

plt.plot(epoch_list,acc_list)

plt.ylabel('accuracy')

plt.xlabel('epoch')

plt.show()

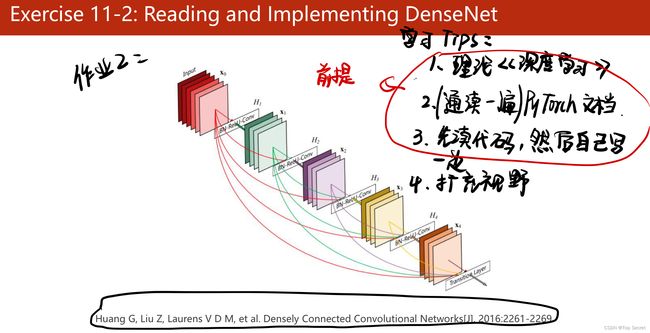

4. Advanced convolutional neural networks

4.1 GoogLeNet

4.2 Inception Module

4.2.1 1×1 convolutions and their purpose
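A 1×1 convolution mixes information across channels at each pixel without looking at neighbouring pixels. That makes it a cheap way to change the channel count. In Inception, its main job is to reduce the number of channels before an expensive 5×5 convolution. The arithmetic below counts multiplications, ignoring bias, for an illustrative case: a 28×28×192 input mapped to 32 channels by a 5×5 convolution with `padding=2`, with and without a 1×1 bottleneck down to 16 channels. These sizes are an assumed example, not taken from the code in this post, and `conv_mults` is a hypothetical helper.

```python
def conv_mults(h, w, in_ch, out_ch, k):
    """Hypothetical helper: multiplications of a size-preserving k x k convolution
    (each of the h*w*out_ch outputs needs k*k*in_ch multiplications)."""
    return k * k * h * w * in_ch * out_ch


direct = conv_mults(28, 28, 192, 32, 5)                # 5x5 conv straight from 192 channels
bottleneck = (conv_mults(28, 28, 192, 16, 1)           # 1x1 conv: 192 -> 16 channels
              + conv_mults(28, 28, 16, 32, 5))         # then 5x5 conv: 16 -> 32 channels

print(direct)                        # 120422400
print(bottleneck)                    # 12443648
print(f"{direct / bottleneck:.1f}")  # 9.7
```

The output shape is the same in both cases (28×28×32), but the bottleneck version needs roughly a tenth of the multiplications.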

4.2.2 Implementation of the Inception Module

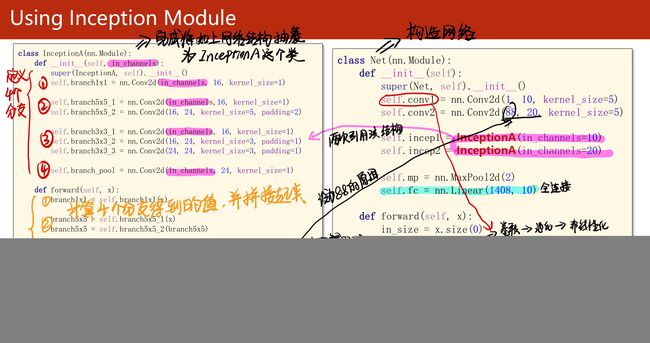
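Every branch keeps the spatial size unchanged: a 5×5 kernel uses `padding=2`, the 3×3 kernels use `padding=1`, and average pooling uses `kernel_size=3, stride=1, padding=1`. This is what lets `torch.cat(..., dim=1)` stack the branch outputs along the channel axis. The channel count of the result is then just the sum of the branch widths. The plain-Python check below verifies both facts using the branch widths from the code in 4.2.3. `out_size` is an illustrative helper, not a PyTorch function.

```python
def out_size(n, k, p=0, s=1):
    """Illustrative helper: output side length, floor((n + 2p - k) / s) + 1."""
    return (n + 2 * p - k) // s + 1


# (kernel_size, padding, stride) of every layer in the block that looks at neighbouring pixels;
# 1x1 convolutions never change the spatial size, so they need no check
spatial_layers = {
    "branch5x5_2": (5, 2, 1),
    "branch3x3_2": (3, 1, 1),
    "branch3x3_3": (3, 1, 1),
    "avg_pool2d": (3, 1, 1),
}
size_preserved = all(out_size(n, k, p, s) == n
                     for (k, p, s) in spatial_layers.values()
                     for n in (4, 12, 28))

# output channels of the last layer in each branch, concatenated along dim=1
branch_channels = {"branch1x1": 16, "branch5x5": 24, "branch3x3": 24, "branch_pool": 24}
total_channels = sum(branch_channels.values())

print(size_preserved)  # True
print(total_channels)  # 88
```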

4.2.3 Code for the Inception Module and the model

#将某分支网络抽象为一个Inception类

class Inception(nn.Module):

def __init__(self,in_channels):

super(Inception,self).__init__()

#定义如下4个分支结构

self.branch1x1 = nn.Conv2d(in_channels,16,kernel_size=1) # 该分支为一层卷积核为1*1的16通道的卷积层

#该分支为由两层构成,第一层卷积核1*1,输出通道为16;第二层卷积核为5*5,输出通道为24

self.branch5x5_1 = nn.Conv2d(in_channels,16,kernel_size=1)

self.branch5x5_2 = nn.Conv2d(16,24,kernel_size=5,padding=2)

#第三分支

self.branch3x3_1 = nn.Conv2d(in_channels,16,kernel_size=1)

self.branch3x3_2 = nn.Conv2d(16,24,kernel_size=3,padding=1)

self.branch3x3_3 = nn.Conv2d(24,24,kernel_size=3,padding=1)

#第四分支:池化分支

self.branch_pool = nn.Conv2d(in_channels,24,kernel_size=1)

#计算各层分支的卷积和

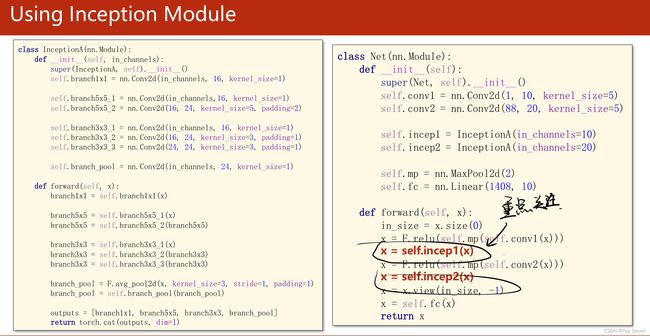

def forward(self,x):

branch1x1 = self.branch1x1(x)

branch5x5 = self.branch5x5_1(x)

branch5x5 = self.branch5x5_2(branch5x5)

branch3x3 = self.branch3x3_1(x)

branch3x3 = self.branch3x3_3(branch3x3)

branch3x3 = self.branch3x3_3(branch3x3)

branch_pool = F.avg_pool2d(x,kernel_size=3,stride=1,padding=1)

branch_pool = self.branch_pool(branch_pool)

output = [branch1x1,branch5x5,branch3x3,branch_pool]

return torch.cat(output,dim=1)

class Net(nn.Module):

def __init__(self):

super(Net, self).__init__()

self.conv1 = nn.Conv2d(1,10,kernel_size=5)

self.conv2 = nn.Conv2d(88,20,kernel_size=5)

#实例化分支网络结构Inception

self.incep1 = Inception(in_channels=10)

self.incep2 = Inception(in_channels=20)

self.mp = nn.MaxPool2d(2) #池化

self.fc = nn.Linear(1408,10) #实例化全连接层,全连接层的实质就是一个线性层

def forward(self,x):

in_side = x.size(0)

x = F.relu(self.mp(self.conv1(x)))

x = self.incep1(x)

x = F.relu(self.mp(self.conv2(x)))

x = self.incep2(x)

#全连接层

x = x.view(in_side,-1)

x = self.fc(x)

return x完整代码:

```python
import torch
import torch.nn as nn
from torchvision import transforms
from torchvision import datasets
from torch.utils.data import DataLoader
import torch.nn.functional as F
import torch.optim as optim

# prepare datasets
batch_size = 64
# normalize with the MNIST mean and standard deviation
transform = transforms.Compose([transforms.ToTensor(), transforms.Normalize((0.1307,), (0.3081,))])

train_dataset = datasets.MNIST(root='../dataset/mnist/', train=True, download=True, transform=transform)
train_loader = DataLoader(train_dataset, shuffle=True, batch_size=batch_size)
test_dataset = datasets.MNIST(root='../dataset/mnist/', train=False, download=True, transform=transform)
test_loader = DataLoader(test_dataset, shuffle=False, batch_size=batch_size)


# Wrap the multi-branch block in an Inception class
class Inception(nn.Module):
    def __init__(self, in_channels):
        super(Inception, self).__init__()
        # Branch 1: a single 1x1 convolution with 16 output channels
        self.branch1x1 = nn.Conv2d(in_channels, 16, kernel_size=1)

        # Branch 2: a 1x1 convolution to 16 channels, then a 5x5 convolution to 24 channels
        self.branch5x5_1 = nn.Conv2d(in_channels, 16, kernel_size=1)
        self.branch5x5_2 = nn.Conv2d(16, 24, kernel_size=5, padding=2)

        # Branch 3: a 1x1 convolution to 16 channels, then two 3x3 convolutions with 24 channels
        self.branch3x3_1 = nn.Conv2d(in_channels, 16, kernel_size=1)
        self.branch3x3_2 = nn.Conv2d(16, 24, kernel_size=3, padding=1)
        self.branch3x3_3 = nn.Conv2d(24, 24, kernel_size=3, padding=1)

        # Branch 4: pooling branch
        self.branch_pool = nn.Conv2d(in_channels, 24, kernel_size=1)

    # Run every branch and concatenate the results along the channel dimension
    def forward(self, x):
        branch1x1 = self.branch1x1(x)

        branch5x5 = self.branch5x5_1(x)
        branch5x5 = self.branch5x5_2(branch5x5)

        branch3x3 = self.branch3x3_1(x)
        branch3x3 = self.branch3x3_2(branch3x3)
        branch3x3 = self.branch3x3_3(branch3x3)

        branch_pool = F.avg_pool2d(x, kernel_size=3, stride=1, padding=1)
        branch_pool = self.branch_pool(branch_pool)

        output = [branch1x1, branch5x5, branch3x3, branch_pool]
        return torch.cat(output, dim=1)  # 88 channels


class Net(nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        self.conv1 = nn.Conv2d(1, 10, kernel_size=5)
        self.conv2 = nn.Conv2d(88, 20, kernel_size=5)  # 88 = output channels of incep1

        # Instantiate the Inception blocks
        self.incep1 = Inception(in_channels=10)
        self.incep2 = Inception(in_channels=20)

        self.mp = nn.MaxPool2d(2)      # pooling
        self.fc = nn.Linear(1408, 10)  # fully connected (linear) layer; 1408 = 88*4*4

    def forward(self, x):
        in_size = x.size(0)
        x = F.relu(self.mp(self.conv1(x)))
        x = self.incep1(x)
        x = F.relu(self.mp(self.conv2(x)))
        x = self.incep2(x)
        # fully connected layer
        x = x.view(in_size, -1)
        x = self.fc(x)
        return x


model = Net()

# construct loss and optimizer
criterion = torch.nn.CrossEntropyLoss()
optimizer = optim.SGD(model.parameters(), lr=0.01, momentum=0.5)


# training cycle: forward, backward, update
def train(epoch):
    running_loss = 0.0
    for batch_idx, data in enumerate(train_loader, 0):
        inputs, target = data
        optimizer.zero_grad()

        outputs = model(inputs)
        loss = criterion(outputs, target)
        loss.backward()
        optimizer.step()

        running_loss += loss.item()
        if batch_idx % 300 == 299:
            print('[%d, %5d] loss: %.3f' % (epoch + 1, batch_idx + 1, running_loss / 300))
            running_loss = 0.0


def test():
    correct = 0
    total = 0
    with torch.no_grad():
        for data in test_loader:
            images, labels = data
            outputs = model(images)
            _, predicted = torch.max(outputs.data, dim=1)
            total += labels.size(0)
            correct += (predicted == labels).sum().item()
    print('accuracy on test set: %d %% ' % (100 * correct / total))


if __name__ == '__main__':
    for epoch in range(10):
        train(epoch)
        test()
```

Results:

```
C:\Users\ZARD\anaconda3\envs\PyTorch\python.exe C:/Users/ZARD/PycharmProjects/pythonProject/机器学习库/PyTorch实战课程内容/lecture10/CNN_plus.py
[1, 300] loss: 0.884
[1, 600] loss: 0.224
[1, 900] loss: 0.144
accuracy on test set: 96 %
[2, 300] loss: 0.108
[2, 600] loss: 0.095
[2, 900] loss: 0.087
accuracy on test set: 97 %
[3, 300] loss: 0.077
[3, 600] loss: 0.068
[3, 900] loss: 0.069
accuracy on test set: 98 %
[4, 300] loss: 0.060
[4, 600] loss: 0.060
[4, 900] loss: 0.062
accuracy on test set: 98 %
```
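The `1408` in `nn.Linear(1408, 10)` comes from tracing a 28×28 input through `Net`. Each Inception block keeps the spatial size and always outputs 88 channels, so only the convolutions and poolings change the side length. The plain-Python trace below reproduces the number; the helper names are illustrative. In practice you can also push a dummy tensor such as `torch.zeros(1, 1, 28, 28)` through the layers before `fc` and read off `x.shape`. In recent PyTorch versions (1.8 and later), `torch.nn.LazyLinear` can infer `in_features` on the first forward pass.

```python
def out_size(n, k, p=0, s=1):
    """Illustrative helper: output side length, floor((n + 2p - k) / s) + 1."""
    return (n + 2 * p - k) // s + 1


INCEPTION_CHANNELS = 16 + 24 + 24 + 24  # the four branches are concatenated on dim=1


def trace_inception_net(size=28):
    """Illustrative trace of the Inception Net for one 28x28 image."""
    size = out_size(size, 5)       # conv1 (1 -> 10 channels): 28 -> 24
    size = out_size(size, 2, s=2)  # max pooling: 24 -> 12
    # incep1 keeps 12x12 and outputs 88 channels, hence Conv2d(88, 20, ...)
    size = out_size(size, 5)       # conv2 (88 -> 20 channels): 12 -> 8
    size = out_size(size, 2, s=2)  # max pooling: 8 -> 4
    # incep2 keeps 4x4 and again outputs 88 channels
    return INCEPTION_CHANNELS * size * size


print(trace_inception_net())  # 1408
```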

4.3 Residual Network
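Residual connections address the vanishing-gradient problem. By the chain rule, the gradient that reaches the early layers is a product of per-layer derivatives. If those derivatives are all below 1, the product shrinks exponentially with depth. A residual block computes `F(x) + x` (before its final ReLU), so its derivative with respect to `x` is `F'(x) + 1`, and the product no longer collapses towards zero. The toy calculation below illustrates this with scalar "layers" whose local derivative is fixed at 0.1. This value is an assumption chosen for illustration; it does not describe a real network.

```python
def chain_gradient(local_derivative, depth, residual):
    """Toy model: product of per-layer derivatives through `depth` stacked layers.
    A plain layer contributes F'(x); a residual layer contributes F'(x) + 1."""
    grad = 1.0
    for _ in range(depth):
        grad *= (local_derivative + 1.0) if residual else local_derivative
    return grad


plain = chain_gradient(0.1, depth=10, residual=False)
res = chain_gradient(0.1, depth=10, residual=True)
print(f"{plain:.1e}")  # 1.0e-10
print(f"{res:.3f}")    # 2.594
```

Real layers do not have a constant derivative, so this only shows the trend. Without the skip path, the signal reaching the first layer is ten orders of magnitude smaller.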

4.3.1 Implementing the Residual Block

Code:

```python
import torch.nn as nn
import torch.nn.functional as F


# design model using class
class ResidualBlock(nn.Module):
    def __init__(self, channels):
        super(ResidualBlock, self).__init__()
        self.channels = channels
        # both convolutions keep the channel count, and padding=1 keeps the spatial size,
        # so x and y have the same shape and can be added
        self.conv1 = nn.Conv2d(channels, channels, kernel_size=3, padding=1)
        self.conv2 = nn.Conv2d(channels, channels, kernel_size=3, padding=1)

    def forward(self, x):
        y = F.relu(self.conv1(x))
        y = self.conv2(y)
        return F.relu(x + y)  # add the skip connection before the final activation


class Net(nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        self.conv1 = nn.Conv2d(1, 16, kernel_size=5)
        self.conv2 = nn.Conv2d(16, 32, kernel_size=5)
        self.rblock1 = ResidualBlock(16)
        self.rblock2 = ResidualBlock(32)
        self.mp = nn.MaxPool2d(2)
        self.fc = nn.Linear(512, 10)  # 512 = 32*4*4

    def forward(self, x):
        in_size = x.size(0)
        x = self.mp(F.relu(self.conv1(x)))
        x = self.rblock1(x)
        x = self.mp(F.relu(self.conv2(x)))
        x = self.rblock2(x)
        x = x.view(in_size, -1)
        x = self.fc(x)
        return x
```
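Two shape facts make `ResidualBlock` work. First, `x + y` requires `y` to have exactly the same shape as `x`. That is why both convolutions map `channels -> channels` and pair a 3×3 kernel with `padding=1`. Second, the `512` in `nn.Linear(512, 10)` comes from the same kind of trace as before. conv1 plus pooling takes the side from 28 to 24 to 12 with 16 channels, and conv2 plus pooling takes it from 12 to 8 to 4 with 32 channels. The residual blocks leave the shape unchanged, giving 32 × 4 × 4 = 512. A plain-Python check follows; the helper names are illustrative.

```python
def out_size(n, k, p=0, s=1):
    """Illustrative helper: output side length, floor((n + 2p - k) / s) + 1."""
    return (n + 2 * p - k) // s + 1


def trace_residual_net(size=28):
    """Illustrative trace of the residual Net for one 28x28 image."""
    size = out_size(size, 5)               # conv1 (1 -> 16 channels): 28 -> 24
    size = out_size(size, 2, s=2)          # max pooling: 24 -> 12
    assert out_size(size, 3, p=1) == size  # rblock1's 3x3 convs keep 12x12, so x + y is valid
    size = out_size(size, 5)               # conv2 (16 -> 32 channels): 12 -> 8
    size = out_size(size, 2, s=2)          # max pooling: 8 -> 4
    assert out_size(size, 3, p=1) == size  # rblock2's 3x3 convs keep 4x4
    return 32 * size * size


print(trace_residual_net())  # 512
```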

Complete code:

```python
import torch
import torch.nn as nn
from torchvision import transforms
from torchvision import datasets
from torch.utils.data import DataLoader
import torch.nn.functional as F
import torch.optim as optim

# prepare dataset
batch_size = 64
# normalize with the MNIST mean and standard deviation
transform = transforms.Compose([transforms.ToTensor(), transforms.Normalize((0.1307,), (0.3081,))])

train_dataset = datasets.MNIST(root='../dataset/mnist/', train=True, download=True, transform=transform)
train_loader = DataLoader(train_dataset, shuffle=True, batch_size=batch_size)
test_dataset = datasets.MNIST(root='../dataset/mnist/', train=False, download=True, transform=transform)
test_loader = DataLoader(test_dataset, shuffle=False, batch_size=batch_size)


# design model using class
class ResidualBlock(nn.Module):
    def __init__(self, channels):
        super(ResidualBlock, self).__init__()
        self.channels = channels
        self.conv1 = nn.Conv2d(channels, channels, kernel_size=3, padding=1)
        self.conv2 = nn.Conv2d(channels, channels, kernel_size=3, padding=1)

    def forward(self, x):
        y = F.relu(self.conv1(x))
        y = self.conv2(y)
        return F.relu(x + y)  # skip connection: add the input before the final activation


class Net(nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        self.conv1 = nn.Conv2d(1, 16, kernel_size=5)
        self.conv2 = nn.Conv2d(16, 32, kernel_size=5)
        self.rblock1 = ResidualBlock(16)
        self.rblock2 = ResidualBlock(32)
        self.mp = nn.MaxPool2d(2)
        self.fc = nn.Linear(512, 10)  # 512 = 32*4*4

    def forward(self, x):
        in_size = x.size(0)
        x = self.mp(F.relu(self.conv1(x)))
        x = self.rblock1(x)
        x = self.mp(F.relu(self.conv2(x)))
        x = self.rblock2(x)
        x = x.view(in_size, -1)
        x = self.fc(x)
        return x


model = Net()

# construct loss and optimizer
criterion = torch.nn.CrossEntropyLoss()
optimizer = optim.SGD(model.parameters(), lr=0.01, momentum=0.5)


# training cycle: forward, backward, update
def train(epoch):
    running_loss = 0.0
    for batch_idx, data in enumerate(train_loader, 0):
        inputs, target = data
        optimizer.zero_grad()

        outputs = model(inputs)
        loss = criterion(outputs, target)
        loss.backward()
        optimizer.step()

        running_loss += loss.item()
        if batch_idx % 300 == 299:
            print('[%d, %5d] loss: %.3f' % (epoch + 1, batch_idx + 1, running_loss / 300))
            running_loss = 0.0


def test():
    correct = 0
    total = 0
    with torch.no_grad():
        for data in test_loader:
            images, labels = data
            outputs = model(images)
            _, predicted = torch.max(outputs.data, dim=1)
            total += labels.size(0)
            correct += (predicted == labels).sum().item()
    print('accuracy on test set: %d %% ' % (100 * correct / total))


if __name__ == '__main__':
    for epoch in range(10):
        train(epoch)
        test()
```

Run results:

```
C:\Users\ZARD\anaconda3\envs\PyTorch\python.exe C:/Users/ZARD/PycharmProjects/pythonProject/机器学习库/PyTorch实战课程内容/lecture10/aaa.py
[1, 300] loss: 0.509
[1, 600] loss: 0.155
[1, 900] loss: 0.107
accuracy on test set: 97 %
[2, 300] loss: 0.087
[2, 600] loss: 0.076
[2, 900] loss: 0.073
accuracy on test set: 98 %
```

Another, more heavily commented version of the MNIST CNN from section 3:

```python
import torch
from torch.utils.data import DataLoader  # for loading the dataset
from torchvision import transforms       # raw data preprocessing
from torchvision import datasets         # PyTorch conveniently ships the MNIST dataset
import torch.nn.functional as F          # activation functions
import torch.optim as optim

batch_size = 64

# The images arrive as PIL images; convert them to tensors the model can train on
transform = transforms.Compose([transforms.ToTensor()])

# Load the training set. With download=True, the dataset is downloaded automatically
# if it is not found at the given path.
train_dataset = datasets.MNIST(root='../data', train=True, download=True, transform=transform)
train_loader = DataLoader(dataset=train_dataset, shuffle=True, batch_size=batch_size)

# Load the test set the same way
test_dataset = datasets.MNIST(root='../data', train=False, download=True, transform=transform)
test_loader = DataLoader(dataset=test_dataset, shuffle=False, batch_size=batch_size)


# Now the model itself
class Net(torch.nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        # First convolutional layer: the image has 1 input channel, so the first argument is 1;
        # it outputs 10 channels, and kernel_size=5 gives a 5x5 kernel
        self.conv1 = torch.nn.Conv2d(1, 10, kernel_size=5)
        # The second layer follows the same pattern: 10 -> 20 channels
        self.conv2 = torch.nn.Conv2d(10, 20, kernel_size=5)
        # A max-pooling layer
        self.pooling = torch.nn.MaxPool2d(2)
        # Finally, the linear layer used for classification
        self.fc = torch.nn.Linear(320, 10)

    # The forward computation
    def forward(self, x):
        batch_size = x.size(0)  # dimension 0 of x is the batch size
        # convolution, then pooling, then ReLU activation
        x = F.relu(self.pooling(self.conv1(x)))
        # the same steps with the second convolution
        x = F.relu(self.pooling(self.conv2(x)))
        # The final fully connected layer needs a flat vector, so flatten each
        # 20x4x4 feature map into 320 values: (n, 20, 4, 4) -> (n, 320)
        x = x.view(batch_size, -1)
        # The linear layer outputs one score (logit) per digit 0-9;
        # CrossEntropyLoss applies the softmax internally
        x = self.fc(x)
        return x


model = Net()  # instantiate the model

# Move the computation to the GPU if one is available
device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
model.to(device)

# Loss function measuring the gap between the model outputs and the labels
criterion = torch.nn.CrossEntropyLoss()

# The optimizer updates the layer weights using the gradients from backpropagation
optimizer = optim.SGD(model.parameters(), lr=0.1, momentum=0.5)  # lr is the learning rate


def train(epoch):
    running_loss = 0.0
    for batch_idx, data in enumerate(train_loader, 0):  # one mini-batch per iteration
        inputs, target = data
        inputs, target = inputs.to(device), target.to(device)
        optimizer.zero_grad()              # reset the gradients
        outputs = model(inputs)            # forward pass
        loss = criterion(outputs, target)  # compute the loss
        loss.backward()                    # backward pass: compute gradients
        optimizer.step()                   # update the weights
        running_loss += loss.item()        # accumulate the loss
        # print the average loss every 300 mini-batches
        if batch_idx % 300 == 299:
            print('[%d, %5d] loss: %.3f' % (epoch + 1, batch_idx + 1, running_loss / 300))
            running_loss = 0.0


def test():
    correct = 0
    total = 0
    with torch.no_grad():  # no gradients needed
        for data in test_loader:
            inputs, target = data
            inputs, target = inputs.to(device), target.to(device)
            outputs = model(inputs)
            # the predicted class is the one with the highest score
            _, predicted = torch.max(outputs.data, dim=1)
            total += target.size(0)
            # count the correct predictions
            correct += (predicted == target).sum().item()
    print('Accuracy on test set: %d %% [%d/%d]' % (100 * correct / total, correct, total))


if __name__ == '__main__':
    for epoch in range(10):
        train(epoch)
        if epoch % 10 == 9:  # evaluate only after the last (10th) epoch
            test()
```