PyTorch Learning (3): Model Layers

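Table of contents

- 1. Custom model layers
- 2. Using pretrained models
- 3. Model-building styles
  - 3.1 Using the `add_module` method
  - 3.2 Passing layers to `Sequential`
  - 3.3 Sequential as a model container
  - 3.4 ModuleList as a model container
  - 3.5 ModuleDict as a model container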

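Once the data pipeline can deliver data one batch at a time, the next step is to build the model. How the model is built largely determines how well it learns. All of PyTorch's model layers live in the `torch.nn` module.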

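1. Custom model layers

To look up the individual layer APIs and what each one does, see the official documentation: https://pytorch.org/docs/stable/nn.html#shuffle-layers

To define your own layer, subclass `nn.Module`. The `__init__` method must call the parent class's `__init__` before it defines any layers, and the class must implement `forward(input)`, which connects the layers together.

Here is an example: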

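    from torch import nn

    class Block(nn.Module):
        # Block is not defined in the original post. This is a hypothetical
        # placeholder that adds the last input time step as a residual.
        def forward(self, x, x_input):
            return x + x_input[:, -1, :]

    class Net(nn.Module):
        def __init__(self):
            super(Net, self).__init__()  # call the parent class initializer first
            # stacked LSTM with 5 layers (num_layers=5)
            self.lstm = nn.LSTM(input_size=3, hidden_size=3, num_layers=5, batch_first=True)
            self.linear = nn.Linear(3, 3)
            self.block = Block()

        # override the forward method
        def forward(self, x_input):
            # define how data flows through the layers
            x = self.lstm(x_input)[0][:, -1, :]  # LSTM output at the last time step
            x = self.linear(x)
            y = self.block(x, x_input)
            return y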
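As a quick check of the subclassing pattern described above, here is a minimal sketch of a custom module run on random data. The class name `LastStepRegressor` and the sizes are made up for illustration and are not from the original post.

```python
import torch
from torch import nn

class LastStepRegressor(nn.Module):  # hypothetical example module
    def __init__(self, n_features=3, hidden=8):
        super().__init__()  # must run before any layer is assigned
        self.lstm = nn.LSTM(input_size=n_features, hidden_size=hidden, batch_first=True)
        self.linear = nn.Linear(hidden, 1)

    def forward(self, x):
        out, _ = self.lstm(x)       # out: (batch, seq_len, hidden)
        last = out[:, -1, :]        # keep only the last time step
        return self.linear(last)    # (batch, 1)

model = LastStepRegressor()
x = torch.randn(4, 10, 3)           # batch of 4 sequences, 10 steps, 3 features
y = model(x)

# Layers assigned as attributes are registered automatically,
# so their parameters show up under the attribute names.
param_names = [name for name, _ in model.named_parameters()]
print(y.shape)
print(param_names)
```

Calling `model(x)` rather than `model.forward(x)` is the usual convention, since the call operator also runs any registered hooks.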

2. Using pretrained models

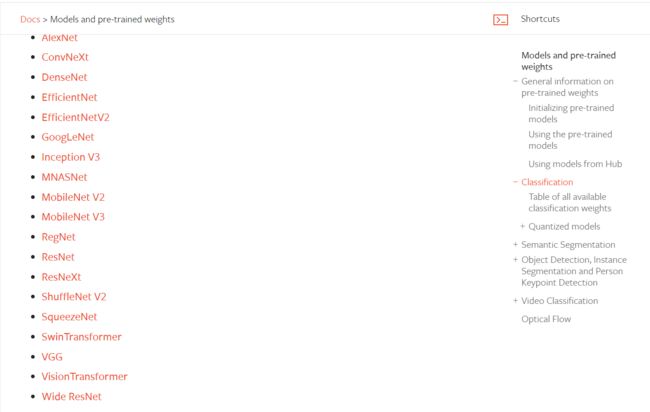

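Thanks to transfer learning, starting from a pretrained model often gives better results. In PyTorch, many pretrained models are bundled in the `models` module of `torchvision`. The official site lists the supported pretrained models at https://pytorch.org/vision/stable/models.html . Using one is simple. For example, loading a residual network (ResNet) takes only the following statements: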

    from torchvision import models

    model = models.resnet152(pretrained=True)

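Here `pretrained=True` loads the pretrained weights, which are downloaded on first use and then cached. On the author's Windows machine the default download path is C:\Users\ASUS\.cache\torch\hub\checkpoints , and all downloaded models are stored there. Note that torchvision 0.13 and later deprecate the `pretrained` argument in favor of `weights`, for example `weights=models.ResNet152_Weights.DEFAULT`.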
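The download location depends on the machine and user account. A small sketch for finding it on your own system, assuming only that `torch` is installed, uses `torch.hub.get_dir()`; pretrained checkpoints are placed in a `checkpoints` subfolder of that directory.

```python
import os
import torch

# Root directory used by torch.hub; by default this is <home>/.cache/torch/hub
hub_dir = torch.hub.get_dir()

# Downloaded pretrained weights are cached in the "checkpoints" subfolder
checkpoint_dir = os.path.join(hub_dir, "checkpoints")
print(checkpoint_dir)
```

The location can be changed with `torch.hub.set_dir(...)` or by setting the `TORCH_HOME` environment variable before the weights are downloaded.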

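3. Model-building styles

PyTorch offers several styles for adding layers when building a model. The first is the one shown above: subclass `nn.Module` and define your own class. A few other styles follow.

3.1 Using the add_module method

`add_module` adds a layer to a model under a given name. For example: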

    from torch import nn

    net = nn.Sequential()
    net.add_module("conv1", nn.Conv2d(in_channels=3, out_channels=32, kernel_size=3))
    net.add_module("pool1", nn.MaxPool2d(kernel_size=2, stride=2))
    net.add_module("conv2", nn.Conv2d(in_channels=32, out_channels=64, kernel_size=5))
    net.add_module("pool2", nn.MaxPool2d(kernel_size=2, stride=2))
    net.add_module("dropout", nn.Dropout2d(p=0.1))
    net.add_module("adaptive_pool", nn.AdaptiveMaxPool2d((1, 1)))
    net.add_module("flatten", nn.Flatten())
    net.add_module("linear1", nn.Linear(64, 32))
    net.add_module("relu", nn.ReLU())
    net.add_module("linear2", nn.Linear(32, 1))
    net.add_module("sigmoid", nn.Sigmoid())

    print(net)
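Layers added with `add_module` can later be reached by the name they were given. The following sketch uses a smaller network than the one above (the layer sizes are chosen only for illustration) to show name-based access and a forward pass.

```python
import torch
from torch import nn

net = nn.Sequential()
net.add_module("conv1", nn.Conv2d(3, 8, kernel_size=3))
net.add_module("pool1", nn.AdaptiveMaxPool2d((1, 1)))
net.add_module("flatten", nn.Flatten())
net.add_module("linear1", nn.Linear(8, 1))

# Names are kept in insertion order
layer_names = [name for name, _ in net.named_children()]

# A named layer is available as an attribute as well as by index
first_conv = net.conv1

# (2, 3, 16, 16) -> conv -> (2, 8, 14, 14) -> pool -> (2, 8, 1, 1) -> flatten -> (2, 8) -> (2, 1)
out = net(torch.randn(2, 3, 16, 16))
print(layer_names, out.shape)
```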

3.2 Passing layers to Sequential

    from torch import nn

    net = nn.Sequential(
        nn.Conv2d(in_channels=3, out_channels=32, kernel_size=3),
        nn.MaxPool2d(kernel_size=2, stride=2),
        nn.Conv2d(in_channels=32, out_channels=64, kernel_size=5),
        nn.MaxPool2d(kernel_size=2, stride=2),
        nn.Dropout2d(p=0.1),
        nn.AdaptiveMaxPool2d((1, 1)),
        nn.Flatten(),
        nn.Linear(64, 32),
        nn.ReLU(),
        nn.Linear(32, 1),
        nn.Sigmoid()
    )

    print(net)

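When layers are passed positionally like this, they cannot be given names; they are simply numbered 0, 1, 2, and so on. To name them, pass a `collections.OrderedDict` to `nn.Sequential` instead.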
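Layers passed positionally receive the index strings "0", "1", ... as their names. If names are wanted with this construction style, `nn.Sequential` also accepts an `OrderedDict`. A minimal sketch with illustrative layer sizes:

```python
from collections import OrderedDict
from torch import nn

# Named layers: pass an OrderedDict of (name, module) pairs
named_net = nn.Sequential(OrderedDict([
    ("linear1", nn.Linear(64, 32)),
    ("relu", nn.ReLU()),
    ("linear2", nn.Linear(32, 1)),
]))

# Positional layers: names default to their index
indexed_net = nn.Sequential(nn.Linear(64, 32), nn.ReLU())

named = [name for name, _ in named_net.named_children()]
indexed = [name for name, _ in indexed_net.named_children()]
print(named)
print(indexed)
```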

3.3 Sequential as a model container

    from torch import nn

    class Net(nn.Module):
        def __init__(self):
            super(Net, self).__init__()
            # convolutional feature extractor
            self.conv = nn.Sequential(
                nn.Conv2d(in_channels=3, out_channels=32, kernel_size=3),
                nn.MaxPool2d(kernel_size=2, stride=2),
                nn.Conv2d(in_channels=32, out_channels=64, kernel_size=5),
                nn.MaxPool2d(kernel_size=2, stride=2),
                nn.Dropout2d(p=0.1),
                nn.AdaptiveMaxPool2d((1, 1))
            )
            # fully connected classifier head
            self.dense = nn.Sequential(
                nn.Flatten(),
                nn.Linear(64, 32),
                nn.ReLU(),
                nn.Linear(32, 1),
                nn.Sigmoid()
            )

        def forward(self, x):
            x = self.conv(x)
            y = self.dense(x)
            return y
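One practical benefit of grouping layers into `Sequential` blocks is that each block can be called on its own, for instance to inspect intermediate features. The sketch below uses a reduced, hypothetical `TinyNet` with the same conv/dense split as above.

```python
import torch
from torch import nn

class TinyNet(nn.Module):  # reduced illustration of the conv/dense split
    def __init__(self):
        super().__init__()
        self.conv = nn.Sequential(
            nn.Conv2d(3, 8, kernel_size=3),
            nn.ReLU(),
            nn.AdaptiveMaxPool2d((1, 1)),
        )
        self.dense = nn.Sequential(
            nn.Flatten(),
            nn.Linear(8, 1),
            nn.Sigmoid(),
        )

    def forward(self, x):
        return self.dense(self.conv(x))

net = TinyNet()
x = torch.randn(2, 3, 16, 16)

features = net.conv(x)      # run only the convolutional block: (2, 8, 1, 1)
y = net(x)                  # full forward pass: (2, 1)
first_conv = net.conv[0]    # layers inside a Sequential can be indexed
print(features.shape, y.shape)
```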

3.4 ModuleList as a model container

    from torch import nn

    class Net(nn.Module):
        def __init__(self):
            super(Net, self).__init__()
            self.layers = nn.ModuleList([
                nn.Conv2d(in_channels=3, out_channels=32, kernel_size=3),
                nn.MaxPool2d(kernel_size=2, stride=2),
                nn.Conv2d(in_channels=32, out_channels=64, kernel_size=5),
                nn.MaxPool2d(kernel_size=2, stride=2),
                nn.Dropout2d(p=0.1),
                nn.AdaptiveMaxPool2d((1, 1)),
                nn.Flatten(),
                nn.Linear(64, 32),
                nn.ReLU(),
                nn.Linear(32, 1),
                nn.Sigmoid(),
            ])

        def forward(self, x):
            # a ModuleList has no forward of its own, so apply the layers in order
            for layer in self.layers:
                x = layer(x)
            return x

    net = Net()
    print(net)
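The reason to use `nn.ModuleList` rather than an ordinary Python list is parameter registration: layers held in a plain list are invisible to `parameters()`, so an optimizer would never update them. A small sketch with two hypothetical classes makes the difference visible.

```python
from torch import nn

class WithPlainList(nn.Module):  # hypothetical: layers in a plain Python list
    def __init__(self):
        super().__init__()
        self.layers = [nn.Linear(4, 4), nn.Linear(4, 1)]  # NOT registered

class WithModuleList(nn.Module):  # hypothetical: layers in an nn.ModuleList
    def __init__(self):
        super().__init__()
        self.layers = nn.ModuleList([nn.Linear(4, 4), nn.Linear(4, 1)])

plain_count = len(list(WithPlainList().parameters()))
registered_count = len(list(WithModuleList().parameters()))

# Each Linear layer contributes a weight and a bias tensor
print(plain_count, registered_count)
```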

3.5 ModuleDict as a model container

    from torch import nn

    class Net(nn.Module):
        def __init__(self):
            super(Net, self).__init__()
            self.layers_dict = nn.ModuleDict({
                "conv1": nn.Conv2d(in_channels=3, out_channels=32, kernel_size=3),
                "pool": nn.MaxPool2d(kernel_size=2, stride=2),
                "conv2": nn.Conv2d(in_channels=32, out_channels=64, kernel_size=5),
                "dropout": nn.Dropout2d(p=0.1),
                "adaptive": nn.AdaptiveMaxPool2d((1, 1)),
                "flatten": nn.Flatten(),
                "linear1": nn.Linear(64, 32),
                "relu": nn.ReLU(),
                "linear2": nn.Linear(32, 1),
                "sigmoid": nn.Sigmoid()
            })

        def forward(self, x):
            # "pool" has no parameters, so the same module is reused after both convolutions
            layers = ["conv1", "pool", "conv2", "pool", "dropout", "adaptive",
                      "flatten", "linear1", "relu", "linear2", "sigmoid"]
            for layer in layers:
                x = self.layers_dict[layer](x)
            return x

    net = Net()
    print(net)
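Because a `ModuleDict` is looked up by key, it also suits cases where the layer to apply is chosen at call time. The following is a sketch of that idea under assumed names (`SwitchableActivation` and the `act` argument are illustrative, not from the original post).

```python
import torch
from torch import nn

class SwitchableActivation(nn.Module):  # hypothetical example module
    def __init__(self):
        super().__init__()
        self.linear = nn.Linear(4, 4)
        self.activations = nn.ModuleDict({
            "relu": nn.ReLU(),
            "tanh": nn.Tanh(),
        })

    def forward(self, x, act="relu"):
        # pick the activation by its key at call time
        return self.activations[act](self.linear(x))

net = SwitchableActivation()
x = torch.randn(3, 4)
relu_out = net(x, act="relu")
tanh_out = net(x, act="tanh")
keys = list(net.activations.keys())
print(keys, relu_out.shape, tanh_out.shape)
```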