k8s Study Notes 3: Setting Up the k8s metrics server

- I. Introduction
- II. How it works
- III. Deployment
  - Downloading the YAML file
  - Deploying
    - a. Image problem
    - b. 500 error
- IV. Verification
- V. References

I. Introduction

What metrics-server is for:

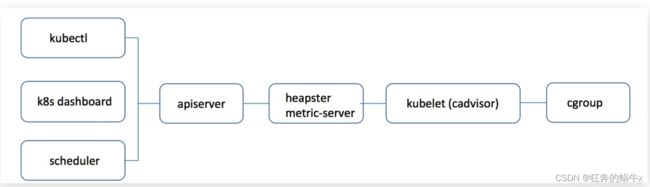

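metrics-server uses the aggregated API to supply CPU and memory metrics for the pods and nodes in the cluster to other components (kube-scheduler, the HorizontalPodAutoscaler, Kubernetes cluster clients, and so on). The HorizontalPodAutoscaler, for example, calls this API to read the current resource usage of pods and then scales the pods out or in accordingly.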

Things to note:

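1. metrics-server provides real-time metrics (in practice, the most recently collected data, kept in memory); there is no database behind it.

2. The metrics are not collected by metrics-server itself but by cAdvisor on each node. metrics-server only sends requests (through the kubelet) to cAdvisor and converts the returned metrics into the aggregated API format.

3. Because the data is served through the aggregated API, the API aggregation layer must be enabled on the cluster's kube-apiserver (see the official Kubernetes documentation for how to enable it).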
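The aggregated API mentioned in point 3 lives under the path /apis/metrics.k8s.io/v1beta1, so it can also be queried directly with kubectl get --raw. The sketch below only builds those paths; the function name metrics_api_path is hypothetical, and it assumes a POSIX shell such as bash.

```shell
# Build a URL path in the aggregated Metrics API (group metrics.k8s.io, version v1beta1).
#   metrics_api_path nodes            -> metrics of all nodes
#   metrics_api_path pods             -> metrics of pods in all namespaces
#   metrics_api_path pods kube-system -> metrics of pods in one namespace
metrics_api_path() {
  local kind="$1" ns="${2:-}"
  local base="/apis/metrics.k8s.io/v1beta1"
  if [ "$kind" = "pods" ] && [ -n "$ns" ]; then
    printf '%s/namespaces/%s/pods\n' "$base" "$ns"
  else
    printf '%s/%s\n' "$base" "$kind"
  fi
}

# Usage against a cluster where metrics-server is running:
#   kubectl get --raw "$(metrics_api_path nodes)"
#   kubectl get --raw "$(metrics_api_path pods kube-system)"
```

kubectl top reads this same API, so if these raw requests fail, kubectl top will fail as well.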

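For the deployment itself, you can jump straight to the Deployment section: download the YAML file from the official repository and change two things before applying it. One is the image, which cannot be pulled because of network restrictions; the other is the 500 error. Once these two are fixed, apply the file and you are done.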

II. How it works

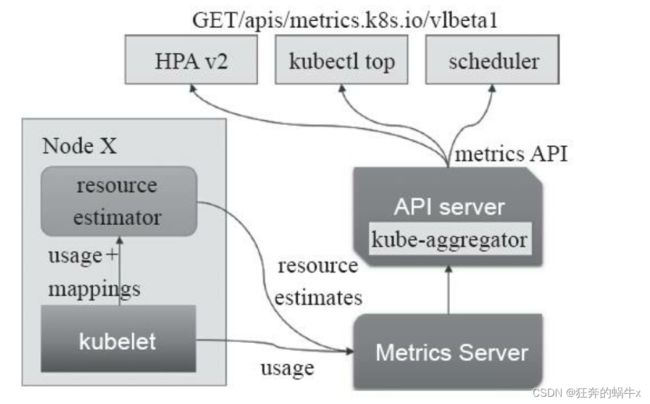

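Metrics Server periodically collects metrics from the kubelet's Summary API (accessed through the API server, this is something like /api/v1/nodes/<nodename>/proxy/stats/summary). The aggregated data is kept in memory and exposed in the form of the Metrics API.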
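To see the raw data that metrics-server starts from, you can read a node's Summary API through the API server's node proxy at /api/v1/nodes/<node>/proxy/stats/summary. This is a minimal sketch that assumes you have RBAC access to nodes/proxy (a cluster admin does); summary_path is a hypothetical helper that only builds the path.

```shell
# Build the API-server proxy path of one node's kubelet Summary API.
# $1 is the node name as shown by "kubectl get nodes".
summary_path() {
  printf '/api/v1/nodes/%s/proxy/stats/summary\n' "$1"
}

# Usage (prints a JSON document with node and pod stats):
#   kubectl get --raw "$(summary_path k8s-node1)"
```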

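Metrics Server reuses the kube-apiserver libraries for its own functionality, such as authentication/authorization and versioning. To keep the data in memory, it drops the default etcd storage and introduces in-memory storage instead (i.e., its own implementation of the Storage interface).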

Because everything is held in memory, the monitoring data is not persisted; persistence can be added with third-party storage.

Here is the architecture of Metrics Server:

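Metrics are obtained from the kubelet, cAdvisor, and similar sources, and metrics-server then serves them to consumers such as the Dashboard and the HPA controller. In essence, metrics-server performs a data conversion: it turns cAdvisor-format data into the JSON format of the Kubernetes API. It follows that the metrics-server code must contain a step that first fetches everything an endpoint exposes and then parses the needed data out of it. By the time we send a request to metrics-server, it has already fetched the data from cAdvisor on its periodic schedule, so it simply returns the cached data.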
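The converted JSON carries its usage values as Kubernetes quantities; node CPU, for example, is often reported in nanocores (a value ending in n) and memory in Ki. As a rough illustration, assuming plain integer quantities only, the hypothetical helpers below turn such values into the millicores and Mi units that kubectl top prints.

```shell
# Convert a CPU quantity from Metrics API JSON into whole millicores (truncating).
# Handles only integers with an n/u/m suffix or no suffix (whole cores).
cpu_to_millicores() {
  local q="$1"
  case "$q" in
    *n) echo $(( ${q%n} / 1000000 )) ;;  # nanocores
    *u) echo $(( ${q%u} / 1000 )) ;;     # microcores
    *m) echo "${q%m}" ;;                 # already millicores
    *)  echo $(( $q * 1000 )) ;;         # whole cores
  esac
}

# Convert a memory quantity into whole mebibytes (truncating).
# Handles only integers with a Ki/Mi/Gi suffix or plain bytes.
memory_to_mebibytes() {
  local q="$1"
  case "$q" in
    *Ki) echo $(( ${q%Ki} / 1024 )) ;;
    *Mi) echo "${q%Mi}" ;;
    *Gi) echo $(( ${q%Gi} * 1024 )) ;;
    *)   echo $(( $q / 1048576 )) ;;     # plain bytes
  esac
}
```

For instance, memory_to_mebibytes 1571916Ki yields 1535, the same figure that appears as 1535Mi in the kubectl top nodes output in the verification section.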

Data flow:

III. Deployment

Downloading the YAML file

The official repository is: https://github.com/kubernetes-sigs/metrics-server

At the time of writing, the latest release was v0.6.1; download its manifest:

wget https://github.com/kubernetes-sigs/metrics-server/releases/download/v0.6.1/components.yaml
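Before applying the manifest it helps to check which image it references, since that is the image that will need a reachable mirror. A minimal sketch, assuming the image appears on its own image: line as it does in components.yaml and that GNU sed is available; manifest_images is a hypothetical helper name.

```shell
# Print the distinct container images referenced by a Kubernetes manifest.
# Matches lines such as "        image: k8s.gcr.io/..." or "- image: ...",
# but not "imagePullPolicy:" (the colon must follow "image" directly).
manifest_images() {
  sed -n 's/^[[:space:]-]*image:[[:space:]]*//p' "$1" | sort -u
}

# Usage, right after the wget above:
#   manifest_images components.yaml
```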

Deploying

Deploy it directly with the following command:

kubectl apply -f components.yaml

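Because the image is hosted on an overseas registry, it cannot be pulled directly. So we first run docker search to see whether Docker Hub has a copy, then test and pull the candidates one by one. The version must be v0.6.1.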

a. Image problem

1. Search Docker Hub for metrics-server images

root@k8s-master1:~# docker search metrics-server

NAME DESCRIPTION STARS OFFICIAL AUTOMATED

mirrorgooglecontainers/metrics-server-amd64 17

bitnami/metrics-server Bitnami Docker Image for Metrics Server 8 [OK]

rancher/metrics-server 5

eipwork/metrics-server 2

cytopia/metrics-server-prom A Docker image on which Prometheus can scrap… 2

rancher/metrics-server-amd64 2

willdockerhub/metrics-server sync from k8s.gcr.io/metrics-server/metrics-… 1

kanewinter/metrics-server-prom-aws Added aws-iam-authenticator to cytopia/metri… 1

vivareal/metrics-server-exporter metrics-server exporter 1

radishgz/metrics-server-amd64 gcr.io/google_containers/metrics-server-amd6… 1 [OK]

anjia0532/metrics-server-amd64 1

htcfive/metrics-server-amd64 metrics-server-amd64:v0.3.6 0

ibmcom/metrics-server-s390x 0

ibmcom/metrics-server-ppc64le 0

dyrnq/metrics-server k8s.gcr.io/metrics-server/metrics-server 0

f3n9/metrics-server mirror of k8s.gcr.io/metrics-server-amd64:v0… 0

ifnoelse/metrics-server-amd64 metrics-server-amd64 0 [OK]

ghouscht/metrics-server-exporter Export metrics-server metrics to prometheus 0

ibmcom/metrics-server-amd64 0

roywangtj/metrics-server-amd64 metrics-server-amd64 0 [OK]

ibmcom/metrics-server 0

v5cn/metrics-server sync k8s.gcr.io/metrics-server/metrics-serve… 0

yametech/metrics-server-amd64 0

cloudnil/metrics-server-amd64 metrics-server-amd64 0 [OK]

carlziess/metrics-server-amd64-v0.2.1 metrics-server-amd64:v0.2.1 0 [OK]

root@k8s-master1:~#

2. Once a suitable image has been pulled (here willdockerhub/metrics-server:v0.6.1), tag it and push it to the Harbor registry set up earlier

root@k8s-master1:~# docker tag willdockerhub/metrics-server:v0.6.1 registry.harbor.com/library/metrics-server:v0.6.1

root@k8s-master1:~# docker push registry.harbor.com/library/metrics-server:v0.6.1

3. Edit the downloaded components.yaml file so that it uses the image in Harbor

root@k8s-master1:~# sed -i 's#k8s.gcr.io/metrics-server/metrics-server:v0.6.1#registry.harbor.com/library/metrics-server:v0.6.1#g' components.yaml

4. Delete the previously deployed metrics-server and deploy it again

root@k8s-master1:~# kubectl delete -f components.yaml

serviceaccount "metrics-server" deleted

clusterrole.rbac.authorization.k8s.io "system:aggregated-metrics-reader" deleted

clusterrole.rbac.authorization.k8s.io "system:metrics-server" deleted

rolebinding.rbac.authorization.k8s.io "metrics-server-auth-reader" deleted

clusterrolebinding.rbac.authorization.k8s.io "metrics-server:system:auth-delegator" deleted

clusterrolebinding.rbac.authorization.k8s.io "system:metrics-server" deleted

service "metrics-server" deleted

deployment.apps "metrics-server" deleted

apiservice.apiregistration.k8s.io "v1beta1.metrics.k8s.io" deleted

root@k8s-master1:~# kubectl apply -f components.yaml

serviceaccount/metrics-server created

clusterrole.rbac.authorization.k8s.io/system:aggregated-metrics-reader created

clusterrole.rbac.authorization.k8s.io/system:metrics-server created

rolebinding.rbac.authorization.k8s.io/metrics-server-auth-reader created

clusterrolebinding.rbac.authorization.k8s.io/metrics-server:system:auth-delegator created

clusterrolebinding.rbac.authorization.k8s.io/system:metrics-server created

service/metrics-server created

deployment.apps/metrics-server created

apiservice.apiregistration.k8s.io/v1beta1.metrics.k8s.io created

root@k8s-master1:~#

5. Check whether metrics-server starts normally. It does not: the pod never becomes ready, and its readiness probe fails with a 500 error

root@k8s-master1:~# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-74586cf9b6-476cz 1/1 Running 3 (5h32m ago) 26h

coredns-74586cf9b6-trxrm 1/1 Running 4 (5h32m ago) 26h

etcd-k8s-master1 1/1 Running 3 (5h32m ago) 26h

kube-apiserver-k8s-master1 1/1 Running 3 (5h32m ago) 26h

kube-controller-manager-k8s-master1 1/1 Running 22 (20m ago) 26h

kube-proxy-8gjdf 1/1 Running 4 (5h19m ago) 26h

kube-proxy-h5c7j 1/1 Running 4 (5h32m ago) 26h

kube-proxy-kwf78 1/1 Running 5 (5h20m ago) 26h

kube-scheduler-k8s-master1 1/1 Running 24 (20m ago) 26h

metrics-server-54f89f765b-t92qm 0/1 Running 0 36s

root@k8s-master1:~# kubectl describe pods -n kube-system metrics-server-54f89f765b-t92qm

........

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 50s default-scheduler Successfully assigned kube-system/metrics-server-54f89f765b-t92qm to k8s-node1

Normal Pulled 48s kubelet Container image "registry.harbor.com/library/metrics-server:v0.6.1" already present on machine

Normal Created 48s kubelet Created container metrics-server

Normal Started 48s kubelet Started container metrics-server

Warning Unhealthy 9s (x2 over 19s) kubelet Readiness probe failed: HTTP probe failed with statuscode: 500

root@k8s-master1:~#
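Steps 2 and 3 (re-tagging the mirrored image for Harbor and pointing components.yaml at it) can be wrapped in two small helpers so that the registry, project, and tag only have to be typed once. This is a sketch under a few assumptions: GNU sed (for sed -i without a backup suffix), image references without digests, and no # character in either reference, since # is the s### delimiter. harbor_ref and retarget_image are hypothetical names.

```shell
# Rewrite an image reference so it points at a project in a private registry,
# keeping only the last path component (repository name and tag).
#   harbor_ref willdockerhub/metrics-server:v0.6.1 registry.harbor.com library
#   -> registry.harbor.com/library/metrics-server:v0.6.1
harbor_ref() {
  local src="$1" registry="$2" project="$3"
  printf '%s/%s/%s\n' "$registry" "$project" "${src##*/}"
}

# Replace every occurrence of one image reference in a manifest with another,
# then print how many lines reference the new image as a quick sanity check.
# Dots in the old reference act as regex wildcards, which is usually harmless.
retarget_image() {
  local file="$1" old="$2" new="$3"
  sed -i "s#${old}#${new}#g" "$file"
  grep -cF "$new" "$file"
}

# Usage:
#   src=willdockerhub/metrics-server:v0.6.1
#   dst=$(harbor_ref "$src" registry.harbor.com library)
#   docker pull "$src" && docker tag "$src" "$dst" && docker push "$dst"
#   retarget_image components.yaml k8s.gcr.io/metrics-server/metrics-server:v0.6.1 "$dst"
```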

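b. 500 error

The readiness probe returns 500 because metrics-server cannot collect anything from the kubelets: in a kubeadm-style cluster like this one, each kubelet's serving certificate is by default self-signed rather than signed by the cluster CA, so metrics-server fails to verify it. The two solutions below either skip that verification or give the kubelets properly signed certificates.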

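Solution 1: skip verification of the kubelet certificates

After the 500 error appears, first delete the metrics-server that was deployed from components.yaml:

kubectl delete -f components.yaml

Then edit components.yaml and add the --kubelet-insecure-tls argument: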

      .....
      containers:
      - args:
        - --cert-dir=/tmp
        - --secure-port=4443
        - --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname
        - --kubelet-use-node-status-port
        - --metric-resolution=15s
        - --kubelet-insecure-tls
        image: registry.harbor.com/library/metrics-server:v0.6.1
        imagePullPolicy: IfNotPresent
        livenessProbe:
      ......
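The same edit can be made non-interactively. A sketch assuming GNU sed (for -i and \n in the replacement) and that the args list contains the --metric-resolution line shown above; add_insecure_tls is a hypothetical helper that leaves the file alone if the flag is already present, so it can be rerun safely.

```shell
# Insert "- --kubelet-insecure-tls" into the metrics-server args of a manifest,
# directly after the --metric-resolution line and with the same indentation.
# Does nothing if the flag is already present.
add_insecure_tls() {
  local file="$1"
  if grep -q -- '--kubelet-insecure-tls' "$file"; then
    return 0
  fi
  sed -i 's/^\( *\)- --metric-resolution=.*$/&\n\1- --kubelet-insecure-tls/' "$file"
}

# Usage:
#   add_insecure_tls components.yaml && kubectl apply -f components.yaml
```

Keep in mind that this flag turns off verification of the kubelet serving certificates; the metrics-server README describes it as meant for testing, which is why solution 2 is the safer choice for a long-lived cluster.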

After editing, apply it again:

root@k8s-master1:~# kubectl apply -f components.yaml

serviceaccount/metrics-server created

clusterrole.rbac.authorization.k8s.io/system:aggregated-metrics-reader created

clusterrole.rbac.authorization.k8s.io/system:metrics-server created

rolebinding.rbac.authorization.k8s.io/metrics-server-auth-reader created

clusterrolebinding.rbac.authorization.k8s.io/metrics-server:system:auth-delegator created

clusterrolebinding.rbac.authorization.k8s.io/system:metrics-server created

service/metrics-server created

deployment.apps/metrics-server created

apiservice.apiregistration.k8s.io/v1beta1.metrics.k8s.io created

root@k8s-master1:~#

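Solution 2: have each kubelet obtain a serving certificate signed by the cluster CA

On every node, edit the kubelet configuration file /var/lib/kubelet/config.yaml (e.g. with vim) and add serverTLSBootstrap: true: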

.......

streamingConnectionIdleTimeout: 0s

syncFrequency: 0s

volumeStatsAggPeriod: 0s

serverTLSBootstrap: true

Restart the kubelet:

systemctl restart kubelet.service
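Because this change has to be made on every node, it can be scripted. A minimal sketch, assuming GNU sed and the default kubeadm path /var/lib/kubelet/config.yaml; enable_server_tls_bootstrap is a hypothetical helper that either rewrites an existing top-level serverTLSBootstrap line or appends one.

```shell
# Ensure a kubelet config file sets "serverTLSBootstrap: true".
# An existing top-level serverTLSBootstrap line is rewritten in place;
# otherwise the setting is appended (after adding a final newline if missing).
enable_server_tls_bootstrap() {
  local file="${1:-/var/lib/kubelet/config.yaml}"
  if grep -q '^serverTLSBootstrap:' "$file"; then
    sed -i 's/^serverTLSBootstrap:.*/serverTLSBootstrap: true/' "$file"
  else
    if [ -n "$(tail -c 1 "$file")" ]; then
      echo >> "$file"
    fi
    echo 'serverTLSBootstrap: true' >> "$file"
  fi
}

# Usage on each node (as root):
#   enable_server_tls_bootstrap && systemctl restart kubelet.service
```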

Check the certificate signing requests (CSRs); each kubelet has submitted one that is still Pending:

root@k8s-master1:~# kubectl get csr

NAME AGE SIGNERNAME REQUESTOR REQUESTEDDURATION CONDITION

csr-2z952 13m kubernetes.io/kubelet-serving system:node:k8s-master1 <none> Pending

csr-56sx7 15m kubernetes.io/kubelet-serving system:node:k8s-node2 <none> Pending

csr-ckts9 14m kubernetes.io/kubelet-serving system:node:k8s-node1 <none> Pending

Approve them: kubectl certificate approve csr-2z952 csr-56sx7 csr-ckts9

root@k8s-master1:~# kubectl certificate approve csr-2z952 csr-56sx7 csr-ckts9

certificatesigningrequest.certificates.k8s.io/csr-2z952 approved

certificatesigningrequest.certificates.k8s.io/csr-56sx7 approved

certificatesigningrequest.certificates.k8s.io/csr-ckts9 approved

root@k8s-master1:~# kubectl get csr

NAME AGE SIGNERNAME REQUESTOR REQUESTEDDURATION CONDITION

csr-2z952 16m kubernetes.io/kubelet-serving system:node:k8s-master1 <none> Approved,Issued

csr-56sx7 19m kubernetes.io/kubelet-serving system:node:k8s-node2 <none> Approved,Issued

csr-ckts9 17m kubernetes.io/kubelet-serving system:node:k8s-node1 <none> Approved,Issued

root@k8s-master1:~#
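With many nodes, the pending kubelet serving CSRs can be selected from the kubectl get csr output instead of copying names by hand. This sketch assumes the column layout shown above (NAME, AGE, SIGNERNAME, REQUESTOR, REQUESTEDDURATION, CONDITION); pending_serving_csrs is a hypothetical name. Approving a serving CSR vouches for the node that requested it, so check the REQUESTOR column before piping the list into kubectl certificate approve.

```shell
# Read "kubectl get csr --no-headers" output on stdin and print the names of
# kubelet serving CSRs that are still Pending (SIGNERNAME is column 3,
# CONDITION is the last column).
pending_serving_csrs() {
  awk '$3 == "kubernetes.io/kubelet-serving" && $NF == "Pending" { print $1 }'
}

# Usage (after reviewing the list):
#   kubectl get csr --no-headers | pending_serving_csrs | xargs -r kubectl certificate approve
```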

IV. Verification

1. Check that the API server now exposes metrics.k8s.io/v1beta1

root@k8s-master1:~# kubectl api-versions|grep metrics

metrics.k8s.io/v1beta1

2. Check that the metrics-server pod is running normally

root@k8s-master1:~# kubectl get pods -n=kube-system |grep metrics

metrics-server-654cffbc87-87g2k 1/1 Running 0 5m36s

3. Use kubectl top to view the CPU and memory usage of nodes and pods

root@k8s-master1:~# kubectl top nodes

NAME CPU(cores) CPU% MEMORY(bytes) MEMORY%

k8s-master1 55m 5% 1535Mi 81%

k8s-node1 12m 1% 1233Mi 65%

k8s-node2 12m 1% 1288Mi 68%

root@k8s-master1:~# kubectl top pods -n kube-system

NAME CPU(cores) MEMORY(bytes)

coredns-74586cf9b6-476cz 2m 15Mi

coredns-74586cf9b6-trxrm 2m 17Mi

etcd-k8s-master1 17m 71Mi

kube-apiserver-k8s-master1 62m 347Mi

kube-controller-manager-k8s-master1 14m 43Mi

kube-proxy-8gjdf 1m 9Mi

kube-proxy-h5c7j 1m 22Mi

kube-proxy-kwf78 1m 9Mi

kube-scheduler-k8s-master1 4m 15Mi

metrics-server-654cffbc87-87g2k 3m 13Mi
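Two more checks can be scripted around the same commands. The APIService object's AVAILABLE column shows whether the API server can actually reach metrics-server, and the kubectl top nodes output can be filtered, for example to flag nodes with high memory usage. Both helpers are hypothetical sketches that assume --no-headers output and the default column layouts (NAME, SERVICE, AVAILABLE, AGE for kubectl get apiservice, and the kubectl top nodes columns shown above).

```shell
# Succeed only if the APIService line on stdin reports AVAILABLE=True.
# Input: "kubectl get apiservice v1beta1.metrics.k8s.io --no-headers".
apiservice_available() {
  awk 'NR == 1 { ok = ($3 == "True") } END { exit (ok ? 0 : 1) }'
}

# Print the nodes whose MEMORY% (column 5) exceeds the threshold given as $1.
# Input: "kubectl top nodes --no-headers".
high_mem_nodes() {
  awk -v limit="$1" '{ m = $5; sub(/%$/, "", m); if (m + 0 > limit + 0) print $1 }'
}

# Usage:
#   kubectl get apiservice v1beta1.metrics.k8s.io --no-headers | apiservice_available \
#     && echo "metrics.k8s.io is available"
#   kubectl top nodes --no-headers | high_mem_nodes 70
```

Fed the kubectl top nodes output above, high_mem_nodes 70 prints only k8s-master1 (81%).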

V. References

1. Kubernetes core metrics monitoring: Metrics Server

https://www.cnblogs.com/zhangmingcheng/p/15770672.html

2. On RBAC

https://blog.csdn.net/luanpeng825485697/article/details/88375842

https://blog.csdn.net/qq_35745940/article/details/120693490

3. On fixing the 500 error

https://blog.csdn.net/qq_41582883/article/details/114301817