2020 Domain Adaptation Latest Papers: A Quick Look at the Figures (Part 1)

| Semantic Segmentation | Person Re-identification |

Contents

Semantic Segmentation

CSCL: Critical Semantic-Consistent Learning for Unsupervised Domain Adaptation

Domain Adaptive Semantic Segmentation Using Weak Labels

Content-Consistent Matching for Domain Adaptive Semantic Segmentation

Domain Adaptation Through Task Distillation

Unsupervised Domain Adaptation for Semantic Segmentation of NIR Images through Generative Latent Search

Unsupervised Intra-domain Adaptation for Semantic Segmentation through Self-Supervision

FDA: Fourier Domain Adaptation for Semantic Segmentation

Differential Treatment for Stuff and Things: A Simple Unsupervised Domain Adaptation Method for Semantic Segmentation

Unsupervised Instance Segmentation in Microscopy Images via Panoptic Domain Adaptation and Task Re-weighting

Learning Texture Invariant Representation for Domain Adaptation of Semantic Segmentation

MSeg: A Composite Dataset for Multi-domain Semantic Segmentation

Cross-Domain Semantic Segmentation via Domain-Invariant Interactive Relation Transfer

Person Re-identification

Unsupervised Domain Adaptation with Noise Resistible Mutual-Training for Person Re-identification

Joint Disentangling and Adaptation for Cross-Domain Person Re-Identification

Multiple Expert Brainstorming for Domain Adaptive Person Re-identification

Online Joint Multi-Metric Adaptation from Frequent Sharing-Subset Mining for Person Re-Identification

AD-Cluster: Augmented Discriminative Clustering for Domain Adaptive Person Re-identification

Smoothing Adversarial Domain Attack and p-Memory Reconsolidation for Cross-Domain Person Re-Identification

--------------------------------------------------------------------

Semantic Segmentation

--------------------------------------------------------------------

CSCL: Critical Semantic-Consistent Learning for Unsupervised Domain Adaptation

[paper]

Domain Adaptive Semantic Segmentation Using Weak Labels

[paper]

Content-Consistent Matching for Domain Adaptive Semantic Segmentation

[paper] [GitHub]

Domain Adaptation Through Task Distillation

[paper]

Fig. 1: Raw visual inputs (a) may significantly vary across different domains, yet they often share common recognition labels (b). In this work, we use these recognition labels to transfer tasks between different domains.

Fig. 2: Our method first distills a source model to a proxy model that uses labels as inputs. As proxy labels generalize to the target domain, a second stage of distillation is performed to produce a target model.

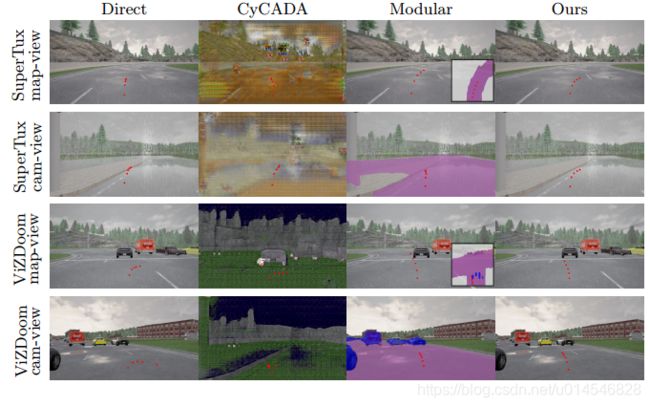

Fig. 3: We compare visual domains by their raw monocular images and corresponding semantic representations. While the domains vary significantly in their raw images, they are quite similar in their semantic modalities. However, note that the predicted modalities used by a modular pipeline are not perfect. For example, in the bottom-most row, the map-view prediction fails to capture the yellow car in view directly left of the agent. When supplied to the downstream driving policy, this vision failure can result in unintended behavior.

Fig. 4: We qualitatively examine how four different driving policies transfer to CARLA. Each policy is evaluated at the same state over four transfer methods, with predicted waypoints shown in red. Inferred modality is displayed for CyCADA and Modular. As shown, an inaccurate modality is used by a modular driving policy when transferring from SuperTuxKart via camera-view semantic segmentation. The median is misclassified as drivable road and the predicted waypoints direct the agent off of the road. (Best viewed on screen.)

Unsupervised Domain Adaptation for Semantic Segmentation of NIR Images through Generative Latent Search

[paper]

Abstract.

Segmentation of the pixels corresponding to human skin is an essential first step in multiple applications ranging from surveillance to heart-rate estimation from remote-photoplethysmography. However, the existing literature considers the problem only in the visible range of the EM spectrum, which limits its utility in low- or no-light settings where the criticality of the application is higher. To alleviate this problem, we consider the problem of skin segmentation from Near-infrared images. However, deep-learning-based state-of-the-art segmentation techniques demand large amounts of labelled data that are unavailable for the current problem. Therefore we cast the skin segmentation problem as that of target-independent Unsupervised Domain Adaptation (UDA), where we use the data from the Red channel of the visible range to develop a skin segmentation algorithm on NIR images. We propose a method for target-independent segmentation where the ‘nearest-clone’ of a target image in the source domain is searched and used as a proxy in the segmentation network trained only on the source domain. We prove the existence of the ‘nearest-clone’ and propose a method to find it through an optimization algorithm over the latent space of a Deep generative model based on variational inference. We demonstrate the efficacy of the proposed method for NIR skin segmentation over the state-of-the-art UDA segmentation methods on the two newly created skin segmentation datasets in the NIR domain despite not having access to the target NIR data. Additionally, we report state-of-the-art results for adaptation from Synthia to Cityscapes, which is a popular setting in Unsupervised Domain Adaptation for semantic segmentation. The code and datasets are available at https://github.com/ambekarsameer96/GLSS.
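The idea of searching a generator's latent space for the "nearest clone" of a target image can be illustrated with a toy example. This is only a sketch under loud assumptions: the linear `toy_generator` stands in for a trained deep generative model, squared Euclidean distance stands in for whatever image distance the paper uses, and the names `latent_search`, `lr`, `steps`, and `h` are hypothetical.

```python
import numpy as np

def latent_search(target, generator, z_init, lr=0.1, steps=200, h=1e-5):
    """Gradient descent over the latent vector z to minimise ||generator(z) - target||^2.

    Gradients are estimated by central finite differences so that any callable
    generator can be used (an assumption made to keep the sketch self-contained).
    """
    z = np.asarray(z_init, dtype=float).copy()
    target = np.asarray(target, dtype=float)

    def loss(latent):
        return float(np.sum((generator(latent) - target) ** 2))

    for _ in range(steps):
        grad = np.zeros_like(z)
        for i in range(z.size):
            step = np.zeros_like(z)
            step[i] = h
            grad[i] = (loss(z + step) - loss(z - step)) / (2.0 * h)
        z -= lr * grad
    return z, generator(z)

# Toy stand-in for a trained decoder: it can only produce vectors whose third
# component is zero, so that set plays the role of the "source domain".
W = np.array([[1.0, 0.0], [0.0, 1.0], [0.0, 0.0]])

def toy_generator(z):
    return W @ z

target_image = np.array([0.3, -0.7, 0.5])  # lies outside the generator's range
z_star, clone = latent_search(target_image, toy_generator, z_init=np.zeros(2))
```

Here `clone` is the point in the generator's range closest to `target_image`; in the setting described by the abstract, such a clone would then be passed to a segmentation network trained only on the source domain.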

[2020 CVPR]

Unsupervised Intra-domain Adaptation for Semantic Segmentation through Self-Supervision

[paper]

Figure 1: We propose a two-step self-supervised domain adaptation technique for semantic segmentation. Previous works solely adapt the segmentation model from the source domain to the target domain. Our work also considers adapting from the clean map to the noisy map within the target domain.

Open Compound Domain Adaptation

[paper]

Figure 2: Overview of disentangling domain characteristics and curriculum domain adaptation. We separate characteristics specific to domains from those discriminative between classes. It is achieved by a class-confusion algorithm in an unsupervised manner. The teased out domain feature is used to construct a curriculum for domain-robust learning.

Figure 3: Overview of the memory-enhanced deep neural network. We enhance our network with a memory module that facilitates knowledge transfer from the source domain to target domain instances, so that the network can dynamically balance the input information and the memory-transferred knowledge for more agility towards previously unseen domains.

Figure 4: t-SNE Visualization of our (a) class-discriminative features, (b) domain features, and (c) curriculum. Our framework disentangles the mixed-domain data into class-discriminative factors and domain-focused factors. We use the domain-focused factors to construct a learning curriculum for domain adaptation.

FDA: Fourier Domain Adaptation for Semantic Segmentation

[paper]

Figure 1. Spectral Transfer: Mapping a source image to a target “style” without altering semantic content. A randomly sampled target image provides the style by swapping the low-frequency component of the spectrum of the source image with its own. The outcome “source image in target style” shows a smaller domain gap perceptually and improves transfer learning for semantic segmentation as measured in the benchmarks in Sect. 3.

Figure 2. Effect of the size of the domain β, shown in Fig. 1, where the spectrum is swapped: increasing β will decrease the domain gap but introduce artifacts (see zoomed insets). We tune β until artifacts in the transformed images become obvious and use a single value for some experiments. In other experiments, we maintain multiple values simultaneously in a multi-scale setting (Table 1).
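The low-frequency swap described in the two captions above can be sketched with NumPy's FFT routines. This is a minimal illustration rather than the authors' implementation: the function name `swap_low_freq_amplitude`, the single-channel inputs, and the convention that β sets the half-width of the swapped square relative to the shorter image side are all assumptions made for the example.

```python
import numpy as np

def swap_low_freq_amplitude(source, target, beta=0.1):
    """Return `source` with its low-frequency amplitude replaced by that of `target`.

    Both inputs are 2-D float arrays of the same shape (one channel).
    `beta` (assumed to be below 0.5) controls the size of the swapped square.
    """
    fft_src = np.fft.fft2(source)
    fft_tgt = np.fft.fft2(target)

    # Split into amplitude and phase; only the amplitude is exchanged.
    amp_src, pha_src = np.abs(fft_src), np.angle(fft_src)
    amp_tgt = np.abs(fft_tgt)

    # Move the zero-frequency term to the centre so that the low
    # frequencies form a square around the middle of the array.
    amp_src = np.fft.fftshift(amp_src)
    amp_tgt = np.fft.fftshift(amp_tgt)

    h, w = source.shape
    b = int(np.floor(min(h, w) * beta))
    ch, cw = h // 2, w // 2
    amp_src[ch - b:ch + b + 1, cw - b:cw + b + 1] = \
        amp_tgt[ch - b:ch + b + 1, cw - b:cw + b + 1]

    # Undo the shift, recombine with the source phase, and invert.
    amp_src = np.fft.ifftshift(amp_src)
    mixed = amp_src * np.exp(1j * pha_src)
    return np.real(np.fft.ifft2(mixed))

rng = np.random.default_rng(0)
src = rng.random((16, 16))
tgt = rng.random((16, 16))
stylised = swap_low_freq_amplitude(src, tgt, beta=0.1)
```

A larger `beta` copies more of the target spectrum, which matches the trade-off in the caption: a smaller domain gap at the cost of more visible artifacts.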

Figure 3. Charbonnier penalty used for robust entropy minimization, visualized for different values of the parameter η.
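To make the role of η concrete, the sketch below assumes the generalized Charbonnier form ρ(x) = (x² + ε²)^η applied to the entropy of a prediction. The small constant `eps = 1e-3`, the default `eta`, and the function names are assumptions for illustration; the caption does not state them.

```python
import math

def charbonnier(x, eta=2.0, eps=1e-3):
    """Generalized Charbonnier penalty (x^2 + eps^2)^eta (assumed form)."""
    return (x * x + eps * eps) ** eta

def entropy(probs):
    """Shannon entropy (natural log) of a discrete distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)

confident = [0.98, 0.01, 0.01]
uncertain = [0.34, 0.33, 0.33]

# Penalised entropy of each prediction for a few values of eta.
for eta in (0.5, 1.0, 2.0):
    low = charbonnier(entropy(confident), eta)
    high = charbonnier(entropy(uncertain), eta)
    print(f"eta={eta}: confident={low:.4f}, uncertain={high:.4f}")
```

With η = 0.5 the penalty is close to |x|, so it reduces to plain entropy minimization; for entropies below 1, as with the confident prediction here, a larger η shrinks the penalty much more than it does for high-entropy predictions.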

Figure 4. Larger β generalizes better if trained from scratch, but induces more bias when combined with Self-supervised Training.

Differential Treatment for Stuff and Things: A Simple Unsupervised Domain Adaptation Method for Semantic Segmentation

[paper]

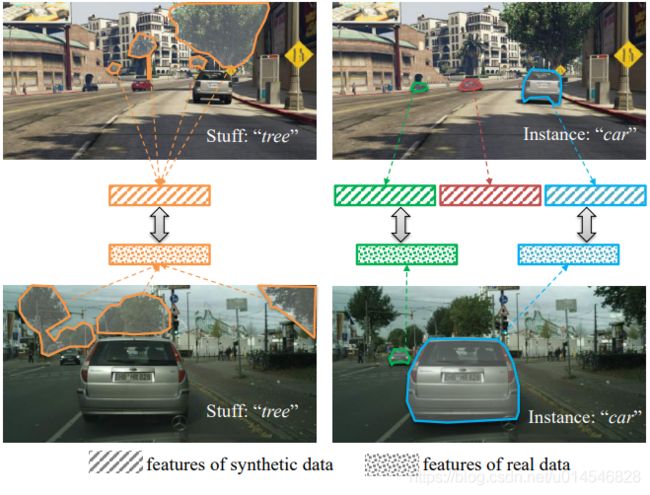

Figure 1. Illustration of the proposed Stuff Instance Matching (SIM) structure. By matching the most similar stuff regions and things (i.e., instances) with differential treatment, we can adapt the features more accurately from the source domain to the target domain.

Figure 3. Framework. 1) The overall structure is shown on the left. The solid lines represent the first step training procedure in Eqn (12), and the dashed lines along with the solid lines represent the second step training procedure in Eqn (13). The blue lines correspond to the flow direction of the source domain data, and the orange lines correspond to the flow direction of target domain data. ∩ is an operation defined in Eqn (4); + is an operation defined in Eqn (11) and is only effective in the second step training procedure. 2) The specific module design is shown on the right. h, w and c represent the height, width and channels for the feature maps; H, W and n represent the height, width and class number for the output maps of the semantic head. For SH, the input ground truth label map supervises the semantic segmentation task, and the semantic head also generates a predicted label map joining the operations of ∩ and +. For SM and IM, the grey dashed lines represent the matching operation defined in Eqn (6) and (8) respectively.

Unsupervised Instance Segmentation in Microscopy Images via Panoptic Domain Adaptation and Task Re-weighting

[paper]

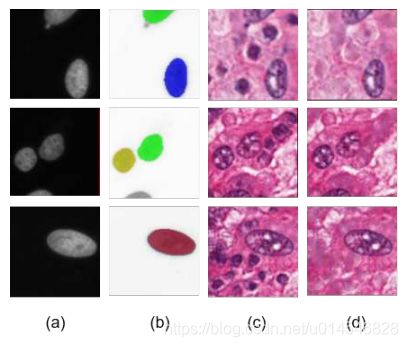

Figure 1. Example images of our proposed framework. (a) fluorescence microscopy images; (b) real histopathology images; (c) our synthesized histopathology images; (d) nuclei segmentation generated by our proposed UDA method; (e) ground truth.

Figure 2. Overall architecture of our proposed CyC-PDAM framework. The annotations of the real histopathology patches are not used during training.

Figure 3. Detailed illustration of Panoptic Domain Adaptive Mask R-CNN (PDAM). Ci and FC represent a convolution layer and a fully connected layer, respectively. Ri1 and Ri2 mean the first and second convolutional layers in the ith residual block, respectively. ReLU and normalization layers after each convolutional block are omitted for brevity.

Figure 4. Visual results for the effectiveness of nuclei inpainting mechanism. (a) original fluorescence microscopy patches; (b) corresponding nuclei annotations; (c) initial synthesized images from CycleGAN; (d) final synthesized images after nuclei inpainting mechanism.

Learning Texture Invariant Representation for Domain Adaptation of Semantic Segmentation

[paper]

Figure 1: Process of learning texture-invariant representation. We consider both the stylized image and the translated image as the source image. The red line indicates the flow of the source image and the blue line indicates the flow of the target image. By segmentation loss of the stylized source data, the model learns texture-invariant representation. By adversarial loss, the model reduces the distribution gap in feature space.

Figure 2: Texture comparison. Original GTA5 [20] images (first column), generated images by CycleGAN [29] (second column) and by Style-swap [4] (third column).

Figure 3: Results of stylization.

MSeg: A Composite Dataset for Multi-domain Semantic Segmentation

[paper]

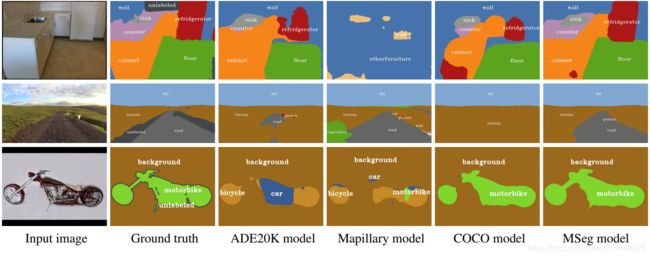

Figure 1: MSeg unifies multiple semantic segmentation datasets by reconciling their taxonomies and resolving incompatible annotations. This enables training models that perform consistently across domains and generalize better. Input images in this figure were taken (top to bottom) from the ScanNet [8], WildDash [44], and Pascal VOC [10] datasets, none of which were seen during training.

Figure 2: Visualization of a subset of the class mapping from each dataset to our unified taxonomy. This figure shows 40 of the 194 classes; see the supplement for the full list. Each filled circle means that a class with that name exists in the dataset, while an empty circle means that there is no pixel from that class in the dataset. A rectangle indicates that a split and/or merge operation was performed to map to the specified class in MSeg. Rectangles are zoomed-in in the right panel. Merge operations are shown with straight lines and split operations are shown with dashed lines. (Best seen in color.)

Figure 3: Procedure for determining the set of categories in the MSeg taxonomy. See the supplement for more details.

Figure 4: Semantic classes in MSeg. Left: pixel counts of MSeg classes, in log scale. Right: percentage of pixels from each component dataset that contribute to each class. Any single dataset is insufficient for describing the visual world.

Cross-Domain Semantic Segmentation via Domain-Invariant Interactive Relation Transfer

[paper]

Figure 1: The overall architecture of our proposed model. The images from source and target domains will be input to both the fine-grained and coarse-grained components. Our method addresses the adaptation of semantic segmentation neural networks through modeling domain-invariant interaction between the fine-grained component using pixel-level category information and the coarse-grained component using image-level category information. In the coarse-grained component, the region expansion units are specified by different colors. The notation “Conv” denotes a convolutional layer.

--------------------------------------------------------------------

Person Re-identification

--------------------------------------------------------------------

[2020 ECCV]

Unsupervised Domain Adaptation with Noise Resistible Mutual-Training for Person Re-identification

[paper]

Joint Disentangling and Adaptation for Cross-Domain Person Re-Identification

[paper]

Abstract

Although significant progress has been witnessed in supervised person re-identification (re-id), it remains challenging to generalize re-id models to new domains due to the huge domain gaps. Recently, there has been a growing interest in using unsupervised domain adaptation to address this scalability issue. Existing methods typically conduct adaptation on the representation space that contains both id-related and id-unrelated factors, thus inevitably undermining the adaptation efficacy of id-related features. In this paper, we seek to improve adaptation by purifying the representation space to be adapted. To this end, we propose a joint learning framework that disentangles id-related/unrelated features and enforces adaptation to work on the id-related feature space exclusively. Our model involves a disentangling module that encodes cross-domain images into a shared appearance space and two separate structure spaces, and an adaptation module that performs adversarial alignment and self-training on the shared appearance space. The two modules are co-designed to be mutually beneficial. Extensive experiments demonstrate that the proposed joint learning framework outperforms the state-of-the-art methods by clear margins.

Fig. 1: An overview of the proposed joint disentangling and adaptation framework. The disentangling module encodes images of two domains into a shared appearance space (id-related) and a separate source/target structure space (id-unrelated) via cross-domain image generation. Our adaptation module is exclusively conducted on the id-related feature space, encouraging the intra-class similarity and inter-class difference of the disentangled appearance features.

Fig. 2: A schematic overview of the cross-domain cycle-consistency image generation. Our disentangling and adaptation modules are connected by the shared appearance encoder. The two domains also share the image and domain discriminators, but have their own structure encoders and decoders. A dashed line indicates that the input image to the source/target structure encoder is converted to gray-scale.

Multiple Expert Brainstorming for Domain Adaptive Person Re-identification

[paper]

Abstract

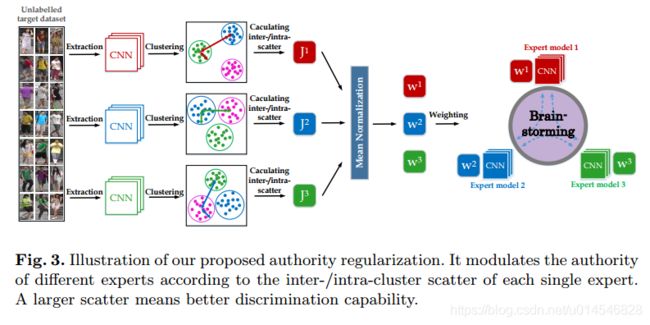

Often the best-performing deep neural models are ensembles of multiple base-level networks; nevertheless, ensemble learning with respect to domain adaptive person re-ID remains unexplored. In this paper, we propose a multiple expert brainstorming network (MEB-Net) for domain adaptive person re-ID, opening up a promising direction for the model ensemble problem under unsupervised conditions. MEB-Net adopts a mutual learning strategy, where multiple networks with different architectures are pre-trained within a source domain as expert models equipped with specific features and knowledge, while the adaptation is then accomplished through brainstorming (mutual learning) among expert models. MEB-Net accommodates the heterogeneity of experts learned with different architectures and enhances the discrimination capability of the adapted re-ID model by introducing a regularization scheme on the authority of experts. Extensive experiments on large-scale datasets (Market-1501 and DukeMTMC-reID) demonstrate the superior performance of MEB-Net over state-of-the-art methods. Code is available at https://github.com/YunpengZhai/MEB-Net.

[2020 CVPR]

Online Joint Multi-Metric Adaptation from Frequent Sharing-Subset Mining for Person Re-Identification

[paper]

Figure 1. The normalized pair-wise distance distributions of both training and testing samples based on the well-trained HA-CNN model on the Market-1501 dataset demonstrate the severe training-testing data distribution shift, where the extremely challenging hard negative distractors (in blue box) will significantly influence the retrieval accuracy (the Original top-10 retrieval results). Even using the state-of-the-art online re-ranking method [45] (RR), the ground-truth (in red box) still has a lower rank than the distractors. Our method succeeds in handling the distractors so that the true match is successfully re-ranked to the top position in the list (Ours).

Figure 2. The online testing query and gallery samples are first fed into the offline learned baseline model to obtain the feature descriptors. The proposed frequent sharing-subset (SSSet) mining model is applied to the extracted features to generate multiple sharing-subsets, which are further utilized by the proposed joint multi-metric adaptation model. (The same sample may be contained in multiple SSSets since it shares different visual similarity relationships with different samples.) By fusing the learned matching metrics for each query and gallery sample, our final ranking list is obtained by a bi-directional retrieval matching (Sec. 3.5).

Figure 3. A CFI-Tree is constructed based on T. The same identity may be contained in multiple ti, so there may be multiple nodes for the same identity.

AD-Cluster: Augmented Discriminative Clustering for Domain Adaptive Person Re-identification

[paper]

Figure 1. AD-Cluster alternately trains an image generator and a feature encoder, which respectively maximizes intra-cluster distance (i.e., increases the diversity of the sample space) and minimizes intra-cluster distance in feature space (i.e., decreases the distance in the new feature space). It enforces the discrimination ability of re-ID models in an adversarial min-max manner. (Best viewed in color)

Figure 2. The flowchart of the proposed AD-Cluster: The AD-Cluster consists of three components including density-based clustering, adaptive sample augmentation, and discriminative feature learning. Density-based clustering estimates sample pseudo-labels in the target domain. Adaptive sample augmentation maximizes the sample diversity across cameras while retaining the original pseudo-labels. Discriminative learning drives the feature extractor to minimize the intra-cluster distance. Ldiv denotes the diversity loss and Ltri indicates the triplet loss. (Best viewed in color)

Figure 3. The proposed adversarial min-max learning: With a fixed feature encoder f, the generator g learns to generate samples that maximize intra-cluster distance. With a fixed generator g, the feature encoder f learns to minimize the intra-cluster distance and maximize the inter-cluster distance under the guidance of triplet loss.
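The encoder-side objective mentioned in this caption relies on a triplet loss. The sketch below shows the standard margin-based form on plain Python lists, as a hedged illustration only: the margin value, the Euclidean distance, and the function names are assumptions, and the generator-side diversity loss is not modeled.

```python
import math

def euclidean(u, v):
    """Euclidean distance between two feature vectors of equal length."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))

def triplet_loss(anchor, positive, negative, margin=0.3):
    """Standard triplet loss: max(0, d(a, p) - d(a, n) + margin).

    `positive` shares the anchor's cluster (pseudo-label); `negative` does not.
    Minimising this pulls same-cluster features together and pushes
    different-cluster features at least `margin` further away.
    """
    return max(0.0, euclidean(anchor, positive) - euclidean(anchor, negative) + margin)

anchor = [0.0, 0.0]
same_cluster = [0.0, 1.0]
far_negative = [3.0, 4.0]    # already well separated, so no loss
hard_negative = [0.0, 0.5]   # closer than the positive, so it is penalised

easy = triplet_loss(anchor, same_cluster, far_negative)
hard = triplet_loss(anchor, same_cluster, hard_negative)
```

In the min-max scheme described by the caption, generated samples that spread a cluster out would act as harder positives for the encoder, which then has to pull them back together.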

Figure 4. The sparsely and incorrectly distributed person image features of different identities are grouped to more compact and correct clusters through the iterative clustering process. (Best viewed in color with zoom in.)

Smoothing Adversarial Domain Attack and p-Memory Reconsolidation for Cross-Domain Person Re-Identification

[paper]

Figure 1. The overview of our proposed method. It consists of two main components, i.e., a smoothing adversarial domain attack (SADA) and a p-memory reconsolidation (pMR). First, we train a (Ns + Nt)-class camera-based classifier using both the source and target images. Second, this camera-based classifier forces the source images to align with the target images. Third, the aligned source images are used to pretrain a deep model as a transferred model that is applied to the target domain for Density-Based Spatial Clustering (DBSC). Because the knowledge transferred from the source domain will be forgotten during the target distribution mining, a pMR module is naturally introduced to reconsolidate the transferred knowledge. The pMR module takes the aligned source images as input with probability p and the target images with probability 1 − p.
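The sampling rule in the last sentence of this caption can be written as a short sketch. Everything beyond the "probability p versus 1 − p" rule is an assumption for illustration: the function name `pmr_pick_batch`, the batch placeholders, and the use of Python's `random.Random` are hypothetical and not taken from the paper.

```python
import random

def pmr_pick_batch(source_batches, target_batches, p, rng):
    """Pick an aligned-source batch with probability p, otherwise a target batch."""
    if rng.random() < p:
        return "source", rng.choice(source_batches)
    return "target", rng.choice(target_batches)

rng = random.Random(0)
aligned_source = ["src_batch_0", "src_batch_1"]   # placeholders for real batches
target = ["tgt_batch_0", "tgt_batch_1", "tgt_batch_2"]

# Count how often each domain is replayed over many self-training steps.
draws = [pmr_pick_batch(aligned_source, target, p=0.3, rng=rng)[0] for _ in range(10000)]
source_fraction = draws.count("source") / len(draws)
```

With `p = 0.3`, roughly 30% of the training steps revisit the aligned source images, which is how the module is described as counteracting the forgetting of transferred knowledge.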

Figure 2. Transfer amnesia problem. We evaluate the rank-1 accuracy and mean Average Precision (mAP) on the test set of the DukeMTMC-reID dataset during the self-training on the Market-1501 dataset. The self-training model is initialized with the model pretrained on DukeMTMC-reID.