Gated Recurrent Unit (GRU) [Dive into Deep Learning v2]

Theory

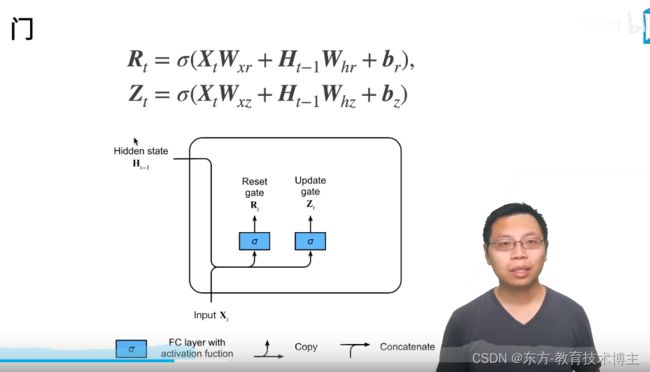

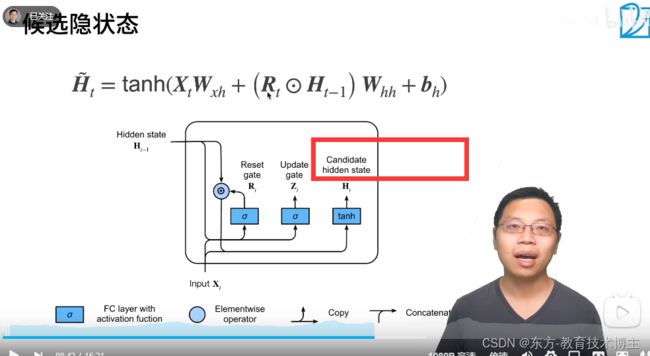

Here the ⊙ symbol denotes element-wise (Hadamard) multiplication.

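Think of R_t as a one-dimensional vector with the same length as H_t (a simplification that helps intuition).

If the entries of R_t are close to 0, the product R_t ⊙ H_{t-1} is also close to 0.

That effectively forgets the hidden state from the previous time step.

R_t is learned here: it is produced by a sigmoid over the current input and the previous hidden state, using trainable weights.

If R_t is all zeros, it is as if we were back at the initial (all-zero) state.

If R_t is all ones, the previous hidden state is kept in full.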
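The effect of the reset gate on the candidate state can be checked with a minimal sketch. It assumes a single hidden unit and a single input feature, so plain floats stand in for the matrices (the gate acts element-wise, so each unit behaves like this); the weight values are made up for illustration:

```python
import math

# Illustrative scalar weights (one hidden unit, one input feature); the values are made up.
W_xh, W_hh, b_h = 0.5, 0.8, 0.1

def candidate(x, h_prev, r):
    """Candidate state for one unit: tanh(x*W_xh + (r*h_prev)*W_hh + b_h)."""
    return math.tanh(x * W_xh + (r * h_prev) * W_hh + b_h)

x, h_prev = 1.0, 0.6
h_reset = candidate(x, h_prev, r=0.0)  # R_t = 0: the previous state is forgotten
h_fresh = candidate(x, 0.0, r=1.0)     # no reset, but starting from the zero initial state
h_keep = candidate(x, h_prev, r=1.0)   # R_t = 1: the previous state is fully kept

print(h_reset == h_fresh)  # True: resetting equals starting from the initial state
print(h_keep == h_reset)   # False
```

With R_t = 1 the candidate is exactly the plain RNN update, and with R_t = 0 it only depends on the current input.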

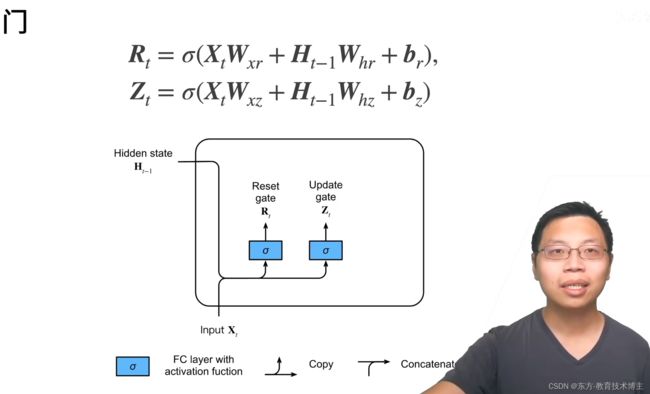

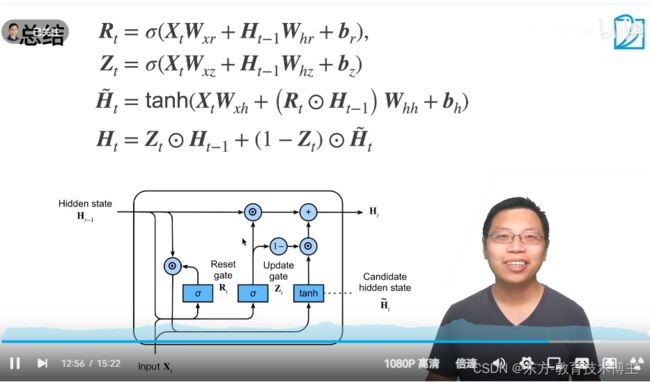

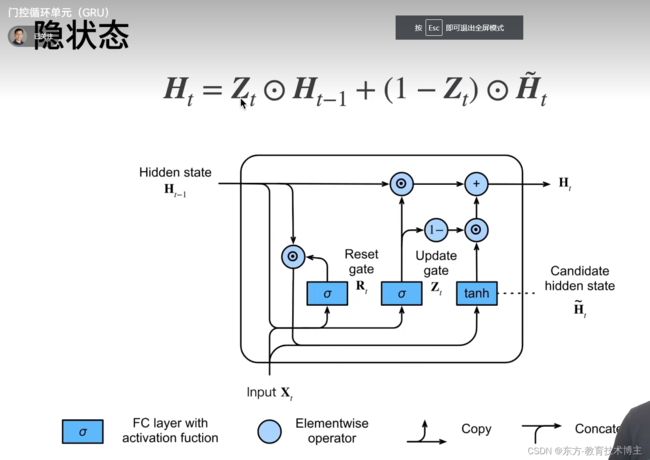

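The actual hidden state H_t:

If all entries of Z_t equal 1, then H_t = H_{t-1}: only the past state is used and the current candidate is ignored.

If all entries of Z_t equal 0, then H_t = H̃_t: only the current candidate state is used.

Z_t can be read as the weight the past hidden state carries in the current hidden state.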
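The update rule H_t = Z_t ⊙ H_{t-1} + (1 − Z_t) ⊙ H̃_t is an element-wise weighted average. A minimal sketch for one hidden unit, with a made-up past state and candidate:

```python
def update(h_prev, h_tilde, z):
    """One hidden unit of the GRU update: z * h_prev + (1 - z) * h_tilde."""
    return z * h_prev + (1 - z) * h_tilde

h_prev, h_tilde = 1.0, -1.0           # made-up past state and candidate
print(update(h_prev, h_tilde, 1.0))   # 1.0: only the past state
print(update(h_prev, h_tilde, 0.0))   # -1.0: only the current candidate
print(update(h_prev, h_tilde, 0.25))  # -0.5: 25% past, 75% candidate
```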

I find the names reset and update in GRU quite apt:

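1. In theory, W_hh alone is fully capable of resetting: with W_hh all zeros, the formula degenerates into a fully connected layer on the input. Yet a dedicated reset gate is added, with sigmoid as its activation (its outputs lie between 0 and 1 and mimic a 0-to-1 step). My impression is that the design deliberately makes it easier for the network to reset the H_{t-1} term, so the name reset is well earned.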

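2. The update design reminds me of how ResNet adds X back in, except that here it is H_{t-1} that is brought in, and the update gate controls the weight on each side. If you ignore the update gate's weights, it can be seen as a ResNet-style residual block over H_t and H_{t-1} (in ResNet the block combines f(x) and x). But because the update gate is also a sigmoid, the unit is quite likely to end up choosing between H_{t-1} and the candidate H̃_t. Compared with a plain RNN, what it adds is the option of passing H_{t-1} straight through, so wouldn't "remember gate" be a more fitting name than update gate?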
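The residual reading in point 2 can be made explicit by rearranging the GRU update H_t = Z_t ⊙ H_{t-1} + (1 − Z_t) ⊙ H̃_t (the same rule as `H = Z * H + (1 - Z) * H_tilda` in the code). This is only a small algebraic identity offered as a sketch, not something the course derives:

$$
H_t = Z_t \odot H_{t-1} + (1 - Z_t) \odot \tilde{H}_t
    = H_{t-1} + (1 - Z_t) \odot \big(\tilde{H}_t - H_{t-1}\big)
$$

Written this way, H_{t-1} plays the role of the skip connection and (1 − Z_t) scales the "residual" H̃_t − H_{t-1}, much like x + f(x) in ResNet but with a learned, element-wise gate on the residual term.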

Corrections welcome, especially from the instructor!

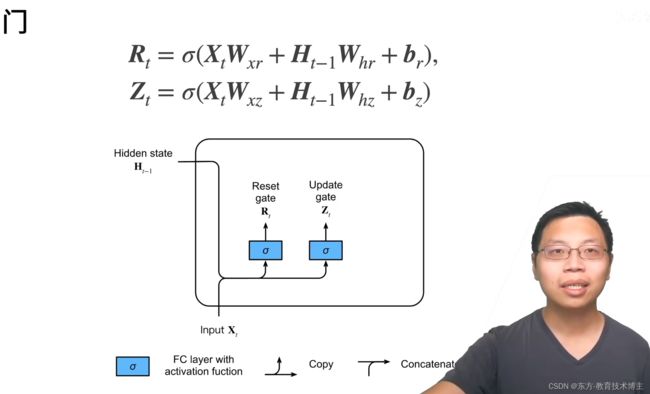

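A special case:

When Z_t is all zeros and R_t is all ones, the previous hidden state enters the candidate unchanged and H_t is exactly the candidate H̃_t = tanh(X_t W_xh + H_{t-1} W_hh + b_h).

That is just the plain RNN.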
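The special case can be checked numerically. A minimal sketch, again assuming one hidden unit and one input feature so that floats stand in for the matrices; the parameter dictionary and its values are hypothetical. Because a sigmoid never outputs exactly 0 or 1, large biases (−50 for the update gate, +50 for the reset gate) push Z_t toward 0 and R_t toward 1:

```python
import math

def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))

def gru_step(x, h, p):
    """One GRU step for a single hidden unit (D2L-style equations)."""
    z = sigmoid(x * p["W_xz"] + h * p["W_hz"] + p["b_z"])  # update gate
    r = sigmoid(x * p["W_xr"] + h * p["W_hr"] + p["b_r"])  # reset gate
    h_tilde = math.tanh(x * p["W_xh"] + (r * h) * p["W_hh"] + p["b_h"])
    return z * h + (1 - z) * h_tilde

def rnn_step(x, h, p):
    """One plain RNN step sharing the candidate-state weights."""
    return math.tanh(x * p["W_xh"] + h * p["W_hh"] + p["b_h"])

# Hypothetical weights; the large gate biases drive Z_t -> 0 and R_t -> 1.
params = {"W_xz": 0.3, "W_hz": -0.2, "b_z": -50.0,
          "W_xr": 0.4, "W_hr": 0.1, "b_r": 50.0,
          "W_xh": 0.5, "W_hh": 0.8, "b_h": 0.1}

h_gru = h_rnn = 0.0
for x in [1.0, -0.5, 0.25]:
    h_gru = gru_step(x, h_gru, params)
    h_rnn = rnn_step(x, h_rnn, params)

print(h_gru, h_rnn)  # the two hidden states agree to floating-point precision
```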

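Code implementation

The implementation is actually not complicated: it simply takes the formulas in the figures above and turns them into code.

Implementation from scratch: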

```python
import torch
from torch import nn
from d2l import torch as d2l

batch_size, num_steps = 32, 35
train_iter, vocab = d2l.load_data_time_machine(batch_size, num_steps)

# Initialize the model parameters
def get_params(vocab_size, num_hiddens, device):
    num_inputs = num_outputs = vocab_size

    def normal(shape):
        return torch.randn(size=shape, device=device) * 0.01

    def three():
        # (input-to-hidden weight, hidden-to-hidden weight, bias) for one gate
        return (normal((num_inputs, num_hiddens)),
                normal((num_hiddens, num_hiddens)),
                torch.zeros(num_hiddens, device=device))

    W_xz, W_hz, b_z = three()  # update gate parameters (extra compared with the plain RNN)
    W_xr, W_hr, b_r = three()  # reset gate parameters (extra compared with the plain RNN)
    W_xh, W_hh, b_h = three()  # candidate hidden state parameters
    # Output layer parameters
    W_hq = normal((num_hiddens, num_outputs))
    b_q = torch.zeros(num_outputs, device=device)
    params = [W_xz, W_hz, b_z, W_xr, W_hr, b_r, W_xh, W_hh, b_h, W_hq, b_q]
    for param in params:
        param.requires_grad_(True)
    return params

# Define the hidden-state initialization function
def init_gru_state(batch_size, num_hiddens, device):
    return (torch.zeros((batch_size, num_hiddens), device=device),)

# Define the gated recurrent unit model
def gru(inputs, state, params):
    W_xz, W_hz, b_z, W_xr, W_hr, b_r, W_xh, W_hh, b_h, W_hq, b_q = params
    H, = state
    outputs = []
    for X in inputs:  # inputs: (num_steps, batch_size, vocab_size)
        Z = torch.sigmoid((X @ W_xz) + (H @ W_hz) + b_z)  # update gate
        R = torch.sigmoid((X @ W_xr) + (H @ W_hr) + b_r)  # reset gate
        H_tilda = torch.tanh((X @ W_xh) + ((R * H) @ W_hh) + b_h)  # candidate state
        H = Z * H + (1 - Z) * H_tilda  # new hidden state
        Y = H @ W_hq + b_q
        outputs.append(Y)
    return torch.cat(outputs, dim=0), (H,)

# Training
vocab_size, num_hiddens, device = len(vocab), 256, d2l.try_gpu()
num_epochs, lr = 500, 1
model = d2l.RNNModelScratch(len(vocab), num_hiddens, device, get_params,
                            init_gru_state, gru)
d2l.train_ch8(model, train_iter, vocab, lr, num_epochs, device)
```

Concise implementation:

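```python
# Concise implementation with the built-in nn.GRU layer
# (reuses vocab_size, num_hiddens, device, lr, num_epochs and train_iter from above)
num_inputs = vocab_size
gru_layer = nn.GRU(num_inputs, num_hiddens)
model = d2l.RNNModel(gru_layer, len(vocab))
model = model.to(device)
d2l.train_ch8(model, train_iter, vocab, lr, num_epochs, device)
```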
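For comparison, as far as I can tell from the PyTorch `nn.GRU` documentation, the built-in layer computes the following (column-vector convention and PyTorch's own weight names, so these symbols are not the ones used in the from-scratch code). The main difference is that the reset gate multiplies the hidden-to-hidden term after the matrix product, and that term carries its own bias b_{hn}:

$$
\begin{aligned}
r_t &= \sigma(W_{ir} x_t + b_{ir} + W_{hr} h_{t-1} + b_{hr}) \\
z_t &= \sigma(W_{iz} x_t + b_{iz} + W_{hz} h_{t-1} + b_{hz}) \\
n_t &= \tanh\big(W_{in} x_t + b_{in} + r_t \odot (W_{hn} h_{t-1} + b_{hn})\big) \\
h_t &= (1 - z_t) \odot n_t + z_t \odot h_{t-1}
\end{aligned}
$$

So the concise model is the same kind of gated unit, with the same update-gate interpolation, but it is not parameter-for-parameter identical to `gru` above.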

Output:

[Figure: training perplexity plot, redrawn after each printed prediction below]

```text
time traveller
time traveller ate ate te ate ate te ate ate te ate ate te ate a
time traveller the the the the the the the the the the the the t
time travellere the the the the the the the the the the the the
time travellere the the the the the the the the the the the the
time traveller the the the the the the the the the the the the t
time travellere the the the the the the the the the the the the
time traveller an the the the the the the the the the the the th
time travellerererererererererererererererererererererererererer
time travellere the the the the the the the the the the the the
time travellere the the the the the the the the the the the the
time travellere the the the the the the the the the the the the
time travellere the the the the the the the the the the the the
time traveller the the the the the the the the the the the the t
time traveller the the the the the the the the the the the the t
time traveller the the the the the the the the the the the the t
time traveller the the the the the the the the the the the the t
time traveller the the the the the the the the the the the the t
time traveller the the the the the the the the the the the the t
time traveller the the thered the the thered the the thered the
time traveller the the the the the the the the the the the the t
time traveller the the the the the the the the the the the the t
time traveller the time traveller the time traveller the time tr
time traveller the the thing the time the traceller the thing th
time traveller the three dimensions of the time trave all the ti
time traveller the three dimensions of the time traveller the tr
time traveller the proples that is a somient and the three and t
time traveller the three dimensions of the three dimensions of t
time traveller than a man a cour a courar this is in time travel
time traveller than a man existery light of shace and they tound
time traveller that is the time travellerit s ageat on the than
time traveller scollised of ho s all phace and they thinkness ge
time travellerist four dimensions of space why is thend whing th
time travellerit s agee trantionirg inewald the ment light of sh
time travellerit that is the stacl proceeded anyreal inds reccan
time traveller spoce the about in the foom the prowel instimited
time traveller proceeded anyreal thing so move about in all only
time traveller smiling and they it isspone of the grove is a mom
time traveller sot a on thisknow of cheirumone fire iftine in an
time travellerit soug i now have abeet in wilb have no means of
time traveller for so it will be convenient to speak of himwas e
time traveller proceeded anyreal body must have extension in fou
time traveller for so it will be convenient to speak of himwas e
time travelleryou can show black is white by argument said filby
time travelleryou can show black is white by argument said filby
time travelleryou can show black is white by argument said filby
time traveller for so it will be convenient to speak of himwas e
time travelleryou can show black is white by argument said filby
time travelleryou can show black is white by argument said filby
time travellery contenter mimensions of space ghic dimensions of
perplexity 1.2, 9001.5 tokens/sec on cpu
time travellery contenter mimensions of space ghic dimensions of
travelleryou can showarde thing there was is alw ys canemer
```