NLP (二): word2vec

目录

- 基于推理的方法和神经网络

-

- 基于计数的方法的问题

- 基于推理的方法的概要

- 基于推理 v.s. 基于计数

- 神经网络中单词的处理方法

- 简单的 word2vec

-

- CBOW (continuous bag-of-words)

-

- CBOW 模型的推理

- 最大化对数似然

- CBOW 模型的学习

- word2vec 的权重和分布式表示

- 学习数据的准备

- CBOW 模型的实现

- skip-gram

-

- skip-gram

- skip-gram 模型的概率表示

- CBOW v.s. skip-gram

- word2vec 的高速化

-

- Embedding 层

- Negative Sampling

-

- 从多分类到二分类

- 负采样

- 改进版 word2vec 的学习

-

- CBOW 模型的实现

- skip-gram 模型的实现

- CBOW 模型的学习代码 (PTB 数据集)

- 单词向量的评价方法

-

- most similar task (相似度)

- analogy task (类推问题)

- 参考文献

基于推理的方法和神经网络

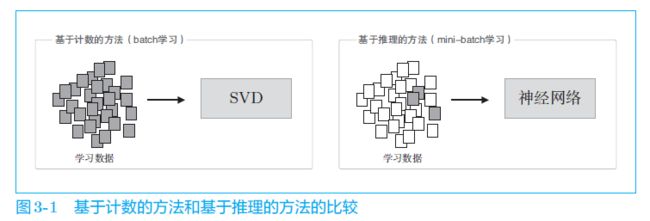

- 用向量表示单词的研究比较成功的方法大致可以分为两种:一种是基于计数的方法;另一种是基于推理的方法。两者的背景都是分布式假设

基于计数的方法的问题

- 基于计数的方法在处理大规模语料库时会出现问题。在现实世界中,语料库处理的单词数量非常大

- 比如,据说英文的词汇量超过 100 万个。如果词汇量超过 100 万个,那么使用基于计数的方法就需要生成一个 100 万 × × × 100 万的庞大矩阵,但对如此庞大的矩阵执行 SVD 显然是不现实的

- 基于计数的方法使用整个语料库的统计数据(共现矩阵和 PPMI 等),通过一次处理(SVD 等)获得单词的分布式表示。而基于推理的方法使用神经网络,通常在 mini-batch 数据上进行学习。这意味着神经网络一次只需要看一部分学习数据,并反复更新权重。这意味着,在词汇量很大的语料库中,即使 SVD 等的计算量太大导致计算机难以处理,神经网络也可以在部分数据上学习

- 同时,如果对语料库进行了更新,基于计数的方法需要重新计算,而基于推理的方法则可以很容易地进行参数的增量学习

基于推理的方法的概要

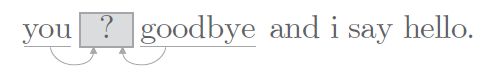

- 基于推理的方法将分布式假设归结为了下面的预测问题:当给出周围的单词(上下文)时,预测 “?” 处会出现什么单词。在这样的框架中,使用语料库来学习模型,使之能做出正确的预测,作为模型学习的产物,我们得到了单词的分布式表示

基于推理 v.s. 基于计数

- 这里有一个常见的误解,那就是基于推理的方法在准确度方面优于基于计数的方法。实际上,有研究表明,就单词相似性的定量评价而言,基于推理的方法和基于计数的方法难分上下

- 另外一个重要的事实是,基于推理的方法和基于计数的方法存在关联性。具体地说,使用了 skip-gram 和 Negative Sampling 的模型被证明与对整个语料库的共现矩阵(实际上会对矩阵进行一定的修改)进行特殊矩阵分解的方法具有相同的作用。换句话说,这两个方法论(在某些条件下)是“相通”的

- paper: Neural Word Embedding as Implicit Matrix Factorization

- 此外,在 word2vec 之后,有研究人员提出了 GloVe 方法。GloVe 方法融合了基于推理的方法和基于计数的方法。该方法的思想是,将整个语料库的统计数据的信息纳入损失函数,进行 mini-batch 学习。据此,这两个方法论成功地被融合在了一起

- paper: GloVe: Global Vectors for Word Representation

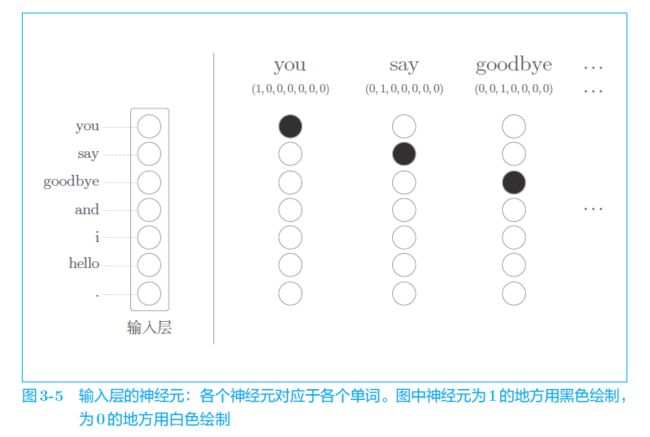

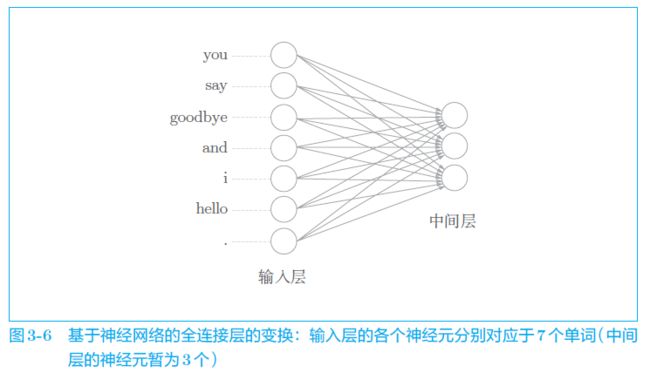

神经网络中单词的处理方法

# 这里省略了偏置 b

c = np.array([[1, 0, 0, 0, 0, 0, 0]]) # 输入 (1 x 7) 考虑了 mini-batch 的处理

W = np.random.randn(7, 3) # 权重

h = np.dot(c, W) # 中间节点

print(h)

# [[-0.70012195 0.25204755 -0.79774592]]

简单的 word2vec

- word2vec 一词最初用来指程序或者工具,但是随着该词的流行,在某些语境下,也指神经网络的模型。正确地说,CBOW (continuous bag-of-words) 模型和 skip-gram 模型是 word2vec 中使用的两个神经网络

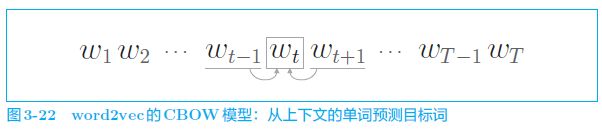

CBOW (continuous bag-of-words)

Bag-Of-Words 是 “一袋子单词” 的意思,这意味着袋子中单词的顺序被忽视了

CBOW 模型的推理

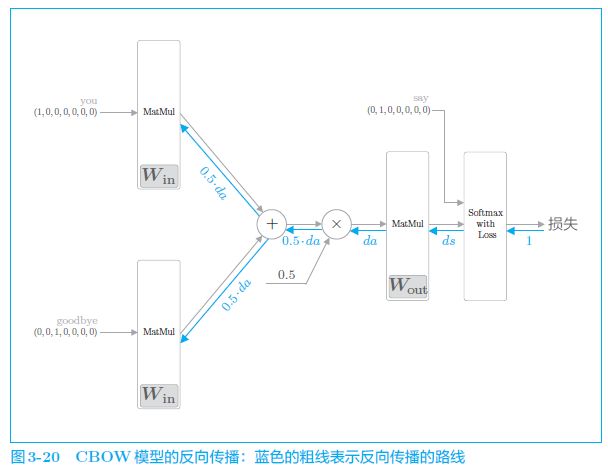

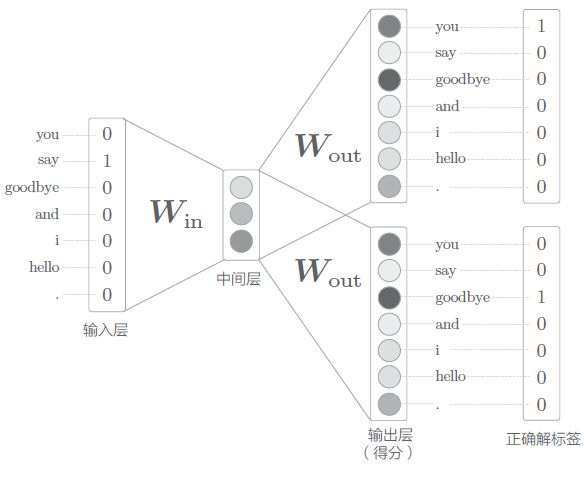

- 下图是 CBOW 模型的网络。它有两个输入层 (这里对上下文只考虑两个单词;如果考虑 N N N 个单词,则有 N N N 个输入层),经过中间层到达输出层

- 这里,从输入层到中间层的变换由相同的全连接层(权重为 W i n W_{in} Win)完成,中间层的神经元是各个输入层经全连接层变换后得到的值的“平均”。从中间层到输出层神经元的变换由另一个全连接层(权重为 W o u t W_{out} Wout)完成;在输出层后加一个 Softmax 层即可得到每个单词的出现概率

- 其中, W i n W_{in} Win 即为我们要的单词的分布式表示 (也可以说是第一个隐藏层的输出神经元: e T = i T W i n e^T=i^TW_{in} eT=iTWin)

from common.layers import MatMul

# 样本的上下文数据

c0 = np.array([[1, 0, 0, 0, 0, 0, 0]])

c1 = np.array([[0, 0, 1, 0, 0, 0, 0]])

# 权重的初始值

W_in = np.random.randn(7, 3)

W_out = np.random.randn(3, 7)

# 生成层

in_layer0 = MatMul(W_in)

in_layer1 = MatMul(W_in)

out_layer = MatMul(W_out)

# 正向传播

h0 = in_layer0.forward(c0)

h1 = in_layer1.forward(c1)

h = 0.5 * (h0 + h1)

s = out_layer.forward(h)

print(s)

# [[ 0.30916255 0.45060817 -0.77308656 0.22054131 0.15037278

# -0.93659277 -0.59612048]]

最大化对数似然

- CBOW 模型可以建模为后验概率的形式:

y k = P ( w t ∣ w t − 1 , w t + 1 ) y_k=P(w_t|w_{t-1},w_{t+1}) yk=P(wt∣wt−1,wt+1) - 如果加上交叉熵误差,那么可以把损失函数写为 负对数似然 (negative log likelihood) 的形式:

L = − ∑ k t k log y k = − log P ( w t ∣ w t − 1 , w t + 1 ) L=-\sum_kt_k\log y_k=-\log P(w_{t}|w_{t-1},w_{t+1}) L=−k∑tklogyk=−logP(wt∣wt−1,wt+1)如果将其扩展到整个语料库,则损失函数可以写为下式,最小化损失函数也就是最大化对数似然:

L = − 1 T ∑ t = 1 T log P ( w t ∣ w t − 1 , w t + 1 ) L=-\frac{1}{T}\sum_{t=1}^T\log P(w_{t}|w_{t-1},w_{t+1}) L=−T1t=1∑TlogP(wt∣wt−1,wt+1)

CBOW 模型的学习

将 Softmax 层和 Cross Entropy Error 层统一为 Softmax with Loss 层

word2vec 的权重和分布式表示

- word2vec 中使用的网络有两个权重; 一般而言,输入侧的权重 W i n W_{in} Win 的每一行对应于各个单词的分布式表示。另外,输出侧的权重 W o u t W_{out} Wout 也同样保存了对单词含义进行了编码的向量。只是,输出侧的权重在列方向上保存了各个单词的分布式表示

- 那么,我们最终应该使用哪个权重作为单词的分布式表示呢?这里有三个选项

- A. 只使用输入侧的权重

- B. 只使用输出侧的权重

- C. 同时使用两个权重 (根据如何组合这两个权重,存在多种方式,其中一个方式就是简单地将这两个权重相加)

- 就 word2vec(特别是 skip-gram 模型)而言,最受欢迎的是方案 A

学习数据的准备

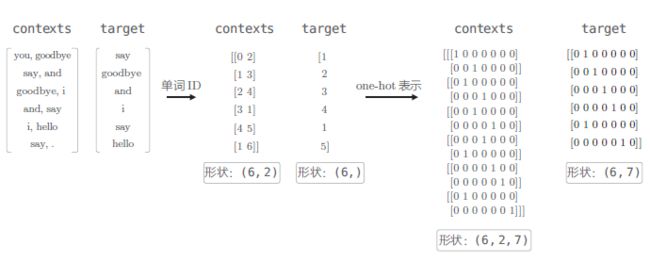

这里我们仍以 “You say goodbye and I say hello.” 这个只有一句话的语料库为例进行说明

- 将语料库中的目标单词作为目标词,将其周围的单词作为上下文提取出来。我们对语料库中的所有单词都执行该操作(两端的单词除外),可以得到 contexts(上下文)和 target。contexts 的各行成为神经网络的输入,target 的各行成为正确解标签

# corpus 为用 word_id 表示的语料库

def create_contexts_target(corpus, window_size=1):

target = corpus[window_size:-window_size]

contexts = []

for idx in range(window_size, len(corpus)-window_size):

cs = []

for t in range(-window_size, window_size + 1):

if t == 0:

continue

cs.append(corpus[idx + t])

contexts.append(cs)

return np.array(contexts), np.array(target)

from common.util import preprocess, create_contexts_target, convert_one_hot

text = 'You say goodbye and I say hello.'

corpus, word_to_id, id_to_word = preprocess(text)

contexts, target = create_contexts_target(corpus, window_size=1)

print(contexts)

# [[0 2]

# [1 3]

# [2 4]

# [3 1]

# [4 5]

# [1 6]]

print(target)

# [1 2 3 4 1 5]

# 将上下文和目标词转化为 one-hot 表示

vocab_size = len(word_to_id)

target = convert_one_hot(target, vocab_size)

contexts = convert_one_hot(contexts, vocab_size)

CBOW 模型的实现

import sys

sys.path.append('..')

import numpy as np

from common.layers import MatMul, SoftmaxWithLoss

class SimpleCBOW:

def __init__(self, vocab_size, hidden_size):

V, H = vocab_size, hidden_size

# 初始化权重

W_in = 0.01 * np.random.randn(V, H).astype('f') # 32 位

W_out = 0.01 * np.random.randn(H, V).astype('f')

# 生成层

self.in_layer = MatMul(W_in)

self.out_layer = MatMul(W_out)

self.loss_layer = SoftmaxWithLoss()

# 将所有的权重和梯度整理到列表中

layers = [self.in_layer, self.out_layer]

self.params, self.grads = [], []

for layer in layers:

self.params += layer.params

self.grads += layer.grads

# 将单词的分布式表示设置为成员变量

self.word_vecs = W_in

def forward(self, contexts, target):

h = self.in_layer.forward((contexts[:, 0] + contexts[:, 1]) * 0.5)

score = self.out_layer.forward(h)

loss = self.loss_layer.forward(score, target)

return loss

def backward(self, dout=1):

ds = self.loss_layer.backward(dout)

da = self.out_layer.backward(ds)

self.in_layer.backward(da)

return None

学习的实现

# coding: utf-8

import sys

sys.path.append('..') # 为了引入父目录的文件而进行的设定

from common.trainer import Trainer

from common.optimizer import Adam

from simple_cbow import SimpleCBOW

from common.util import preprocess, create_contexts_target, convert_one_hot

window_size = 1

hidden_size = 5

batch_size = 3

max_epoch = 1000

text = 'You say goodbye and I say hello.'

corpus, word_to_id, id_to_word = preprocess(text)

vocab_size = len(word_to_id)

contexts, target = create_contexts_target(corpus, window_size)

target = convert_one_hot(target, vocab_size)

contexts = convert_one_hot(contexts, vocab_size)

model = SimpleCBOW(vocab_size, hidden_size)

optimizer = Adam()

trainer = Trainer(model, optimizer)

trainer.fit(contexts, target, max_epoch, batch_size)

trainer.plot()

word_vecs = model.word_vecs

for word_id, word in id_to_word.items():

print(word, word_vecs[word_id])

skip-gram

skip-gram

import sys

sys.path.append('..')

import numpy as np

from common.layers import MatMul, SoftmaxWithLoss

class SimpleSkipGram:

def __init__(self, vocab_size, hidden_size):

V, H = vocab_size, hidden_size

# 初始化权重

W_in = 0.01 * np.random.randn(V, H).astype('f')

W_out = 0.01 * np.random.randn(H, V).astype('f')

# 生成层

self.in_layer = MatMul(W_in)

self.out_layer = MatMul(W_out)

self.loss_layer1 = SoftmaxWithLoss()

self.loss_layer2 = SoftmaxWithLoss()

# 将所有的权重和梯度整理到列表中

layers = [self.in_layer, self.out_layer]

self.params, self.grads = [], []

for layer in layers:

self.params += layer.params

self.grads += layer.grads

# 将单词的分布式表示设置为成员变量

self.word_vecs = W_in

def forward(self, contexts, target):

h = self.in_layer.forward(target)

s = self.out_layer.forward(h)

l1 = self.loss_layer1.forward(s, contexts[:, 0])

l2 = self.loss_layer2.forward(s, contexts[:, 1])

loss = l1 + l2

return loss

def backward(self, dout=1):

dl1 = self.loss_layer1.backward(dout)

dl2 = self.loss_layer2.backward(dout)

ds = dl1 + dl2

dh = self.out_layer.backward(ds)

self.in_layer.backward(dh)

return None

skip-gram 模型的概率表示

- skip-gram 模型可以建模为后验概率的形式:

P ( w t − 1 , w t + 1 ∣ w t ) P(w_{t-1},w_{t+1}|w_t) P(wt−1,wt+1∣wt)假定上下文单词间没有相关性 (条件独立),则

P ( w t − 1 , w t + 1 ∣ w t ) = P ( w t − 1 ∣ w t ) P ( w t + 1 ∣ w t ) P(w_{t-1},w_{t+1}|w_t)=P(w_{t-1}|w_t)P(w_{t+1}|w_t) P(wt−1,wt+1∣wt)=P(wt−1∣wt)P(wt+1∣wt) - 如果加上交叉熵误差,那么损失函数为:

L = − log P ( w t − 1 , w t + 1 ∣ w t ) = − ( log P ( w t − 1 ∣ w t ) + log P ( w t + 1 ∣ w t ) ) L=-\log P(w_{t-1},w_{t+1}|w_t)=-(\log P(w_{t-1}|w_t)+\log P(w_{t+1}|w_t)) L=−logP(wt−1,wt+1∣wt)=−(logP(wt−1∣wt)+logP(wt+1∣wt))如果将其扩展到整个语料库,则损失函数可以写为下式:

L = − 1 T ∑ t = 1 T ( log P ( w t − 1 ∣ w t ) + log P ( w t + 1 ∣ w t ) ) L=-\frac{1}{T}\sum_{t=1}^T(\log P(w_{t-1}|w_t)+\log P(w_{t+1}|w_t)) L=−T1t=1∑T(logP(wt−1∣wt)+logP(wt+1∣wt))

CBOW v.s. skip-gram

- 从单词的分布式表示的准确度来看,在大多数情况下,skip-grm 模型的结果更好。特别是随着语料库规模的增大,在低频词和类推问题的性能方面,skip-gram 模型往往会有更好的表现 (这可能是因为 skip-gram 模型训练时使用的任务更难)

- 就学习速度而言, CBOW 模型比 skip-gram 模型要快。这是因为 skip-gram 模型需要根据上下文数量计算相应个数的损失,计算成本变大

word2vec 的高速化

- 在之前实现的 word2vec 模型中,有两个地方存在性能瓶颈:

Embedding 层

- 如下图所示,one-hot 向量与权重的乘积其实就等价于从权重矩阵中取出某一行

- Embedding 层即为一个从权重参数中抽取 “单词 ID 对应行 (向量)” 的层。也就是说,在这个 Embedding 层存放词嵌入 (分布式表示)

单词的密集向量表示称为词嵌入(word embedding) 或者 单词的分布式表示(distributed representation)

class Embedding:

def __init__(self, W):

self.params = [W]

self.grads = [np.zeros_like(W)]

self.idx = None

def forward(self, idx):

W, = self.params

self.idx = idx

out = W[idx]

return out

def backward(self, dout):

dW, = self.grads

dW[...] = 0

np.add.at(dW, self.idx, dout) # 将 dout 加到 dW 上,需要进行加法的行由 self.idx 指定

# for i, word_id in enumerate(self.idx):

# dW[word_id] += dout[i]

return None

这里要注意,在反向传播时,如果写成

dW[self.idx] = dout会出问题:当idx中元素重复时 (e.g.idx= [0, 2, 0, 4]),同一个单词反向传播得到的多个梯度就会只累计一次:

为了解决这个问题,需要像上面的代码一样,对同一个单词反向传播得到的多个梯度进行累加

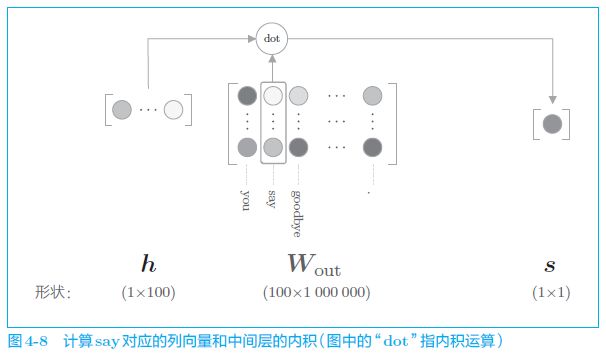

Negative Sampling

- Negative Sampling 用于减少中间层的神经元和权重矩阵 W o u t W_{out} Wout 的乘积以及 Softmax 层的计算量。使用 Negative Sampling 替代 Softmax,无论词汇量有多大,都可以使计算量保持较低或恒定

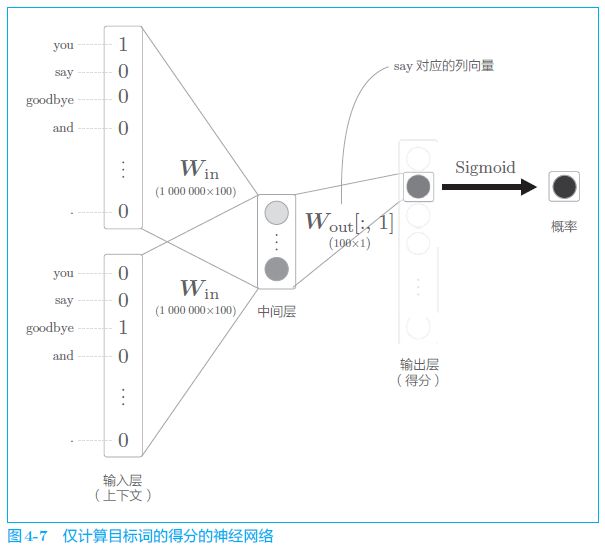

从多分类到二分类

- 用二分类拟合多分类是理解负采样的重点

网络改为二分类后,输入 “you” 和 “goodbye”,只需输出中间单词是 “say” 的概率 (Yes/No 问题)

Sigmoid With Loss

- 类似于多分类误差,在将 s s s 送入 Sigmoid 层得到概率后,再计算交叉熵误差即可。反向传播推导如下:

L = − t log y − ( 1 − t ) log ( 1 − y ) , y = 1 1 + e − x ∴ ∂ L ∂ y = − t y + ( 1 − t ) 1 1 − y , ∂ y ∂ x = y ( 1 − y ) ∴ ∂ L ∂ x = ∂ L ∂ y ∂ y ∂ x = − t ( 1 − y ) + ( 1 − t ) y = y − t L=-t\log y-(1-t)\log(1-y),\quad y=\frac{1}{1+e^{-x}}\\ \therefore \frac{\partial L}{\partial y}=-\frac{t}{y}+(1-t)\frac{1}{1-y},\quad \frac{\partial y}{\partial x}=y(1-y)\\ \therefore \frac{\partial L}{\partial x}=\frac{\partial L}{\partial y}\frac{\partial y}{\partial x}=-t(1-y)+(1-t)y=y-t L=−tlogy−(1−t)log(1−y),y=1+e−x1∴∂y∂L=−yt+(1−t)1−y1,∂x∂y=y(1−y)∴∂x∂L=∂y∂L∂x∂y=−t(1−y)+(1−t)y=y−t

class EmbeddingDot:

def __init__(self, W):

self.embed = Embedding(W)

self.params = self.embed.params

self.grads = self.embed.grads

self.cache = None # 保存正向传播时的计算结果

def forward(self, h, idx):

target_W = self.embed.forward(idx)

out = np.sum(target_W * h, axis=1)

self.cache = (h, target_W)

return out

def backward(self, dout):

h, target_W = self.cache

dout = dout.reshape(dout.shape[0], 1)

dtarget_W = dout * h

self.embed.backward(dtarget_W)

dh = dout * target_W

return dh

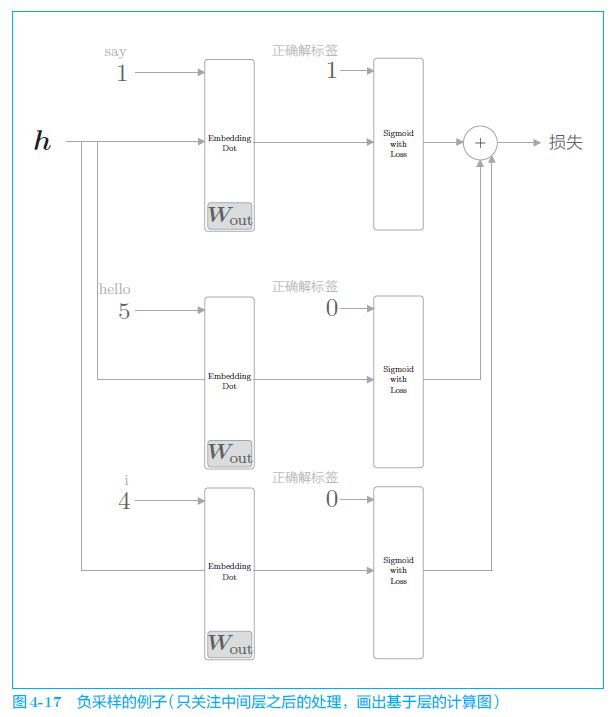

负采样

- 如果我们按照之前多分类的采样方法只采样正例,那么二分类的神经网络只需一直输出 1 即可使损失函数一直为 0,因此我们需要对负例进行采样

- 负采样方法既可以求将正例作为目标词时的损失,同时也可以采样若干个负例 (并不是采样全部负例),对这些负例求损失。然后,将这些数据的损失加起来,将其结果作为最终的损失

负采样的采样方法

- 具体来说,就是让语料库中经常出现的单词容易被抽到,让语料库中不经常出现的单词难以被抽到:先计算语料库中各个单词的出现概率 P ( w i ) P(w_i) P(wi),然后使用下式将出现概率进行平滑处理后得到最终的概率分布 (为了防止低频单词被忽略),然后使用这个概率分布对单词进行采样

# 负采样

class UnigramSampler:

def __init__(self, corpus, power, sample_size): # corpus 为单词 ID 列表格式的语料

self.sample_size = sample_size # 每个单词对的负例采样个数

self.vocab_size = None

self.word_p = None

counts = collections.Counter()

for word_id in corpus:

counts[word_id] += 1

vocab_size = len(counts)

self.vocab_size = vocab_size

self.word_p = np.zeros(vocab_size)

for i in range(vocab_size):

self.word_p[i] = counts[i]

self.word_p = np.power(self.word_p, power)

self.word_p /= np.sum(self.word_p)

def get_negative_sample(self, target):

batch_size = target.shape[0]

if not GPU:

# 针对每一个上下文单词对,都采样 sample_size 个负例

negative_sample = np.zeros((batch_size, self.sample_size), dtype=np.int32)

for i in range(batch_size):

p = self.word_p.copy()

target_idx = target[i]

p[target_idx] = 0

p /= p.sum()

negative_sample[i, :] = np.random.choice(self.vocab_size, size=self.sample_size, replace=False, p=p)

else:

# 在用 GPU (cupy)计算时,优先速度;有时目标词存在于负例中

negative_sample = np.random.choice(self.vocab_size, size=(batch_size, self.sample_size),

replace=True, p=self.word_p)

return negative_sample

unigram 是“1 个(连续)单词”的意思。同样地,bigram 是“2个连续单词”的意思,trigram 是“3 个连续单词”的意思。这里使用

UnigramSampler这个名字,是因为我们以 1个单词为对象创建概率分布。如果是 bigram,则 以 (‘you’, ‘say’)、(‘you’, ‘goodbye’)……这样的 2 个单词的组合为对象创建概率分布

# 包含 CBOW 中的 Embedding Dot + Sigmoid with loss

class NegativeSamplingLoss:

def __init__(self, W, corpus, power=0.75, sample_size=5):

self.sample_size = sample_size

self.sampler = UnigramSampler(corpus, power, sample_size)

# 包含 1 个正例用的层和 sample_size 个负例用的层,但要学习的权重其实就只有 W

# 生成 sample_size + 1 个层是为了保留前向传播的结果用于之后的反向传播

self.loss_layers = [SigmoidWithLoss() for _ in range(sample_size + 1)]

self.embed_dot_layers = [EmbeddingDot(W) for _ in range(sample_size + 1)]

self.params, self.grads = [], []

for layer in self.embed_dot_layers:

self.params += layer.params

self.grads += layer.grads

def forward(self, h, target):

batch_size = target.shape[0]

negative_sample = self.sampler.get_negative_sample(target)

# 正例的正向传播

score = self.embed_dot_layers[0].forward(h, target)

correct_label = np.ones(batch_size, dtype=np.int32)

loss = self.loss_layers[0].forward(score, correct_label)

# 负例的正向传播

negative_label = np.zeros(batch_size, dtype=np.int32)

for i in range(self.sample_size):

negative_target = negative_sample[:, i]

score = self.embed_dot_layers[1 + i].forward(h, negative_target)

loss += self.loss_layers[1 + i].forward(score, negative_label)

return loss

def backward(self, dout=1):

dh = 0

# 反向传播时,依次调用各层的 backward() 函数即可

for l0, l1 in zip(self.loss_layers, self.embed_dot_layers):

dscore = l0.backward(dout)

dh += l1.backward(dscore)

return dh

改进版 word2vec 的学习

CBOW 模型的实现

- 相比于之前的简单的

SimpleCBOW类,改进版CBOW类使用了 Embedding 层和 Negative Sampling Loss 层。此外,还将上下文部分扩展为可以处理任意的窗口大小

class CBOW:

def __init__(self, vocab_size, hidden_size, window_size, corpus):

V, H = vocab_size, hidden_size

# 初始化权重 (由于采用了 Embedding 层,输入、输出层的权重大小均为 V x H)

W_in = 0.01 * np.random.randn(V, H).astype('f')

W_out = 0.01 * np.random.randn(V, H).astype('f')

# 生成层

self.in_layers = []

for i in range(2 * window_size):

layer = Embedding(W_in) # 所有 Embedding 层共享同一组权重

self.in_layers.append(layer)

self.ns_loss = NegativeSamplingLoss(W_out, corpus, power=0.75, sample_size=5)

# 将所有的权重和梯度整理到列表中

layers = self.in_layers + [self.ns_loss]

self.params, self.grads = [], []

for layer in layers:

self.params += layer.params

self.grads += layer.grads

# 将单词的分布式表示设置为成员变量

self.word_vecs = W_in

def forward(self, contexts, target):

h = 0

# 由于使用 Embedding 层,contexts 存储的是 word-id 而非 one-hot vector

for i, layer in enumerate(self.in_layers):

h += layer.forward(contexts[:, i])

h *= 1 / len(self.in_layers)

loss = self.ns_loss.forward(h, target)

return loss

def backward(self, dout=1):

dout = self.ns_loss.backward(dout)

dout *= 1 / len(self.in_layers)

for layer in self.in_layers:

layer.backward(dout)

return None

skip-gram 模型的实现

class SkipGram:

def __init__(self, vocab_size, hidden_size, window_size, corpus):

V, H = vocab_size, hidden_size

rn = np.random.randn

# 初始化权重

W_in = 0.01 * rn(V, H).astype('f')

W_out = 0.01 * rn(V, H).astype('f')

# 生成层

self.in_layer = Embedding(W_in)

self.loss_layers = []

for i in range(2 * window_size):

layer = NegativeSamplingLoss(W_out, corpus, power=0.75, sample_size=5)

self.loss_layers.append(layer)

# 将所有的权重和梯度整理到列表中

layers = [self.in_layer] + self.loss_layers

self.params, self.grads = [], []

for layer in layers:

self.params += layer.params

self.grads += layer.grads

# 将单词的分布式表示设置为成员变量

self.word_vecs = W_in

def forward(self, contexts, target):

h = self.in_layer.forward(target)

loss = 0

for i, layer in enumerate(self.loss_layers):

loss += layer.forward(h, contexts[:, i])

return loss

def backward(self, dout=1):

dh = 0

for i, layer in enumerate(self.loss_layers):

dh += layer.backward(dout)

self.in_layer.backward(dh)

return None

CBOW 模型的学习代码 (PTB 数据集)

# coding: utf-8

import sys

sys.path.append('..')

from common import config

# 在用GPU运行时,请打开下面的注释(需要cupy)

# ===============================================

# config.GPU = True

# ===============================================

from common.np import *

import pickle

from common.trainer import Trainer

from common.optimizer import Adam

from cbow import CBOW

from skip_gram import SkipGram

from common.util import create_contexts_target, to_cpu, to_gpu

from dataset import ptb

# 设定超参数

window_size = 5

hidden_size = 100

batch_size = 100

max_epoch = 10

# 读入数据

corpus, word_to_id, id_to_word = ptb.load_data('train')

vocab_size = len(word_to_id)

contexts, target = create_contexts_target(corpus, window_size)

if config.GPU:

contexts, target = to_gpu(contexts), to_gpu(target)

# 生成模型等

model = CBOW(vocab_size, hidden_size, window_size, corpus)

# model = SkipGram(vocab_size, hidden_size, window_size, corpus)

optimizer = Adam()

trainer = Trainer(model, optimizer)

# 开始学习

trainer.fit(contexts, target, max_epoch, batch_size)

trainer.plot()

# 保存必要数据,以便后续使用

word_vecs = model.word_vecs

if config.GPU:

word_vecs = to_cpu(word_vecs)

params = {}

params['word_vecs'] = word_vecs.astype(np.float16)

params['word_to_id'] = word_to_id

params['id_to_word'] = id_to_word

pkl_file = 'cbow_params.pkl' # or 'skipgram_params.pkl'

with open(pkl_file, 'wb') as f:

pickle.dump(params, f, -1)

单词向量的评价方法

# coding: utf-8

import sys

sys.path.append('..')

from common.util import most_similar, analogy

import pickle

pkl_file = 'cbow_params.pkl'

# pkl_file = 'skipgram_params.pkl'

with open(pkl_file, 'rb') as f:

params = pickle.load(f)

word_vecs = params['word_vecs']

word_to_id = params['word_to_id']

id_to_word = params['id_to_word']

most similar task (相似度)

- 单词相似度的评价通常使用人工创建的单词相似度评价集来评估。比如,cat 和 animal 的相似度是 8,cat 和 car 的相似度是 2……类似这样,用 0 ~ 10 的分数人工地对单词之间的相似度打分。然后,比较人给出的分数和 word2vec 给出的余弦相似度,考察它们之间的相关性

下面我们只是简单地查询一下某些单词的 Top5 最相似单词

# most similar task

querys = ['you', 'year', 'car', 'toyota']

for query in querys:

most_similar(query, word_to_id, id_to_word, word_vecs, top=5)

- 这里直接加载作者训练好的参数跑下结果。 查询给定单词的其他 5 个最相似的单词,效果还是不错的:

[query] you

we: 0.6103515625

someone: 0.59130859375

i: 0.55419921875

something: 0.48974609375

anyone: 0.47314453125

[query] year

month: 0.71875

week: 0.65234375

spring: 0.62744140625

summer: 0.6259765625

decade: 0.603515625

[query] car

luxury: 0.497314453125

arabia: 0.47802734375

auto: 0.47119140625

disk-drive: 0.450927734375

travel: 0.4091796875

[query] toyota

ford: 0.55078125

instrumentation: 0.509765625

mazda: 0.49365234375

bethlehem: 0.47509765625

nissan: 0.474853515625

analogy task (类推问题)

类推问题

- 由 word2vec 获得的单词的分布式表示不仅可以将近似单词聚拢在一起,还可以捕获更复杂的模式,其中一个具有代表性的例子是因 “king − man + woman = queen” 而出名的类推问题 (类比问题)。更准确地说,使用 word2vec 的单词的分布式表示,可以通过向量的加减法来解决类推问题

- 如图 4-20 所示,要解决类推问题,需要在单词向量空间上寻找尽可能使 “man → woman” 向量和 “king → ?” 向量接近的单词,也就是找到离向量

vec(‘king’) + vec(‘woman’) − vec(‘man’)最近的单词向量 (可以通过计算余弦相似度求得)

def analogy(a, b, c, word_to_id, id_to_word, word_matrix, top=5, answer=None):

for word in (a, b, c):

if word not in word_to_id:

print('%s is not found' % word)

return

print('\n[analogy] ' + a + ':' + b + ' = ' + c + ':?')

a_vec, b_vec, c_vec = word_matrix[word_to_id[a]], word_matrix[word_to_id[b]], word_matrix[word_to_id[c]]

query_vec = b_vec - a_vec + c_vec

query_vec = normalize(query_vec)

similarity = np.dot(word_matrix, query_vec)

if answer is not None:

print("==>" + answer + ":" + str(np.dot(word_matrix[word_to_id[answer]], query_vec)))

count = 0

for i in (-1 * similarity).argsort():

if np.isnan(similarity[i]):

continue

if id_to_word[i] in (a, b, c):

continue

print(' {0}: {1}'.format(id_to_word[i], similarity[i]))

count += 1

if count >= top:

return

基于类推问题的评价方法

- 基于诸如 “king : queen = man : ?” 这样的类推问题,根据正确率测量单词的分布式表示的优劣。图 4-23 的 Semantics 列显示的是推断单词含义的类推问题(像 “king : queen = actor : actress” 这样询问单词含义的问题)的正确率,Syntax 列是询问单词形态信息的问题,比如 “bad : worst = good : best”

由图 4-23 可知:语料库越大,结果越好 (始终需要大数据);单词向量的维数必须适中 (太大会导致精度变差)

下面也只是简单地查询一下几个类推问题的 Top5 答案

# analogy task

print('-'*50)

analogy('king', 'man', 'queen', word_to_id, id_to_word, word_vecs)

analogy('take', 'took', 'go', word_to_id, id_to_word, word_vecs)

analogy('car', 'cars', 'child', word_to_id, id_to_word, word_vecs)

analogy('good', 'better', 'bad', word_to_id, id_to_word, word_vecs)

- 同样直接加载作者训练好的参数跑一下。这里特意选取了一些效果还不错的例子,说明 word2vec 已经在一定程度上捕捉到了时态、语义、语法等多方面的信息:

[analogy] king:man = queen:?

woman: 5.16015625

veto: 4.9296875

ounce: 4.69140625

earthquake: 4.6328125

successor: 4.609375

[analogy] take:took = go:?

went: 4.55078125

points: 4.25

comes: 3.98046875

oct.: 3.90625

[analogy] car:cars = child:?

children: 5.21875

average: 4.7265625

yield: 4.20703125

cattle: 4.1875

priced: 4.1796875

[analogy] good:better = bad:?

more: 6.6484375

less: 6.0625

rather: 5.21875

slower: 4.734375

greater: 4.671875

参考文献

- 《深度学习进阶: 自然语言处理》

- Distributed Representations of Words and Phrases and their Compositionality

- Efficient Estimation of Word Representations in Vector Space