RNN Week 3: Weather Prediction

This week I only reproduced the code.

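>- **This post is a learning-log entry for the [365天深度学习训练营](https://mp.weixin.qq.com/s/xLjALoOD8HPZcH563En8bQ) (365-Day Deep Learning Training Camp)**

>- **Reference article: 第R3周:LSTM-火灾温度预测 (Week R3: LSTM fire temperature prediction; readable only inside the training camp)**

>- **Author: [K同学啊](https://mp.weixin.qq.com/s/xLjALoOD8HPZcH563En8bQ)**

import numpy as np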

import pandas as pd

import seaborn as sns

import matplotlib.pyplot as plt

import warnings

warnings.filterwarnings('ignore')

from sklearn.model_selection import train_test_split

from sklearn.preprocessing import MinMaxScaler

import tensorflow as tf

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import Dense, Activation, Dropout

from tensorflow.keras.callbacks import EarlyStopping

from sklearn.metrics import classification_report,confusion_matrix

from sklearn.metrics import r2_score

from sklearn.metrics import mean_absolute_error, mean_absolute_percentage_error, mean_squared_error

data = pd.read_csv("D:/DeepLearning/weatherAUS.csv")

df = data.copy()

data.head()

| | Date | Location | MinTemp | MaxTemp | Rainfall | Evaporation | Sunshine | WindGustDir | WindGustSpeed | WindDir9am | ... | Humidity9am | Humidity3pm | Pressure9am | Pressure3pm | Cloud9am | Cloud3pm | Temp9am | Temp3pm | RainToday | RainTomorrow |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 2008-12-01 | Albury | 13.4 | 22.9 | 0.6 | NaN | NaN | W | 44.0 | W | ... | 71.0 | 22.0 | 1007.7 | 1007.1 | 8.0 | NaN | 16.9 | 21.8 | No | No |
| 1 | 2008-12-02 | Albury | 7.4 | 25.1 | 0.0 | NaN | NaN | WNW | 44.0 | NNW | ... | 44.0 | 25.0 | 1010.6 | 1007.8 | NaN | NaN | 17.2 | 24.3 | No | No |
| 2 | 2008-12-03 | Albury | 12.9 | 25.7 | 0.0 | NaN | NaN | WSW | 46.0 | W | ... | 38.0 | 30.0 | 1007.6 | 1008.7 | NaN | 2.0 | 21.0 | 23.2 | No | No |
| 3 | 2008-12-04 | Albury | 9.2 | 28.0 | 0.0 | NaN | NaN | NE | 24.0 | SE | ... | 45.0 | 16.0 | 1017.6 | 1012.8 | NaN | NaN | 18.1 | 26.5 | No | No |
| 4 | 2008-12-05 | Albury | 17.5 | 32.3 | 1.0 | NaN | NaN | W | 41.0 | ENE | ... | 82.0 | 33.0 | 1010.8 | 1006.0 | 7.0 | 8.0 | 17.8 | 29.7 | No | No |

5 rows × 23 columns

data.describe()

| | MinTemp | MaxTemp | Rainfall | Evaporation | Sunshine | WindGustSpeed | WindSpeed9am | WindSpeed3pm | Humidity9am | Humidity3pm | Pressure9am | Pressure3pm | Cloud9am | Cloud3pm | Temp9am | Temp3pm |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| count | 143975.000000 | 144199.000000 | 142199.000000 | 82670.000000 | 75625.000000 | 135197.000000 | 143693.000000 | 142398.000000 | 142806.000000 | 140953.000000 | 130395.00000 | 130432.000000 | 89572.000000 | 86102.000000 | 143693.000000 | 141851.00000 |
| mean | 12.194034 | 23.221348 | 2.360918 | 5.468232 | 7.611178 | 40.035230 | 14.043426 | 18.662657 | 68.880831 | 51.539116 | 1017.64994 | 1015.255889 | 4.447461 | 4.509930 | 16.990631 | 21.68339 |
| std | 6.398495 | 7.119049 | 8.478060 | 4.193704 | 3.785483 | 13.607062 | 8.915375 | 8.809800 | 19.029164 | 20.795902 | 7.10653 | 7.037414 | 2.887159 | 2.720357 | 6.488753 | 6.93665 |
| min | -8.500000 | -4.800000 | 0.000000 | 0.000000 | 0.000000 | 6.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 980.50000 | 977.100000 | 0.000000 | 0.000000 | -7.200000 | -5.40000 |
| 25% | 7.600000 | 17.900000 | 0.000000 | 2.600000 | 4.800000 | 31.000000 | 7.000000 | 13.000000 | 57.000000 | 37.000000 | 1012.90000 | 1010.400000 | 1.000000 | 2.000000 | 12.300000 | 16.60000 |
| 50% | 12.000000 | 22.600000 | 0.000000 | 4.800000 | 8.400000 | 39.000000 | 13.000000 | 19.000000 | 70.000000 | 52.000000 | 1017.60000 | 1015.200000 | 5.000000 | 5.000000 | 16.700000 | 21.10000 |
| 75% | 16.900000 | 28.200000 | 0.800000 | 7.400000 | 10.600000 | 48.000000 | 19.000000 | 24.000000 | 83.000000 | 66.000000 | 1022.40000 | 1020.000000 | 7.000000 | 7.000000 | 21.600000 | 26.40000 |
| max | 33.900000 | 48.100000 | 371.000000 | 145.000000 | 14.500000 | 135.000000 | 130.000000 | 87.000000 | 100.000000 | 100.000000 | 1041.00000 | 1039.600000 | 9.000000 | 9.000000 | 40.200000 | 46.70000 |

data.dtypes

| Column | dtype |
|---|---|
| Date | object |
| Location | object |
| MinTemp | float64 |
| MaxTemp | float64 |
| Rainfall | float64 |
| Evaporation | float64 |
| Sunshine | float64 |
| WindGustDir | object |
| WindGustSpeed | float64 |
| WindDir9am | object |
| WindDir3pm | object |
| WindSpeed9am | float64 |
| WindSpeed3pm | float64 |
| Humidity9am | float64 |
| Humidity3pm | float64 |
| Pressure9am | float64 |
| Pressure3pm | float64 |
| Cloud9am | float64 |
| Cloud3pm | float64 |
| Temp9am | float64 |
| Temp3pm | float64 |
| RainToday | object |
| RainTomorrow | object |

dtype: object

data['Date'] = pd.to_datetime(data['Date'])

data['Date']

0 2008-12-01

1 2008-12-02

2 2008-12-03

3 2008-12-04

4 2008-12-05

...

145455 2017-06-21

145456 2017-06-22

145457 2017-06-23

145458 2017-06-24

145459 2017-06-25

Name: Date, Length: 145460, dtype: datetime64[ns]

data['year'] = data['Date'].dt.year

data['Month'] = data['Date'].dt.month

data['day'] = data['Date'].dt.day

data.head()

| | Date | Location | MinTemp | MaxTemp | Rainfall | Evaporation | Sunshine | WindGustDir | WindGustSpeed | WindDir9am | ... | Pressure3pm | Cloud9am | Cloud3pm | Temp9am | Temp3pm | RainToday | RainTomorrow | year | Month | day |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 2008-12-01 | Albury | 13.4 | 22.9 | 0.6 | NaN | NaN | W | 44.0 | W | ... | 1007.1 | 8.0 | NaN | 16.9 | 21.8 | No | No | 2008 | 12 | 1 |
| 1 | 2008-12-02 | Albury | 7.4 | 25.1 | 0.0 | NaN | NaN | WNW | 44.0 | NNW | ... | 1007.8 | NaN | NaN | 17.2 | 24.3 | No | No | 2008 | 12 | 2 |
| 2 | 2008-12-03 | Albury | 12.9 | 25.7 | 0.0 | NaN | NaN | WSW | 46.0 | W | ... | 1008.7 | NaN | 2.0 | 21.0 | 23.2 | No | No | 2008 | 12 | 3 |
| 3 | 2008-12-04 | Albury | 9.2 | 28.0 | 0.0 | NaN | NaN | NE | 24.0 | SE | ... | 1012.8 | NaN | NaN | 18.1 | 26.5 | No | No | 2008 | 12 | 4 |
| 4 | 2008-12-05 | Albury | 17.5 | 32.3 | 1.0 | NaN | NaN | W | 41.0 | ENE | ... | 1006.0 | 7.0 | 8.0 | 17.8 | 29.7 | No | No | 2008 | 12 | 5 |

5 rows × 26 columns
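The date handling above can be tried in isolation. The following is a minimal sketch on a two-row toy frame (the name `toy` is hypothetical; the two dates are the first and last ones shown in the `data['Date']` output), showing how the `.dt` accessor splits a datetime column into calendar parts.

```python
import pandas as pd

# Toy frame standing in for the weather data; `toy` is a hypothetical name.
toy = pd.DataFrame({"Date": ["2008-12-01", "2017-06-25"]})

# Strings must be parsed first; the .dt accessor only works on datetime columns.
toy["Date"] = pd.to_datetime(toy["Date"])

# Extract calendar components into separate numeric columns.
toy["year"] = toy["Date"].dt.year
toy["Month"] = toy["Date"].dt.month
toy["day"] = toy["Date"].dt.day

print(toy)
```

Once the parts are stored as separate columns, the original `Date` column carries no extra information for the model, which is presumably why the post drops it next.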

data.drop('Date',axis=1,inplace=True)

data.columns

Index(['Location', 'MinTemp', 'MaxTemp', 'Rainfall', 'Evaporation', 'Sunshine',

'WindGustDir', 'WindGustSpeed', 'WindDir9am', 'WindDir3pm',

'WindSpeed9am', 'WindSpeed3pm', 'Humidity9am', 'Humidity3pm',

'Pressure9am', 'Pressure3pm', 'Cloud9am', 'Cloud3pm', 'Temp9am',

'Temp3pm', 'RainToday', 'RainTomorrow', 'year', 'Month', 'day'],

dtype='object')

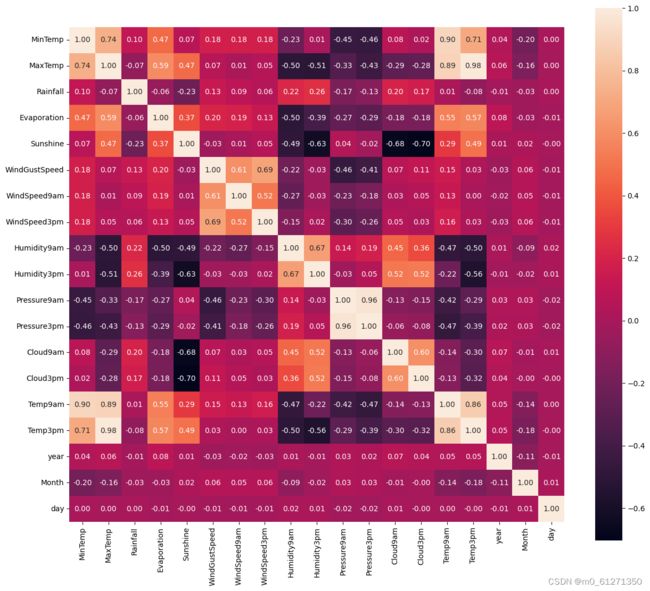

plt.figure(figsize=(15,13))

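# corr() gives the pairwise correlation between the columns of data;

# only numeric columns are kept, because recent pandas versions raise an error on string columns

ax = sns.heatmap(data.select_dtypes(include='number').corr(), square=True, annot=True, fmt='.2f')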

ax.set_xticklabels(ax.get_xticklabels(), rotation=90)

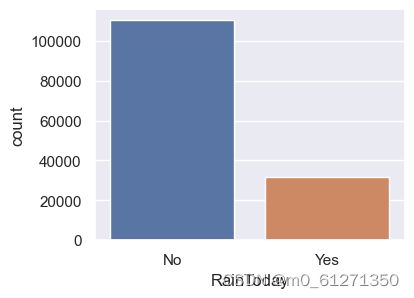

plt.show()

sns.set(style="darkgrid")

plt.figure(figsize=(4,3))

sns.countplot(x='RainTomorrow',data=data)

plt.figure(figsize=(4,3))

sns.countplot(x='RainToday',data=data)

x=pd.crosstab(data['RainTomorrow'],data['RainToday'])

x

| RainTomorrow (rows) / RainToday (columns) | No | Yes |
|---|---|---|
| No | 92728 | 16858 |
| Yes | 16604 | 14597 |

y=x/x.transpose().sum().values.reshape(2,1)*100

y

y.plot(kind="bar",figsize=(4,3),color=['#006666','#d279a6']);
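The division by the reshaped row totals turns each row of the crosstab into percentages. The sketch below rebuilds the 2×2 table from the counts shown above and checks that the reshape-based arithmetic matches a row-wise `DataFrame.div`; the names `y_manual` and `y_div` are illustrative only.

```python
import numpy as np
import pandas as pd

# Counts copied from the crosstab above (rows: RainTomorrow, columns: RainToday).
x = pd.DataFrame(
    [[92728, 16858], [16604, 14597]],
    index=pd.Index(["No", "Yes"], name="RainTomorrow"),
    columns=pd.Index(["No", "Yes"], name="RainToday"),
)

# Same arithmetic as in the post: divide each row by its row total.
y_manual = x / x.transpose().sum().values.reshape(2, 1) * 100

# Equivalent form that does not hard-code the number of rows.
y_div = x.div(x.sum(axis=1), axis=0) * 100

print(y_manual.round(2))
```

Each row now sums to 100. Roughly 47% of the days that were followed by rain were themselves rainy, against roughly 15% of the days that were followed by a dry day.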

x=pd.crosstab(data['Location'],data['RainToday'])

# Percentage of rainy and non-rainy days for each city

y=x/x.transpose().sum().values.reshape((-1, 1))*100

# Sort the cities by their percentage of rainy days

y=y.sort_values(by='Yes',ascending=True )

color=['#cc6699','#006699','#006666','#862d86','#ff9966' ]

y.Yes.plot(kind="barh",figsize=(15,20),color=color)

data.columns

Index(['Location', 'MinTemp', 'MaxTemp', 'Rainfall', 'Evaporation', 'Sunshine',

'WindGustDir', 'WindGustSpeed', 'WindDir9am', 'WindDir3pm',

'WindSpeed9am', 'WindSpeed3pm', 'Humidity9am', 'Humidity3pm',

'Pressure9am', 'Pressure3pm', 'Cloud9am', 'Cloud3pm', 'Temp9am',

'Temp3pm', 'RainToday', 'RainTomorrow', 'year', 'Month', 'day'],

dtype='object')

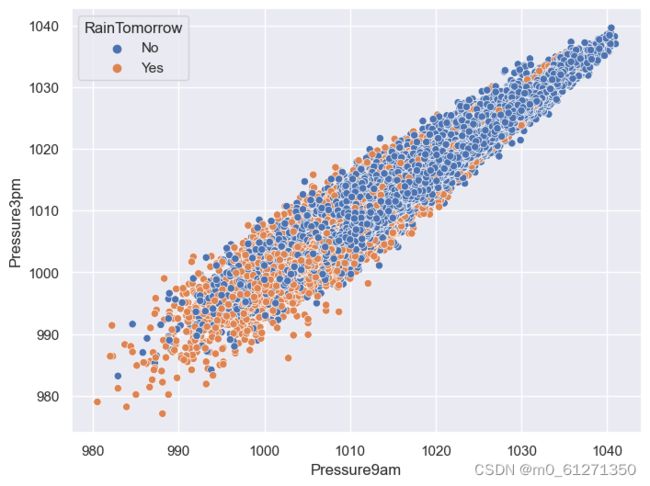

plt.figure(figsize=(8,6))

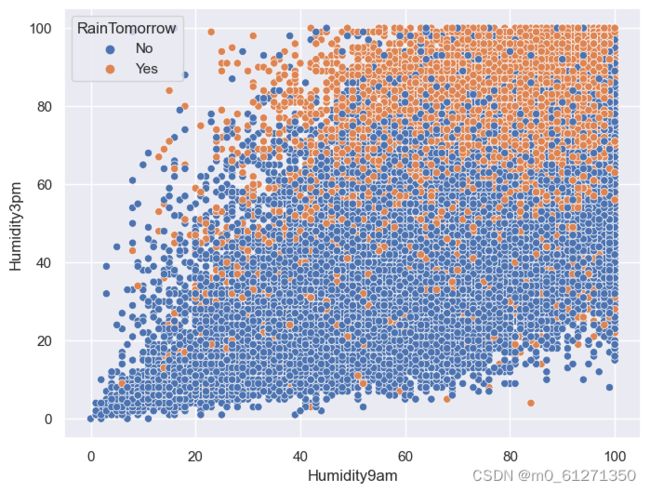

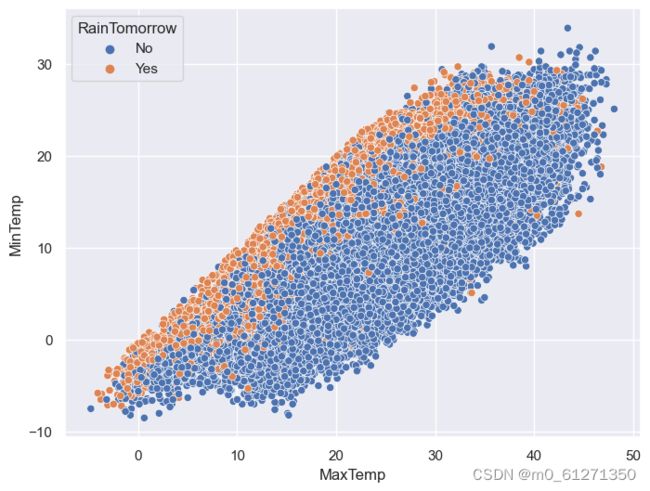

sns.scatterplot(data=data,x='Pressure9am',y='Pressure3pm',hue='RainTomorrow');

plt.figure(figsize=(8,6))

sns.scatterplot(data=data,x='Humidity9am',y='Humidity3pm',hue='RainTomorrow');

plt.figure(figsize=(8,6))

sns.scatterplot(x='MaxTemp', y='MinTemp', data=data, hue='RainTomorrow');

# Percentage of missing values in each column

data.isnull().sum()/data.shape[0]*100

| Column | Missing (%) |
|---|---|
| Location | 0.000000 |
| MinTemp | 1.020899 |
| MaxTemp | 0.866905 |
| Rainfall | 2.241853 |
| Evaporation | 43.166506 |
| Sunshine | 48.009762 |
| WindGustDir | 7.098859 |
| WindGustSpeed | 7.055548 |
| WindDir9am | 7.263853 |
| WindDir3pm | 2.906641 |
| WindSpeed9am | 1.214767 |
| WindSpeed3pm | 2.105046 |
| Humidity9am | 1.824557 |
| Humidity3pm | 3.098446 |
| Pressure9am | 10.356799 |
| Pressure3pm | 10.331363 |
| Cloud9am | 38.421559 |
| Cloud3pm | 40.807095 |
| Temp9am | 1.214767 |
| Temp3pm | 2.481094 |
| RainToday | 2.241853 |
| RainTomorrow | 2.245978 |
| year | 0.000000 |
| Month | 0.000000 |
| day | 0.000000 |

dtype: float64
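As a small illustration of the missing-value percentage, here is a sketch on a made-up four-row frame (`toy` and its values are assumptions, not rows from the dataset). It also shows that taking the mean of the boolean mask gives the same number.

```python
import numpy as np
import pandas as pd

# Made-up frame: Sunshine misses 2 of 4 values, MinTemp 1 of 4, Location none.
toy = pd.DataFrame({
    "Sunshine": [7.5, np.nan, np.nan, 9.1],
    "MinTemp": [13.4, 7.4, np.nan, 9.2],
    "Location": ["Albury", "Albury", "Albury", "Albury"],
})

# Form used in the post: count of missing entries over the number of rows.
missing_pct = toy.isnull().sum() / toy.shape[0] * 100

# Equivalent shortcut: the mean of a boolean mask is the fraction of True values.
missing_pct_alt = toy.isnull().mean() * 100

print(missing_pct)
```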

# Fill missing values with values drawn at random from the observed entries of the same column

lst=['Evaporation','Sunshine','Cloud9am','Cloud3pm']

for col in lst:

    fill_list = data[col].dropna()

    data[col] = data[col].fillna(pd.Series(np.random.choice(fill_list, size=len(data.index))))

s = (data.dtypes == "object")

object_cols = list(s[s].index)

object_cols

['Location', 'WindGustDir', 'WindDir9am', 'WindDir3pm', 'RainToday', 'RainTomorrow']
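The random-fill step for the four sparsest columns can be sketched on a toy column as follows. The seeded generator and the names `toy`, `observed`, `candidates` and `filled` are assumptions made for repeatability and illustration; the post itself calls `np.random.choice` unseeded.

```python
import numpy as np
import pandas as pd

# Seeded generator so the sketch is repeatable (an assumption, not in the post).
rng = np.random.default_rng(0)

# Made-up column with three missing entries.
toy = pd.DataFrame({"Sunshine": [7.5, np.nan, 9.1, np.nan, 3.2, np.nan]})
observed = toy["Sunshine"].dropna()

# Draw one candidate per row and index it like the frame,
# so that fillna aligns candidates with rows by index label.
candidates = pd.Series(rng.choice(observed.to_numpy(), size=len(toy)), index=toy.index)

# Only the missing positions take a candidate; observed values stay untouched.
filled = toy["Sunshine"].fillna(candidates)

print(filled)
```

The code in the post builds the candidate Series without an explicit index, which works because `data` still has its default 0..n-1 index. Passing `index=` as above keeps the alignment correct even after rows have been filtered or reordered. Sampling from observed values roughly preserves each column's distribution, which a single constant such as the median would not.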

# data[i].mode()[0] returns the most frequent value (the mode)

# The result is assigned back to the column: fillna(..., inplace=True) on a selected column may not update the DataFrame in newer pandas versions

for i in object_cols:

    data[i] = data[i].fillna(data[i].mode()[0])

t = (data.dtypes == "float64")

num_cols = list(t[t].index)

num_cols

['MinTemp', 'MaxTemp', 'Rainfall', 'Evaporation', 'Sunshine', 'WindGustSpeed', 'WindSpeed9am', 'WindSpeed3pm', 'Humidity9am', 'Humidity3pm', 'Pressure9am', 'Pressure3pm', 'Cloud9am', 'Cloud3pm', 'Temp9am', 'Temp3pm']

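# .median() returns the median of the column

for i in num_cols:

    data[i] = data[i].fillna(data[i].median())

data.isnull().sum()

| Column | Missing count |
|---|---|
| Location | 0 |
| MinTemp | 0 |
| MaxTemp | 0 |
| Rainfall | 0 |
| Evaporation | 0 |
| Sunshine | 0 |
| WindGustDir | 0 |
| WindGustSpeed | 0 |
| WindDir9am | 0 |
| WindDir3pm | 0 |
| WindSpeed9am | 0 |
| WindSpeed3pm | 0 |
| Humidity9am | 0 |
| Humidity3pm | 0 |
| Pressure9am | 0 |
| Pressure3pm | 0 |
| Cloud9am | 0 |
| Cloud3pm | 0 |
| Temp9am | 0 |
| Temp3pm | 0 |
| RainToday | 0 |
| RainTomorrow | 0 |
| year | 0 |
| Month | 0 |
| day | 0 |

dtype: int64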
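The two fill rules used for the remaining columns (mode for categorical columns, median for numeric ones) can be checked on a toy frame. The values below are made up for illustration and `toy` is a hypothetical name.

```python
import numpy as np
import pandas as pd

# Made-up frame with one missing entry per column.
toy = pd.DataFrame({
    "WindGustDir": ["W", "W", None, "NE"],
    "Rainfall": [0.6, np.nan, 0.0, 1.0],
})

# Categorical column: fill with the most frequent value ("W" here).
toy["WindGustDir"] = toy["WindGustDir"].fillna(toy["WindGustDir"].mode()[0])

# Numeric column: fill with the median of the observed values (0.6 here).
toy["Rainfall"] = toy["Rainfall"].fillna(toy["Rainfall"].median())

print(toy)
```

The median is less sensitive than the mean to extreme values, which matters for a column like `Rainfall`, whose maximum (371.0) is far above its 75th percentile (0.8) in the `describe()` output.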

from sklearn.preprocessing import LabelEncoder

label_encoder = LabelEncoder()

for i in object_cols:

    data[i] = label_encoder.fit_transform(data[i])

X = data.drop(['RainTomorrow','day'],axis=1).values

y = data['RainTomorrow'].values

X_train, X_test, y_train, y_test = train_test_split(X,y,test_size=0.25,random_state=101)

scaler = MinMaxScaler()

scaler.fit(X_train)

X_train = scaler.transform(X_train)

X_test = scaler.transform(X_test)

from tensorflow.keras.optimizers import Adam
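The scaler is fitted on the training split only and then applied to both splits, so no test-set statistics enter the preprocessing. A minimal sketch with made-up numbers (the arrays and the `_s` suffixed names are assumptions) shows the consequence: test values outside the training range can land outside [0, 1].

```python
import numpy as np
from sklearn.preprocessing import MinMaxScaler

# Made-up data: two features, three training rows and one test row.
X_train = np.array([[0.0, 10.0], [5.0, 20.0], [10.0, 30.0]])
X_test = np.array([[12.0, 15.0]])

scaler = MinMaxScaler()
scaler.fit(X_train)                 # learns per-feature min and max from the training rows only

X_train_s = scaler.transform(X_train)
X_test_s = scaler.transform(X_test)  # reuses the training min and max

print(X_train_s)
print(X_test_s)
```

The first test feature (12.0) exceeds the training maximum (10.0), so it scales to a value above 1. That is expected behaviour and not an error.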

model = Sequential()

model.add(Dense(units=24,activation='tanh',))

model.add(Dense(units=18,activation='tanh'))

model.add(Dense(units=23,activation='tanh'))

model.add(Dropout(0.5))

model.add(Dense(units=12,activation='tanh'))

model.add(Dropout(0.2))

model.add(Dense(units=1,activation='sigmoid'))
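To get a feel for the size of this network, the trainable parameter count can be worked out by hand. The sketch below assumes 23 input features (the 25 columns listed by `data.columns` minus `RainTomorrow` and `day`) and uses a hypothetical helper `dense_param_counts`. Dropout layers add no parameters.

```python
# 25 columns remain after dropping Date; removing RainTomorrow and day leaves 23 inputs.
n_inputs = 23
layer_units = [24, 18, 23, 12, 1]  # Dense layers in the order they are added


def dense_param_counts(n_in, units):
    """Weights plus biases for each fully connected layer in a stack."""
    counts = []
    for n_out in units:
        counts.append(n_in * n_out + n_out)  # weight matrix + bias vector
        n_in = n_out                         # this layer's output feeds the next layer
    return counts


counts = dense_param_counts(n_inputs, layer_units)
total = sum(counts)
print(counts, total)
```

Under these assumptions the stack has fewer than two thousand trainable parameters, which is small compared with the number of training rows.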

optimizer = tf.keras.optimizers.Adam(learning_rate=1e-4)

model.compile(loss='binary_crossentropy', optimizer=optimizer, metrics=["accuracy"])

early_stop = EarlyStopping(monitor='val_loss', mode='min', min_delta=0.001, verbose=1, patience=25, restore_best_weights=True)

model.fit(x=X_train, y=y_train, validation_data=(X_test, y_test), verbose=1, callbacks=[early_stop], epochs=10, batch_size=32)

Training log (3410/3410 steps per epoch, 2ms/step):

| Epoch | Time | loss | accuracy | val_loss | val_accuracy |
|---|---|---|---|---|---|
| 1/10 | 11s | 0.4621 | 0.7971 | 0.3905 | 0.8301 |
| 2/10 | 8s | 0.3989 | 0.8307 | 0.3805 | 0.8353 |
| 3/10 | 8s | 0.3908 | 0.8342 | 0.3775 | 0.8366 |
| 4/10 | 8s | 0.3870 | 0.8363 | 0.3752 | 0.8375 |
| 5/10 | 8s | 0.3846 | 0.8365 | 0.3744 | 0.8370 |
| 6/10 | 8s | 0.3831 | 0.8373 | 0.3734 | 0.8373 |
| 7/10 | 8s | 0.3820 | 0.8377 | 0.3724 | 0.8387 |
| 8/10 | 8s | 0.3807 | 0.8382 | 0.3725 | 0.8391 |
| 9/10 | 8s | 0.3798 | 0.8382 | 0.3713 | 0.8391 |
| 10/10 | 8s | 0.3798 | 0.8375 | 0.3711 | 0.8389 |
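With `patience=25` and only 10 epochs, the early-stopping callback can never fire in this run. The sketch below is a simplified plain-Python model of a min-mode early-stopping rule, not the Keras implementation itself, and `early_stop_epoch` is a hypothetical helper. It replays the `val_loss` values from the training log to show how `patience` and `min_delta` interact.

```python
def early_stop_epoch(val_losses, patience, min_delta=0.0):
    """Return the 1-based epoch at which training would stop, or None.

    Simplified 'min'-mode rule: an epoch counts as an improvement only if
    it beats the best value seen so far by more than min_delta.
    """
    best = float("inf")
    wait = 0
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best - min_delta:
            best = loss
            wait = 0          # improvement: reset the patience counter
        else:
            wait += 1         # no sufficient improvement this epoch
            if wait >= patience:
                return epoch
    return None


# val_loss values copied from the training log above.
val_losses = [0.3905, 0.3805, 0.3775, 0.3752, 0.3744,
              0.3734, 0.3724, 0.3725, 0.3713, 0.3711]

print(early_stop_epoch(val_losses, patience=25, min_delta=0.001))
print(early_stop_epoch(val_losses, patience=1, min_delta=0.001))
```

Under this simplified rule, `patience=25` never stops the 10-epoch run, while `patience=1` would stop at epoch 5, the first epoch whose `val_loss` fails to improve on the best value by more than 0.001. For the callback to have any effect here, either `epochs` would need to be raised well above 25 or `patience` lowered.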

import matplotlib.pyplot as plt

acc = model.history.history['accuracy']

val_acc = model.history.history['val_accuracy']

loss = model.history.history['loss']

val_loss = model.history.history['val_loss']

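# One point per completed epoch (10 here); len(acc) stays correct if early stopping ends training sooner

epochs_range = range(len(acc))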

plt.figure(figsize=(14, 4))

plt.subplot(1, 2, 1)

plt.plot(epochs_range, acc, label='Training Accuracy')

plt.plot(epochs_range, val_acc, label='Validation Accuracy')

plt.legend(loc='lower right')

plt.title('Training and Validation Accuracy')

plt.subplot(1, 2, 2)

plt.plot(epochs_range, loss, label='Training Loss')

plt.plot(epochs_range, val_loss, label='Validation Loss')

plt.legend(loc='upper right')

plt.title('Training and Validation Loss')

plt.show()