Python OpenCV image notes

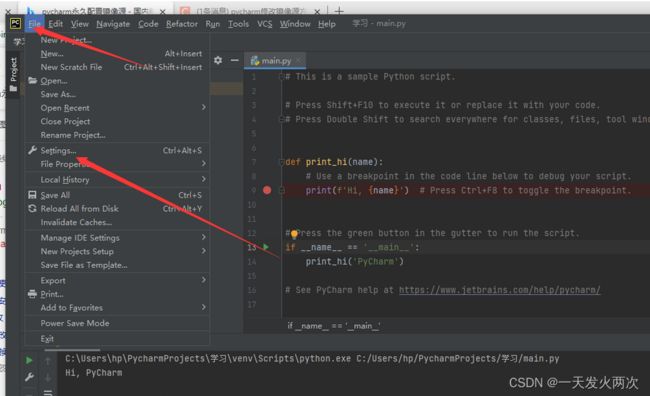

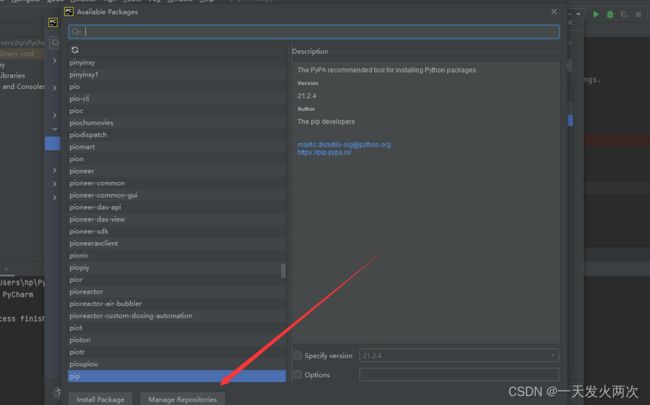

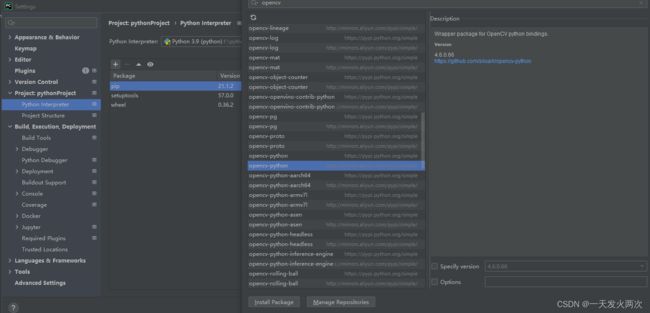

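Downloading dependencies

Choose a mirror so that downloads are faster.

Switching the package index

Tsinghua: https://pypi.tuna.tsinghua.edu.cn/simple

Alibaba Cloud: http://mirrors.aliyun.com/pypi/simple/

University of Science and Technology of China: https://pypi.mirrors.ustc.edu.cn/simple/

Installing the dependencies

First program: opening an image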

import cv2

def start():

img = cv2.imread('C:\\Users\\Zz\\Pictures\\VRChat\\2022-06\\vr.png')

cv2.imshow('展示',img)

#等待一段时间

cv2.waitKey(0)

if __name__ == '__main__':

start()

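Result

Creating and showing windows

namedWindow(winname,flag) creates a window with the given name; flag sets the window type

imshow() shows an image in a window

destroyAllWindows() destroys all windows

resizeWindow() sets the window size

Values of flag in namedWindow(winname,flag)

- WINDOW_NORMAL: the window can be resized by dragging

- WINDOW_AUTOSIZE: the user cannot resize the window; its size follows the image size.

- WINDOW_OPENGL: the window supports OpenGL. OpenGL (Open Graphics Library) is a cross-language, cross-platform API for rendering 2D and 3D vector graphics.

- WINDOW_FULLSCREEN: meant to show the window full screen, but in practice passing it here did not produce a full-screen window. Full screen is normally switched on afterwards with setWindowProperty(winname, WND_PROP_FULLSCREEN, WINDOW_FULLSCREEN).

- WINDOW_FREERATIO: the image can be shown at any aspect ratio, i.e. the ratio is not fixed

- WINDOW_KEEPRATIO: the aspect ratio of the image is kept.

- WINDOW_GUI_EXPANDED: the window can have a status bar and a toolbar.

- WINDOW_GUI_NORMAL: old-style window without a status bar or toolbar

Demo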

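import cv2
import numpy as np

def start():
    cv2.namedWindow("窗口", cv2.WINDOW_NORMAL)
    cv2.resizeWindow("窗口", 1920, 1080)
    # imshow needs an image (a NumPy array), so show a blank black one
    blank = np.zeros((1080, 1920, 3), np.uint8)
    cv2.imshow("窗口", blank)
    key = cv2.waitKey(0)
    if key & 0xFF == ord('q'):
        cv2.destroyAllWindows()

if __name__ == '__main__':
    start()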

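Loading and showing an image

imread(path,flag)

Values of flag in imread(path,flag)

cv2.IMREAD_COLOR: the default; reads a color image and ignores the alpha channel. The literal 1 can be passed instead.

cv2.IMREAD_GRAYSCALE: reads the image as grayscale. The literal 0 can be passed instead.

cv2.IMREAD_UNCHANGED: reads the image as stored, including the alpha channel. The literal -1 can be passed instead.

PS: the alpha channel (A channel) is an 8-bit grayscale channel. Its 256 gray levels record the transparency of the image and define transparent, opaque and semi-transparent regions: black means fully transparent, white means opaque, gray means semi-transparent.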

演示:

import cv2

def start():

cv2.namedWindow("窗口",cv2.WINDOW_NORMAL)

img = cv2.imread('C:\\Users\\Zz\\Pictures\\VRChat\\2022-06\\vr.png',cv2.IMREAD_GRAYSCALE)

cv2.imshow("窗口",img)

key=cv2.waitKey(0)

if(key & 0xFF==ord('q')):

cv2.destroyAllWindows()

if __name__ == '__main__':

start()

Saving an image

imwrite(name,img)

name: the file name to save to

img: the image, a Mat (a NumPy array in Python)

import cv2

def start():

cv2.namedWindow("窗口",cv2.WINDOW_NORMAL)

img = cv2.imread('C:\\Users\\Zz\\Pictures\\VRChat\\2022-06\\vr.png',cv2.IMREAD_GRAYSCALE)

cv2.imshow("窗口",img)

key=cv2.waitKey(0)

print(key)

if(key & 0xFF==ord('q')):

cv2.destroyAllWindows()

elif(key & 0xFF==ord('s')):

cv2.imwrite("C:\\Users\\Zz\\Desktop\\img.png",img)

cv2.destroyAllWindows()

if __name__ == '__main__':

start()

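Using a camera

VideoCapture(): the video capture object. Device numbers usually start from 0; the second argument is the capture API, which differs between platforms.

cap.read(): reads a video frame. It returns two values: a status flag (True when a frame was read) and the frame itself.

cap.release(): releases the resource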

import cv2

def start():

# 创建窗口

cv2.namedWindow("窗口",cv2.WINDOW_NORMAL)

#获取视频设备

cap=cv2.VideoCapture("http://admin:[email protected]:8081")

while True:

ret,frame=cap.read()

cv2.imshow("窗口",frame)

key=cv2.waitKey(1)

if (key & 0xFF == ord('q')):

break

cap.release()

cv2.destroyAllWindows()

if __name__ == '__main__':

start()

从多媒体文件中读取视频帧

import cv2

def start():

# 创建窗口

cv2.namedWindow("窗口",cv2.WINDOW_NORMAL)

#获取多媒体视频

cap=cv2.VideoCapture("C:\\Users\\Zz\\Desktop\\2.mp4")

while True:

ret,frame=cap.read()

cv2.imshow("窗口",frame)

key=cv2.waitKey(10)

if (key & 0xFF == ord('q')):

break

cap.release()

cv2.destroyAllWindows()

if __name__ == '__main__':

start()

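Recording video frames to a file

VideoWriter: argument 1 is the output file, argument 2 is the codec (built with VideoWriter_fourcc), argument 3 is the frame rate, argument 4 is the frame size (width, height), argument 5 is whether the frames are in color (default true)

write

release

cv2.VideoWriter_fourcc('M','J','P','G') or cv2.VideoWriter_fourcc(*'MJPG')

Demo code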

import cv2

def start():

#fourcc= cv2.VideoWriter_fourcc('M','J','P','G')

#因为这里保存的是视频,所以用MP4v

fourcc = cv2.VideoWriter_fourcc('m', 'p', '4', 'v')

vw=cv2.VideoWriter("C:\\Users\\Zz\\Desktop\\3.mp4",fourcc,25,(1920,1080))

# 创建窗口

cv2.namedWindow("窗口",cv2.WINDOW_NORMAL)

#获取多媒体视频

cap=cv2.VideoCapture("C:\\Users\\Zz\\Desktop\\2.mp4")

while True:

ret,frame=cap.read()

cv2.imshow("窗口",frame)

#写数据到多媒体

vw.write(frame)

key=cv2.waitKey(1)

if (key & 0xFF == ord('q')):

break

cap.release()

cv2.destroyAllWindows()

#释放资源

vw.release()

if __name__ == '__main__':

start()

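Improving the code

1. The video stretches the window

The video resolution is large and stretches the window, so we can set the window size inside the loop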

while True:

ret,frame=cap.read()

cv2.imshow("窗口",frame)

cv2.resizeWindow("窗口",1920,1080)

#写数据到多媒体

vw.write(frame)

key=cv2.waitKey(1)

if (key & 0xFF == ord('q')):

break

2. Checking whether the capture was opened

isOpened()

while cap.isOpened():

ret,frame=cap.read()

if ret == True:

cv2.imshow("窗口",frame)

cv2.resizeWindow("窗口",1920,1080)

#写数据到多媒体

vw.write(frame)

key=cv2.waitKey(1)

if (key & 0xFF == ord('q')):

break

else:

break

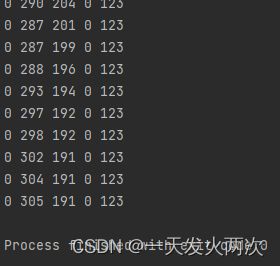

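Handling the mouse in OpenCV

setMouseCallback(winname,callback,userdata): the first argument is the window name, the second is the callback function, the third is data passed through to the callback

callback(event,x,y,flags,userdata): event is the mouse event (move, left button down, ...); x,y are the mouse coordinates; flags describes mouse buttons and modifier keys held down

Hands-on code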

import cv2

import numpy as np

def mouse_callback(event,x,y,flags,userdata):

print(event,x,y,flags,userdata)

def start():

cv2.namedWindow("mouse",cv2.WINDOW_NORMAL)

cv2.resizeWindow('mouse',640,360)

#设置鼠标回调

cv2.setMouseCallback('mouse',mouse_callback,"123")

#生成纯黑照片

img=np.zeros((360,640,3),np.uint8)

while True:

cv2.imshow("mouse",img)

key = cv2.waitKey(1)

if key & 0xFF ==ord('q'):

break

if __name__ == '__main__':

start()

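TrackBar control

createTrackbar(trackbarname,winname,value,count,callback,userdata): the first argument is the name of the control, the second is the window name, the third is the initial value,

the fourth is the maximum value (the minimum is always 0). The fifth is the callback, followed by the data passed to it

getTrackbarPos(trackbarname,winname) returns the current value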

import cv2

import numpy as np

# OpenCV calls the trackbar callback with the new position, so it must accept one argument

def callback(value):

pass

def start():

cv2.namedWindow('tracker',cv2.WINDOW_NORMAL)

cv2.createTrackbar('R','tracker',0,255,callback)

cv2.createTrackbar('G', 'tracker', 0, 255, callback)

cv2.createTrackbar('B', 'tracker', 0, 255, callback)

img=np.zeros((480,460,3),np.uint8)

while True:

r=cv2.getTrackbarPos('R',"tracker")

g=cv2.getTrackbarPos('G', "tracker")

b=cv2.getTrackbarPos('B', "tracker")

#操作所有像素

img[:] = [b,g,r]

cv2.imshow("tracker", img)

key=cv2.waitKey(10)

if (key &0xFF ==ord('q')):

break

cv2.destroyAllWindows()

if __name__ == '__main__':

start()

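Color spaces in OpenCV

- Color space conversion

- Pixel access

- Matrix addition, subtraction, multiplication and division

- Drawing basic shapes

Color spaces

- RGB: the color space closest to how the eye and displays work (mainly used in display hardware)

- OpenCV uses BGR by default

- HSV/HSB/HSL: HSV and HSB are two names for the same model (hue, saturation, value/brightness); HSL uses lightness as its third component

- YUV: mainly used for video, where it saves storage space

HSV (the one mostly used with OpenCV)

- Hue: the color itself, such as red or blue

- Saturation: the purity of the color

- Value: brightness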

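HSL

- Hue: the color itself, such as red or blue

- Saturation: the purity of the color

- Lightness: how light the color is

YUV

Y describes the brightness of a pixel (the black-and-white picture), U and V describe its color. Three common sampling formats:

- YUV4:2:0

- YUV4:2:2

- YUV4:4:4

Color space conversion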

import cv2

import numpy as np

# OpenCV calls the trackbar callback with the new position, so it must accept one argument

def callback(value):

pass

def start():

cv2.namedWindow('color',cv2.WINDOW_NORMAL)

img = cv2.imread('C:\\Users\\Zz\\Pictures\\VRChat\\2022-06\\vr.png')

cv2.imshow("color",img)

colorspace = [cv2.COLOR_BGR2RGBA,cv2.COLOR_BGR2BGRA,cv2.COLOR_BGR2GRAY,cv2.COLOR_BGR2HSV,cv2.COLOR_BGR2YUV]

cv2.createTrackbar('curcolor','color',0,len(colorspace)-1,callback)

while True:

index=cv2.getTrackbarPos('curcolor','color')

#颜色转换API

cvt_img=cv2.cvtColor(img,colorspace[index])

cv2.imshow('color',cvt_img)

key=cv2.waitKey(10)

if (key &0xFF ==ord('q')):

break

cv2.destroyAllWindows()

if __name__ == '__main__':

start()

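NumPy

In Python, the matrices OpenCV works with are NumPy arrays

NumPy is a highly optimized numerical library for Python

Basic NumPy operations

- Creating matrices

- Indexing and assignment [y,x]

- Getting a sub-matrix [:,:]

Create a matrix: array()

Create an all-zeros or all-ones matrix: zeros() / ones()

Create an array filled with one value: full()

Create an identity matrix: identity() / eye()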

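1.array

- a=np.array([2,3,4])

- c=np.array([[2,3,4],[6,5,4]])

2.zeros

- a=np.zeros((480,640,3),np.uint8)

(480,640,3) is (number of rows, number of columns, number of channels/layers)

np.uint8 is the data type of the matrix elements

3.ones

- a=np.ones((480,640,3),np.uint8)

(480,640,3) is (number of rows, number of columns, number of channels/layers)

np.uint8 is the data type of the matrix elements

4.full

- a=np.full((480,640,3),255,np.uint8)

(480,640,3) is (number of rows, number of columns, number of channels/layers)

np.uint8 is the data type of the matrix elements

255 is the value of every element

5.identity (identity matrix)

- c=np.identity(3)

The main diagonal is 1, everything else is 0

[1,0,0]

[0,1,0]

[0,0,1]

6.eye

- c=np.eye(3,4,k=2)

A diagonal of 1s, everything else 0

k=2 shifts the diagonal two columns to the right, so it starts at column index 2

[0,0,1,0]

[0,0,0,1]

[0,0,0,0]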
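The creation functions above can be tried together in a few lines. This is a minimal sketch that only uses the calls already listed; the variable names are chosen for the example.

```python
import numpy as np

a = np.array([[2, 3, 4], [6, 5, 4]])         # 2 rows, 3 columns
black = np.zeros((480, 640, 3), np.uint8)    # all-zero image: black
white = np.full((480, 640, 3), 255, np.uint8)  # every element 255: white
ident = np.identity(3)                       # 3x3 identity matrix
shifted = np.eye(3, 4, k=2)                  # 3x4, diagonal moved 2 columns right

print(a.shape, black.dtype, white[0, 0], ident.shape)
print(shifted)
```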

NumPy indexing and assignment

- [y,x] for a grayscale image

- [y,x,channel] for a color image (channel order is BGR in OpenCV)

import cv2

import numpy as np

def callback():

pass

def start():

cv2.namedWindow('color',cv2.WINDOW_NORMAL)

img = np.zeros((720,720,3),np.uint8)

x = 0

while x<360:

#绿色通道,所以会成为绿色

img[360,x,1]=255

x=x+1

cv2.imshow("color",img)

key=cv2.waitKey(0)

if (key &0xFF ==ord('q')):

cv2.destroyAllWindows()

if __name__ == '__main__':

start()

Assigning a whole pixel at once also works

img[360, x] = [0,0,255]

Region of Interest (ROI)

Getting a sub-matrix with NumPy

- [y1:y2, x1:x2]

- [:,:] selects the whole image

roi=img[100:200,100:200]

roi[:,:]=[0,255,0]

img[100:200,100:200]= [0,255,0]

cv2.imshow("color",img)
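One point worth making explicit about ROI slicing: a NumPy slice is a view onto the same memory, so writing into `roi` changes `img` as well. The sketch below illustrates this on a blank image; the sizes are arbitrary values chosen for the example.

```python
import numpy as np

img = np.zeros((300, 300, 3), np.uint8)

# Slice order is rows (y) first, then columns (x).
roi = img[100:200, 100:200]
roi[:, :] = [0, 255, 0]          # paint the region green through the view

shares = np.shares_memory(roi, img)   # the slice and the image share data

# An independent snapshot of the region needs an explicit copy.
snapshot = img[100:200, 100:200].copy()
snapshot[:] = 0                  # does not touch img

print(shares, img[150, 150], img[50, 50])
```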

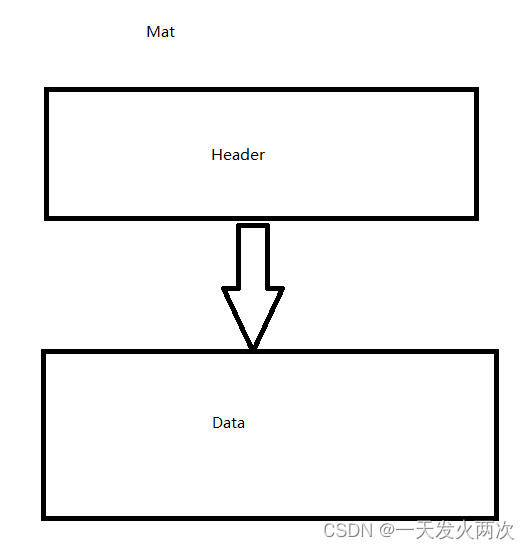

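Mat, the most important structure in OpenCV

What is Mat? Mat is a matrix

What is the benefit of Mat? In Python it can be accessed as a NumPy array

class CV_EXPORTS Mat{

public:

...

int dims; // number of dimensions

int rows,cols; // number of rows and columns

uchar *data; // pointer to the stored data

int *refcount; // reference count, avoids memory leaks

...

};

| Field | Description |
|---|---|
| dims | number of dimensions |
| rows | number of rows |
| cols | number of columns |
| depth | bit depth of a pixel |
| channels | number of channels (3 for RGB) |
| size | matrix size |
| type | depth + data type + channel count, e.g. CV_8UC3 |
| data | the stored data |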

Mat 拷贝

import cv2

import numpy as np

def callback():

pass

def start():

cv2.namedWindow('color',cv2.WINDOW_NORMAL)

img = cv2.imread('C:\\Users\\Zz\\Pictures\\VRChat\\2022-06\\vr.png')

# "Shallow copy": img2 is just a second name for the same array, so edits through img2 show up in img

img2=img

index = 0

img2[10:500,10:500]=[0,255,255]

cv2.imshow("color",img)

while True:

key=cv2.waitKey(10)

if (key &0xFF ==ord('q')):

break

cv2.destroyAllWindows()

if __name__ == '__main__':

start()

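Deep copy

# Deep copy: img2 gets its own data

img2=img.copy()

Accessing Mat attributes

- img.shape prints (4032,3024,3): height, width and number of channels

- img.size prints 36578304: height x width x channels

- img.dtype prints uint8: the element type (bit depth)

Splitting and merging channels

- split(mat) splits the channels

- merge((ch1,ch2,…)) merges channels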

import cv2

import numpy as np

def callback():

pass

def start():

cv2.namedWindow('color',cv2.WINDOW_NORMAL)

img = np.zeros((480,640,3),np.uint8)

b,g,r=cv2.split(img)

b[10:100,10:100]=255

g[210:300,210:300]=110

r[50:250,50:250]=255

img2 = cv2.merge((b,g,r))

cv2.imshow("color", img2)

cv2.waitKey(0)

cv2.destroyAllWindows()

if __name__ == '__main__':

start()

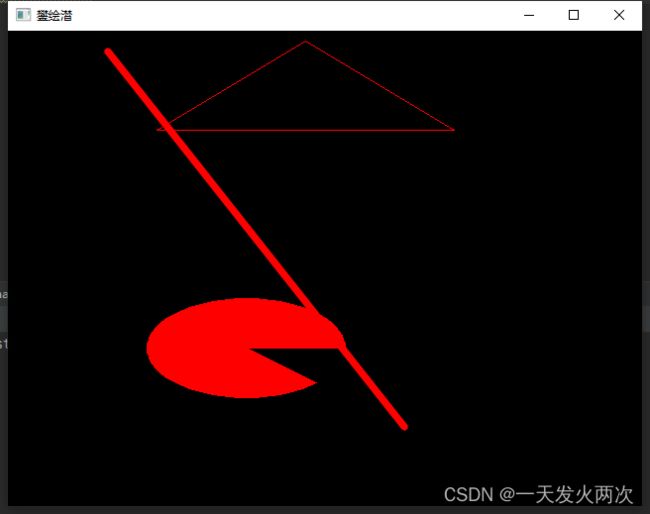

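Drawing shapes with OpenCV

Drawing lines

line(img,start point,end point,color...)

Parameters in detail

- img: the image to draw on

- start point and end point: where the line begins and ends

- color, thickness, line type

- shift: number of fractional bits in the point coordinates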

import cv2

import numpy as np

def start():

img=np.zeros((480,640,3),np.uint8)

#画线(x,y) 5是线宽 16是线型(抗锯齿)

cv2.line(img,(100,20),(400,400),(0,0,255),5,16)

cv2.line(img, (200, 20), (400, 600), (0, 0, 255), 5, 4)

cv2.imshow("画板", img)

cv2.waitKey(0)

if __name__ == '__main__':

start()

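ellipse(img,center,axes (half of the width and height),rotation angle,start angle,end angle...)

Angles are measured clockwise, with 0 degrees on the right-hand side

# Draw an ellipse outline

cv2.ellipse(img,(240,320),(100,50),0,0,360,(0,0,255))

# Draw a filled elliptical sector from 45 to 360 degrees (thickness -1 means filled)

cv2.ellipse(img,(240,320),(100,50),0,45,360,(0,0,255),-1)

Drawing polygons with OpenCV

polylines(img,points,closed or not,color,...) # the points must be 32-bit integers (int32)

Code:

# Draw a polygon

pts= np.array([(300,10),(150,100),(450,100)],np.int32)

cv2.polylines(img,[pts],True,(0,0,255))

fillPoly(img,points,color)

Code:

# Fill a polygon

cv2.fillPoly(img,[pts],(255,0,0))

Drawing text with OpenCV

cv2.putText(img,string,origin,font,font scale,...)

Code:

# Draw text. The built-in Hershey fonts only cover ASCII, so the Chinese characters in this string are drawn as question marks; the Pillow approach later in these notes handles Chinese text.

cv2.putText(img,"我超hello!!",(250,150),cv2.FONT_HERSHEY_PLAIN,1,(0,0,255))

Result: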

Homework: a drawing board

import cv2
import numpy as np

# Shared drawing state: the key loop edits it, the mouse callback reads it
state = {'shape': 'l', 'color': (0, 0, 225), 'start': (0, 0)}

def mouse_callback(event, x, y, flags, img):
    # Mouse events are plain enum values, so compare with ==, not with bit masks
    if event == cv2.EVENT_LBUTTONDOWN:
        state['start'] = (x, y)
    elif event == cv2.EVENT_LBUTTONUP:
        begin, end = state['start'], (x, y)
        if state['shape'] == 'c':
            # Ellipse centered on the press point; the axes are the drag distances
            axes = (abs(begin[0] - end[0]), abs(begin[1] - end[1]))
            cv2.ellipse(img, begin, axes, 0, 0, 360, state['color'])
        else:
            cv2.line(img, begin, end, state['color'], 5)
        cv2.imshow("drawtable", img)

def is_key(key, letter):
    return key & 0xFF == ord(letter)

def start():
    # Create and show a black canvas
    img = np.zeros((480, 640, 3), np.uint8)
    cv2.imshow("drawtable", img)
    # Listen for mouse events; the canvas is passed to the callback as userdata
    cv2.setMouseCallback("drawtable", mouse_callback, img)
    while True:
        # Keys: q quit, c ellipse, l line, r and b switch color
        key = cv2.waitKey(0)
        if is_key(key, 'q'):
            break
        elif is_key(key, 'c'):
            state['shape'] = 'c'
        elif is_key(key, 'l'):
            state['shape'] = 'l'
        elif is_key(key, 'r'):
            state['color'] = (0, 0, 225)
        elif is_key(key, 'b'):
            state['color'] = (225, 0, 225)
    cv2.destroyAllWindows()

if __name__ == '__main__':
    start()

Image arithmetic

- Adding two images

- Subtracting two images

- Multiplying two images

- Dividing two images

1. Adding two images

import cv2

import numpy as np

def start():

cv2.namedWindow('color', cv2.WINDOW_NORMAL)

img = cv2.imread('C:\\Users\\Zz\\Pictures\\VRChat\\2022-06\\vr.png')

img2= np.ones(img.shape,np.uint8) *50

img3= cv2.add(img,img2)

cv2.imshow('color',img3)

cv2.waitKey(0)

if __name__ == '__main__':

start()

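Result: the image becomes brighter, because 50 is added to every channel and values saturate at 255

2. Subtracting two images

subtract(A,B) # A minus B

3. Multiplying two images

multiply(A,B) # the result gets much brighter

4. Dividing two images

divide(A,B) # the result gets much darker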

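Image blending

addWeighted(A,alpha,B,beta,gamma)

- alpha and beta are the weights of A and B

- gamma is a constant added to every weighted sum: result = A*alpha + B*beta + gamma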

代码:

import cv2

import numpy as np

def start():

cv2.namedWindow('color', cv2.WINDOW_NORMAL)

img = cv2.imread('C:\\Users\\Zz\\Pictures\\VRChat\\2022-06\\vr.png')

img2= cv2.imread('C:\\Users\\Zz\\Pictures\\VRChat\\2022-06\\vr2.png')

img3= cv2.addWeighted(img,0.7,img2,0.3,0)

cv2.imshow('color',img3)

cv2.waitKey(0)

if __name__ == '__main__':

start()

效果图

Bitwise operations on images

- AND

- OR

- NOT

- XOR

1. NOT

bitwise_not(img)

Code:

import cv2

import numpy as np

def start():

cv2.namedWindow('color', cv2.WINDOW_NORMAL)

img = np.zeros((200,200),np.uint8)

img[50:150,50:150] =255

img2= cv2.bitwise_not(img)

cv2.imshow('img', img)

cv2.imshow('img2',img2)

cv2.waitKey(0)

if __name__ == '__main__':

start()

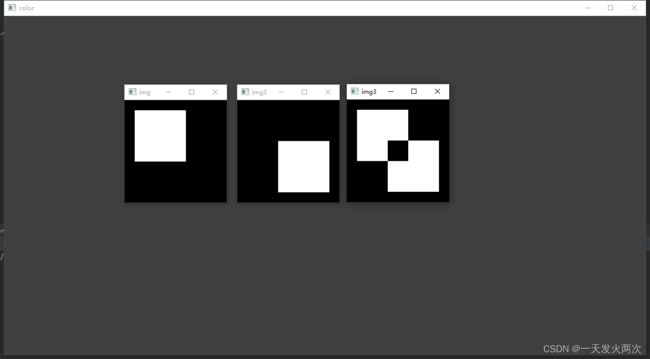

2. AND

cv2.bitwise_and(img,img2)

Code:

import cv2

import numpy as np

def start():

cv2.namedWindow('color', cv2.WINDOW_NORMAL)

img = np.zeros((200,200),np.uint8)

img2 = np.zeros((200, 200), np.uint8)

img[20:120,20:120] =255

img2[80:180,80:180] =255

img3= cv2.bitwise_and(img,img2)

cv2.imshow('img', img)

cv2.imshow('img2',img2)

cv2.imshow('img3', img3)

cv2.waitKey(0)

if __name__ == '__main__':

start()

3. OR

cv2.bitwise_or(img,img2)

4. XOR

cv2.bitwise_xor(img,img2)

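Adding a watermark to an image

- Load an image

- Create a logo

- Work out where to place it, and turn that area of the image black

- Use add to combine the logo with the image

Code: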

import cv2

import numpy as np

def start():

img = cv2.imread('C:\\Users\\Zz\\Pictures\\VRChat\\2022-06\\vr.png')

logo = np.zeros((200,200,3),np.uint8)

mask= np.zeros((200,200),np.uint8)

#绘制logo

logo[20:120,20:120] =[0,0,255]

logo[80:180, 80:180] = [0, 255, 0]

mask[20:120,20:120] =255

mask[80:180, 80:180] = 255

#对mask求反

m=cv2.bitwise_not(mask)

#选择img添加logo的位置

roi =img[0:200,0:200]

#与m进行与操作

tmp=cv2.bitwise_and(roi,roi,mask=m)

dst=cv2.add(tmp,logo)

img[0:200,0:200] = dst

cv2.imshow('img', img)

cv2.waitKey(0)

if __name__ == '__main__':

start()

演示:

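Adding Chinese text and a QR code to an image

First install the qrcode and pillow libraries

Then download a font file (the code below loads it as font.ttf)

Code: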

import cv2

import numpy as np

from PIL import Image,ImageDraw,ImageFont

import qrcode

'''把二维码放置在原图的右下角'''

def comblex_pic(base_img, myQR):

myQR_w, myQR_h = myQR.size

base_img_w, base_img_h = base_img.size

paste_location = (base_img_w-myQR_w, base_img_h-myQR_h, base_img_w, base_img_h)

base_img.paste(myQR, paste_location)

return base_img

def start():

img = cv2.imread('C:\\Users\\Zz\\Pictures\\VRChat\\2022-06\\vr.png')

logo = np.zeros((200,200,3),np.uint8)

mask= np.zeros((200,200),np.uint8)

#绘制logo

logo[20:120,20:120] =[0,0,255]

logo[80:180, 80:180] = [0, 255, 0]

mask[20:120,20:120] =255

mask[80:180, 80:180] = 255

#对mask求反

m=cv2.bitwise_not(mask)

#选择img添加logo的位置

roi =img[0:200,0:200]

#与m进行与操作

tmp=cv2.bitwise_and(roi,roi,mask=m)

dst=cv2.add(tmp,logo)

img[0:200,0:200] = dst

#将img转换为PIL格式

img_pil=Image.fromarray(cv2.cvtColor(img,cv2.COLOR_BGR2RGB))

#字体路径

font=ImageFont.truetype('font.ttf',38)

#字体颜色

fillColor=(0,0,225)

draw=ImageDraw.Draw(img_pil)

draw.text((1000,500),"这是一条信息",font=font,fill=fillColor)

#二维码

imgqrc = qrcode.make("https://www.bilibili.com/")

# 二维码添加至原图右下角

comblepic = comblex_pic(img_pil, imgqrc)

# 显示图片

comblepic.show()

print(img)

# 转回opencv格式

img = cv2.cvtColor(np.asarray(img_pil), cv2.COLOR_RGB2BGR)

cv2.imshow('img', img)

cv2.waitKey(0)

if __name__ == '__main__':

start()

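Image transformations

1. Scaling an image

resize(src,dst,dsize,fx,fy,interpolation) // src: the source image; dst: the output (not passed in Python); dsize: the target size as (width, height); fx: scale factor along the x axis; fy: scale factor along the y axis; interpolation: the scaling algorithm

interpolation, the scaling algorithm:

- INTER_NEAREST: nearest-neighbor interpolation, fast but low quality

- INTER_LINEAR: bilinear interpolation, uses four pixels of the source image (default)

- INTER_CUBIC: bicubic interpolation, uses 16 pixels of the source image

- INTER_AREA: area-based resampling, gives the best results when shrinking an image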

import cv2

import numpy as np

def start():

img = cv2.imread('C:\\Users\\Zz\\Pictures\\VRChat\\2022-06\\vr.png')

img2= cv2.resize(img,(400,400))

# Alternative: img2 = cv2.resize(img, None,fx=0.3,fy=0.3,interpolation=cv2.INTER_AREA)

cv2.imshow('img', img2)

cv2.waitKey(0)

if __name__ == '__main__':

start()

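2. Flipping an image

flip(img,flipCode)

- flipCode==0: flip vertically (top to bottom)

- flipCode>0: flip horizontally (left to right)

- flipCode<0: flip both vertically and horizontally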

import cv2

import numpy as np

def start():

img = cv2.imread('C:\\Users\\Zz\\Pictures\\VRChat\\2022-06\\vr.png')

img2=cv2.flip(img,0)

cv2.imshow('img', img2)

cv2.waitKey(0)

if __name__ == '__main__':

start()

3. Rotating an image

rotate(img,rotateCode)

- ROTATE_90_CLOCKWISE

- ROTATE_180

- ROTATE_90_COUNTERCLOCKWISE (the same as rotating 270 degrees clockwise)

import cv2

import numpy as np

def start():

img = cv2.imread('C:\\Users\\Zz\\Pictures\\VRChat\\2022-06\\vr.png')

img2=cv2.rotate(img,cv2.ROTATE_90_COUNTERCLOCKWISE)

cv2.imshow('img', img2)

cv2.waitKey(0)

if __name__ == '__main__':

start()

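4. Affine transformation of an image

Affine transformation is the general term for rotating, scaling and translating an image

warpAffine(src,M,dsize,flags,mode,value)

M: the transformation matrix

dsize: the output size

flags: the interpolation algorithm, same values as in resize

mode: the border extrapolation mode

value: the value used to fill the border

Translation matrix

Each pixel position in the image is a pair (x,y)

so the linear part of the transformation is a 2x2 matrix

The translation vector is a 2x1 vector, so the translation matrix is a 2x3 matrix: [[1,0,tx],[0,1,ty]]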

代码:

import cv2

import numpy as np

def start():

img = cv2.imread('C:\\Users\\Zz\\Pictures\\VRChat\\2022-06\\vr.png')

#必须是32位,因为计算精度较高

M=np.float32([[1,0,100],[0,1,0]])

h,w,ch=img.shape

img2=cv2.warpAffine(img,M,(w,h))

cv2.imshow('img', img2)

cv2.waitKey(0)

if __name__ == '__main__':

start()

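Result:

Transformation matrix

getRotationMatrix2D(center,angle,scale)

center: the center of rotation

angle: the rotation angle in degrees (positive values rotate counter-clockwise)

scale: the scaling factor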

import cv2

import numpy as np

def start():

img = cv2.imread('C:\\Users\\Zz\\Pictures\\VRChat\\2022-06\\vr.png')

#必须是32位,因为计算精度较高

#M=np.float32([[1,0,100],[0,1,0]])

h,w,ch=img.shape

M2= cv2.getRotationMatrix2D((100,100),15,1.5)

img2=cv2.warpAffine(img,M2,(w,h))

cv2.imshow('img', img2)

cv2.waitKey(0)

if __name__ == '__main__':

start()

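Result:

Rotating about the image center

# (w/2,h/2) is the center point

M2= cv2.getRotationMatrix2D((w/2,h/2),15,1.5)

Changing the size of the output image

# dsize sets the output size; it must be a pair of integers, so use integer division

img2 = cv2.warpAffine(img, M2, (w//2, h//2))

Transformation matrix, part two

getAffineTransform(src[],dst[])

Three pairs of points determine the transformation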

import cv2

import numpy as np

def start():

img = cv2.imread('C:\\Users\\Zz\\Pictures\\VRChat\\2022-06\\vr.png')

#必须是32位,因为计算精度较高

src=np.float32([[400,300],[800,300],[400,1000]])

dst=np.float32([[200,400],[600,500],[150,1100]])

h,w,ch=img.shape

#(w/2,h/2)中心点

M2= cv2.getAffineTransform(src,dst)

print(M2)

#如果想要修改图片大小,需要在这里修改

img2 = cv2.warpAffine(img, M2, (w, h))

cv2.imshow('img', img2)

cv2.waitKey(0)

if __name__ == '__main__':

start()

效果:

滤波器

滤波的作用

一幅图像通过滤波器得到另一幅图像;

其中滤波器又称为卷积核,滤波的过程成为卷积;

图像卷积的效果 —

锐化

卷积的过程

卷积的几个基本概念

- 卷积核的大小

- 锚点

- 边界扩充

- 步长

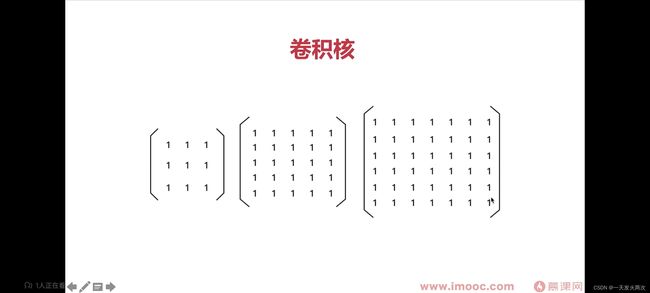

卷积核的大小

卷积核一般是奇数,如3x3,5x5、7x7

原因是:

- 增加padding的原因

- 保证锚点在中间,防止位置发生偏移的原因

卷积核大小的影响

在深度学习中,卷积核越大,得到的信息(感受野)越大,提取的特征越好,同时计算量也越大

边界扩充

- 当卷积核大于1且不进行边界扩充,输出尺寸将相应缩小

- 当卷积核以标准方式进行边界扩充,则输出数据的空间尺寸将与输入相等

计算公式

N = (W - F + 2P)/S+1

N输出图像大小

W源图大小;F卷积核大小;P扩充尺寸

S步长大小
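The formula is easy to evaluate directly. The helper below is a hypothetical function written for this example (`conv_output_size` is not an OpenCV call); it uses integer division because only whole kernel positions count.

```python
def conv_output_size(w, f, p=0, s=1):
    """Output size N = (W - F + 2P) / S + 1 along one dimension."""
    return (w - f + 2 * p) // s + 1


# 3x3 kernel on a 5-pixel-wide input, no padding: the output shrinks to 3.
no_padding = conv_output_size(5, 3)
# Padding of 1 on each side keeps the size at 5.
same_size = conv_output_size(5, 3, p=1)
# Stride 2 on a 7-pixel-wide input without padding gives 3 positions.
strided = conv_output_size(7, 3, s=2)

print(no_padding, same_size, strided)
```

For an odd kernel size F and stride 1, choosing P = (F - 1) / 2 always keeps the output the same size as the input, which is one more reason odd kernels are convenient.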

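Image convolution in practice

Low-pass and high-pass filtering

- Low-pass filtering removes noise and smooths the image

- High-pass filtering helps find the edges in an image

Image convolution

filter2D(src,ddepth,kernel,anchor,delta,borderType)

ddepth: output bit depth, usually -1 (same as the source)

kernel: the convolution kernel

anchor: the anchor point

delta: a value added to every result, 0 by default

borderType: the border type

Code: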

import cv2

import numpy as np

def start():

img = cv2.imread('C:\\Users\\Zz\\Pictures\\VRChat\\2022-06\\vr.png')

kernal=np.ones((5,5),np.float32)/25

img2=cv2.filter2D(img,-1,kernal)

cv2.imshow('img', img2)

cv2.waitKey(0)

if __name__ == '__main__':

start()

效果:

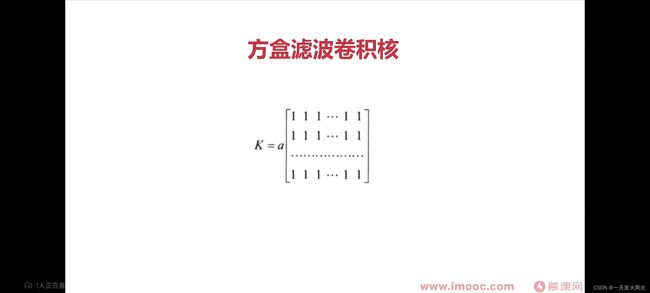

方盒滤波与均值滤波

方盒滤波参数 normalize

normalize = true , a= 1/(W x H)

normalize = false , a= 1

当 normalize = true时 方盒滤波==平均滤波

两个滤波的API

boxFilter(src,ddepth,ksize,anchor,normalize,borderType)

ddepth 输出位深 一般保持一致

ksize 卷积核大小

anchor 锚点 取默认值

normalize

bordeType

方盒滤波

blur(src,ksize,anchor,borderType)

平均滤波

import cv2

import numpy as np

def start():

img = cv2.imread('C:\\Users\\Zz\\Pictures\\VRChat\\2022-06\\vr.png')

#卷积核

#kernal=np.ones((5,5),np.float32)/25

img2=cv2.blur(img,(5,5))

cv2.imshow('img', img2)

cv2.waitKey(0)

if __name__ == '__main__':

start()

效果图

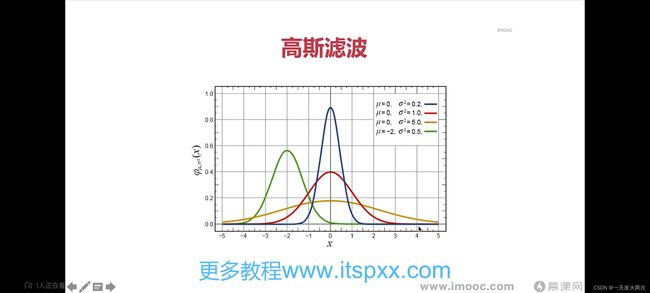

高斯滤波

GaussianBlur(img,kernel,sigmaX,sigmaY,...)

kernel 核大小

sigmaX 重心滤波延展的宽度 x轴 到中心点的最大差距 越大越模糊

代码:

import cv2

import numpy as np

def start():

img = cv2.imread('C:\\Users\\Zz\\Pictures\\VRChat\\2022-06\\vr.png')

#卷积核

#kernal=np.ones((5,5),np.float32)/25

img2=cv2.GaussianBlur(img,(5,5),sigmaX=1)

cv2.imshow('img', img2)

cv2.waitKey(0)

if __name__ == '__main__':

start()

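Median filter

Take the values in the window, for example [1,5,5,6,7,8,9], sort them and use the middle value (here 6) as the result of the convolution

The advantage of the median filter is that it works very well on salt-and-pepper noise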

Median filter API

medianBlur(img,ksize)

Code:

import cv2

import numpy as np

def start():

img = cv2.imread('C:\\Users\\Zz\\Pictures\\2.png')

#5x5

img2=cv2.medianBlur(img,5)

cv2.imshow('img', img2)

cv2.waitKey(0)

if __name__ == '__main__':

start()

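Bilateral filter

It keeps edges while smoothing the regions inside the edges.

A typical use of the bilateral filter is skin smoothing (beauty filters)

Bilateral filter API

bilateralFilter(img,d,sigmaColor,sigmaSpace,...)

d: diameter of the filter neighborhood

sigmaColor: sigma in color space; controls how different two colors may be and still be mixed, which is what preserves edges

sigmaSpace: sigma in coordinate space; controls how far apart in the image plane pixels may be and still influence each other

Code: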

import cv2

import numpy as np

def start():

img = cv2.imread('C:\\Users\\Zz\\Pictures\\VRChat\\2022-06\\vr.png')

img2=cv2.bilateralFilter(img,7,20,50)

cv2.imshow('img', img2)

cv2.waitKey(0)

if __name__ == '__main__':

start()

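High-pass filters

- Sobel (includes Gaussian smoothing): good noise resistance. Drawback: the edges along the x axis and the y axis are computed separately and must be added together to get the full result

- Scharr: uses a 3x3 kernel and picks up finer detail. Drawback: the edges along the x axis and the y axis are computed separately and must be added together to get the full result

- Laplacian: detects both directions in one pass. Drawback: sensitive to noise

Sobel operator

- First take the derivative in the x direction

- Then take the derivative in the y direction

- Final result: |G| = |Gx| + |Gy|

Sobel API:

Sobel(src,ddepth,dx,dy,ksize=3,scale=1,delta=0,borderType=BORDER_DEFAULT)

dx=1: differentiate along x, which picks up vertical edges

dy=1: differentiate along y, which picks up horizontal edges

ksize=-1 switches to the Scharr kernel

scale: scaling factor applied to the result

delta: a value added to the result

borderType: the border type

Code: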

import cv2

import numpy as np

def start():

img = cv2.imread('C:\\Users\\Zz\\Pictures\\VRChat\\2022-06\\vr.png')

#y方向边缘

d1=cv2.Sobel(img,cv2.CV_64F,1,0,ksize=5)

d2 = cv2.Sobel(img, cv2.CV_64F, 0, 1, ksize=5)

dst=d1+d2

cv2.imshow('d1', d1)

cv2.imshow('d2', d2)

cv2.imshow('dst', dst)

cv2.waitKey(0)

if __name__ == '__main__':

start()

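Scharr operator

- Similar to Sobel, but with different kernel values

- A single Scharr call computes edges in either the x direction or the y direction, not both

Scharr API:

Scharr(src,ddepth,dx,dy,scale=1,delta=0,borderType=BORDER_DEFAULT)

Code: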

import cv2

import numpy as np

def start():

img = cv2.imread('C:\\Users\\Zz\\Pictures\\VRChat\\2022-06\\vr.png')

d1=cv2.Scharr(img,cv2.CV_64F,1,0)

d2 = cv2.Scharr(img, cv2.CV_64F, 0, 1)

dst=d1+d2

cv2.imshow('d1', d1)

cv2.imshow('d2', d2)

cv2.imshow('dst', dst)

cv2.waitKey(0)

if __name__ == '__main__':

start()

Result:

Laplacian operator

- Finds edges in both directions at once

- Sensitive to noise, so the image is usually denoised before calling Laplacian

Laplacian API

Laplacian(img,ddepth,ksize=1,scale=1,borderType=BORDER_DEFAULT)

Code:

import cv2

import numpy as np

def start():

img = cv2.imread('C:\\Users\\Zz\\Pictures\\VRChat\\2022-06\\vr.png')

d1=cv2.Laplacian(img,cv2.CV_64F,ksize=5)

cv2.imshow('d1', d1)

cv2.waitKey(0)

if __name__ == '__main__':

start()

效果图:

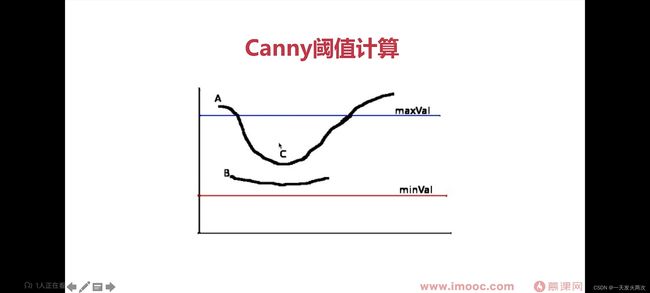

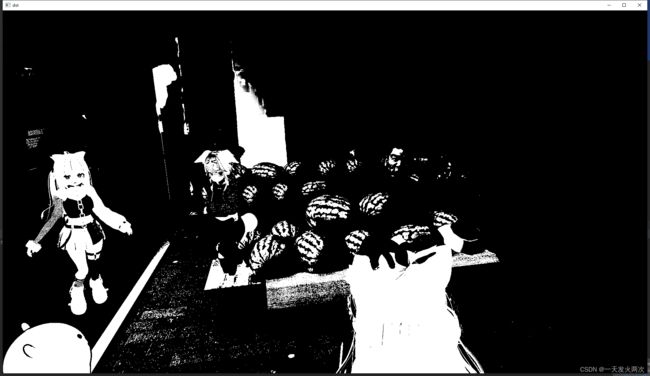

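Canny edge detection

Canny(img,minVal,maxVal,...)

minVal: the lower threshold; gradients below it are not edges

maxVal: the upper threshold; gradients above it are edges. Values between the two thresholds count as edges only when they connect to a strong edge

Code: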

import cv2

import numpy as np

def start():

img = cv2.imread('C:\\Users\\Zz\\Pictures\\VRChat\\2022-06\\vr.png')

d1=cv2.Canny(img,100,200)

cv2.imshow('d1', d1)

cv2.waitKey(0)

if __name__ == '__main__':

start()

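Result:

Morphological image processing

- A set of basic algorithms that process an image based on its shapes

- These methods mostly operate on binary images

- The kernel determines the effect of the processing

Operations:

- Erosion and dilation

- Opening

- Closing

- Top hat

- Black hat

Image binarization

- Turn every pixel of the image into one of two values, such as 0 and 255

- Global binarization

- Local binarization (works better on images with poor lighting)

Global binarization

threshold API

threshold(img,thresh,maxVal,type)

img: the image, preferably grayscale

thresh: the threshold

maxVal: the value assigned to pixels that exceed the threshold

type: the threshold type

- THRESH_BINARY and THRESH_BINARY_INV (opposites: one sets pixels above the threshold to maxVal, the other sets them to 0)

- THRESH_TRUNC

- THRESH_TOZERO and THRESH_TOZERO_INV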

代码:

import cv2

def start():

img = cv2.imread('C:\\Users\\Zz\\Pictures\\VRChat\\2022-06\\vr.png')

gol= cv2.cvtColor(img,cv2.COLOR_BGR2GRAY)

# ret is the threshold that was used, dst is the output image

ret,dst=cv2.threshold(gol,180,255,cv2.THRESH_BINARY)

cv2.imshow('dst', dst)

cv2.waitKey(0)

if __name__ == '__main__':

start()

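Adaptive threshold

Because of uneven lighting and shadows, a single global threshold turns white areas that lie in shadow into black

adaptiveThreshold API

adaptiveThreshold(img,maxVal,adaptiveMethod,type,blockSize,C)

maxVal: the value assigned to pixels that pass the threshold

adaptiveMethod: how the threshold is computed

blockSize: size of the neighborhood (an odd number such as 3, 5 or 7)

C: a constant subtracted from the computed mean or weighted mean

adaptiveMethod:

- How the threshold is computed

- ADAPTIVE_THRESH_MEAN_C: the mean of the neighborhood

- ADAPTIVE_THRESH_GAUSSIAN_C: a Gaussian-weighted mean of the neighborhood (usually gives better results)

type: the binarization type

- THRESH_BINARY

- THRESH_BINARY_INV

Code: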

import cv2

def start():

img = cv2.imread('C:\\Users\\Zz\\Pictures\\VRChat\\2022-06\\vr.png')

gol= cv2.cvtColor(img,cv2.COLOR_BGR2GRAY)

# adaptiveThreshold returns only the output image

dst=cv2.adaptiveThreshold(gol,255,cv2.ADAPTIVE_THRESH_GAUSSIAN_C,cv2.THRESH_BINARY_INV,3,0)

cv2.imshow('dst', dst)

cv2.waitKey(0)

if __name__ == '__main__':

start()

Result:

Erosion

erode(img,kernel,iterations=1)

kernel: the convolution kernel (structuring element)

iterations: how many times to erode

Code:

import cv2

import numpy as np

def start():

img = cv2.imread('C:\\Users\\Zz\\Pictures\\VRChat\\2022-06\\vr.png')

gol= cv2.cvtColor(img,cv2.COLOR_BGR2GRAY)

kernel=np.ones((3,3),np.uint8)

#一个是执行结果,一个是输出图像

dst=cv2.erode(img,kernel,iterations=1)

cv2.imshow('dst', dst)

cv2.waitKey(0)

if __name__ == '__main__':

start()

Result:

Kernel types

Getting a kernel

getStructuringElement(type,size)

size is a value such as (3,3), (5,5)...

Kernel types

- MORPH_RECT: a rectangle of all ones (the usual choice)

- MORPH_ELLIPSE: an ellipse

- MORPH_CROSS: a cross

Code:

import cv2

import numpy as np

def start():

img = cv2.imread('C:\\Users\\Zz\\Pictures\\VRChat\\2022-06\\vr.png')

gol= cv2.cvtColor(img,cv2.COLOR_BGR2GRAY)

#kernel=np.ones((3,3),np.uint8)

kernel=cv2.getStructuringElement(cv2.MORPH_RECT,(7,7))

print(kernel)

dst=cv2.erode(img,kernel,iterations=1)

cv2.imshow('dst', dst)

cv2.waitKey(0)

if __name__ == '__main__':

start()

Result:

MORPH_RECT, a rectangle of all ones (the usual choice):

[[1 1 1 1 1 1 1]

[1 1 1 1 1 1 1]

[1 1 1 1 1 1 1]

[1 1 1 1 1 1 1]

[1 1 1 1 1 1 1]

[1 1 1 1 1 1 1]

[1 1 1 1 1 1 1]]

MORPH_ELLIPSE, an ellipse:

[[0 0 0 1 0 0 0]

[0 1 1 1 1 1 0]

[1 1 1 1 1 1 1]

[1 1 1 1 1 1 1]

[1 1 1 1 1 1 1]

[0 1 1 1 1 1 0]

[0 0 0 1 0 0 0]]

MORPH_CROSS, a cross:

[[0 0 0 1 0 0 0]

[0 0 0 1 0 0 0]

[0 0 0 1 0 0 0]

[1 1 1 1 1 1 1]

[0 0 0 1 0 0 0]

[0 0 0 1 0 0 0]

[0 0 0 1 0 0 0]]

Dilation

Dilation API:

dilate(img,kernel,iterations=1)

iterations: how many times to dilate

Code:

import cv2

import numpy as np

def start():

img = cv2.imread('C:\\Users\\Zz\\Pictures\\VRChat\\2022-06\\vr.png')

gol= cv2.cvtColor(img,cv2.COLOR_BGR2GRAY)

#kernel=np.ones((3,3),np.uint8)

kernel=cv2.getStructuringElement(cv2.MORPH_CROSS,(7,7))

print(kernel)

dst=cv2.dilate(img,kernel,iterations=1)

cv2.imshow('dst', dst)

cv2.waitKey(0)

if __name__ == '__main__':

start()

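Result:

Opening

Opening = erosion followed by dilation

Opening API:

morphologyEx(img,MORPH_OPEN,kernel)

MORPH_OPEN: the constant that selects the morphological opening

kernel: the convolution kernel. The larger the noise specks, the larger the kernel should be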

代码:

import cv2

import numpy as np

def start():

img = cv2.imread('C:\\Users\\Zz\\Pictures\\VRChat\\2022-06\\vr.png')

kernel = cv2.getStructuringElement(cv2.MORPH_RECT,(7,7))

dst=cv2.morphologyEx(img,cv2.MORPH_OPEN,kernel)

cv2.imshow('dst', dst)

cv2.waitKey(0)

if __name__ == '__main__':

start()

Result:

Closing

Closing = dilation followed by erosion; it fills small holes inside objects

morphologyEx(img,MORPH_CLOSE,kernel)

Code:

import cv2

import numpy as np

def start():

img = cv2.imread('C:\\Users\\Zz\\Pictures\\VRChat\\2022-06\\vr.png')

kernel = cv2.getStructuringElement(cv2.MORPH_RECT,(7,7))

dst=cv2.morphologyEx(img,cv2.MORPH_CLOSE,kernel)

cv2.imshow('dst', dst)

cv2.waitKey(0)

if __name__ == '__main__':

start()

Result:

Morphological gradient

morphologyEx(img,MORPH_GRADIENT,kernel)

Use a smaller kernel for crisp, thin edges and a larger kernel for thicker edges

Code:

import cv2

import numpy as np

def start():

img = cv2.imread('C:\\Users\\Zz\\Pictures\\VRChat\\2022-06\\vr.png')

kernel = cv2.getStructuringElement(cv2.MORPH_RECT,(3,3))

dst=cv2.morphologyEx(img,cv2.MORPH_GRADIENT,kernel)

cv2.imshow('dst', dst)

cv2.waitKey(0)

if __name__ == '__main__':

start()

Result:

Top hat

Top hat API (top hat = original image minus its opening)

morphologyEx(img,MORPH_TOPHAT,kernel)

Code:

import cv2

import numpy as np

def start():

img = cv2.imread('C:\\Users\\Zz\\Pictures\\VRChat\\2022-06\\vr.png')

kernel = cv2.getStructuringElement(cv2.MORPH_RECT,(3,3))

dst=cv2.morphologyEx(img,cv2.MORPH_TOPHAT,kernel)

cv2.imshow('dst', dst)

cv2.waitKey(0)

if __name__ == '__main__':

start()

Result:

Black hat

Black hat API (black hat = closing minus the original image):

morphologyEx(img,MORPH_BLACKHAT,kernel)

Code:

import cv2

import numpy as np

def start():

img = cv2.imread('C:\\Users\\Zz\\Pictures\\VRChat\\2022-06\\vr.png')

kernel = cv2.getStructuringElement(cv2.MORPH_RECT,(3,3))

dst=cv2.morphologyEx(img,cv2.MORPH_BLACKHAT,kernel)

cv2.imshow('dst', dst)

cv2.waitKey(0)

if __name__ == '__main__':

start()

效果图:

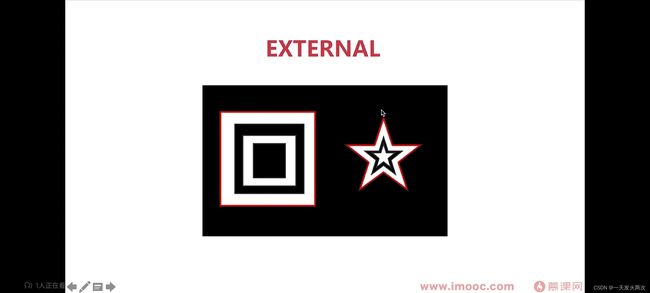

图像轮廓

具有相同颜色或强度的连续点的曲线

轮廓作用:

- 可用于图形分析

- 物体的识别和检测

注意点: - 为了检测到准确性,需要先对图像进行二值化或Canny操作

- 画轮廓时会修改输入的图像

轮廓查找API

findContours(img,mode,ApproximationMode...)

mode 模式 主要作用对查找到轮廓怎样处置

ApproximationMode 近似的模式

两个返回值

contours 和hierarchy

contours 查找到所有轮廓的列表

hierarchy 所有轮廓有没有层级关系,前后关系

mode

- RETR_EXTERNAL=0,表示只检测外轮廓

- RETR_LIST——1,检测的轮廓不建立等级关系

- RETR_CCOMP=2,每层最多两级

- RETR_TREE=3,按树形存储轮廓 (常用)

- CHAIN_APPROX_NONE,保存所有轮廓上的点 (数据量大)

- CHAIN_APPROX_SIMPLE,只保存角点(一般这个)

实战查找轮廓

代码:

import cv2

import numpy as np

def start():

img = cv2.imread('C:\\Users\\Zz\\Pictures\\VRChat\\2022-06\\vr.png')

#转换成灰度

imggray=cv2.cvtColor(img,cv2.COLOR_BGR2GRAY)

#二值化

dst = cv2.adaptiveThreshold(imggray,255,cv2.ADAPTIVE_THRESH_GAUSSIAN_C,cv2.THRESH_BINARY_INV,3,0)

#轮廓查找

contours,hierarchy = cv2.findContours(dst,cv2.RETR_EXTERNAL,cv2.CHAIN_APPROX_SIMPLE)

print(contours)

print(hierarchy)

cv2.waitKey(0)

if __name__ == '__main__':

start()

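Drawing contours

Contour drawing API:

drawContours(img,contours,contourIdx,color,thickness...)

contours: the contour points

contourIdx: index of the contour to draw; -1 draws all contours

color: the color, e.g. (0,0,255)

thickness: the line width; -1 fills the contour

Code: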

import cv2

import numpy as np

def start():

img = cv2.imread('C:\\Users\\Zz\\Pictures\\3.png')

#转换成灰度

imggray=cv2.cvtColor(img,cv2.COLOR_BGR2GRAY)

#二值化

ret,binary = cv2.threshold(imggray,150,255,cv2.THRESH_BINARY)

#轮廓查找

contours,hierarchy = cv2.findContours(binary,cv2.RETR_TREE,cv2.CHAIN_APPROX_SIMPLE)

#绘制边

cv2.drawContours(img, contours,-1,(0,0,255),5)

cv2.imshow('lines',img)

cv2.waitKey(0)

if __name__ == '__main__':

start()

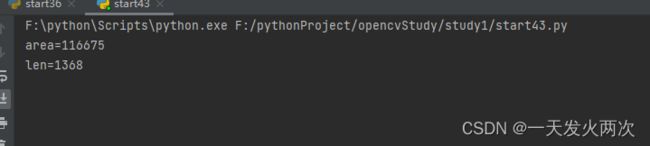

Contour area and perimeter

Contour area API:

contourArea(contour)

contour: the contour

Contour perimeter API:

arcLength(curve,closed)

curve: the contour

closed: whether the contour is closed

代码:

import cv2

import numpy as np

def start():

img = cv2.imread('C:\\Users\\Zz\\Pictures\\3.png')

#转换成灰度

imggray=cv2.cvtColor(img,cv2.COLOR_BGR2GRAY)

#二值化

ret,binary = cv2.threshold(imggray,150,255,cv2.THRESH_BINARY)

#轮廓查找

contours,hierarchy = cv2.findContours(binary,cv2.RETR_TREE,cv2.CHAIN_APPROX_SIMPLE)

#绘制边

cv2.drawContours(img, contours,-1,(0,0,255),5)

#计算面积

area = cv2.contourArea(contours[0])

# Compute the perimeter (named perimeter so that the built-in len is not shadowed)

perimeter = cv2.arcLength(contours[0],True)

print("area=%d"%(area))

print("perimeter=%d" % (perimeter))

cv2.imshow('lines',img)

cv2.waitKey(0)

if __name__ == '__main__':

start()

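Polygon approximation and convex hull

approxPolyDP(curve,epsilon,closed)

curve: the contour

epsilon: the approximation accuracy (maximum allowed distance from the original contour)

closed: whether the contour is closed

Convex hull API:

convexHull(points,clockwise,...)

points: the contour

clockwise: whether the hull points are ordered clockwise

Code: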

import cv2

import numpy as np

def drawShape(src,points):

i=0

while i full = cv2.convexHull(contours[1])

drawShape(img,full)

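Bounding rectangles

Minimum-area bounding rectangle API

minAreaRect(points)

points: the contour

Return value: a RotatedRect, which includes the rotation angle

RotatedRect

- x,y (center)

- width,height

- angle

Upright (axis-aligned) bounding rectangle API

boundingRect(array)

array: the contour

Return value: a Rect (top-left corner, width and height)

Code: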

import cv2

import numpy as np

def start():

img = cv2.imread('C:\\Users\\Zz\\Pictures\\hello.png')

# 转换成灰度

imggray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)

# 二值化

ret, binary = cv2.threshold(imggray, 210, 255, cv2.THRESH_BINARY)

# 轮廓查找

contours, hierarchy = cv2.findContours(binary, cv2.RETR_TREE, cv2.CHAIN_APPROX_SIMPLE)

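# Minimum-area (rotated) bounding rectangle

r=cv2.minAreaRect(contours[1])

box= cv2.boxPoints(r) # the four corner points of the rectangle, as floats

# Convert the float coordinates to integers for drawing (np.int0 is deprecated in recent NumPy releases)

box=box.astype(np.int32)

cv2.drawContours(img,[box],0,(0,225,0),2)

# Upright (axis-aligned) bounding rectangle

x,y,w,h=cv2.boundingRect(contours[1])

cv2.rectangle(img,(x,y),(x+w,y+h),(225,0,0),2)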

cv2.imshow('lines', img)

cv2.waitKey(0)

if __name__ == '__main__':

start()

Hands-on project: counting vehicles

Step one: read the video

import cv2
import numpy as np

def start():
    # Open the video
    cap = cv2.VideoCapture("C:\\Users\\Zz\\Videos\\1.mp4")
    # Read frame by frame
    while True:
        ret, frame = cap.read()
        # Stop at the end of the video or when a frame cannot be read
        if not ret:
            break
        # Show the frame
        cv2.imshow("video", frame)
        key = cv2.waitKey(10)
        # Esc key
        if key == 27:
            break
    cap.release()
    cv2.destroyAllWindows()

if __name__ == '__main__':
    start()

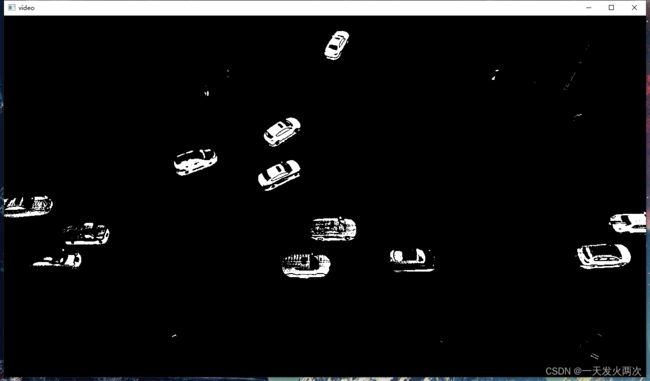

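Background removal

Extract the foreground and remove the background

API

createBackgroundSubtractorMOG(...)

history=200 # number of recent frames used to build the background model

Recommended paper

An improved adaptive background mixture model for real-time tracking with shadow detection

Code: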

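import cv2
import numpy as np

def start():
    # Open the video
    cap = cv2.VideoCapture("C:\\Users\\Zz\\Videos\\1.mp4")
    # Create the background subtractor (cv2.bgsegm comes with opencv-contrib-python)
    bgsubmog = cv2.bgsegm.createBackgroundSubtractorMOG()
    # Read frame by frame
    while True:
        ret, frame = cap.read()
        # Stop at the end of the video or when a frame cannot be read
        if not ret:
            break
        # Convert to grayscale
        gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)
        # Gaussian blur to suppress noise
        blur = cv2.GaussianBlur(gray, (3, 3), 5)
        # Foreground mask
        mask = bgsubmog.apply(blur)
        # Show the mask
        cv2.imshow("video", mask)
        key = cv2.waitKey(10)
        # Esc key
        if key == 27:
            break
    # Release resources
    cap.release()
    cv2.destroyAllWindows()

if __name__ == '__main__':
    start()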

Morphological processing

Code:

import cv2
import numpy as np

def start():
    # Open the video
    cap = cv2.VideoCapture("C:\\Users\\Zz\\Videos\\1.mp4")
    # Create the background subtractor
    bgsubmog = cv2.bgsegm.createBackgroundSubtractorMOG()
    # Read frame by frame
    while True:
        ret, frame = cap.read()
        # Stop at the end of the video or when a frame cannot be read
        if not ret:
            break
        # Convert to grayscale
        img = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)
        # Gaussian blur to suppress noise
        blur = cv2.GaussianBlur(img, (3, 3), 5)
        # Remove the background
        mask = bgsubmog.apply(blur)
        # Erode: remove small objects
        kernel = cv2.getStructuringElement(cv2.MORPH_RECT, (3, 3))
        erode = cv2.erode(mask, kernel, iterations=1)
        # Dilate: grow the remaining objects back
        dilate = cv2.dilate(erode, kernel, iterations=3)
        # Close: fill the gaps inside the objects
        close = cv2.morphologyEx(dilate, cv2.MORPH_CLOSE, kernel)
        # Find the contours
        contours, hierarchy = cv2.findContours(close, cv2.RETR_TREE, cv2.CHAIN_APPROX_SIMPLE)
        for (i, con) in enumerate(contours):
            # Maximum (upright) bounding rectangle
            (x, y, w, h) = cv2.boundingRect(con)
            # Draw the rectangle
            cv2.rectangle(frame, (x, y), (x + w, y + h), (0, 0, 225), 2)
        # Show the frame
        cv2.imshow("video", frame)
        key = cv2.waitKey(10)
        # Esc key
        if key == 27:
            break
    # Release resources
    cap.release()
    cv2.destroyAllWindows()

if __name__ == '__main__':
    start()

Vehicle counting

Code:

import cv2
import numpy as np

min_w = 30
min_h = 30
# Position of the detection line (used below as the x coordinate of a vertical line)
line_hight = 1100
# Center points of the valid vehicles
cars = []
# Tolerance on either side of the line
offset = 6

# Compute the center point of a rectangle
def center(x, y, w, h):
    x1 = int(w / 2)
    y1 = int(h / 2)
    cx = x + x1
    cy = y + y1
    return cx, cy

def start():
    # Number of cars that have passed
    carnum = 0
    # Open the video
    cap = cv2.VideoCapture("C:\\Users\\Zz\\Videos\\1.mp4")
    # Create the background subtractor
    bgsubmog = cv2.bgsegm.createBackgroundSubtractorMOG()
    # Read frame by frame
    while True:
        ret, frame = cap.read()
        if ret:
            # Convert to grayscale
            img = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)
            # Gaussian blur to suppress noise
            blur = cv2.GaussianBlur(img, (3, 3), 5)
            # Remove the background
            mask = bgsubmog.apply(blur)
            # Erode: remove small objects
            kernel = cv2.getStructuringElement(cv2.MORPH_RECT, (3, 3))
            erode = cv2.erode(mask, kernel, iterations=1)
            # Dilate: grow the remaining objects back
            dilate = cv2.dilate(erode, kernel, iterations=3)
            # Close: fill the gaps inside the objects
            close = cv2.morphologyEx(dilate, cv2.MORPH_CLOSE, kernel)
            # Find the contours
            contours, hierarchy = cv2.findContours(close, cv2.RETR_TREE, cv2.CHAIN_APPROX_SIMPLE)
            # Draw the detection line
            cv2.line(frame, (line_hight, 10), (line_hight, 710), (255, 255, 0), 3)
            for (i, con) in enumerate(contours):
                # Maximum (upright) bounding rectangle
                (x, y, w, h) = cv2.boundingRect(con)
                # Keep only rectangles that are large enough to be a vehicle
                isValid = (w >= min_w) and (h >= min_h)
                if not isValid:
                    continue
                # Draw the rectangle
                cv2.rectangle(frame, (x, y), (x + w, y + h), (0, 0, 225), 2)
                # Store the center point of the valid vehicle
                cpoint = center(x, y, w, h)
                cars.append(cpoint)
            for (x, y) in cars:
                if (x > line_hight - offset) and (x < line_hight + offset):
                    …
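The listing breaks off at the line test, so the following is only a guess at how the counting step could work, written as a standalone function that can be tried without a video. The names count_crossings and line_pos and the sample center points are hypothetical; the idea is to count every center that falls inside the band around the line and drop it from the list so it is not counted again in the next frame.

```python
def count_crossings(centers, line_pos, offset):
    """Count centers whose x lies within offset of the line.

    Returns (count, remaining), where remaining holds the centers
    that were not counted and should be kept.
    """
    counted = 0
    remaining = []
    for (x, y) in centers:
        if line_pos - offset < x < line_pos + offset:
            counted += 1              # inside the band: count it once
        else:
            remaining.append((x, y))  # outside the band: keep it
    return counted, remaining

centers = [(1098, 300), (1200, 310), (1103, 500), (900, 120)]
count, centers = count_crossings(centers, line_pos=1100, offset=6)
print(count, centers)  # 2 [(1200, 310), (900, 120)]
```

Building a new list avoids removing items from a list while iterating over it, which is easy to get wrong in Python.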

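OpenCV feature detection

What is a feature

An image feature is a meaningful region of the image

It is distinctive and easy to recognize, for example corners, blobs, and high-density regions

Corners

The most important features are corners

The pixel where the gray-level gradient reaches its maximum

The intersection of two lines

An extreme point (the first derivative is at its maximum and the second derivative is 0)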

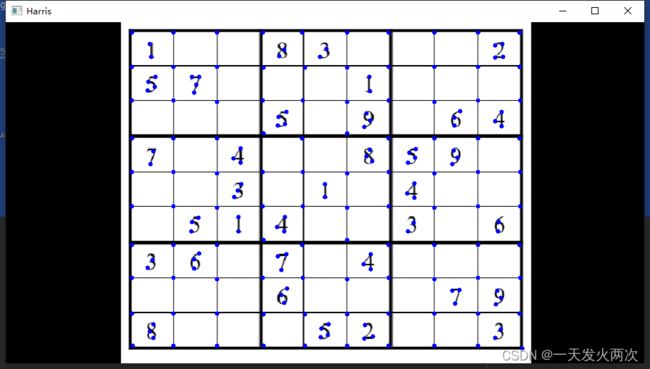

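Harris corners

- In a flat region, the measure stays the same whichever way the window moves

- On an edge, the measure changes sharply when the window moves perpendicular to the edge

- At a corner, the measure changes sharply whichever way the window moves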
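One common way to turn these three cases into a number is the Harris response R = λ1·λ2 − k·(λ1 + λ2)², where λ1 and λ2 are the eigenvalues of the local gradient matrix: both small in a flat region, one large on an edge, both large at a corner. The sketch below evaluates the formula for three hand-picked eigenvalue pairs; the function name and the sample values are illustrative assumptions, not output from OpenCV.

```python
def harris_response(lambda1, lambda2, k=0.04):
    """Harris response: det(M) - k * trace(M)^2, written in terms of the eigenvalues."""
    det = lambda1 * lambda2
    trace = lambda1 + lambda2
    return det - k * trace * trace

flat = harris_response(0.0, 0.0)        # no change in any direction
edge = harris_response(100.0, 0.0)      # change in one direction only
corner = harris_response(100.0, 100.0)  # change in every direction

print(flat, edge, corner)
```

The response is zero for the flat case, negative for the edge, and clearly positive for the corner, which is why thresholding R picks out corners.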

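Harris corner detection API

cornerHarris(img,dst,blockSize,ksize,k)

dst: the output (not a parameter in Python, where it is the return value)

blockSize: size of the detection window; the larger the window, the more sensitive the detection

ksize: size of the Sobel kernel

k: weight coefficient, an empirical value, usually between 0.02 and 0.04

Code: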

import cv2
import numpy as np

def start():
    img = cv2.imread('C:\\Users\\Zz\\Pictures\\shudu.png')
    # Convert to grayscale
    grayimg = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
    # Harris corner detection
    dst = cv2.cornerHarris(grayimg, 2, 3, 0.04)
    # Mark every pixel whose response exceeds 1% of the maximum in red
    img[dst > 0.01 * dst.max()] = [0, 0, 255]
    cv2.imshow("Harris", img)
    cv2.waitKey(0)

if __name__ == '__main__':
    start()

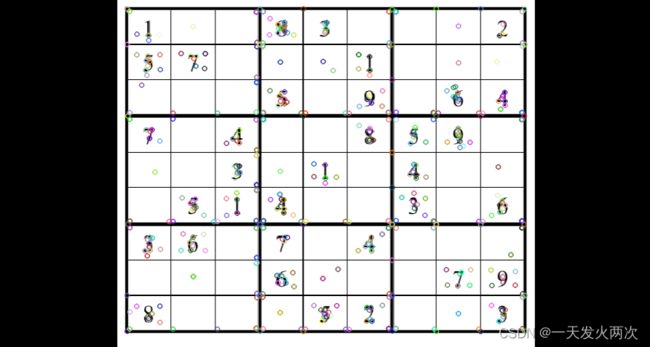

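Shi-Tomasi corner detection

- Shi-Tomasi is an improvement on Harris corner detection

- The stability of Harris corner detection depends on k, and k is an empirical value whose best setting is hard to choose

Shi-Tomasi corner detection API

goodFeaturesToTrack(img,maxCorners,...)

maxCorners: maximum number of corners; 0 means no limit

qualityLevel: a positive number below 1.0, usually between 0.01 and 0.1

minDistance: minimum Euclidean distance between corners; points closer than this are ignored, so a larger distance yields fewer corners

mask: the region of interest

blockSize: the detection window

useHarrisDetector: whether to use the Harris algorithm

k: 0.04 by default

Code: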

import cv2
import numpy as np

def start():
    img = cv2.imread('C:\\Users\\Zz\\Pictures\\shudu.png')
    # Convert to grayscale
    grayimg = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
    # Shi-Tomasi corner detection: at most 1000 corners, quality 0.01, minimum distance 10
    corner = cv2.goodFeaturesToTrack(grayimg, 1000, 0.01, 10)
    # Convert the float coordinates to int (np.int0 is no longer available in NumPy 2.x)
    corner = corner.astype(np.int32)
    for i in corner:
        x, y = i.ravel()
        cv2.circle(img, (int(x), int(y)), 3, (255, 0, 0), -1)
    cv2.imshow("Shi-Tomasi", img)
    cv2.waitKey(0)

if __name__ == '__main__':
    start()

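SIFT keypoint detection (Scale-Invariant Feature Transform)

- Harris corners are rotation invariant

- After scaling, however, what used to be a corner may no longer be one

Steps

- Create a SIFT object

- Run the detection: kp=sift.detect(img,…)

- Draw the keypoints: drawKeypoints(gray,kp,img)

Code: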

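import cv2
import numpy as np

def start():
    img = cv2.imread('C:\\Users\\Zz\\Pictures\\shudu.png')
    gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
    # Create the SIFT object (from OpenCV 4.4 on, cv2.SIFT_create() is also available in the main module)
    sift = cv2.xfeatures2d.SIFT_create()
    # Detect the keypoints
    kp = sift.detect(gray, None)
    # Draw the keypoints onto img
    cv2.drawKeypoints(gray, kp, img)
    cv2.imshow("img", img)
    cv2.waitKey(0)

if __name__ == '__main__':
    start()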

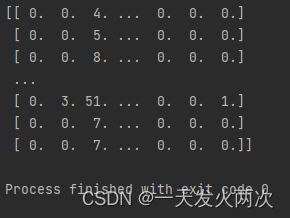

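Computing SIFT descriptors

Keypoints and descriptors

- Keypoint: position, size, and orientation

- Keypoint descriptor: a vector of values recording the pixels around the keypoint that contribute to it; it is not affected by affine transformations, illumination changes, and the like

Computing descriptors

kp,des=sift.compute(img,kp)

- Descriptors are used for feature matching

Computing keypoints and descriptors at the same time

- kp,des=sift.detectAndCompute(img,…)

- mask: specifies which region of img to process

Code: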

import cv2
import numpy as np

def start():
    img = cv2.imread('C:\\Users\\Zz\\Pictures\\shudu.png')
    gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
    # Create the SIFT object
    sift = cv2.xfeatures2d.SIFT_create()
    # Detect the keypoints and compute their descriptors
    kp, des = sift.detectAndCompute(gray, None)
    print(des)
    # Draw the keypoints onto img
    cv2.drawKeypoints(gray, kp, img)
    cv2.imshow("img", img)
    cv2.waitKey(0)

if __name__ == '__main__':
    start()

SURF (Speeded-Up Robust Features)

The biggest problem with SIFT is that it is slow, which is why SURF was developed

Usage steps

surf=cv2.xfeatures2d.SURF_create()

kp,des=surf.detectAndCompute(img,mask)

Code:

import cv2
import numpy as np

def start():
    img = cv2.imread('C:\\Users\\Zz\\Pictures\\shudu.png')
    gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
    # Create the SURF object
    surf = cv2.xfeatures2d.SURF_create()
    # Detect the keypoints and compute their descriptors
    kp, des = surf.detectAndCompute(gray, None)
    #print(des)
    # Draw the keypoints onto img
    cv2.drawKeypoints(gray, kp, img)
    cv2.imshow("img", img)
    cv2.waitKey(0)

if __name__ == '__main__':
    start()

Result:

Because SURF is patented, it cannot be used with the default OpenCV 4 builds!

ORB (Oriented FAST and Rotated BRIEF)

ORB is fast enough for real-time detection

ORB = Oriented FAST + Rotated BRIEF

Usage steps

orb=cv2.ORB_create()

kp,des=orb.detectAndCompute(img,mask)

Code:

import cv2
import numpy as np

def start():
    img = cv2.imread('C:\\Users\\Zz\\Pictures\\shudu.png')
    gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
    # Create the ORB object
    orb = cv2.ORB_create()
    # Detect the keypoints and compute their descriptors
    kp, des = orb.detectAndCompute(gray, None)
    #print(des)
    # Draw the keypoints onto img
    cv2.drawKeypoints(gray, kp, img)
    cv2.imshow("img", img)
    cv2.waitKey(0)

if __name__ == '__main__':
    start()

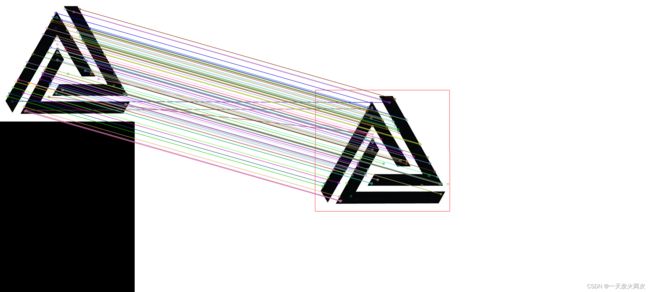

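Brute-force feature matching

Feature matching methods:

- BF (Brute-Force): brute-force feature matching

- FLANN (Fast Library for Approximate Nearest Neighbors): fast approximate nearest-neighbor matching

How brute-force matching works

It takes the descriptor of each feature in the first set

and compares it with every feature descriptor in the second set,

computing the distance between them and returning the closest one as the match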
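The principle fits in a few lines of plain Python. The sketch below is not how BFMatcher is implemented internally; it only mirrors the description above, using short made-up descriptors and the L2 distance. The function name brute_force_match is hypothetical.

```python
import math

def brute_force_match(des1, des2):
    """For each descriptor in des1, find its nearest neighbor in des2.

    Returns a list of (query_index, train_index, distance) tuples.
    """
    matches = []
    for qi, d1 in enumerate(des1):
        best_ti, best_dist = -1, math.inf
        for ti, d2 in enumerate(des2):
            # L2 (Euclidean) distance between the two descriptors
            dist = math.sqrt(sum((a - b) ** 2 for a, b in zip(d1, d2)))
            if dist < best_dist:
                best_ti, best_dist = ti, dist
        matches.append((qi, best_ti, best_dist))
    return matches

des1 = [(0.0, 0.0), (5.0, 5.0)]
des2 = [(5.0, 4.0), (0.0, 1.0), (9.0, 9.0)]
print(brute_force_match(des1, des2))  # [(0, 1, 1.0), (1, 0, 1.0)]
```

Every descriptor in the first set is compared with every descriptor in the second, so the cost grows with the product of the two set sizes, which is the reason approximate methods exist.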

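OpenCV feature matching steps

- Create the matcher: BFMatcher(normType,crossCheck)

- Match the features: bf.match(des1,des2)

- Draw the matches: cv2.drawMatches(img1,kp1,img2,kp2,…)

BFMatcher

- normType: NORM_L1, NORM_L2, NORM_HAMMING, … (default NORM_L2)

- crossCheck: whether to cross-check the matches; false by default (enabling it increases the computation)

match method

- Takes the descriptors computed by SIFT, SURF, ORB, etc.

- Matches the descriptors of the two images

drawMatches

- The query image and its keypoints (img, kp)

- The train image and its keypoints (img, kp)

- The matches returned by match()

Code: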

import cv2
import numpy as np

def start():
    img1 = cv2.imread('C:\\Users\\Zz\\Pictures\\bf1.jpg')
    img2 = cv2.imread('C:\\Users\\Zz\\Pictures\\bf2.jpg')
    gray1 = cv2.cvtColor(img1, cv2.COLOR_BGR2GRAY)
    gray2 = cv2.cvtColor(img2, cv2.COLOR_BGR2GRAY)
    # Create the SIFT object
    sift = cv2.xfeatures2d.SIFT_create()
    # Detect the keypoints and compute their descriptors
    kp1, des1 = sift.detectAndCompute(gray1, None)
    kp2, des2 = sift.detectAndCompute(gray2, None)
    # Create the brute-force matcher and match the descriptors
    bf = cv2.BFMatcher(cv2.NORM_L1)
    match = bf.match(des1, des2)
    # Draw the matches
    img3 = cv2.drawMatches(img1, kp1, img2, kp2, match, None)
    #print(des)
    cv2.imshow("img", img3)
    cv2.waitKey(0)

if __name__ == '__main__':
    start()

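FLANN feature matching

- FLANN is faster when matching features in bulk

- Because it uses approximate nearest neighbors, its accuracy is lower

Steps for FLANN feature matching

- Create the FLANN matcher: FlannBasedMatcher(…)

- Match the features: flann.match/knnMatch(…)

- Draw the matches: cv2.drawMatches/drawMatchesKnn(…)

FlannBasedMatcher

- index_params dict: the matching algorithm, KDTREE (for SIFT, SURF) or LSH (for ORB); with KDTREE the number of trees is usually set to 5

- search_params dict: the number of times the trees are traversed in the KDTREE algorithm

KDTREE

index_params=dict(algorithm=FLANN_INDEX_KDTREE,trees=5)

algorithm=FLANN_INDEX_KDTREE is actually the value 1

search_params

- search_params = dict(checks=50)

knnMatch method

- Takes the descriptors computed by SIFT, SURF, ORB, etc.

- k: return the k nearest matches by Euclidean distance for each descriptor

- Returns the matches as DMatch objects, k of them per query descriptor

DMatch contents

- distance: the distance between the descriptors; the lower the better

- queryIdx: index of the descriptor in the first image

- trainIdx: index of the descriptor in the second image

- imgIdx: index of the second image

drawMatchesKnn

- The query image and its keypoints (img, kp)

- The train image and its keypoints (img, kp)

- The matches returned by knnMatch()

Code: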

import cv2
import numpy as np

def start():
    img1 = cv2.imread('C:\\Users\\Zz\\Pictures\\bf2.jpg')
    img2 = cv2.imread('C:\\Users\\Zz\\Pictures\\bf1.jpg')
    gray1 = cv2.cvtColor(img1, cv2.COLOR_BGR2GRAY)
    gray2 = cv2.cvtColor(img2, cv2.COLOR_BGR2GRAY)
    # Create the SIFT object
    sift = cv2.xfeatures2d.SIFT_create()
    # Detect the keypoints and compute their descriptors
    kp1, des1 = sift.detectAndCompute(gray1, None)
    kp2, des2 = sift.detectAndCompute(gray2, None)
    # Create the FLANN matcher (algorithm=1 is FLANN_INDEX_KDTREE)
    index_params = dict(algorithm=1, trees=5)
    search_params = dict(checks=50)
    flann = cv2.FlannBasedMatcher(index_params, search_params)
    # Match the descriptors, keeping the 2 nearest neighbors of each
    matchs = flann.knnMatch(des1, des2, k=2)
    # Keep a match only if it is clearly better than the second-best one
    good = []
    for i, (m, n) in enumerate(matchs):
        if m.distance < 0.7 * n.distance:
            good.append(m)
    ret = cv2.drawMatchesKnn(img1, kp1, img2, kp2, [good], None)
    cv2.imshow("img", ret)
    cv2.waitKey(0)

if __name__ == '__main__':
    start()

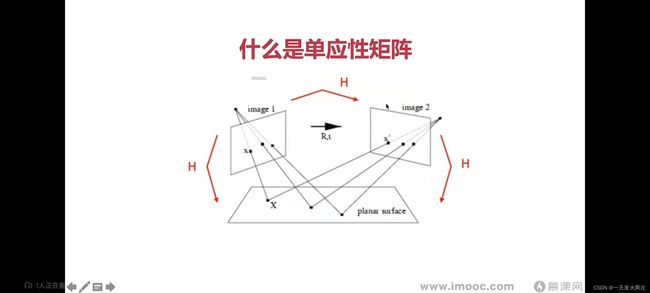
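The filtering loop in the listing is a ratio test: a match is kept only when its distance is clearly smaller than the distance of the second-best candidate. Here is a minimal sketch of that step alone, using a namedtuple as a stand-in for cv2.DMatch so it runs without images; the Match type, the ratio_test name, and the distances are made up for this example.

```python
from collections import namedtuple

# Minimal stand-in for cv2.DMatch with only the fields used here
Match = namedtuple("Match", ["queryIdx", "trainIdx", "distance"])

def ratio_test(knn_matches, ratio=0.7):
    """Keep the best match of each pair only if it beats the runner-up by the given ratio."""
    good = []
    for pair in knn_matches:
        if len(pair) < 2:
            continue  # fewer than 2 neighbors were returned: nothing to compare
        m, n = pair
        if m.distance < ratio * n.distance:
            good.append(m)
    return good

knn = [
    (Match(0, 3, 10.0), Match(0, 7, 40.0)),  # clearly the best candidate: kept
    (Match(1, 2, 30.0), Match(1, 5, 35.0)),  # ambiguous: dropped
    (Match(2, 4, 5.0),),                     # only one neighbor: skipped
]
good = ratio_test(knn)
print([m.queryIdx for m in good])  # [0]
```

Skipping pairs with fewer than two entries is a defensive choice for this sketch; the listing above unpacks (m, n) directly and assumes two neighbors are always returned.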

Image search

import cv2
import numpy as np

def start():
    img1 = cv2.imread('C:\\Users\\Zz\\Pictures\\bf2.jpg')
    img2 = cv2.imread('C:\\Users\\Zz\\Pictures\\bf1.jpg')
    gray1 = cv2.cvtColor(img1, cv2.COLOR_BGR2GRAY)
    gray2 = cv2.cvtColor(img2, cv2.COLOR_BGR2GRAY)
    # Create the SIFT object
    sift = cv2.xfeatures2d.SIFT_create()
    # Detect the keypoints and compute their descriptors
    kp1, des1 = sift.detectAndCompute(gray1, None)
    kp2, des2 = sift.detectAndCompute(gray2, None)
    # Create the FLANN matcher (algorithm=1 is FLANN_INDEX_KDTREE)
    index_params = dict(algorithm=1, trees=5)
    search_params = dict(checks=50)
    flann = cv2.FlannBasedMatcher(index_params, search_params)
    # Match the descriptors, keeping the 2 nearest neighbors of each
    matchs = flann.knnMatch(des1, des2, k=2)
    good = []
    for i, (m, n) in enumerate(matchs):
        if m.distance < 0.7 * n.distance:
            good.append(m)
    # A homography needs at least 4 point pairs
    if len(good) >= 4:
        # Coordinates of the matched keypoints in both images
        srcPts = np.float32([kp1[m.queryIdx].pt for m in good]).reshape(-1, 1, 2)
        dstPts = np.float32([kp2[m.trainIdx].pt for m in good]).reshape(-1, 1, 2)
        # Find the homography matrix
        H, _ = cv2.findHomography(srcPts, dstPts, cv2.RANSAC, 5.0)
        # Perspective transform: project the corners of img1 into img2
        h, w = img1.shape[:2]
        pts = np.float32([[0, 0], [0, h - 1], [w - 1, h - 1], [w - 1, 0]]).reshape(-1, 1, 2)
        dst = cv2.perspectiveTransform(pts, H)
        # Outline the found region in img2
        cv2.polylines(img2, [np.int32(dst)], True, (0, 0, 255))
    else:
        print("the num is less than 4")
        exit()
    ret = cv2.drawMatchesKnn(img1, kp1, img2, kp2, [good], None)
    cv2.imshow("img", ret)
    cv2.waitKey(0)

if __name__ == '__main__':
    start()
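What perspectiveTransform does with the four corner points can be written out by hand. The sketch below maps points through a 3x3 matrix in plain Python; the matrix H is a made-up example (scale by 2, then shift by (10, 20)) rather than one estimated by findHomography, and the function name apply_homography is hypothetical.

```python
def apply_homography(H, points):
    """Map (x, y) points through a 3x3 homography given as nested lists."""
    out = []
    for x, y in points:
        xh = H[0][0] * x + H[0][1] * y + H[0][2]
        yh = H[1][0] * x + H[1][1] * y + H[1][2]
        denom = H[2][0] * x + H[2][1] * y + H[2][2]
        # Dividing by the third coordinate converts back from homogeneous coordinates
        out.append((xh / denom, yh / denom))
    return out

# Hypothetical homography: scale by 2, then shift by (10, 20)
H = [[2.0, 0.0, 10.0],
     [0.0, 2.0, 20.0],
     [0.0, 0.0, 1.0]]

h, w = 50, 100
corners = [(0, 0), (0, h - 1), (w - 1, h - 1), (w - 1, 0)]
print(apply_homography(H, corners))
# [(10.0, 20.0), (10.0, 118.0), (208.0, 118.0), (208.0, 20.0)]
```

With a real homography the bottom row is generally not (0, 0, 1), so the division matters and the projected outline becomes a general quadrilateral instead of a rectangle.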