机器学习笔记 十七:基于Gini Importance、Permutation Importance、Boruta的随机森林模型重要性评估的比较

目录

-

-

- 1. 随机森林模型拟合和预测性能

-

- 1.1 样本拆分

- 1.2 模型拟合

- 1.3 特征重要性

- 1.4 Permutation Importance(permutation_importances)

- 1.5 Boruta

- 2. 特征选择和性能比较

-

- 2.1 基于基尼重要性的特征选择

- 2.2 基于排序重要性的特征选择

- 2.3 基于Boruta的特征选择

- 2.4 预测性能比较

-

1. 随机森林模型拟合和预测性能

1.1 样本拆分

X = wine.drop('quality', axis = 1)

y = wine['quality']

集合y:

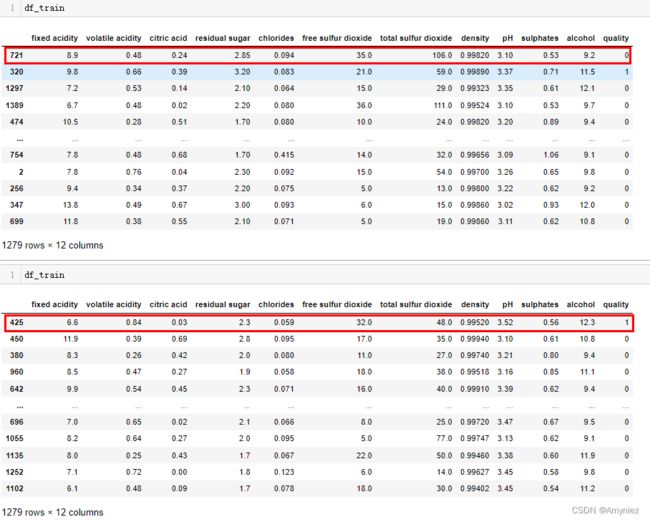

df_train, df_test = train_test_split(wine, test_size=0.20) # random_state随机状态:保证每次分割的样本一致

df_train = df_train[list(wine.columns)] # 获取wine的列名:Index(['fixed acidity', 'volatile acidity', 'citric acid', 'residual sugar',

# 'chlorides', 'free sulfur dioxide', 'total sulfur dioxide', 'density',

# 'pH', 'sulphates', 'alcohol', 'quality'],

df_test = df_test[list(wine.columns)]

X_train, y_train = df_train.drop('quality',axis=1), df_train['quality']

X_test, y_test = df_test.drop('quality',axis=1), df_test['quality']

X_train.shape,y_train.shape,X_test.shape,y_test.shape

((1279, 11), (1279,), (320, 11), (320,))

注意:

若random_state随机状态未设置,将出现下面的情况,即每次划分的结果不一致

1.2 模型拟合

参数解释:

- n_estimators : integer, optional (default=10) 整数,可选择(默认值为10)。 森林里决策树的数目。

- criterion : string, optional (default=”gini”) 字符串,可选择(默认值为“gini”)。衡量分裂质量的性能(函数)。

- min_samples_leaf : int, float, optional (default=1) 整数,浮点数,可选的(默认值为1)。需要在叶子结点上的最小样本数量:如果为int,那么考虑min_samples_leaf作为最小的数字。

- n_jobs : integer, optional (default=1) 整数,可选的(默认值为1)。用于拟合和预测的并行运行的工作(作业)数量。如果值为-1,那么工作数量被设置为核的数量。

- oob_score : bool (default=False) bool,(默认值为False)。是否使用袋外样本来估计泛化精度。

rf = RandomForestClassifier(n_estimators=200,

min_samples_leaf=5,

n_jobs=-1,

oob_score=True,

random_state=42)

rf.fit(X_train, y_train)

# 性能预测

print(classification_report(y_test, rf.predict(X_test)))

结果显示:

precision recall f1-score support

0 0.91 0.98 0.94 281

1 0.67 0.31 0.42 39

accuracy 0.90 320

macro avg 0.79 0.64 0.68 320

weighted avg 0.88 0.90 0.88 320

其中,列表最左边的一列为分类的标签名,右边support列为每个标签的出现次数。avg / total行为各列的均值(support列为总和),precision、recall、f1-score三列分别为各个类别的精确度、召回率及值。

precision: 预测的准确性,获取的所有样本中,正确样本的占比

recall: 实际样本中,有多少样本被正确的预测出来了。

F1 Score: 统计学中用来衡量二分类模型精确度的一种指标。它同时兼顾了分类模型的精确率和召回率。F1分数可以看作是模型精确率和召回率的一种加权平均,它的最大值是1,最小值是0。

举例解释:

某池塘有1400条鲤鱼,300只虾,300只鳖。现在以捕鲤鱼为目的,撒网逮着了700条鲤鱼,200只虾,100只鳖。那么,这些指标分别如下:

精确率 = 700 / (700 +200 + 100) = 70%

召回率 = 700 / 1400 = 50%

1.3 特征重要性

Gini Importance: feature_importances_

gini不纯度: 从一个数据集中随机选取子项,度量其被错误的划分到其他组里的概率,计算式:

基尼不纯度:

(1)基尼不纯度可以作为衡量系统混乱程度的标准;

(2)基尼不纯度越小,纯度越高,集合的有序程度越高,分类的效果越好;

(3)基尼不纯度为 0 时,表示集合类别一致;

(4)在决策树中,比较基尼不纯度的大小可以选择更好的决策条件(子节点)

features = np.array(X_train.columns)

# gini不纯度

imps_gini=rf.feature_importances_

std_gini = np.std([tree.feature_importances_ for tree in rf.estimators_], axis=0) # 计算标准差

indices_gini = np.argsort(imps_gini) # 将imps_gini中的元素从小到大排列,提取其在排列前对应的index(索引)输出

plt.title('Feature Importance')

# plt.barh():横向的柱状图,可以理解为正常柱状图旋转了90°

plt.barh(range(len(indices_gini)), imps_gini[indices_gini], yerr=std_gini[indices_gini],color='c', align='center')

plt.yticks(range(len(indices_gini)), features[indices_gini])

plt.xlabel('Gini Importance')

plt.show()

1.4 Permutation Importance(permutation_importances)

# 从相同的随机数种子出发,可以得到相同的随机数序列

np.random.seed(10)

imps_perm, std_perm = permutation_importances(rf, X_train, y_train,

oob_classifier_accuracy

features = np.array(X_train.columns)

indices_perm = np.argsort(imps_perm)

plt.title('Feature Importances')

plt.barh(range(len(indices_perm)), imps_perm[indices_perm], yerr=std_perm[indices_perm],color='c', align='center')

plt.yticks(range(len(indices_perm)), features[indices_perm])

plt.xlabel('Permutation Importance')

plt.show()

1.5 Boruta

forest = RandomForestClassifier(n_estimators=200,

min_samples_leaf=5,

n_jobs=-1,

oob_score=True,

random_state=42)

feat_selector = BorutaPy(forest, verbose=2,max_iter=50)

np.random.seed(10)

import time

start = time.time()

feat_selector.fit(X_train.values, y_train.values)

end = time.time()

print(end - start)# 获取运行时间

迭代发生变化的节点:

BorutaPy finished running.

Iteration: 50 / 50

Confirmed: 8

Tentative: 0

Rejected: 2

169.35201215744019

print('明确的参数: \n',list(np.array(X_train.columns)[feat_selector.ranking_==1]))

print('\n待定的参数: \n',list(np.array(X_train.columns)[feat_selector.ranking_==2]))

print('\n拒绝的参数: \n',list(np.array(X_train.columns)[feat_selector.ranking_>=3]))

明确的参数:

[‘fixed acidity’, ‘volatile acidity’, ‘citric acid’, ‘chlorides’, ‘total sulfur dioxide’, ‘density’, ‘sulphates’, ‘alcohol’]

待定的参数:

[‘free sulfur dioxide’]

拒绝的参数:

[‘residual sugar’, ‘pH’]

2. 特征选择和性能比较

2.1 基于基尼重要性的特征选择

删除基尼重要性小于0.05的参数(3个):‘pH’,‘residual sugar’,‘free sulfur dioxide’

X_train_gini=X_train.drop(['pH','residual sugar','free sulfur dioxide'],axis=1)

X_test_gini=X_test.drop(['pH','residual sugar','free sulfur dioxide'],axis=1)

rf_gini = RandomForestClassifier(n_estimators=300, # 森林里(决策)树的数目

min_samples_leaf=5,

n_jobs=-1,

oob_score=True,

random_state=42)

rf_gini.fit(X_train_gini, y_train)

2.2 基于排序重要性的特征选择

删除排序重要性小于0.003的参数(6个):‘chlorides’,‘pH’,‘residual sugar’,‘fixed acidity’,‘free sulfur dioxide’,‘citric acid’

X_train_perm=X_train.drop(['chlorides','pH','residual sugar','fixed acidity','free sulfur dioxide','citric acid'],axis=1)

X_test_perm=X_test.drop(['chlorides','pH','residual sugar','fixed acidity','free sulfur dioxide','citric acid'],axis=1)

rf_gini = RandomForestClassifier(n_estimators=300, # 森林里(决策)树的数目

min_samples_leaf=5,

n_jobs=-1,

oob_score=True,

random_state=42)

rf_gini.fit(X_train_gini, y_train)

2.3 基于Boruta的特征选择

删除Boruta的拒绝变量(2个):‘residual sugar’, ‘pH’

X_train_boruta=X_train.drop(['pH','residual sugar'],axis=1)

X_test_boruta=X_test.drop(['pH','residual sugar'],axis=1)

rf_gini = RandomForestClassifier(n_estimators=300, # 森林里(决策)树的数目

min_samples_leaf=5,

n_jobs=-1,

oob_score=True,

random_state=42)

rf_gini.fit(X_train_gini, y_train)

2.4 预测性能比较

print('**************************** 原模型 ****************************')

print('\n')

print(classification_report(y_test, rf.predict(X_test)))

print ('\n')

print('****************** 基于基尼重要性的特征选择 ******************')

print('\n')

print(classification_report(y_test, rf_gini.predict(X_test_gini)))

print ('\n')

print('****************** 基于排序重要性的特征选择 ******************')

print('\n')

print(classification_report(y_test, rf_perm.predict(X_test_perm)))

print ('\n')

print('******************** 基于Boruta的特征选择 ********************')

print('\n')

print(classification_report(y_test, rf_boruta.predict(X_test_boruta)))

**************************** 原模型 ****************************

precision recall f1-score support

0 0.90 0.97 0.93 274

1 0.67 0.39 0.49 46

accuracy 0.88 320

macro avg 0.79 0.68 0.71 320

weighted avg 0.87 0.88 0.87 320

****************** 基于基尼重要性的特征选择 ******************

precision recall f1-score support

0 0.90 0.97 0.93 274

1 0.67 0.39 0.49 46

accuracy 0.88 320

macro avg 0.79 0.68 0.71 320

weighted avg 0.87 0.88 0.87 320

****************** 基于排序重要性的特征选择 ******************

precision recall f1-score support

0 0.89 0.99 0.94 274

1 0.76 0.28 0.41 46

accuracy 0.88 320

macro avg 0.83 0.63 0.67 320

weighted avg 0.87 0.88 0.86 320

******************** 基于Boruta的特征选择 ********************

precision recall f1-score support

0 0.90 0.97 0.93 274

1 0.67 0.39 0.49 46

accuracy 0.88 320

macro avg 0.79 0.68 0.71 320

weighted avg 0.87 0.88 0.87 320

相比之下,排序重要性的特征选择方法稍好一些。基于Boruta的特征选择只删除了pH和原始模型的结果是一致的,并未对模型产生很大的影响。