Multi-class classification: training and testing a multilayer neural network on the Iris dataset (PyTorch)

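0. Disclaimer

This post summarizes what I have learned by completing a multi-class classification experiment with Pandas, NumPy, and PyTorch. Corrections are welcome if you spot any mistakes.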

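1. Experiment requirements

- Train and test a multilayer neural network on the Iris dataset.

- Use 80% of the data as the training set and 20% as the test set.

- The number and shape of the layers are up to you; the model output uses softmax, and the loss function is cross-entropy.

- Evaluate the model on the test set with sklearn's f1_score function.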

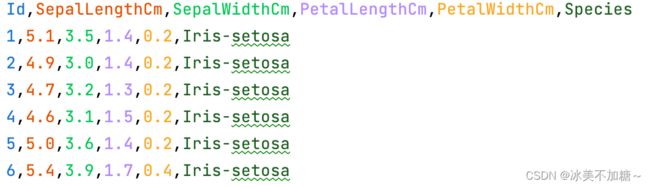

2. Sample rows from the dataset

3. Complete training code

import torch
import numpy as np
import pandas as pd
from torch.utils.data.dataset import Dataset
from torch.utils.data.dataloader import DataLoader
import torch.nn.functional as F  # activation functions live here
from sklearn.metrics import accuracy_score
from sklearn.metrics import f1_score

# Set the random seeds
seed = 1
torch.manual_seed(seed)
torch.cuda.manual_seed(seed)
torch.cuda.manual_seed_all(seed)  # if you are using multi-GPU.
np.random.seed(seed)  # Numpy module.

# Hyperparameters
Max_epoch = 100
batch_size = 6
learning_rate = 0.05
path = "Iris.csv"
train_rate = 0.8

df = pd.read_csv(path)

# Shuffle the rows with pandas
df = df.sample(frac=1)

cols_feature = ['SepalLengthCm', 'SepalWidthCm', 'PetalLengthCm', 'PetalWidthCm']
df_features = df[cols_feature]
df_categories = df['Species']

# Convert the class-name strings to integers.
categories_digitization = []
for i in df_categories:
    if i == 'Iris-setosa':
        categories_digitization.append(0)
    elif i == 'Iris-versicolor':
        categories_digitization.append(1)
    else:
        categories_digitization.append(2)

features = torch.from_numpy(np.float32(df_features.values))
print(features)

# CrossEntropyLoss expects int64 (long) class indices, so fix the dtype explicitly
categories_digitization = np.array(categories_digitization, dtype=np.int64)
categories_digitization = torch.from_numpy(categories_digitization)
print("categories_digitization",categories_digitization)

# Define MyDataset as a subclass of Dataset and override __getitem__() and __len__()
class MyDataset(Dataset):
    # Constructor: store the data and the labels
    def __init__(self, datas, labels):
        self.datas = datas
        self.labels = labels
        # print(df_categories_digitization)

    # index is the sample index requested by the DataLoader; return that sample's data together with its label
    def __getitem__(self, index):
        features = self.datas[index]
        categories_digitization = self.labels[index]
        return features, categories_digitization

    # Return the number of samples; the DataLoader needs it to split the data into batches
    def __len__(self):
        return len(self.labels)

num_train = int(features.shape[0] * train_rate)

train_x = features[:num_train]

train_y = categories_digitization[:num_train]

test_x = features[num_train:]

test_y = categories_digitization[num_train:]

# Wrap the data in MyDataset; each Dataset object holds the data and the labels

train_data = MyDataset(train_x, train_y)

test_data = MyDataset(test_x, test_y)

# Build the DataLoaders
# Note: num_workers=2 starts worker processes; on Windows/macOS run the script under
# `if __name__ == "__main__":` or set num_workers=0.
train_dataloader = DataLoader(train_data, batch_size=batch_size, shuffle=True, drop_last=False, num_workers=2)
test_dataloader = DataLoader(test_data, batch_size=batch_size, shuffle=True, drop_last=False, num_workers=2)

# for i in train_dataloader:
#     print("train_dataloader",i)

for i, data in enumerate(train_dataloader):
    # i is the batch index; data is the batch itself: the features and their labels
    print("train_dataloader: batch {} \n{}".format(i, data))

print("===================\n")

for i, data in enumerate(test_dataloader):
    # i is the batch index; data is the batch itself: the features and their labels
    print("test_dataloader: batch {} \n{}".format(i, data))

class Net(torch.nn.Module):  # subclass torch's Module
    def __init__(self, n_feature, n_hidden, n_output):
        super(Net, self).__init__()  # call the parent class constructor
        # Define the layers
        self.hidden0 = torch.nn.Linear(n_feature, n_hidden)  # hidden layer (linear)
        # self.hidden1 = torch.nn.Linear(n_hidden, n_hidden)  # hidden layer (linear)
        # self.hidden2 = torch.nn.Linear(n_hidden, n_hidden)  # hidden layer (linear)
        # self.hidden3 = torch.nn.Linear(n_hidden, n_hidden)  # hidden layer (linear)
        # self.hidden4 = torch.nn.Linear(n_hidden, n_hidden)  # hidden layer (linear)
        self.predict = torch.nn.Linear(n_hidden, n_output)  # output layer (linear)

    def forward(self, x):  # overrides Module.forward
        # Forward pass: feed the input through the network to get the output
        x = F.relu(self.hidden0(x))  # activation applied to the hidden layer's linear output
        # x = F.relu(self.hidden1(x))  # activation applied to the hidden layer's linear output
        # x = F.relu(self.hidden2(x))  # activation applied to the hidden layer's linear output
        # x = F.relu(self.hidden3(x))  # activation applied to the hidden layer's linear output
        # x = F.relu(self.hidden4(x))  # activation applied to the hidden layer's linear output
        x = self.predict(x)
        # x = F.softmax(self.predict(x))  # output values
        return x

net = Net(n_feature=4, n_hidden=6, n_output=3)
print(net)  # the structure of net

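# The optimizer is the tool that drives training
loss_func = torch.nn.CrossEntropyLoss()  # error between the prediction and the true label (cross-entropy)
optimizer = torch.optim.Adam(net.parameters(), lr=learning_rate)  # pass in all parameters of net and the learning rate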

# training
net.train()
for epoch in range(Max_epoch):
    for step, batch in enumerate(train_dataloader):
        batch_x, batch_y = batch
        print("batch_x:", batch_x)
        pred_y = net(batch_x)
        loss = loss_func(pred_y, batch_y)
        # backward
        optimizer.zero_grad()  # clear the gradients left over from the previous step
        loss.backward()  # backpropagate the error to compute the gradients
        optimizer.step()  # apply the update to net's parameters
        print("epoch:{}, step:{}, loss:{}".format(epoch, step, loss.detach().item()))

# testing
net.eval()  # switch to evaluation mode for testing
test_pred = []
test_true = []
for step, batch in enumerate(test_dataloader):
    batch_x, batch_y = batch
    logistics = net(batch_x)
    # Take the index of the largest value in each row.
    pred_y = logistics.argmax(1)
    test_true.extend(batch_y.numpy().tolist())
    test_pred.extend(pred_y.detach().numpy().tolist())

print(f"Test-set predictions: {test_pred}")
print(f"Test-set true labels: {test_true}")
accuracy = accuracy_score(test_true, test_pred)
f1 = f1_score(test_true, test_pred, average="macro")
print(f"Accuracy: {accuracy}")
print(f"F1: {f1}")

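4. Solution walkthrough

4.1 Data preprocessing

- The dataset is stored as a .csv file -> read it with pandas.

- Samples of the same class are stored contiguously -> shuffle the dataset with the pandas sample function (frac = 1).

- Extract df_features (the features) and df_categories (the classes).

- The values in categories are strings, but a neural network is fed numbers -> write logic that maps each class to its own integer (0, 1, 2).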

import pandas as pd

# Shuffle the rows with pandas
df = df.sample(frac=1)

cols_feature = ['SepalLengthCm', 'SepalWidthCm', 'PetalLengthCm', 'PetalWidthCm']
df_features = df[cols_feature]
df_categories = df['Species']

# Convert the class-name strings to integers.
categories_digitization = []
for i in df_categories:
    if i == 'Iris-setosa':
        categories_digitization.append(0)
    elif i == 'Iris-versicolor':
        categories_digitization.append(1)
    else:
        categories_digitization.append(2)
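One property of the if/elif/else loop above is that any unexpected species name silently lands in class 2. As an alternative sketch (not the method used in this post), the same conversion can be written with an explicit dictionary and `Series.map`, which leaves unknown names as NaN so they can be caught early. The three-row `species` series and the name `label_map` are made up for illustration.

```python
import pandas as pd

# Hypothetical three-row sample standing in for df['Species'].
species = pd.Series(["Iris-setosa", "Iris-virginica", "Iris-versicolor"])

# Explicit mapping: a name missing from the dict becomes NaN
# instead of silently falling into class 2.
label_map = {"Iris-setosa": 0, "Iris-versicolor": 1, "Iris-virginica": 2}
mapped = species.map(label_map)

# Fail early if any species name was not covered by the mapping.
assert mapped.notna().all(), "unmapped species name found"

labels = mapped.astype("int64").tolist()
print(labels)  # [0, 2, 1]
```

The resulting list can be used in place of `categories_digitization` in the rest of the pipeline.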

4.2 Data handling (in PyTorch)

4.2.1 Convert the preprocessed data to tensors (the data type fed to the neural network).

1. Check the type of df_features: df_features is a DataFrame -> df_features.values is an ndarray -> torch.from_numpy() can convert the array to a tensor.

In: type(df_features)

Out: pandas.core.frame.DataFrame

In: type(df_features.values)

Out: numpy.ndarray

2. Check the type of categories_digitization: ⚠️ categories_digitization is a list -> convert it to an array with np.array() -> convert that to a tensor with torch.from_numpy().

import torch

import numpy as np

features = torch.from_numpy(np.float32(df_features.values))

print(features)

categories_digitization = np.array(categories_digitization, dtype=np.int64)  # CrossEntropyLoss expects int64 (long) class indices

categories_digitization = torch.from_numpy(categories_digitization)

print("categories_digitization",categories_digitization)
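The dtypes produced by this conversion matter later: the linear layers work on float32 inputs, and `CrossEntropyLoss` expects class indices as int64 (long). The sketch below, using two hand-copied rows rather than the real file, shows one way to pin both dtypes explicitly. Fixing the label dtype is a precaution on my part, since the default integer width of `np.array` can differ between platforms and NumPy versions.

```python
import numpy as np
import torch

# Two rows copied from the dataset for illustration; NumPy stores them as float64.
feature_values = np.array([[5.1, 3.5, 1.4, 0.2],
                           [7.0, 3.2, 4.7, 1.4]])
label_values = [0, 1]

# Cast features to float32, the default dtype of torch.nn.Linear weights.
features = torch.from_numpy(np.float32(feature_values))

# Request int64 explicitly so the labels become a LongTensor on every platform.
labels = torch.from_numpy(np.array(label_values, dtype=np.int64))

print(features.dtype, labels.dtype)  # torch.float32 torch.int64
```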

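4.2.2 Subclass Dataset: define a dataset that is convenient to use in PyTorch (MyDataset is a name of my own choosing).

from torch.utils.data.dataset import Dataset

- Think first about what would be convenient to pass in when constructing MyDataset. I find passing (features, the class of each feature row) convenient, as shown here.

# Wrap the data in MyDataset; each Dataset object holds the data and the labels

train_data = MyDataset(train_x, train_y)

test_data = MyDataset(test_x, test_y)

- Create your own MyDataset(Dataset) class and choose the constructor arguments to suit yourself.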

# Define MyDataset as a subclass of Dataset and override __getitem__() and __len__()
class MyDataset(Dataset):
    # Constructor: store the data and the labels
    def __init__(self, datas, labels):
        self.datas = datas
        self.labels = labels
        # print(df_categories_digitization)

    # index is the sample index requested by the DataLoader; return that sample's data together with its label
    def __getitem__(self, index):
        features = self.datas[index]
        categories_digitization = self.labels[index]
        return features, categories_digitization

    # Return the number of samples; the DataLoader needs it to split the data into batches
    def __len__(self):
        return len(self.labels)
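When the data is already a pair of tensors, as it is here, PyTorch's built-in `TensorDataset` gives the same (features, label) behavior without a custom class. The sketch below uses random placeholder tensors, not the Iris data, only to show that indexing and length work the way `MyDataset` does. Writing the class by hand, as this post does, is still the better exercise for seeing what a DataLoader needs.

```python
import torch
from torch.utils.data import TensorDataset

# Placeholder tensors: 10 samples with 4 features each, and 10 class indices.
x = torch.rand(10, 4)
y = torch.randint(0, 3, (10,))

# TensorDataset indexes every tensor along the first dimension.
dataset = TensorDataset(x, y)

sample_x, sample_y = dataset[0]
print(len(dataset), sample_x.shape)  # 10 torch.Size([4])
```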

4.2.3 Split into training set : test set = 8 : 2

num_train = int(features.shape[0] * train_rate)

train_x = features[:num_train]

train_y = categories_digitization[:num_train]

test_x = features[num_train:]

test_y = categories_digitization[num_train:]
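Slicing a shuffled dataset gives the right 120/30 sizes but does not guarantee that each class is equally represented in the test set. As an alternative sketch (a different technique from the slicing used in this post), sklearn's `train_test_split` with `stratify` keeps the class proportions. The feature values here are random placeholders with the same shape as Iris.

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Placeholder data shaped like Iris: 150 samples, 4 features, 50 samples per class.
features = np.random.rand(150, 4).astype(np.float32)
labels = np.repeat([0, 1, 2], 50)

# test_size=0.2 mirrors train_rate = 0.8; stratify keeps the 1:1:1 class ratio.
train_x, test_x, train_y, test_y = train_test_split(
    features, labels, test_size=0.2, stratify=labels, random_state=1
)

print(train_x.shape, test_x.shape)  # (120, 4) (30, 4)
print(np.bincount(test_y))          # [10 10 10]
```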

4.2.4 Loading the data and reading it back

from torch.utils.data.dataset import Dataset
from torch.utils.data.dataloader import DataLoader

# Wrap the data in MyDataset; each Dataset object holds the data and the labels
train_data = MyDataset(train_x, train_y)
test_data = MyDataset(test_x, test_y)

# Build the DataLoaders
# Note: num_workers=2 starts worker processes; on Windows/macOS run the script under
# `if __name__ == "__main__":` or set num_workers=0.
train_dataloader = DataLoader(train_data, batch_size=batch_size, shuffle=True, drop_last=False, num_workers=2)
test_dataloader = DataLoader(test_data, batch_size=batch_size, shuffle=True, drop_last=False, num_workers=2)

# for i in train_dataloader:
#     print("train_dataloader",i)

for i, data in enumerate(train_dataloader):
    # i is the batch index; data is the batch itself: the features and their labels
    print("train_dataloader: batch {} \n{}".format(i, data))

print("===================\n")

for i, data in enumerate(test_dataloader):
    # i is the batch index; data is the batch itself: the features and their labels
    print("test_dataloader: batch {} \n{}".format(i, data))
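With 150 samples, an 80/20 split, and batch_size = 6, the loaders should yield 120 / 6 = 20 training batches and 30 / 6 = 5 test batches per epoch. The sketch below checks that arithmetic on zero-filled placeholder tensors. It uses `TensorDataset` and the default `num_workers=0` only to stay self-contained.

```python
import torch
from torch.utils.data import DataLoader, TensorDataset

batch_size = 6

# Zero-filled stand-ins with the same sizes as the real train/test splits.
train_set = TensorDataset(torch.zeros(120, 4), torch.zeros(120, dtype=torch.long))
test_set = TensorDataset(torch.zeros(30, 4), torch.zeros(30, dtype=torch.long))

train_loader = DataLoader(train_set, batch_size=batch_size, shuffle=True, drop_last=False)
test_loader = DataLoader(test_set, batch_size=batch_size, shuffle=False, drop_last=False)

# len() of a DataLoader is the number of batches per epoch.
print(len(train_loader), len(test_loader))  # 20 5

# Each batch is a (features, labels) pair.
first_x, first_y = next(iter(train_loader))
print(first_x.shape, first_y.shape)  # torch.Size([6, 4]) torch.Size([6])
```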

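4.3 Building the neural network (in PyTorch)

- Working backward from the desired result to decide the current step sometimes works wonders.

- The dataset has 4 features and 3 categories -> input features = 4 and output classes = 3.

- A neural network usually needs hidden layers; choose the number of hidden layers based on the results you observe.

- The calling code has to fix the number of nodes in each layer (input layer: 4, hidden layer: 6, output layer: 3).

net = Net(n_feature=4, n_hidden=6, n_output=3)

- Define your own network class (it must subclass torch.nn.Module and call Module's __init__() inside its own __init__()). A natural question: this is a multi-class problem, so why is softmax() not applied in the forward pass? Section 4.4 answers it.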

import torch.nn.functional as F  # activation functions live here

class Net(torch.nn.Module):  # subclass torch's Module
    def __init__(self, n_feature, n_hidden, n_output):
        super(Net, self).__init__()  # call the parent class constructor
        # Define the layers
        self.hidden0 = torch.nn.Linear(n_feature, n_hidden)  # hidden layer (linear)
        # self.hidden1 = torch.nn.Linear(n_hidden, n_hidden)  # hidden layer (linear)
        # self.hidden2 = torch.nn.Linear(n_hidden, n_hidden)  # hidden layer (linear)
        # self.hidden3 = torch.nn.Linear(n_hidden, n_hidden)  # hidden layer (linear)
        # self.hidden4 = torch.nn.Linear(n_hidden, n_hidden)  # hidden layer (linear)
        self.predict = torch.nn.Linear(n_hidden, n_output)  # output layer (linear)

    def forward(self, x):  # overrides Module.forward
        # Forward pass: feed the input through the network to get the output
        x = F.relu(self.hidden0(x))  # activation applied to the hidden layer's linear output
        # x = F.relu(self.hidden1(x))  # activation applied to the hidden layer's linear output
        # x = F.relu(self.hidden2(x))  # activation applied to the hidden layer's linear output
        # x = F.relu(self.hidden3(x))  # activation applied to the hidden layer's linear output
        # x = F.relu(self.hidden4(x))  # activation applied to the hidden layer's linear output
        x = self.predict(x)
        # x = F.softmax(self.predict(x))  # output values
        return x
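A quick sanity check on the 4 -> 6 -> 3 architecture is to push one batch through it and count the parameters. The sketch below rebuilds the same layer sizes with `torch.nn.Sequential` as a stand-in for the `Net` class, purely to keep the example self-contained. The expected count is (4 x 6 + 6) + (6 x 3 + 3) = 51.

```python
import torch

# Stand-in for Net(n_feature=4, n_hidden=6, n_output=3): same layers, same order.
net = torch.nn.Sequential(
    torch.nn.Linear(4, 6),
    torch.nn.ReLU(),
    torch.nn.Linear(6, 3),
)

# One batch of 6 samples with 4 features each (batch_size = 6 in this post).
batch = torch.rand(6, 4)
logits = net(batch)

# Weights plus biases of both linear layers.
n_params = sum(p.numel() for p in net.parameters())

print(logits.shape, n_params)  # torch.Size([6, 3]) 51
```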

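4.4 The loss function and the optimizer (in PyTorch)

- The loss function is cross-entropy. This also answers why x = F.softmax(self.predict(x)) is not used: PyTorch's CrossEntropyLoss applies log-softmax internally (it combines LogSoftmax and NLLLoss), so the network should output raw scores (logits).

- The optimizer is Adam.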

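# The optimizer is the tool that drives training
loss_func = torch.nn.CrossEntropyLoss()  # error between the prediction and the true label (cross-entropy)
optimizer = torch.optim.Adam(net.parameters(), lr=learning_rate)  # pass in all parameters of net and the learning rate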
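To see that the softmax really is inside the loss, the sketch below compares `CrossEntropyLoss` on raw logits with two manual versions: `log_softmax` followed by `nll_loss`, and the textbook formula, the mean of -log p(true class). The logits and targets are made-up values.

```python
import torch
import torch.nn.functional as F

torch.manual_seed(0)
logits = torch.randn(6, 3)                  # raw network outputs for 6 samples
targets = torch.tensor([0, 2, 1, 1, 0, 2])  # true class indices

# Built-in loss, applied directly to the logits.
loss_builtin = torch.nn.CrossEntropyLoss()(logits, targets)

# Same computation in two explicit steps.
loss_two_step = F.nll_loss(F.log_softmax(logits, dim=1), targets)

# Same computation from the definition: mean of -log p(true class).
probs = torch.softmax(logits, dim=1)
p_true = probs[torch.arange(6), targets]
loss_by_hand = -torch.log(p_true).mean()

print(torch.allclose(loss_builtin, loss_two_step))  # True
print(torch.allclose(loss_builtin, loss_by_hand))   # True
```

Applying `F.softmax` in `forward` and then passing the result to `CrossEntropyLoss` would therefore apply softmax twice.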

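4.5 Training the neural network (in PyTorch)

- epoch: set the maximum number of epochs.

- train_dataloader: iterate over the data bound to train_dataloader.

- net: feed the feature values into net to predict the class scores.

- loss: pass the predictions and the true labels to the loss function.

- backward: during backpropagation, first clear the gradients the optimizer has left from the previous step; then backpropagate the loss to compute the gradients; finally apply the resulting update to net's parameters.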

# training
net.train()
for epoch in range(Max_epoch):
    for step, batch in enumerate(train_dataloader):
        batch_x, batch_y = batch
        print("batch_x:", batch_x)
        pred_y = net(batch_x)
        loss = loss_func(pred_y, batch_y)
        # backward
        optimizer.zero_grad()  # clear the gradients left over from the previous step
        loss.backward()  # backpropagate the error to compute the gradients
        optimizer.step()  # apply the update to net's parameters
        print("epoch:{}, step:{}, loss:{}".format(epoch, step, loss.detach().item()))
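The reason `optimizer.zero_grad()` comes before `loss.backward()` is that PyTorch adds new gradients to whatever is already stored in `.grad`. The toy sketch below uses a single made-up parameter `w` and the function 2w, whose gradient is always 2, to make the accumulation visible.

```python
import torch

# One toy parameter; d(2w)/dw is 2 regardless of the value of w.
w = torch.tensor([1.0], requires_grad=True)
optimizer = torch.optim.SGD([w], lr=0.1)

(w * 2).sum().backward()
grad_first = w.grad.clone()          # 2

(w * 2).sum().backward()             # no zero_grad() in between
grad_accumulated = w.grad.clone()    # 2 + 2 = 4

optimizer.zero_grad()                # reset before the next backward pass
(w * 2).sum().backward()
grad_after_reset = w.grad.clone()    # 2 again

print(grad_first.item(), grad_accumulated.item(), grad_after_reset.item())  # 2.0 4.0 2.0
```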

4.6 神经网络的测试(Pytorch环境下)

- 需要使用sklearn的评价函数,需要传入的值时list类型。-> 创建 test_pred[ ] 和 test_true[ ] 来保存结果,用于传入sklearn中的函数。

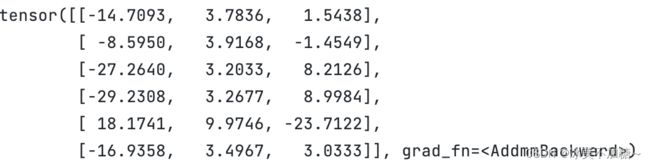

- ⚠️:交叉熵损失函数以后的结果如下图所示:需要从中选择最大值,所以需要使用: pred_y = logistics.argmax(1)

# testing
net.eval()  # switch to evaluation mode for testing
test_pred = []
test_true = []
for step, batch in enumerate(test_dataloader):
    batch_x, batch_y = batch
    logistics = net(batch_x)
    print("logistics",logistics)
    # Take the index of the largest value in each row.
    pred_y = logistics.argmax(1)
    print("pred_y",pred_y)
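Taking argmax directly on the logits is enough because softmax preserves the ordering of the scores within each row. The sketch below checks this on two made-up rows of logits. Softmax would only be needed at test time if the class probabilities themselves were of interest.

```python
import torch

# Two made-up rows of logits, one score per class.
logits = torch.tensor([[2.0, -1.0, 0.5],
                       [0.1, 0.2, 3.0]])

# Softmax turns each row into probabilities that sum to 1.
probs = torch.softmax(logits, dim=1)

# The index of the largest entry is the same before and after softmax.
pred_from_logits = logits.argmax(dim=1)
pred_from_probs = probs.argmax(dim=1)

print(pred_from_logits.tolist(), pred_from_probs.tolist())  # [0, 2] [0, 2]
```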

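4.7 Getting Accuracy and F1 with sklearn

from sklearn.metrics import accuracy_score
from sklearn.metrics import f1_score

# These two lines run inside the test loop of section 4.6: collect the labels and predictions batch by batch
test_true.extend(batch_y.numpy().tolist())
test_pred.extend(pred_y.detach().numpy().tolist())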

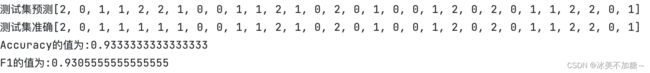

print(f"Test-set predictions: {test_pred}")
print(f"Test-set true labels: {test_true}")
accuracy = accuracy_score(test_true, test_pred)
f1 = f1_score(test_true, test_pred, average="macro")
print(f"Accuracy: {accuracy}")
print(f"F1: {f1}")
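With average="macro", `f1_score` computes one F1 value per class and takes their unweighted mean. The sketch below works through a made-up six-sample example (not results from this experiment) where one class-1 sample is misclassified as class 2. The per-class F1 values are 1, 2/3, and 0.8, so the macro F1 is about 0.822, while the accuracy is 5/6.

```python
from sklearn.metrics import accuracy_score, f1_score

# Made-up labels: the fourth sample (true class 1) is predicted as class 2.
y_true = [0, 0, 1, 1, 2, 2]
y_pred = [0, 0, 1, 2, 2, 2]

# average=None returns one F1 per class, in label order 0, 1, 2.
per_class = f1_score(y_true, y_pred, average=None)

# average="macro" is the plain mean of the per-class values.
macro = f1_score(y_true, y_pred, average="macro")
accuracy = accuracy_score(y_true, y_pred)

print(per_class)
print(round(macro, 4), round(accuracy, 4))  # 0.8222 0.8333
```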

4.8 Set random seeds so that every run gives the same result

# Set the random seeds
seed = 1
torch.manual_seed(seed)
torch.cuda.manual_seed(seed)
torch.cuda.manual_seed_all(seed)  # if you are using multi-GPU.
np.random.seed(seed)  # Numpy module.
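The seeding calls can be wrapped in a small helper and checked by drawing random numbers twice. In the sketch below, the function name `set_seed` and the extra `random.seed` call for Python's own generator are my additions, not part of the original script.

```python
import random

import numpy as np
import torch


def set_seed(seed):
    """Seed Python, NumPy, and PyTorch (CPU and, if present, all GPUs)."""
    random.seed(seed)
    np.random.seed(seed)
    torch.manual_seed(seed)
    torch.cuda.manual_seed_all(seed)  # silently ignored when CUDA is unavailable


# Re-seeding with the same value reproduces the same random draws.
set_seed(1)
a_torch, a_numpy = torch.rand(3), np.random.rand(3)
set_seed(1)
b_torch, b_numpy = torch.rand(3), np.random.rand(3)

print(torch.equal(a_torch, b_torch), np.array_equal(a_numpy, b_numpy))  # True True
```

Since `df.sample(frac=1)` is called without `random_state`, it draws from NumPy's global generator, so the seed has to be set before the shuffle for the train/test split to be repeatable.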

4.9 Hyperparameter definitions and all imports

import torch
import numpy as np
import pandas as pd
from torch.utils.data.dataset import Dataset
from torch.utils.data.dataloader import DataLoader
import torch.nn.functional as F  # activation functions live here
from sklearn.metrics import accuracy_score
from sklearn.metrics import f1_score

# Hyperparameters
Max_epoch = 100
batch_size = 6
learning_rate = 0.05
path = "Iris.csv"
train_rate = 0.8

5. Hyperparameter tuning and final results

6. Appendix (dataset)

Id,SepalLengthCm,SepalWidthCm,PetalLengthCm,PetalWidthCm,Species

1,5.1,3.5,1.4,0.2,Iris-setosa

2,4.9,3.0,1.4,0.2,Iris-setosa

3,4.7,3.2,1.3,0.2,Iris-setosa

4,4.6,3.1,1.5,0.2,Iris-setosa

5,5.0,3.6,1.4,0.2,Iris-setosa

6,5.4,3.9,1.7,0.4,Iris-setosa

7,4.6,3.4,1.4,0.3,Iris-setosa

8,5.0,3.4,1.5,0.2,Iris-setosa

9,4.4,2.9,1.4,0.2,Iris-setosa

10,4.9,3.1,1.5,0.1,Iris-setosa

11,5.4,3.7,1.5,0.2,Iris-setosa

12,4.8,3.4,1.6,0.2,Iris-setosa

13,4.8,3.0,1.4,0.1,Iris-setosa

14,4.3,3.0,1.1,0.1,Iris-setosa

15,5.8,4.0,1.2,0.2,Iris-setosa

16,5.7,4.4,1.5,0.4,Iris-setosa

17,5.4,3.9,1.3,0.4,Iris-setosa

18,5.1,3.5,1.4,0.3,Iris-setosa

19,5.7,3.8,1.7,0.3,Iris-setosa

20,5.1,3.8,1.5,0.3,Iris-setosa

21,5.4,3.4,1.7,0.2,Iris-setosa

22,5.1,3.7,1.5,0.4,Iris-setosa

23,4.6,3.6,1.0,0.2,Iris-setosa

24,5.1,3.3,1.7,0.5,Iris-setosa

25,4.8,3.4,1.9,0.2,Iris-setosa

26,5.0,3.0,1.6,0.2,Iris-setosa

27,5.0,3.4,1.6,0.4,Iris-setosa

28,5.2,3.5,1.5,0.2,Iris-setosa

29,5.2,3.4,1.4,0.2,Iris-setosa

30,4.7,3.2,1.6,0.2,Iris-setosa

31,4.8,3.1,1.6,0.2,Iris-setosa

32,5.4,3.4,1.5,0.4,Iris-setosa

33,5.2,4.1,1.5,0.1,Iris-setosa

34,5.5,4.2,1.4,0.2,Iris-setosa

35,4.9,3.1,1.5,0.1,Iris-setosa

36,5.0,3.2,1.2,0.2,Iris-setosa

37,5.5,3.5,1.3,0.2,Iris-setosa

38,4.9,3.1,1.5,0.1,Iris-setosa

39,4.4,3.0,1.3,0.2,Iris-setosa

40,5.1,3.4,1.5,0.2,Iris-setosa

41,5.0,3.5,1.3,0.3,Iris-setosa

42,4.5,2.3,1.3,0.3,Iris-setosa

43,4.4,3.2,1.3,0.2,Iris-setosa

44,5.0,3.5,1.6,0.6,Iris-setosa

45,5.1,3.8,1.9,0.4,Iris-setosa

46,4.8,3.0,1.4,0.3,Iris-setosa

47,5.1,3.8,1.6,0.2,Iris-setosa

48,4.6,3.2,1.4,0.2,Iris-setosa

49,5.3,3.7,1.5,0.2,Iris-setosa

50,5.0,3.3,1.4,0.2,Iris-setosa

51,7.0,3.2,4.7,1.4,Iris-versicolor

52,6.4,3.2,4.5,1.5,Iris-versicolor

53,6.9,3.1,4.9,1.5,Iris-versicolor

54,5.5,2.3,4.0,1.3,Iris-versicolor

55,6.5,2.8,4.6,1.5,Iris-versicolor

56,5.7,2.8,4.5,1.3,Iris-versicolor

57,6.3,3.3,4.7,1.6,Iris-versicolor

58,4.9,2.4,3.3,1.0,Iris-versicolor

59,6.6,2.9,4.6,1.3,Iris-versicolor

60,5.2,2.7,3.9,1.4,Iris-versicolor

61,5.0,2.0,3.5,1.0,Iris-versicolor

62,5.9,3.0,4.2,1.5,Iris-versicolor

63,6.0,2.2,4.0,1.0,Iris-versicolor

64,6.1,2.9,4.7,1.4,Iris-versicolor

65,5.6,2.9,3.6,1.3,Iris-versicolor

66,6.7,3.1,4.4,1.4,Iris-versicolor

67,5.6,3.0,4.5,1.5,Iris-versicolor

68,5.8,2.7,4.1,1.0,Iris-versicolor

69,6.2,2.2,4.5,1.5,Iris-versicolor

70,5.6,2.5,3.9,1.1,Iris-versicolor

71,5.9,3.2,4.8,1.8,Iris-versicolor

72,6.1,2.8,4.0,1.3,Iris-versicolor

73,6.3,2.5,4.9,1.5,Iris-versicolor

74,6.1,2.8,4.7,1.2,Iris-versicolor

75,6.4,2.9,4.3,1.3,Iris-versicolor

76,6.6,3.0,4.4,1.4,Iris-versicolor

77,6.8,2.8,4.8,1.4,Iris-versicolor

78,6.7,3.0,5.0,1.7,Iris-versicolor

79,6.0,2.9,4.5,1.5,Iris-versicolor

80,5.7,2.6,3.5,1.0,Iris-versicolor

81,5.5,2.4,3.8,1.1,Iris-versicolor

82,5.5,2.4,3.7,1.0,Iris-versicolor

83,5.8,2.7,3.9,1.2,Iris-versicolor

84,6.0,2.7,5.1,1.6,Iris-versicolor

85,5.4,3.0,4.5,1.5,Iris-versicolor

86,6.0,3.4,4.5,1.6,Iris-versicolor

87,6.7,3.1,4.7,1.5,Iris-versicolor

88,6.3,2.3,4.4,1.3,Iris-versicolor

89,5.6,3.0,4.1,1.3,Iris-versicolor

90,5.5,2.5,4.0,1.3,Iris-versicolor

91,5.5,2.6,4.4,1.2,Iris-versicolor

92,6.1,3.0,4.6,1.4,Iris-versicolor

93,5.8,2.6,4.0,1.2,Iris-versicolor

94,5.0,2.3,3.3,1.0,Iris-versicolor

95,5.6,2.7,4.2,1.3,Iris-versicolor

96,5.7,3.0,4.2,1.2,Iris-versicolor

97,5.7,2.9,4.2,1.3,Iris-versicolor

98,6.2,2.9,4.3,1.3,Iris-versicolor

99,5.1,2.5,3.0,1.1,Iris-versicolor

100,5.7,2.8,4.1,1.3,Iris-versicolor

101,6.3,3.3,6.0,2.5,Iris-virginica

102,5.8,2.7,5.1,1.9,Iris-virginica

103,7.1,3.0,5.9,2.1,Iris-virginica

104,6.3,2.9,5.6,1.8,Iris-virginica

105,6.5,3.0,5.8,2.2,Iris-virginica

106,7.6,3.0,6.6,2.1,Iris-virginica

107,4.9,2.5,4.5,1.7,Iris-virginica

108,7.3,2.9,6.3,1.8,Iris-virginica

109,6.7,2.5,5.8,1.8,Iris-virginica

110,7.2,3.6,6.1,2.5,Iris-virginica

111,6.5,3.2,5.1,2.0,Iris-virginica

112,6.4,2.7,5.3,1.9,Iris-virginica

113,6.8,3.0,5.5,2.1,Iris-virginica

114,5.7,2.5,5.0,2.0,Iris-virginica

115,5.8,2.8,5.1,2.4,Iris-virginica

116,6.4,3.2,5.3,2.3,Iris-virginica

117,6.5,3.0,5.5,1.8,Iris-virginica

118,7.7,3.8,6.7,2.2,Iris-virginica

119,7.7,2.6,6.9,2.3,Iris-virginica

120,6.0,2.2,5.0,1.5,Iris-virginica

121,6.9,3.2,5.7,2.3,Iris-virginica

122,5.6,2.8,4.9,2.0,Iris-virginica

123,7.7,2.8,6.7,2.0,Iris-virginica

124,6.3,2.7,4.9,1.8,Iris-virginica

125,6.7,3.3,5.7,2.1,Iris-virginica

126,7.2,3.2,6.0,1.8,Iris-virginica

127,6.2,2.8,4.8,1.8,Iris-virginica

128,6.1,3.0,4.9,1.8,Iris-virginica

129,6.4,2.8,5.6,2.1,Iris-virginica

130,7.2,3.0,5.8,1.6,Iris-virginica

131,7.4,2.8,6.1,1.9,Iris-virginica

132,7.9,3.8,6.4,2.0,Iris-virginica

133,6.4,2.8,5.6,2.2,Iris-virginica

134,6.3,2.8,5.1,1.5,Iris-virginica

135,6.1,2.6,5.6,1.4,Iris-virginica

136,7.7,3.0,6.1,2.3,Iris-virginica

137,6.3,3.4,5.6,2.4,Iris-virginica

138,6.4,3.1,5.5,1.8,Iris-virginica

139,6.0,3.0,4.8,1.8,Iris-virginica

140,6.9,3.1,5.4,2.1,Iris-virginica

141,6.7,3.1,5.6,2.4,Iris-virginica

142,6.9,3.1,5.1,2.3,Iris-virginica

143,5.8,2.7,5.1,1.9,Iris-virginica

144,6.8,3.2,5.9,2.3,Iris-virginica

145,6.7,3.3,5.7,2.5,Iris-virginica

146,6.7,3.0,5.2,2.3,Iris-virginica

147,6.3,2.5,5.0,1.9,Iris-virginica

148,6.5,3.0,5.2,2.0,Iris-virginica

149,6.2,3.4,5.4,2.3,Iris-virginica

150,5.9,3.0,5.1,1.8,Iris-virginica