A Survey of Few-Shot Learning Research

Taxonomy of Few-Shot Learning Methods

- 基于模型微调的小样本学习方法

- 基于数据增强的小样本学习

-

- 基于无标签数据的方法

- 基于数据合成的方法

- 基于特征增强的方法

- 基于迁移学习的小样本学习

-

- 基于度量学习的方法

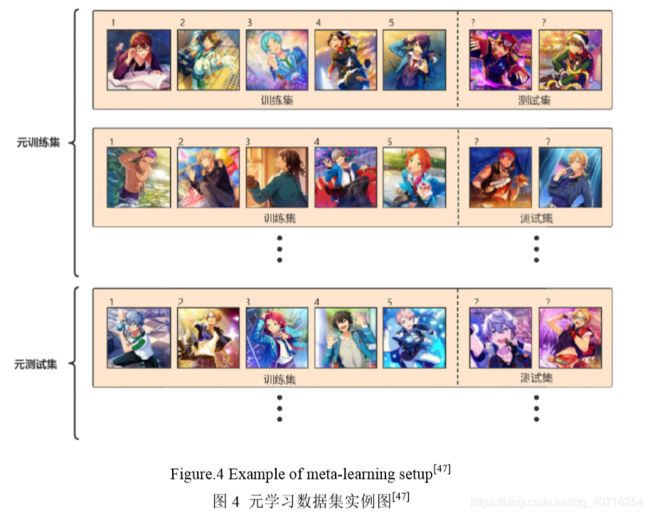

- 基于元学习的方法

- 基于图神经网络的方法

- 展望

Goal of few-shot learning: to learn how to solve a problem from only a small number of samples.

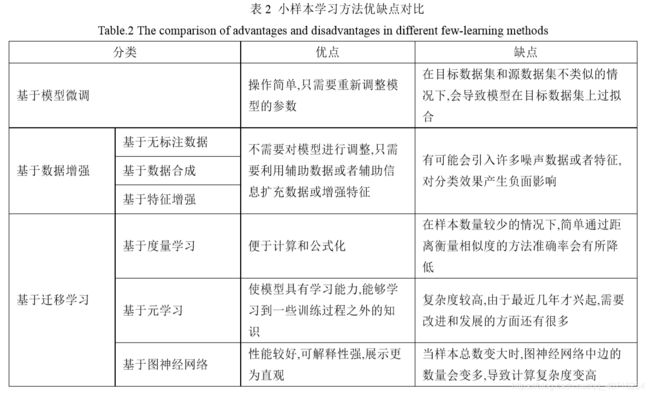

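This survey divides few-shot learning into three categories: methods based on model fine-tuning, on data augmentation, and on transfer learning.

Few-shot learning based on model fine-tuning

A model is pretrained on large-scale data, and then the parameters of its fully connected layers or top few layers are fine-tuned on the target few-shot dataset to obtain the fine-tuned model.

Prerequisite: the target dataset and the source dataset have similar distributions.

Limitation: in practice the target and source datasets often do not have similar distributions, in which case the model overfits on the target dataset.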
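The fine-tuning recipe above (freeze the pretrained lower layers, update only the top layer on the few labeled target samples) can be sketched as follows. This is a toy illustration rather than any paper's implementation: `frozen_features` is a hypothetical stand-in for a pretrained feature extractor, and the "top layer" is a single logistic unit trained by plain gradient descent.

```python
import math

def frozen_features(x):
    """Hypothetical stand-in for pretrained lower layers; never updated."""
    return [x[0] + x[1], x[0] - x[1]]

def fine_tune_head(samples, labels, lr=0.5, epochs=200):
    """Train only the top (logistic) layer on the few-shot target data."""
    w, b = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for x, y in zip(samples, labels):
            f = frozen_features(x)
            z = sum(wi * fi for wi, fi in zip(w, f)) + b
            p = 1.0 / (1.0 + math.exp(-z))
            g = p - y  # gradient of the log loss with respect to z
            w = [wi - lr * g * fi for wi, fi in zip(w, f)]
            b -= lr * g
    return w, b

def predict(w, b, x):
    f = frozen_features(x)
    return 1 if sum(wi * fi for wi, fi in zip(w, f)) + b > 0 else 0

# Four labeled target samples, two per class.
support = [(1.0, 1.0), (2.0, 2.0), (-1.0, -1.0), (-2.0, -1.0)]
targets = [1, 1, 0, 0]
w, b = fine_tune_head(support, targets)
```

Because only `w` and `b` change, the number of trainable parameters stays small, which is the point of fine-tuning just the top layers when labeled data are scarce.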

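- In 2018, Howard et al. proposed Universal Language Model Fine-tuning (ULMFit). Unlike the other models discussed here, it fine-tunes a pretrained language model (itself a neural network, LSTM-based in the paper) for text classification.

The model's key innovation is to vary the learning rate when fine-tuning the language model, mainly in two respects. 1) Conventional methods use the same learning rate for every layer, whereas in ULMFit each layer of the language model has its own learning rate. The lower layers represent general features that need little adjustment, so they use a smaller learning rate; higher-layer features are more specific and better reflect what is unique to the task and the data, so they are learned with a larger learning rate.

Paper: Howard J, Ruder S. Universal language model fine-tuning for text classification. arXiv preprint arXiv:1801.06146, 2018.

- Nakamura et al. proposed a fine-tuning method built on the following mechanisms: 1) use a lower learning rate when retraining on the few-shot classes; 2) use an adaptive gradient optimizer during fine-tuning; 3) when the source and target datasets differ substantially, fine-tune the entire network.

Paper: Nakamura A, Harada T. Revisiting Fine-tuning for Few-shot Learning. 2019.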
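ULMFit's layer-wise learning rates described above can be illustrated with a small helper. The function name and arguments are hypothetical. The decay factor of 2.6 between adjacent layers is the heuristic reported in the ULMFit paper, not something stated in these notes, so treat it as a tunable assumption.

```python
def layerwise_learning_rates(top_lr, num_layers, decay=2.6):
    """Return one learning rate per layer, ordered from lowest to top layer.

    The top layer uses top_lr; each layer below it uses the rate of the
    layer above divided by `decay` (assumed value, see lead-in).
    """
    return [top_lr / decay ** (num_layers - 1 - i) for i in range(num_layers)]

rates = layerwise_learning_rates(top_lr=0.01, num_layers=3)
```

In a deep learning framework these values would typically be passed as per-layer optimizer settings, so that general lower-layer features move slowly while task-specific upper layers adapt faster.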

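Few-shot learning based on data augmentation

Data augmentation uses auxiliary data or auxiliary information to expand the original few-shot dataset or to enhance its features. Data expansion adds new data to the original dataset, either unlabeled data or synthesized labeled data. Feature augmentation adds features that ease classification to the feature space of the original samples, increasing feature diversity.

Methods based on unlabeled data

Methods based on unlabeled data expand the few-shot dataset with unlabeled data. Common approaches include semi-supervised learning [12] [13] and transductive learning [15].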

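- Semi-supervised learning

Wang et al. [106], following the idea of semi-supervised learning and inspired by the transferability of CNNs, proposed an additional unsupervised meta-training stage that exposes multiple top-layer units to a large amount of unlabeled real-world data. By encouraging these units to learn diverse sets of low-density separators over the unlabeled data, the model captures a more general and richer description of the visual world and decouples the units from any specific set of categories (that is, they no longer represent only one particular dataset). In 2018, Boney et al. [14] proposed using the MAML [45] model for semi-supervised learning, adjusting the parameters of the embedding function with unlabeled data and the parameters of the classifier with labeled data.

In 2018, Ren et al. [35] improved on Prototypical Networks [34] by incorporating unlabeled data, achieving higher accuracy.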

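- Transductive learning

Transductive learning assumes that the unlabeled data are the test data, and aims for the best generalization on exactly those unlabeled samples. Liu et al. [16] took a transductive approach and in 2019 proposed the Transductive Propagation Network for few-shot problems. It has four stages: feature embedding, graph construction, label propagation, and loss computation.

Hou et al. [113] also proposed the Cross Attention Network, which follows the transductive idea. First, an attention mechanism generates a cross attention map for each pair of class feature and query feature and uses it to sample the features, highlighting the target object regions and making the extracted features more discriminative. Second, to ease the shortage of data, a transductive inference algorithm iteratively uses the unlabeled query set to augment the support set, making the class features more representative.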
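The graph construction and label propagation stages of the Transductive Propagation Network mentioned above can be sketched on raw feature vectors. This is a simplified illustration under stated assumptions: the embedding network is omitted, the Gaussian kernel width `sigma` is fixed rather than learned, and propagation uses the classical iterative update F ← αSF + (1 − α)Y instead of a closed-form solve. All names are hypothetical.

```python
import math

def propagate_labels(points, labels, num_classes, sigma=1.0, alpha=0.9, steps=50):
    """labels[i] is a class index for support points and None for query points."""
    n = len(points)
    # Graph construction: Gaussian similarity between every pair, no self-loops.
    w = [[0.0 if i == j else
          math.exp(-sum((a - b) ** 2 for a, b in zip(points[i], points[j]))
                   / (2 * sigma ** 2))
          for j in range(n)] for i in range(n)]
    deg = [sum(row) for row in w]
    # Symmetric normalization S = D^{-1/2} W D^{-1/2}.
    s = [[w[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)] for i in range(n)]
    # One-hot label matrix; query rows are all zeros.
    y = [[1.0 if labels[i] == c else 0.0 for c in range(num_classes)]
         for i in range(n)]
    f = [row[:] for row in y]
    # Label propagation: spread scores along edges, anchored to known labels.
    for _ in range(steps):
        f = [[alpha * sum(s[i][k] * f[k][c] for k in range(n)) + (1 - alpha) * y[i][c]
              for c in range(num_classes)] for i in range(n)]
    return [max(range(num_classes), key=lambda c: f[i][c]) for i in range(n)]

points = [(0.0, 0.0), (0.2, 0.0), (5.0, 5.0), (5.2, 5.0), (0.1, 0.1), (5.1, 5.1)]
labels = [0, 0, 1, 1, None, None]  # the last two points are unlabeled queries
predictions = propagate_labels(points, labels, num_classes=2)
```

All query points are labeled jointly through the graph, which is what distinguishes the transductive setting from classifying each query in isolation.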

基于数据合成的方法

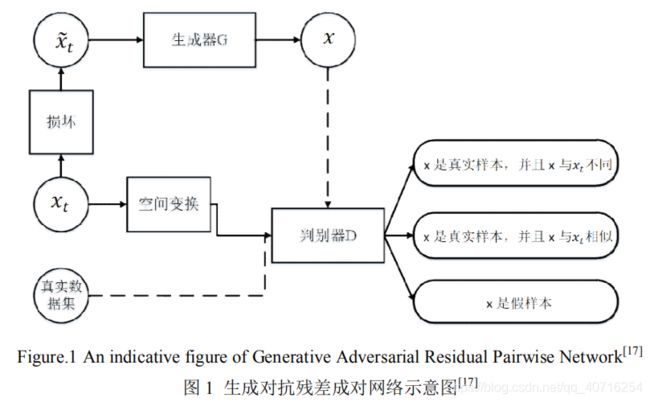

基于数据合成的方法是指为小样本类别合成新的带标签数据来扩充训练数据,常用的算法有生成对抗网络(Generative Adversarial Nets)[89]等。

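- Mehrotra et al. [17] applied GANs to few-shot learning and proposed the Generative Adversarial Residual Pairwise Network for one-shot learning.

- Hariharan et al. [92] proposed a method with two stages: representation learning and few-shot learning. In the representation learning stage, a general representation model is learned on a source dataset with abundant data. In the few-shot learning stage, the model is fine-tuned on new classes with little data, and the authors propose generating new data to augment the few-shot classes. They assume that a transformation exists between two samples of the same class; given a sample x of a new class, a generator G can apply such a transformation to produce new samples of that class.

- Wang et al. [105] combined meta-learning with data generation: a data generation model produces virtual data to increase sample diversity, and the generation model and the classification algorithm are trained jointly end to end within a meta-learning method. Variations in attributes and features of existing images, such as lighting, pose, and position shifts, are transferred to new samples, producing new sample images with different variations and thereby expanding the image set.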

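Shortcomings of existing data generation methods: 1. They fail to capture complex data distributions. 2. They do not generalize to few-shot classes. 3. The generated features are not interpretable.

- To address these problems, Xian et al. [94] combined a variational autoencoder (VAE) with a GAN into a new network, f-VAEGAN-D2. While performing few-shot image classification, this network can also express the feature space of the generated samples in natural language, which makes it interpretable.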

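- Chen et al. [104] continued this line of work and proposed using meta-learning to interpolate support-set images with images from the training set, forming an augmented support set.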

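Methods based on feature augmentation

The two kinds of methods above both use auxiliary data to enrich the sample space. Alternatively, sample diversity can be improved by augmenting the feature space of the samples, since a key to few-shot learning is obtaining a feature extractor that generalizes well.

- Dixit et al. [18] proposed AGA (Attribute-Guided Augmentation).

- Schwartz et al. [19] proposed the Delta-encoder.

- Shen et al. [103] proposed replacing the fixed attention mechanism with an uncertain attention mechanism M.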

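Few-shot learning based on transfer learning

Transfer learning means using old knowledge to learn new knowledge; its main goal is to transfer what has already been learned to a new domain quickly [21]. The stronger the relationship between the source and target domains, the better transfer learning works [22]. In transfer learning, the data are divided into three parts: a training set, a support set, and a query set. The training set is the source dataset and generally contains a large amount of labeled data; the support set consists of the training samples in the target domain and contains a small amount of labeled data; the query set consists of the test samples in the target domain.

Methods based on metric learning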

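- In 2015, Koch et al. [30] were the first to propose Siamese Neural Networks for one-shot image recognition. A Siamese network is a similarity-metric model suited to recognition when there are many classes but few samples per class. It learns a metric from data and then uses the learned metric to compare and match samples of unseen classes. The two twin networks share one set of parameters and weights; the main idea is to map inputs into a target space with an embedding function and compute similarity with a simple distance function. Training pulls pairs of samples from the same class closer together and pushes pairs from different classes farther apart.

- Vinyals et al. [31] explored one-shot learning further and in 2016 proposed Matching Networks, which map a small labeled support set and an unlabeled sample to that sample's label.

- Wang et al. [33] argued that the class labels of images should also be taken into account and proposed the Multi-attention Network. The model embeds image labels into a vector space with GloVe embeddings, builds an attention mechanism between label semantic features and image features to find which part (single attention) or parts (multi-attention) of the image carry the features of that label, updates the image vector with the attention, and finally computes similarity with a distance function to obtain the classification result.

- All of the networks above target one-shot learning. To address the few-shot problem more generally, Snell et al. [34] proposed Prototypical Networks in 2017. The authors assume that each class has a prototype in the vector space, also called the class center. A prototypical network uses a deep neural network to map images to vectors and, for the samples of one class, takes the mean of their vectors as the prototype of that class. By continually training the model and minimizing the loss function, samples of the same class are drawn closer together and samples of different classes pushed farther apart, which updates the parameters of the embedding function. The idea is shown in Figure 6: given an input sample x, the vector of x is compared with each class prototype by Euclidean distance.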
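The prototype computation and distance comparison described for Prototypical Networks can be sketched as follows. The embedding network is omitted, so the inputs are assumed to be already-embedded vectors. Turning distances into probabilities with a softmax over negative squared Euclidean distances follows the original paper rather than these notes; function names are hypothetical.

```python
import math

def class_prototypes(support):
    """support maps a class label to a list of embedding vectors."""
    prototypes = {}
    for label, vectors in support.items():
        dim = len(vectors[0])
        # The prototype is the mean of the class's embedded support samples.
        prototypes[label] = [sum(v[d] for v in vectors) / len(vectors)
                             for d in range(dim)]
    return prototypes

def classify(query, prototypes):
    """Return the nearest-prototype label and a softmax over negative distances."""
    dists = {label: sum((q - p) ** 2 for q, p in zip(query, proto))
             for label, proto in prototypes.items()}
    smallest = min(dists.values())  # subtract for numerical stability
    exps = {label: math.exp(-(d - smallest)) for label, d in dists.items()}
    total = sum(exps.values())
    probs = {label: e / total for label, e in exps.items()}
    return max(probs, key=probs.get), probs

support = {"cat": [[0, 0], [2, 0]], "dog": [[10, 10], [12, 10]]}
prototypes = class_prototypes(support)
label, probs = classify([1, 1], prototypes)
```

During training, the negative log of the true class's probability would serve as the loss that updates the embedding function.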

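Methods based on meta-learning

- In 2017, Munkhdalai et al. [44] again used a meta-learning framework for one-shot classification and proposed a new model, Meta Networks. Meta Networks consist of two main parts, a base-learner and a meta-learner, plus an additional memory block that helps the model learn quickly.

- In 2017, Finn et al. [45] proposed Model-Agnostic Meta-Learning (MAML). MAML aims to find the parameters of the neural network that are most sensitive to each task, so that fine-tuning those parameters makes the model's loss function converge quickly.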
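The MAML idea above, learning an initialization from which one gradient step adapts well to each task, can be sketched on a toy problem. This is a minimal illustration, not the paper's setup: the model is a one-parameter regressor y = θx, each task is a different slope, and the derivative of the adapted parameter with respect to θ is written out by hand. All names and hyperparameters are assumptions.

```python
def loss_grad(theta, data):
    """Mean squared error and its gradient for the model y = theta * x."""
    n = len(data)
    loss = sum((theta * x - y) ** 2 for x, y in data) / n
    grad = sum(2 * x * (theta * x - y) for x, y in data) / n
    return loss, grad

def adapt(theta, support, inner_lr):
    """Inner loop: one gradient step on the task's support set."""
    _, g = loss_grad(theta, support)
    return theta - inner_lr * g

def maml_train(tasks, theta=0.0, inner_lr=0.05, outer_lr=0.05, steps=200):
    """Outer loop: update theta so that the adapted parameters do well on queries."""
    for _ in range(steps):
        meta_grad = 0.0
        for support, query in tasks:
            adapted = adapt(theta, support, inner_lr)
            _, query_grad = loss_grad(adapted, query)
            # Chain rule: d(adapted)/d(theta) = 1 - inner_lr * mean(2 * x^2).
            curvature = sum(2 * x * x for x, _ in support) / len(support)
            meta_grad += query_grad * (1 - inner_lr * curvature)
        theta -= outer_lr * meta_grad / len(tasks)
    return theta

# Two tasks (slopes 1 and 3), each with a support set and a query set.
tasks = [
    ([(1.0, 1.0), (2.0, 2.0)], [(3.0, 3.0)]),
    ([(1.0, 3.0), (2.0, 6.0)], [(3.0, 9.0)]),
]
theta = maml_train(tasks)
support3, query3 = tasks[1]
loss_before, _ = loss_grad(theta, query3)
loss_after, _ = loss_grad(adapt(theta, support3, 0.05), query3)
```

The learned `theta` sits between the two task slopes, so a single inner step moves it toward whichever task is presented and lowers that task's query loss.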

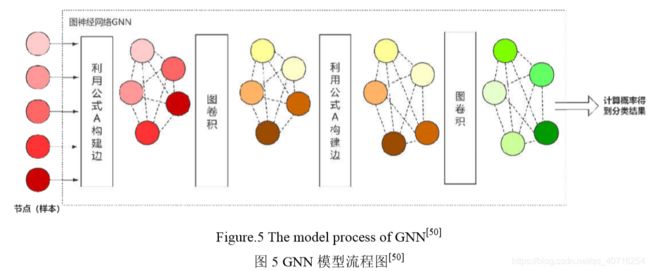

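Methods based on graph neural networks

In 2018, Garcia et al. [50] used a graph convolutional neural network for few-shot image classification. In the graph neural network, each sample is treated as a node of the graph, and the model learns an embedding vector not only for each node but also for each edge. A convolutional neural network embeds all samples into a vector space; each sample vector is concatenated with its label and fed into the graph neural network, which builds the edges between nodes, updates the node vectors by graph convolution, and then keeps updating the edge vectors from the node vectors. This forms a deep graph neural network, as shown in Figure 5: five different nodes are fed into the GNN, edges are built according to formula A, the node vectors are updated by graph convolution, the edges are updated again according to A, another graph convolution layer yields the final node vectors, and finally the probabilities are computed.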
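The alternation between building edges and updating nodes described above can be sketched as one layer. This is a rough illustration under explicit assumptions: formula A is not given in these notes, so the edge weights here are a fixed softmax over negative L1 distances standing in for a learned function, and the weight matrix is a hand-set placeholder rather than a trained parameter.

```python
import math

def build_adjacency(nodes):
    """Row-normalized edge weights from pairwise L1 distances (assumed form of A)."""
    adjacency = []
    for xi in nodes:
        scores = [-sum(abs(a - b) for a, b in zip(xi, xj)) for xj in nodes]
        top = max(scores)  # subtract for numerical stability
        exps = [math.exp(s - top) for s in scores]
        total = sum(exps)
        adjacency.append([e / total for e in exps])
    return adjacency

def graph_conv(nodes, adjacency, weight):
    """One layer: aggregate neighbors by edge weight, apply a linear map, then ReLU."""
    dim_in, dim_out = len(nodes[0]), len(weight[0])
    updated = []
    for row in adjacency:
        agg = [sum(row[j] * nodes[j][d] for j in range(len(nodes)))
               for d in range(dim_in)]
        updated.append([max(0.0, sum(agg[d] * weight[d][k] for d in range(dim_in)))
                        for k in range(dim_out)])
    return updated

nodes = [[0.0, 1.0], [0.1, 0.9], [5.0, 5.0]]  # stand-ins for embedded samples
adjacency = build_adjacency(nodes)
hidden = graph_conv(nodes, adjacency, weight=[[1.0, 0.0], [0.0, 1.0]])
adjacency_next = build_adjacency(hidden)  # edges rebuilt from updated nodes
```

Stacking further layers repeats the same two steps, with the final node vector of the query sample passed to a classifier to obtain class probabilities.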

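Outlook

1) At the data level, try training models with other prior knowledge, or make better use of unlabeled data. To bring few-shot learning closer to real conditions, one can explore methods that do not depend on model pretraining and achieve good results using prior knowledge such as knowledge graphs. Although labeled samples are scarce in many fields, the large amount of unlabeled data in the real world carries a great deal of information, and using it to train models also deserves further study.

2) Few-shot learning based on transfer learning faces challenges in transferring features, parameters, and gradients. To better understand which features and parameters are suitable for transfer, the interpretability of deep learning must improve; for models to converge quickly on new domains and tasks, sound gradient transfer algorithms need to be designed.

3) For few-shot learning based on metric learning, develop more effective neural-network metrics. Metric learning is already fairly mature in few-shot learning, but static metrics based on distance functions leave little room for improvement. Computing sample similarity with a neural network is expected to become the mainstream approach, so better-performing neural metric algorithms need to be designed.

4) For few-shot learning based on meta-learning, design better meta-learners. Meta-learning has only recently emerged in the few-shot field and current models are not yet mature; how to design meta-learners that learn more, or more effective, meta-knowledge will be an important research direction.

5) For few-shot learning based on graph neural networks, explore more effective ways to apply them. Graph neural networks have been popular in recent years and already cover many fields, with strong interpretability and good performance, but few such models have been applied to few-shot learning. How to design the graph structure, the node update function, and the edge update function merits further investigation.

6) Try combining different few-shot learning methods. Existing few-shot models use either data augmentation or transfer learning alone; future work can combine the two and improve at both the data level and the model level for better results. In addition, with the rise of active learning [85] and reinforcement learning [86] frameworks in recent years, applying these frameworks to few-shot learning is worth considering.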

References:

[1] Li XY, Long SP, Zhu J. Survey of few-shot learning based on deep neural network. Application Research of Computers: 1-8[2019-08-26]. https://doi.org/10.19734/j.issn.1001-3695.2019.03.0036.

[2] Jankowski N, Duch W, Grąbczewski K. Meta-learning in computational intelligence [M]// Springer Science & Business Media. 2011: 97-115.

[3] Lake B, Salakhutdinov R. One-shot learning by inverting a compositional causal process [C]// Proc of International Conference on Neural Information Processing Systems. [S.l.]: Curran Associates Inc, 2013: 2526-2534.

[4] Li Fei-Fei et al. A Bayesian approach to unsupervised one-shot learning of object categories. In Computer Vision, 2003. Proceedings. Ninth IEEE International Conference

[5] Fei-Fei L, Fergus R, Perona P. One-shot learning of object categories. IEEE Trans Pattern Anal Mach Intell, 2006, 28(4):594-611.

[6] Fu Y, Xiang T, Jiang YG, et al. Recent Advances in Zero-Shot Recognition: Toward Data-Efficient Understanding of Visual Content. IEEE Signal Processing Magazine, 2018, 35(1):112-125.

[7] Wang YX, Girshick R, Hebert M, et al. Low-Shot Learning from Imaginary Data. 2018.

[8] Yang J, Liu YL. The Latest Advances in Face Recognition with Single Training Sample. Journal of Xihua University (Natural Science Edition), 2014, 33(04):1-5+10.

[9] Manning C. Foundations of Statistical Natural Language Processing [M]. 1999.

[10] Howard J, Ruder S. Universal language model fine-tuning for text classification. arXiv preprint arXiv:1801.06146, 2018.

[11] Tu EM, Yang J. A Review of Semi-Supervised Learning Theories and Recent Advances. Journal of Shanghai Jiaotong University, 2018, 52(10):1280-1291.

[12] Liu JW, Liu Y, Luo XL. Semi-supervised Learning Methods. Chinese Journal of Computers, 2015, 38(08):1592-1617.

[13] Chen WJ. Semi-supervised Learning Study Summary. Academic Exchange, 2011, 7(16):3887-3889.

[14] Boney R, Ilin A. Semi-Supervised Few-Shot Learning with MAML. 2018.

[15] Su FL, Xie QH, Huang QQ, et al. Semi-supervised method for attribute extraction based on transductive learning. Journal of Shandong University (Science Edition), 2016, 51(03):111-115.

[16] Liu Y, Lee J, Park M, et al. Learning To Propagate Labels: Transductive Propagation Network For Few-Shot Learning. 2018.

[17] Mehrotra A, Dukkipati A. Generative Adversarial Residual Pairwise Networks for One Shot Learning. 2017.

[18] Dixit M, Kwitt R, Niethammer M, et al. AGA: Attribute Guided Augmentation. 2016.

[19] Schwartz E, Karlinsky L, Shtok J, et al. Delta-encoder: an effective sample synthesis method for few-shot object recognition. 2018.

[20] Chen Z, Fu Y, Zhang Y, et al. Semantic feature augmentation in few-shot learning. arXiv preprint arXiv:1804.05298, 2018, 86: 89.

[21] Liu XP, Luan XD, Xie YX, et al. Transfer Learning Research and Algorithm Review. Journal of Changsha University, 2018, 32(05):33-36+41.

[22] Wang H. Research review on transfer learning. Academic Exchange, 2017(32):209-211.

[23] Wang YX, Hebert M. Learning to Learn: Model Regression Networks for Easy Small Sample Learning[C]// European Conference on Computer Vision. Springer International Publishing, 2016.

[24] Shen YY, Yan Y, Wang HZ. Recent advances on supervised distance metric learning algorithms. Acta Automatica Sinica, 2014, 40(12): 2673-2686.

[25] Bellet A, Habrard A, Sebban M. A survey on metric learning for feature vectors and structured data. arXiv preprint arXiv:1306.6709, 2013.

[26] Kulis B. Metric Learning: A Survey. Foundations & Trends® in Machine Learning, 2013, 5(4):287-364.

[27] Weinberger KQ. Distance Metric Learning for Large Margin nearest Neighbor Classification. JMLR, 2009, 10.

[28] Liu J, Yuan Q, Wu G, Yu X. Review of convolutional neural networks. Computer Era, 2018(11):19-23.

[29] Yang L, Wu YQ, Wang JL, Liu YL. Research on recurrent neural network. Computer Application, 2018, 38(S2):1-6+26.

[30] Koch G, Zemel R, Salakhutdinov R. Siamese neural networks for one-shot image recognition[C]//ICML deep learning workshop. 2015, 2.

[31] Vinyals O, Blundell C, Lillicrap T, et al. Matching Networks for One Shot Learning. 2016.

[32] Jiang LB, Zhou XL, Jiang FW, Che L. One-shot learning based on improved matching network. Systems Engineering and Electronics, 2019, 41(06):1210-1217.

[33] Wang P, Liu L, Shen C, et al. Multi-attention Network for One Shot Learning[C]// 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR). IEEE, 2017.

[34] Snell J, Swersky K, Zemel RS. Prototypical Networks for Few-shot Learning. 2017.

[35] Ren M, Triantafillou E, Ravi S, et al. Meta-Learning for Semi-Supervised Few-Shot Classification. 2018.

[36] Sung F, Yang Y, Zhang L, et al. Learning to Compare: Relation Network for Few-Shot Learning. 2017.

[37] Zhang X, Sung F, Qiang Y, et al. Deep Comparison: Relation Columns for Few-Shot Learning. 2018.

[38] Hilliard N, Phillips L, Howland S, et al. Few-Shot Learning with Metric-Agnostic Conditional Embeddings. 2018.

[39] Thrun S, Pratt L. Learning to learn: introduction and overview [M]// Learning to Learn. 1998.

[40] Vilalta R, Drissi Y. A Perspective View and Survey of Meta-Learning. Artificial Intelligence Review, 2002, 18(2):77-95.

[41] Hochreiter S, Younger AS, Conwell PR. Learning To Learn Using Gradient Descent[C]// Proceedings of the International Conference on Artificial Neural Networks. Springer, Berlin, Heidelberg, 2001.

[42] Santoro A, Bartunov S, Botvinick M, et al. One-shot Learning with Memory-Augmented Neural Networks. 2016.

[43] Graves A, Wayne G, Danihelka I. Neural Turing Machines. Computer Science, 2014.

[44] Munkhdalai T, Yu H. Meta Networks. 2017.

[45] Finn C, Abbeel P, Levine S. Model-Agnostic Meta-Learning for Fast Adaptation of Deep Networks. 2017.

[46] Xiang J, Havaei M, Chartrand G, et al. On the Importance of Attention in Meta-Learning for Few-Shot Text Classification. 2018.

[47] Ravi S, Larochelle H. Optimization as a model for few-shot learning. 2016.

[48] Yu M, Guo X, Yi J, et al. Diverse Few-Shot Text Classification with Multiple Metrics. 2018.

[49] Zhou J, Cui G, Zhang Z, et al. Graph Neural Networks: A Review of Methods and Applications. 2018.

[50] Garcia V, Bruna J. Few-Shot Learning with Graph Neural Networks. 2017.

[51] Fort S. Gaussian Prototypical Networks for Few-Shot Learning on Omniglot. 2018.

[52] Malalur P, Jaakkola T. Alignment Based Matching Networks for One-Shot Classification and Open-Set Recognition. 2019.

[53] Yin C, Feng Z, Lin Y, et al. Fine-Grained Categorization and Dataset Bootstrapping Using Deep Metric Learning with Humans in the Loop[C]// Computer Vision & Pattern Recognition. 2016.

[54] Miller FP, Vandome AF, McBrewster J. Amazon Mechanical Turk. Alphascript Publishing, 2011.

[55] Deng J, Dong W, Socher R, et al. ImageNet: A large-scale hierarchical image database[C]// IEEE Conference on Computer Vision & Pattern Recognition. 2009.

[56] Geng R, Li B, Li Y, et al. Few-Shot Text Classification with Induction Network. 2019.

[57] Han X, Zhu H, Yu P, et al. FewRel: A Large-Scale Supervised Few-Shot Relation Classification Dataset with State-of-the-Art Evaluation. 2018.

[58] Long M, Zhu H, Wang J, et al. Unsupervised Domain Adaptation with Residual Transfer Networks. 2016.

[59] Wang K, Liu BS. Research review on text classification. Data Communication, 2019(03):37-47.

[60] Huang AW, Xie K, Wen C, et al. Small sample face recognition algorithm based on transfer learning model. Journal of changjiang university (natural science edition), 2019, 16(07):88-94.

[61] Lv YQ, Min WQ, Duan H, Jiang SQ. Few-shot Food Recognition Fusing Triplet Convolutional Neural Network with Relation Network. Computer Science, 2020(01):1-8[2019-08-24]. http://kns.cnki.net/kcms/detail/50.1075.TP.20190614.0950.002.html.

[62] Upadhyay S, Faruqui M, Tur G, et al. (Almost) Zero-Shot Cross-Lingual Spoken Language Understanding[C]// ICASSP 2018 - 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). IEEE, 2018.

[63] Lampinen AK, Mcclelland JL. One-shot and few-shot learning of word embeddings. 2017.

[64] Zhu WN, Ma Y. Comparison of small sample of local complications between femoral artery sheath removal and vascular closure device. Journal of modern integrated Chinese and western medicine, 2010, 19(14):1748+1820.

[65] Liu JZ. Small Sample Bark Image Recognition Method Based on Convolutional Neural Network. Journal of northwest forestry university, 2019, 34(04):230-235.

[66] Lin KZ, Bai JX, Li HT, Li W. Facial Expression Recognition with Small Samples Fused with Different Models under Deep Learning. Computer science and exploration:1-13[2019-08-24]. http://kns.cnki.net/kcms/detail/11.5602.tp.20190710.1507.004.html.

[67] Liu JM, Meng YL, Wan XY. Cross-task dialog system based on small sample machine learning. Journal of Chongqing university of posts and telecommunications (natural science edition), 2019, 31(03):299-304.

[68] Zhang J. Research and implementation of ear recognition based on few-shot learning [D]. Beijing University of Posts and Telecommunications, 2019.

[69] Yan B, Zhou P, Yan L. Disease Identification of Small Sample Crop Based on Transfer Learning. Modern Agricultural Sciences and Technology, 2019(06):87-89.

[70] Zhou TY, Zhao L. Research of handwritten Chinese character recognition model with small dataset based on neural network. Journal of Shandong university of technology (natural science edition), 2019, 33(03):69-74.

[71] Zhao Y. Convolutional neural network based carotid plaque recognition over small sample size ultrasound images [D]. Huazhong University of Science and Technology, 2018.

[72] Cheng L, Yuan Q, Wang Y, et al. A small sample exploratory study of autogenous bronchial basal cells for the treatment of chronic obstructive pulmonary disease. Chongqing medical:1-5[2019-08-27]. http://kns.cnki.net/kcms/detail/50.1097.R.20190815.1557.002.html.

[73] Li CK, Fang J, Wu N, et al. A road extraction method for high resolution remote sensing image with limited samples. Science of surveying and mapping:1-10[2019-08-27]. http://kns.cnki.net/kcms/detail/11.4415.P.20190719.0927.002.html.

[74] Chen L, Zhang F, Jiang S. Deep Forest Learning for Military Object Recognition under Small Training Set Condition. Journal of Chinese academy of electronics, 2019, 14(03):232-237.

[75] Jia LZ, Qin RR, Chi RX, Wang JH. Evaluation research of nurse’s core competence based on a small sample. Medical higher vocational education and modern nursing, 2018, 1(06):340-342.

[76] Wang X, Ma TM, Yang T, et al. Moisture quantitative analysis with small sample set of maize grain in filling stage based on near infrared spectroscopy. Journal of agricultural engineering, 2018, 34(13):203-210.

[77] He XJ, Ma S, Wu YY, Jiang GR. E-Commerce Product Sales Forecast with Multi-Dimensional Index Integration Under Small Sample. Computer Engineering and Applications, 2019, 55(15):177-184.

[78] Liu XP, Guo B, Cui DJ, et al. Q-Precentile Life Prediction Based on Bivariate Wiener Process for Gear Pump with Small Sample Size. China Mechanical Engineering:1-9[2019-08-27]. http://kns.cnki.net/kcms/detail/42.1294.TH.20190722.1651.002.html.

[79] Quan ZN, Lin JJ. Text-Independent Writer Identification Method Based on Chinese Handwriting of Small Samples. Journal of East China University of Science and Technology (natural science edition), 2018, 44(06):882-886.

[80] Sun CW, Wen C, Xie K, He JB. Voiceprint recognition method of small sample based on deep migration model. Computer Engineering and Design, 2018, 39(12):3816-3822.

[81] Sun YY, Jiang ZH, Dong W, et al. Image recognition of tea plant disease based on convolutional neural network and small samples. Jiangsu Journal of Agricultural Sciences, 2019, 35(01):48-55.

[82] Hu ZP, He W, Wang M, et al. Deep subspace joined sparse representation for single sample face recognition. Journal of Yanshan University, 2018, 42(05):409-415.

[83] Sun HW, Xie XF, Sun T, Zhang LJ. Threat assessment method of warships formation air defense based on DBN under the condition of small sample data missing. Systems Engineering and Electronics, 2019, 41(06):1300-1308.

[84] Liu YF, Zhou Y, Liu X, et al. Wasserstein GAN-Based Small-Sample Augmentation for New-Generation Artificial Intelligence: A Case Study of Cancer-Staging Data in Biology. Engineering, 2019, 5(01):338-354.

[85] Cohn DA, Ghahramani Z, Jordan MI. Active Learning with Statistical Models. Journal of Artificial Intelligence Research, 1996, 4(1):705-712.

[86] Kaelbling LP, Littman ML, Moore AP. Reinforcement Learning: A Survey. Journal of Artificial Intelligence Research, 1996, 4:237-285.

[87] Bailey K, Chopra S. Few-Shot Text Classification with Pre-Trained Word Embeddings and a Human in the Loop. 2018.

[88] Yan L, Zheng Y, Cao J. Few-shot learning for short text classification. Multimedia Tools and Applications, 2018, 77(22):29799-29810.

[89] Goodfellow IJ, Pouget-Abadie J, Mirza M, et al. Generative adversarial nets[C]// International Conference on Neural Information Processing Systems. 2014.

[90] Royle JA, Dorazio RM, Link WA. Analysis of Multinomial Models with Unknown Index Using Data Augmentation. Journal of Computational & Graphical Statistics, 2007, 16(1):67-85.

[91] Koh PW, Liang P. Understanding Black-box Predictions via Influence Functions. 2017.

[92] Hariharan B, Girshick R. Low-shot visual recognition by shrinking and hallucinating features. 2017.

[93] Liu B, Wang X, Dixit M, et al. Feature space transfer for data augmentation. 2018.

[94] Xian Y, Sharma S, Schiele B, et al. f-VAEGAN-D2: A feature generating framework for any-shot learning. 2019.

[95] Li W, Xu J, Huo J, Wang L, Yang G, Luo J. Distribution consistency based covariance metric networks for few-shot learning. 2019.

[96] Li W, Wang L, Xu J, Huo J, Gao Y, Luo J. Revisiting Local Descriptor based Image-to-Class Measure for Few-shot Learning. 2019.

[97] Gidaris S, Komodakis N. Dynamic few-shot visual learning without forgetting. 2018.

[98] Sun Q, et al. Meta-transfer learning for few-shot learning. 2019.

[99] Jamal MA, Qi GJ. Task Agnostic Meta-Learning for Few-Shot Learning. 2019.

[100] Lee K, et al. Meta-learning with differentiable convex optimization. 2019.

[101] Wang X, et al. TAFE-Net: Task-Aware Feature Embeddings for Low Shot Learning. 2019.

[102] Kim J, Kim T, Kim S, et al. Edge-Labeling Graph Neural Network for Few-shot Learning[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2019: 11-20.

[103] Shen W, Shi Z, Sun J. Learning from Adversarial Features for Few-Shot Classification. 2019.

[104] Chen Z, Fu Y, Kim YX, et al. Image Deformation Meta-Networks for One-Shot Learning. 2019.

[105] Wang YX, Girshick R, Hebert M, et al. Low-Shot Learning from Imaginary Data. 2018.

[106] Wang YX, Hebert M. Learning from Small Sample Sets by Combining Unsupervised Meta-Training with CNNs. 2016.

[107] Li H, Eigen D, Dodge S, et al. Finding Task-Relevant Features for Few-Shot Learning by Category Traversal. 2019.

[108] Gidaris S, Komodakis N. Generating Classification Weights with GNN Denoising Autoencoders for Few-Shot Learning. 2019.

[109] Liu XY, Su YT, Liu AA, et al. Learning to Customize and Combine Deep Learners for Few-Shot Learning. 2019.

[110] Gao TY, Han X, Liu ZY, Sun MS. Hybrid Attention-Based Prototypical Networks for Noisy Few-Shot Relation Classification. 2019.

[111] Sun SL, Sun QF, Zhou K, Lv TC. Hierarchical Attention Prototypical Networks for Few-Shot Text Classification. 2019.

[112] Nakamura A, Harada T. Revisiting Fine-tuning for Few-shot Learning. 2019.

[113] Hou RB, Chang H, Ma BP, et al. Cross Attention Network for Few-shot Classification. 2019.

[114] Jang YH, Lee HK, Hwang SJ, et al. Learning What and Where to Transfer. 2019.