Machine Learning: Naive Bayes Classification

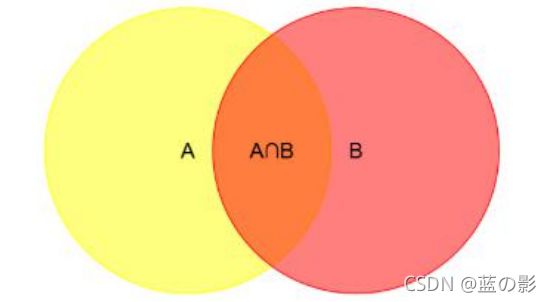

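Bayes' theorem:

Given two events A and B, the probability that A occurs given that B has occurred is written P(A|B). In the figure above, this is the fraction of the red region taken up by the orange region.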

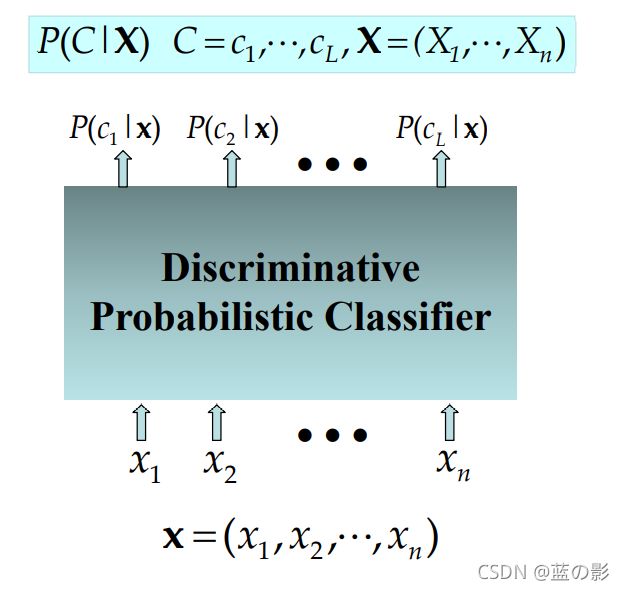

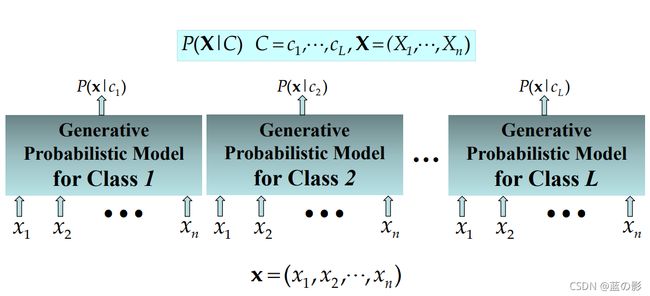
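In symbols, and assuming $P(B) > 0$, the standard textbook statement of conditional probability and Bayes' theorem is:

$$
P(A \mid B) = \frac{P(A \cap B)}{P(B)} = \frac{P(B \mid A)\,P(A)}{P(B)}
$$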

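Two perspectives in machine learning:

1: Discriminative models, which model P(c|x) directly

2: Generative models, which model the joint distribution P(x, c) and derive P(c|x) from it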

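The naive Bayes classifier, a generative model, adopts the "attribute conditional independence assumption": given the class, each attribute influences the classification result independently of the others.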

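To simplify notation, write P(C=c|X=x) as P(c|x). Under the attribute conditional independence assumption, Bayes' formula can be rewritten as

– where d is the number of attributes and x_i is the value of x on the i-th attribute.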

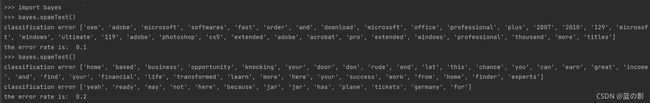
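Under the attribute conditional independence assumption, the rewritten formula is commonly stated as follows. This is the standard textbook form, given here on the assumption that it matches the notation above: $P(c)$ is the class prior and $P(x_i \mid c)$ is the class-conditional probability of the i-th attribute value.

$$
P(c \mid x) = \frac{P(c)\,P(x \mid c)}{P(x)} = \frac{P(c)}{P(x)} \prod_{i=1}^{d} P(x_i \mid c)
$$

Since $P(x)$ is the same for every class, the classifier only needs to compare the numerators:

$$
h(x) = \arg\max_{c} \; P(c) \prod_{i=1}^{d} P(x_i \mid c)
$$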

Here we use spam email classification to cross-validate the naive Bayes classifier.

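textParse()

This function takes a string and parses it into a list of string tokens.

It drops tokens of two characters or fewer and converts the remaining tokens to lowercase.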
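As a quick illustration of this behaviour, the sketch below tokenizes a sample sentence. The helper name `tokenize` and the sample text are made up for this example, and the pattern `\W+` (one or more non-word characters) is an assumption about the intended split.

```python
import re

def tokenize(text):
    """Split on runs of non-word characters, keep tokens longer than 2 chars, lowercased."""
    tokens = re.split(r'\W+', text)
    return [tok.lower() for tok in tokens if len(tok) > 2]

sample = "Hi Bob, this is a FREE offer: reply to win!"
print(tokenize(sample))
# → ['bob', 'this', 'free', 'offer', 'reply', 'win']
```

Short tokens such as "Hi", "is", "a" and "to" are filtered out, which removes many uninformative words at the cost of also dropping short meaningful ones.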

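    import re

    def textParse(bigString):  # input is a big string, output is a word list
        # split on runs of non-word characters (whitespace, punctuation)
        listOfTokens = re.split(r'\W+', bigString)
        # keep tokens longer than two characters, lowercased
        return [tok.lower() for tok in listOfTokens if len(tok) > 2]

spamTest()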

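This function automates testing of the naive Bayes spam classifier:

Load the text files from the two folders (spam and ham) and parse them into word lists

Randomly select ten files as the test set

Classify them using setOfWords2Vec() and trainNB0(), then report the overall error rate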
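The random selection of ten test files can be sketched on its own. This is a minimal illustration that uses `random.sample` instead of an index-deletion loop; the function name `holdout_split` and its parameters are hypothetical.

```python
import random

def holdout_split(n_docs, n_test, seed=None):
    """Return (train_indices, test_indices) with n_test indices held out at random."""
    rng = random.Random(seed)
    test = rng.sample(range(n_docs), n_test)  # draw without replacement
    held_out = set(test)
    train = [i for i in range(n_docs) if i not in held_out]
    return train, test

train_idx, test_idx = holdout_split(50, 10, seed=0)
print(len(train_idx), len(test_idx))
# → 40 10
```

Because the split is random, the measured error rate changes from run to run; averaging over several runs gives a steadier estimate.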

    import random
    from numpy import array

    def spamTest():
        docList = []; classList = []; fullText = []
        for i in range(1, 26):
            # spam emails are labelled 1
            wordList = textParse(open('email/spam/%d.txt' % i).read())
            docList.append(wordList)
            fullText.extend(wordList)
            classList.append(1)
            # ham emails are labelled 0
            wordList = textParse(open('email/ham/%d.txt' % i).read())
            docList.append(wordList)
            fullText.extend(wordList)
            classList.append(0)
        vocabList = createVocabList(docList)  # create vocabulary
        trainingSet = list(range(50)); testSet = []  # create test set
        for i in range(10):
            # move ten randomly chosen document indices into the test set
            randIndex = random.randrange(len(trainingSet))
            testSet.append(trainingSet[randIndex])
            del trainingSet[randIndex]
        trainMat = []; trainClasses = []
        for docIndex in trainingSet:  # train the classifier (get probs) with trainNB0
            trainMat.append(setOfWords2Vec(vocabList, docList[docIndex]))
            trainClasses.append(classList[docIndex])
        p0V, p1V, pSpam = trainNB0(array(trainMat), array(trainClasses))
        errorCount = 0
        for docIndex in testSet:  # classify the held-out items
            wordVector = setOfWords2Vec(vocabList, docList[docIndex])
            if classifyNB(array(wordVector), p0V, p1V, pSpam) != classList[docIndex]:
                errorCount += 1
                print("classification error", docList[docIndex])
        print('the error rate is: ', float(errorCount) / len(testSet))
        # return vocabList, fullText

setOfWords2Vec()

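This function builds a word vector for each email based on the vocabulary list.

    def setOfWords2Vec(vocabList, inputSet):
        returnVec = [0] * len(vocabList)  # one slot per vocabulary word
        for word in inputSet:
            if word in vocabList:
                returnVec[vocabList.index(word)] = 1  # mark the word as present
            else:
                print("the word: %s is not in my Vocabulary!" % word)
        return returnVec

These word vectors are used in trainNB0() to compute the probabilities needed for classification.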

    from numpy import ones, log

    def trainNB0(trainMatrix, trainCategory):
        numTrainDocs = len(trainMatrix)
        numWords = len(trainMatrix[0])
        pAbusive = sum(trainCategory) / float(numTrainDocs)  # prior P(class = 1)
        # smoothing: start word counts at 1 and denominators at 2
        # so that no word ends up with zero probability
        p0Num = ones(numWords); p1Num = ones(numWords)
        p0Denom = 2.0; p1Denom = 2.0
        for i in range(numTrainDocs):
            if trainCategory[i] == 1:
                p1Num += trainMatrix[i]
                p1Denom += sum(trainMatrix[i])
            else:
                p0Num += trainMatrix[i]
                p0Denom += sum(trainMatrix[i])
        # take logs so that products of many small probabilities do not underflow
        p1Vect = log(p1Num / p1Denom)
        p0Vect = log(p0Num / p0Denom)
        return p0Vect, p1Vect, pAbusive
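spamTest() also calls createVocabList() and classifyNB(), which are not listed here. A minimal sketch of what they might look like follows, assuming createVocabList() returns the unique words across all documents and classifyNB() compares the two log-posteriors built from the outputs of trainNB0(). The bodies below are assumptions made for illustration, not the author's code.

```python
from math import log

def createVocabList(dataSet):
    """Assumed behaviour: return a list of the unique words across all documents."""
    vocabSet = set()
    for document in dataSet:
        vocabSet |= set(document)
    return sorted(vocabSet)  # sorted only to make the order reproducible

def classifyNB(vec2Classify, p0Vec, p1Vec, pClass1):
    """Assumed behaviour: return 1 if the class-1 log-posterior is larger, else 0."""
    # sum of log P(word | class) over the words present, plus the log prior
    p1 = sum(v * p for v, p in zip(vec2Classify, p1Vec)) + log(pClass1)
    p0 = sum(v * p for v, p in zip(vec2Classify, p0Vec)) + log(1.0 - pClass1)
    return 1 if p1 > p0 else 0

# Tiny made-up example: word 0 is typical of class 1, word 1 of class 0
p0Vec = [log(0.1), log(0.9)]
p1Vec = [log(0.9), log(0.1)]
print(classifyNB([1, 0], p0Vec, p1Vec, 0.5))  # → 1
print(classifyNB([0, 1], p0Vec, p1Vec, 0.5))  # → 0
```

Working with sums of logarithms mirrors the log() calls in trainNB0() and avoids multiplying many small probabilities together.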

Output: