Object Track (III): Classic Algorithm Reproduction (3) - Basic Principles and a Simple Implementation of Optical Flow

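Honestly, no matter how many times I go back to it, I still marvel at how good Gao Xiang's 14 Lectures on Visual SLAM (《SLAM十四讲》) is. It explains complex problems very clearly.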

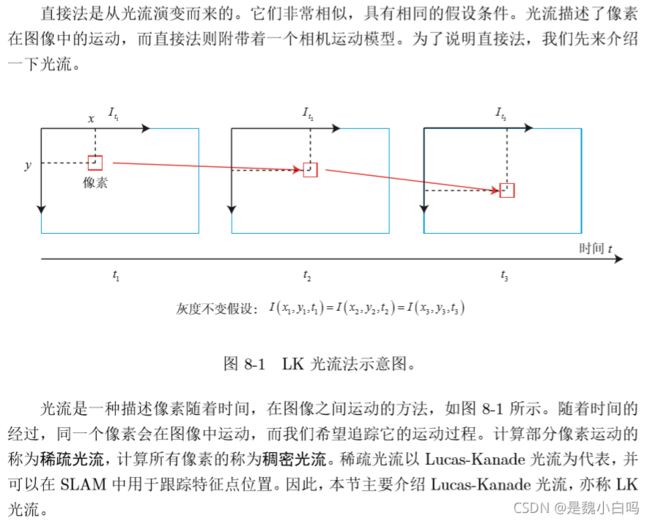

Basic Principles - Definition of Optical Flow

All of the following screenshots are taken from 14 Lectures on Visual SLAM.
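The screenshots are not reproduced in this text. As a compact reminder, here is the standard Lucas-Kanade derivation in its usual textbook form (restated, not transcribed from the screenshots). The grayscale-constancy assumption says a pixel keeps its intensity as it moves; a first-order Taylor expansion turns that into one linear equation per pixel, with $u, v$ the pixel velocity, $I_x, I_y$ the image gradients and $I_t$ the temporal derivative; finally, all $k = w^2$ pixels in a $w \times w$ window are assumed to share the same motion and the stacked system is solved by least squares:

$$
I(x+\mathrm{d}x,\ y+\mathrm{d}y,\ t+\mathrm{d}t) = I(x, y, t)
$$

$$
I_x u + I_y v = -I_t, \qquad u = \frac{\mathrm{d}x}{\mathrm{d}t},\quad v = \frac{\mathrm{d}y}{\mathrm{d}t}
$$

$$
A = \begin{bmatrix} [I_x,\ I_y]_1 \\ \vdots \\ [I_x,\ I_y]_k \end{bmatrix},\quad
b = \begin{bmatrix} I_{t,1} \\ \vdots \\ I_{t,k} \end{bmatrix},\quad
A \begin{bmatrix} u \\ v \end{bmatrix} = -b
\;\Rightarrow\;
\begin{bmatrix} u \\ v \end{bmatrix}^{*} = -\left(A^\top A\right)^{-1} A^\top b
$$

The solution only exists when the 2×2 matrix $A^\top A$ is well conditioned, which is why tracking is usually started on Shi-Tomasi corners (`cv.goodFeaturesToTrack`): their score is the smaller eigenvalue of the same kind of 2×2 gradient matrix computed over a small neighborhood.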

A Simple OpenCV Implementation of Optical Flow

```python
#!/usr/bin/env python

'''
Lucas-Kanade tracker
====================

Lucas-Kanade sparse optical flow demo. Uses goodFeaturesToTrack
for track initialization and back-tracking for match verification
between frames.

Usage
-----
lk_track.py [<video_source>]

Keys
----
ESC - exit
'''

# Python 2/3 compatibility
from __future__ import print_function

import sys

import numpy as np
import cv2 as cv

# Lucas-Kanade parameters: 15x15 window, pyramid levels 0..2,
# stop after 10 iterations or when the update falls below 0.03
lk_params = dict(winSize=(15, 15),
                 maxLevel=2,
                 criteria=(cv.TERM_CRITERIA_EPS | cv.TERM_CRITERIA_COUNT, 10, 0.03))

# Shi-Tomasi corner detection parameters
feature_params = dict(maxCorners=500,
                      qualityLevel=0.3,
                      minDistance=7,
                      blockSize=7)


def draw_str(dst, target, s):
    """Outlined text; inlined stand-in for draw_str from OpenCV's samples/python/common.py."""
    x, y = target
    cv.putText(dst, s, (x + 1, y + 1), cv.FONT_HERSHEY_PLAIN, 1.0, (0, 0, 0), thickness=2, lineType=cv.LINE_AA)
    cv.putText(dst, s, (x, y), cv.FONT_HERSHEY_PLAIN, 1.0, (255, 255, 255), lineType=cv.LINE_AA)


class App:
    def __init__(self, video_src):
        self.track_len = 10        # max number of points kept per track
        self.detect_interval = 5   # re-detect corners every 5 frames
        self.tracks = []
        # cv.VideoCapture replaces video.create_capture from OpenCV's samples
        self.cam = cv.VideoCapture(video_src)
        self.frame_idx = 0
        self.prev_gray = None

    def run(self):
        while True:
            ret, frame = self.cam.read()  # grab the next frame
            if not ret:                   # end of video or camera failure
                break
            frame_gray = cv.cvtColor(frame, cv.COLOR_BGR2GRAY)  # grayscale image
            vis = frame.copy()

            if len(self.tracks) > 0:
                img0, img1 = self.prev_gray, frame_gray
                p0 = np.float32([tr[-1] for tr in self.tracks]).reshape(-1, 1, 2)
                # forward flow: previous frame -> current frame
                p1, _st, _err = cv.calcOpticalFlowPyrLK(img0, img1, p0, None, **lk_params)
                # backward flow: current frame -> previous frame
                p0r, _st, _err = cv.calcOpticalFlowPyrLK(img1, img0, p1, None, **lk_params)
                # forward-backward error: keep points that come back within 1 pixel
                d = abs(p0 - p0r).reshape(-1, 2).max(-1)
                good = d < 1
                new_tracks = []
                for tr, (x, y), good_flag in zip(self.tracks, p1.reshape(-1, 2), good):
                    if not good_flag:
                        continue
                    tr.append((x, y))
                    if len(tr) > self.track_len:
                        del tr[0]
                    new_tracks.append(tr)
                    cv.circle(vis, (int(x), int(y)), 2, (0, 255, 0), -1)  # mark the tracked point
                self.tracks = new_tracks
                # draw each track as a polyline for a nicer visualization
                cv.polylines(vis, [np.int32(tr) for tr in self.tracks], False, (0, 255, 0))
                draw_str(vis, (20, 20), 'track count: %d' % len(self.tracks))

            if self.frame_idx % self.detect_interval == 0:
                # mask out a 5-pixel disc around every point already being tracked,
                # so that new corners are only detected elsewhere
                mask = np.zeros_like(frame_gray)
                mask[:] = 255
                for x, y in [np.int32(tr[-1]) for tr in self.tracks]:
                    cv.circle(mask, (int(x), int(y)), 5, 0, -1)
                # detect Shi-Tomasi corners to start new tracks
                p = cv.goodFeaturesToTrack(frame_gray, mask=mask, **feature_params)
                if p is not None:
                    for x, y in np.float32(p).reshape(-1, 2):
                        self.tracks.append([(x, y)])

            self.frame_idx += 1
            self.prev_gray = frame_gray
            cv.imshow('lk_track', vis)

            ch = cv.waitKey(1)
            if ch == 27:  # ESC
                break
        self.cam.release()


def main():
    try:
        video_src = sys.argv[1]
    except IndexError:
        video_src = 0  # default camera
    # a purely numeric argument is treated as a camera index
    if isinstance(video_src, str) and video_src.isdigit():
        video_src = int(video_src)
    App(video_src).run()
    print('Done')


if __name__ == '__main__':
    print(__doc__)
    main()
    cv.destroyAllWindows()
```

Analysis of the Experimental Results

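1. The grayscale-constancy assumption is fragile (it easily breaks under lighting changes), so some tracks get lost.

2. To choose which points to track, use cv.goodFeaturesToTrack(). After the program starts, it takes the first frame, detects some Shi-Tomasi corners in it, and then tracks those points iteratively with Lucas-Kanade optical flow; in this code, corners are also re-detected every detect_interval = 5 frames, away from points that are already being tracked. To cv.calcOpticalFlowPyrLK() we pass the previous frame, the previous points and the next frame. It returns the next points together with a status array whose value is 1 if the next point was found and 0 otherwise. The code above ignores this status and instead verifies each match by tracking it back from the next frame to the previous one, keeping a point only if it returns to within 1 pixel of where it started. The surviving next points are then passed as the previous points in the following step.

3. Motion that is too fast, or displacements that are too large, do cause tracks to be lost.

4. In summary, optical flow can speed up feature-point-based visual odometry because it avoids computing and matching descriptors, but it requires the camera to move slowly (or the frame rate to be high).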
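The small-motion limitation in points 1 and 3 comes from the first-order Taylor expansion behind Lucas-Kanade. The sketch below is a hypothetical, NumPy-only single-level LK step (no pyramid and no iterations, so it is not OpenCV's implementation); the helper names `gaussian_blob` and `lk_single_step` and the synthetic Gaussian-blob frames are assumptions made for illustration. It estimates the motion of one window for a sub-pixel shift and for an 8-pixel shift, with brightness constancy holding exactly by construction.

```python
import numpy as np


def gaussian_blob(h, w, cx, cy, sigma=6.0):
    """Hypothetical synthetic frame: a smooth Gaussian bump centred at (cx, cy)."""
    ys, xs = np.mgrid[0:h, 0:w].astype(np.float64)
    return np.exp(-((xs - cx) ** 2 + (ys - cy) ** 2) / (2.0 * sigma ** 2))


def lk_single_step(img0, img1, x, y, half=7):
    """One single-level Lucas-Kanade step at integer pixel (x, y).

    Stacks I_x u + I_y v = -I_t for every pixel of a (2*half+1)^2 window
    and solves for (u, v) by least squares (no pyramid, no iteration).
    """
    Iy, Ix = np.gradient(img0)   # np.gradient returns (d/drow, d/dcol)
    It = img1 - img0             # temporal derivative with a frame step of 1
    win = (slice(y - half, y + half + 1), slice(x - half, x + half + 1))
    A = np.stack([Ix[win].ravel(), Iy[win].ravel()], axis=1)  # shape (k, 2)
    b = It[win].ravel()                                        # shape (k,)
    uv, *_ = np.linalg.lstsq(A, -b, rcond=None)
    return uv


frame0 = gaussian_blob(64, 64, 32, 32)
small = lk_single_step(frame0, gaussian_blob(64, 64, 32.5, 32.3), 32, 32)
large = lk_single_step(frame0, gaussian_blob(64, 64, 40.0, 32.0), 32, 32)
print("true (0.5, 0.3) ->", small)
print("true (8.0, 0.0) ->", large)
```

In this setup the sub-pixel shift is recovered closely, while the 8-pixel shift is badly underestimated because the linearization no longer holds. cv.calcOpticalFlowPyrLK() mitigates this with repeated iterations and an image pyramid (maxLevel=2 in the code above), but very fast motion still breaks tracking.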