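The “Share a Little” Series (Practice): “Probably a First on the Whole Web”: Text Detection (OCR Series) Post-processing, the Text Line Construction Algorithm (OpenCV Version), “Born for Deployment”!!!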


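I went back and forth for a long time on whether to publish this practice installment. The “Share a Little” series tries to present things in a plain, easy-to-follow way. I want every post to be 100% substance, but sometimes getting to 70% is already exhausting: this was work from a previous job, and I have even forgotten some of the details of text line construction. My original plan was to pair a theory post with a practice post!!! Sigh. For now it is just the practice post, mainly to open-source the code. I deliberately “re-fitted” this interface in C++ with OpenCV, mostly because the data structures in my own project were too tightly coupled to port easily. The rewrite was rushed and is far from perfect, but it ports, it works, and you can tune the thresholds to improve the results. It is aimed mainly at deploying the text detection part of OCR. The practice post focuses on sharing code and lessons learned, and friends who want to optimize it can use it as a reference. Enough talk, let's go!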

Table of Contents

- Preface

- I. Text line construction: what on earth is it, and why do we need it?

- II. The “origins” of the text line construction method

  - 1. Origins and takeaways

  - 2. Code (C++ version, a deployment powerhouse)

- Summary

Preface

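In the unusual year that was 2020, deploying deep learning became the main battleground for everyone applying CV in practice, and text detection in OCR is an application most people know well. Engineers doing deployment often sweat through model-conversion problems such as unsupported ops, only to discover that text detection post-processing is not as “simple” as they thought, haha!! Switch to C++ and you have to write it yourself. Headache, right? If this helps you, a like and a follow is all I ask!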

So this post skips the theory. The code you get here has the following properties:

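1) Portability: all you need is OpenCV (the demo uses its DNN module to run the detector).

2) Ease of use: a wrapped interface you can call directly. The input is the box information, which I switched to OpenCV's Rect for readability and portability, and the output is a Rect as well!

3) Extensibility: this code was adapted from an earlier version of mine that relied on more third-party data structures and libraries, so it was too tightly coupled. There are plenty of shortcomings and places to optimize, and readers are welcome to take them on; for example, try swapping the NMS for a Fast NMS or Soft-NMS variant!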
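The Soft-NMS suggestion above can be sketched as follows. This is a minimal Gaussian Soft-NMS in plain C++ (no OpenCV, so it can be tested in isolation): rather than deleting every box that overlaps the current best one, it multiplies the neighbour's score by exp(-IoU²/σ) and drops a box only once its score falls below a threshold. The `Box` struct, the `iou` and `softNms` helpers, σ = 0.5 and the 0.3 score cut-off are assumptions for illustration, not part of the post's code, and Fast NMS is not shown.

```cpp
#include <algorithm>
#include <cmath>
#include <vector>

// Hypothetical minimal box type; in the post this role is played by cv::Rect.
struct Box {
    float x, y, w, h;  // top-left corner, width, height
    float score;       // detection confidence
};

// Intersection-over-union of two axis-aligned boxes.
float iou(const Box& a, const Box& b) {
    float ix = std::max(0.0f, std::min(a.x + a.w, b.x + b.w) - std::max(a.x, b.x));
    float iy = std::max(0.0f, std::min(a.y + a.h, b.y + b.h) - std::max(a.y, b.y));
    float inter = ix * iy;
    float uni = a.w * a.h + b.w * b.h - inter;
    return uni <= 0.0f ? 0.0f : inter / uni;
}

// Gaussian Soft-NMS (assumed sigma and score threshold): repeatedly take the
// highest-scoring remaining box, keep it, and decay the scores of the others
// by exp(-iou^2 / sigma) instead of removing them.
std::vector<Box> softNms(std::vector<Box> boxes, float sigma = 0.5f, float scoreThresh = 0.3f) {
    std::vector<Box> kept;
    while (!boxes.empty()) {
        auto best = std::max_element(boxes.begin(), boxes.end(),
            [](const Box& a, const Box& b) { return a.score < b.score; });
        Box top = *best;
        boxes.erase(best);
        if (top.score < scoreThresh) break;  // every remaining score is lower still
        kept.push_back(top);
        for (Box& b : boxes) {
            float o = iou(top, b);
            b.score *= std::exp(-(o * o) / sigma);
        }
    }
    return kept;
}
```

A partially overlapping neighbour is down-weighted instead of removed outright, which gives you a softer knob than the hard IoU threshold; whether it helps on your data is, as with the other thresholds, something to tune.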

I. Text line construction: what on earth is it, and why do we need it?

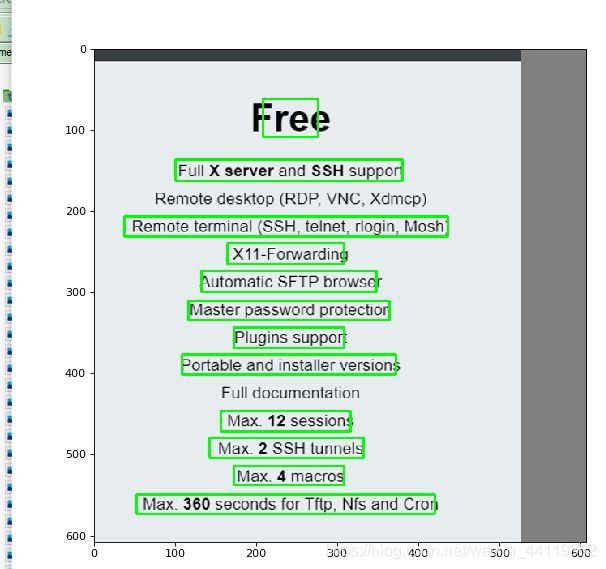

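Everyone is familiar with the boxes an object detector outputs, but why do we need a text line construction algorithm on top of them? This is the practice post, so no lectures: the picture above tells you why!!!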

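The picture above is YOLOv3 output that a reader sent me. He also needed a C++ deployment and got stuck right at this point. Why? Because you still have to process this result!!! The detector returns many small fragment boxes along each line of text instead of one box per line. So what now? This is where the point of text line construction becomes clear. But how do you write it in C++? Too hard, you'd have to learn a whole algorithm, and every open-source implementation you find is in Python. Painful? Painful is normal! Here is my C++ reproduction, and you're done!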

II. The “origins” of the text line construction method

1. Origins and takeaways

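I took this method from a write-up of CTPN. In day-to-day work it is a step people tend to overlook, focusing instead on the detector itself and the OCR recognition model. But engineering goes one step at a time, and if any single step is missing the whole thing is worthless; however well-read you are, when it is time for battle you still have to fight!! Since this post focuses on sharing code and practical experience, and the theory is not my own work anyway, there is no point repeating it. The algorithm also involves a fair amount of code, and understanding it means reading it alongside the theory, which takes some time. So here is the CTPN analysis I originally referenced, which also includes a Python implementation; if the theory and source code interest you, go read it and dig in yourself!!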

CTPN explained: the text line construction algorithm
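Since the post leaves the theory to the CTPN write-up, here is a heavily simplified sketch of the CTPN-style idea, written in plain C++ under stated assumptions; it is not the author's `get_text_lines`. Each box is linked to the highest-scoring box in the nearest column to its right (within a horizontal gap) that sits on the same row, but only if it also scores at least as high as every box in the nearest column to that successor's left; each chain of linked boxes then becomes one text line. `Box`, `LineParams`, `buildTextLines` and the helpers are hypothetical names, the 50 px gap and the two 0.7 ratios are assumed values, and the plain union rectangle stands in for the line fitting CTPN uses on the top and bottom edges.

```cpp
#include <algorithm>
#include <vector>

// Hypothetical box type (cv::Rect in the post): top-left (x, y), width w, height h, score.
struct Box { int x, y, w, h; float score; };

// Assumed thresholds for deciding that two boxes are neighbours on the same text line.
struct LineParams {
    int maxHorizontalGap = 50;  // max distance (px) between the left edges of neighbours
    float minVOverlap = 0.7f;   // min vertical overlap divided by the smaller height
    float minSizeSim = 0.7f;    // min ratio smaller height / larger height
};

// True if a and b overlap enough vertically and have similar heights.
static bool sameRow(const Box& a, const Box& b, const LineParams& p) {
    int top = std::max(a.y, b.y);
    int bottom = std::min(a.y + a.h, b.y + b.h);
    float hMin = (float)std::min(a.h, b.h), hMax = (float)std::max(a.h, b.h);
    if (hMin <= 0) return false;
    float vOverlap = std::max(0, bottom - top) / hMin;
    return vOverlap >= p.minVOverlap && hMin / hMax >= p.minSizeSim;
}

// Boxes in the nearest column (left-edge x) on one side of box i (dir = +1 right,
// -1 left), within the gap, that share i's row. Empty if there are none.
static std::vector<int> neighbours(const std::vector<Box>& b, int i, int dir, const LineParams& p) {
    for (int d = 1; d <= p.maxHorizontalGap; ++d) {
        std::vector<int> found;
        for (int j = 0; j < (int)b.size(); ++j)
            if (j != i && b[j].x == b[i].x + dir * d && sameRow(b[i], b[j], p)) found.push_back(j);
        if (!found.empty()) return found;
    }
    return {};
}

// Index of the highest-scoring box among idx (idx must not be empty).
static int best(const std::vector<Box>& b, const std::vector<int>& idx) {
    return *std::max_element(idx.begin(), idx.end(),
        [&b](int u, int v) { return b[u].score < b[v].score; });
}

std::vector<Box> buildTextLines(const std::vector<Box>& b, const LineParams& p = LineParams()) {
    int n = (int)b.size();
    std::vector<int> next(n, -1);       // next[i]: successor of box i in its chain
    std::vector<bool> hasPrev(n, false);
    for (int i = 0; i < n; ++i) {
        std::vector<int> succ = neighbours(b, i, +1, p);
        if (succ.empty()) continue;
        int s = best(b, succ);
        std::vector<int> prec = neighbours(b, s, -1, p);  // non-empty: i itself qualifies
        if (b[i].score >= b[best(b, prec)].score) { next[i] = s; hasPrev[s] = true; }
    }
    // Successors always lie strictly to the right, so the chains cannot loop.
    std::vector<Box> lines;
    for (int i = 0; i < n; ++i) {
        if (hasPrev[i] || next[i] < 0) continue;  // chain start: no incoming, has outgoing
        int x1 = b[i].x, y1 = b[i].y, x2 = b[i].x + b[i].w, y2 = b[i].y + b[i].h;
        float scoreSum = 0.0f;
        int count = 0;
        for (int v = i; v >= 0; v = next[v]) {
            x1 = std::min(x1, b[v].x);
            y1 = std::min(y1, b[v].y);
            x2 = std::max(x2, b[v].x + b[v].w);
            y2 = std::max(y2, b[v].y + b[v].h);
            scoreSum += b[v].score;
            ++count;
        }
        lines.push_back({x1, y1, x2 - x1, y2 - y1, scoreSum / count});  // union box, mean score
    }
    return lines;
}
```

Like CTPN's connected sub-graph step, this sketch drops isolated boxes (chains of length one); keep them instead if single characters matter for your data.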

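A friendly tip: watch out for two things. First, how the image size is handled; second, the text line and NMS parameters. Get either one wrong and the results suffer, as in the following example: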

Failure (example):

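Let me roughly explain why this happens. First of all, there are missed detections, right? This is where you need to be careful. Going back to the points in the tip above, my experience is: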

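Setting size aside for a moment: if the problem persists after you have adjusted the text line thresholds, your NMS results are most likely poor; if it still does not work after that, it is a size problem!! (Mind the order in which you rule out causes: text line thresholds first, then NMS, then size!) I cropped this image (it has been padded) rather carelessly, as a reminder for friends who tried the algorithm and said the results were bad! It happens to illustrate the point nicely: you need to tune it, and the algorithm itself is fine!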

The figure above compares the results before and after applying text line construction!
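The size problem from the tips above usually comes down to mapping boxes back through exactly the same resize the input went through. The demo code letterboxes the image: it scales by min(S/w, S/h), pastes the result at the top-left of a gray S × S canvas, and reads box centres and sizes normalised to S from the network. Here is that arithmetic pulled out into a standalone sketch, assuming the top-left paste used in the demo (a centred letterbox would also need the padding offset subtracted). `Letterbox`, `computeLetterbox`, `IntBox` and `toOriginal` are hypothetical names.

```cpp
#include <algorithm>

// Hypothetical helper types; the demo keeps these values in local variables.
struct Letterbox {
    float f;         // resize factor applied to the original image
    int newW, newH;  // size of the resized image pasted at the canvas' top-left corner
};

struct IntBox {
    int left, top, width, height;  // in original-image pixels
};

// Same arithmetic as the demo: keep the aspect ratio so the image fits an S x S input.
Letterbox computeLetterbox(int imageW, int imageH, int S) {
    double r = std::min((double)S / imageW, (double)S / imageH);
    int newW = (int)(imageW * r);
    int newH = (int)(imageH * r);
    return {(float)newW / (float)imageW, newW, newH};
}

// Maps a YOLO-style box (centre and size normalised to the S x S input) back to the
// original image: * S gives canvas pixels, / f undoes the resize. No offset is needed
// because the resized image sits at the canvas' top-left corner.
IntBox toOriginal(float cx, float cy, float w, float h, int S, float f) {
    int centerX = (int)(cx * S / f);
    int centerY = (int)(cy * S / f);
    int width = (int)(w * S / f);
    int height = (int)(h * S / f);
    return {centerX - width / 2, centerY - height / 2, width, height};
}
```

For a 1216 × 608 image and S = 608, for example, f is 0.5, so every normalised coordinate is multiplied by 608 and then doubled.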

2. Code (C++ version, a deployment powerhouse)

```cpp
// Text line construction, OpenCV version (demo)
// Post-processing for an object detector: the input is the detector's boxes,
// the output is the boxes after they have been merged into text lines.
// Boxes use OpenCV's built-in Rect; any type of your own works as long as it carries the box info. (Xiao Cheng, a bit of everything)

#include <opencv2/opencv.hpp>

#include <algorithm>
#include <cstdlib>
#include <iostream>
#include <vector>

using namespace cv;
using namespace std;

// ... sortFun (a Rect comparator), the graph node type and the body of
// get_text_lines(boxes, scores, classIds, width, height) are missing from the post;
// only the cleanup at the end of get_text_lines survives:

    delete[] graph;

    // free every sub-graph: each one is a linked list of nodes chained through `right`
    while (sub_graphs) {
        node *cur = sub_graphs;
        sub_graphs = sub_graphs->next;
        while (cur) {
            node *tmp = cur;
            cur = cur->right;
            free(tmp);
        }
    }

    return result_objects;
}

// Greedy NMS: visit boxes in descending score order and keep a box only if its IoU
// with every box kept so far is <= nmsThresh. Returns the indices of the kept boxes.
std::vector<int>
nonMaximumSuppression(float nmsThresh, const std::vector<Rect>& binfo, const std::vector<float>& scores)
{
    // length of the overlap between [x1min, x1max] and [x2min, x2max]
    auto overlap1D = [](float x1min, float x1max, float x2min, float x2max) -> float {
        if (x1min > x2min)
        {
            std::swap(x1min, x2min);
            std::swap(x1max, x2max);
        }
        return x1max < x2min ? 0 : std::min(x1max, x2max) - x2min;
    };

    auto computeIoU = [&overlap1D](const Rect& bbox1, const Rect& bbox2) -> float {
        float overlapX = overlap1D(bbox1.x, bbox1.x + bbox1.width, bbox2.x, bbox2.x + bbox2.width);
        float overlapY = overlap1D(bbox1.y, bbox1.y + bbox1.height, bbox2.y, bbox2.y + bbox2.height);
        float area1 = (float)bbox1.width * bbox1.height;
        float area2 = (float)bbox2.width * bbox2.height;
        float overlap2D = overlapX * overlapY;
        float u = area1 + area2 - overlap2D;
        return u == 0 ? 0 : overlap2D / u;
    };

    // sort box indices by score; sorting the scores on their own would break the box/score pairing
    std::vector<int> order(binfo.size());
    for (size_t k = 0; k < order.size(); ++k) order[k] = (int)k;
    std::stable_sort(order.begin(), order.end(),
                     [&scores](int a, int b) { return scores[a] > scores[b]; });

    std::vector<int> keep;
    for (int i : order)
    {
        bool ok = true;
        for (int j : keep)
        {
            if (computeIoU(binfo[i], binfo[j]) > nmsThresh) { ok = false; break; }
        }
        if (ok) keep.push_back(i);
    }
    return keep;
}

std::vector<Rect>
text_line_drop(const std::vector<Rect>& boxes, const vector<float>& confidences,
               const vector<int>& classIds, int width, int height)
{
    const float nmsThresh = 0.2f;   // NMS IoU threshold (0.3 is another value worth trying)
    const float confThresh = 0.3f;  // box confidence threshold (0.5 is another value worth trying)

    // 1. drop low-confidence boxes, keeping box, score and class id aligned
    std::vector<Rect> imp_objects;
    std::vector<float> imp_scores;
    std::vector<int> imp_classIds;
    for (size_t i = 0; i < boxes.size(); ++i)
    {
        if (confidences[i] > confThresh)
        {
            imp_objects.push_back(boxes[i]);
            imp_scores.push_back(confidences[i]);
            imp_classIds.push_back(classIds[i]);
        }
    }

    // 2. NMS in descending-confidence order
    std::vector<int> keep = nonMaximumSuppression(nmsThresh, imp_objects, imp_scores);

    // 3. order the surviving boxes with sortFun before building text lines
    std::sort(keep.begin(), keep.end(),
              [&imp_objects](int a, int b) { return sortFun(imp_objects[a], imp_objects[b]); });
    std::vector<Rect> tmp_imp_objects;
    std::vector<float> tmp_scores;
    std::vector<int> tmp_classIds;
    for (int k : keep)
    {
        tmp_imp_objects.push_back(imp_objects[k]);
        tmp_scores.push_back(imp_scores[k]);
        tmp_classIds.push_back(imp_classIds[k]);
    }

    // 4. merge the fragment boxes into text lines
    return get_text_lines(tmp_imp_objects, tmp_scores, tmp_classIds, width, height);
}

// scale:    side length of the square network input (608 for this model)
// maxScale: unused in this demo
// prob:     minimum class score for a raw detection to be kept
void text_detect(const Mat& img, int scale, int maxScale, float prob, std::vector<Rect>& objectList)
{
    (void)maxScale;
    Mat debugImg = img.clone();  // raw detections are drawn here
    Mat orgimg = img.clone();    // merged text lines are drawn here

    int image_w = img.cols;
    int image_h = img.rows;

    // letterbox: resize keeping the aspect ratio, then paste at the top-left of a gray canvas
    double r = std::min(scale * 1.0 / image_w, scale * 1.0 / image_h);
    int new_w = int(image_w * r);
    int new_h = int(image_h * r);
    Mat img_size;
    cv::resize(img, img_size, cv::Size(new_w, new_h), 0, 0, INTER_LINEAR);
    Mat new_image(scale, scale, CV_8UC3, Scalar(128, 128, 128));
    img_size.copyTo(new_image(Rect(0, 0, new_w, new_h)));
    float f = (float)new_w / (float)image_w;  // resize factor, used to map boxes back

    // model paths
    cv::dnn::Net net = cv::dnn::readNetFromDarknet("./model/text.cfg", "./model/text.weights");
    Mat inputBlob = cv::dnn::blobFromImage(new_image, 1.0 / 255, Size(scale, scale), Scalar(), false, false);
    net.setInput(inputBlob);
    vector<Mat> outs;
    net.forward(outs, net.getUnconnectedOutLayersNames());

    // each output row: [cx, cy, w, h, objectness, class scores...], coordinates normalised to the input
    vector<int> classIds;
    vector<float> confidences;
    vector<Rect> boxes;
    const Rect imageRect(0, 0, image_w, image_h);
    for (size_t i = 0; i < outs.size(); i++)
    {
        float* data = (float*)outs[i].data;
        for (int j = 0; j < outs[i].rows; j++, data += outs[i].cols)
        {
            Mat scores = outs[i].row(j).colRange(5, outs[i].cols);
            Point classIdPoint;
            double confidence;
            minMaxLoc(scores, 0, &confidence, 0, &classIdPoint);
            if (confidence > prob)
            {
                // normalised -> canvas pixels (* scale) -> original pixels (/ f);
                // no offset is needed because the image was pasted at the top-left corner
                int center_x = (int)(data[0] * scale / f);
                int center_y = (int)(data[1] * scale / f);
                int width = (int)(data[2] * scale / f);
                int height = (int)(data[3] * scale / f);
                int left = center_x - width / 2;
                int top = center_y - height / 2;
                classIds.push_back(classIdPoint.x);
                confidences.push_back((float)confidence);
                // clip so later stages never see negative or out-of-image coordinates
                boxes.push_back(Rect(left, top, width, height) & imageRect);
            }
        }
    }

    // debug view: raw detections before text line construction
    for (const Rect& box : boxes)
    {
        cout << box << endl;
        rectangle(debugImg, box, Scalar(0, 0, 255));
    }
    imshow("img1", debugImg);
    waitKey(0);

    // the boxes are now in original-image coordinates, so pass the original image size
    objectList = text_line_drop(boxes, confidences, classIds, image_w, image_h);  // text line construction

    for (const Rect& box : objectList)
    {
        cout << box << endl;
        rectangle(orgimg, box, Scalar(0, 0, 255));
    }
    imshow("img2", orgimg);
    waitKey(0);
}

int main()
{
    // 1: read the image (image path)
    Mat img = imread("3.jpg");
    if (img.empty())
    {
        std::cout << "Failed to read the image" << std::endl;
        return EXIT_FAILURE;
    }

    std::vector<Rect> objectList;
    text_detect(img, 608, 608, 0.05f, objectList);  // wrapped detection + text line construction interface
    return 0;
}
```

Summary

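Not much more to say: just use it, and pay attention to the parameters and input data mentioned above! As a fairly niche topic it is easy to overlook, but you only realize its value when you actually need it. I still have plenty of fuel in the tank (real substance), hehe, so we'll take it bit by bit. If you like it, hit follow!! Next time: a theory breakdown!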