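SLAM in Practice from Scratch, with VINS-Fusion as the Example

Preface

1. This article uses VINS-Fusion as its example for three reasons: the framework is conventional, simple, and clear, which makes it easy to debug and extend; the camera-IMU extrinsics and the time offset td can be estimated online; and it already includes a GPS fusion scheme, so fusing additional sensors is relatively straightforward.

2. This article aims for stable, reasonably accurate localization, so calibrating the IMU and camera is mandatory. To keep the article readable and not overly long, the detailed installation of Realsense and Realsense-ros, and the installation and use of the IMU and camera calibration tools, are left to the articles "Realsense d435i intrinsic and extrinsic calibration" and "Realsense d435i driver installation, configuration, and calibration".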

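I. Approach

1. Hardware

The hardware is an Intel NUC11TNKI7 plus a d435i.

Prerequisites

1. Installing Realsense and Realsense-ros

After installation, run realsense-viewer and enable the camera and motion module options. If the camera images and IMU data are displayed, the installation succeeded.

2. SLAM solution

VINS-Fusion is an optimization-based multi-sensor state estimator that provides accurate self-localization for autonomous applications (drones, cars, and AR/VR). It is an extension of VINS-Mono that supports several visual-inertial sensor configurations (mono camera + IMU, stereo cameras + IMU, or stereo cameras only) and can also fuse VINS with GPS. Its main features are: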

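(1) Multiple sensor support (stereo cameras / mono camera + IMU / stereo cameras + IMU)

(2) Online camera-IMU extrinsic calibration (the transformation between camera and IMU)

(3) Online temporal calibration (the time offset between camera and IMU)

(4) Visual loop closure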
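To illustrate feature (3): the configuration file used later in this article states the convention as "image clock + td = real image clock (IMU clock)". The sketch below only illustrates that convention; the function name `imu_between_frames` and the sample timestamps are hypothetical and are not taken from the VINS-Fusion source.

```python
def imu_between_frames(imu_stamps, prev_image_stamp, curr_image_stamp, td):
    """Return the IMU timestamps that fall between two image frames.

    Hypothetical helper. Both image stamps are first shifted by td so that
    they are expressed on the IMU clock (image clock + td = IMU clock).
    """
    start = prev_image_stamp + td
    end = curr_image_stamp + td
    return [t for t in imu_stamps if start < t <= end]


if __name__ == "__main__":
    # Synthetic timestamps in seconds, chosen only for illustration.
    imu = [0.0, 0.25, 0.5, 0.75, 1.0]
    print(imu_between_frames(imu, prev_image_stamp=0.0, curr_image_stamp=0.5, td=0.25))
```

A wrong td shifts this window, so IMU samples are attributed to the wrong pair of frames, which is one reason the offset is worth estimating.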

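II. Sensor Calibration

This step is arguably the key to stable operation and to resolving problems such as the estimate diverging or producing poor results.

Calibrating the d435i mainly involves IMU six-face calibration, IMU random-walk and noise calibration, camera intrinsic calibration, and IMU-camera extrinsic calibration. The first three are covered by the articles mentioned in the preface; what follows describes in detail how to calibrate the IMU-camera extrinsics with VINS-Fusion.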

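III. Pre-run Preparation

1. Configuration files

(1) Stereo camera parameters (left.yaml, right.yaml)

Fill in the previously calibrated stereo camera parameters. Taking the left camera as an example: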

left.yaml

model_type: PINHOLE
camera_name: camera
image_width: 640
image_height: 480
distortion_parameters:
   k1: 0.007532405272341989
   k2: -0.03198723534231893
   p1: -0.00015249992792258453
   p2: 0.001638891018727039
projection_parameters:
   fx: 391.57645976863694
   fy: 392.2173924045597
   cx: 326.83301528066227
   cy: 235.30947818084246
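To make the meaning of these parameters concrete, here is a minimal sketch of how a PINHOLE model with radial-tangential distortion maps a 3D point in the camera frame to a pixel. It assumes the standard radial-tangential formulation for (k1, k2, p1, p2); the function name `project_pinhole` is hypothetical and is not part of VINS-Fusion.

```python
# Intrinsics copied from the left.yaml listing above.
K1, K2 = 0.007532405272341989, -0.03198723534231893
P1, P2 = -0.00015249992792258453, 0.001638891018727039
FX, FY = 391.57645976863694, 392.2173924045597
CX, CY = 326.83301528066227, 235.30947818084246


def project_pinhole(point_cam):
    """Project a 3D point (camera frame, metres) to pixel coordinates.

    Hypothetical helper; assumes the standard radial-tangential model.
    """
    x_c, y_c, z_c = point_cam
    if z_c <= 0:
        raise ValueError("point must be in front of the camera (z > 0)")
    # Normalised image-plane coordinates.
    x, y = x_c / z_c, y_c / z_c
    r2 = x * x + y * y
    radial = K1 * r2 + K2 * r2 * r2
    # Radial plus tangential distortion offsets.
    dx = x * radial + 2.0 * P1 * x * y + P2 * (r2 + 2.0 * x * x)
    dy = y * radial + P1 * (r2 + 2.0 * y * y) + 2.0 * P2 * x * y
    # Apply focal lengths and principal point.
    return FX * (x + dx) + CX, FY * (y + dy) + CY


if __name__ == "__main__":
    # A point on the optical axis lands on the principal point (cx, cy).
    print(project_pinhole((0.0, 0.0, 1.0)))
    print(project_pinhole((0.2, -0.1, 2.0)))
```

The distortion coefficients here are small, which is what one would expect when the input images are already rectified, as the image_rect_raw topics suggest.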

(2) Runtime parameters (realsense_stereo_imu_config.yaml)

#common parameters
#support: 1 imu 1 cam; 1 imu 2 cam: 2 cam;
imu: 1
num_of_cam: 2
imu_topic: "/camera/imu"
image0_topic: "/camera/infra1/image_rect_raw"
image1_topic: "/camera/infra2/image_rect_raw"
output_path: "/home/ocean/vins_fuison/vins_output"
cam0_calib: "left.yaml"
cam1_calib: "right.yaml"
image_width: 640
image_height: 480

# Extrinsic parameter between IMU and Camera.
estimate_extrinsic: 1   # 0  Have an accurate extrinsic parameters. We will trust the following imu^R_cam, imu^T_cam, don't change it.
                        # 1  Have an initial guess about extrinsic parameters. We will optimize around your initial guess.
body_T_cam0: !!opencv-matrix
   rows: 4
   cols: 4
   dt: d
   data: [ 9.9983415896161643e-01, -3.9428551504684375e-03,
       1.7779439439409347e-02, -1.4253776535446258e-02,
       4.3522937648056296e-03, 9.9972485834939917e-01,
       -2.3049189514136877e-02, 2.2657863548970836e-01,
       -1.7683667959505291e-02, 2.3122748356027831e-02,
       9.9957622340467955e-01, -1.0893316869998337e+00, 0., 0., 0., 1. ]
body_T_cam1: !!opencv-matrix
   rows: 4
   cols: 4
   dt: d
   data: [ 9.9979604443066683e-01, -2.9603891467875603e-03,
       1.9977628410091861e-02, 9.0127435964667407e-03,
       3.4155776956196725e-03, 9.9973445341878342e-01,
       -2.2789393946561421e-02, 2.2694441090281392e-01,
       -1.9904857944665476e-02, 2.2852981064753180e-02,
       9.9954066344829451e-01, -1.0861428664701842e+00, 0., 0., 0., 1. ]

#Multiple thread support
multiple_thread: 1

#feature tracker parameters
max_cnt: 150            # max feature number in feature tracking
min_dist: 30            # min distance between two features
freq: 10                # frequency (Hz) of publishing tracking results. At least 10Hz for good estimation. If set to 0, the frequency will be the same as the raw image
F_threshold: 1.0        # ransac threshold (pixel)
show_track: 1           # publish tracking image as topic
flow_back: 1            # perform forward and backward optical flow to improve feature tracking accuracy

#optimization parameters
max_solver_time: 0.04   # max solver iteration time (s), i.e. 40 ms, to guarantee real time
max_num_iterations: 8   # max solver iterations, to guarantee real time
keyframe_parallax: 10.0 # keyframe selection threshold (pixel)

#imu parameters       The more accurate parameters you provide, the better performance
acc_n: 1.7512828345266122e-02   # accelerometer measurement noise standard deviation.
gyr_n: 3.3502044635737617e-03   # gyroscope measurement noise standard deviation.
acc_w: 4.2528647943077756e-04   # accelerometer bias random walk noise standard deviation.
gyr_w: 4.8760608618583259e-05   # gyroscope bias random walk noise standard deviation.
g_norm: 9.8046                  # gravity magnitude

#unsynchronization parameters
estimate_td: 1          # online estimate time offset between camera and imu
td: 0.002004            # initial value of time offset. unit: s. read image clock + td = real image clock (IMU clock)

#loop closure parameters
load_previous_pose_graph: 1   # load and reuse previous pose graph; load from 'pose_graph_save_path'
pose_graph_save_path: "/home/ocean/vins_fuison/vins_output/pose_graph/" # save and load path
save_image: 1                 # save image in pose graph for visualization purpose; you can close this function by setting 0
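Before running with a hand-edited extrinsic, it can help to check that each body_T_cam matrix is still a valid rigid transform and that the two camera positions imply a plausible stereo baseline. The following is a minimal sketch under that assumption; the helper names are hypothetical, and the matrices are the body_T_cam0 and body_T_cam1 values from the configuration above.

```python
import math

# Row-major 4x4 transforms copied from the configuration above.
BODY_T_CAM0 = [
    9.9983415896161643e-01, -3.9428551504684375e-03, 1.7779439439409347e-02, -1.4253776535446258e-02,
    4.3522937648056296e-03, 9.9972485834939917e-01, -2.3049189514136877e-02, 2.2657863548970836e-01,
    -1.7683667959505291e-02, 2.3122748356027831e-02, 9.9957622340467955e-01, -1.0893316869998337e+00,
    0.0, 0.0, 0.0, 1.0,
]
BODY_T_CAM1 = [
    9.9979604443066683e-01, -2.9603891467875603e-03, 1.9977628410091861e-02, 9.0127435964667407e-03,
    3.4155776956196725e-03, 9.9973445341878342e-01, -2.2789393946561421e-02, 2.2694441090281392e-01,
    -1.9904857944665476e-02, 2.2852981064753180e-02, 9.9954066344829451e-01, -1.0861428664701842e+00,
    0.0, 0.0, 0.0, 1.0,
]


def rotation_and_translation(t_flat):
    """Split a row-major 4x4 transform into a 3x3 rotation and a translation."""
    rows = [t_flat[4 * i:4 * i + 4] for i in range(4)]
    return [row[:3] for row in rows[:3]], [row[3] for row in rows[:3]]


def orthonormality_error(rot):
    """Largest absolute entry of R * R^T - I (0 for a perfect rotation)."""
    worst = 0.0
    for i in range(3):
        for j in range(3):
            dot = sum(rot[i][k] * rot[j][k] for k in range(3))
            worst = max(worst, abs(dot - (1.0 if i == j else 0.0)))
    return worst


def determinant(r):
    """Determinant of a 3x3 matrix; +1 for a proper rotation."""
    return (r[0][0] * (r[1][1] * r[2][2] - r[1][2] * r[2][1])
            - r[0][1] * (r[1][0] * r[2][2] - r[1][2] * r[2][0])
            + r[0][2] * (r[1][0] * r[2][1] - r[1][1] * r[2][0]))


def stereo_baseline(t_cam0, t_cam1):
    """Distance in metres between the two camera origins in the body frame."""
    _, p0 = rotation_and_translation(t_cam0)
    _, p1 = rotation_and_translation(t_cam1)
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(p0, p1)))


if __name__ == "__main__":
    for name, mat in (("body_T_cam0", BODY_T_CAM0), ("body_T_cam1", BODY_T_CAM1)):
        rot, _ = rotation_and_translation(mat)
        print(name, "orthonormality error:", orthonormality_error(rot),
              "det:", determinant(rot))
    print("baseline [m]:", stereo_baseline(BODY_T_CAM0, BODY_T_CAM1))
```

The printed baseline can be compared with the value obtained from the stereo calibration; a large mismatch suggests the extrinsics have not converged yet.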

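2. Extrinsic calibration

VINS-Fusion can optimize the camera-IMU extrinsics online. To use this, find a relatively enclosed scene, walk slowly around it once while holding the device, and record the data to a bag. The steps are as follows:

(1) Start the d435i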

- source devel/setup.bash

- roslaunch realsense2_camera rs_camera.launch

(2) Record a bag

- source devel/setup.bash

- rosbag record -O IndoorScene /camera/imu /camera/infra1/image_rect_raw /camera/infra2/image_rect_raw

(3) Run

Create a shfiles folder in the workspace

- mkdir -p shfiles

Write the script run.sh

- touch run.sh

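# Start RViz with the VINS layout, then give it time to come up.
roslaunch vins vins_rviz.launch & sleep 5;
# Replay the recorded bag in the background.
rosbag play bag/IndoorScene.bag & sleep 5;
# vins_node and loop_fusion_node are executables, so they are started with rosrun.
rosrun vins vins_node src/VINS-Fusion/config/realsense_d435i/realsense_stereo_imu_config.yaml & sleep 3;
rosrun loop_fusion loop_fusion_node src/VINS-Fusion/config/realsense_d435i/realsense_stereo_imu_config.yaml;
# Wait for the background jobs to finish.
wait;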

- cd vins_fusion

- source devel/setup.bash

- sh run.sh

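In the output path, take the data in extrinsic_parameter.txt and use it to replace the extrinsics in realsense_stereo_imu_config.yaml. After each replacement, run again; repeat this offline several times until the extrinsics converge to a satisfactory result.

Note: optimizing the extrinsics by replaying the recorded bag offline several times avoids having to repeat the walk.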
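One way to decide when the extrinsics have "converged" is to compare the estimate from one offline run with the estimate from the next and stop when the change is small. The sketch below assumes each estimate has already been read into a 3x3 rotation and a 3-vector translation (parsing extrinsic_parameter.txt is omitted, since its layout is not shown here). The function names and the thresholds of 0.1 degrees and 5 mm are illustrative assumptions, not values from VINS-Fusion.

```python
import math


def rotation_angle_deg(r_a, r_b):
    """Angle of the relative rotation R_a^T * R_b, in degrees."""
    # trace(R_a^T R_b) equals the sum of element-wise products.
    trace = sum(r_a[i][j] * r_b[i][j] for i in range(3) for j in range(3))
    cos_angle = max(-1.0, min(1.0, (trace - 1.0) / 2.0))
    return math.degrees(math.acos(cos_angle))


def translation_change(t_a, t_b):
    """Euclidean distance between two translations, in metres."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(t_a, t_b)))


def has_converged(prev, curr, max_angle_deg=0.1, max_shift_m=0.005):
    """Compare two (rotation, translation) estimates from successive runs.

    The default thresholds are hypothetical; tune them for your setup.
    """
    angle = rotation_angle_deg(prev[0], curr[0])
    shift = translation_change(prev[1], curr[1])
    return angle <= max_angle_deg and shift <= max_shift_m


if __name__ == "__main__":
    identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    a = math.radians(1.0)
    # Synthetic second estimate: rotated by 1 degree about z, shifted by 1 mm.
    rot_z = [[math.cos(a), -math.sin(a), 0.0],
             [math.sin(a), math.cos(a), 0.0],
             [0.0, 0.0, 1.0]]
    print(has_converged((identity, [0.0, 0.0, 0.0]), (rot_z, [0.001, 0.0, 0.0])))
```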

IV. Results

After the previous step we have reasonably accurate IMU, camera, and IMU-camera parameters, and formal testing can begin.

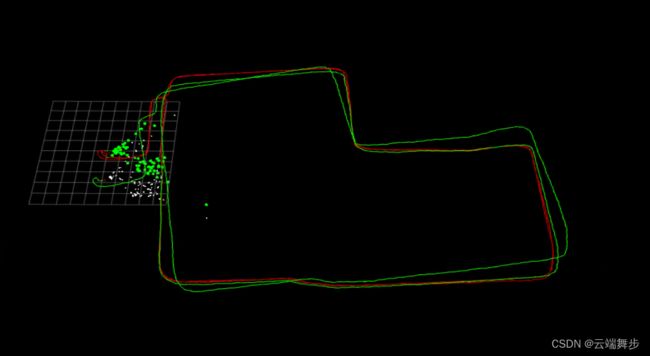

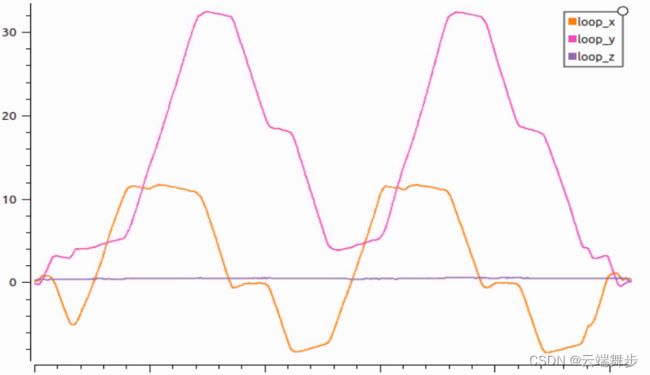

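The test environment is an 800 m² office area, and the route passes multiple doors, corridors, and lights. To test repeat-localization accuracy, the route was walked twice. The overall results from the recorded run are shown in the figures: Figure 1 is the actual walked trajectory, Figure 2 the xyz odometry curves, and Figure 3 the xyz loop-closure curves.

Judging from the trajectories, the overall result is good: the odometry trajectory drifts, while the loop-closed trajectories almost coincide.

The odometry curves show that the odometry trajectory drifts, most of all along the z axis, by about 1.5 m.

After loop closure, the two laps essentially coincide, and the maximum z-axis drift is within 30 cm.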
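The per-axis drift figures above can be obtained by comparing the positions recorded at the same physical places on the two laps. The following is a minimal sketch of that comparison; the function name `max_axis_deviation` is hypothetical, the sample positions are synthetic and are not the measurements from this test, and it assumes the two laps have already been associated index by index.

```python
def max_axis_deviation(lap_a, lap_b):
    """Largest absolute difference per axis between two associated laps.

    lap_a and lap_b are equal-length lists of (x, y, z) positions, where
    entry i of each lap was recorded at the same physical place.
    """
    if len(lap_a) != len(lap_b) or not lap_a:
        raise ValueError("laps must be non-empty and have the same length")
    return tuple(
        max(abs(a[axis] - b[axis]) for a, b in zip(lap_a, lap_b))
        for axis in range(3)
    )


if __name__ == "__main__":
    # Synthetic positions in metres, for illustration only.
    first_lap = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (1.0, 2.0, 0.0)]
    second_lap = [(0.0, 0.0, 0.1), (1.0, 0.05, 0.3), (1.1, 2.0, 0.2)]
    dx, dy, dz = max_axis_deviation(first_lap, second_lap)
    print("max deviation per axis [m]:", dx, dy, dz)
```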

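Video

bilibili: d435i + vins-fusion indoor test

Coming next:

The following articles will break down the key parts of vins_fusion in detail, roughly covering:

1. The overall VINS-Fusion framework and data-flow analysis;

2. Front end: the principle of feature tracking based on LK optical flow, how the source code handles the details, and its pros and cons compared with the feature-point method;

3. Back end: pose estimation based on BA optimization, mainly how to construct the IMU residual, the left-right stereo residual, the monocular residual between two consecutive frames, and the residual between the previous frame's left image and the current frame's right image;

4. Loop detection: analysis of loop detection based on the DBoW bag-of-words model;

5. Other topics: online extrinsic estimation, 3D-2D PnP solving, triangulation, and how initialization achieves pose alignment.

References: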

https://blog.csdn.net/u010196944/article/details/127239342

https://blog.csdn.net/u010196944/article/details/127238908

https://github.com/HKUST-Aerial-Robotics/VINS-Fusion