PyTorch Basics (Chapter 5: The PyTorch Training Process)

Course overview:

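Contents

I. Introduction to TensorBoard and Installation

1. What Is TensorBoard

2. Installing TensorBoard

II. Using TensorBoard

III. TensorBoard

1. The SummaryWriter Class

2. TensorBoard Methods

IV. Hook Functions and CAM Visualization

1. The Hook Function Concept

2. Hook Functions and Feature Map Extraction

3. CAM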

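I. Introduction to TensorBoard and Installation

1. What Is TensorBoard

This section covers monitoring the iterative training process.

TensorBoard: a powerful visualization tool from TensorFlow.

It supports visualizing many kinds of data, including scalars, images, text, audio, video, and embeddings.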

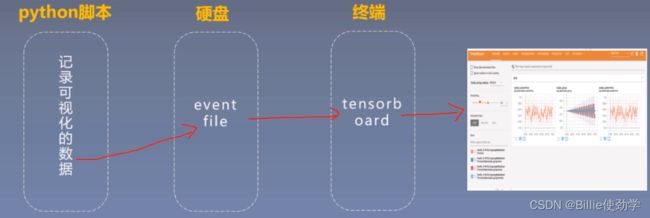

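How it works: the Python script records data to event files on disk, and TensorBoard reads those files and renders them in a web browser.

Code:

Record visualization data and save it to disk: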

import numpy as np

from torch.utils.tensorboard import SummaryWriter

#记录可视化数据

writer = SummaryWriter(comment='test_tensorboard')

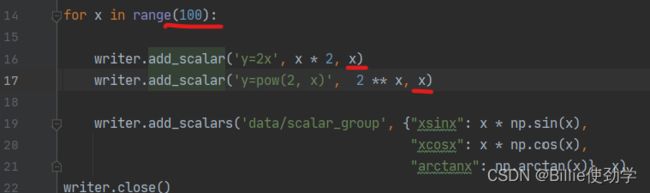

for x in range(100):

writer.add_scalar('y=2x', x * 2, x)

writer.add_scalar('y=pow(2, x)', 2 ** x, x)

writer.add_scalars('data/scalar_group', {"xsinx": x * np.sin(x),

"xcosx": x * np.cos(x),

"arctanx": np.arctan(x)}, x)

writer.close()2.TensorBoard安装

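Install it in the current environment: pip install tensorboard

Installation notes

With some versions, the following error may appear after pip install tensorboard:

ModuleNotFoundError: No module named 'past'

It is fixed with pip install future.

After running the code above, the visualization data has been written to disk (in a new runs folder).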

二、TensorBoard的使用

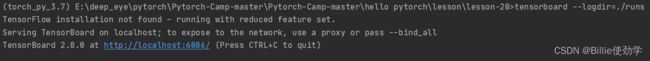

读取event file

命令行中跳转到当前文件的父文件夹,即上图所示的lesson-20文件夹

输入dir可查看当前文件夹下的目录

可以看到runs已经在目录中

使用tensorboard --logdir=./runs 将想要可视化的event所在的文件夹放到logdir中,即event的父目录

这样就显示已经运行了,下面这个网址可以直接进入默认的浏览器打开可视化界面

点击链接或复制链接到浏览器。

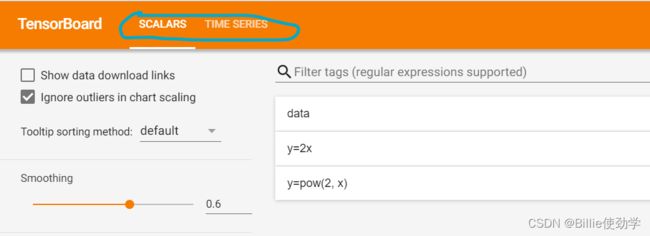

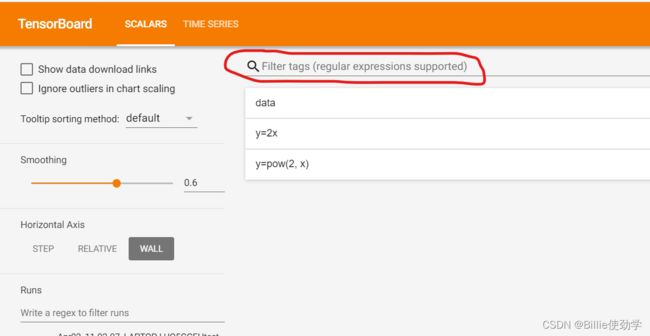

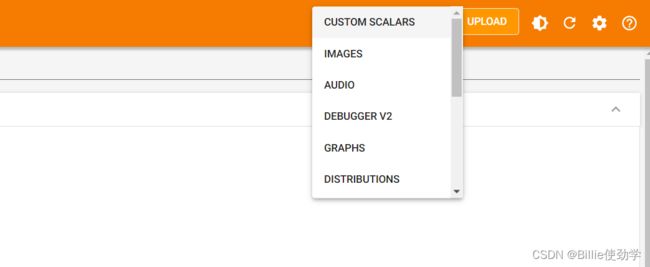

那么如何使用这个界面呢?

这里显示所记录的数据的类型

右上角的INACTIVE显示所有可以可视化的数据类型

右面图标依次为界面样式(light or dark)、刷新、设置多长时间自动刷新(实现实时监测功能)

工具栏:

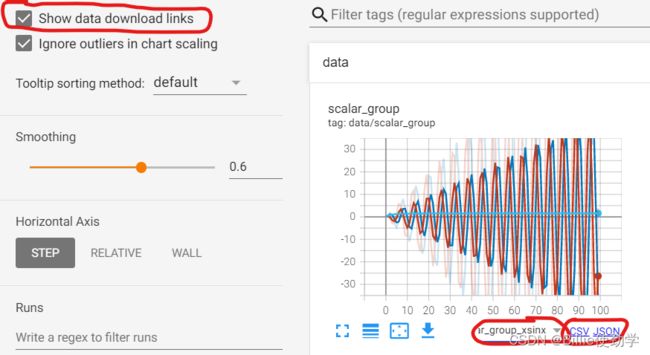

show data download linnks :显示下载链接,可以下载为csv或json格式

下载时需要选择下载哪个曲线(run to download),不选择则下载失败

ignore outilers in char scaling:忽略离群点

当不勾选时,y值达到了10^29,太大了

勾选,则会忽视太大的值

Tooltip sorting method:显示排序功能,如果有多条曲线,则会调整多条曲线的排序 ,一般用default

smoothing:平滑

可以看到,其实隐阴影的部分才是真实值 ,此处使用了0.6的平滑

那我们不使用平滑看一下效果

不使用平滑就显示了真实值,我们一般默认使用0.6的平滑值

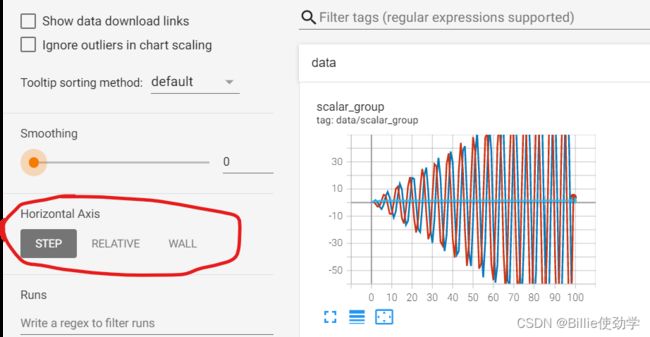

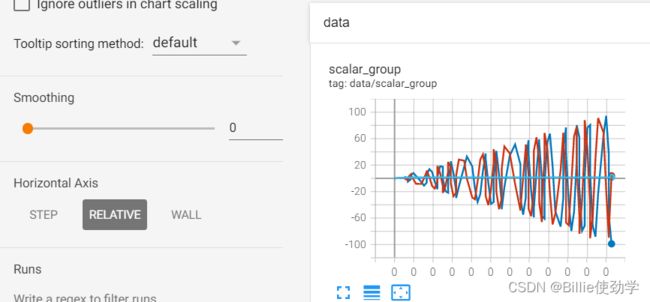
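To make the smoothing slider concrete, here is a minimal sketch of an exponential moving average with bias correction. It assumes this mirrors TensorBoard's scalar smoothing (recent versions use a debiased EMA, but the exact details may vary between versions), and the function name smooth is hypothetical. A weight of 0 returns the raw values; larger weights flatten the curve more.

```python
def smooth(values, weight=0.6):
    """Debiased exponential moving average (a sketch of TensorBoard-style smoothing).

    0 <= weight < 1: weight=0 returns the raw values, weights closer to 1 smooth more.
    """
    smoothed, last = [], 0.0
    for n, v in enumerate(values, start=1):
        last = last * weight + (1 - weight) * v
        debias = 1 - weight ** n          # undo the pull toward the initial value 0
        smoothed.append(last / debias)
    return smoothed


noisy = [1.0, 0.0, 1.0, 0.0, 1.0, 0.0]
print([round(s, 3) for s in smooth(noisy)])   # still zig-zags, but with a much smaller swing
print(smooth(noisy, weight=0.0) == noisy)     # True: no smoothing
```

The bias correction is why the first smoothed point equals the first raw point instead of being pulled toward zero.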

Horizontal axis:x轴的设置

三种模式:一般使用step,表示的是代码中记录的x,记录多少,x轴就是多少

可以看到x轴显示的是0-100

relative是相对训练时间,以小时为单位

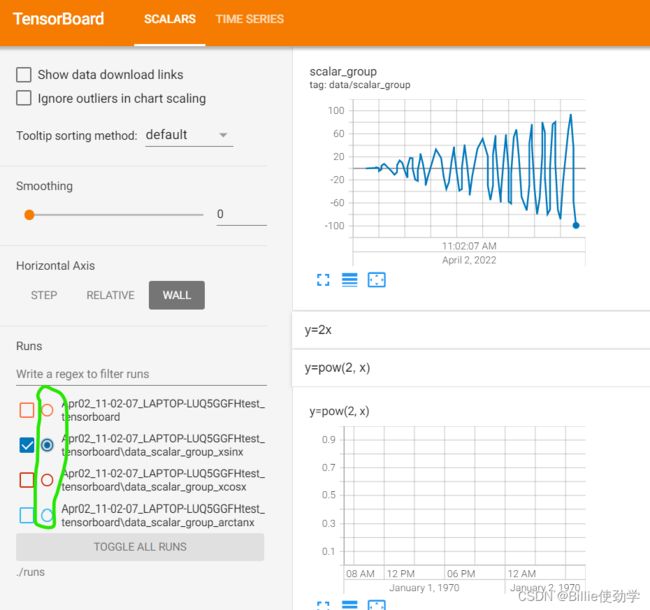

wall是绝对时间单位

runs可以选择event file

左边的方框用来选择是否绘制,不勾选就不绘制

当用很多条曲线时,圆圈表示仅绘制一条曲线,其余曲线不显示

放大镜表示根据tag搜索,tag就是每个图像的名字如data、y=2x等

以上就是tensorboard中的界面元素了

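III. TensorBoard

1. The SummaryWriter Class

SummaryWriter

Purpose: provides a high-level interface for creating event files.

Main parameters:

- log_dir: output folder for the event files

- comment: suffix appended to the folder name when log_dir is not specified

- filename_suffix: suffix appended to the event file name

Code: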

```python
from torch.utils.tensorboard import SummaryWriter

# ----------------------------------- 0 SummaryWriter -----------------------------------
# flag switches this demo on (1) or off (0)
# flag = 0
flag = 1
if flag:
    log_dir = "./train_log/test_log_dir"
    writer = SummaryWriter(log_dir=log_dir, comment='_scalars', filename_suffix="12345678")
    # writer = SummaryWriter(comment='_scalars', filename_suffix="12345678")

    for x in range(100):
        writer.add_scalar('y=pow_2_x', 2 ** x, x)

    writer.close()
```

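If log_dir is specified:

that directory is created and the event file name ends with the given filename_suffix. When log_dir is set, the comment argument has no effect.

If log_dir is not set, a runs folder is created in the current directory, and the name of this run's subfolder ends with comment.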
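The folder-naming rule above can be sketched as follows. It mirrors the logic in recent versions of torch.utils.tensorboard.SummaryWriter (a timestamp plus the host name plus comment, under runs), but resolve_log_dir is a hypothetical helper and the exact format may differ between PyTorch versions.

```python
import os
import socket
from datetime import datetime


def resolve_log_dir(log_dir=None, comment=""):
    """Sketch of how SummaryWriter picks its output folder (hypothetical helper)."""
    if log_dir:
        # An explicit log_dir is used as-is; comment is ignored
        return log_dir
    # Otherwise: runs/<MonDD_HH-MM-SS>_<hostname><comment>
    current_time = datetime.now().strftime("%b%d_%H-%M-%S")
    return os.path.join("runs", current_time + "_" + socket.gethostname() + comment)


print(resolve_log_dir("./train_log/test_log_dir", comment="_scalars"))  # ./train_log/test_log_dir
print(resolve_log_dir(comment="_scalars"))  # runs/<timestamp>_<hostname>_scalars
```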

2.TensorBoard的方法

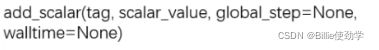

(1)add_scalar()

功能:记录标量,只能记录一条曲线

- tag:图像的标签名,图的唯一标识

- scalar_value:要记录的标量,y轴

- global_step:x轴,epoch或iteration

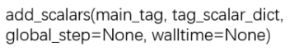

(2)add_scalars()

功能:可以记录多条曲线

- main_tag:该图的标签

- tag_scalar_dict:key是变量的tag,value的变量的值

```python
import numpy as np
from torch.utils.tensorboard import SummaryWriter

# ----------------------------------- 1 scalar and scalars -----------------------------------
flag = 0
# flag = 1
if flag:
    max_epoch = 100

    writer = SummaryWriter(comment='test_comment', filename_suffix="test_suffix")

    for x in range(max_epoch):
        writer.add_scalar('y=2x', x * 2, x)
        writer.add_scalar('y=pow_2_x', 2 ** x, x)

        writer.add_scalars('data/scalar_group', {"xsinx": x * np.sin(x),
                                                 "xcosx": x * np.cos(x)}, x)

    writer.close()
```

Run tensorboard --logdir=./runs in the terminal to open the visualization page.

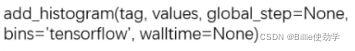

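(3) add_histogram()

Purpose: records histograms and multi-quantile line charts (shown in the HISTOGRAMS and DISTRIBUTIONS tabs).

- tag: label of the chart, its unique identifier

- values: the data to summarize

- global_step: the step; in the histogram view, successive steps are stacked along the vertical axis

- bins: how the histogram bins are chosen; the default is 'tensorflow'

Code: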

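```python
import numpy as np
import matplotlib.pyplot as plt
from torch.utils.tensorboard import SummaryWriter

# ----------------------------------- 2 histogram -----------------------------------
# flag = 0
flag = 1
if flag:
    writer = SummaryWriter(comment='test_comment', filename_suffix="test_suffix")

    for x in range(2):
        np.random.seed(x)

        data_union = np.arange(100)                 # evenly spread (uniform) values
        data_normal = np.random.normal(size=1000)   # standard normal samples

        writer.add_histogram('distribution union', data_union, x)
        writer.add_histogram('distribution normal', data_normal, x)

        plt.subplot(121).hist(data_union, label="union")
        plt.subplot(122).hist(data_normal, label="normal")
        plt.legend()
        plt.show()

    writer.close()
```

Output:

In each figure, the left panel is the uniform data and the right panel is the normal data.

Run tensorboard --logdir=./runs in the terminal.

Make sure to run this command from the script's folder, so that ./runs points to the right place.

The histograms of the uniform and normal distributions are then visible.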

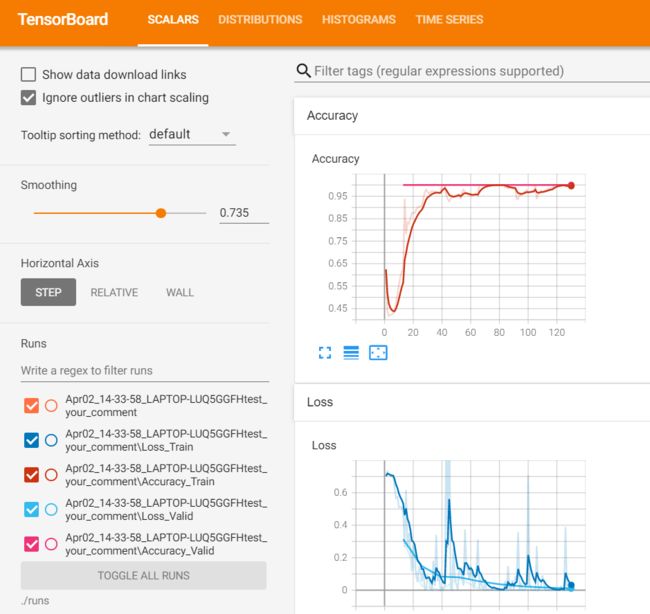
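What add_histogram summarizes can be checked directly with NumPy. This sketch bins the same kinds of data with np.histogram, using 10 equal-width bins chosen here for illustration (TensorBoard's default 'tensorflow' binning is different): the np.arange(100) data fills every bin equally, while the normal samples pile up in the middle bins.

```python
import numpy as np

np.random.seed(0)
data_union = np.arange(100)                 # same data as the 'distribution union' tag
data_normal = np.random.normal(size=1000)   # same kind of data as 'distribution normal'

counts_u, edges_u = np.histogram(data_union, bins=10)
counts_n, edges_n = np.histogram(data_normal, bins=10)

print(counts_u)   # [10 10 10 10 10 10 10 10 10 10]: a flat histogram
print(counts_n)   # bell-shaped: most samples fall in the middle bins
```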

Application: use these methods to monitor the model's loss and accuracy curves, as well as the distributions of the parameters and of their gradients.

Build the SummaryWriter:

```python
# Build the SummaryWriter
writer = SummaryWriter(comment='test_your_comment', filename_suffix="_test_your_filename_suffix")
```

Record the training loss and accuracy to the event file (every iteration):

```python
# Record data to the event file
writer.add_scalars("Loss", {"Train": loss.item()}, iter_count)
writer.add_scalars("Accuracy", {"Train": correct / total}, iter_count)
```

Record the gradient and value of every parameter once per epoch:

```python
# Once per epoch, record gradients and weights
for name, param in net.named_parameters():
    writer.add_histogram(name + '_grad', param.grad, epoch)
    writer.add_histogram(name + '_data', param, epoch)
```

Record the validation loss and accuracy:

```python
# Record data to the event file
writer.add_scalars("Loss", {"Valid": loss_mean_epoch}, iter_count)
writer.add_scalars("Accuracy", {"Valid": correct_val / total_val}, iter_count)
```

Visualization: scalars

tensorboard可视化:可以实时监控变化

pycharm可视化:可以看到与tensorboard可视化界面是一致的

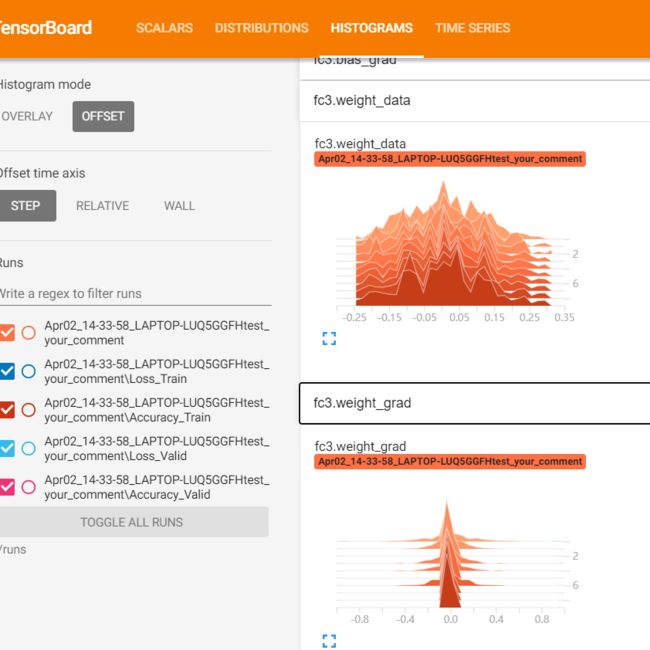

我们再来看看histtograms:其中记录着每个参数(bias、weight)的梯度及数据

可以看到越到后面梯度越小,是因为到后面loss就小了

如果前面的卷积层的梯度很小,可能是梯度消失,需要观察后面的全连接层的梯度

如果全连接层的梯度同样小,说明loss本身就小,如果全连接层loss大,说明发生了梯度消失

全部代码:

```python
import os
import sys

import numpy as np
import torch
import torch.nn as nn
import torch.optim as optim
import torchvision.transforms as transforms
from matplotlib import pyplot as plt
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter

hello_pytorch_DIR = os.path.abspath(os.path.dirname(__file__)+os.path.sep+".."+os.path.sep+"..")
sys.path.append(hello_pytorch_DIR)

from model.lenet import LeNet
from tools.my_dataset import RMBDataset
from tools.common_tools import set_seed

set_seed()  # set the random seed
rmb_label = {"1": 0, "100": 1}

# Hyperparameters
MAX_EPOCH = 10
BATCH_SIZE = 16
LR = 0.01
log_interval = 10
val_interval = 1

# ============================ step 1/5 data ============================

BASE_DIR = os.path.dirname(os.path.abspath(__file__))
split_dir = os.path.abspath(os.path.join(BASE_DIR, "..", "..", "data", "RMB_data", "rmb_split"))
if not os.path.exists(split_dir):
    raise Exception(r"Data {} not found; run lesson-06\1_split_dataset.py to generate it".format(split_dir))
train_dir = os.path.join(split_dir, "train")
valid_dir = os.path.join(split_dir, "valid")

norm_mean = [0.485, 0.456, 0.406]
norm_std = [0.229, 0.224, 0.225]

train_transform = transforms.Compose([
    transforms.Resize((32, 32)),
    transforms.RandomCrop(32, padding=4),
    transforms.RandomGrayscale(p=0.8),
    transforms.ToTensor(),
    transforms.Normalize(norm_mean, norm_std),
])

valid_transform = transforms.Compose([
    transforms.Resize((32, 32)),
    transforms.ToTensor(),
    transforms.Normalize(norm_mean, norm_std),
])

# Build the MyDataset instances
train_data = RMBDataset(data_dir=train_dir, transform=train_transform)
valid_data = RMBDataset(data_dir=valid_dir, transform=valid_transform)

# Build the DataLoaders
train_loader = DataLoader(dataset=train_data, batch_size=BATCH_SIZE, shuffle=True)
valid_loader = DataLoader(dataset=valid_data, batch_size=BATCH_SIZE)

# ============================ step 2/5 model ============================

net = LeNet(classes=2)
net.initialize_weights()

# ============================ step 3/5 loss function ============================

criterion = nn.CrossEntropyLoss()  # choose the loss function

# ============================ step 4/5 optimizer ============================

optimizer = optim.SGD(net.parameters(), lr=LR, momentum=0.9)  # choose the optimizer
scheduler = torch.optim.lr_scheduler.StepLR(optimizer, step_size=10, gamma=0.1)  # learning-rate decay schedule

# ============================ step 5/5 training ============================

train_curve = list()
valid_curve = list()

iter_count = 0

# Build the SummaryWriter
writer = SummaryWriter(comment='test_your_comment', filename_suffix="_test_your_filename_suffix")

for epoch in range(MAX_EPOCH):

    loss_mean = 0.
    correct = 0.
    total = 0.

    net.train()
    for i, data in enumerate(train_loader):

        iter_count += 1

        # forward
        inputs, labels = data
        outputs = net(inputs)

        # backward
        optimizer.zero_grad()
        loss = criterion(outputs, labels)
        loss.backward()

        # update weights
        optimizer.step()

        # classification statistics
        _, predicted = torch.max(outputs.data, 1)
        total += labels.size(0)
        correct += (predicted == labels).squeeze().sum().numpy()

        # print training info
        loss_mean += loss.item()
        train_curve.append(loss.item())
        if (i+1) % log_interval == 0:
            loss_mean = loss_mean / log_interval
            print("Training:Epoch[{:0>3}/{:0>3}] Iteration[{:0>3}/{:0>3}] Loss: {:.4f} Acc:{:.2%}".format(
                epoch, MAX_EPOCH, i+1, len(train_loader), loss_mean, correct / total))
            loss_mean = 0.

        # record data to the event file
        writer.add_scalars("Loss", {"Train": loss.item()}, iter_count)
        writer.add_scalars("Accuracy", {"Train": correct / total}, iter_count)

    # once per epoch, record gradients and weights
    for name, param in net.named_parameters():
        writer.add_histogram(name + '_grad', param.grad, epoch)
        writer.add_histogram(name + '_data', param, epoch)

    scheduler.step()  # update the learning rate

    # validate the model
    if (epoch+1) % val_interval == 0:

        correct_val = 0.
        total_val = 0.
        loss_val = 0.
        net.eval()
        with torch.no_grad():
            for j, data in enumerate(valid_loader):
                inputs, labels = data
                outputs = net(inputs)
                loss = criterion(outputs, labels)

                _, predicted = torch.max(outputs.data, 1)
                total_val += labels.size(0)
                correct_val += (predicted == labels).squeeze().sum().numpy()

                loss_val += loss.item()

        loss_mean_epoch = loss_val / len(valid_loader)  # mean validation loss for this epoch
        valid_curve.append(loss_mean_epoch)  # record one loss per epoch
        print("Valid:\t Epoch[{:0>3}/{:0>3}] Iteration[{:0>3}/{:0>3}] Loss: {:.4f} Acc:{:.2%}".format(
            epoch, MAX_EPOCH, j+1, len(valid_loader), loss_mean_epoch, correct_val / total_val))

        # record data to the event file
        writer.add_scalars("Loss", {"Valid": loss_mean_epoch}, iter_count)
        writer.add_scalars("Accuracy", {"Valid": correct_val / total_val}, iter_count)

writer.close()

train_x = range(len(train_curve))
train_y = train_curve

train_iters = len(train_loader)
valid_x = np.arange(1, len(valid_curve)+1) * train_iters*val_interval  # valid_curve holds one loss per epoch, so convert its x positions to iterations
valid_y = valid_curve

plt.plot(train_x, train_y, label='Train')
plt.plot(valid_x, valid_y, label='Valid')

plt.legend(loc='upper right')
plt.ylabel('loss value')
plt.xlabel('Iteration')
plt.show()
```

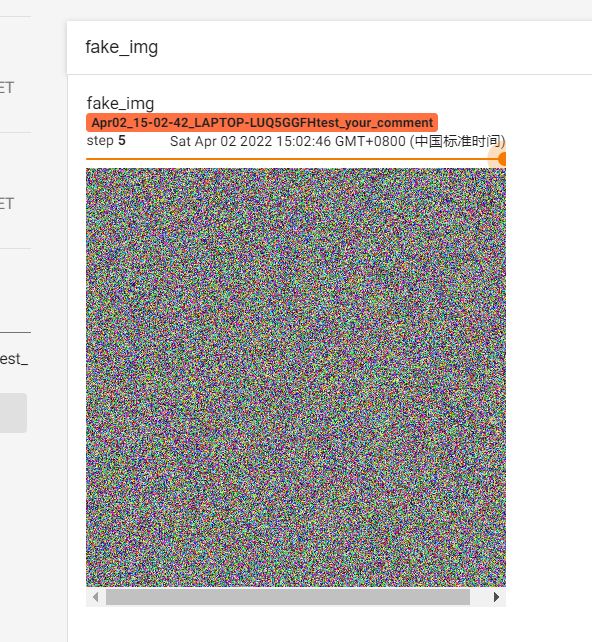

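(4) add_image()

Purpose: records an image.

- tag: label of the image, its unique identifier

- img_tensor: image data; pay attention to its value range

- After preprocessing for the network, pixel values lie in 0-1, which is not a displayable format, so they have to be scaled to 0-255

- If the pixel values are within 0-1, they are automatically multiplied by 255

- If any pixel value is greater than 1, the data is assumed to be in 0-255 already

- global_step: x axis (step)

- dataformats: data layout: CHW (default), HWC, or HW

Code: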

```python
import time

import torch
from torch.utils.tensorboard import SummaryWriter

# ----------------------------------- 3 image -----------------------------------
flag = 0
# flag = 1
if flag:
    writer = SummaryWriter(comment='test_your_comment', filename_suffix="_test_your_filename_suffix")

    # img 1 random
    fake_img = torch.randn(3, 512, 512)
    writer.add_image("fake_img", fake_img, 1)
    time.sleep(1)

    # img 2 ones
    fake_img = torch.ones(3, 512, 512)
    time.sleep(1)
    writer.add_image("fake_img", fake_img, 2)

    # img 3 1.1
    fake_img = torch.ones(3, 512, 512) * 1.1
    time.sleep(1)
    writer.add_image("fake_img", fake_img, 3)

    # img 4 HW
    fake_img = torch.rand(512, 512)
    writer.add_image("fake_img", fake_img, 4, dataformats="HW")

    # img 5 HWC
    fake_img = torch.rand(512, 512, 3)
    writer.add_image("fake_img", fake_img, 5, dataformats="HWC")

    writer.close()
```

Output:

img1: torch.randn produces values greater than 1, so the image is treated as being in 0-255.

img2: all pixels are 1, i.e. within 0-1, so they are scaled up to 255.

img3: all pixels are 1.1, so the range is assumed to be 0-255.

img4: a grayscale image (HW).

img5: a color image supplied in HWC layout.

In this view, images logged under the same tag have to be stepped through one at a time, which is tedious. How can many images be shown at once? torchvision.utils.make_grid does exactly that.

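torchvision.utils.make_grid

Purpose: builds a grid image with one image in each cell.

- tensor: image data in B*C*H*W form

- nrow: number of images per row (the number of rows is computed automatically)

- padding: spacing between images, in pixels

- normalize: whether to shift the pixel values into the range 0-1 (using the min and max, or range if given); default False

- range: the range used for normalization (renamed value_range in newer torchvision releases)

- For example, if the data spans [-1000, 2000] and only the middle matters, set it to (-500, 600): values above 600 become 600, values below -500 become -500, and the result is then rescaled to 0-1

- scale_each: whether to normalize each image separately

- Images can have different value ranges; True normalizes each image by its own min and max, False normalizes all images in the tensor together

- pad_value: pixel value of the padding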
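The parameters above can be made concrete with a simplified NumPy re-implementation of make_grid's layout and normalization. make_grid_sketch is a hypothetical name; it follows the behavior described in the list but omits edge cases such as the special handling of a single image and the conversion of 1-channel input to 3 channels. With 16 images of 3×32×64, nrow=4 and padding=2, the grid is (32+2)·4+2 = 138 pixels high and (64+2)·4+2 = 266 pixels wide.

```python
import math

import numpy as np


def make_grid_sketch(batch, nrow=8, padding=2, normalize=False,
                     value_range=None, scale_each=False, pad_value=0.0):
    """Simplified, illustrative version of torchvision.utils.make_grid.

    batch: array of shape (B, C, H, W); returns an array of shape (C, H_grid, W_grid).
    """
    batch = np.asarray(batch, dtype=np.float64).copy()

    def norm(img, low, high):
        img = np.clip(img, low, high)                # clamp to [low, high]
        return (img - low) / max(high - low, 1e-5)   # then rescale to [0, 1]

    if normalize:
        if scale_each:   # each image uses its own min/max (or value_range)
            batch = np.stack([norm(img, *(value_range or (img.min(), img.max())))
                              for img in batch])
        else:            # one min/max (or value_range) for the whole batch
            batch = norm(batch, *(value_range or (batch.min(), batch.max())))

    b, c, h, w = batch.shape
    xmaps = min(nrow, b)             # images per row
    ymaps = math.ceil(b / xmaps)     # number of rows
    grid = np.full((c, (h + padding) * ymaps + padding,
                    (w + padding) * xmaps + padding), pad_value, dtype=np.float64)
    for k in range(b):
        row, col = divmod(k, xmaps)
        top = row * (h + padding) + padding
        left = col * (w + padding) + padding
        grid[:, top:top + h, left:left + w] = batch[k]
    return grid


grid = make_grid_sketch(np.random.rand(16, 3, 32, 64), nrow=4, padding=2)
print(grid.shape)  # (3, 138, 266)

# range / value_range: -1000 and 2000 are clamped to -500 and 600 before rescaling
clamped = make_grid_sketch(np.array([[[[-1000.0, 0.0, 2000.0]]]]), nrow=1, padding=0,
                           normalize=True, value_range=(-500, 600))
print(clamped)
```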

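Code:

```python
import os

import torchvision.transforms as transforms
import torchvision.utils as vutils
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter

from tools.my_dataset import RMBDataset  # the course's dataset helper (see the full code above for the sys.path setup)

# ----------------------------------- 4 make_grid -----------------------------------
# flag = 0
flag = 1
if flag:
    writer = SummaryWriter(comment='test_your_comment', filename_suffix="_test_your_filename_suffix")

    split_dir = os.path.join("..", "..", "data", "RMB_data", "rmb_split")
    train_dir = os.path.join(split_dir, "train")
    # train_dir = "path to your training data"

    transform_compose = transforms.Compose([transforms.Resize((32, 64)), transforms.ToTensor()])
    train_data = RMBDataset(data_dir=train_dir, transform=transform_compose)
    train_loader = DataLoader(dataset=train_data, batch_size=16, shuffle=True)
    data_batch, label_batch = next(iter(train_loader))

    # img_grid = vutils.make_grid(data_batch, nrow=4, normalize=True, scale_each=True)
    img_grid = vutils.make_grid(data_batch, nrow=4, normalize=False, scale_each=False)
    writer.add_image("input img", img_grid, 0)

    writer.close()
```

Visualization: many images can now be inspected at once.

Application: visualizing the convolution kernels and feature maps of network layers.

Take the kernels of AlexNet's first convolutional layers as an example (with vis_max = 1, the first two are visualized).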

```python
import torch
import torch.nn as nn
import torchvision.models as models
import torchvision.utils as vutils
from torch.utils.tensorboard import SummaryWriter

# ----------------------------------- kernel visualization -----------------------------------
# flag = 0
flag = 1
if flag:
    writer = SummaryWriter(comment='test_your_comment', filename_suffix="_test_your_filename_suffix")

    # newer torchvision releases deprecate pretrained=True in favor of weights=models.AlexNet_Weights.DEFAULT
    alexnet = models.alexnet(pretrained=True)

    kernel_num = -1
    vis_max = 1  # visualize conv layers 0..vis_max

    # torch.no_grad is added to avoid a small issue under PyTorch 1.7
    with torch.no_grad():
        for sub_module in alexnet.modules():
            if isinstance(sub_module, nn.Conv2d):
                kernel_num += 1
                if kernel_num > vis_max:
                    break
                kernels = sub_module.weight
                c_out, c_in, k_h, k_w = tuple(kernels.shape)  # weight layout: out, in, kH, kW

                # one grid per output channel, each input-channel slice shown as a gray image
                for o_idx in range(c_out):
                    kernel_idx = kernels[o_idx, :, :, :].unsqueeze(1)  # make_grid needs BCHW; add the C dimension
                    kernel_grid = vutils.make_grid(kernel_idx, normalize=True, scale_each=True, nrow=c_in)
                    writer.add_image('{}_Convlayer_split_in_channel'.format(kernel_num), kernel_grid, global_step=o_idx)

                # all kernels at once, every 3 consecutive channels viewed as one RGB image
                kernel_all = kernels.view(-1, 3, k_h, k_w)  # 3, h, w
                kernel_grid = vutils.make_grid(kernel_all, normalize=True, scale_each=True, nrow=8)  # c, h, w
                writer.add_image('{}_all'.format(kernel_num), kernel_grid, global_step=322)

                print("{}_convlayer shape:{}".format(kernel_num, tuple(kernels.shape)))

    writer.close()
```

Visualization: convolution kernels

Now take the feature maps of AlexNet's first convolutional layer as an example.
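Before running it, the expected feature-map size can be checked with the standard output-size formula for a convolution. This assumes torchvision's AlexNet definition, whose first layer is Conv2d(3, 64, kernel_size=11, stride=4, padding=2); conv2d_out_size is a hypothetical helper. For a 224×224 input it gives 55, which matches the (1, 64, 55, 55) shape noted in the code comments.

```python
def conv2d_out_size(size, kernel_size, stride=1, padding=0, dilation=1):
    """Output height/width of a 2-D convolution (floor formula from the nn.Conv2d docs)."""
    return (size + 2 * padding - dilation * (kernel_size - 1) - 1) // stride + 1


# AlexNet's first layer (assumed): Conv2d(3, 64, kernel_size=11, stride=4, padding=2)
print(conv2d_out_size(224, kernel_size=11, stride=4, padding=2))  # 55
```

The same formula covers pooling layers, since they slide a window in the same way.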

Code:

```python
import torch
import torchvision.models as models
import torchvision.transforms as transforms
import torchvision.utils as vutils
from PIL import Image
from torch.utils.tensorboard import SummaryWriter

# ----------------------------------- feature map visualization -----------------------------------
# flag = 0
flag = 1
if flag:
    with torch.no_grad():
        writer = SummaryWriter(comment='test_your_comment', filename_suffix="_test_your_filename_suffix")

        # data
        path_img = "./lena.png"  # your path to image
        normMean = [0.49139968, 0.48215827, 0.44653124]
        normStd = [0.24703233, 0.24348505, 0.26158768]

        norm_transform = transforms.Normalize(normMean, normStd)
        img_transforms = transforms.Compose([
            transforms.Resize((224, 224)),
            transforms.ToTensor(),
            norm_transform
        ])

        img_pil = Image.open(path_img).convert('RGB')
        if img_transforms is not None:
            img_tensor = img_transforms(img_pil)
        img_tensor.unsqueeze_(0)  # chw --> bchw

        # model
        alexnet = models.alexnet(pretrained=True)

        # forward
        # Compute the feature map manually: the model does not keep intermediate feature maps after a forward pass
        convlayer1 = alexnet.features[0]
        fmap_1 = convlayer1(img_tensor)

        # preprocessing
        fmap_1.transpose_(0, 1)  # bchw=(1, 64, 55, 55) --> (64, 1, 55, 55)
        fmap_1_grid = vutils.make_grid(fmap_1, normalize=True, scale_each=True, nrow=8)

        writer.add_image('feature map in conv1', fmap_1_grid, global_step=322)
        writer.close()
```

Visualization result:

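(5) add_graph

Purpose: visualizes the model's computational graph, i.e. the direction of data flow, to inspect the model structure.

- model: the model; must be an nn.Module

- input_to_model: the data fed into the model

- verbose: whether to print the graph structure

Code:

```python
import torch
from torch.utils.tensorboard import SummaryWriter

from model.lenet import LeNet  # the course's LeNet (see the full code above for the sys.path setup)

# ----------------------------------- 5 add_graph -----------------------------------
flag = 0
# flag = 1
if flag:
    writer = SummaryWriter(comment='test_your_comment', filename_suffix="_test_your_filename_suffix")

    # model
    fake_img = torch.randn(1, 3, 32, 32)
    lenet = LeNet(classes=2)

    writer.add_graph(lenet, fake_img)
    writer.close()

    # from torchsummary import summary
    # summary(lenet, (3, 32, 32), device="cpu")
```

Visualization result: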

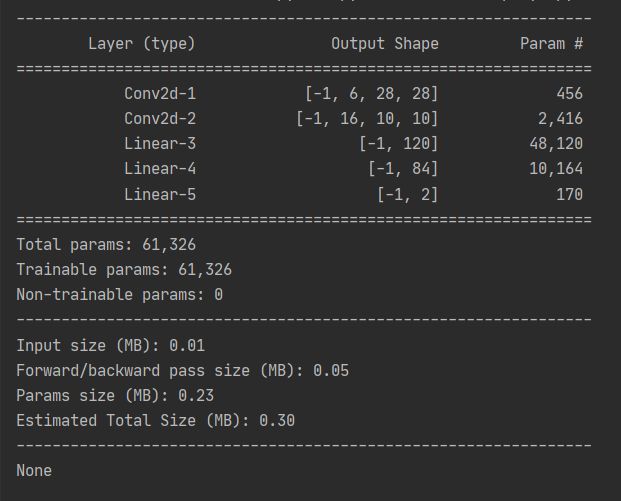

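torchsummary

Purpose: prints model information, namely each layer's output shape and parameter count, which helps with debugging.

- model: a PyTorch model

- input_size: size of the model input (without the batch dimension)

- batch_size: batch size

- device: "cuda" or "cpu"

```python
from torchsummary import summary

# summary() prints the table itself and returns None, so print() is not needed
summary(lenet, (3, 32, 32), device="cpu")  # lenet = LeNet(classes=2), as in the add_graph example
```

This prints a table with each layer's output shape and parameter count, followed by the total parameter count.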
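The Param # column can be reproduced by hand: a Conv2d layer has c_in·c_out·k·k weights plus c_out biases, and a Linear layer has n_in·n_out weights plus n_out biases. The layer sizes below are an assumption (the classic LeNet-5 layout with 3 input channels and 2 classes), since the course's model/lenet.py is not shown in this chapter; adjust them if your LeNet differs. conv2d_params and linear_params are hypothetical helpers.

```python
def conv2d_params(c_in, c_out, k, bias=True):
    """Weights of a k x k Conv2d plus one bias per output channel."""
    return c_in * c_out * k * k + (c_out if bias else 0)


def linear_params(n_in, n_out, bias=True):
    """Weights of a Linear layer plus one bias per output unit."""
    return n_in * n_out + (n_out if bias else 0)


# Assumed LeNet layout (classic LeNet-5 sizes, 3 input channels, 2 classes)
layers = {
    "conv1": conv2d_params(3, 6, 5),
    "conv2": conv2d_params(6, 16, 5),
    "fc1": linear_params(16 * 5 * 5, 120),
    "fc2": linear_params(120, 84),
    "fc3": linear_params(84, 2),
}
for name, n in layers.items():
    print(f"{name:<6}{n:>8,}")
print(f"{'total':<6}{sum(layers.values()):>8,}")  # total parameter count: 61,326
```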

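IV. Hook Functions and CAM Visualization

1. The Hook Function Concept

The hook mechanism: extra functionality is added without changing the main code, like hanging an attachment on a hook.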
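To illustrate the idea, here is a toy, plain-Python version of the pattern. ToyModule and ToyHandle are hypothetical classes, not PyTorch's implementation: the module's own computation stays untouched, hooks are registered from outside and run after each call, and the returned handle removes the hook, much like the handle.remove() calls in the examples in this section.

```python
class ToyHandle:
    """Removes a registered hook when remove() is called (toy stand-in)."""

    def __init__(self, hooks, hook):
        self._hooks, self._hook = hooks, hook

    def remove(self):
        if self._hook in self._hooks:
            self._hooks.remove(self._hook)


class ToyModule:
    """Wraps a function; forward hooks run after it without modifying it."""

    def __init__(self, fn):
        self.fn = fn
        self._forward_hooks = []

    def register_forward_hook(self, hook):
        self._forward_hooks.append(hook)
        return ToyHandle(self._forward_hooks, hook)

    def __call__(self, x):
        out = self.fn(x)                    # the unchanged main computation
        for hook in self._forward_hooks:    # extra behavior attached from outside
            hook(self, x, out)
        return out


double = ToyModule(lambda x: 2 * x)
seen = []
handle = double.register_forward_hook(lambda module, inp, out: seen.append((inp, out)))
double(3)          # the hook records (3, 6)
handle.remove()
double(5)          # no hook any more, nothing recorded
print(seen)        # [(3, 6)]
```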

2.Hook函数与特征图提取

(1)Tensor.register_hook

功能:注册一个反向传播hook函数,因为反向传播过程中,非叶子结点的梯度会消失

Hook函数仅一个输入参数,为张量的梯度

![]()

代码:

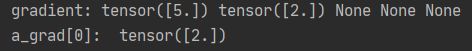

# ----------------------------------- 1 tensor hook 1 -----------------------------------

# flag = 0

flag = 1

if flag:

#构建计算图

w = torch.tensor([1.], requires_grad=True)

x = torch.tensor([2.], requires_grad=True)

a = torch.add(w, x)

b = torch.add(w, 1)

y = torch.mul(a, b)

#存储张量的梯度

a_grad = list()

def grad_hook(grad):

a_grad.append(grad)

#注册一个钩子

handle = a.register_hook(grad_hook)

y.backward()

# 查看梯度

print("gradient:", w.grad, x.grad, a.grad, b.grad, y.grad)

print("a_grad[0]: ", a_grad[0])

handle.remove()输出结果:

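After backpropagation, only the gradients of the two leaf nodes w and x are kept; the gradients of the other tensors are not retained (they print as None).

The hook, however, has saved a's gradient in the a_grad list.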

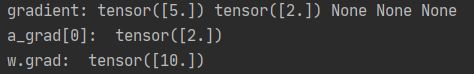
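The printed values can be checked by hand. With w = 1 and x = 2, the chain rule gives:

```latex
\begin{aligned}
a &= w + x = 3, \qquad b = w + 1 = 2, \qquad y = a\,b = 6,\\
\frac{\partial y}{\partial a} &= b = 2, \qquad \frac{\partial y}{\partial b} = a = 3,\\
\frac{\partial y}{\partial w} &= \frac{\partial y}{\partial a}\frac{\partial a}{\partial w}
  + \frac{\partial y}{\partial b}\frac{\partial b}{\partial w} = 2 \cdot 1 + 3 \cdot 1 = 5,\\
\frac{\partial y}{\partial x} &= \frac{\partial y}{\partial a}\frac{\partial a}{\partial x} = 2 \cdot 1 = 2.
\end{aligned}
```

So w.grad is 5, x.grad is 2, and the hook captures a's gradient, 2. A hook that doubles w's gradient should therefore turn 5 into 10.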

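Modifying gradients with Tensor.register_hook

The hook can also modify the gradient directly.

Code:

```python
import torch

# ----------------------------------- 2 tensor hook 2 -----------------------------------
# flag = 0
flag = 1
if flag:
    w = torch.tensor([1.], requires_grad=True)
    x = torch.tensor([2.], requires_grad=True)
    a = torch.add(w, x)
    b = torch.add(w, 1)
    y = torch.mul(a, b)

    a_grad = list()

    def grad_hook(grad):
        grad *= 2  # modify the gradient in place
        # Alternatively, return a tensor: the returned value replaces the gradient
        # (here it would be the already doubled gradient times 3)
        # return grad * 3

    handle = w.register_hook(grad_hook)

    y.backward()

    # inspect the gradient
    print("w.grad: ", w.grad)
    handle.remove()
```

Output:

w's gradient has been doubled (from 5 to 10).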

(2) Module.register_forward_hook

Purpose: registers a forward hook on a module; commonly used to capture the feature maps produced by convolutional layers.

Hook arguments:

- module: the current layer

- input: the layer's input (passed as a tuple)

- output: the layer's output

Code:

```python
import torch
import torch.nn as nn

# ----------------------------------- 3 Module.register_forward_hook and pre hook -----------------------------------
# flag = 0
flag = 1
if flag:

    class Net(nn.Module):
        def __init__(self):
            super(Net, self).__init__()
            self.conv1 = nn.Conv2d(1, 2, 3)
            self.pool1 = nn.MaxPool2d(2, 2)

        def forward(self, x):
            x = self.conv1(x)
            x = self.pool1(x)
            return x

    def forward_hook(module, data_input, data_output):
        fmap_block.append(data_output)
        input_block.append(data_input)

    def forward_pre_hook(module, data_input):
        print("forward_pre_hook input:{}".format(data_input))

    def backward_hook(module, grad_input, grad_output):
        print("backward hook input:{}".format(grad_input))
        print("backward hook output:{}".format(grad_output))

    # initialize the network: kernel 0 filled with 1, kernel 1 filled with 2, bias 0
    net = Net()
    net.conv1.weight[0].detach().fill_(1)
    net.conv1.weight[1].detach().fill_(2)
    net.conv1.bias.data.detach().zero_()

    # register hooks
    fmap_block = list()
    input_block = list()
    net.conv1.register_forward_hook(forward_hook)
    # net.conv1.register_forward_pre_hook(forward_pre_hook)
    # net.conv1.register_backward_hook(backward_hook)  # deprecated in newer PyTorch; use register_full_backward_hook

    # inference
    fake_img = torch.ones((1, 1, 4, 4))  # batch size * channel * H * W
    output = net(fake_img)

    # loss_fnc = nn.L1Loss()
    # target = torch.randn_like(output)
    # loss = loss_fnc(target, output)
    # loss.backward()

    # inspect
    print("output shape: {}\noutput value: {}\n".format(output.shape, output))
    print("feature maps shape: {}\noutput value: {}\n".format(fmap_block[0].shape, fmap_block[0]))
    print("input shape: {}\ninput value: {}".format(input_block[0][0].shape, input_block[0]))
```

Output:

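The captured feature maps follow directly from how convolution works: every 3×3 window of the all-ones input sums to 9, so channel 0 (weights 1) is 9 and channel 1 (weights 2) is 18 at every position, and 2×2 max pooling then gives the output values 9 and 18.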
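The same numbers can be reproduced without PyTorch. This NumPy sketch implements a single-channel, stride-1 "valid" cross-correlation (what nn.Conv2d computes) and a 2×2 max pooling; conv2d_valid and max_pool2d are hypothetical helpers written for this illustration.

```python
import numpy as np


def conv2d_valid(x, kernel, bias=0.0):
    """Single-channel, stride-1 'valid' cross-correlation (what nn.Conv2d computes)."""
    kh, kw = kernel.shape
    oh, ow = x.shape[0] - kh + 1, x.shape[1] - kw + 1
    out = np.empty((oh, ow))
    for i in range(oh):
        for j in range(ow):
            out[i, j] = np.sum(x[i:i + kh, j:j + kw] * kernel) + bias
    return out


def max_pool2d(x, k=2, s=2):
    """k x k max pooling with stride s, no padding."""
    oh, ow = (x.shape[0] - k) // s + 1, (x.shape[1] - k) // s + 1
    return np.array([[x[i * s:i * s + k, j * s:j * s + k].max() for j in range(ow)]
                     for i in range(oh)])


img = np.ones((4, 4))                                                # fake_img without batch/channel dims
fmaps = [conv2d_valid(img, np.full((3, 3), v)) for v in (1.0, 2.0)]  # kernels filled with 1 and 2, bias 0
pooled = [max_pool2d(f) for f in fmaps]

print(fmaps[0])                     # 2x2 map of 9s (what the forward hook captures for channel 0)
print(fmaps[1])                     # 2x2 map of 18s (channel 1)
print([p.item() for p in pooled])   # [9.0, 18.0]: the network's final output values
```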

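(3) Module.register_forward_pre_hook

Purpose: registers a hook that runs before the module's forward pass; useful for inspecting the data entering a layer.

Hook arguments:

- module: the current layer

- input: the layer's input

Code: see the example above (the commented-out register_forward_pre_hook line).

(4) Module.register_backward_hook

Purpose: registers a backward hook on a module. Newer PyTorch releases deprecate it in favor of register_full_backward_hook, which takes the same arguments.

Hook arguments:

- module: the current layer

- grad_input: gradients with respect to the layer's inputs

- grad_output: gradients with respect to the layer's outputs

Code: see the example above (the commented-out backward-hook and loss lines).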

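Application: using hooks to visualize the feature maps of all of AlexNet's convolutional layers.

Code:

```python
import numpy as np
import torch
import torch.nn as nn
import torchvision.models as models
import torchvision.transforms as transforms
import torchvision.utils as vutils
from PIL import Image
from torch.utils.tensorboard import SummaryWriter

# ----------------------------------- feature map visualization -----------------------------------
# flag = 0
flag = 1
if flag:
    with torch.no_grad():
        writer = SummaryWriter(comment='test_your_comment', filename_suffix="_test_your_filename_suffix")

        # data
        path_img = "./lena.png"  # your path to image
        normMean = [0.49139968, 0.48215827, 0.44653124]
        normStd = [0.24703233, 0.24348505, 0.26158768]

        norm_transform = transforms.Normalize(normMean, normStd)
        img_transforms = transforms.Compose([
            transforms.Resize((224, 224)),
            transforms.ToTensor(),
            norm_transform
        ])

        img_pil = Image.open(path_img).convert('RGB')
        if img_transforms is not None:
            img_tensor = img_transforms(img_pil)
        img_tensor.unsqueeze_(0)  # chw --> bchw

        # model
        alexnet = models.alexnet(pretrained=True)

        # register hooks: one list of feature maps per conv layer, keyed by its weight shape
        fmap_dict = dict()

        def hook_func(m, i, o):
            key_name = str(m.weight.shape)
            fmap_dict[key_name].append(o)

        # named_modules() yields every sub-layer of AlexNet together with its name
        for name, sub_module in alexnet.named_modules():
            if isinstance(sub_module, nn.Conv2d):
                key_name = str(sub_module.weight.shape)
                fmap_dict.setdefault(key_name, list())
                sub_module.register_forward_hook(hook_func)

        # forward
        output = alexnet(img_tensor)

        # add image
        for layer_name, fmap_list in fmap_dict.items():
            fmap = fmap_list[0]
            fmap.transpose_(0, 1)  # (1, C, H, W) --> (C, 1, H, W)

            nrow = int(np.sqrt(fmap.shape[0]))
            fmap_grid = vutils.make_grid(fmap, normalize=True, scale_each=True, nrow=nrow)
            writer.add_image('feature map in {}'.format(layer_name), fmap_grid, global_step=322)

        writer.close()
```

Visualization:

Why do these feature maps look different from the earlier conv1 visualization? The likely cause is the activation function: torchvision's AlexNet follows each convolution with nn.ReLU(inplace=True), so the tensor stored by the hook is overwritten in place by the ReLU during the rest of the forward pass, whereas the earlier example called only alexnet.features[0].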

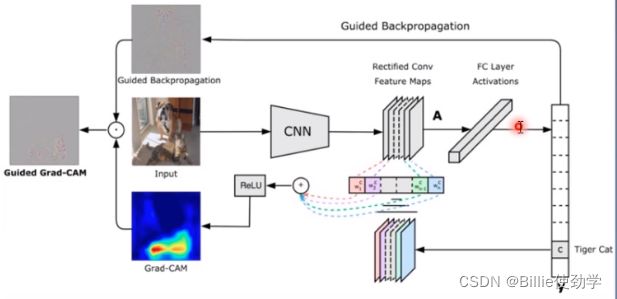
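One way an activation can change a stored feature map is aliasing: a forward hook that appends its output argument stores a reference to the tensor, not a copy, so a later in-place operation also changes what was stored (torchvision's AlexNet places nn.ReLU(inplace=True) after each convolution). NumPy arrays behave the same way, so this sketch uses them as a stand-in; storing a copy inside the hook (o.clone() in PyTorch) avoids the effect.

```python
import numpy as np

stored = []


def hook(output):
    stored.append(output)          # keeps a reference, like fmap_dict[key_name].append(o)


def hook_copy(output):
    stored.append(output.copy())   # keeps an independent copy


conv_out = np.array([[-1.0, 2.0], [3.0, -4.0]])   # stand-in for a conv output
hook(conv_out)
hook_copy(conv_out)
np.maximum(conv_out, 0, out=conv_out)              # in-place ReLU, like nn.ReLU(inplace=True)

print(stored[0])   # negatives already zeroed: the stored reference sees the in-place ReLU
print(stored[1])   # original values preserved by the copy
```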

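3. CAM (Class Activation Map)

CAM (class activation map) asks: how does a network arrive at its classification, and what does it look at when it classifies?

CAM computes a weighted sum of the feature maps of the network's last convolutional layer, giving a map of where the network focuses; the redder a region, the more attention it receives.

To use CAM, the network must end with global average pooling (GAP) followed by a fully connected layer, whose weights are used as the feature-map weights. Networks without this structure have to be modified and retrained, so CAM is of limited applicability.

Grad-CAM was proposed to address this limitation.

Grad-CAM: an improved version of CAM that uses gradients as the feature-map weights.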

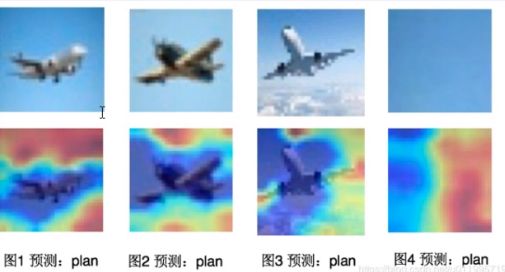
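The two weighting schemes can be sketched in NumPy. cam, grad_cam and to_heatmap are hypothetical helpers, and random arrays stand in for a real network's last-layer feature maps, FC weights, and gradients; in practice the feature maps would come from a forward hook and the gradients from a backward hook (for example Tensor.register_hook). For a GAP + FC head, the gradient of a class score with respect to every position of feature map k is that class's FC weight divided by H·W, so Grad-CAM reduces to a rescaled CAM with negative values clipped; the usage below checks exactly that.

```python
import numpy as np


def cam(fmaps, fc_weights, class_idx):
    """CAM: sum_k w[c, k] * A_k, with w taken from the FC layer that follows GAP."""
    return np.tensordot(fc_weights[class_idx], fmaps, axes=1)    # (K,) x (K, H, W) -> (H, W)


def grad_cam(fmaps, grads):
    """Grad-CAM: weight each map by its spatially averaged gradient, then apply ReLU."""
    alpha = grads.mean(axis=(1, 2))                              # (K,)
    return np.maximum(np.tensordot(alpha, fmaps, axes=1), 0.0)


def to_heatmap(m):
    """Rescale a map to [0, 1] for display (high values are drawn red)."""
    m = m - m.min()
    return m / m.max() if m.max() > 0 else m


rng = np.random.default_rng(0)
K, H, W, n_classes, c = 4, 7, 7, 3, 1
A = rng.random((K, H, W))                  # stand-in for last conv feature maps
fc_w = rng.normal(size=(n_classes, K))     # stand-in for FC weights after GAP
# For score_c = sum_k fc_w[c, k] * mean(A_k): d score_c / d A_k[i, j] = fc_w[c, k] / (H * W)
grads = np.broadcast_to((fc_w[c] / (H * W))[:, None, None], A.shape)

m_cam = cam(A, fc_w, c)
m_grad = grad_cam(A, grads)
print(np.allclose(m_grad, np.maximum(m_cam, 0) / (H * W)))   # True
print(to_heatmap(m_cam).round(2))
```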

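CAM can reveal some very interesting things.

Red marks where the network's attention is concentrated. In this example, the network has not learned the features of the airplane itself but those of the sky that usually accompanies airplanes, so even an image of sky with no airplane in it may be classified as an airplane: what it learned is the sky, not the plane.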