Building a custom ST-GCN dataset in the kinetics-skeleton format

This write-up follows the blog post https://blog.csdn.net/Lujiahao98689/article/details/121447175, with minor changes to suit my own machine [win10 python3.7 pytorch1.10.1 cuda11.3]. I am recording it here for future reference.

Step 1: Trim each video to 8 to 10 seconds

Any video editing tool can be used for the trimming.
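If FFmpeg is already installed (the later steps need it anyway), the trimming can also be scripted. The following is a minimal sketch, assuming `ffmpeg` is on the PATH; the helper name `build_trim_command` and the file names are made up for illustration.

```python
import subprocess


def build_trim_command(src, dst, start_sec, duration_sec):
    """Build an ffmpeg command that keeps duration_sec seconds starting at start_sec."""
    return [
        'ffmpeg', '-y',            # overwrite the output file if it already exists
        '-ss', str(start_sec),     # seek to the start position
        '-i', src,
        '-t', str(duration_sec),   # keep this many seconds
        dst,
    ]


if __name__ == '__main__':
    # Hypothetical file names; replace them with your own clips.
    cmd = build_trim_command('raw_clip.mp4', 'clip_trimmed.mp4', 3, 10)
    print(' '.join(cmd))
    # Uncomment to actually run ffmpeg:
    # subprocess.run(cmd, check=True)
```

The command is built as a list rather than a single string so that paths containing spaces (such as `running on treadmill`) need no extra quoting.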

Step 2: Flip the videos horizontally to augment the dataset

The code is as follows:

    ## flip_horizontal_video.py
    import os

    # Tip 1: to avoid the error
    # 'Cannot find installation of real FFmpeg (which comes with ffprobe)',
    # call skvideo.setFFmpegPath('<folder containing ffmpeg>') BEFORE importing skvideo.io.
    import skvideo
    skvideo.setFFmpegPath('D:/INSTALLER/ffmpeg-5.0.1-essentials_build/bin')
    import skvideo.io
    import cv2

    if __name__ == '__main__':
        originvideo_file = 'D:/VIDEOS/running on treadmill/'
        videos_file_names = os.listdir(originvideo_file)

        # 1. Mirror every video left to right
        for file_name in videos_file_names:
            video_path = '{}{}'.format(originvideo_file, file_name)
            # splitext keeps file names that contain extra dots intact
            name_without_suffix = os.path.splitext(file_name)[0]
            # Skip outputs of an earlier run so they are not mirrored again
            if name_without_suffix.endswith('_mirror'):
                continue
            outvideo_path = '{}{}_mirror.mp4'.format(originvideo_file, name_without_suffix)

            writer = skvideo.io.FFmpegWriter(outvideo_path)
            # outputdict={'-f': 'mp4', '-vcodec': 'libx264', '-r': '30'})
            reader = skvideo.io.FFmpegReader(video_path)
            for frame in reader.nextFrame():
                frame_mirror = cv2.flip(frame, 1)  # flipCode=1: flip around the vertical axis
                writer.writeFrame(frame_mirror)
            reader.close()
            writer.close()
            print('{} mirror success'.format(file_name))

        print('all videos in {} are mirrored'.format(originvideo_file))
        print('-------------------------------------------------------')

Step 3: Resize all videos to 340x256, then run OpenPoseDemo.exe from OpenPose through a cmd command to generate the JSON files for each video

    #!/usr/bin/env python
    # Run this from the st-gcn repository root so that the `tools` package can be imported.
    import os
    import argparse
    import json
    import shutil

    import numpy as np
    import torch
    import skvideo
    skvideo.setFFmpegPath('D:/INSTALLER/ffmpeg-5.0.1-essentials_build/bin')
    import skvideo.io

    import tools
    import tools.utils as utils

    label_name, label_no = 'running', 0


    class PreProcess():
        """
        Extract skeleton keypoint data for a custom dataset with OpenPose.
        """

        def start(self):
            work_dir = './st-gcn-master'

            # gongfu_filename = gongfu_filename_list[process_index]
            # # label information
            # labelgongfu_name = 'xxx_{}'.format(process_index)
            # label_no = process_index

            #################################################################
            # Change the paths below to the videos you want to process
            #################################################################
            # Folder containing the source videos, e.g. D:\VIDEOS\test_flip
            originvideo_file = 'D:/VIDEOS/running on treadmill/'
            # Output folder for the resized videos
            name_resizecideo_file = 'resize_running_on_treadmill'
            resizedvideo_file = 'D:/VIDEOS/{}/'.format(name_resizecideo_file)
            os.makedirs(resizedvideo_file, exist_ok=True)

            videos_file_names = os.listdir(originvideo_file)

            # 1. Resize every video in the folder to 340x256
            #    (the 30 fps option is left commented out below)
            for file_name in videos_file_names:
                video_path = '{}{}'.format(originvideo_file, file_name)
                outvideo_path = '{}{}'.format(resizedvideo_file, file_name)
                writer = skvideo.io.FFmpegWriter(outvideo_path, outputdict={'-s': '340x256'})
                # outputdict={'-f': 'mp4', '-vcodec': 'libx264', '-s': '340x256',
                #             '-r': '30'})
                reader = skvideo.io.FFmpegReader(video_path)
                for frame in reader.nextFrame():
                    writer.writeFrame(frame)
                reader.close()
                writer.close()
                print('{} resize success'.format(file_name))

            # 2. Extract the skeleton keypoints of every video with OpenPose
            resizedvideos_file_names = os.listdir(resizedvideo_file)
            for file_name in resizedvideos_file_names:
                if not file_name.endswith('mp4'):
                    continue
                outvideo_path = '{}{}'.format(resizedvideo_file, file_name)

                # openpose = '{}/examples/openpose/openpose.bin'.format(self.arg.openpose)
                # 'D:/Pycharm_project/Pose/openpose-master/build/bin'
                openpose_path = 'D:/Pycharm_project/Pose/openpose_all/openpose'
                openpose = 'bin\\OpenPoseDemo.exe'

                video_name = os.path.splitext(file_name)[0]
                output_snippets_dir = 'D:\\VIDEOS\\{}\\snippets\\{}'.format(name_resizecideo_file, video_name)
                output_sequence_dir = 'D:\\VIDEOS\\{}\\data'.format(name_resizecideo_file)
                os.makedirs(output_snippets_dir, exist_ok=True)
                os.makedirs(output_sequence_dir, exist_ok=True)
                output_sequence_path = '{}/{}.json'.format(output_sequence_dir, video_name)

                # # I could not make sense of this part, so it stays disabled
                # label_name_path = '{}/resource/kinetics_skeleton/label_name_gongfu.txt'.format(work_dir)
                # with open(label_name_path) as f:
                #     label_name = f.readlines()
                #     label_name = [line.rstrip() for line in label_name]

                # pose estimation
                openpose_args = dict(
                    video=outvideo_path,  # 'D:/VIDEOS/resize_test_flip/data'
                    write_json=output_snippets_dir,
                    display=0,
                    render_pose=0,
                    model_pose='COCO')
                # Switch to drive D:, enter the OpenPose folder, then launch the demo
                command_line = 'd:&cd ' + openpose_path + '& '
                command_line += openpose + ' ' + ' '.join(['--{} {}'.format(k, v) for k, v in openpose_args.items()])
                # D:/Pycharm_project/Pose/openpose_all/openpose/bin/OpenPoseDemo.exe --video &outvideo_path \
                #     --write_json &output_snippets_dir

                # Start from an empty snippets folder for this video
                shutil.rmtree(output_snippets_dir, ignore_errors=True)
                os.makedirs(output_snippets_dir)
                os.system(command_line)

                # pack openpose outputs
                video = utils.video.get_video_frames(outvideo_path)
                height, width, _ = video[0].shape

                # label and label_index can be changed here
                video_info = utils.openpose.json_pack(
                    output_snippets_dir, video_name, width, height, label_name, label_no)

                with open(output_sequence_path, 'w') as outfile:
                    json.dump(video_info, outfile)

                if len(video_info['data']) == 0:
                    print('{} Can not find pose estimation results.'.format(file_name))
                    # Move on to the next video instead of aborting the whole run
                    continue
                else:
                    print('{} pose estimation complete.'.format(file_name))


    if __name__ == '__main__':
        p = PreProcess()
        p.start()
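The script above assembles the OpenPose call as one shell string for `os.system`. The same call can be sketched as an argument list for `subprocess.run`, which avoids quoting problems when a path contains spaces. The flags are the ones used above; the helper name `build_openpose_command` and the example paths are assumptions for illustration.

```python
import os
import subprocess


def build_openpose_command(openpose_root, video_path, json_dir):
    """Return the OpenPoseDemo argument list for one video (COCO model, no display)."""
    # An absolute path to the executable avoids depending on the current directory.
    exe = os.path.join(openpose_root, 'bin', 'OpenPoseDemo.exe')
    options = {
        'video': video_path,
        'write_json': json_dir,
        'display': 0,
        'render_pose': 0,
        'model_pose': 'COCO',
    }
    args = [exe]
    for key, value in options.items():
        args += ['--{}'.format(key), str(value)]
    return args


if __name__ == '__main__':
    root = 'D:/Pycharm_project/Pose/openpose_all/openpose'
    cmd = build_openpose_command(
        root,
        'D:/VIDEOS/resize_running_on_treadmill/demo.mp4',          # hypothetical video
        'D:/VIDEOS/resize_running_on_treadmill/snippets/demo')     # hypothetical output folder
    print(cmd)
    # Run with the OpenPose folder as working directory, as the `cd` in the script does:
    # subprocess.run(cmd, cwd=root, check=True)
```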

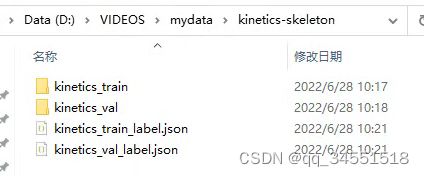
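Each packed file should carry the three top-level keys that the next step reads: `data`, `label` and `label_index`. A quick sanity check can be sketched as follows. The per-frame keys `frame_index` and `skeleton`, the per-person keys `pose` and `score`, and the count of 18 joints for the COCO model reflect my understanding of what st-gcn's `json_pack` writes, so treat them as assumptions and compare against one of your own files. The function name `check_packed_video` is hypothetical.

```python
def check_packed_video(video_info, num_joints=18):
    """Return a list of problems found in one packed skeleton dict (empty list means OK)."""
    problems = []
    for key in ('data', 'label', 'label_index'):
        if key not in video_info:
            problems.append('missing key: {}'.format(key))

    for frame in video_info.get('data', []):
        frame_index = frame.get('frame_index')
        for person in frame.get('skeleton', []):
            # Assumed layout: pose = [x0, y0, x1, y1, ...], score = [s0, s1, ...]
            if len(person.get('pose', [])) != 2 * num_joints:
                problems.append('frame {}: unexpected pose length'.format(frame_index))
            if len(person.get('score', [])) != num_joints:
                problems.append('frame {}: unexpected score length'.format(frame_index))
    return problems


if __name__ == '__main__':
    # A hand-made example with one frame and one person
    sample = {
        'data': [{'frame_index': 1,
                  'skeleton': [{'pose': [0.5] * 36, 'score': [0.9] * 18}]}],
        'label': 'running',
        'label_index': 0,
    }
    print(check_packed_video(sample))
```

To check a real file, load it with `json.load` and pass the resulting dict to the function.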

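Step 4: Manually divide the generated per-video JSON files into two folders at a 7:3 ratio by count, one for the training set (train) and one for the validation set (val), then generate kinetics_train_label.json and kinetics_val_label.json. The code is as follows: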

    ## json2kinetics.py
    import json
    import os


    def build_label_file(json_dir, output_path):
        """Collect the label info of every skeleton JSON in json_dir and write it to output_path."""
        label_json = dict()
        for file_name in os.listdir(json_dir):
            name = os.path.splitext(file_name)[0]
            # e.g. D:/VIDEOS/mydata/kinetics-skeleton/kinetics_train/{file_name}
            json_file_path = '{}/{}'.format(json_dir, file_name)
            with open(json_file_path) as f:
                json_file = json.load(f)

            file_label = dict()
            # A video without any detected frame has no skeleton
            file_label['has_skeleton'] = len(json_file['data']) > 0
            file_label['label'] = json_file['label']
            file_label['label_index'] = json_file['label_index']
            label_json[name] = file_label
            print('{} success'.format(file_name))

        with open(output_path, 'w') as outfile:
            json.dump(label_json, outfile)


    if __name__ == '__main__':
        train_json_path = 'D:/VIDEOS/mydata/kinetics-skeleton/kinetics_train'  # './mydata/kinetics-skeleton/kinetics_train'
        val_json_path = 'D:/VIDEOS/mydata/kinetics-skeleton/kinetics_val'  # './mydata/kinetics-skeleton/kinetics_val'
        output_train_json_path = 'D:/VIDEOS/mydata/kinetics-skeleton/kinetics_train_label.json'
        output_val_json_path = 'D:/VIDEOS/mydata/kinetics-skeleton/kinetics_val_label.json'

        build_label_file(train_json_path, output_train_json_path)
        build_label_file(val_json_path, output_val_json_path)
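The manual 7:3 split in step 4 can also be scripted and run before json2kinetics.py. The following is a minimal sketch that shuffles the file names with a fixed seed and splits them by count; `split_train_val` and `copy_split` are hypothetical helpers, and the source folder in the usage comment is an assumption based on the paths used in step 3.

```python
import os
import random
import shutil


def split_train_val(names, train_parts=7, val_parts=3, seed=0):
    """Shuffle names reproducibly and split them train_parts:val_parts by count."""
    names = sorted(names)               # fixed starting order, independent of os.listdir
    random.Random(seed).shuffle(names)
    # Integer arithmetic avoids floating-point rounding surprises
    n_train = len(names) * train_parts // (train_parts + val_parts)
    return names[:n_train], names[n_train:]


def copy_split(src_dir, train_dir, val_dir):
    """Copy every .json file in src_dir into train_dir or val_dir."""
    json_names = [n for n in os.listdir(src_dir) if n.endswith('.json')]
    train_names, val_names = split_train_val(json_names)
    for target_dir, group in ((train_dir, train_names), (val_dir, val_names)):
        os.makedirs(target_dir, exist_ok=True)
        for name in group:
            shutil.copy(os.path.join(src_dir, name), os.path.join(target_dir, name))
    return len(train_names), len(val_names)


if __name__ == '__main__':
    demo_names = ['video_{}.json'.format(i) for i in range(10)]
    train, val = split_train_val(demo_names)
    print(len(train), len(val))
    # Assumed folders; adjust to your own layout before uncommenting:
    # copy_split('D:/VIDEOS/resize_running_on_treadmill/data',
    #            'D:/VIDEOS/mydata/kinetics-skeleton/kinetics_train',
    #            'D:/VIDEOS/mydata/kinetics-skeleton/kinetics_val')
```

Note that this treats a clip and its `_mirror` copy as independent files, so the two may end up in different splits.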