PyTorch-PyramidBoxDemo


Hardware: NVIDIA GTX 1080

Software: Windows 10, Python 3.6.5, PyTorch (GPU) 0.4.1

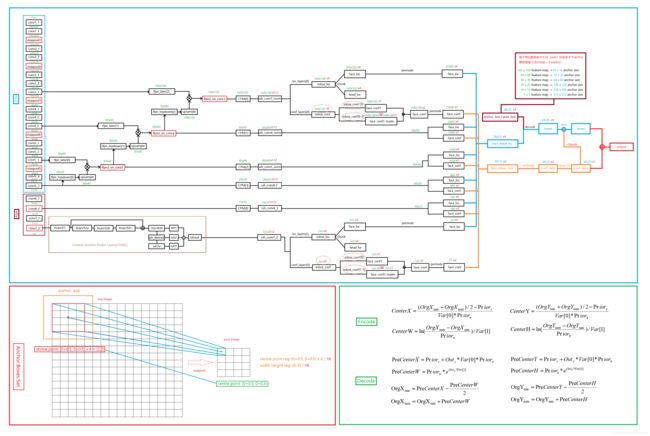

I. Background (explained in one figure)

II. Code

1. demo.py

```python
# -*- coding: utf-8 -*-
from __future__ import division
from __future__ import absolute_import
from __future__ import print_function

import time

import cv2
import numpy as np
import torch
import torch.backends.cudnn as cudnn
from torch.autograd import Variable
from PIL import Image

from config import cfg
from pyramidbox import build_net

use_cuda = torch.cuda.is_available()

if use_cuda:
    torch.set_default_tensor_type('torch.cuda.FloatTensor')
else:
    torch.set_default_tensor_type('torch.FloatTensor')


def to_chw_bgr(image):
    """
    Transpose image from HWC to CHW and from RGB to BGR.
    Args:
        image (np.array): an image with HWC and RGB layout.
    """
    # HWC to CHW
    if len(image.shape) == 3:
        image = np.swapaxes(image, 1, 2)
        image = np.swapaxes(image, 1, 0)
    # RGB to BGR
    image = image[[2, 1, 0], :, :]
    return image


def detect(net, img_path, thresh):
    img = Image.open(img_path)
    if img.mode != 'RGB':
        # grayscale, palette or RGBA input -> 3-channel RGB
        img = img.convert('RGB')
    img = np.array(img)
    height, width, _ = img.shape

    # resize to about 1200 * 1100 pixels while keeping the aspect ratio
    max_im_shrink = np.sqrt(
        1200 * 1100 / (img.shape[0] * img.shape[1]))
    image = cv2.resize(img, None, None, fx=max_im_shrink,
                       fy=max_im_shrink, interpolation=cv2.INTER_LINEAR)

    x = to_chw_bgr(image)
    x = x.astype('float32')
    x -= cfg.img_mean          # subtract the BGR mean
    x = x[[2, 1, 0], :, :]     # back to RGB channel order
    #x = x * cfg.scale

    x = Variable(torch.from_numpy(x).unsqueeze(0))
    if use_cuda:
        x = x.cuda()
    t1 = time.time()
    with torch.no_grad():      # inference only, no autograd graph
        y = net(x)
    detections = y.data
    # boxes are normalized to [0, 1]; scale them back to the original image
    scale = torch.Tensor([img.shape[1], img.shape[0],
                          img.shape[1], img.shape[0]])

    for i in range(detections.size(1)):
        j = 0
        # rows are sorted by score; stop at the threshold or at the last row
        while j < detections.size(2) and detections[0, i, j, 0] >= thresh:
            score = detections[0, i, j, 0]
            pt = (detections[0, i, j, 1:] * scale).cpu().numpy().astype(int)
            left_up, right_bottom = (pt[0], pt[1]), (pt[2], pt[3])
            j += 1
            # colors are given in RGB order because img is an RGB array
            cv2.rectangle(img, left_up, right_bottom, (255, 0, 0), 2)
            conf = "{:.2f}".format(score)
            text_size, baseline = cv2.getTextSize(
                conf, cv2.FONT_HERSHEY_SIMPLEX, 0.3, 1)
            p1 = (left_up[0], left_up[1] - text_size[1])
            cv2.rectangle(img, (p1[0] - 2 // 2, p1[1] - 2 - baseline),
                          (p1[0] + text_size[0], p1[1] + text_size[1]), [0, 0, 255], -1)
            cv2.putText(img, conf, (p1[0], p1[1] + baseline),
                        cv2.FONT_HERSHEY_SIMPLEX, 0.3, (255, 255, 255), 1, 8)
    t2 = time.time()
    print('detect:{} timer:{}'.format(img_path, t2 - t1))
    # img is RGB (from PIL) but OpenCV writes BGR, so convert before saving
    cv2.imwrite("demo.jpg", cv2.cvtColor(img, cv2.COLOR_RGB2BGR))


if __name__ == '__main__':
    model = 'weights/pyramidbox_120000_99.02.pth'
    net = build_net('test', cfg.NUM_CLASSES)
    # map_location lets a GPU-trained checkpoint load on a CPU-only machine
    net.load_state_dict(torch.load(model, map_location=lambda storage, loc: storage))
    net.eval()
    if use_cuda:
        net.cuda()
        cudnn.benchmark = True
    path = "test.jpg"
    thresh = 0.4
    detect(net, path, thresh)
```
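Two pieces of arithmetic in `detect()` are worth making explicit. The resize factor `sqrt(1200 * 1100 / (h * w))` is applied to both axes, so the network always sees roughly 1,320,000 pixels with the aspect ratio unchanged; despite the name `max_im_shrink`, the factor is greater than 1 for smaller images, which are therefore upscaled. The detector returns boxes normalized to [0, 1], so they are multiplied by the *original* width and height. A minimal stdlib-only sketch of both steps (the helper names `shrink_factor` and `to_pixels` are hypothetical):

```python
import math


def shrink_factor(height, width, target_area=1200 * 1100):
    """Factor used for both fx and fy in cv2.resize, so that the resized
    image has about target_area pixels and keeps its aspect ratio."""
    return math.sqrt(target_area / (height * width))


def to_pixels(box, width, height):
    """Map a normalized (x1, y1, x2, y2) box to integer pixel coordinates of
    the original image, like `detections[0, i, j, 1:] * scale` + astype(int)."""
    x1, y1, x2, y2 = box
    return (int(x1 * width), int(y1 * height), int(x2 * width), int(y2 * height))


if __name__ == "__main__":
    h, w = 480, 640
    s = shrink_factor(h, w)
    print("factor", s, "resized to about", round(h * s), "x", round(w * s))
    print(to_pixels((0.25, 0.5, 0.5, 0.75), w, h))
```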

2. pyramidbox.py

```python
# -*- coding: utf-8 -*-
from __future__ import absolute_import
from __future__ import division
from __future__ import print_function

import os
from itertools import product as product

import torch
import torch.nn as nn
import torch.nn.init as init
import torch.nn.functional as F
from torch.autograd import Function
from torch.autograd import Variable

from config import cfg


class PriorBox(object):
    """Compute priorbox coordinates in center-offset form for each source
    feature map.
    """

    def __init__(self, input_size, feature_maps, cfg):
        super(PriorBox, self).__init__()
        self.imh = input_size[0]
        self.imw = input_size[1]
        # one square anchor per feature-map location
        self.variance = cfg.VARIANCE or [0.1]
        #self.feature_maps = cfg.FEATURE_MAPS
        self.min_sizes = cfg.ANCHOR_SIZES  # [16, 32, 64, 128, 256, 512]
        self.steps = cfg.STEPS             # [4, 8, 16, 32, 64, 128]
        self.clip = cfg.CLIP
        for v in self.variance:
            if v <= 0:
                raise ValueError('Variances must be greater than 0')
        self.feature_maps = feature_maps

    def forward(self):
        mean = []
        for k in range(len(self.feature_maps)):
            feath = self.feature_maps[k][0]
            featw = self.feature_maps[k][1]
            for i, j in product(range(feath), range(featw)):
                f_kw = self.imw / self.steps[k]
                f_kh = self.imh / self.steps[k]
                # anchor center, normalized: (j + 0.5) * step / imw
                cx = (j + 0.5) / f_kw
                cy = (i + 0.5) / f_kh
                # anchor side, normalized by the input size
                s_kw = self.min_sizes[k] / self.imw
                s_kh = self.min_sizes[k] / self.imh
                mean += [cx, cy, s_kw, s_kh]
        output = torch.Tensor(mean).view(-1, 4)
        if self.clip:
            output.clamp_(max=1, min=0)
        return output
```
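`PriorBox.forward()` puts exactly one anchor at the center of every feature-map cell: centers are `STEPS[k]` pixels apart and the anchor side is `ANCHOR_SIZES[k]` pixels, both normalized by the input size. The feature-map sizes come from the actual network outputs, so any input size works; for a 640 × 640 input they are 160, 80, 40, 20, 10 and 5 (the `_C.FEATURE_MAPS` values), giving 160² + 80² + 40² + 20² + 10² + 5² = 34125 anchors. A stdlib-only sketch of the same computation (the function name `make_priors` is hypothetical; clipping is omitted):

```python
from itertools import product

STEPS = [4, 8, 16, 32, 64, 128]             # copied from config.py
ANCHOR_SIZES = [16, 32, 64, 128, 256, 512]  # copied from config.py


def make_priors(im_h, im_w, feature_maps, steps=STEPS, sizes=ANCHOR_SIZES):
    """Return a list of (cx, cy, w, h) anchors normalized to [0, 1]."""
    priors = []
    for k, (fh, fw) in enumerate(feature_maps):
        for i, j in product(range(fh), range(fw)):
            cx = (j + 0.5) * steps[k] / im_w   # cell center, x
            cy = (i + 0.5) * steps[k] / im_h   # cell center, y
            priors.append((cx, cy, sizes[k] / im_w, sizes[k] / im_h))
    return priors


if __name__ == "__main__":
    size = 640
    maps = [(size // s, size // s) for s in STEPS]  # (160, 160) ... (5, 5)
    priors = make_priors(size, size, maps)
    print(len(priors), "anchors; first:", priors[0])
```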

```python
def decode(loc, priors, variances):
    """Decode locations from predictions using priors to undo
    the encoding we did for offset regression at train time.
    Args:
        loc (tensor): location predictions for loc layers,
            Shape: [num_priors, 4]
        priors (tensor): Prior boxes in center-offset form.
            Shape: [num_priors, 4].
        variances: (list[float]) Variances of priorboxes
    Return:
        decoded bounding box predictions
    """
    boxes = torch.cat((
        priors[:, :2] + loc[:, :2] * variances[0] * priors[:, 2:],
        priors[:, 2:] * torch.exp(loc[:, 2:] * variances[1])), 1)
    boxes[:, :2] -= boxes[:, 2:] / 2
    boxes[:, 2:] += boxes[:, :2]
    return boxes
```
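`decode()` inverts the SSD-style offset encoding: the predicted center offset is scaled by `variances[0]` and the prior size, the predicted log-size ratio by `variances[1]`, and the last two lines turn (cx, cy, w, h) into corner form (x1, y1, x2, y2). The training-time encoder is not part of this post; `encode_one` below is a hypothetical sketch of the standard SSD inverse, and `decode_one` repeats the arithmetic of `decode()` for a single box in plain Python so the round trip can be checked:

```python
import math

VARIANCE = (0.1, 0.2)  # cfg.VARIANCE


def encode_one(box, prior, var=VARIANCE):
    """Corner-form box -> regression target (assumed SSD-style encoding)."""
    x1, y1, x2, y2 = box
    pcx, pcy, pw, ph = prior
    cx, cy, w, h = (x1 + x2) / 2, (y1 + y2) / 2, x2 - x1, y2 - y1
    return ((cx - pcx) / (var[0] * pw), (cy - pcy) / (var[0] * ph),
            math.log(w / pw) / var[1], math.log(h / ph) / var[1])


def decode_one(loc, prior, var=VARIANCE):
    """Same arithmetic as decode() in pyramidbox.py, for one box."""
    pcx, pcy, pw, ph = prior
    cx = pcx + loc[0] * var[0] * pw
    cy = pcy + loc[1] * var[0] * ph
    w = pw * math.exp(loc[2] * var[1])
    h = ph * math.exp(loc[3] * var[1])
    return (cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2)


if __name__ == "__main__":
    prior = (0.5, 0.5, 0.1, 0.1)
    gt = (0.42, 0.45, 0.60, 0.61)
    loc = encode_one(gt, prior)
    print(loc)
    print(decode_one(loc, prior))  # reproduces gt up to float rounding
```

A zero prediction decodes to the prior itself, which is why the network only has to learn small corrections.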

```python
def nms(boxes, scores, overlap=0.5, top_k=200):
    """Apply non-maximum suppression at test time to avoid detecting too many
    overlapping bounding boxes for a given object.
    Args:
        boxes: (tensor) The location preds for the img, Shape: [num_priors, 4].
        scores: (tensor) The class predscores for the img, Shape: [num_priors].
        overlap: (float) The overlap thresh for suppressing unnecessary boxes.
        top_k: (int) The Maximum number of box preds to consider.
    Return:
        The indices of the kept boxes with respect to num_priors,
        and the number of kept boxes.
    """
    keep = scores.new(scores.size(0)).zero_().long()
    if boxes.numel() == 0:
        return keep, 0  # callers unpack (keep, count)
    x1 = boxes[:, 0]
    y1 = boxes[:, 1]
    x2 = boxes[:, 2]
    y2 = boxes[:, 3]
    area = torch.mul(x2 - x1, y2 - y1)
    v, idx = scores.sort(0)  # sort in ascending order
    # I = I[v >= 0.01]
    idx = idx[-top_k:]  # indices of the top-k largest vals
    xx1 = boxes.new()
    yy1 = boxes.new()
    xx2 = boxes.new()
    yy2 = boxes.new()
    w = boxes.new()
    h = boxes.new()

    # keep = torch.Tensor()
    count = 0
    while idx.numel() > 0:
        i = idx[-1]  # index of current largest val
        # keep.append(i)
        keep[count] = i
        count += 1
        if idx.size(0) == 1:
            break
        idx = idx[:-1]  # remove kept element from view
        # load bboxes of next highest vals
        torch.index_select(x1, 0, idx, out=xx1)
        torch.index_select(y1, 0, idx, out=yy1)
        torch.index_select(x2, 0, idx, out=xx2)
        torch.index_select(y2, 0, idx, out=yy2)
        # store element-wise max with next highest score
        xx1 = torch.clamp(xx1, min=x1[i])
        yy1 = torch.clamp(yy1, min=y1[i])
        xx2 = torch.clamp(xx2, max=x2[i])
        yy2 = torch.clamp(yy2, max=y2[i])
        w.resize_as_(xx2)
        h.resize_as_(yy2)
        w = xx2 - xx1
        h = yy2 - yy1
        # check sizes of xx1 and xx2.. after each iteration
        w = torch.clamp(w, min=0.0)
        h = torch.clamp(h, min=0.0)
        inter = w * h
        # IoU = i / (area(a) + area(b) - i)
        rem_areas = torch.index_select(area, 0, idx)  # load remaining areas
        union = (rem_areas - inter) + area[i]
        IoU = inter / union  # store result in iou
        # keep only elements with an IoU <= overlap
        idx = idx[IoU.le(overlap)]
    return keep, count
```
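`nms()` is greedy non-maximum suppression: take the highest-scoring remaining box, drop every remaining box whose IoU with it exceeds `overlap` (0.3 via `cfg.NMS_THRESH`), and repeat. Widths are computed as `x2 - x1` without a `+1`, i.e. coordinates are treated as continuous. A plain-Python sketch of the same procedure (`iou` and `nms_py` are hypothetical names), handy for checking the tensor version on small inputs:

```python
def iou(a, b):
    """IoU of two corner-form boxes (x1, y1, x2, y2), continuous coordinates."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def nms_py(boxes, scores, overlap=0.5, top_k=200):
    """Return indices of kept boxes, highest score first."""
    # ascending sort, keep only the top_k best candidates (like idx[-top_k:])
    order = sorted(range(len(scores)), key=lambda i: scores[i])[-top_k:]
    keep = []
    while order:
        i = order.pop()                  # current highest score
        keep.append(i)
        # keep only boxes with IoU <= overlap, like idx[IoU.le(overlap)]
        order = [j for j in order if iou(boxes[i], boxes[j]) <= overlap]
    return keep


if __name__ == "__main__":
    boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
    scores = [0.9, 0.8, 0.7]
    print(nms_py(boxes, scores, overlap=0.3))
```

Boxes 0 and 1 overlap with IoU 81 / 119 ≈ 0.68, so with `overlap=0.3` box 1 is suppressed while the disjoint box 2 survives.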

```python
class Detect(Function):
    """At test time, Detect is the final layer of SSD. Decode location preds,
    apply non-maximum suppression to location predictions based on conf
    scores and threshold to a top_k number of output predictions for both
    confidence score and locations.
    """

    def __init__(self, cfg):
        self.num_classes = cfg.NUM_CLASSES
        self.top_k = cfg.TOP_K
        self.nms_thresh = cfg.NMS_THRESH
        self.conf_thresh = cfg.CONF_THRESH
        self.variance = cfg.VARIANCE  # [0.1, 0.2]

    def forward(self, loc_data, conf_data, prior_data):
        """
        Args:
            loc_data: (tensor) Loc preds from loc layers
                Shape: [batch, num_priors, 4]
            conf_data: (tensor) Conf preds from conf layers (after softmax)
                Shape: [batch, num_priors, num_classes]
            prior_data: (tensor) Prior boxes from the priorbox layer
                Shape: [num_priors, 4]
        Return:
            Shape: [batch, num_classes, top_k, 5], rows [score, x1, y1, x2, y2]
        """
        num = loc_data.size(0)
        num_priors = prior_data.size(0)
        conf_preds = conf_data.view(
            num, num_priors, self.num_classes).transpose(2, 1)
        batch_priors = prior_data.view(-1, num_priors,
                                       4).expand(num, num_priors, 4)
        batch_priors = batch_priors.contiguous().view(-1, 4)

        ################ decode box ###################
        decoded_boxes = decode(loc_data.view(-1, 4),
                               batch_priors, self.variance)
        decoded_boxes = decoded_boxes.view(num, num_priors, 4)
        output = torch.zeros(num, self.num_classes, self.top_k, 5)

        ############### NMS per class, keep at most top_k (5000) boxes ###########
        for i in range(num):
            boxes = decoded_boxes[i].clone()
            conf_scores = conf_preds[i].clone()
            for cl in range(1, self.num_classes):
                c_mask = conf_scores[cl].gt(self.conf_thresh)  # 0.05
                scores = conf_scores[cl][c_mask]
                if scores.numel() == 0:  # no box above conf_thresh
                    continue
                l_mask = c_mask.unsqueeze(1).expand_as(boxes)
                boxes_ = boxes[l_mask].view(-1, 4)
                ids, count = nms(
                    boxes_, scores, self.nms_thresh, self.top_k)
                output[i, cl, :count] = torch.cat((scores[ids[:count]].unsqueeze(1),
                                                   boxes_[ids[:count]]), 1)
        return output
```
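`Detect.forward()` returns a tensor of shape `[batch, num_classes, top_k, 5]` in which each row is `[score, x1, y1, x2, y2]` with normalized coordinates. Rows are sorted by descending score because `nms()` records kept boxes in that order, and unused rows stay zero. That is why `detect()` in demo.py can walk `j` upward and stop at the first score below `thresh`. A plain-Python sketch of that read-out loop for one image and one class (`read_detections` is a hypothetical helper); it also stops at the end of the row list, which matters when every one of the `top_k` rows passes the threshold:

```python
def read_detections(rows, thresh, width, height):
    """rows: list of [score, x1, y1, x2, y2] for one image and one class,
    sorted by descending score and zero-padded, as produced by Detect."""
    results = []
    for score, x1, y1, x2, y2 in rows:
        if score < thresh:  # rows are sorted, so no later row can pass
            break
        results.append((score, (int(x1 * width), int(y1 * height),
                                int(x2 * width), int(y2 * height))))
    return results


if __name__ == "__main__":
    rows = [[0.9, 0.25, 0.25, 0.5, 0.5],
            [0.5, 0.5, 0.5, 0.75, 0.75],
            [0.3, 0.0, 0.0, 0.25, 0.25],
            [0.0, 0.0, 0.0, 0.0, 0.0]]   # zero padding
    print(read_detections(rows, 0.4, 100, 100))
```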

```python
class L2Norm(nn.Module):
    def __init__(self, n_channels, scale):
        super(L2Norm, self).__init__()
        self.n_channels = n_channels
        self.gamma = scale or None
        self.eps = 1e-10
        self.weight = nn.Parameter(torch.Tensor(self.n_channels))
        self.reset_parameters()

    def reset_parameters(self):
        # init.constant is deprecated since PyTorch 0.4; use the in-place variant
        init.constant_(self.weight, self.gamma)

    def forward(self, x):
        # L2 norm over the channel dimension at every spatial position
        norm = x.pow(2).sum(dim=1, keepdim=True).sqrt() + self.eps
        #x /= norm
        x = torch.div(x, norm)
        # learnable per-channel scale
        out = self.weight.unsqueeze(0).unsqueeze(2).unsqueeze(3).expand_as(x) * x
        return out
```
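`L2Norm` rescales the feature vector at every spatial position to unit L2 norm across channels, then multiplies channel c by a learnable weight initialized to `scale` (10, 8 and 5 for the conv3_3, conv4_3 and conv5_3 paths in `PyramidBox.__init__`). The usual motivation, carried over from SSD/ParseNet, is that these shallow maps have a different activation scale from the deeper layers they are combined with. A plain-Python sketch for one spatial position (`l2norm_position` is a hypothetical name):

```python
import math


def l2norm_position(channels, weights, eps=1e-10):
    """channels: activations of all C channels at one (h, w) location.
    weights: per-channel learnable scale (initialized to `scale`)."""
    norm = math.sqrt(sum(v * v for v in channels)) + eps
    return [w * v / norm for v, w in zip(channels, weights)]


if __name__ == "__main__":
    out = l2norm_position([3.0, 4.0], weights=[10.0, 10.0])
    print(out)  # right after initialization the vector has norm ~10 (the scale)
```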

```python
class conv_bn(nn.Module):
    """Conv2d followed by BatchNorm2d (no activation)."""

    def __init__(self,
                 in_plane,
                 out_plane,
                 kernel_size,
                 stride,
                 padding):
        super(conv_bn, self).__init__()
        self.conv1 = nn.Conv2d(in_plane, out_plane,
                               kernel_size=kernel_size, stride=stride, padding=padding)
        self.bn1 = nn.BatchNorm2d(out_plane)

    def forward(self, x):
        x = self.conv1(x)
        return self.bn1(x)


class CPM(nn.Module):
    """Context-sensitive Prediction Module: a residual bottleneck followed by
    SSH-style context branches."""

    def __init__(self, in_plane):
        super(CPM, self).__init__()
        self.branch1 = conv_bn(in_plane, 1024, 1, 1, 0)
        self.branch2a = conv_bn(in_plane, 256, 1, 1, 0)
        self.branch2b = conv_bn(256, 256, 3, 1, 1)
        self.branch2c = conv_bn(256, 1024, 1, 1, 0)

        self.ssh_1 = nn.Conv2d(1024, 256, kernel_size=3, stride=1, padding=1)
        self.ssh_dimred = nn.Conv2d(
            1024, 128, kernel_size=3, stride=1, padding=1)
        self.ssh_2 = nn.Conv2d(128, 128, kernel_size=3, stride=1, padding=1)
        self.ssh_3a = nn.Conv2d(128, 128, kernel_size=3, stride=1, padding=1)
        self.ssh_3b = nn.Conv2d(128, 128, kernel_size=3, stride=1, padding=1)

    def forward(self, x):
        # residual bottleneck producing 1024 channels
        out_residual = self.branch1(x)
        x = F.relu(self.branch2a(x), inplace=True)
        x = F.relu(self.branch2b(x), inplace=True)
        x = self.branch2c(x)
        rescomb = F.relu(x + out_residual, inplace=True)
        # SSH-style context branches
        ssh1 = self.ssh_1(rescomb)
        ssh_dimred = F.relu(self.ssh_dimred(rescomb), inplace=True)
        ssh_2 = self.ssh_2(ssh_dimred)
        ssh_3a = F.relu(self.ssh_3a(ssh_dimred), inplace=True)
        ssh_3b = self.ssh_3b(ssh_3a)

        ssh_out = torch.cat([ssh1, ssh_2, ssh_3b], dim=1)
        ssh_out = F.relu(ssh_out, inplace=True)
        return ssh_out
```
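Whatever its input width, every `CPM` ends by concatenating three branches: `ssh_1` gives 256 channels, `ssh_2` and `ssh_3b` give 128 each, so each CPM outputs 256 + 128 + 128 = 512 channels. That is why every entry of `multibox_cfg` is 512. Measured from `rescomb`, the branches stack one, two and three 3 × 3 convolutions, so they see 3 × 3, 5 × 5 and 7 × 7 neighbourhoods. The bookkeeping, sketched in plain Python with hypothetical `(in_ch, out_ch, kernel)` descriptors instead of PyTorch modules:

```python
# Each CPM branch as a chain of stride-1 convs: (in_ch, out_ch, kernel).
# ssh_dimred is shared by ssh_2 and ssh_3b; it is listed in both chains
# only so that each chain's receptive field can be computed on its own.
BRANCHES = {
    "ssh_1":  [(1024, 256, 3)],
    "ssh_2":  [(1024, 128, 3), (128, 128, 3)],                 # dimred -> ssh_2
    "ssh_3b": [(1024, 128, 3), (128, 128, 3), (128, 128, 3)],  # dimred -> 3a -> 3b
}


def receptive_field(specs):
    """Receptive field of stacked stride-1 convs: 1 + sum(k - 1)."""
    return 1 + sum(k - 1 for _, _, k in specs)


def cpm_out_channels(branches=BRANCHES):
    """Channels after torch.cat([ssh1, ssh_2, ssh_3b], dim=1)."""
    return sum(specs[-1][1] for specs in branches.values())


if __name__ == "__main__":
    for name, specs in BRANCHES.items():
        print(name, specs[-1][1], "channels, receptive field", receptive_field(specs))
    print("CPM output channels:", cpm_out_channels())
```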

```python
class PyramidBox(nn.Module):
    """PyramidBox: VGG16 backbone + extra layers, LFPN, CPM and multibox heads."""

    def __init__(self,
                 phase,
                 base,
                 extras,
                 lfpn_cpm,
                 head,
                 num_classes):
        super(PyramidBox, self).__init__()
        #self.use_transposed_conv2d = use_transposed_conv2d
        self.vgg = nn.ModuleList(base)
        self.extras = nn.ModuleList(extras)

        self.L2Norm3_3 = L2Norm(256, 10)
        self.L2Norm4_3 = L2Norm(512, 8)
        self.L2Norm5_3 = L2Norm(512, 5)

        self.lfpn_topdown = nn.ModuleList(lfpn_cpm[0])
        self.lfpn_later = nn.ModuleList(lfpn_cpm[1])
        self.cpm = nn.ModuleList(lfpn_cpm[2])

        self.loc_layers = nn.ModuleList(head[0])
        self.conf_layers = nn.ModuleList(head[1])

        self.is_infer = False
        if phase == 'test':
            self.softmax = nn.Softmax(dim=-1)
            self.detect = Detect(cfg)
            self.is_infer = True

    def _upsample_prod(self, x, y):
        # upsample x to the spatial size of y, then multiply element-wise
        _, _, H, W = y.size()
        return F.upsample(x, size=(H, W), mode='bilinear') * y

    def forward(self, x):
        size = x.size()[2:]

        ################################# base_vgg #############################
        # apply vgg up to conv3_3 relu
        for k in range(16):
            x = self.vgg[k](x)
        conv3_3 = x

        # apply vgg up to conv4_3
        for k in range(16, 23):
            x = self.vgg[k](x)
        conv4_3 = x

        for k in range(23, 30):
            x = self.vgg[k](x)
        conv5_3 = x

        for k in range(30, len(self.vgg)):
            x = self.vgg[k](x)
        convfc_7 = x

        # apply extra layers and cache source layer outputs
        for k in range(2):
            x = F.relu(self.extras[k](x), inplace=True)
        conv6_2 = x

        for k in range(2, 4):
            x = F.relu(self.extras[k](x), inplace=True)
        conv7_2 = x

        #################################### lfpn ##############################
        x = F.relu(self.lfpn_topdown[0](convfc_7), inplace=True)
        lfpn2_on_conv5 = F.relu(self._upsample_prod(
            x, self.lfpn_later[0](conv5_3)), inplace=True)

        x = F.relu(self.lfpn_topdown[1](lfpn2_on_conv5), inplace=True)
        lfpn1_on_conv4 = F.relu(self._upsample_prod(
            x, self.lfpn_later[1](conv4_3)), inplace=True)

        x = F.relu(self.lfpn_topdown[2](lfpn1_on_conv4), inplace=True)
        lfpn0_on_conv3 = F.relu(self._upsample_prod(
            x, self.lfpn_later[2](conv3_3)), inplace=True)

        #################################### cpm ##############################
        ssh_conv3_norm = self.cpm[0](self.L2Norm3_3(lfpn0_on_conv3))
        ssh_conv4_norm = self.cpm[1](self.L2Norm4_3(lfpn1_on_conv4))
        ssh_conv5_norm = self.cpm[2](self.L2Norm5_3(lfpn2_on_conv5))
        ssh_convfc7 = self.cpm[3](convfc_7)
        ssh_conv6 = self.cpm[4](conv6_2)
        ssh_conv7 = self.cpm[5](conv7_2)

        ######################## loc_layers[0] conf_layers[0] #################
        face_locs, face_confs = [], []
        head_locs, head_confs = [], []

        N = ssh_conv3_norm.size(0)

        mbox_loc = self.loc_layers[0](ssh_conv3_norm)
        face_loc, head_loc = torch.chunk(mbox_loc, 2, dim=1)

        face_loc = face_loc.permute(
            0, 2, 3, 1).contiguous().view(N, -1, 4)
        if not self.is_infer:
            head_loc = head_loc.permute(
                0, 2, 3, 1).contiguous().view(N, -1, 4)

        mbox_conf = self.conf_layers[0](ssh_conv3_norm)
        # max-out background: keep the largest of 3 background scores
        face_conf1 = mbox_conf[:, 3:4, :, :]
        face_conf3_maxin, _ = torch.max(
            mbox_conf[:, 0:3, :, :], dim=1, keepdim=True)

        face_conf = torch.cat((face_conf3_maxin, face_conf1), dim=1)

        face_conf = face_conf.permute(
            0, 2, 3, 1).contiguous().view(N, -1, 2)

        if not self.is_infer:
            head_conf3_maxin, _ = torch.max(
                mbox_conf[:, 4:7, :, :], dim=1, keepdim=True)
            head_conf1 = mbox_conf[:, 7:, :, :]
            head_conf = torch.cat((head_conf3_maxin, head_conf1), dim=1)
            head_conf = head_conf.permute(
                0, 2, 3, 1).contiguous().view(N, -1, 2)

        ############### loc_layers[1-5] conf_layers[1-5] #################
        face_locs.append(face_loc)
        face_confs.append(face_conf)

        if not self.is_infer:
            head_locs.append(head_loc)
            head_confs.append(head_conf)

        inputs = [ssh_conv4_norm, ssh_conv5_norm,
                  ssh_convfc7, ssh_conv6, ssh_conv7]

        feature_maps = []
        feat_size = ssh_conv3_norm.size()[2:]
        feature_maps.append([feat_size[0], feat_size[1]])

        for i, feat in enumerate(inputs):
            feat_size = feat.size()[2:]
            feature_maps.append([feat_size[0], feat_size[1]])
            mbox_loc = self.loc_layers[i + 1](feat)
            face_loc, head_loc = torch.chunk(mbox_loc, 2, dim=1)
            face_loc = face_loc.permute(
                0, 2, 3, 1).contiguous().view(N, -1, 4)
            if not self.is_infer:
                head_loc = head_loc.permute(
                    0, 2, 3, 1).contiguous().view(N, -1, 4)

            mbox_conf = self.conf_layers[i + 1](feat)
            # max-out face: keep the largest of 3 face scores
            face_conf1 = mbox_conf[:, 0:1, :, :]
            face_conf3_maxin, _ = torch.max(
                mbox_conf[:, 1:4, :, :], dim=1, keepdim=True)
            face_conf = torch.cat((face_conf1, face_conf3_maxin), dim=1)
            face_conf = face_conf.permute(
                0, 2, 3, 1).contiguous().view(N, -1, 2)

            if not self.is_infer:
                head_conf = mbox_conf[:, 4:, :, :].permute(
                    0, 2, 3, 1).contiguous().view(N, -1, 2)

            face_locs.append(face_loc)
            face_confs.append(face_conf)

            if not self.is_infer:
                head_locs.append(head_loc)
                head_confs.append(head_conf)

        ########################### PriorBox Set ###############################
        face_mbox_loc = torch.cat(face_locs, dim=1)
        face_mbox_conf = torch.cat(face_confs, dim=1)

        if not self.is_infer:
            head_mbox_loc = torch.cat(head_locs, dim=1)
            head_mbox_conf = torch.cat(head_confs, dim=1)

        priors_boxes = PriorBox(size, feature_maps, cfg)
        # `volatile` no longer exists in PyTorch 0.4; priors need no gradient
        priors = priors_boxes.forward()  # [34125, 4] for a 640x640 input

        ################# face_mbox_loc face_mbox_conf ########################
        if not self.is_infer:
            output = (face_mbox_loc, face_mbox_conf,
                      head_mbox_loc, head_mbox_conf, priors)
        else:
            output = self.detect(face_mbox_loc,
                                 self.softmax(face_mbox_conf),
                                 priors)
        return output

    def load_weights(self, base_file):
        epoch = None
        other, ext = os.path.splitext(base_file)
        if ext in ('.pkl', '.pth'):
            print('Loading weights into state dict...')
            mdata = torch.load(base_file,
                               map_location=lambda storage, loc: storage)
            weights = mdata['weight']
            epoch = mdata['epoch']
            self.load_state_dict(weights)
            print('Finished!')
        else:
            print('Sorry only .pth and .pkl files supported.')
        return epoch

    def xavier(self, param):
        init.xavier_uniform_(param)

    def weights_init(self, m):
        if isinstance(m, nn.Conv2d):
            self.xavier(m.weight.data)
            if 'bias' in m.state_dict().keys():
                m.bias.data.zero_()

        if isinstance(m, nn.ConvTranspose2d):
            self.xavier(m.weight.data)
            if 'bias' in m.state_dict().keys():
                m.bias.data.zero_()

        if isinstance(m, nn.BatchNorm2d):
            m.weight.data[...] = 1
            m.bias.data.zero_()
```
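The confidence heads in `forward()` use PyramidBox's max-in-out labels. On the lowest level (`conf_layers[0]`, stride 4) the face branch predicts three background logits and one face logit (channels 0 to 2 and 3), and only the largest background logit is kept; the usual motivation, inherited from S3FD's max-out background label, is to curb false positives from the very dense small anchors. On the other levels it is reversed: one background logit (channel 0) and the largest of three face logits (channels 1 to 3). Either way the softmax sees exactly two logits per anchor, ordered (background, face). A plain-Python sketch for a single anchor (`max_in_out` and `softmax2` are hypothetical names):

```python
import math


def max_in_out(logits, lowest_level):
    """Collapse the 4 face-branch logits of one anchor into (background, face).

    lowest_level=True : logits = [bg, bg, bg, face]    (conf_layers[0])
    lowest_level=False: logits = [bg, face, face, face] (conf_layers[1:])
    """
    if lowest_level:
        return max(logits[0:3]), logits[3]
    return logits[0], max(logits[1:4])


def softmax2(pair):
    """Two-class softmax, as nn.Softmax(dim=-1) applies at test time."""
    m = max(pair)
    e = [math.exp(v - m) for v in pair]
    s = sum(e)
    return [v / s for v in e]


if __name__ == "__main__":
    logits = [0.2, 1.5, -0.3, 1.0]
    print(max_in_out(logits, lowest_level=True))   # background wins here
    print(max_in_out(logits, lowest_level=False))  # face wins here
    print(softmax2(max_in_out(logits, lowest_level=True)))
```

The same four numbers yield opposite decisions depending on the level, which shows that the channel meaning is fixed by the layer, not by the values.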

```python
vgg_cfg = [64, 64, 'M', 128, 128, 'M', 256, 256, 256, 'M', 512, 512, 512, 'M',
           512, 512, 512, 'M']


def vgg(cfg, i, batch_norm=False):
    layers = []
    in_channels = i
    for v in cfg:
        if v == 'M':
            layers += [nn.MaxPool2d(kernel_size=2, stride=2)]
        elif v == 'C':
            layers += [nn.MaxPool2d(kernel_size=2, stride=2, ceil_mode=True)]
        else:
            conv2d = nn.Conv2d(in_channels, v, kernel_size=3, padding=1)
            if batch_norm:
                layers += [conv2d, nn.BatchNorm2d(v), nn.ReLU(inplace=True)]
            else:
                layers += [conv2d, nn.ReLU(inplace=True)]
            in_channels = v
    # fc6 (dilated 3x3) and fc7 (1x1) as convolutions
    conv6 = nn.Conv2d(512, 1024, kernel_size=3, padding=6, dilation=6)
    conv7 = nn.Conv2d(1024, 1024, kernel_size=1)
    layers += [conv6,
               nn.ReLU(inplace=True), conv7, nn.ReLU(inplace=True)]
    return layers


extras_cfg = [256, 'S', 512, 128, 'S', 256]  # entries [2] and [5] are read via cfg[k + 1]


def add_extras(cfg, i, batch_norm=False):
    # Extra layers added to VGG for feature scaling
    layers = []
    in_channels = i
    flag = False
    for k, v in enumerate(cfg):
        if in_channels != 'S':
            if v == 'S':
                # (1, 3)[flag] picks kernel 1 or 3: (1, 3)[False] == 1, (1, 3)[True] == 3
                layers += [nn.Conv2d(in_channels, cfg[k + 1],
                                     kernel_size=(1, 3)[flag], stride=2, padding=1)]
            else:
                layers += [nn.Conv2d(in_channels, v, kernel_size=(1, 3)[flag])]
            flag = not flag
        in_channels = v
    return layers
```
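`add_extras()` is easy to misread because of the `'S'` markers and the `flag` toggle: an `'S'` means the *next* entry is the output width of a stride-2 3 × 3 convolution, and `(1, 3)[flag]` makes the kernel size alternate 1, 3, 1, 3. The plain-Python replica below (returning tuples instead of `nn.Conv2d`; `extras_specs` and `conv_out` are hypothetical names) shows that `extras_cfg` yields four layers, and that for a 640 × 640 input the 20 × 20 fc7 map shrinks to 10 × 10 (conv6_2) and 5 × 5 (conv7_2), matching the last two `STEPS` (64 and 128):

```python
EXTRAS_CFG = [256, 'S', 512, 128, 'S', 256]  # extras_cfg in pyramidbox.py


def extras_specs(cfg, in_channels):
    """Mirror add_extras(): return (in_ch, out_ch, kernel, stride, padding)."""
    layers, flag = [], False
    for k, v in enumerate(cfg):
        if in_channels != 'S':
            if v == 'S':
                layers.append((in_channels, cfg[k + 1], (1, 3)[flag], 2, 1))
            else:
                layers.append((in_channels, v, (1, 3)[flag], 1, 0))
            flag = not flag
        in_channels = v
    return layers


def conv_out(size, kernel, stride, padding):
    """Standard convolution output size (no dilation)."""
    return (size + 2 * padding - kernel) // stride + 1


if __name__ == "__main__":
    size = 20  # fc7 map for a 640 x 640 input (stride 32)
    for spec in extras_specs(EXTRAS_CFG, 1024):
        size = conv_out(size, *spec[2:])
        print(spec, "->", size)
```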

```python
lfpn_cpm_cfg = [256, 512, 512, 1024, 512, 256]


def add_lfpn_cpm(cfg):
    lfpn_topdown_layers = []
    lfpn_latlayer = []
    cpm_layers = []

    for k, v in enumerate(cfg):
        cpm_layers.append(CPM(v))

    fpn_list = cfg[::-1][2:]
    for k, v in enumerate(fpn_list[:-1]):
        lfpn_latlayer.append(nn.Conv2d(
            fpn_list[k + 1], fpn_list[k + 1], kernel_size=1, stride=1, padding=0))
        lfpn_topdown_layers.append(nn.Conv2d(
            v, fpn_list[k + 1], kernel_size=1, stride=1, padding=0))

    return (lfpn_topdown_layers, lfpn_latlayer, cpm_layers)
```
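`add_lfpn_cpm()` builds one CPM per detection source (input widths 256, 512, 512, 1024, 512, 256 for conv3_3, conv4_3, conv5_3, fc7, conv6_2, conv7_2) and the LFPN 1 × 1 convolutions from the reversed list `cfg[::-1][2:] = [1024, 512, 512, 256]`. The top-down path therefore starts at fc7 rather than at the top of the network, and `forward()` fuses each level as `relu(upsample(topdown(x)) * lateral(c))`, an element-wise product rather than the sum used in the original FPN. A plain-Python sketch of the channel bookkeeping (`lfpn_specs` is a hypothetical name):

```python
LFPN_CPM_CFG = [256, 512, 512, 1024, 512, 256]  # lfpn_cpm_cfg in pyramidbox.py


def lfpn_specs(cfg):
    """Return (topdown, lateral) lists of (in_ch, out_ch) 1x1 convs,
    mirroring add_lfpn_cpm()."""
    fpn_list = cfg[::-1][2:]  # [1024, 512, 512, 256]
    topdown, lateral = [], []
    for k, v in enumerate(fpn_list[:-1]):
        lateral.append((fpn_list[k + 1], fpn_list[k + 1]))
        topdown.append((v, fpn_list[k + 1]))
    return topdown, lateral


if __name__ == "__main__":
    topdown, lateral = lfpn_specs(LFPN_CPM_CFG)
    # level 0: fc7 (1024)           x conv5_3 (512) -> lfpn2_on_conv5
    # level 1: lfpn2_on_conv5 (512) x conv4_3 (512) -> lfpn1_on_conv4
    # level 2: lfpn1_on_conv4 (512) x conv3_3 (256) -> lfpn0_on_conv3
    for td, lat in zip(topdown, lateral):
        print("topdown", td, "lateral", lat)
```

Because the two operands are multiplied element-wise, each top-down output width must equal the lateral width at the same level, which the pairs above satisfy.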

```python
multibox_cfg = [512, 512, 512, 512, 512, 512]


def multibox(vgg, extra_layers):
    loc_layers = []
    conf_layers = []
    vgg_source = [21, 28, -2]
    i = 0
    # lowest level: loc 8 = face 4 + head 4;
    # conf 8 = face (3 bg + 1 face) + head (3 bg + 1 head)
    loc_layers += [nn.Conv2d(multibox_cfg[i],
                             8, kernel_size=3, padding=1)]
    conf_layers += [nn.Conv2d(multibox_cfg[i],
                              8, kernel_size=3, padding=1)]
    i += 1
    # other levels: loc 8; conf 6 = face (1 bg + 3 face) + head (2)
    for k, v in enumerate(vgg_source):
        loc_layers += [nn.Conv2d(multibox_cfg[i],
                                 8, kernel_size=3, padding=1)]
        conf_layers += [nn.Conv2d(multibox_cfg[i],
                                  6, kernel_size=3, padding=1)]
        i += 1
    for k, v in enumerate(extra_layers[1::2], 2):
        loc_layers += [nn.Conv2d(multibox_cfg[i],
                                 8, kernel_size=3, padding=1)]
        conf_layers += [nn.Conv2d(multibox_cfg[i],
                                  6, kernel_size=3, padding=1)]
        i += 1
    return vgg, extra_layers, (loc_layers, conf_layers)


def build_net(phase, num_classes=2):
    base_, extras_, head_ = multibox(
        vgg(vgg_cfg, 3), add_extras((extras_cfg), 1024))
    lfpn_cpm = add_lfpn_cpm(lfpn_cpm_cfg)
    return PyramidBox(phase, base_, extras_, lfpn_cpm, head_, num_classes)
```

3. config.py

```python
# -*- coding: utf-8 -*-
from __future__ import division
from __future__ import absolute_import
from __future__ import print_function

from easydict import EasyDict
import numpy as np

_C = EasyDict()
cfg = _C

# data augmentation config
_C.img_mean = np.array([104., 117., 123.])[:, np.newaxis, np.newaxis].astype(
    'float32')

# anchor config
_C.FEATURE_MAPS = [160, 80, 40, 20, 10, 5]
_C.INPUT_SIZE = 640
_C.STEPS = [4, 8, 16, 32, 64, 128]
_C.ANCHOR_SIZES = [16, 32, 64, 128, 256, 512]
_C.CLIP = False
_C.VARIANCE = [0.1, 0.2]  # box-encoding variances (center, size)

# loss config
_C.NUM_CLASSES = 2
_C.OVERLAP_THRESH = 0.35
_C.NEG_POS_RATIOS = 3

# detection config
_C.NMS_THRESH = 0.3
_C.TOP_K = 5000
_C.KEEP_TOP_K = 750
_C.CONF_THRESH = 0.05
```

III. Results

IV. References:

https://github.com/yxlijun/Pyramidbox.pytorch

For any questions, please add my QQ: 2258205918 (name: samylee)!

Or WeChat: samylee_csdn