vgg和PyTorch

1.vgg:

由于使用图片数量过多,耗时久,这里仅取其中四百张图片。

代码片段:

import tensorflow as tf

from tensorflow import keras

from tensorflow.keras import layers, regularizers

import numpy as np

import os

import cv2

import matplotlib.pyplot as plt

os.environ["CUDA_VISIBLE_DEVICES"] = "1"

resize = 224

path ="D:/pc/data/seven/strain/"

def load_data():

imgs = os.listdir(path)

num = len(imgs)

train_data = np.empty((200, resize, resize, 3), dtype="int32")

train_label = np.empty((200, ), dtype="int32")

test_data = np.empty((200, resize, resize, 3), dtype="int32")

test_label = np.empty((200, ), dtype="int32")

for i in range(200):

if i % 2:

train_data[i] = cv2.resize(cv2.imread(path+'/'+ 'dog.' + str(i) + '.jpg'), (resize, resize))

train_label[i] = 1

else:

train_data[i] = cv2.resize(cv2.imread(path+'/' + 'cat.' + str(i) + '.jpg'), (resize, resize))

train_label[i] = 0

for i in range(200, 400):

if i % 2:

test_data[i-200] = cv2.resize(cv2.imread(path+'/' + 'dog.' + str(i) + '.jpg'), (resize, resize))

test_label[i-200] = 1

else:

test_data[i-200] = cv2.resize(cv2.imread(path+'/' + 'cat.' + str(i) + '.jpg'), (resize, resize))

test_label[i-200] = 0

return train_data, train_label, test_data, test_label

def vgg16():

weight_decay = 0.0005

nb_epoch = 10

batch_size = 32

# layer1

model = keras.Sequential()

model.add(layers.Conv2D(64, (3, 3), padding='same',

input_shape=(224, 224, 3), kernel_regularizer=regularizers.l2(weight_decay)))

model.add(layers.Activation('relu'))

model.add(layers.BatchNormalization())

model.add(layers.Dropout(0.3))

# layer2

model.add(layers.Conv2D(64, (3, 3), padding='same', kernel_regularizer=regularizers.l2(weight_decay)))

model.add(layers.Activation('relu'))

model.add(layers.BatchNormalization())

model.add(layers.MaxPooling2D(pool_size=(2, 2)))

# layer3

model.add(layers.Conv2D(128, (3, 3), padding='same', kernel_regularizer=regularizers.l2(weight_decay)))

model.add(layers.Activation('relu'))

model.add(layers.BatchNormalization())

model.add(layers.Dropout(0.4))

# layer4

model.add(layers.Conv2D(128, (3, 3), padding='same', kernel_regularizer=regularizers.l2(weight_decay)))

model.add(layers.Activation('relu'))

model.add(layers.BatchNormalization())

model.add(layers.MaxPooling2D(pool_size=(2, 2)))

# layer5

model.add(layers.Conv2D(256, (3, 3), padding='same', kernel_regularizer=regularizers.l2(weight_decay)))

model.add(layers.Activation('relu'))

model.add(layers.BatchNormalization())

model.add(layers.Dropout(0.4))

# layer6

model.add(layers.Conv2D(256, (3, 3), padding='same', kernel_regularizer=regularizers.l2(weight_decay)))

model.add(layers.Activation('relu'))

model.add(layers.BatchNormalization())

model.add(layers.Dropout(0.4))

# layer7

model.add(layers.Conv2D(256, (3, 3), padding='same', kernel_regularizer=regularizers.l2(weight_decay)))

model.add(layers.Activation('relu'))

model.add(layers.BatchNormalization())

model.add(layers.MaxPooling2D(pool_size=(2, 2)))

# layer8

model.add(layers.Conv2D(512, (3, 3), padding='same', kernel_regularizer=regularizers.l2(weight_decay)))

model.add(layers.Activation('relu'))

model.add(layers.BatchNormalization())

model.add(layers.Dropout(0.4))

# layer9

model.add(layers.Conv2D(512, (3, 3), padding='same', kernel_regularizer=regularizers.l2(weight_decay)))

model.add(layers.Activation('relu'))

model.add(layers.BatchNormalization())

model.add(layers.Dropout(0.4))

# layer10

model.add(layers.Conv2D(512, (3, 3), padding='same', kernel_regularizer=regularizers.l2(weight_decay)))

model.add(layers.Activation('relu'))

model.add(layers.BatchNormalization())

model.add(layers.MaxPooling2D(pool_size=(2, 2)))

# layer11

model.add(layers.Conv2D(512, (3, 3), padding='same', kernel_regularizer=regularizers.l2(weight_decay)))

model.add(layers.Activation('relu'))

model.add(layers.BatchNormalization())

model.add(layers.Dropout(0.4))

# layer12

model.add(layers.Conv2D(512, (3, 3), padding='same', kernel_regularizer=regularizers.l2(weight_decay)))

model.add(layers.Activation('relu'))

model.add(layers.BatchNormalization())

model.add(layers.Dropout(0.4))

# layer13

model.add(layers.Conv2D(512, (3, 3), padding='same', kernel_regularizer=regularizers.l2(weight_decay)))

model.add(layers.Activation('relu'))

model.add(layers.BatchNormalization())

model.add(layers.MaxPooling2D(pool_size=(2, 2)))

model.add(layers.Dropout(0.5))

# layer14

model.add(layers.Flatten())

model.add(layers.Dense(512, kernel_regularizer=regularizers.l2(weight_decay)))

model.add(layers.Activation('relu'))

model.add(layers.BatchNormalization())

# layer15

model.add(layers.Dense(512, kernel_regularizer=regularizers.l2(weight_decay)))

model.add(layers.Activation('relu'))

model.add(layers.BatchNormalization())

# layer16

model.add(layers.Dropout(0.5))

model.add(layers.Dense(2))

model.add(layers.Activation('softmax'))

return model

#if __name__ == '__main__':

train_data, train_label, test_data, test_label = load_data()

train_data = train_data.astype('float32')

test_data = test_data.astype('float32')

train_label = keras.utils.to_categorical(train_label, 2)

test_label = keras.utils.to_categorical(test_label, 2)

#定义训练方法,超参数设置

model = vgg16()

sgd = tf.keras.optimizers.SGD(lr=0.01, decay=1e-6, momentum=0.9, nesterov=True) #设置优化器为SGD

model.compile(loss='categorical_crossentropy', optimizer=sgd, metrics=['accuracy'])

history = model.fit(train_data, train_label,

batch_size=20,

epochs=10,

validation_split=0.2, #把训练集中的五分之一作为验证集

shuffle=True)

scores = model.evaluate(test_data,test_label,verbose=1)

print(scores)

model.save('model/vgg16dogcat.h5')

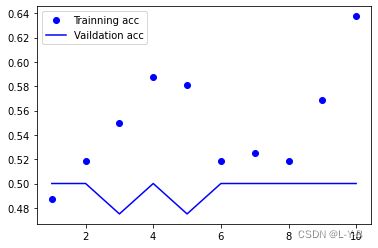

acc = history.history['accuracy'] # 获取训练集准确性数据

val_acc = history.history['val_accuracy'] # 获取验证集准确性数据

loss = history.history['loss'] # 获取训练集错误值数据

val_loss = history.history['val_loss'] # 获取验证集错误值数据

epochs = range(1, len(acc) + 1)

plt.plot(epochs, acc, 'bo', label='Trainning acc') # 以epochs为横坐标,以训练集准确性为纵坐标

plt.plot(epochs, val_acc, 'b', label='Vaildation acc') # 以epochs为横坐标,以验证集准确性为纵坐标

plt.legend() # 绘制图例,即标明图中的线段代表何种含义

plt.show()

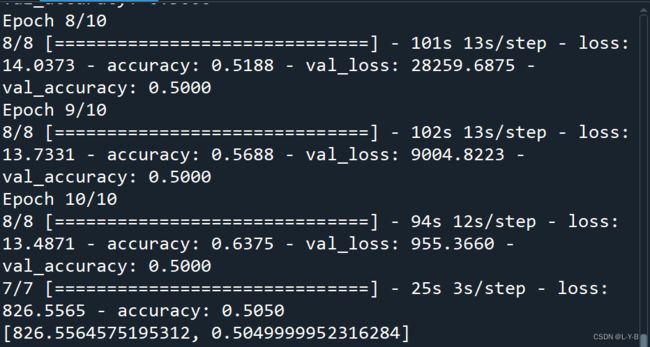

运行结果如下:

2.PyTorch

PyTorch的一些应用

这里列举两个应用

a.模型测试

#2022-03-22

#导入相应的库

import numpy as np

import torch

import torch.nn as nn

import torch.nn.functional as F

import os

from scipy import stats

import pandas as pd

#数据的导入

titanic_data = pd.read_excel(r"C:\Users\L\data_survived.xlsx")

print(titanic_data.columns )#打印列名

#用哑变量将指定字段转成one-hot

#titanic_data = pd.concat([titanic_data,

# pd.get_dummies(titanic_data['sex']),

# pd.get_dummies(titanic_data['embarked'],prefix="embark"),

# pd.get_dummies(titanic_data['pclass'],prefix="class")], axis=1)

print(titanic_data.columns )

# print(titanic_data['sex'])

# print(titanic_data['female'])

#处理None值

titanic_data["age"] = titanic_data["age"].fillna(titanic_data["age"].mean())

titanic_data["fare"] = titanic_data["fare"].fillna(titanic_data["fare"].mean())#乘客票价

#删去无用的列

#titanic_data = titanic_data.drop(['age','sibsp','parch','fare','female','male','embark_C','embark_Q','embark_S','class_1','class_2','class_3'], axis=1)

#print(titanic_data.columns )

#titanic_data.to_excel(r"C:\Users\L\1112.xlsx")

#分离样本和标签

labels = titanic_data["survived"].to_numpy()

data_features = titanic_data.drop(['survived'], axis=1)

data = data_features.to_numpy()

labels

#样本的属性名称

feature_names = list(titanic_data.columns)

#将样本分为训练和测试两部分

from sklearn.model_selection import train_test_split

train_features,test_features,train_labels,test_labels = train_test_split(data,labels,test_size=0.3,random_state=10)

class Mish(nn.Module):#Mish激活函数

def __init__(self):

super().__init__()

print("Mish activation loaded...")

def forward(self,x):

x = x * (torch.tanh(F.softplus(x)))

return x

torch.nn.Linear(in_features,out_features,bias = True )

对传入数据应用线性变换:y = A x+ b

参数:

in_features - 每个输入样本的大小

out_features - 每个输出样本的大小

bias - 如果设置为False,则图层不会学习附加偏差。默认值:True

torch.manual_seed(0) #设置随机种子

#构建一个三层网络12-8-2

class ThreelinearModel(nn.Module):

def __init__(self):

super().__init__()

self.linear1 = nn.Linear(12, 8)

self.mish1 = Mish()

self.linear2 = nn.Linear(8, 9)

self.mish2 = Mish()

self.linear3 = nn.Linear(9, 6)

self.softmax = nn.Softmax(dim=1)

self.criterion = nn.CrossEntropyLoss() #定义交叉熵函数

def forward(self, x): #定义一个全连接网络

lin1_out = self.linear1(x)

out1 = self.mish1(lin1_out)

out2 = self.mish2(self.linear2(out1))

return self.softmax(self.linear3(out2))

def getloss(self,x,y): #实现LogicNet类的损失值计算接口

y_pred = self.forward(x)

loss = self.criterion(y_pred,y)#计算损失值得交叉熵

return loss

# 深度学习中经常看到epoch、 iteration和batchsize,下面按自己的理解说说这三个的区别:

(1)batchsize:批大小。在深度学习中,一般采用SGD训练,即每次训练在训练集中取batchsize个样本训练;

(2)iteration:1个iteration等于使用batchsize个样本训练一次;

(3)epoch:1个epoch等于使用训练集中的全部样本训练一次;

举个例子,训练集有1000个样本,batchsize=10,那么:

训练完整个样本集需要:

100次iteration,1次epoch。

net = ThreelinearModel()#模型实例化

num_epochs = 200 #迭代数次

optimizer = torch.optim.Adam(net.parameters(), lr=0.04) #优化器选择Adam,只有一个参数学习率

#把特征和标签值转成torch张量形式

input_tensor = torch.from_numpy(train_features).type(torch.FloatTensor)

label_tensor = torch.from_numpy(train_labels)

losses = []#定义列表,用于接收每一步的损失值

for epoch in range(num_epochs):

loss = net.getloss(input_tensor,label_tensor)

losses.append(loss.item())

optimizer.zero_grad()#清空之前的梯度。

loss.backward()#反向传播损失值

optimizer.step()#更新参数

if epoch % 20 == 0:

print ('Epoch {}/{} => Loss: {:.2f}'.format(epoch+1, num_epochs, loss.item()))

#.item()方法 是得到一个元素张量里面的元素值

os.makedirs('models', exist_ok=True)

#os.makedirs自动创建一个文件夹models

#如果exist_ok为True,则在目标目录已存在的情况下不会触发FileExistsError异常。

torch.save(net.state_dict(), 'models/titanic_model.pht') #保存模型

print(len(losses))

import matplotlib.pyplot as plt

def moving_average(a, w=10):#定义函数计算移动平均损失值

if len(a) < w:

return a[:]

return [val if idx < w else sum(a[(idx-w):idx])/w for idx, val in enumerate(a)]

#enumerate() 函数用于将一个可遍历的数据对象(如列表、元组或字符串)组合为一个索引序列,同时列出数据和数据下标,一般用在 for 循环当中。

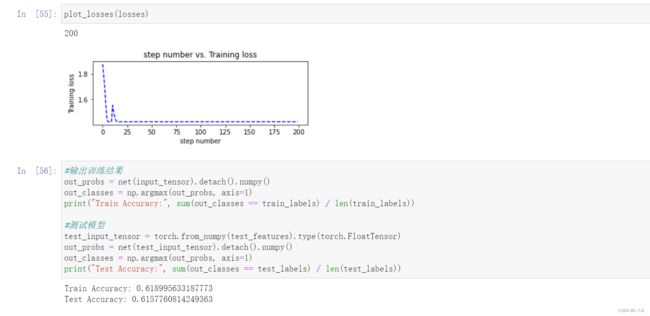

def plot_losses(losses):

avgloss= moving_average(losses) #获得损失值的移动平均值

print(len(avgloss))

plt.figure(1)

plt.subplot(211)

plt.plot(range(len(avgloss)), avgloss, 'b--')

plt.xlabel('step number')

plt.ylabel('Training loss')

plt.title('step number vs. Training loss')

plt.show()

plot_losses(losses)

#输出训练结果

out_probs = net(input_tensor).detach().numpy()

out_classes = np.argmax(out_probs, axis=1)

print("Train Accuracy:", sum(out_classes == train_labels) / len(train_labels))

#测试模型

test_input_tensor = torch.from_numpy(test_features).type(torch.FloatTensor)

out_probs = net(test_input_tensor).detach().numpy()

out_classes = np.argmax(out_probs, axis=1)

print("Test Accuracy:", sum(out_classes == test_labels) / len(test_labels))

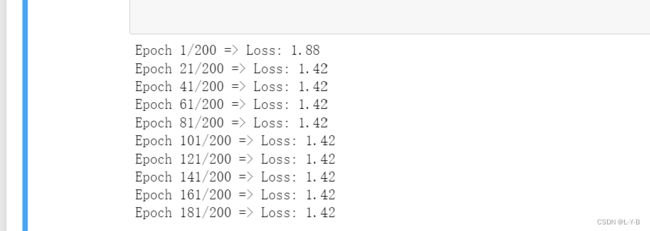

运行结果:

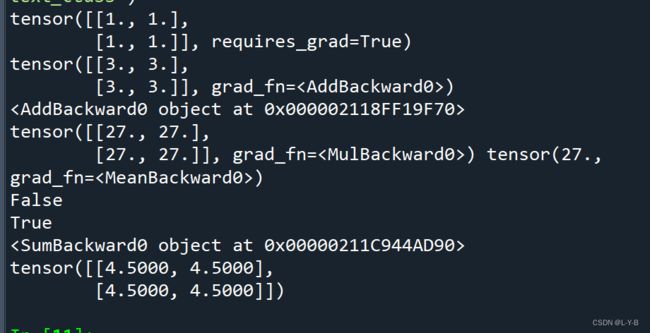

2.PyTorch自动微分

import torch

x=torch.ones(2,2,requires_grad=True)

print(x)

y=x+2

print(y)

print(y.grad_fn)

z=y*y*3

out=z.mean()

print(z,out)

a=torch.randn(2,2)

a=((a*3)/(a-1))

print(a.requires_grad)

a.requires_grad_(True)

print(a.requires_grad)

b=(a*a).sum()

print(b.grad_fn)

out.backward()

print(x.grad)

3008