yolo v5 代码阅读记录

目录

一、安装配置环境

二、Debug记录

三、打印日志输出的地方

1、 开始打印参数值

2、若缺少环境,则打印相关警告

3、 打印python版本以及显卡信息

4、 当图片的输入 最大size 不是 stride 的整数倍时,打印报警日志

5、NMS过程的警告

6、 打印时间花费的信息

7、打印 图片以保存

四、功能接口

0、代码中的 f'{}'

1、保存结果的路径

2、默认的步长 、 使用cuda

3、加载模型以及加载 权重参数

4、拿出文件夹中的测试图片

5、demo时对输入图片的填充裁剪

6、input 进入 model 中的过程

7、保存路径的接口

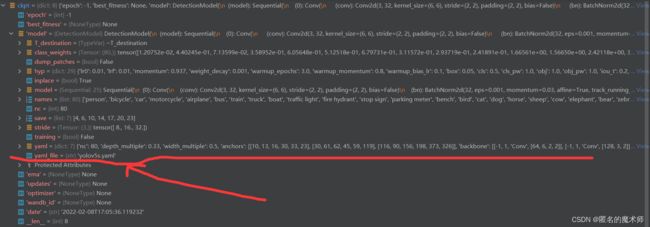

8、yaml 参数设置文件的加载处

9、 save_txt 应用影响的地方

10、拿出标记的地方

11、画矩形框和标记的接口

12、展示图片选项 view_img 作用接口

13、save_img 作用的接口

五、网络结构

六、一些需要了解的细节--模型的输出,即预测的都是些什么

1、grid 和 anchor_grid

2、Detect head 中的细节过程

3、nms 过程中的细节

4、预测的bbox怎么映射回原图片上的

5、在原图上画bbox 和 标记

6、如果想保留视频和webcam 的检测结果,需要做的设定

一、安装配置环境

conda create -n yolov5 python=3.7

启动该环境

source activate yolov5pip install -r requirements.txt运行测试demo,即detect.py

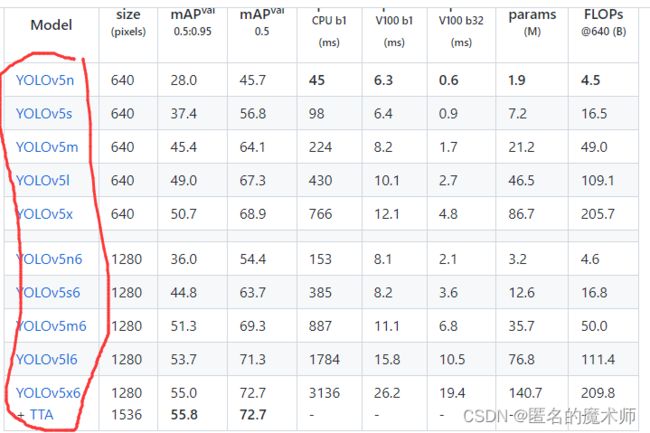

这时需要上官网下载对应版本的预训练权重。上图中画线的部分就是当前所用的版本,

点击对应的版本下载即可,然后放到yolo-master即主项目文件夹下即可。

二、Debug记录

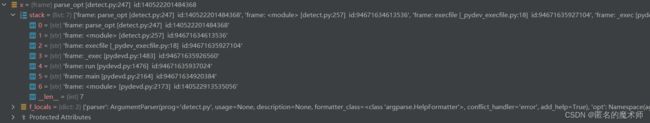

1、vars(opt)

2、x

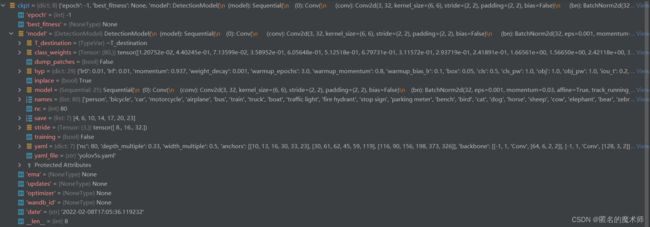

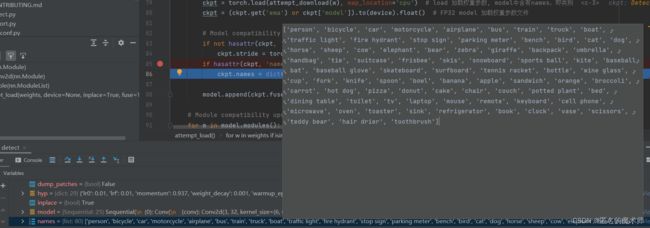

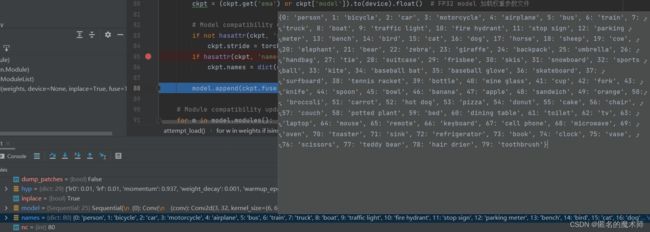

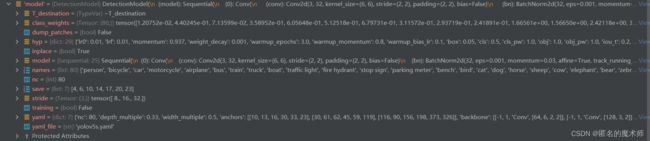

3、ckpt

4、names

list

dict

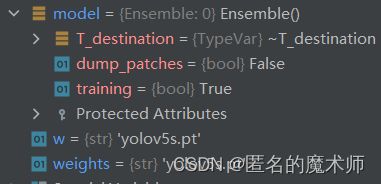

5、model

前

后

6、dataset

7、dt

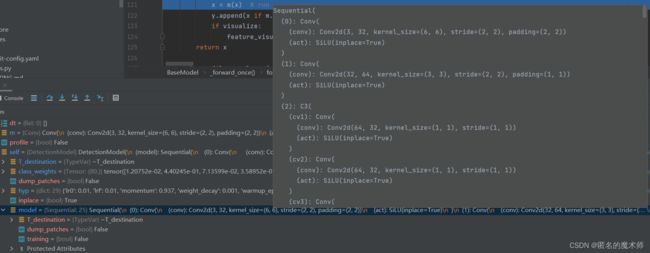

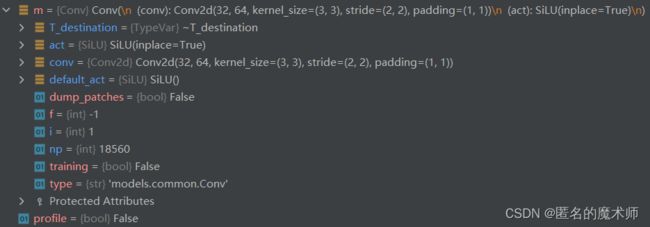

8、 m 和 model

model

m 举例

9、y

三、打印日志输出的地方

1、 开始打印参数值

detect: weights=yolov5s.pt, source=data/images, data=data/coco128.yaml, imgsz=[640, 640], conf_thres=0.25, iou_thres=0.45, max_det=1000, device=, view_img=False, save_txt=False, save_conf=False, save_crop=False, nosave=False, classes=None, agnostic_nms=False, augment=False, visualize=False, update=False, project=runs/detect, name=exp, exist_ok=False, line_thickness=3, hide_labels=False, hide_conf=False, half=False, dnn=False, vid_stride=1general.py --- 216

2、若缺少环境,则打印相关警告

general.py --- 362始

3、 打印python版本以及显卡信息

YOLOv5 2022-10-13 Python-3.7.12 torch-1.12.1+cu102 CUDA:0 (NVIDIA GeForce RTX 2080 Ti, 11019MiB)torch_utils.py --- 139

4、 当图片的输入 最大size 不是 stride 的整数倍时,打印报警日志

check_img_size 函数, general.py ---382

if new_size != imgsz:

LOGGER.warning(f'WARNING ⚠️ --img-size {imgsz} must be multiple of max stride {s}, updating to {new_size}'5、NMS过程的警告

WARNING ⚠️ NMS time limit 0.550s exceeded

general.py ---938

6、 打印时间花费的信息

detect.py --- 204

LOGGER.info(f"{s}{'' if len(det) else '(no detections), '}{dt[1].dt * 1E3:.1f}ms")image 1/3 /root/data/zjx/yolov5/yolov5-master/data/images/bus.jpg: 640x480 4 persons, 1 bus, 11.0ms

image 2/3 /root/data/zjx/yolov5/yolov5-master/data/images/laboratory.jpg: 480x640 4 bottles, 4 chairs, 2 tvs, 11.5ms

image 3/3 /root/data/zjx/yolov5/yolov5-master/data/images/zidane.jpg: 384x640 2 persons, 2 ties, 12.5msdetect.py --- 208

LOGGER.info(f'Speed: %.1fms pre-process, %.1fms inference, %.1fms NMS per image at shape {(1, 3, *imgsz)}' % t) LOGGER.info(f'Speed: %.1fms pre-process, %.1fms inference, %.1fms NMS per image at shape {(1, 3, *imgsz)}' % t)7、打印 图片以保存

detect.py --- 209

if save_txt or save_img:

s = f"\n{len(list(save_dir.glob('labels/*.txt')))} labels saved to {save_dir / 'labels'}" if save_txt else ''

LOGGER.info(f"Results saved to {colorstr('bold', save_dir)}{s}")Results saved to runs/detect/exp93四、功能接口

0、代码中的 f'{}'

# format 的缩写

s = f'YOLOv5 111 缩写 torch-{torch.__version__} '

print(s)

>> YOLOv5 111 缩写 torch-1.12.1+cu102 1、保存结果的路径

detect.py --- 91

save_dir = increment_path(Path(project) / name, exist_ok=exist_ok)其中 project 决定保存的路径

那个exp变化的决定在 general.py 中的 increment_path函数中,即exp2 exp3 等

def increment_path(path, exist_ok=False, sep='', mkdir=False):

# Increment file or directory path, i.e. runs/exp --> runs/exp{sep}2, runs/exp{sep}3, ... etc.

path = Path(path) # os-agnostic runs/detect/exp

if path.exists() and not exist_ok:

path, suffix = (path.with_suffix(''), path.suffix) if path.is_file() else (path, '') # suffix : ''

# Method 1

for n in range(2, 9999):

p = f'{path}{sep}{n}{suffix}' # increment path

if not os.path.exists(p): #

break

path = Path(p) # 路径中 exp后面带数字 如 exp12

# Method 2 (deprecated)

# dirs = glob.glob(f"{path}{sep}*") # similar paths

# matches = [re.search(rf"{path.stem}{sep}(\d+)", d) for d in dirs]

# i = [int(m.groups()[0]) for m in matches if m] # indices

# n = max(i) + 1 if i else 2 # increment number

# path = Path(f"{path}{sep}{n}{suffix}") # increment path

if mkdir:

path.mkdir(parents=True, exist_ok=True) # make directory

return path2、默认的步长 、 使用cuda

stride = 32 # default stridecommon.py --- 334

cuda = torch.cuda.is_available() and device.type != 'cpu' # use CUDAcommon.py --- 335

3、加载模型以及加载 权重参数

detect.py --- 95

model = DetectMultiBackend(weights, device=device, dnn=dnn, data=data, fp16=half)experimental.py --- 79

attempt_load函数

ckpt = torch.load(attempt_download(w), map_location='cpu') 注意这里的ckpt,其属性如下

说明yolov5s.pt中的文件为字典,主要看这里的model 键值一栏,

所以这里的模型 为 DetectionModel,权重参数已经是训练好的了。直接用就ok。

4、拿出文件夹中的测试图片

detect.py --- 114

for path, im, im0s, vid_cap, s in dataset:此代码会依次执行 LoadImages 类中的 __iter__ 、 __next__,并且在__next__中有读入图片的操作

else:

# Read image

self.count += 1

im0 = cv2.imread(path) # BGR

assert im0 is not None, f'Image Not Found {path}'

s = f'image {self.count}/{self.nf} {path}: '

# 以及下面的转换通道操作

else:

im = letterbox(im0, self.img_size, stride=self.stride, auto=self.auto)[0] # padded resize

im = im.transpose((2, 0, 1))[::-1] # HWC to CHW, BGR to RGB

im = np.ascontiguousarray(im) # contiguous而且最后返回的

return path, im, im0, self.cap, s正好对应上面for in 的5个参数。

5、demo时对输入图片的填充裁剪

augmentation.py --- 111

中的 letterbox 函数,其在LoadImages类中有应用

else:

im = letterbox(im0, self.img_size, stride=self.stride, auto=self.auto)[0] # padded resize 调用的函数

im = im.transpose((2, 0, 1))[::-1] # HWC to CHW, BGR to RGB

im = np.ascontiguousarray(im) # contiguous6、input 进入 model 中的过程

1)首先由 detect.py ---125 进入model

pred = model(im, augment=augment, visualize=visualize)跳转到 DetectMultiBackend 中的forward 函数中,如下所示

2) common.py --- 504

y = self.model(im, augment=augment, visualize=visualize) if augment or visualize else self.model(im)跳转到 DetectionModel 中的 forward 函数中, 如下所示

3) yolo.py --- 206

def forward(self, x, augment=False, profile=False, visualize=False):

if augment:

return self._forward_augment(x) # augmented inference, None

return self._forward_once(x, profile, visualize) 这里 demo 过程不需要数据增强, 会跳转到 self._forward_once(x, profile, visualize) 函数中,这个方法在 BaseModel 父类中定义,所以跳转到 BaseModel 中,如下所示

4)yolo.py --- 114

def _forward_once(self, x, profile=False, visualize=False):

y, dt = [], [] # outputs

for m in self.model: # m 与 model

if m.f != -1: # if not from previous layer

x = y[m.f] if isinstance(m.f, int) else [x if j == -1 else y[j] for j in m.f] # from earlier layers

if profile:

self._profile_one_layer(m, x, dt)

x = m(x) # run

y.append(x if m.i in self.save else None) # save output 这里注意 m.i 这个属性

if visualize:

feature_visualization(x, m.type, m.i, save_dir=visualize)

return x 这个循环拿出 model 中的一层 来执行的,而不是像pytroch的那种直接 Sequence 完事的。

虽然时执行父类中的方法,不过属性依然可以应用子类中的,比如这个 self.save,来自DetectionModel, yolo.py ---185,如下所示

self.model, self.save = parse_model(deepcopy(self.yaml), ch=[ch])而且,其中的 这个y好像没用是,可能是在可视化中会用到吧,最后输出的只有 x 。

5)这里的 m 即是 拿出 sequential 的模块,self.model包含的信息如下所示

Sequential(

(0): Conv(

(conv): Conv2d(3, 32, kernel_size=(6, 6), stride=(2, 2), padding=(2, 2))

(act): SiLU(inplace=True)

)

(1): Conv(

(conv): Conv2d(32, 64, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1))

(act): SiLU(inplace=True)

)

(2): C3(

(cv1): Conv(

(conv): Conv2d(64, 32, kernel_size=(1, 1), stride=(1, 1))

(act): SiLU(inplace=True)

)

(cv2): Conv(

(conv): Conv2d(64, 32, kernel_size=(1, 1), stride=(1, 1))

(act): SiLU(inplace=True)

)

(cv3): Conv(

(conv): Conv2d(64, 64, kernel_size=(1, 1), stride=(1, 1))

(act): SiLU(inplace=True)

)

。。。。。。。。。。。。。。。等等这里 m 每一次循环都会拿出一个模块,比如第一个 m 拿出的模块为 (0) 那个模块,这个模块由 Conv 类定义,所以接下来会执行 Conv 这个类里的前向传播函数,它是在

from models.common import *导入的,也就是 common.py --- 41

class Conv(nn.Module):

# Standard convolution with args(ch_in, ch_out, kernel, stride, padding, groups, dilation, activation)

default_act = nn.SiLU() # default activation

def __init__(self, c1, c2, k=1, s=1, p=None, g=1, d=1, act=True):

super().__init__()

self.conv = nn.Conv2d(c1, c2, k, s, autopad(k, p, d), groups=g, dilation=d, bias=False)

self.bn = nn.BatchNorm2d(c2)

self.act = self.default_act if act is True else act if isinstance(act, nn.Module) else nn.Identity()

def forward(self, x):

return self.act(self.bn(self.conv(x)))

def forward_fuse(self, x):

return self.act(self.conv(x))只不过这里执行的 是 forward_fuse 函数,而不是 forward 函数。

同理 C3 也在 common.py ---166 中有定义。最后的head 为 Detect 类,在yolo.py --- 38中。

总之,这个 m 是哪个模块,就会执行对应的模块类,m 的 模块见下面的 五 中的网络结构,()为单个模块,也就是循环中 m 所对应的的值

6)最终得到输出 , 跳回到 2) 中的输出 y,接下来执行

if isinstance(y, (list, tuple)): # Ture

return self.from_numpy(y[0]) if len(y) == 1 else [self.from_numpy(x) for x in y] def from_numpy(self, x):

return torch.from_numpy(x).to(self.device) if isinstance(x, np.ndarray) else x6)最后返回到 1) ,得到pred

7、保存路径的接口

detect.py ---144

save_path = str(save_dir / p.name) # im.jpg 'runs/detect/exp87/bus.jpg'

txt_path = str(save_dir / 'labels' / p.stem) + ('' if dataset.mode == 'image' else f'_{frame}') # im.txt 'runs/detect/exp87/labels/bus'8、yaml 参数设置文件的加载处

yaml文件设置了所有的实验参数,那代码在哪里处加载它的呢。答案就是在 权重文件中已经包含了对他的设置了,debug ckpt中显示

9、 save_txt 应用影响的地方

detect.py --- 161

if save_txt: # Write to file False

xywh = (xyxy2xywh(torch.tensor(xyxy).view(1, 4)) / gn).view(-1).tolist() # normalized xywh

line = (cls, *xywh, conf) if save_conf else (cls, *xywh) # label format

with open(f'{txt_path}.txt', 'a') as f:

f.write(('%g ' * len(line)).rstrip() % line + '\n')10、拿出标记的地方

detect.py --- 169

label = None if hide_labels else (names[c] if hide_conf else f'{names[c]} {conf:.2f}') # 举例: 'person: 0.53'11、画矩形框和标记的接口

detect.py --- 170

annotator.box_label(xyxy, label, color=colors(c, True))plots.py --- 100

else: # cv2 True

p1, p2 = (int(box[0]), int(box[1])), (int(box[2]), int(box[3]))

cv2.rectangle(self.im, p1, p2, color, thickness=self.lw, lineType=cv2.LINE_AA)

if label:

tf = max(self.lw - 1, 1) # font thickness

w, h = cv2.getTextSize(label, 0, fontScale=self.lw / 3, thickness=tf)[0] # text width, height

outside = p1[1] - h >= 3

p2 = p1[0] + w, p1[1] - h - 3 if outside else p1[1] + h + 3

cv2.rectangle(self.im, p1, p2, color, -1, cv2.LINE_AA) # filled

cv2.putText(self.im,

label, (p1[0], p1[1] - 2 if outside else p1[1] + h + 2),

0,

self.lw / 3,

txt_color,

thickness=tf,

lineType=cv2.LINE_AA)12、展示图片选项 view_img 作用接口

detect.py --- 176

if view_img:

if platform.system() == 'Linux' and p not in windows:

windows.append(p)

cv2.namedWindow(str(p), cv2.WINDOW_NORMAL | cv2.WINDOW_KEEPRATIO) # allow window resize (Linux)

cv2.resizeWindow(str(p), im0.shape[1], im0.shape[0])

cv2.imshow(str(p), im0)

cv2.waitKey(1) # 1 millisecond13、save_img 作用的接口

detect.py --- 185

if save_img:

if dataset.mode == 'image':

cv2.imwrite(save_path, im0)五、网络结构

DetectionModel(

(model): Sequential(

(0): Conv(

(conv): Conv2d(3, 32, kernel_size=(6, 6), stride=(2, 2), padding=(2, 2), bias=False)

(bn): BatchNorm2d(32, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

(1): Conv(

(conv): Conv2d(32, 64, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

(bn): BatchNorm2d(64, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

(2): C3(

(cv1): Conv(

(conv): Conv2d(64, 32, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(32, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

(cv2): Conv(

(conv): Conv2d(64, 32, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(32, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

(cv3): Conv(

(conv): Conv2d(64, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(64, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

(m): Sequential(

(0): Bottleneck(

(cv1): Conv(

(conv): Conv2d(32, 32, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(32, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

(cv2): Conv(

(conv): Conv2d(32, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(32, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

)

)

)

(3): Conv(

(conv): Conv2d(64, 128, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

(4): C3(

(cv1): Conv(

(conv): Conv2d(128, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(64, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

(cv2): Conv(

(conv): Conv2d(128, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(64, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

(cv3): Conv(

(conv): Conv2d(128, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

(m): Sequential(

(0): Bottleneck(

(cv1): Conv(

(conv): Conv2d(64, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(64, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

(cv2): Conv(

(conv): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(64, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

)

(1): Bottleneck(

(cv1): Conv(

(conv): Conv2d(64, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(64, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

(cv2): Conv(

(conv): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(64, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

)

)

)

(5): Conv(

(conv): Conv2d(128, 256, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

(bn): BatchNorm2d(256, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

(6): C3(

(cv1): Conv(

(conv): Conv2d(256, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

(cv2): Conv(

(conv): Conv2d(256, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

(cv3): Conv(

(conv): Conv2d(256, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(256, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

(m): Sequential(

(0): Bottleneck(

(cv1): Conv(

(conv): Conv2d(128, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

(cv2): Conv(

(conv): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

)

(1): Bottleneck(

(cv1): Conv(

(conv): Conv2d(128, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

(cv2): Conv(

(conv): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

)

(2): Bottleneck(

(cv1): Conv(

(conv): Conv2d(128, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

(cv2): Conv(

(conv): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

)

)

)

(7): Conv(

(conv): Conv2d(256, 512, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

(bn): BatchNorm2d(512, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

(8): C3(

(cv1): Conv(

(conv): Conv2d(512, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(256, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

(cv2): Conv(

(conv): Conv2d(512, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(256, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

(cv3): Conv(

(conv): Conv2d(512, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(512, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

(m): Sequential(

(0): Bottleneck(

(cv1): Conv(

(conv): Conv2d(256, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(256, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

(cv2): Conv(

(conv): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(256, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

)

)

)

(9): SPPF(

(cv1): Conv(

(conv): Conv2d(512, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(256, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

(cv2): Conv(

(conv): Conv2d(1024, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(512, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

(m): MaxPool2d(kernel_size=5, stride=1, padding=2, dilation=1, ceil_mode=False)

)

(10): Conv(

(conv): Conv2d(512, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(256, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

(11): Upsample(scale_factor=2.0, mode=nearest)

(12): Concat()

(13): C3(

(cv1): Conv(

(conv): Conv2d(512, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

(cv2): Conv(

(conv): Conv2d(512, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

(cv3): Conv(

(conv): Conv2d(256, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(256, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

(m): Sequential(

(0): Bottleneck(

(cv1): Conv(

(conv): Conv2d(128, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

(cv2): Conv(

(conv): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

)

)

)

(14): Conv(

(conv): Conv2d(256, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

(15): Upsample(scale_factor=2.0, mode=nearest)

(16): Concat()

(17): C3(

(cv1): Conv(

(conv): Conv2d(256, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(64, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

(cv2): Conv(

(conv): Conv2d(256, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(64, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

(cv3): Conv(

(conv): Conv2d(128, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

(m): Sequential(

(0): Bottleneck(

(cv1): Conv(

(conv): Conv2d(64, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(64, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

(cv2): Conv(

(conv): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(64, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

)

)

)

(18): Conv(

(conv): Conv2d(128, 128, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

(19): Concat()

(20): C3(

(cv1): Conv(

(conv): Conv2d(256, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

(cv2): Conv(

(conv): Conv2d(256, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

(cv3): Conv(

(conv): Conv2d(256, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(256, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

(m): Sequential(

(0): Bottleneck(

(cv1): Conv(

(conv): Conv2d(128, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

(cv2): Conv(

(conv): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

)

)

)

(21): Conv(

(conv): Conv2d(256, 256, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

(bn): BatchNorm2d(256, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

(22): Concat()

(23): C3(

(cv1): Conv(

(conv): Conv2d(512, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(256, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

(cv2): Conv(

(conv): Conv2d(512, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(256, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

(cv3): Conv(

(conv): Conv2d(512, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(512, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

(m): Sequential(

(0): Bottleneck(

(cv1): Conv(

(conv): Conv2d(256, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(256, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

(cv2): Conv(

(conv): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(256, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

)

)

)

(24): Detect(

(m): ModuleList(

(0): Conv2d(128, 255, kernel_size=(1, 1), stride=(1, 1))

(1): Conv2d(256, 255, kernel_size=(1, 1), stride=(1, 1))

(2): Conv2d(512, 255, kernel_size=(1, 1), stride=(1, 1))

)

)

)

)六、一些需要了解的细节--模型的输出,即预测的都是些什么

1、grid 和 anchor_grid

首先来看下 grid和anchor_grid, 它们都在 Detect head 中出现。yolov1的论文中有这样一句话

yolo 它说将输入img分成 S X S 个格子来进行检测。其实这 S X S 格子就是model预测输出的特征图H和W 两通道上返回原img的区域(这很容易理解,不懂的可以去看一下关于 感受野 的知识)。而yolov5中采用了anchor的机制,这个anchor也是在每个格子上建立的,映射为输出特征图上的每个点。所以这个grid是特征图H W 通道上网格坐标点,anchor_grid 是 anchor的长和宽。

如下图所示,左边为grid,右边两个为anchor_grid(一共3个anchor,下图只列了2个),此时的feature shape 为 Tensor(1,3,80,80,2),下图只显示了一个列的坐标点。至于-0.5 是代码中的位置补偿。

grid = torch.stack((xv, yv), 2).expand(shape) - 0.5 # add grid offset, i.e. y = 2.0 * x - 0.5

试验举例:grid[0] shape为Tensor (1,3,80,80,2), anchor_grid shape为Tensor (1,3,80,80,2)。

解释:第一通的1 为 batch;第二通道的 3 为 anchor 的数目;第三和第四通道 80,80 为特征图的H和W;第五通道 2 坐标信息,包括 x轴 和 y轴。

self.anchors如下所示

tensor([[[ 1.25000, 1.62500],

[ 2.00000, 3.75000],

[ 4.12500, 2.87500]],

[[ 1.87500, 3.81250],

[ 3.87500, 2.81250],

[ 3.68750, 7.43750]],

[[ 3.62500, 2.81250],

[ 4.87500, 6.18750],

[11.65625, 10.18750]]], device='cuda:0')2、Detect head 中的细节过程

yolov1 论文中说到

意思是 输出的向量shape为 ![]() 。

。

然后再来看实际代码,Detect head 的 输入有三个,它们的尺度不同,通道数不同。实验举例:输入的x如下

x {list:3} Tensor:(1,128,80,80), Tensor:(1,256,40,40), Tensor:(1,512,20,20)

for i in range(self.nl): # 不同尺度下分别进行

x[i] = self.m[i](x[i]) # 这部把通道数统一为255

bs, _, ny, nx = x[i].shape # 拿出shape的值

x[i] = x[i].view(bs, self.na, self.no, ny, nx).permute(0, 1, 3, 4, 2).contiguous() # 调整shape,也就是SxSx(B*5+C) 的形状得到输出变化举例(只第一轮的)

x {list:3} Tensor:(1,255,80,80), Tensor:(1,256,40,40), Tensor:(1,512,20,20)

x {list:3} Tensor:(1,3,80,80,85), Tensor:(1,256,40,40), Tensor:(1,512,20,20)

这里有两点需要注意: 一是为什么把通道数统一为255。因为yolov5的设置是 anchor 数量为3, 种类数为80,外加 x,y,w,h,c 这5个,正好为 255 = 3 X 85。

二是调整通道那步操作,把输出调整为(1,3,80,80,85),这里 第一通道为 batch; 第二通道为 anchor数量,公式中的B;第三和第四通道为 H W,公式中的S;第五通道为公式中的C+5。 所以,yolov5中的输出已经不再是yolov1中的形式了,而是

接下来

xy, wh, conf, mask = x[i].split((2, 2, self.nc + 1, self.no - self.nc - 5), 4)

xy = (xy.sigmoid() * 2 + self.grid[i]) * self.stride[i]

wh = (wh.sigmoid() * 2) ** 2 * self.anchor_grid[i]

y = torch.cat((xy, wh, conf.sigmoid(), mask), 4)其流程为

把x 按第五维拆分,也就是 C 所在的那维 ---> 锚框的逆运算求bbox ---> 置信度 conf 做sigmoid ---> 最后再按第五维拼接在一起

然后

z.append(y.view(bs, self.na * nx * ny, self.no)) # 举例:Tensor(1,19200,85)整成了

, integrate 为其它通道整合在一起

最终 的输出为

(torch.cat(z, 1), x)按第二通道也就是不同尺度拼接在一起

如下所示

总结这里最重要的是 85代表的是C+5,它的含义是什么,它的内容是什么。C 类别, 5 xywhc,这里 c 的值预测的是IoU。 并且还需要注意下通道的顺序,85中 [:4] 也就是前5个通道是 C, [5:]后80个代表类别

举例如下所示,蓝色中的数字表示为该类别的概率,上面的数字0 等表示类别的序号

3、nms 过程中的细节

x[:, 5:] *= x[:, 4:5] # conf = obj_conf * cls_conf对应论文中,第5通道的置信度分数预测的就是IoU。

conf, j = x[:, 5:mi].max(1, keepdim=True)这步操作,关于torch.max 的作用可以参考python torch.max() 用法记录。注意现在的x里的内容是什么,现在的x是二维的Tensor,它除去了batch通道,进行了

xc = prediction[..., 4] > conf_thres 和 x[:, 5:] *= x[:, 4:5]

操作,举例 x的shape 为Tensor(52,85),其中 第一维 52 表示通过分类阈值筛选后所剩的 bbox 的数量,第二维 85 不用多说,为 80+C。总结来说就是 一共有52个bbox,其中每个bbox 包含的信息量为 85。

上述的 torch.max 是 根据第2维通道的后80个通道进行的,其功能是 确定每个框中 置信度 最高的的分数,以及该对应类别的序号是几。实际举例:

conf:(52,1), j:(52,1)

结合上图,conf中的值就是 (1.33,2.44,3.55,8.88,。。。),j 中的值为(0,0,5,4,。。。),只不过这里shape是 (52,1)应该是列的形式,本质意思是这样。

接下来进行

x = torch.cat((box, conf, j.float(), mask), 1)[conf.view(-1) > conf_thres] # 举例 Tensor:(51,6)这里有两点需要说明:一是 根据置信度阈值又筛选了一遍;二是 x 的shape此时第二维 为 6,从代码中也可以看出,6分别包括的是 bbox的坐标信息(x,y,w,h)注意这里预测的是偏移量,这里已经是中心形式,然后是conf 置信度分数, 最后是 j,为类别序号,也就是类别信息。

接下来

if not n: # no boxes 无可选目标则跳到下一个循环,这里的循环是按batch 来的

continue

elif n > max_nms: # excess boxes

x = x[x[:, 4].argsort(descending=True)[:max_nms]] # sort by confidence

else:

x = x[x[:, 4].argsort(descending=True)] # sort by confidence这里首先 是 如果 筛选留下的bbox数目大于 设置的最大nms bbox 数目,则进行从大到小排序,然后选前 max_nms个。如果小于,则按置信度分数从大到小进行排列,torch.argsort见这里,他返回的是下标。

接下来

c = x[:, 5:6] * (0 if agnostic else max_wh)

boxes, scores = x[:, :4] + c, x[:, 4]这里需要说明一下NMS 的技术改善,把所有boxes放在一起做NMS,没有考虑类别。即某一类的boxes不应该因为它与另一类最大得分boxes的iou值超过阈值而被筛掉。对于多类别NMS来说,它的思想比较简单:每个类别内部做NMS就可以了。实现方法:把每个box的坐标添加一个偏移量,偏移量由类别索引来决定。所以进行了上述的处理。注意这里借用了中间变量 boxes,scores来进行nms,并没有改变 x 中的关于bbox的信息。

最后 通过 torchvision.ops.nms 来实现nms过程,得到的 i 举例如下

Tensor:(5,) ([0,2,4,5,3])

所以,最终经过NMS处理后 返回的 output 如下举例

![]()

包含矩形框的数量, 以及bbox的坐标信息,置信度分数和 类别信息。

output = [torch.zeros((0, 6 + nm), device=prediction.device)] * bs # 定义

output[xi] = x[i] # 输出时的形式前后呼应。

4、预测的bbox怎么映射回原图片上的

当执行上述的nms过程后,接下来就需要把bbox画出来。首先将bbox返回原图,

detect.py --- 152

det[:, :4] = scale_boxes(im.shape[2:], det[:, :4], im0.shape).round()进入到 scale_boxes函数中,注意此时传入的参数,im为裁剪后输入到model的图片,det前四个通道为bbox信息,im0为原img的copy。

def scale_boxes(img1_shape, boxes, img0_shape, ratio_pad=None):

# Rescale boxes (xyxy) from img1_shape to img0_shape

if ratio_pad is None: # calculate from img0_shape

gain = min(img1_shape[0] / img0_shape[0], img1_shape[1] / img0_shape[1]) # gain = old / new

pad = (img1_shape[1] - img0_shape[1] * gain) / 2, (img1_shape[0] - img0_shape[0] * gain) / 2 # wh padding

else:

gain = ratio_pad[0][0]

pad = ratio_pad[1]

boxes[:, [0, 2]] -= pad[0] # x padding

boxes[:, [1, 3]] -= pad[1] # y padding

boxes[:, :4] /= gain # 返回原图

clip_boxes(boxes, img0_shape) # 防止bbox 超过img边框

return boxes这里首先计算了一下 裁剪img的shape / 原 img的shape 的比例 gain,然后又用 裁剪img的shape 减去 原 img的shape 乘上比例 得到 pad,感觉和直接减一样,其实就是一样(pad可以为0),只不过怕比例不是整除,出现一点差别,所以这个pad就是弥补这个差别的。然后通过bbox 减去pad 除以 比例 gain 就返回原图了。

def clip_boxes(boxes, shape):

# Clip boxes (xyxy) to image shape (height, width)

if isinstance(boxes, torch.Tensor): # faster individually

boxes[:, 0].clamp_(0, shape[1]) # x1

boxes[:, 1].clamp_(0, shape[0]) # y1

boxes[:, 2].clamp_(0, shape[1]) # x2

boxes[:, 3].clamp_(0, shape[0]) # y2

else: # np.array (faster grouped)

boxes[:, [0, 2]] = boxes[:, [0, 2]].clip(0, shape[1]) # x1, x2

boxes[:, [1, 3]] = boxes[:, [1, 3]].clip(0, shape[0]) # y1, y2这个clip_boxes函数是为了防止bbox 超出 img 的边框,把超出的部分去掉,并且把范围压缩在 img的范围内。

上述过程就是实现了bbox 从 裁剪img 的尺度 映射回 原 img的尺度下。

5、在原图上画bbox 和 标记

这个是一个一个矩形框取画的。

for *xyxy, conf, cls in reversed(det):循环执行这个。主要依据下面这个类

detect.py --- 149

annotator = Annotator(im0, line_width=line_thickness, example=str(names))这个类里的方法 box_label 来实现。最终 im0 就是画完 bbox 和 标记的图片。

detect.py --- 175

im0 = annotator.result()6、如果想保留视频和webcam 的检测结果,需要做的设定

主要看下面代码段(detect.py --- 188), 其中需要设定的有

vid_path,vid_writer,vid_cap

else: # 'video' or 'stream'

if vid_path[i] != save_path: # new video

vid_path[i] = save_path

if isinstance(vid_writer[i], cv2.VideoWriter):

vid_writer[i].release() # release previous video writer

if vid_cap: # video

fps = vid_cap.get(cv2.CAP_PROP_FPS)

w = int(vid_cap.get(cv2.CAP_PROP_FRAME_WIDTH))

h = int(vid_cap.get(cv2.CAP_PROP_FRAME_HEIGHT))

else: # stream

fps, w, h = 30, im0.shape[1], im0.shape[0]

save_path = str(Path(save_path).with_suffix('.mp4')) # force *.mp4 suffix on results videos

vid_writer[i] = cv2.VideoWriter(save_path, cv2.VideoWriter_fourcc(*'mp4v'), fps, (w, h))

vid_writer[i].write(im0)