Python package installation problems

I. [python] Installing TensorFlow: fixing "Cannot uninstall 'wrapt'. It is a distutils installed project"

Install from the command prompt (cmd): pip install tensorflow

1. Error encountered:

ERROR: Cannot uninstall 'wrapt'. It is a distutils installed project and thus we cannot accurately determine which files belong to it which would lead to only a partial uninstall.

Solution: run pip install -U --ignore-installed wrapt enum34 simplejson netaddr

Reference: https://www.cnblogs.com/xiaowei2092/p/11025155.html
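Why the fix works: pip removes an old version by reading the list of files recorded at install time, and a distutils-style install (typically a single .egg-info file) has no such list; --ignore-installed skips the uninstall and simply writes the new version over the old one. As a rough diagnostic, the sketch below uses the standard importlib.metadata module (Python 3.8+, or the importlib-metadata backport on 3.7) to report whether an installed distribution carries a file list. Treating `files is None` as "pip cannot uninstall this" is a heuristic assumption, and has_file_record is a hypothetical helper name.

```python
from typing import Optional

try:
    from importlib import metadata  # Python 3.8+
except ImportError:  # Python 3.7: needs `pip install importlib-metadata`
    import importlib_metadata as metadata


def has_file_record(dist_name: str) -> Optional[bool]:
    """Return True if the installed distribution lists its files,
    False if it has no file list (typical of a distutils .egg-info file),
    or None if the distribution is not installed."""
    try:
        dist = metadata.distribution(dist_name)
    except metadata.PackageNotFoundError:
        return None
    # `files` is None when no RECORD or other installed-file list exists,
    # roughly the situation pip reports as a "distutils installed project".
    return dist.files is not None


if __name__ == "__main__":
    for name in ("wrapt", "enum34", "simplejson", "netaddr"):
        print(name, has_file_record(name))
```

A result of False for wrapt would be consistent with the error above; None means the package is not installed in the current interpreter.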

2. Error encountered:

ERROR: tensorboard 1.14.0 has requirement setuptools>=41.0.0, but you'll have setuptools 39.1.0 which is incompatible.

Cause: the installed setuptools (39.1.0) is older than the 41.0.0 that tensorboard 1.14.0 requires.

Solution: upgrade setuptools by running pip install --upgrade setuptools
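To confirm the upgrade took effect, the installed setuptools version can be compared with the >=41.0.0 requirement from the tensorboard message. A minimal sketch, assuming plain numeric release strings such as "39.1.0"; pre-release and post-release suffixes are simply cut off, so this is cruder than the official version rules, and release_tuple and version_at_least are hypothetical names.

```python
import re
from typing import Tuple

try:
    from importlib import metadata  # Python 3.8+
except ImportError:  # Python 3.7: needs `pip install importlib-metadata`
    import importlib_metadata as metadata


def release_tuple(version: str) -> Tuple[int, ...]:
    """Turn '39.1.0' into (39, 1, 0); stop at the first non-numeric part."""
    parts = []
    for piece in version.split("."):
        match = re.match(r"\d+", piece)
        if not match:
            break
        parts.append(int(match.group()))
        if match.group() != piece:  # e.g. '0rc1': keep 0, drop the suffix
            break
    return tuple(parts)


def version_at_least(installed: str, required: str) -> bool:
    """Compare numeric release parts, padding the shorter one with zeros."""
    a, b = release_tuple(installed), release_tuple(required)
    width = max(len(a), len(b))
    a += (0,) * (width - len(a))
    b += (0,) * (width - len(b))
    return a >= b


if __name__ == "__main__":
    try:
        current = metadata.version("setuptools")
    except metadata.PackageNotFoundError:
        print("setuptools is not installed")
    else:
        print(current, "OK" if version_at_least(current, "41.0.0") else "too old")
```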

II. Importing tensorflow fails with "ImportError: DLL load failed: The specified module could not be found." (Python 3.7)

Solution:

Go to https://www.tensorflow.org/install/errors

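Version 2.1.0 of the TensorFlow package needs the file msvcp140_1.dll. On that page, click the Microsoft VC++ downloads link, then download and install the Visual C++ redistributable; after that TensorFlow imports successfully.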

Download page: https://support.microsoft.com/zh-cn/help/2977003/the-latest-supported-visual-c-downloads

Source: https://blog.csdn.net/sereasuesue/article/details/105327611

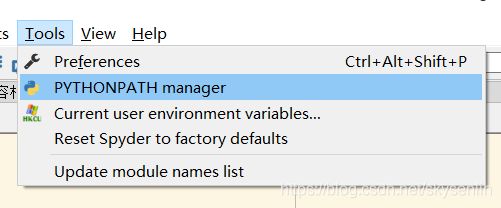
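After installing the Visual C++ redistributable, one quick way to check whether msvcp140_1.dll is visible is ctypes.util.find_library, which on Windows looks through the directories on PATH (System32 is normally among them). A minimal sketch; find_msvcp140_1 is a hypothetical helper, and a None result on Windows only means the DLL was not found on PATH, not necessarily that it is absent from the system.

```python
import sys
from ctypes.util import find_library
from typing import Optional


def find_msvcp140_1() -> Optional[str]:
    """Return the path of msvcp140_1.dll if it is found on PATH (Windows only)."""
    if sys.platform != "win32":
        # The DLL belongs to the Windows Visual C++ runtime; nothing to find elsewhere.
        return None
    # On Windows, find_library appends ".dll" and walks the PATH directories.
    return find_library("msvcp140_1")


if __name__ == "__main__":
    path = find_msvcp140_1()
    print(path or "msvcp140_1.dll not found on PATH; install the VC++ redistributable")
```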

III. Spyder auto-completion

Reference link: click here

IV. Developing Spark programs in Python

1. findspark error

import findspark

findspark.init()

Traceback (most recent call last):

File "", line 2, in

findspark.init()

File "D:\software\Anaconda\lib\site-packages\findspark.py", line 129, in init

spark_home = find()

File "D:\software\Anaconda\lib\site-packages\findspark.py", line 36, in find

"Couldn't find Spark, make sure SPARK_HOME env is set"

ValueError: Couldn't find Spark, make sure SPARK_HOME env is set or Spark is in an expected location (e.g. from homebrew installation).

1) Set up the environment

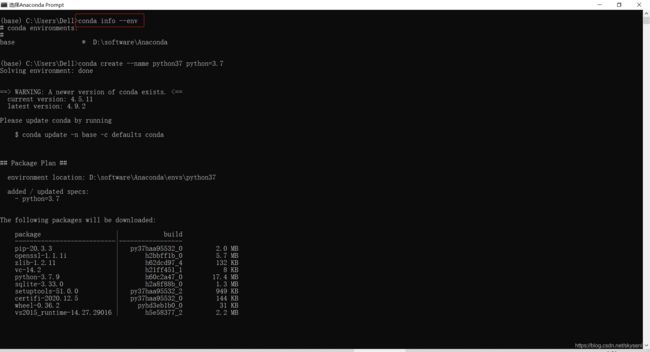

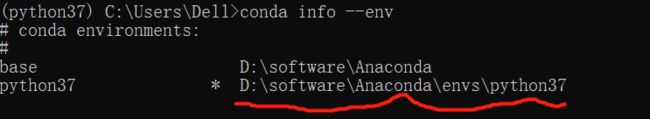

Create a Python 3.7 environment

Commands:

conda info --envs

conda create --name python37 python=3.7

conda activate python37

After the environment has been created:

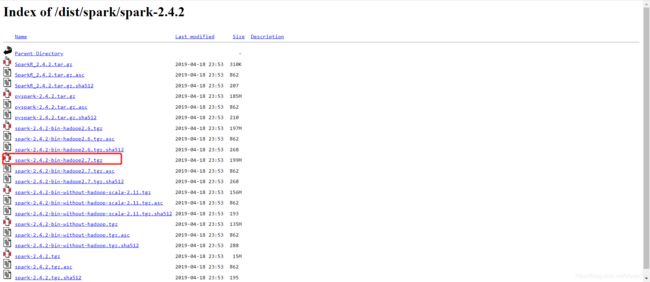
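With the environment activated, a short check from inside Python can confirm which interpreter is actually running. A minimal sketch; it reads CONDA_DEFAULT_ENV, which conda activate sets to the active environment's name, and describe_interpreter is a hypothetical helper name.

```python
import os
import sys
from typing import Dict, Optional


def describe_interpreter() -> Dict[str, Optional[str]]:
    """Collect the details needed to verify the active conda environment."""
    return {
        "executable": sys.executable,  # full path of the running interpreter
        "version": "{}.{}.{}".format(*sys.version_info[:3]),
        "conda_env": os.environ.get("CONDA_DEFAULT_ENV"),  # None outside conda
    }


if __name__ == "__main__":
    for key, value in describe_interpreter().items():
        print(f"{key}: {value}")
    if sys.version_info[:2] != (3, 7):
        print("warning: this is not a Python 3.7 interpreter")
```

The printed executable path is also the file to pick with PyDev's "Browse for Python/pypy exe" button later in this section.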

2) Install Spark

Download from the official Apache archive: https://archive.apache.org/dist/spark/spark-2.4.2/

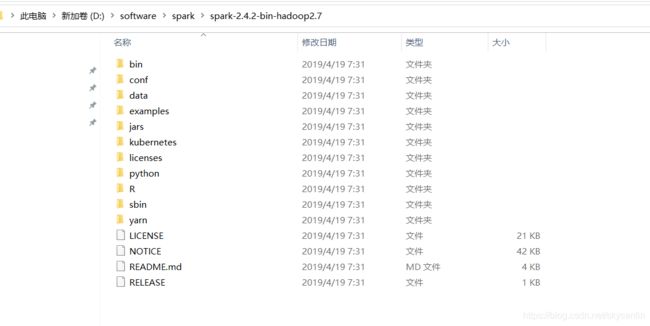

Extract it to a local directory, here D:\software\spark\spark-2.4.2-bin-hadoop2.7

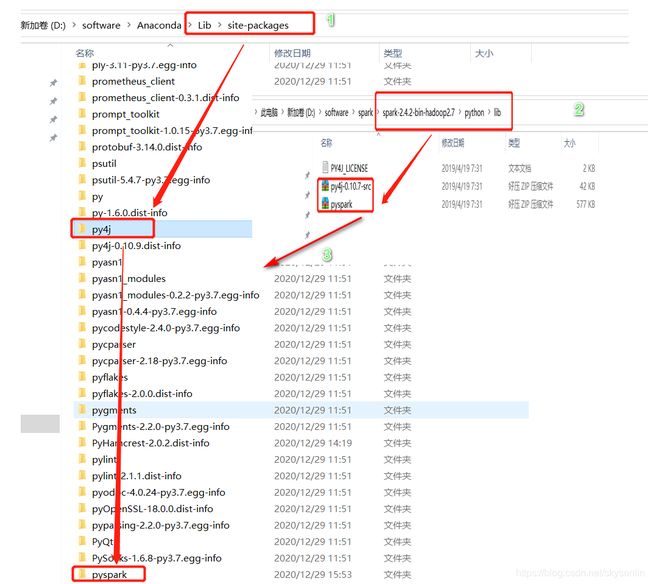

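Delete the original pyspark and py4j packages from D:/software/Anaconda/lib/site-packages, then copy the two archives (pyspark and py4j) from D:/software/spark/spark-2.4.2-bin-hadoop2.7/python/lib into D:/software/Anaconda/lib/site-packages and extract them there (remember to delete the archives after extracting).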

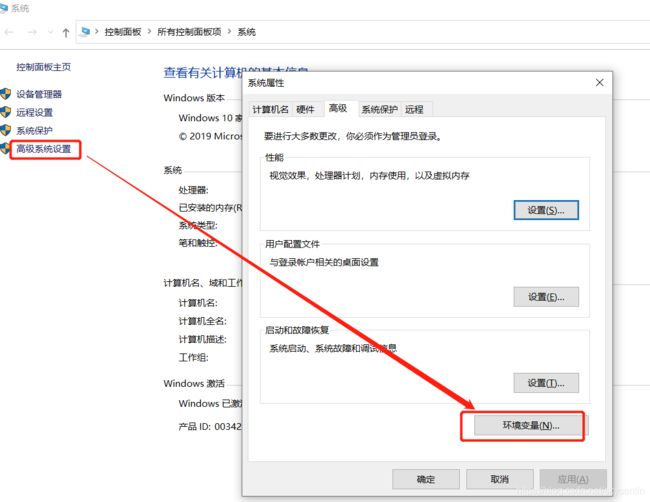

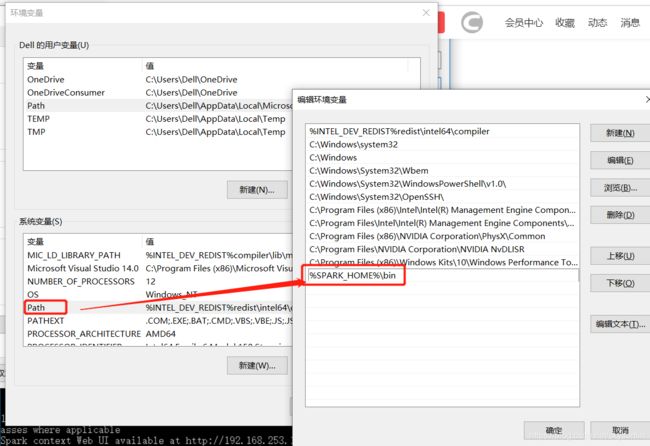
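The copy-and-extract step can also be scripted with the standard zipfile module. A minimal sketch, assuming both archives sit directly in Spark's python/lib folder (for Spark 2.4.x these are typically pyspark.zip and a py4j-*-src.zip, each with its package folder at the top level); it extracts straight from there into site-packages, so there are no copied archives to delete afterwards. extract_spark_python_libs is a hypothetical name, the paths in the __main__ block are the ones used in this section, and removing the old pyspark and py4j folders first is still left to you.

```python
import zipfile
from pathlib import Path
from typing import List


def extract_spark_python_libs(lib_dir: str, site_packages: str) -> List[str]:
    """Extract every .zip found in Spark's python/lib into site-packages.

    Returns the names of the archives that were extracted.
    """
    extracted = []
    for archive in sorted(Path(lib_dir).glob("*.zip")):
        with zipfile.ZipFile(archive) as zf:
            zf.extractall(site_packages)  # pyspark/ and py4j/ land here
        extracted.append(archive.name)
    return extracted


if __name__ == "__main__":
    # Paths from this section; adjust to your own installation.
    spark_lib = r"D:\software\spark\spark-2.4.2-bin-hadoop2.7\python\lib"
    target = r"D:\software\Anaconda\lib\site-packages"
    if Path(spark_lib).is_dir():
        print(extract_spark_python_libs(spark_lib, target))
```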

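3) Configure the environment variable SPARK_HOME

Open the Environment Variables window:

Right-click This PC -> Properties -> Advanced system settings -> Environment Variables..., then create a variable named SPARK_HOME whose value is the Spark directory extracted above (D:\software\spark\spark-2.4.2-bin-hadoop2.7).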
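Before calling findspark.init(), it can help to check that SPARK_HOME really points at an extracted Spark distribution. A minimal sketch: the layout test (a python/lib folder inside SPARK_HOME) is an assumption based on the Spark download above, and valid_spark_home and ensure_spark_home are hypothetical helpers. Setting os.environ only affects the current Python process; the permanent setting still comes from the Environment Variables dialog.

```python
import os
from pathlib import Path
from typing import Optional


def valid_spark_home(path: Optional[str]) -> bool:
    """True if `path` looks like an extracted Spark distribution."""
    return bool(path) and (Path(path) / "python" / "lib").is_dir()


def ensure_spark_home(default: str) -> Optional[str]:
    """Keep SPARK_HOME if it is valid, else fall back to `default`
    for this process only. Returns the path in use, or None."""
    current = os.environ.get("SPARK_HOME")
    if valid_spark_home(current):
        return current
    if valid_spark_home(default):
        os.environ["SPARK_HOME"] = default  # affects this process only
        return default
    return None


if __name__ == "__main__":
    home = ensure_spark_home(r"D:\software\spark\spark-2.4.2-bin-hadoop2.7")
    if home is None:
        print("SPARK_HOME is not set to a Spark directory")
    else:
        try:
            import findspark  # third-party: pip install findspark
            findspark.init(home)
        except ImportError:
            print("findspark is not installed; SPARK_HOME =", home)
```

findspark.init also accepts the Spark directory as its first argument, which is what the __main__ block passes.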

4) Install py4j and pyspark

pip install py4j

pip install pyspark

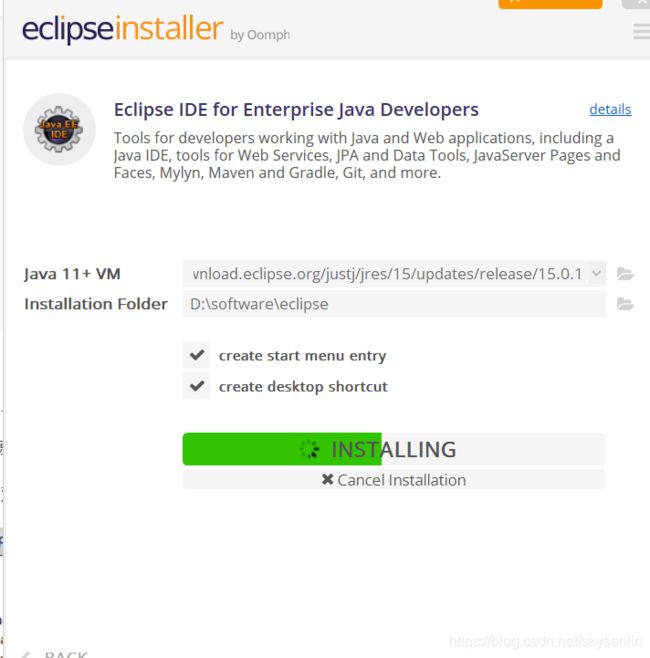
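To see which versions pip actually installed into the active interpreter, the distribution metadata can be queried. A minimal sketch (Python 3.8+, or the importlib-metadata backport on 3.7); installed_versions is a hypothetical helper. As a general precaution, the pip pyspark version should match the Spark distribution (2.4.2 here), since the Python side and the JVM side of mismatched releases may not work together.

```python
from typing import Dict, Optional

try:
    from importlib import metadata  # Python 3.8+
except ImportError:  # Python 3.7: needs `pip install importlib-metadata`
    import importlib_metadata as metadata


def installed_versions(*names: str) -> Dict[str, Optional[str]]:
    """Map each distribution name to its installed version, or None."""
    versions = {}
    for name in names:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


if __name__ == "__main__":
    print(installed_versions("pyspark", "py4j"))
```

Packages copied in by hand, as in step 2), carry no installation metadata and show up as None here.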

5) Set up Eclipse

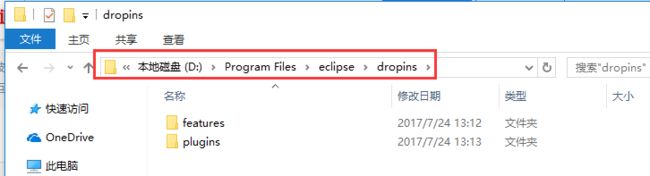

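1) To develop Python programs in Eclipse you need the PyDev plugin, which requires Eclipse 4.7 or later. Download the plugin package PyDev.zip (http://www.pydev.org/download.html), extract it, copy the contents into Eclipse's dropins folder, and restart Eclipse.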

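More precisely, copy the features and plugins folders from the downloaded PyDev x.y.z.zip (where x.y.z is the version number, e.g. PyDev 5.8.0.zip) into the dropins folder of the Eclipse installation directory.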

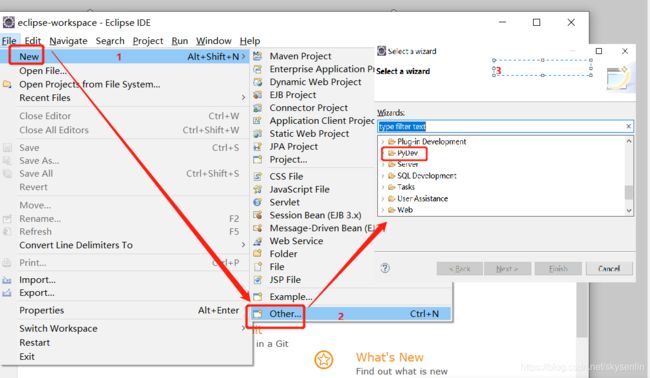

Start Eclipse; PyDev support now appears under Other when creating a new project:

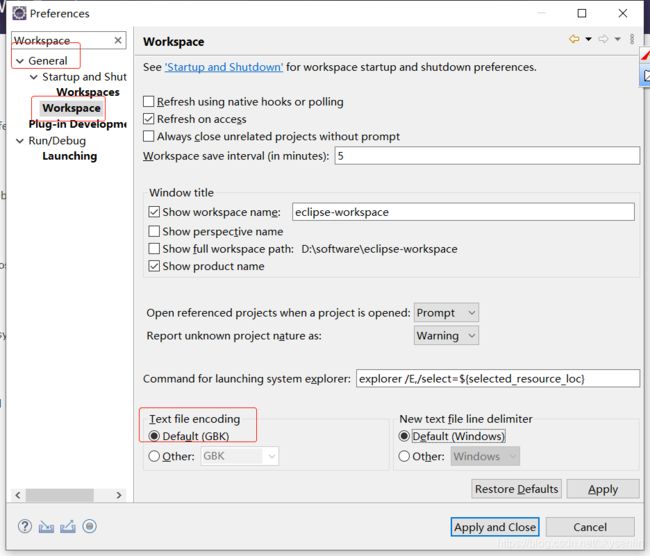

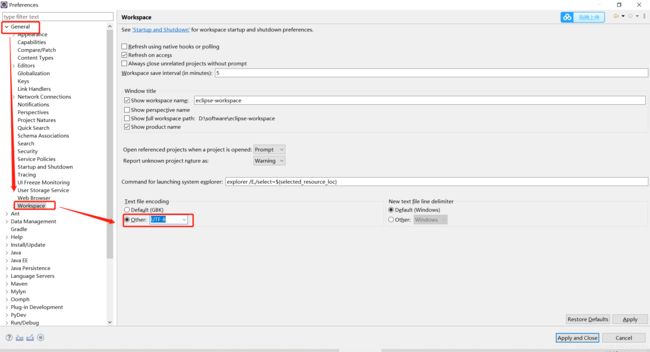

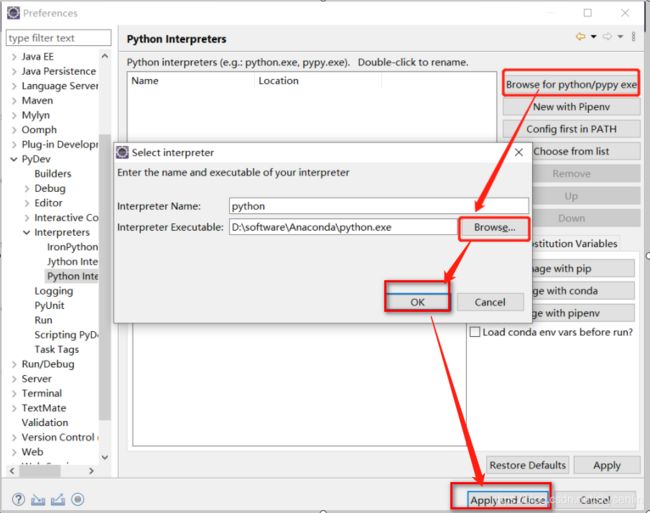

After the PyDev plugin is installed, you still need to configure the Python interpreter:

- Open Eclipse and choose Window -> Preferences to open the settings dialog;

- Select PyDev -> Interpreters -> Python Interpreter. Either click "Config first in PATH" to pick up the Python interpreter found on the PATH, or click "Browse for Python/pypy exe" to locate it manually. When done, click "Apply and Close" (as shown in the figure).

From the Eclipse IDE menu, choose Run -> Run Configurations...

Under Run As -> Run Configuration -> Environment, set SPARK_CLASSPATH to specify the jar files the program depends on.

2. SparkSession.builder.appName("test pyspark").getOrCreate()
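A minimal script around that call, as it might be run from Eclipse. Adding .master("local[*]") to run Spark locally on all cores is an assumption, not part of the original line, and get_session is a hypothetical helper that returns None when pyspark cannot be imported. getOrCreate() starts a JVM, so a Java installation is also required.

```python
import importlib.util


def get_session(app_name: str = "test pyspark"):
    """Create (or reuse) a local SparkSession; None if pyspark is missing."""
    if importlib.util.find_spec("pyspark") is None:
        return None
    from pyspark.sql import SparkSession

    return (
        SparkSession.builder.appName(app_name)
        .master("local[*]")  # assumption: run locally using all cores
        .getOrCreate()
    )


if __name__ == "__main__":
    spark = get_session()
    if spark is None:
        print("pyspark is not importable from this interpreter")
    else:
        df = spark.createDataFrame([(1, "a"), (2, "b")], ["id", "letter"])
        df.show()
        spark.stop()
```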

References:

Setting up a Python development environment for Spark

Spark's Python programming environment

V. pip and .whl files

Mirror pip indexes in China:

Alibaba Cloud http://mirrors.aliyun.com/pypi/simple/

University of Science and Technology of China https://pypi.mirrors.ustc.edu.cn/simple/

Douban http://pypi.douban.com/simple/

Tsinghua University https://pypi.tuna.tsinghua.edu.cn/simple/

————————————————

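Copyright notice: this is an original article by CSDN blogger "lsf_007", licensed under CC 4.0 BY-SA. When reposting, include a link to the original source and this notice.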

Original article: https://blog.csdn.net/lsf_007/article/details/87931823
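pip can use one of these mirrors for a single command via -i (--index-url). For the plain-http mirrors (Alibaba Cloud, Douban), pip also needs --trusted-host, otherwise it ignores the index as insecure. A minimal sketch that builds such a command; pip_install_cmd is a hypothetical helper, and running the result through sys.executable -m pip installs into the interpreter that runs the script.

```python
import sys
from typing import List
from urllib.parse import urlparse


def pip_install_cmd(package: str, index_url: str) -> List[str]:
    """Build a `python -m pip install` command that uses a mirror index."""
    cmd = [sys.executable, "-m", "pip", "install", "-i", index_url]
    parsed = urlparse(index_url)
    if parsed.scheme == "http":
        # pip ignores non-HTTPS indexes unless the host is explicitly trusted.
        cmd += ["--trusted-host", parsed.hostname]
    cmd.append(package)
    return cmd


if __name__ == "__main__":
    cmd = pip_install_cmd("tensorflow", "https://pypi.tuna.tsinghua.edu.cn/simple/")
    print(" ".join(cmd))
    # To actually install, pass `cmd` to subprocess.check_call(cmd).
```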

To install a wheel saved in E:\whl, switch to that drive and folder in cmd, then install the file:

e:

cd E:\whl

pip install PyHamcrest-2.0.2-py3-none-any.whl
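The wheel filename itself describes compatibility: per the wheel naming convention (PEP 427) it reads {name}-{version}(-{build tag})-{python tag}-{abi tag}-{platform tag}.whl, so py3-none-any means any Python 3, no ABI requirement, any platform. A minimal parser sketch; parse_wheel_filename is a hypothetical helper that does not validate the individual tags, and it rejects names without the .whl extension.

```python
from typing import Dict


def parse_wheel_filename(filename: str) -> Dict[str, str]:
    """Split a wheel filename into its PEP 427 components."""
    if not filename.endswith(".whl"):
        raise ValueError(f"not a wheel file: {filename}")
    parts = filename[: -len(".whl")].split("-")
    if len(parts) not in (5, 6):
        raise ValueError(f"unexpected wheel filename: {filename}")
    name, version = parts[0], parts[1]
    build = parts[2] if len(parts) == 6 else ""  # optional build tag
    python_tag, abi_tag, platform_tag = parts[-3:]
    return {
        "name": name,
        "version": version,
        "build": build,
        "python": python_tag,
        "abi": abi_tag,
        "platform": platform_tag,
    }


if __name__ == "__main__":
    print(parse_wheel_filename("PyHamcrest-2.0.2-py3-none-any.whl"))
```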