语音分类入门案例: 英文数字音频分类

语音分类入门案例: 英文数字音频分类

本项目是一个全流程的语音分类项目,内容简单,适合想要涉猎音频分类的小白学习。

推荐将本项目Fork成为自己的项目并运行,以获得更好的学习体验!! 项目地址:语音分类入门:全流程英文数字音频识别

1. 解压数据集

我们使用的数据集是FSDD(free-spoken-digit-dataset),

FSDD是一个简单的音频/语音数据集,由 8kHz 文件中的语音数字记录组成。内含由6位音频录制人员录制的3000条数字(0-9)的英语发音音频数据(每人50条)。

!unzip data/data176422/FSDD.zip

2.配置环境依赖

- 需要安装python_speech_features库,这是一个强大的音频处理库,我们进行持久化安装

!pip install python_speech_features -t /home/aistudio/external-libraries

import sys

sys.path.append('/home/aistudio/external-libraries') #将持久化安装的库加入环境中

import paddle

import os

from tqdm import tqdm

import random

from glob import glob

import numpy as np

import matplotlib.pyplot as plt

from functools import partial

from IPython import display

import soundfile

from python_speech_features import mfcc, delta # 导入音频特征提取工具包

import scipy.io.wavfile as wav

3.数据加载与处理

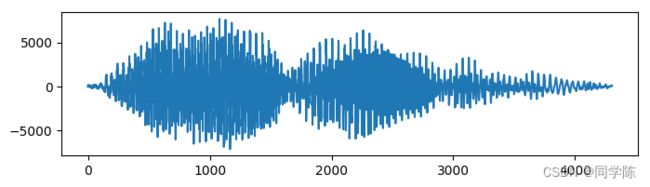

3.1 音频数据展示,绘制波形

example_path='FSDD/0_george_14.wav'

# 读取音频

# fs--采样率 signal--音频信号

fs, signal = wav.read(example_path)

print('这段音频的采样率为:%d' % fs)

print('音频信号:', signal)

print('音频信号形状:', signal.shape)

# 绘制波形

import matplotlib.pyplot as plt

plt.figure(figsize=(8,2))

x = [_ for _ in range(len(signal))]

plt.plot(x, signal)

plt.show()

这段音频的采样率为:8000

音频信号: [ 12 39 179 ... 83 68 114]

音频信号形状: (4304,)

3.2 划分数据集

- 获取音频文件名称列表

- 打乱音频文件名称列表

- 训练集:验证集:测试集=0.9:0.05:0.05

#获取音频文件名称列表

data_path='FSDD'

wavs = glob("{}/*.wav".format(data_path), recursive=True)

print(type(wavs),wavs[0])

# 打乱音频文件名称列表

random.shuffle(wavs)

wavs_len=len(wavs)

print("总数据数量:\t",wavs_len)

#训练集:验证集:测试集=0.9:0.05:0.05

# 训练集

train_wavs=wavs[:int(wavs_len*0.9)]

# 验证集

val_wavs=wavs[int(wavs_len*0.9):int(wavs_len*0.95)]

# 测试集

test_wavs=wavs[int(wavs_len*0.95):]

print("训练集数目:\t",len(train_wavs),"\n验证集数目:\t",len(val_wavs),"\n测试集数目:\t",len(test_wavs))

FSDD/1_george_13.wav

总数据数量: 3000

训练集数目: 2700

验证集数目: 150

测试集数目: 150

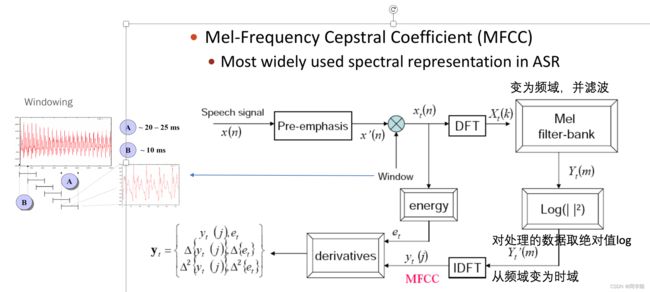

3.3 MFCC特征提取

MFCC(Mel Frequency Cepstral Coefficent)是一种在自动语音和说话人识别中广泛使用的特征。

关于MFCC比较详细的介绍文档可以参考:语音信号处理之(四)梅尔频率倒谱系数(MFCC)

# MFCC特征提取

def get_mfcc(data, fs):

# MFCC特征提取

wav_feature = mfcc(data, fs)

# 特征一阶差分

d_mfcc_feat = delta(wav_feature, 1)

# 特征二阶差分

d_mfcc_feat2 = delta(wav_feature, 2)

# 特征拼接

feature = np.concatenate([wav_feature.reshape(1, -1, 13), d_mfcc_feat.reshape(1, -1, 13), d_mfcc_feat2.reshape(1, -1, 13)], 0)

# 对数据进行截取或者填充

if feature.shape[1]>64:

feature = feature[:, :64, :]

else:

feature = np.pad(feature, ((0, 0), (0, 64-feature.shape[1]), (0, 0)), 'constant')

# 通道转置(HWC->CHW)

feature = feature.transpose((2, 0, 1))

return feature

# 读取音频

fs, signal = wav.read(example_path)

# 特征提取

feature = get_mfcc(signal, fs)

print('特征形状(CHW):', feature.shape,type(feature))

特征形状(CHW): (13, 3, 64)

3.4 音频转向量,标签提取

def preproess(wavs):

datalist=[]

lablelist=[]

for w in tqdm(wavs):

lablelist.append([int(w[5])])

fs, signal = wav.read(w)

f = get_mfcc(signal, fs)

datalist.append(f)

return np.array(datalist),np.array(lablelist)

train_data,train_lable=preproess(train_wavs)

val_data,val_lable=preproess(val_wavs)

test_data,test_lable=preproess(test_wavs)

#print(type(train_data),train_lable[0])

100%|██████████| 2700/2700 [01:34<00:00, 28.65it/s]

100%|██████████| 150/150 [00:05<00:00, 28.87it/s]

100%|██████████| 150/150 [00:05<00:00, 27.83it/s]

3.5 组装数据集

class MyDataset(paddle.io.Dataset):

"""

步骤一:继承paddle.io.Dataset类

"""

def __init__(self,audio,text):

"""

步骤二:实现构造函数,定义数据集大小

"""

super(MyDataset, self).__init__()

self.text = text

self.audio = audio

def __getitem__(self, index):

"""

步骤三:实现__getitem__方法,定义指定index时如何获取数据,并返回单条数据(训练数据,对应的标签)

"""

return self.audio[index],self.text[index]

def __len__(self):

"""

步骤四:实现__len__方法,返回数据集总数目

"""

return self.audio.shape[0]

def prepare_input(inputs):

src=np.array([inputsub[0] for inputsub in inputs]).astype('float32')

trg=np.array([inputsub[1] for inputsub in inputs])

return src,trg

train_dataset = MyDataset(train_data,train_lable)

train_loader = paddle.io.DataLoader(train_dataset, batch_size=64, shuffle=True,drop_last=True,collate_fn=partial(prepare_input))

val_dataset = MyDataset(val_data,val_lable)

val_loader = paddle.io.DataLoader(val_dataset, batch_size=64, shuffle=True,drop_last=True,collate_fn=partial(prepare_input))

for i,data in enumerate(train_loader):

for d in data:

print(d.shape)

break

[64, 13, 3, 64]

[64, 1]

4.组装网络

4.1 使用CNN网络

class audio_Net(paddle.nn.Layer):

def __init__(self):

super(audio_Net, self).__init__()

self.conv1 = paddle.nn.Conv2D(13, 16, 3, 1, 1)

self.conv2 = paddle.nn.Conv2D(16, 16, (3, 2), (1, 2), (1, 0))

self.conv3 = paddle.nn.Conv2D(16, 32, 3, 1, 1)

self.conv4 = paddle.nn.Conv2D(32, 32, (3, 2), (1, 2), (1, 0))

self.conv5 = paddle.nn.Conv2D(32, 64, 3, 1, 1)

self.conv6 = paddle.nn.Conv2D(64, 64, (3, 2), 2)

self.fc1 = paddle.nn.Linear(8*64, 128)

self.fc2 = paddle.nn.Linear(128, 10)

# 定义前向网络

def forward(self, inputs):

out = self.conv1(inputs)

out = self.conv2(out)

out = self.conv3(out)

out = self.conv4(out)

out = self.conv5(out)

out = self.conv6(out)

out = paddle.reshape(out, [-1, 8*64])

out = self.fc1(out)

out = self.fc2(out)

return out

4.2 查看网络结构

audio_network=audio_Net()

paddle.summary(audio_network,input_size=[(64,13,3,64)],dtypes=['float32'])

W1112 12:44:10.491739 269 gpu_resources.cc:61] Please NOTE: device: 0, GPU Compute Capability: 7.0, Driver API Version: 11.2, Runtime API Version: 11.2

W1112 12:44:10.494805 269 gpu_resources.cc:91] device: 0, cuDNN Version: 8.2.

---------------------------------------------------------------------------

Layer (type) Input Shape Output Shape Param #

===========================================================================

Conv2D-1 [[64, 13, 3, 64]] [64, 16, 3, 64] 1,888

Conv2D-2 [[64, 16, 3, 64]] [64, 16, 3, 32] 1,552

Conv2D-3 [[64, 16, 3, 32]] [64, 32, 3, 32] 4,640

Conv2D-4 [[64, 32, 3, 32]] [64, 32, 3, 16] 6,176

Conv2D-5 [[64, 32, 3, 16]] [64, 64, 3, 16] 18,496

Conv2D-6 [[64, 64, 3, 16]] [64, 64, 1, 8] 24,640

Linear-1 [[64, 512]] [64, 128] 65,664

Linear-2 [[64, 128]] [64, 10] 1,290

===========================================================================

Total params: 124,346

Trainable params: 124,346

| Non-trainable params: 0 |

|---|

| Input size (MB): 0.61 |

| Forward/backward pass size (MB): 6.32 |

| Params size (MB): 0.47 |

| Estimated Total Size (MB): 7.40 |

| --------------------------------------------------------------------------- |

{'total_params': 124346, 'trainable_params': 124346}

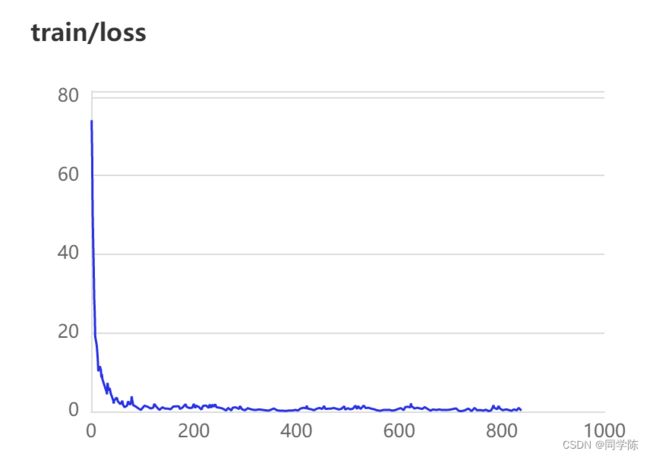

4.3 模型训练

epochs = 20

model=paddle.Model(audio_network)

model.prepare(optimizer=paddle.optimizer.Adam(learning_rate=0.001,parameters=model.parameters()),

loss=paddle.nn.CrossEntropyLoss(),

metrics=paddle.metric.Accuracy())

model.fit(train_data=train_loader,

epochs=epochs,

eval_data= val_loader,

verbose =2,

log_freq =100,

callbacks=[paddle.callbacks.VisualDL('./log')]

5.预测

bingo_num=0

for i in range(test_data.shape[0]):

x=paddle.to_tensor([test_data[i]],dtype='float32')

out=audio_network(x)

out=paddle.nn.functional.softmax(out,axis=-1)

out=paddle.argmax(out)

if i<3:

fs, signal = wav.read(test_wavs[i])

display.display(display.Audio(signal, rate=fs))

print("预测值:",out.numpy()[0],"\t 真实值:",test_lable[i][0])

if out.numpy()[0]==test_lable[i][0]:

bingo_num+=1

print("\n测试集准确率:",bingo_num/150)

预测值: 5 真实值: 5

预测值: 2 真实值: 2

预测值: 9 真实值: 9

测试集准确率: 0.9066666666666666

6.总结

本项从0搭建一个全流程的音频分类模型,重点展示音频文件的读取与处理过程,适合想涉猎音频的领域的小白学习。