(2) Micro/Macro/Weighted Average Metrics in sklearn.metrics.classification_report

Welcome to my personal blog, 知行空间.

Contents

- 1.classification_report

- 2.Calculation Process

- 3.Macro Average

- 4.Weighted Average

- 5.Micro Average

- References

1.classification_report

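sklearn.metrics.classification_report prints the standard evaluation metrics for classification predictions: precision, recall, and F1 score. Its inputs are the vector of true labels and the vector of predicted labels.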

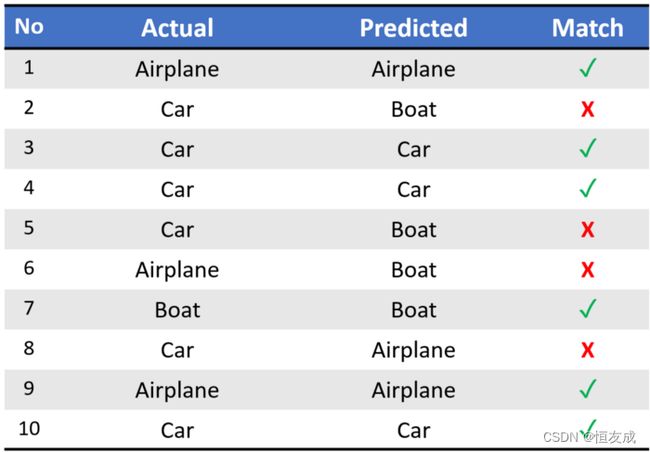

Let's borrow an example to walk through the calculation. The original example is at:

https://towardsdatascience.com/micro-macro-weighted-averages-of-f1-score-clearly-explained-b603420b292f

```python
from sklearn.metrics import classification_report
import numpy as np

names = ["Airplane", "Boat", "Car"]
names.sort()

# Map each class name to its integer label.
class_dict = {name: i for i, name in enumerate(names)}
# {'Airplane': 0, 'Boat': 1, 'Car': 2}

tru = np.array([0, 2, 2, 2, 2, 0, 1, 2, 0, 2])  # true labels
pre = np.array([0, 1, 2, 2, 1, 1, 1, 0, 0, 2])  # predicted labels

report = classification_report(tru, pre)
print(report)
```

The output is:

```
              precision    recall  f1-score   support

           0       0.67      0.67      0.67         3
           1       0.25      1.00      0.40         1
           2       1.00      0.50      0.67         6

    accuracy                           0.60        10
   macro avg       0.64      0.72      0.58        10
weighted avg       0.82      0.60      0.64        10
```
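If the numbers are needed in code rather than as printed text, `classification_report` also accepts `output_dict=True`. The following is a minimal sketch using the same `tru` and `pre` arrays as above; the variable name `car_row` is only illustrative.

```python
import numpy as np
from sklearn.metrics import classification_report

tru = np.array([0, 2, 2, 2, 2, 0, 1, 2, 0, 2])
pre = np.array([0, 1, 2, 2, 1, 1, 1, 0, 0, 2])

# output_dict=True returns a nested dict instead of a formatted string.
report = classification_report(tru, pre, output_dict=True)

# Per-class rows are keyed by the label converted to a string.
car_row = report["2"]
print(car_row["precision"], car_row["recall"], car_row["support"])

# The averaged rows use the same names as in the printed report.
print(report["macro avg"]["f1-score"])
print(report["weighted avg"]["f1-score"])

# "accuracy" is stored as a single float, not as a row.
print(report["accuracy"])
```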

2.Calculation Process

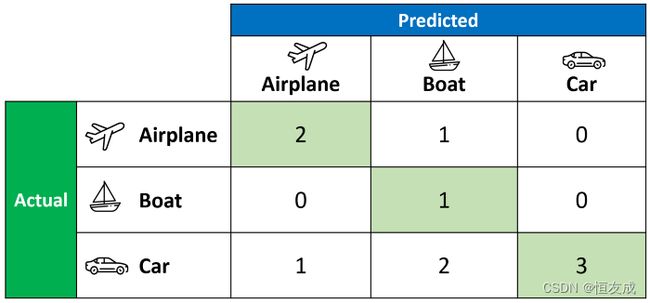

The confusion matrix for the predictions above is:

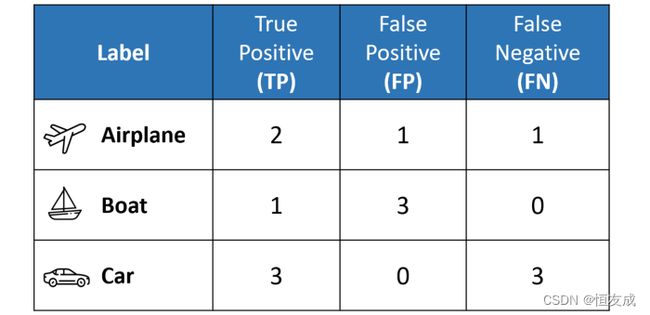
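The same matrix can be reproduced with `sklearn.metrics.confusion_matrix`. This is a small sketch on the example's arrays; in scikit-learn's convention, rows are true labels and columns are predicted labels.

```python
import numpy as np
from sklearn.metrics import confusion_matrix

tru = np.array([0, 2, 2, 2, 2, 0, 1, 2, 0, 2])
pre = np.array([0, 1, 2, 2, 1, 1, 1, 0, 0, 2])

# cm[i, j] = number of samples whose true label is i and predicted label is j.
cm = confusion_matrix(tru, pre)
print(cm)
```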

The TP (True Positive), FP (False Positive), and FN (False Negative) counts of each class are:

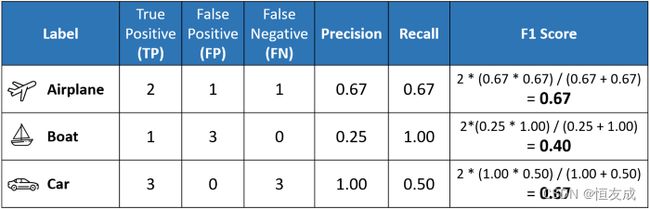
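These counts can be read directly off the confusion matrix: the diagonal holds the TPs, a column total minus the diagonal gives the FPs, and a row total minus the diagonal gives the FNs. A minimal sketch, assuming scikit-learn's row = true, column = predicted layout:

```python
import numpy as np
from sklearn.metrics import confusion_matrix

tru = np.array([0, 2, 2, 2, 2, 0, 1, 2, 0, 2])
pre = np.array([0, 1, 2, 2, 1, 1, 1, 0, 0, 2])

cm = confusion_matrix(tru, pre)

tp = np.diag(cm)            # correctly predicted samples of each class
fp = cm.sum(axis=0) - tp    # predicted as the class, but actually another class
fn = cm.sum(axis=1) - tp    # belong to the class, but predicted as another class

for label, (t, p, n) in enumerate(zip(tp, fp, fn)):
    print(f"class {label}: TP={t} FP={p} FN={n}")
```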

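From these counts, compute the Precision, Recall, and F1 Score of each class:

$$\text{Precision} = \frac{TP}{TP + FP}, \quad \text{Recall} = \frac{TP}{TP + FN}, \quad \text{F1} = \frac{2 \cdot \text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}}$$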
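As a rough check of the per-class values, the calculation can be sketched with NumPy. The TP/FP/FN arrays below are hard-coded from this example's predictions, and the sketch does not guard against division by zero, which would occur for a class that is never predicted or never present.

```python
import numpy as np

# Counts for classes 0 (Airplane), 1 (Boat), 2 (Car) in this example.
tp = np.array([2, 1, 3])
fp = np.array([1, 3, 0])
fn = np.array([1, 0, 3])

precision = tp / (tp + fp)
recall = tp / (tp + fn)
f1 = 2 * precision * recall / (precision + recall)

print(np.round(precision, 2))
print(np.round(recall, 2))
print(np.round(f1, 2))
```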

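The results above are reported separately for each class. To get an overall measure of classification quality, the usual approach is to average over the classes. Macro Average, Weighted Average, and Micro Average are three different ways of averaging.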

3.Macro Average

Macro Average is the plain arithmetic mean of each metric over the classes, with every class given equal weight.

$$\text{macro\_avg\_precision} = \frac{0.67 + 0.25 + 1.00}{3} = 0.64$$

$$\text{macro\_avg\_recall} = \frac{0.67 + 1.00 + 0.50}{3} = 0.72$$

$$\text{macro\_avg\_f1-score} = \frac{0.67 + 0.40 + 0.67}{3} = 0.58$$

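When the data is imbalanced, Macro Average can be used to evaluate the algorithm, because every class counts equally no matter how few samples it has.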

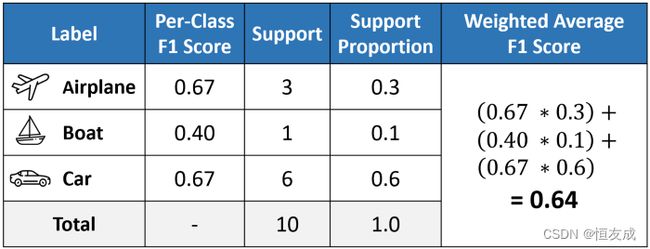
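To confirm that the macro avg row is just the unweighted mean of the per-class values, here is a small sketch comparing a manual mean against `precision_recall_fscore_support(..., average="macro")`. The names `macro_manual` and `macro_sklearn` are illustrative.

```python
import numpy as np
from sklearn.metrics import precision_recall_fscore_support

tru = np.array([0, 2, 2, 2, 2, 0, 1, 2, 0, 2])
pre = np.array([0, 1, 2, 2, 1, 1, 1, 0, 0, 2])

# average=None returns one value per class for each metric.
p, r, f, support = precision_recall_fscore_support(tru, pre, average=None)

# Macro average: unweighted mean over classes.
macro_manual = (p.mean(), r.mean(), f.mean())

# The same quantities computed by scikit-learn (support is None here).
macro_sklearn = precision_recall_fscore_support(tru, pre, average="macro")[:3]

print(np.round(macro_manual, 2))
print(np.round(macro_sklearn, 2))
```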

4.Weighted Average

Weighted Average weights each class's metric by that class's share of the samples (support divided by the total, here 3/10, 1/10, and 6/10):

$$\text{weighted\_avg\_precision} = \tfrac{2}{3} \times 0.3 + 0.25 \times 0.1 + 1.00 \times 0.6 = 0.825$$

$$\text{weighted\_avg\_recall} = 0.67 \times 0.3 + 1.00 \times 0.1 + 0.50 \times 0.6 = 0.60$$

$$\text{weighted\_avg\_f1-score} = 0.67 \times 0.3 + 0.40 \times 0.1 + 0.67 \times 0.6 = 0.64$$

The precision of 0.825 appears as 0.82 in the report.

When the data is imbalanced but performance on the classes with more samples matters more, use Weighted Average.

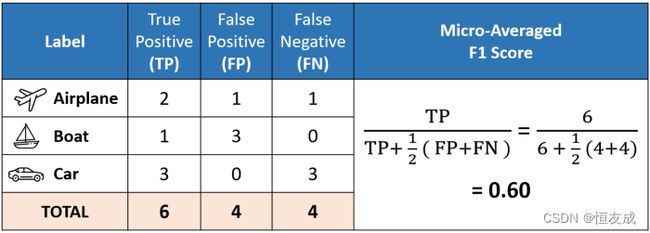
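The weighting by support can be sketched with `np.average`, again compared against scikit-learn's own `average="weighted"` result. The names `weighted_manual` and `weighted_sklearn` are illustrative.

```python
import numpy as np
from sklearn.metrics import precision_recall_fscore_support

tru = np.array([0, 2, 2, 2, 2, 0, 1, 2, 0, 2])
pre = np.array([0, 1, 2, 2, 1, 1, 1, 0, 0, 2])

# Per-class metrics plus the number of true samples (support) per class.
p, r, f, support = precision_recall_fscore_support(tru, pre, average=None)

# Weighted average: each class contributes in proportion to its support.
weighted_manual = (
    np.average(p, weights=support),
    np.average(r, weights=support),
    np.average(f, weights=support),
)

weighted_sklearn = precision_recall_fscore_support(tru, pre, average="weighted")[:3]

print(weighted_manual)
print(weighted_sklearn)
```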

5.Micro Average

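Micro Average pools the TP, FP, and FN counts of all classes before computing the metric. In single-label multiclass classification such as this example, every misclassified sample is one FP for the predicted class and one FN for the true class, so the pooled FP and FN totals are equal and:

$$\text{micro\_f1\_score} = \text{accuracy} = \text{micro\_precision} = \text{micro\_recall}$$

This is the value in the f1-score column of the accuracy row of the report (0.60 here). Consider Micro Average when the data is balanced.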
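The equality can be checked numerically. The sketch below pools the counts by hand and compares the result with scikit-learn's `average="micro"` scores and `accuracy_score`; it assumes the single-label multiclass setting of this example.

```python
import numpy as np
from sklearn.metrics import (
    accuracy_score,
    confusion_matrix,
    f1_score,
    precision_score,
    recall_score,
)

tru = np.array([0, 2, 2, 2, 2, 0, 1, 2, 0, 2])
pre = np.array([0, 1, 2, 2, 1, 1, 1, 0, 0, 2])

# Pool TP and FP over all classes, then compute precision once.
cm = confusion_matrix(tru, pre)
tp = np.diag(cm)
fp = cm.sum(axis=0) - tp
micro_manual = tp.sum() / (tp.sum() + fp.sum())

micro_p = precision_score(tru, pre, average="micro")
micro_r = recall_score(tru, pre, average="micro")
micro_f1 = f1_score(tru, pre, average="micro")
acc = accuracy_score(tru, pre)

print(micro_manual, micro_p, micro_r, micro_f1, acc)
```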

References

- Micro, Macro & Weighted Averages of F1 Score, Clearly Explained

- sklearn.metrics.classification_report
