Deep Learning: Implementing AlexNet with TensorFlow 2.0

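In earlier experiments I either built my own networks or used transfer learning for object recognition, but I never studied any single network in detail. Our artificial intelligence course requires each group to present its results, so I am taking this opportunity to implement a classic network and lay a foundation for later study and research. This post implements AlexNet with TensorFlow 2.0.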

1. AlexNet Overview

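As the first reasonably mature network to follow LeNet, AlexNet lived up to expectations by winning the 2012 ImageNet competition by a wide margin over the runner-up, which drew broad attention to convolutional neural networks. It has fewer layers than the VGG family and lacks the more elaborate designs of networks such as Inception and Xception, yet it laid the foundation for the networks that followed and holds an important place in deep learning.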

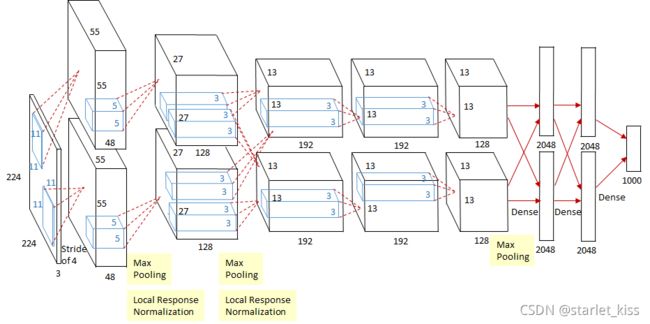

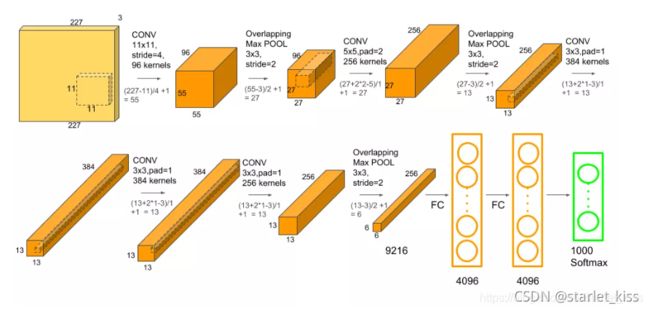

2. Network Structure

3. Innovations

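Compared with the earlier LeNet, AlexNet extracts image features more effectively, mainly thanks to the following innovations:

① A deeper network structure.

② Stacked convolutional layers (convolution + convolution + pooling) to extract image features.

③ Data augmentation and Dropout to suppress overfitting.

④ ReLU as the activation function, replacing the previously used sigmoid function.

⑤ Training on multiple GPUs.

⑥ LRN (local response normalization) to improve generalization.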
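Point ⑥ can be made concrete with a small sketch. The function below is a pure-Python illustration of local response normalization at a single spatial position, using the formula and hyperparameters (k = 2, n = 5, alpha = 1e-4, beta = 0.75) reported in the original AlexNet paper. The function name `local_response_norm` and the list-based input are assumptions made for illustration only; they are not part of any library.

```python
def local_response_norm(activations, n=5, k=2.0, alpha=1e-4, beta=0.75):
    """Normalize the channel activations at one spatial position.

    `activations` is a list with one value per channel (hypothetical layout).
    Each value is divided by a term built from the squared activations of
    up to `n` neighbouring channels centred on it.
    """
    num_channels = len(activations)
    half = n // 2
    normalized = []
    for i, a in enumerate(activations):
        # Clip the channel window at the first and last channel.
        lo = max(0, i - half)
        hi = min(num_channels - 1, i + half)
        square_sum = sum(activations[j] ** 2 for j in range(lo, hi + 1))
        normalized.append(a / (k + alpha * square_sum) ** beta)
    return normalized


# Example: three channels at one pixel.
print(local_response_norm([1.0, 2.0, 3.0]))
```

Large activations in neighbouring channels increase the denominator, so each channel is damped by strong responses around it.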

4. Network Implementation

    import tensorflow as tf
    from tensorflow.keras.layers import (BatchNormalization, Conv2D, Dense,
                                         Dropout, Flatten, Input, MaxPooling2D)
    from tensorflow.keras.models import Model

    # Input resolution; 224x224 matches the input shape in the model summary.
    img_height, img_width = 224, 224


    def AlexNet(nb_classes, input_shape):
        """Build an AlexNet-style classifier with the Keras functional API."""
        input_ten = Input(shape=input_shape)

        # Block 1: 11x11 convolution with stride 4, then BN and max pooling.
        x = Conv2D(filters=96, kernel_size=(11, 11), strides=(4, 4), activation='relu')(input_ten)
        x = BatchNormalization()(x)  # BN is used here in place of LRN
        x = MaxPooling2D(pool_size=(3, 3), strides=2)(x)

        # Block 2: 5x5 convolution, then BN and max pooling.
        x = Conv2D(filters=256, kernel_size=(5, 5), strides=(1, 1), activation='relu', padding='same')(x)
        x = BatchNormalization()(x)
        x = MaxPooling2D(pool_size=(3, 3), strides=2)(x)

        # Blocks 3-5: three stacked 3x3 convolutions followed by max pooling.
        x = Conv2D(filters=384, kernel_size=(3, 3), strides=(1, 1), activation='relu', padding='same')(x)
        x = Conv2D(filters=384, kernel_size=(3, 3), strides=(1, 1), activation='relu', padding='same')(x)
        x = Conv2D(filters=256, kernel_size=(3, 3), strides=(1, 1), activation='relu', padding='same')(x)
        x = MaxPooling2D(pool_size=(3, 3), strides=2)(x)

        # Fully connected classifier with Dropout after each hidden layer.
        x = Flatten()(x)
        x = Dense(4096, activation='relu')(x)
        x = Dropout(0.5)(x)
        x = Dense(4096, activation='relu')(x)
        x = Dropout(0.5)(x)
        output_ten = Dense(nb_classes, activation='softmax')(x)

        model = Model(input_ten, output_ten)
        return model


    # Build the model for 24 classes and print its layer summary.
    model_AlexNet = AlexNet(24, (img_height, img_width, 3))
    model_AlexNet.summary()

    Model: "model"
    _________________________________________________________________
    Layer (type)                 Output Shape              Param #
    =================================================================
    input_1 (InputLayer)         [(None, 224, 224, 3)]     0
    _________________________________________________________________
    conv2d (Conv2D)              (None, 54, 54, 96)        34944
    _________________________________________________________________
    batch_normalization (BatchNo (None, 54, 54, 96)        384
    _________________________________________________________________
    max_pooling2d (MaxPooling2D) (None, 26, 26, 96)        0
    _________________________________________________________________
    conv2d_1 (Conv2D)            (None, 26, 26, 256)       614656
    _________________________________________________________________
    batch_normalization_1 (Batch (None, 26, 26, 256)       1024
    _________________________________________________________________
    max_pooling2d_1 (MaxPooling2 (None, 12, 12, 256)       0
    _________________________________________________________________
    conv2d_2 (Conv2D)            (None, 12, 12, 384)       885120
    _________________________________________________________________
    conv2d_3 (Conv2D)            (None, 12, 12, 384)       1327488
    _________________________________________________________________
    conv2d_4 (Conv2D)            (None, 12, 12, 256)       884992
    _________________________________________________________________
    max_pooling2d_2 (MaxPooling2 (None, 5, 5, 256)         0
    _________________________________________________________________
    flatten (Flatten)            (None, 6400)              0
    _________________________________________________________________
    dense (Dense)                (None, 4096)              26218496
    _________________________________________________________________
    dropout (Dropout)            (None, 4096)              0
    _________________________________________________________________
    dense_1 (Dense)              (None, 4096)              16781312
    _________________________________________________________________
    dropout_1 (Dropout)          (None, 4096)              0
    _________________________________________________________________
    dense_2 (Dense)              (None, 24)                98328
    =================================================================
    Total params: 46,846,744
    Trainable params: 46,846,040
    Non-trainable params: 704
    _________________________________________________________________
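The output shapes in the summary can be checked by hand. The following is a minimal sketch, assuming the usual Keras sizing rules: with 'valid' padding (the default for both convolution and pooling layers) the output size is floor((in - kernel) / stride) + 1, and with 'same' padding it is ceil(in / stride). The helper names `valid_out`, `same_out` and `alexnet_feature_sizes` are hypothetical.

```python
import math


def valid_out(size, kernel, stride):
    """Spatial output size with 'valid' padding."""
    return (size - kernel) // stride + 1


def same_out(size, stride):
    """Spatial output size with 'same' padding."""
    return math.ceil(size / stride)


def alexnet_feature_sizes(size=224):
    """Return the spatial size after each conv/pool layer of the model above."""
    sizes = []
    size = valid_out(size, 11, 4)   # conv 11x11, stride 4, valid
    sizes.append(size)
    size = valid_out(size, 3, 2)    # max pool 3x3, stride 2
    sizes.append(size)
    size = same_out(size, 1)        # conv 5x5, stride 1, same
    sizes.append(size)
    size = valid_out(size, 3, 2)    # max pool 3x3, stride 2
    sizes.append(size)
    for _ in range(3):              # three 3x3 convs, stride 1, same
        size = same_out(size, 1)
        sizes.append(size)
    size = valid_out(size, 3, 2)    # max pool 3x3, stride 2
    sizes.append(size)
    return sizes


sizes = alexnet_feature_sizes(224)
print(sizes)                       # [54, 26, 26, 12, 12, 12, 12, 5]
print(sizes[-1] * sizes[-1] * 256)  # 6400, the Flatten output length
```

The final 5 x 5 x 256 feature map flattens to 6400 values, which is the input size of the first Dense layer.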

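The model ends up with 46,846,040 trainable parameters. That is vastly more than LeNet, but still fewer than some later networks such as VGG16 (about 138 million parameters).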
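The parameter totals in the summary can be reproduced with simple arithmetic. This is a sketch under the standard counting rules: a convolution has kernel x kernel x in_channels x out_channels weights plus one bias per output channel, a Dense layer has in x out weights plus out biases, and BatchNormalization keeps four values per channel, of which two (gamma and beta) are trainable and two (moving mean and moving variance) are not. All function names here are hypothetical.

```python
def conv_params(kernel, in_ch, out_ch):
    """Weights plus biases of a square-kernel convolution."""
    return kernel * kernel * in_ch * out_ch + out_ch


def dense_params(in_units, out_units):
    """Weights plus biases of a fully connected layer."""
    return in_units * out_units + out_units


def bn_params(channels):
    """gamma, beta, moving mean and moving variance for each channel."""
    return 4 * channels


def alexnet_param_counts(nb_classes=24):
    """Return (total, trainable, non_trainable) for the model above."""
    conv = (conv_params(11, 3, 96) + conv_params(5, 96, 256)
            + conv_params(3, 256, 384) + conv_params(3, 384, 384)
            + conv_params(3, 384, 256))
    bn = bn_params(96) + bn_params(256)
    dense = (dense_params(5 * 5 * 256, 4096) + dense_params(4096, 4096)
             + dense_params(4096, nb_classes))
    total = conv + bn + dense
    non_trainable = bn // 2  # moving mean and variance are not trained
    return total, total - non_trainable, non_trainable


print(alexnet_param_counts())  # (46846744, 46846040, 704)
```

Most of the parameters sit in the first two Dense layers (about 43 million of the 46.8 million), not in the convolutions.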

Keep working hard!