Machine Learning: Intelligent Image Processing with OpenCV and Python (Part 1)

Getting Started with Intelligent Image Processing

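Contents

- Getting Started with Intelligent Image Processing
  - Preface
  - I. Installing and Configuring Python and OpenCV
  - II. Image Processing Basics
    - 1. ROI (Region of Interest)
    - 2. Border Padding
    - 3. Numerical Operations
    - 4. Image Thresholding
- Image Smoothing and Filtering
  - 1. Image Smoothing
  - 2. Gaussian and Median Filtering
- Morphological Image Processing
  - 1. Erosion
  - 2. Dilation
  - 3. Opening and Closing
  - 4. Gradient Computation
  - 5. Black Hat and Top Hat
- Image Gradients and Edge Detection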

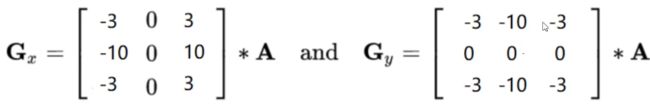

  - 1. Sobel Operator
  - 2. Gradient Computation
  - 3. Scharr and Laplacian
  - 4. Canny Edge Detection Pipeline
  - 5. Non-Maximum Suppression
  - 6. Edge Detection
- Image Segmentation

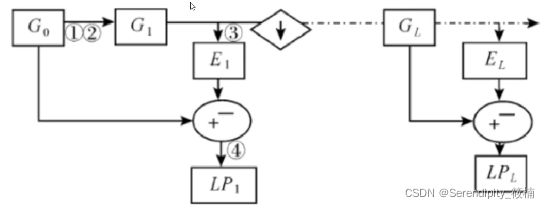

  - 1. Pyramid Definition
  - 2. Building Pyramids
- Contour Detection

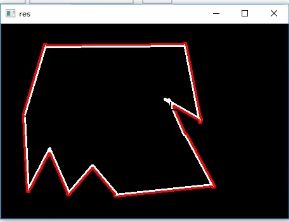

  - 1. Drawing Contours
  - 2. Contour Features
  - 3. Contour Approximation
- Image Histogram Processing

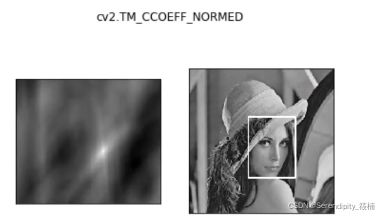

  - 1. Template Matching Methods
  - 2. Matching Results

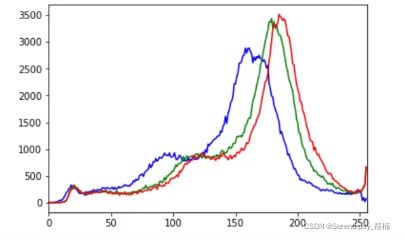

  - 3. Histogram Definition
  - 4. How Equalization Works
  - 5. Equalization Results

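Preface

As artificial intelligence continues to advance, machine learning is becoming increasingly important, and many people have started to learn it. This article introduces image processing for computer vision.

I. Installing and Configuring Python and OpenCV

1. Download Anaconda3-5.1.0-Windows-x86_64.exe (paired here with Python 3.6.3):

https://mirrors.tuna.tsinghua.edu.cn/anaconda/archive/

2. Download opencv-python 3.4.1.15:

https://pypi.tuna.tsinghua.edu.cn/simple/opencv-python/

3. Code environment: IDEA (or whichever IDE you prefer).

II. Image Processing Basics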

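1. ROI (Region of Interest)

Extract part of the image data:

import cv2

def cv_show(img, name):
    # Show an image in a window and wait for a key press
    cv2.imshow(name, img)
    cv2.waitKey(0)
    cv2.destroyAllWindows()

img = cv2.imread('cat.jpg')
cat = img[0:50, 0:200]  # rows 0-49, columns 0-199
cv_show(cat, 'cat')

Extract the color channels:

b, g, r = cv2.split(img)
img = cv2.merge((b, g, r))
img.shape  # (414, 500, 3)

# Keep only R (shown in red) by zeroing B and G; the other channels work the same way
cur_img = img.copy()
cur_img[:, :, 0] = 0
cur_img[:, :, 1] = 0
cv_show(cur_img, 'R')

# Keep only G (shown in green)
cur_img = img.copy()
cur_img[:, :, 0] = 0
cur_img[:, :, 2] = 0
cv_show(cur_img, 'G')

# Keep only B (shown in blue)
cur_img = img.copy()
cur_img[:, :, 1] = 0
cur_img[:, :, 2] = 0
cv_show(cur_img, 'B')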

2. Border Padding

Example code:

top_size, bottom_size, left_size, right_size = (50, 50, 50, 50)

replicate = cv2.copyMakeBorder(img, top_size, bottom_size, left_size, right_size, borderType=cv2.BORDER_REPLICATE)
reflect = cv2.copyMakeBorder(img, top_size, bottom_size, left_size, right_size, cv2.BORDER_REFLECT)
reflect101 = cv2.copyMakeBorder(img, top_size, bottom_size, left_size, right_size, cv2.BORDER_REFLECT_101)
wrap = cv2.copyMakeBorder(img, top_size, bottom_size, left_size, right_size, cv2.BORDER_WRAP)
constant = cv2.copyMakeBorder(img, top_size, bottom_size, left_size, right_size, cv2.BORDER_CONSTANT, value=0)

import matplotlib.pyplot as plt

plt.subplot(231), plt.imshow(img, 'gray'), plt.title('ORIGINAL')
plt.subplot(232), plt.imshow(replicate, 'gray'), plt.title('REPLICATE')
plt.subplot(233), plt.imshow(reflect, 'gray'), plt.title('REFLECT')
plt.subplot(234), plt.imshow(reflect101, 'gray'), plt.title('REFLECT_101')
plt.subplot(235), plt.imshow(wrap, 'gray'), plt.title('WRAP')
plt.subplot(236), plt.imshow(constant, 'gray'), plt.title('CONSTANT')
plt.show()

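BORDER_REPLICATE: replication. The outermost edge pixel is repeated.

BORDER_REFLECT: reflection. The pixels of the image are mirrored on both sides, e.g. fedcba|abcdefgh|hgfedcb

BORDER_REFLECT_101: reflection about the outermost pixel, which acts as the axis of symmetry and is not repeated, e.g. gfedcb|abcdefgh|gfedcba

BORDER_WRAP: wrap-around, e.g. cdefgh|abcdefgh|abcdefg

BORDER_CONSTANT: constant fill. The border is filled with a fixed value.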
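The letter patterns above can be reproduced without an image. The following is a minimal sketch using NumPy's `np.pad` rather than OpenCV; the mapping from OpenCV flags to `np.pad` mode names is an assumption made for illustration, and `pad_pattern` is a hypothetical helper.

```python
import numpy as np

# Assumed correspondence between the OpenCV flags above and np.pad modes
MODES = {
    'BORDER_REPLICATE': 'edge',
    'BORDER_REFLECT': 'symmetric',
    'BORDER_REFLECT_101': 'reflect',
    'BORDER_WRAP': 'wrap',
}

def pad_pattern(mode, left=6, right=7, letters='abcdefgh'):
    """Pad the indices of `letters` and render the result as left|body|right."""
    idx = np.pad(np.arange(len(letters)), (left, right), mode=mode)
    text = ''.join(letters[i] for i in idx)
    body_end = left + len(letters)
    return f"{text[:left]}|{text[left:body_end]}|{text[body_end:]}"

for flag, mode in MODES.items():
    print(f"{flag:20s} {pad_pattern(mode)}")
```

Printing the four patterns side by side makes the difference between the two reflection modes easy to see: only BORDER_REFLECT repeats the edge pixel.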

3. Numerical Operations

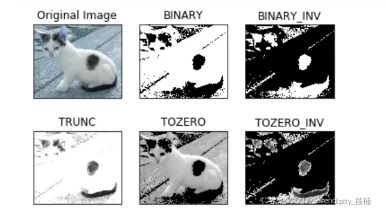

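4. Image Thresholding

ret, dst = cv2.threshold(src, thresh, maxval, type)

- src: input image, normally a single-channel grayscale image

- dst: output image

- thresh: the threshold value

- maxval: the value assigned when a pixel is above the threshold (or below it, depending on type)

- type: the thresholding type, one of the following five: cv2.THRESH_BINARY; cv2.THRESH_BINARY_INV; cv2.THRESH_TRUNC; cv2.THRESH_TOZERO; cv2.THRESH_TOZERO_INV

- cv2.THRESH_BINARY: pixels above the threshold become maxval (the maximum value), all others become 0

- cv2.THRESH_BINARY_INV: the inverse of THRESH_BINARY

- cv2.THRESH_TRUNC: pixels above the threshold are set to the threshold, all others are unchanged

- cv2.THRESH_TOZERO: pixels above the threshold are unchanged, all others are set to 0

- cv2.THRESH_TOZERO_INV: the inverse of THRESH_TOZERO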

# Grayscale copy of img (assumed; the original does not show how img_gray is created)
img_gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)

ret, thresh1 = cv2.threshold(img_gray, 127, 255, cv2.THRESH_BINARY)
# Inverse of the previous type
ret, thresh2 = cv2.threshold(img_gray, 127, 255, cv2.THRESH_BINARY_INV)
# Truncate at the threshold
ret, thresh3 = cv2.threshold(img_gray, 127, 255, cv2.THRESH_TRUNC)
ret, thresh4 = cv2.threshold(img_gray, 127, 255, cv2.THRESH_TOZERO)
# Inverse of the previous type
ret, thresh5 = cv2.threshold(img_gray, 127, 255, cv2.THRESH_TOZERO_INV)

titles = ['Original Image', 'BINARY', 'BINARY_INV', 'TRUNC', 'TOZERO', 'TOZERO_INV']
images = [img, thresh1, thresh2, thresh3, thresh4, thresh5]

for i in range(6):
    plt.subplot(2, 3, i + 1), plt.imshow(images[i], 'gray')
    plt.title(titles[i])
    plt.xticks([]), plt.yticks([])
plt.show()
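To make the five rules concrete, here is a small NumPy sketch that applies each rule, as described in the list above, to a handful of pixel values. It is not OpenCV's implementation; `threshold` here is a hypothetical helper and the type names are passed as plain strings.

```python
import numpy as np

def threshold(src, thresh, maxval, kind):
    """Apply one of the five thresholding rules described above (illustrative only)."""
    src = np.asarray(src)
    above = src > thresh
    if kind == 'BINARY':
        return np.where(above, maxval, 0)
    if kind == 'BINARY_INV':
        return np.where(above, 0, maxval)
    if kind == 'TRUNC':
        return np.where(above, thresh, src)
    if kind == 'TOZERO':
        return np.where(above, src, 0)
    if kind == 'TOZERO_INV':
        return np.where(above, 0, src)
    raise ValueError(f"unknown threshold type: {kind}")

pixels = np.array([0, 100, 127, 128, 255])
for kind in ('BINARY', 'BINARY_INV', 'TRUNC', 'TOZERO', 'TOZERO_INV'):
    print(f"{kind:11s}", threshold(pixels, 127, 255, kind).tolist())
```

Note that a pixel exactly equal to the threshold (127 here) counts as "not above" in this sketch.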

Image Smoothing and Filtering

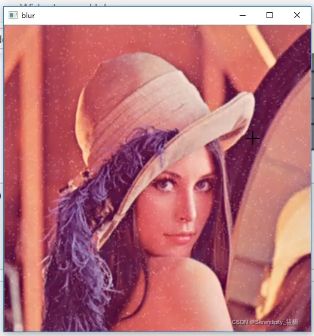

1. Image Smoothing

# Mean filter
# A simple averaging convolution
blur = cv2.blur(img, (3, 3))
cv2.imshow('blur', blur)
cv2.waitKey(0)
cv2.destroyAllWindows()

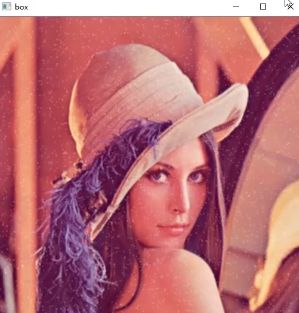

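# Box filter
# Essentially the same as the mean filter; normalization is optional
box = cv2.boxFilter(img, -1, (3, 3), normalize=True)
cv2.imshow('box', box)
cv2.waitKey(0)
cv2.destroyAllWindows()

# Box filter without normalization
# The window values are summed but not averaged, so the result easily overflows and saturates at 255
box = cv2.boxFilter(img, -1, (3, 3), normalize=False)
cv2.imshow('box', box)
cv2.waitKey(0)
cv2.destroyAllWindows()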

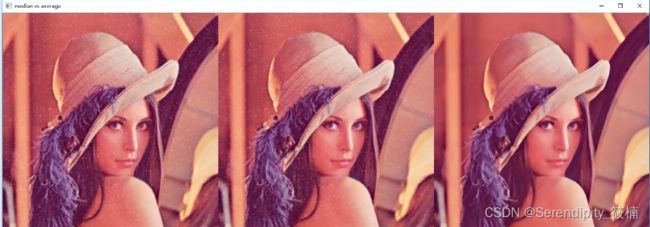

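# Gaussian filter
# The kernel values follow a Gaussian distribution, so pixels near the center get more weight
gaussian = cv2.GaussianBlur(img, (5, 5), 1)
cv2.imshow('gaussian', gaussian)
cv2.waitKey(0)
cv2.destroyAllWindows()

# Median filter
# The original omits the call that creates `median`; kernel size 5 is an assumption
median = cv2.medianBlur(img, 5)
cv2.imshow('median', median)
cv2.waitKey(0)
cv2.destroyAllWindows()

# Show all results side by side
import numpy as np
res = np.hstack((blur, gaussian, median))
cv2.imshow('median vs average', res)
cv2.waitKey(0)
cv2.destroyAllWindows()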
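The differences between the filters show up clearly on a tiny array. The sketch below re-implements a 3x3 box filter and median filter in NumPy purely for illustration; the mirror padding and the rounding are assumptions, and the helpers (`window_stack`, `box_filter`, `median_filter`) are hypothetical names, not OpenCV functions.

```python
import numpy as np

def window_stack(img, k=3):
    """Stack the k*k shifted copies of img (mirror-padded at the borders)."""
    r = k // 2
    padded = np.pad(img.astype(np.int64), r, mode='reflect')
    h, w = img.shape
    return np.stack([padded[dy:dy + h, dx:dx + w]
                     for dy in range(k) for dx in range(k)])

def box_filter(img, k=3, normalize=True):
    total = window_stack(img, k).sum(axis=0)
    if normalize:
        total = np.rint(total / (k * k))  # average of the window
    return np.clip(total, 0, 255).astype(np.uint8)  # saturate to 8 bits

def median_filter(img, k=3):
    return np.median(window_stack(img, k), axis=0).astype(np.uint8)

flat = np.full((5, 5), 100, dtype=np.uint8)
noisy = flat.copy()
noisy[2, 2] = 255  # a single "salt" pixel

print(box_filter(noisy)[2, 2])                 # the mean smears the outlier
print(median_filter(noisy)[2, 2])              # the median discards it
print(box_filter(flat, normalize=False)[2, 2]) # 9 * 100 saturates
```

The unnormalized box filter sums nine values of 100, which exceeds the 8-bit range, and that is why the unnormalized result in the article looks almost entirely white.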

Morphological Image Processing

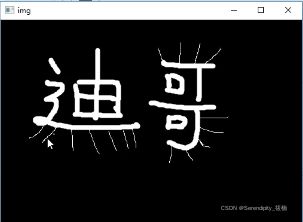

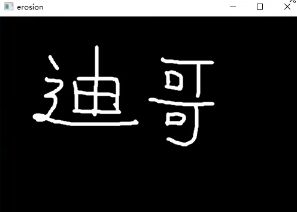

1. Erosion

img = cv2.imread('dige.png')
cv2.imshow('img', img)
cv2.waitKey(0)
cv2.destroyAllWindows()

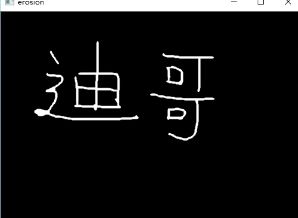

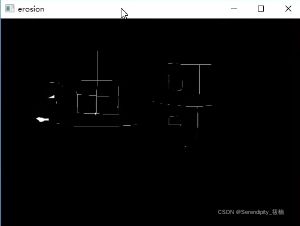

# A 5x5 kernel erodes far more aggressively than a 3x3 kernel
kernel = np.ones((5, 5), np.uint8)
# With iterations set to 2, the thin lines almost disappear
erosion = cv2.erode(img, kernel, iterations=2)
cv2.imshow('erosion', erosion)
cv2.waitKey(0)
cv2.destroyAllWindows()

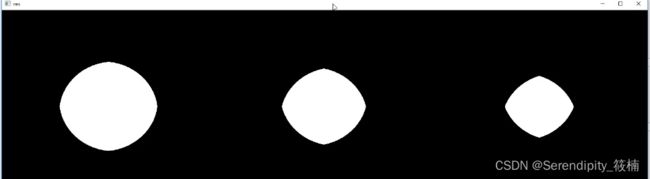

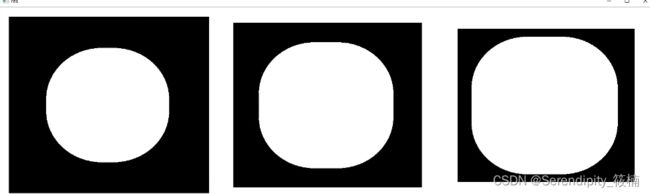

pie = cv2.imread('pie.png')
cv2.imshow('pie', pie)
cv2.waitKey(0)
cv2.destroyAllWindows()

# Compare one, two, and three erosion passes with a 30x30 kernel
kernel = np.ones((30, 30), np.uint8)
erosion_1 = cv2.erode(pie, kernel, iterations=1)
erosion_2 = cv2.erode(pie, kernel, iterations=2)
erosion_3 = cv2.erode(pie, kernel, iterations=3)
res = np.hstack((erosion_1, erosion_2, erosion_3))
cv2.imshow('res', res)
cv2.waitKey(0)
cv2.destroyAllWindows()

2. Dilation

img = cv2.imread('dige.png')
cv2.imshow('img', img)
cv2.waitKey(0)
cv2.destroyAllWindows()

# Erode first to remove the thin lines
kernel = np.ones((3, 3), np.uint8)
dige_erosion = cv2.erode(img, kernel, iterations=1)
cv2.imshow('erosion', dige_erosion)
cv2.waitKey(0)
cv2.destroyAllWindows()

# Then dilate the eroded image to restore the stroke width
kernel = np.ones((3, 3), np.uint8)
dige_dilate = cv2.dilate(dige_erosion, kernel, iterations=1)
cv2.imshow('dilate', dige_dilate)
cv2.waitKey(0)
cv2.destroyAllWindows()

# Compare one, two, and three dilation passes with a 30x30 kernel
pie = cv2.imread('pie.png')
kernel = np.ones((30, 30), np.uint8)
dilate_1 = cv2.dilate(pie, kernel, iterations=1)
dilate_2 = cv2.dilate(pie, kernel, iterations=2)
dilate_3 = cv2.dilate(pie, kernel, iterations=3)
res = np.hstack((dilate_1, dilate_2, dilate_3))
cv2.imshow('res', res)
cv2.waitKey(0)
cv2.destroyAllWindows()
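Erosion and dilation with a square kernel of ones amount to a local minimum and a local maximum. The following NumPy sketch illustrates that idea on a small binary image; it is not OpenCV's implementation, and the border handling (padding with 255 for erosion and 0 for dilation so that the border itself has no effect) is an assumption.

```python
import numpy as np

def _neighborhoods(img, k, pad_value):
    """Stack the k*k shifted copies of img, padded with a constant value."""
    r = k // 2
    padded = np.pad(img, r, mode='constant', constant_values=pad_value)
    h, w = img.shape
    return np.stack([padded[dy:dy + h, dx:dx + w]
                     for dy in range(k) for dx in range(k)])

def erode(img, k=3):
    # A pixel survives only if every pixel under the kernel is set
    return _neighborhoods(img, k, 255).min(axis=0)

def dilate(img, k=3):
    # A pixel is set if any pixel under the kernel is set
    return _neighborhoods(img, k, 0).max(axis=0)

img = np.zeros((7, 7), dtype=np.uint8)
img[2:5, 2:5] = 255  # a 3x3 white square

print(np.count_nonzero(erode(img)))   # the square shrinks to its center
print(np.count_nonzero(dilate(img)))  # the square grows to 5x5
```

A larger kernel or more iterations shrinks (or grows) the shape by more pixels per pass, which matches the progression seen in the pie images.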

3. Opening and Closing

# Opening: erode first, then dilate
img = cv2.imread('dige.png')
kernel = np.ones((5, 5), np.uint8)
opening = cv2.morphologyEx(img, cv2.MORPH_OPEN, kernel)
cv2.imshow('opening', opening)
cv2.waitKey(0)
cv2.destroyAllWindows()

# Closing: dilate first, then erode
img = cv2.imread('dige.png')
kernel = np.ones((5, 5), np.uint8)
closing = cv2.morphologyEx(img, cv2.MORPH_CLOSE, kernel)
cv2.imshow('closing', closing)
cv2.waitKey(0)
cv2.destroyAllWindows()

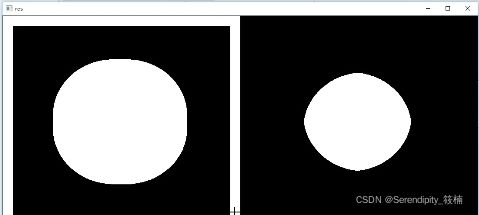

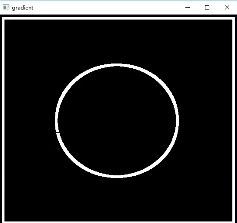

4. Gradient Computation

# Gradient = dilation - erosion
pie = cv2.imread('pie.png')
kernel = np.ones((7, 7), np.uint8)
dilate = cv2.dilate(pie, kernel, iterations=5)
erosion = cv2.erode(pie, kernel, iterations=5)
res = np.hstack((dilate, erosion))
cv2.imshow('res', res)
cv2.waitKey(0)
cv2.destroyAllWindows()

gradient = cv2.morphologyEx(pie, cv2.MORPH_GRADIENT, kernel)
cv2.imshow('gradient', gradient)
cv2.waitKey(0)
cv2.destroyAllWindows()

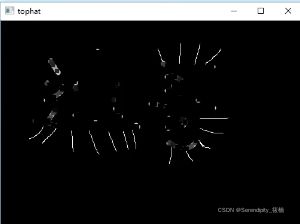

5. Black Hat and Top Hat

- Top hat = original input - opening result

- Black hat = closing result - original input

Before processing:

# Top hat
img = cv2.imread('dige.png')
tophat = cv2.morphologyEx(img, cv2.MORPH_TOPHAT, kernel)
cv2.imshow('tophat', tophat)
cv2.waitKey(0)
cv2.destroyAllWindows()

# Black hat
img = cv2.imread('dige.png')
blackhat = cv2.morphologyEx(img, cv2.MORPH_BLACKHAT, kernel)
cv2.imshow('blackhat', blackhat)
cv2.waitKey(0)
cv2.destroyAllWindows()
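The compound operations in this section are all built from erosion and dilation. The sketch below composes them in NumPy on two synthetic images; it is an illustration under assumptions (3x3 kernel of ones, constant border padding, hypothetical helper names), not OpenCV's implementation.

```python
import numpy as np

def _neighborhoods(img, pad_value, k=3):
    r = k // 2
    padded = np.pad(img, r, mode='constant', constant_values=pad_value)
    h, w = img.shape
    return np.stack([padded[dy:dy + h, dx:dx + w]
                     for dy in range(k) for dx in range(k)])

def erode(img):
    return _neighborhoods(img, 255).min(axis=0)

def dilate(img):
    return _neighborhoods(img, 0).max(axis=0)

# Image 1: a 3x3 square with a one-pixel spur sticking out to the right
spur = np.zeros((9, 9), dtype=np.uint8)
spur[3:6, 3:6] = 255
spur[4, 6] = 255

# Image 2: a 5x5 square with a one-pixel hole in the middle
hole = np.zeros((9, 9), dtype=np.uint8)
hole[2:7, 2:7] = 255
hole[4, 4] = 0

opening = dilate(erode(spur))          # erode, then dilate: removes the spur
closing = erode(dilate(hole))          # dilate, then erode: fills the hole
gradient = dilate(spur) - erode(spur)  # outline of the shape
tophat = spur - opening                # what opening removed
blackhat = closing - hole              # what closing added

print(np.argwhere(tophat).tolist())    # position of the spur
print(np.argwhere(blackhat).tolist())  # position of the hole
```

This is the same relationship the article states: top hat isolates the thin details that opening removes, and black hat isolates the small gaps that closing fills.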

Image Gradients and Edge Detection

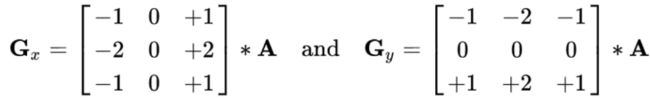

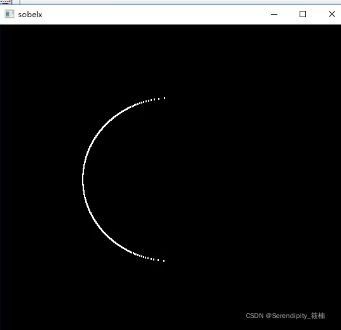

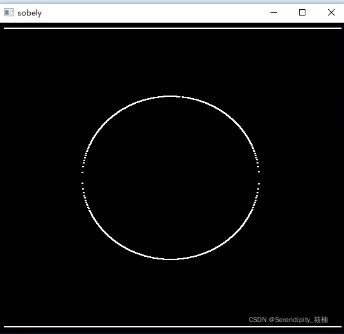

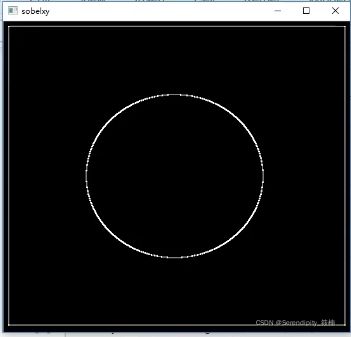

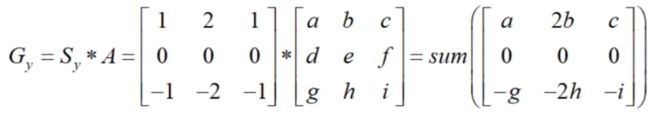

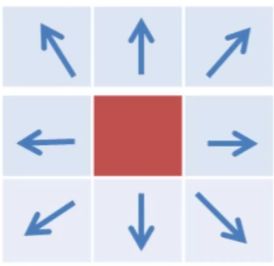

1. Sobel Operator

img = cv2.imread('pie.png', cv2.IMREAD_GRAYSCALE)
cv2.imshow('img', img)
cv2.waitKey()
cv2.destroyAllWindows()

dst = cv2.Sobel(src, ddepth, dx, dy, ksize)

- ddepth: depth (data type) of the output image

- dx and dy: derivative order in the horizontal and vertical directions

- ksize: size of the Sobel kernel

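def cv_show(img, name):
    cv2.imshow(name, img)
    cv2.waitKey()
    cv2.destroyAllWindows()

sobelx = cv2.Sobel(img, cv2.CV_64F, 1, 0, ksize=3)
cv_show(sobelx, 'sobelx')

The horizontal gradient is computed as right minus left, so it is positive where the image goes from dark to bright and negative where it goes from bright to dark. Negative values are truncated to 0 when shown as an 8-bit image, so take the absolute value:

sobelx = cv2.Sobel(img, cv2.CV_64F, 1, 0, ksize=3)
sobelx = cv2.convertScaleAbs(sobelx)
cv_show(sobelx, 'sobelx')

sobely = cv2.Sobel(img, cv2.CV_64F, 0, 1, ksize=3)
sobely = cv2.convertScaleAbs(sobely)

# Blend the x and y gradients with equal weights
sobelxy = cv2.addWeighted(sobelx, 0.5, sobely, 0.5, 0)
cv_show(sobelxy, 'sobelxy')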

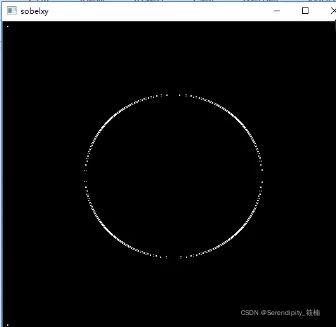

# Computing both derivatives in a single call
sobelxy = cv2.Sobel(img, cv2.CV_64F, 1, 1, ksize=3)
sobelxy = cv2.convertScaleAbs(sobelxy)
cv_show(sobelxy, 'sobelxy')

img = cv2.imread('lena.jpg', cv2.IMREAD_GRAYSCALE)

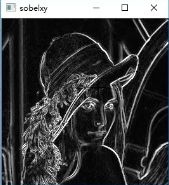

sobelx = cv2.Sobel(img, cv2.CV_64F,1,0,ksize=3)

sobelx = cv2.convertScaleAbs(sobelx)

sobely = cv2.Sobel(img, cv2.CV_64F,0,1,ksize=3)

sobely = cv2.convertScaleAbs(sobely)

sobelxy = cv2.addWeighted(sobelx,0.5,sobely,0.5,0)

cv_show(sobelxy, 'sobelxy')

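img = cv2.imread('lena.jpg', cv2.IMREAD_GRAYSCALE)
sobelxy = cv2.Sobel(img, cv2.CV_64F, 1, 1, ksize=3)
sobelxy = cv2.convertScaleAbs(sobelxy)
cv_show(sobelxy, 'sobelxy')

Summary: as the images above show, computing the x and y gradients separately and then blending them gives a clearly better result than computing both in a single call.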
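Why the absolute value matters can be seen on a synthetic image with one bright vertical band. The sketch below applies the 3x3 horizontal Sobel kernel in plain NumPy; the mirror padding and the helper names (`correlate3x3`, `SOBEL_X`) are assumptions for illustration and this is not the OpenCV code path.

```python
import numpy as np

SOBEL_X = np.array([[-1, 0, 1],
                    [-2, 0, 2],
                    [-1, 0, 1]])

def correlate3x3(img, kernel):
    """Slide a 3x3 kernel over img (mirror-padded) and sum the products."""
    padded = np.pad(img.astype(np.float64), 1, mode='reflect')
    h, w = img.shape
    out = np.zeros((h, w))
    for dy in range(3):
        for dx in range(3):
            out += kernel[dy, dx] * padded[dy:dy + h, dx:dx + w]
    return out

img = np.zeros((5, 6), dtype=np.uint8)
img[:, 2:4] = 255  # bright band in columns 2 and 3

gx = correlate3x3(img, SOBEL_X)
clipped = np.clip(gx, 0, 255).astype(np.uint8)           # negatives lost
absolute = np.clip(np.abs(gx), 0, 255).astype(np.uint8)  # both edges kept

print(gx[2].tolist())
print(clipped[2].tolist())
print(absolute[2].tolist())
```

The left edge of the band (dark to bright) produces positive values and the right edge (bright to dark) produces negative ones, so clipping alone would show only one side of the band.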

2. Gradient Computation

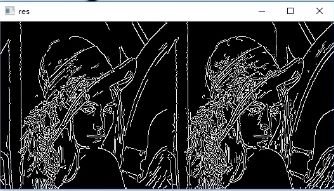

3. Scharr and Laplacian

# Differences between the operators
img = cv2.imread('lena.jpg', cv2.IMREAD_GRAYSCALE)

sobelx = cv2.Sobel(img, cv2.CV_64F, 1, 0, ksize=3)
sobely = cv2.Sobel(img, cv2.CV_64F, 0, 1, ksize=3)
sobelx = cv2.convertScaleAbs(sobelx)
sobely = cv2.convertScaleAbs(sobely)
sobelxy = cv2.addWeighted(sobelx, 0.5, sobely, 0.5, 0)

scharrx = cv2.Scharr(img, cv2.CV_64F, 1, 0)
scharry = cv2.Scharr(img, cv2.CV_64F, 0, 1)
scharrx = cv2.convertScaleAbs(scharrx)
scharry = cv2.convertScaleAbs(scharry)
scharrxy = cv2.addWeighted(scharrx, 0.5, scharry, 0.5, 0)

laplacian = cv2.Laplacian(img, cv2.CV_64F)
laplacian = cv2.convertScaleAbs(laplacian)

res = np.hstack((sobelxy, scharrxy, laplacian))
cv_show(res, 'res')

Results, from left to right: Sobel, Scharr, Laplacian.

4. Canny Edge Detection Pipeline

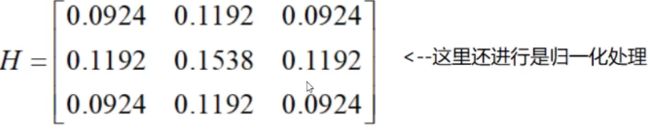

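- Apply a Gaussian filter to smooth the image and remove noise.

- Compute the gradient magnitude and direction at every pixel.

- Apply non-maximum suppression to eliminate spurious responses from edge detection.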

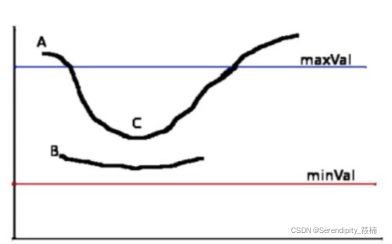

- Apply double thresholding to separate definite edges from potential edges.

- Finish edge detection by suppressing isolated weak edges.

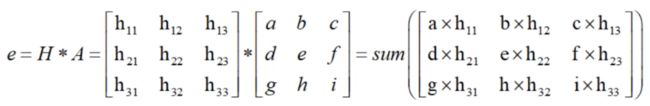

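1. Gaussian filter

5. Non-Maximum Suppression

Linear interpolation: let M(g1) be the gradient magnitude at g1 and M(g2) the gradient magnitude at g2. The magnitude at dtmp1 is then:

M(dtmp1) = w*M(g2) + (1-w)*M(g1)

where w = distance(dtmp1, g1) / distance(g1, g2),

and distance(g1, g2) is the distance between the two points.

To simplify the computation, note that a pixel has eight neighbors, so the gradient direction of a pixel can be quantized into eight directions. Then only the neighbors before and after the pixel along that direction need to be compared, and no interpolation is required.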
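Both variants of this step can be sketched in a few lines of plain Python. The function names are hypothetical, `w` is simply the interpolation weight from the formula above, and the 22.5-degree bin boundaries are a common convention assumed here, not something stated in the article. Since opposite neighbors lie on the same line, the eight directions collapse to four axes (0, 45, 90, 135 degrees).

```python
def interpolate_magnitude(m_g1, m_g2, w):
    """M(dtmp1) = w*M(g2) + (1-w)*M(g1), with w between 0 and 1."""
    return w * m_g2 + (1 - w) * m_g1

def quantize_direction(angle_deg):
    """Snap a gradient angle to the nearest of the axes 0, 45, 90, 135 degrees."""
    return int(((angle_deg % 180) + 22.5) // 45) % 4 * 45

def is_local_maximum(center, before, after):
    """Keep a pixel only if it is at least as strong as both neighbors on its axis."""
    return center >= before and center >= after

print(interpolate_magnitude(10, 20, 0.25))
print([quantize_direction(a) for a in (10, 50, 100, 170, -30)])
print(is_local_maximum(30, 12, 25), is_local_maximum(30, 12, 40))
```

With the quantized version, `before` and `after` are read directly from the two neighboring pixels on the chosen axis; with interpolation, they would come from `interpolate_magnitude` instead.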

6. Edge Detection

img = cv2.imread('lena.jpg', cv2.IMREAD_GRAYSCALE)
v1 = cv2.Canny(img, 80, 150)
v2 = cv2.Canny(img, 50, 100)
res = np.hstack((v1, v2))
cv_show(res, 'res')

Conclusion: the left image uses thresholds (80, 150) and the right image uses (50, 100). The lower the thresholds, the more detail the result contains.

img = cv2.imread('car.png', cv2.IMREAD_GRAYSCALE)
v1 = cv2.Canny(img, 120, 250)
v2 = cv2.Canny(img, 50, 100)
res = np.hstack((v1, v2))
cv_show(res, 'res')

Conclusion: the lower the thresholds, the more detail and the more boundary information the result contains.
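The effect of the two thresholds can be illustrated on made-up gradient magnitudes. This sketch covers only the classification part of double thresholding (strong, weak, discarded); the connectivity check that decides which weak pixels survive is left out, and the sample values and the `double_threshold` helper are assumptions for illustration.

```python
import numpy as np

def double_threshold(magnitude, low, high):
    """Split pixels into strong edges and weak (potential) edges."""
    magnitude = np.asarray(magnitude)
    strong = magnitude >= high
    weak = (magnitude >= low) & ~strong
    return strong, weak

# Hypothetical gradient magnitudes for six pixels
magnitude = np.array([10, 60, 90, 120, 160, 260])

for low, high in ((80, 150), (50, 100)):
    strong, weak = double_threshold(magnitude, low, high)
    print((low, high), 'strong:', int(strong.sum()), 'weak:', int(weak.sum()))
```

Lowering both thresholds turns more pixels into edge candidates, which is consistent with the extra detail visible in the right-hand images.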

Image Segmentation

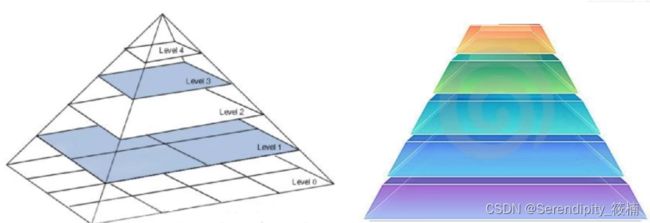

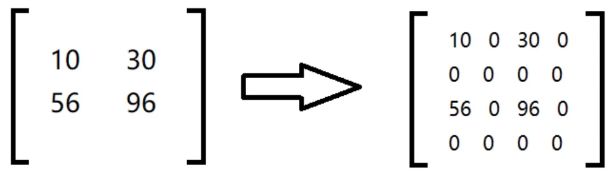

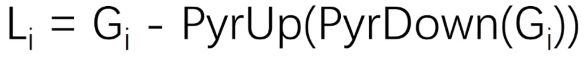

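1. Pyramid Definition

Upsampling works in two steps:

1. Enlarge the image to twice its size in each direction, filling the new rows and columns with zeros.

2. Convolve the enlarged image with the same kernel as before (multiplied by 4) to approximate the values of the new pixels.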
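The two steps above can be sketched in NumPy for a single-channel image. The 5-tap kernel [1, 4, 6, 4, 1]/16, the mirror padding, and the helper names are assumptions chosen for illustration; this is a sketch of the idea, not OpenCV's `pyrUp`/`pyrDown` code.

```python
import numpy as np

KERNEL_1D = np.array([1, 4, 6, 4, 1]) / 16.0  # assumed separable Gaussian kernel

def blur(img, kernel):
    """Filter along rows and columns with a 1-D kernel, mirror-padding borders."""
    out = img.astype(np.float64)
    for axis in (0, 1):
        pad = [(0, 0), (0, 0)]
        pad[axis] = (2, 2)
        padded = np.pad(out, pad, mode='reflect')
        out = np.apply_along_axis(np.convolve, axis, padded, kernel, mode='valid')
    return out

def pyr_down(img):
    # Smooth, then keep every second row and column
    return blur(img, KERNEL_1D)[::2, ::2]

def pyr_up(img):
    # Step 1: double the size, new rows and columns are zero
    h, w = img.shape
    up = np.zeros((2 * h, 2 * w))
    up[::2, ::2] = img
    # Step 2: same kernel times 4 overall (a factor of 2 along each axis)
    return blur(up, 2 * KERNEL_1D)

img = np.full((5, 7), 10.0)
print(pyr_up(img).shape, pyr_down(img).shape, pyr_down(pyr_up(img)).shape)
```

The factor of 4 compensates for the three out of every four pixels that are zero after step 1, so a flat region keeps its brightness after upsampling.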

2. Building Pyramids

img = cv2.imread('AM.png')
cv_show(img, 'img')
print(img.shape)  # (442, 340, 3)

up = cv2.pyrUp(img)
cv_show(up, 'up')
print(up.shape)

down = cv2.pyrDown(img)
cv_show(down, 'down')
print(down.shape)

up2 = cv2.pyrUp(up)
cv_show(up2, 'up2')
print(up2.shape)  # (1768, 1360, 3)

# Upsample and then downsample, and compare with the original
up = cv2.pyrUp(img)
up_down = cv2.pyrDown(up)
cv_show(img - up_down, 'img-up_down')
cv_show(np.hstack((img, up_down)), 'up_down')

Conclusion: comparing the original image with the result of upsampling and then downsampling, the original is sharper.

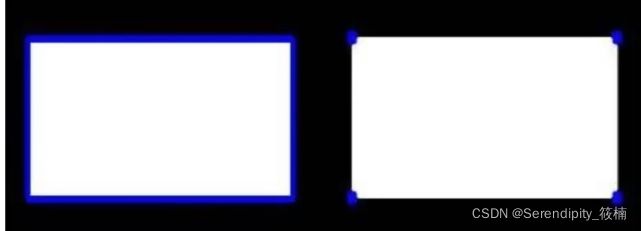

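Contour Detection

cv2.findContours(img, mode, method)

mode: contour retrieval mode

- RETR_EXTERNAL: retrieves only the outermost contours;

- RETR_LIST: retrieves all contours and stores them in a single list;

- RETR_CCOMP: retrieves all contours and organizes them into two levels: the top level holds the outer boundaries of the components, the second level holds the boundaries of the holes;

- RETR_TREE: retrieves all contours and reconstructs the full hierarchy of nested contours.

method: contour approximation method

- CHAIN_APPROX_NONE: stores all the points of each contour.

- CHAIN_APPROX_SIMPLE: compresses horizontal, vertical, and diagonal segments, keeping only their end points.

For better accuracy, use a binary image.

Original image:

Convert the image to grayscale and then to a binary image so that objects can be detected more reliably.

img = cv2.imread('car.png')
gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
ret, thresh = cv2.threshold(gray, 127, 255, cv2.THRESH_BINARY)
cv_show(thresh, 'thresh')

# OpenCV 3.x returns three values; OpenCV 4.x returns only (contours, hierarchy)
binary, contours, hierarchy = cv2.findContours(thresh, cv2.RETR_TREE, cv2.CHAIN_APPROX_NONE)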

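1. Drawing Contours:

# Arguments: image to draw on, contours, contour index (-1 draws all), color (BGR), line thickness
# Work on a copy, otherwise the original image is modified
draw_img = img.copy()
res = cv2.drawContours(draw_img, contours, -1, (0, 0, 255), 2)
cv_show(res, 'res')

# Draw only the contour at index 0
draw_img = img.copy()
res = cv2.drawContours(draw_img, contours, 0, (0, 0, 255), 2)
cv_show(res, 'res')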

2. Contour Features:

cnt = contours[0]

# Area
cv2.contourArea(cnt)

8500.5

# Perimeter; True means the contour is closed
cv2.arcLength(cnt, True)

- 9482651948929
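For a contour given as a list of vertices, area and perimeter can be computed directly. The following is a NumPy sketch using the shoelace formula and summed segment lengths; it illustrates what these features measure and is not claimed to match OpenCV's output digit for digit. The function names are hypothetical.

```python
import numpy as np

def polygon_area(points):
    """Area enclosed by a closed polygon (shoelace formula)."""
    pts = np.asarray(points, dtype=float)
    x, y = pts[:, 0], pts[:, 1]
    return 0.5 * abs(np.dot(x, np.roll(y, -1)) - np.dot(y, np.roll(x, -1)))

def polygon_perimeter(points, closed=True):
    """Sum of segment lengths; `closed` adds the segment from last point to first."""
    pts = np.asarray(points, dtype=float)
    seg = np.linalg.norm(np.roll(pts, -1, axis=0) - pts, axis=1)
    return seg.sum() if closed else seg[:-1].sum()

square = [(0, 0), (10, 0), (10, 10), (0, 10)]
print(polygon_area(square))
print(polygon_perimeter(square, closed=True))
print(polygon_perimeter(square, closed=False))
```

To try this on a contour from `cv2.findContours`, which has shape (N, 1, 2), reshape it first with `cnt.reshape(-1, 2)`.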

3. Contour Approximation

img = cv2.imread('contours2.png')
gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
ret, thresh = cv2.threshold(gray, 127, 255, cv2.THRESH_BINARY)
binary, contours, hierarchy = cv2.findContours(thresh, cv2.RETR_TREE, cv2.CHAIN_APPROX_NONE)
cnt = contours[0]

draw_img = img.copy()
res = cv2.drawContours(draw_img, [cnt], -1, (0, 0, 255), 2)
cv_show(res, 'res')

# epsilon is the maximum allowed deviation, here 10% of the contour perimeter
epsilon = 0.1 * cv2.arcLength(cnt, True)
approx = cv2.approxPolyDP(cnt, epsilon, True)

draw_img = img.copy()
res = cv2.drawContours(draw_img, [approx], -1, (0, 0, 255), 2)
cv_show(res, 'res')
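The role of epsilon is easiest to see in a small implementation. Below is a plain-Python sketch of the Douglas-Peucker polyline simplification, the classic algorithm for this kind of approximation; it handles an open polyline only and is an illustration with hypothetical function names, not the code behind `cv2.approxPolyDP`.

```python
import math

def point_line_distance(p, a, b):
    """Perpendicular distance from point p to the line through a and b."""
    (px, py), (ax, ay), (bx, by) = p, a, b
    length = math.hypot(bx - ax, by - ay)
    if length == 0:
        return math.hypot(px - ax, py - ay)
    return abs((bx - ax) * (py - ay) - (by - ay) * (px - ax)) / length

def douglas_peucker(points, epsilon):
    """Drop points that deviate from the simplified line by at most epsilon."""
    if len(points) < 3:
        return list(points)
    dists = [point_line_distance(p, points[0], points[-1]) for p in points[1:-1]]
    idx = max(range(len(dists)), key=dists.__getitem__) + 1
    if dists[idx - 1] <= epsilon:
        return [points[0], points[-1]]
    # Keep the farthest point and simplify both halves recursively
    left = douglas_peucker(points[:idx + 1], epsilon)
    right = douglas_peucker(points[idx:], epsilon)
    return left[:-1] + right

path = [(0, 0), (2, 0.05), (4, 0), (4, 2), (4, 4)]
print(douglas_peucker(path, 0.1))   # small wobble removed, corner kept
print(douglas_peucker(path, 0.01))  # tighter tolerance keeps the wobble
```

A larger epsilon, such as the 10% of the perimeter used above, gives a coarser polygon; a smaller one follows the original contour more closely.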

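Image Histogram Processing

1. Template Matching Methods

- Template matching works much like convolution: the template slides over the source image starting from the origin, and at each position the difference between the template and the image region it covers is computed. OpenCV offers six ways to compute this difference. Each result is stored in a matrix, which is returned as the output. If the source image is A x B and the template is a x b, the output matrix is (A-a+1) x (B-b+1).

# Template matching
img = cv2.imread('lena.jpg', 0)
template = cv2.imread('face.jpg', 0)
h, w = template.shape[:2]

img.shape  # (263, 263)

template.shape  # (110, 85)

- TM_SQDIFF: squared difference; the smaller the value, the better the match

- TM_CCORR: cross-correlation; the larger the value, the better the match

- TM_CCOEFF: correlation coefficient; the larger the value, the better the match

- TM_SQDIFF_NORMED: normalized squared difference; the closer the value is to 0, the better the match

- TM_CCORR_NORMED: normalized cross-correlation; the closer the value is to 1, the better the match

- TM_CCOEFF_NORMED: normalized correlation coefficient; the closer the value is to 1, the better the match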
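The sliding-window idea and the output size can be checked with a brute-force NumPy sketch of the squared-difference measure. It is written for clarity, not speed, uses a synthetic image, and `match_sqdiff` is a hypothetical helper, not an OpenCV function.

```python
import numpy as np

def match_sqdiff(img, templ):
    """Sum of squared differences between templ and every window of img."""
    H, W = img.shape
    h, w = templ.shape
    res = np.empty((H - h + 1, W - w + 1))
    for y in range(res.shape[0]):
        for x in range(res.shape[1]):
            diff = img[y:y + h, x:x + w].astype(float) - templ
            res[y, x] = np.sum(diff ** 2)
    return res

img = np.arange(42).reshape(6, 7)  # synthetic 6x7 "image"
templ = img[2:4, 3:6]              # 2x3 template cut out at row 2, column 3

res = match_sqdiff(img, templ)
best = np.unravel_index(np.argmin(res), res.shape)
print(res.shape)  # (6-2+1, 7-3+1)
print(best)       # (row, column) of the best match
```

For the images in this article the same rule gives a result of (263-110+1) x (263-85+1) = 154 x 179. Note that `cv2.minMaxLoc` reports locations as (x, y), whereas the NumPy index here is (row, column).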

2. Matching Results

- Matching a single object

# The six methods listed above, as strings so they can be passed to eval()
methods = ['cv2.TM_CCOEFF', 'cv2.TM_CCOEFF_NORMED', 'cv2.TM_CCORR',
           'cv2.TM_CCORR_NORMED', 'cv2.TM_SQDIFF', 'cv2.TM_SQDIFF_NORMED']

for meth in methods:
    img2 = img.copy()

    # Resolve the method name to its numeric value
    method = eval(meth)
    print(method)
    res = cv2.matchTemplate(img, template, method)
    min_val, max_val, min_loc, max_loc = cv2.minMaxLoc(res)

    # For TM_SQDIFF and TM_SQDIFF_NORMED the best match is the minimum
    if method in [cv2.TM_SQDIFF, cv2.TM_SQDIFF_NORMED]:
        top_left = min_loc
    else:
        top_left = max_loc
    bottom_right = (top_left[0] + w, top_left[1] + h)

    # Draw the rectangle around the match
    cv2.rectangle(img2, top_left, bottom_right, 255, 2)

    plt.subplot(121), plt.imshow(res, cmap='gray')
    plt.xticks([]), plt.yticks([])  # hide the axes
    plt.subplot(122), plt.imshow(img2, cmap='gray')
    plt.xticks([]), plt.yticks([])
    plt.suptitle(meth)
    plt.show()

- Matching multiple objects

img_rgb = cv2.imread('mario.jpg')
img_gray = cv2.cvtColor(img_rgb, cv2.COLOR_BGR2GRAY)
template = cv2.imread('mario_coin.jpg', 0)
h, w = template.shape[:2]

res = cv2.matchTemplate(img_gray, template, cv2.TM_CCOEFF_NORMED)
threshold = 0.8

# Take the coordinates where the match score is at least 80%
loc = np.where(res >= threshold)

for pt in zip(*loc[::-1]):  # reverse to (x, y) order; * unpacks the two index arrays
    bottom_right = (pt[0] + w, pt[1] + h)
    cv2.rectangle(img_rgb, pt, bottom_right, (0, 0, 255), 2)

cv2.imshow('img_rgb', img_rgb)
cv2.waitKey(0)

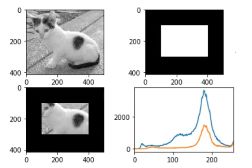

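3. Histogram Definition

cv2.calcHist(images, channels, mask, histSize, ranges)

- images: the source image, of type uint8 or float32. It must be wrapped in square brackets when passed in, e.g. [img].

- channels: also wrapped in square brackets; it tells the function which channel to compute the histogram for. For a grayscale image the value is [0]; for a color image it can be [0], [1], or [2], corresponding to B, G, and R.

- mask: the mask image. To compute the histogram of the whole image, set it to None. To compute the histogram of only part of the image, create a mask image and pass it in.

- histSize: the number of bins, also wrapped in square brackets.

- ranges: the range of pixel values, usually [0, 256].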

img = cv2.imread('cat.jpg', 0)  # 0 means read as grayscale
hist = cv2.calcHist([img], [0], None, [256], [0, 256])
hist.shape  # (256, 1)

plt.hist(img.ravel(), 256)
plt.show()

# One histogram per color channel
img = cv2.imread('cat.jpg')
color = ('b', 'g', 'r')
for i, col in enumerate(color):
    histr = cv2.calcHist([img], [i], None, [256], [0, 256])
    plt.plot(histr, color=col)
    plt.xlim([0, 256])
plt.show()

# Create the mask
mask = np.zeros(img.shape[:2], np.uint8)
mask[100:300, 100:400] = 255
cv_show(mask, 'mask')

img = cv2.imread('cat.jpg', 0)
cv_show(img, 'img')

masked_img = cv2.bitwise_and(img, img, mask=mask)  # bitwise AND
cv_show(masked_img, 'masked_img')

hist_full = cv2.calcHist([img], [0], None, [256], [0, 256])
hist_mask = cv2.calcHist([img], [0], mask, [256], [0, 256])

plt.subplot(221), plt.imshow(img, 'gray')
plt.subplot(222), plt.imshow(mask, 'gray')
plt.subplot(223), plt.imshow(masked_img, 'gray')
plt.subplot(224), plt.plot(hist_full), plt.plot(hist_mask)
plt.xlim([0, 256])
plt.show()
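What the mask does to the histogram can be verified with a small NumPy sketch on a synthetic image. The `calc_hist` helper is hypothetical and returns a flat array of integer counts, whereas the article shows `cv2.calcHist` returning an array of shape (256, 1).

```python
import numpy as np

def calc_hist(img, mask=None, bins=256):
    """Count how many pixels take each value, optionally only where mask > 0."""
    values = img.ravel() if mask is None else img[mask > 0]
    return np.bincount(values, minlength=bins)

# Synthetic 20x30 grayscale image whose values cycle through 0..255
img = (np.arange(20 * 30) % 256).astype(np.uint8).reshape(20, 30)

mask = np.zeros(img.shape, np.uint8)
mask[5:15, 10:20] = 255  # select a 10x10 region

hist_full = calc_hist(img)
hist_mask = calc_hist(img, mask)

print(hist_full.sum())  # every pixel is counted once
print(hist_mask.sum())  # only pixels inside the mask are counted
```

The masked histogram sums to the number of selected pixels, which is why its curve sits below the full histogram in the last subplot.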