PyTorch: Checking a Model's Parameter Count and Computational Cost

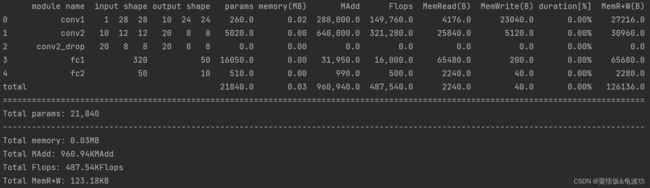

1. torchstat (`stat`)

Drawback: it only supports single-input models.

- Example

import torch.nn as nn
import torch.nn.functional as F
from torchstat import stat


class Net(nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        self.conv1 = nn.Conv2d(1, 10, kernel_size=5)
        self.conv2 = nn.Conv2d(10, 20, kernel_size=5)
        self.conv2_drop = nn.Dropout2d()
        self.fc1 = nn.Linear(320, 50)
        self.fc2 = nn.Linear(50, 10)

    def forward(self, x):
        x = F.relu(F.max_pool2d(self.conv1(x), 2))
        x = F.relu(F.max_pool2d(self.conv2_drop(self.conv2(x)), 2))
        # Flatten to (batch, 20 * 4 * 4) before the fully connected layers
        x = x.view(-1, 320)
        x = F.relu(self.fc1(x))
        x = F.dropout(x, training=self.training)
        x = self.fc2(x)
        return F.log_softmax(x, dim=1)


model = Net()

# Input size is [C, H, W]; the batch dimension is not needed
stat(model, (1, 28, 28))

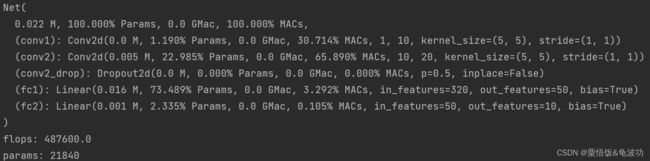
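As a sanity check on the parameter count a tool reports, the total can be worked out by hand from the layer shapes. The sketch below is plain Python and does not call torchstat; the helper names `conv2d_params` and `linear_params` are made up for illustration, and it assumes every layer has a bias term (the default for `nn.Conv2d` and `nn.Linear`).

```python
def conv2d_params(in_ch, out_ch, kernel):
    # One kernel of size in_ch * k * k per output channel, plus one bias each
    return out_ch * (in_ch * kernel * kernel + 1)


def linear_params(in_features, out_features):
    # One weight per input feature for each output, plus one bias each
    return out_features * (in_features + 1)


# Layer shapes taken from the Net example above
per_layer = {
    "conv1": conv2d_params(1, 10, 5),
    "conv2": conv2d_params(10, 20, 5),
    "fc1": linear_params(320, 50),
    "fc2": linear_params(50, 10),
}
total_params = sum(per_layer.values())

for name, count in per_layer.items():
    print(f"{name}: {count}")
print("total:", total_params)  # total: 21840
```

Dropout and pooling layers have no learnable parameters, so they do not appear in the sum.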

2. thop

Supports multi-input networks.

- Example

import torch
import torch.nn as nn
import torch.nn.functional as F
import thop


class Net(nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        self.conv1 = nn.Conv2d(1, 10, kernel_size=5)
        self.conv2 = nn.Conv2d(10, 20, kernel_size=5)
        self.conv2_drop = nn.Dropout2d()
        self.fc1 = nn.Linear(320, 50)
        self.fc2 = nn.Linear(50, 10)

    def forward(self, x):
        x = F.relu(F.max_pool2d(self.conv1(x), 2))
        x = F.relu(F.max_pool2d(self.conv2_drop(self.conv2(x)), 2))
        x = x.view(-1, 320)
        x = F.relu(self.fc1(x))
        x = F.dropout(x, training=self.training)
        x = self.fc2(x)
        return F.log_softmax(x, dim=1)


model = Net()

# thop needs a real input tensor, including the batch dimension: [B, C, H, W]
x = torch.randn((1, 1, 28, 28))

# Note: the thop README names the first return value `macs`
# (multiply-accumulate operations); it is called `flops` here for brevity.
flops, params = thop.profile(model, inputs=(x, ))

# For a multi-input model, pass every input in the tuple:
# flops, params = thop.profile(model, inputs=(x, y, z))

# Convert the raw numbers to human-readable strings
flops, params = thop.clever_format([flops, params], '%.3f')

print('flops:', flops)
print('params:', params)

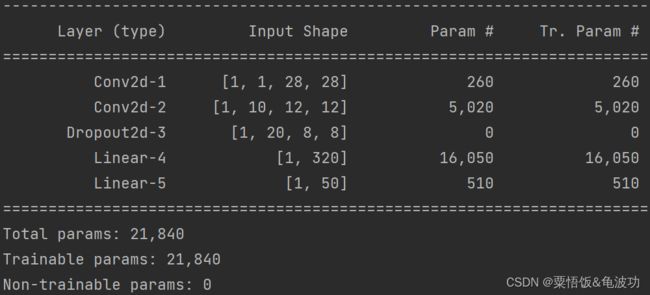
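To get a feel for where the computation figure comes from, here is a rough hand calculation of the multiply-accumulate operations (MACs) in the convolution and linear layers of the Net example. It is only a sketch: the helper names are hypothetical, bias additions, pooling, activations, and dropout are ignored, and each tool follows its own counting conventions, so a tool's reported figure may differ somewhat from this estimate.

```python
def conv_out(size, kernel, stride=1, padding=0):
    # Output height/width of a convolution along one spatial dimension
    return (size + 2 * padding - kernel) // stride + 1


def conv2d_macs(in_ch, out_ch, kernel, out_h, out_w):
    # Each output element needs in_ch * k * k multiply-accumulates
    return out_ch * out_h * out_w * in_ch * kernel * kernel


def linear_macs(in_features, out_features):
    # One multiply-accumulate per weight
    return in_features * out_features


size = 28                                   # input is 1 x 28 x 28
size = conv_out(size, 5)                    # conv1 -> 24 x 24
conv1_macs = conv2d_macs(1, 10, 5, size, size)
size //= 2                                  # max_pool2d(2) -> 12 x 12
size = conv_out(size, 5)                    # conv2 -> 8 x 8
conv2_macs = conv2d_macs(10, 20, 5, size, size)
size //= 2                                  # max_pool2d(2) -> 4 x 4
flat_features = 20 * size * size            # matches x.view(-1, 320)

fc1_macs = linear_macs(flat_features, 50)
fc2_macs = linear_macs(50, 10)
total_macs = conv1_macs + conv2_macs + fc1_macs + fc2_macs

print("conv1:", conv1_macs)   # conv1: 144000
print("conv2:", conv2_macs)   # conv2: 320000
print("fc1:", fc1_macs)       # fc1: 16000
print("fc2:", fc2_macs)       # fc2: 500
print("total MACs:", total_macs)  # total MACs: 480500
```

When FLOPs are defined as two operations per MAC (one multiply, one add), the FLOP estimate is roughly twice the MAC total.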

3. ptflops

I have not tested whether it supports multiple inputs.

- Example

import torch
import torch.nn as nn
import torch.nn.functional as F
from ptflops import get_model_complexity_info


class Net(nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        self.conv1 = nn.Conv2d(1, 10, kernel_size=5)
        self.conv2 = nn.Conv2d(10, 20, kernel_size=5)
        self.conv2_drop = nn.Dropout2d()
        self.fc1 = nn.Linear(320, 50)
        self.fc2 = nn.Linear(50, 10)

    def forward(self, x):
        x = F.relu(F.max_pool2d(self.conv1(x), 2))
        x = F.relu(F.max_pool2d(self.conv2_drop(self.conv2(x)), 2))
        x = x.view(-1, 320)
        x = F.relu(self.fc1(x))
        x = F.dropout(x, training=self.training)
        x = self.fc2(x)
        return F.log_softmax(x, dim=1)


model = Net()

# Input size is [C, H, W], without the batch dimension.
# as_strings=False returns raw numbers instead of formatted strings.
# Note: the ptflops README names the first return value `macs`.
flops, params = get_model_complexity_info(model, (1, 28, 28), as_strings=False, print_per_layer_stat=True)

print('flops:', flops)
print('params:', params)

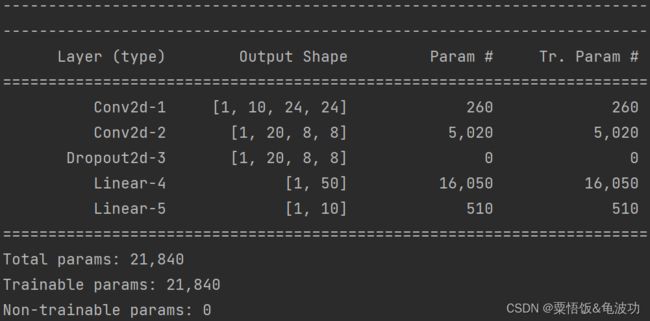

4. pytorch_model_summary

Drawback: it only reports the parameter count, not the computational cost.

- Example

import torch
import torch.nn as nn
import torch.nn.functional as F
from pytorch_model_summary import summary


class Net(nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        self.conv1 = nn.Conv2d(1, 10, kernel_size=5)
        self.conv2 = nn.Conv2d(10, 20, kernel_size=5)
        self.conv2_drop = nn.Dropout2d()
        self.fc1 = nn.Linear(320, 50)
        self.fc2 = nn.Linear(50, 10)

    def forward(self, x):
        x = F.relu(F.max_pool2d(self.conv1(x), 2))
        x = F.relu(F.max_pool2d(self.conv2_drop(self.conv2(x)), 2))
        x = x.view(-1, 320)
        x = F.relu(self.fc1(x))
        x = F.dropout(x, training=self.training)
        x = self.fc2(x)
        return F.log_softmax(x, dim=1)


# show input shape
print(summary(Net(), torch.zeros((1, 1, 28, 28)), show_input=True))

# show output shape
print(summary(Net(), torch.zeros((1, 1, 28, 28)), show_input=False))

# show output shape and hierarchical view of net
print(summary(Net(), torch.zeros((1, 1, 28, 28)), show_input=False, show_hierarchical=True))
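If only the parameter count is needed, plain PyTorch is enough and no extra package has to be installed. The sketch below is not part of any of the libraries above: `count_parameters` is a hypothetical helper, and the `nn.ModuleList` is a stand-in that holds the same learnable layers as `Net` (dropout is omitted because it has no parameters).

```python
import torch.nn as nn


def count_parameters(module, trainable_only=True):
    # Sum the element counts of all parameter tensors in the module
    return sum(
        p.numel()
        for p in module.parameters()
        if p.requires_grad or not trainable_only
    )


# Stand-in container with the same learnable layers as the Net example
layers = nn.ModuleList([
    nn.Conv2d(1, 10, kernel_size=5),
    nn.Conv2d(10, 20, kernel_size=5),
    nn.Linear(320, 50),
    nn.Linear(50, 10),
])

total = count_parameters(layers)
print("params:", total)  # params: 21840
```

The same helper works on any `nn.Module`, for example `count_parameters(Net())`.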