史上最详细Lipreading using Temporal Convolutional Networks(MS-TCN)代码层面详解

本文将从代码层面详细介绍在LRW数据集实现SOTA效果的唇语识别模型MS-TCN。GitHub代码请看Lipreading using Temporal Convolutional Networks,环境配置请看史上最详细 Lipreading using Temporal Convolutional Networks 环境配置,使用的数据集请看史上最详细唇语识别数据集综述和史上最详细LRW数据集、LRW-1000数据集、LRS2数据集和OuluVS2数据集介绍。

文章内容将持续更新,欢迎在评论区或私信讨论

文章目录

- 1 Frontend

-

- 1.1 3DConv

- 1.2 2DConv

-

- 1.2.0 3D_to_2D

- 1.2.1 ResNet18

- 2 Backend

-

- 2.0 Reshape Feature

- 2.1 MS-TCN

-

- 2.1.0 Feature_to_1D

- 2.1.1 Overall

- 2.1.2 MultibranchTemporalBlock

- 2.1.3 ConvBatchChompRelu

- 2.1.4 Chomp1d

- 2.2 Prediction

下面开始正文,本文将以一个mini batch作为模型输入进行讲解,输入尺寸为B×1×29×88×88 (batch size, color channel-gray scale, sequence length, width, height)。使用的模型为3D Conv+ResNet18+MS-TCN的组合。

1 Frontend

1.1 3DConv

输入为尺寸B×1×29×88×88,经

nn.Conv3d(1, self.frontend_nout, kernel_size=(5, 7, 7), stride=(1, 2, 2), padding=(2, 3, 3), bias=False)

得到输出尺寸B×24×29×44×44,经

nn.BatchNorm3d(self.frontend_nout)

nn.PReLU(num_parameters=self.frontend_nout)

得到输出尺寸B×24×29×44×44,经

nn.MaxPool3d(kernel_size=(1, 3, 3), stride=(1, 2, 2), padding=(0, 1, 1))

得到输出尺寸B×24×29×22×22。至此,得到第一层3D卷积输出的特征图。

1.2 2DConv

1.2.0 3D_to_2D

1.1最终的特征图尺寸为B×24×29×22×22

使用此函数

def threeD_to_2D_tensor(x):

n_batch, n_channels, s_time, sx, sy = x.shape

x = x.transpose(1, 2)

return x.reshape(n_batch * s_time, n_channels, sx, sy)

首先转换第1和第2维,得到B×29×24×44×44

再将前两维合并,得到(B×29)×24×44×44,即最后三维表示一帧,共B×29帧

1.2.1 ResNet18

1.2.0将视频转为图片,尺寸为(B×29)×24×44×44,对此使用ResNet18。ResNet不是本文重点,因此只给出ResNet18的输入输出格式。即输入为24×44×44大小的图片,输出为512的特征。故(B×29)×24×44×44作为输入,得到(B×29)×512的特征。

2 Backend

2.0 Reshape Feature

前端网络最终输出为(B×29)×512的向量,使用

x = x.view(B, Tnew, x.size(1))

将其恢复为B×29×512

2.1 MS-TCN

2.1.0 Feature_to_1D

TCN基于一维卷积,需将长度这一维移到最后,输入B×29×512,通过

xtrans = x.transpose(1, 2)

得到B×512×29

2.1.1 Overall

之后使用此部分的代码构造4层,三分支,感受域3,5,7的MS-TCN整体模型

layers = []

num_levels = 4

for i in range(num_levels):

dilation_size = 2 ** i

in_channels = 512 if i == 0 else 768

out_channels = 768

padding = [(s - 1) * dilation_size for s in [3, 5, 7]]

layers.append(

MultibranchTemporalBlock(

in_channels, out_channels, self.ksizes,

stride=1, dilation=dilation_size, padding=padding,

dropout=dropout, relu_type=relu_type, dwpw=dwpw

)

)

self.network = nn.Sequential(*layers)

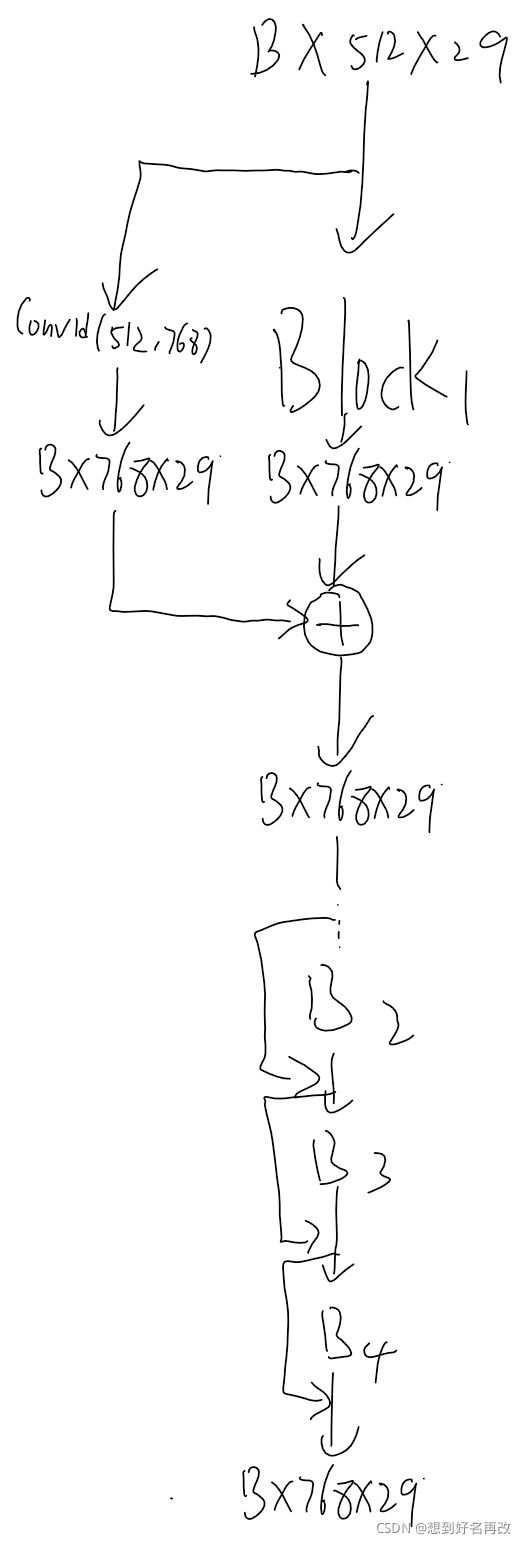

下图为MS-TCN的整体缩略图,上述代码共制作了4个MultibranchTemporalBlock。

2.1.2 MultibranchTemporalBlock

Block的类代码为

class MultibranchTemporalBlock(nn.Module):

def __init__(self, n_inputs, n_outputs, kernel_sizes, stride, dilation, padding, dropout=0.2,

relu_type='relu', dwpw=False):

super(MultibranchTemporalBlock, self).__init__()

self.kernel_sizes = kernel_sizes

self.num_kernels = len(kernel_sizes)

self.n_outputs_branch = n_outputs // self.num_kernels

assert n_outputs % self.num_kernels == 0, "Number of output channels needs to be divisible by number of kernels"

for k_idx, k in enumerate(self.kernel_sizes):# 构建第一层Sub-Block

cbcr = ConvBatchChompRelu(n_inputs, self.n_outputs_branch, k, stride, dilation, padding[k_idx], relu_type,

dwpw=dwpw)

setattr(self, 'cbcr0_{}'.format(k_idx), cbcr)

self.dropout0 = nn.Dropout(dropout)

for k_idx, k in enumerate(self.kernel_sizes):# 构建第二层Sub-Block

cbcr = ConvBatchChompRelu(n_outputs, self.n_outputs_branch, k, stride, dilation, padding[k_idx], relu_type,

dwpw=dwpw)

setattr(self, 'cbcr1_{}'.format(k_idx), cbcr)

self.dropout1 = nn.Dropout(dropout)

# downsample?

self.downsample = nn.Conv1d(n_inputs, n_outputs, 1) if (n_inputs // self.num_kernels) != n_outputs else None

# (n_inputs // self.num_kernels) != n_outputs 一直为True,故self.downsample = nn.Conv1d(n_inputs, n_outputs, 1)

# final relu

if relu_type == 'relu':

self.relu_final = nn.ReLU()

elif relu_type == 'prelu':

self.relu_final = nn.PReLU(num_parameters=n_outputs)

def forward(self, x):

# first multi-branch set of convolutions

outputs = []

for k_idx in range(self.num_kernels):

branch_convs = getattr(self, 'cbcr0_{}'.format(k_idx))

outputs.append(branch_convs(x))

out0 = torch.cat(outputs, 1)

out0 = self.dropout0(out0)

# second multi-branch set of convolutions

outputs = []

for k_idx in range(self.num_kernels):

branch_convs = getattr(self, 'cbcr1_{}'.format(k_idx))

outputs.append(branch_convs(out0))

out1 = torch.cat(outputs, 1)

out1 = self.dropout1(out1)

# downsample?

res = x if self.downsample is None else self.downsample(x)

return self.relu_final(out1 + res)

下图为一个Block1和Block234的图解

2.1.3 ConvBatchChompRelu

下面是每一个分支的类代码

class ConvBatchChompRelu(nn.Module):

def __init__(self, n_inputs, n_outputs, kernel_size, stride, dilation, padding, relu_type, dwpw=False):

super(ConvBatchChompRelu, self).__init__()

self.dwpw = dwpw

if dwpw:

self.conv = nn.Sequential(

# -- dw

nn.Conv1d(n_inputs, n_inputs, kernel_size, stride=stride,

padding=padding, dilation=dilation, groups=n_inputs, bias=False),

nn.BatchNorm1d(n_inputs),

Chomp1d(padding, True),

nn.PReLU(num_parameters=n_inputs) if relu_type == 'prelu' else nn.ReLU(inplace=True),

# -- pw

nn.Conv1d(n_inputs, n_outputs, 1, 1, 0, bias=False),

nn.BatchNorm1d(n_outputs),

nn.PReLU(num_parameters=n_outputs) if relu_type == 'prelu' else nn.ReLU(inplace=True)

)

else:

self.conv = nn.Conv1d(n_inputs, n_outputs, kernel_size,

stride=stride, padding=padding, dilation=dilation)

self.batchnorm = nn.BatchNorm1d(n_outputs)

self.chomp = Chomp1d(padding, True)

self.non_lin = nn.PReLU(num_parameters=n_outputs) if relu_type == 'prelu' else nn.ReLU()

下图为Block1的第一个Sub-Block,dwpw=True的图解,省略BN和ReLU。

2.1.4 Chomp1d

Chomp1d的代码如下

class Chomp1d(nn.Module):

def __init__(self, chomp_size, symm_chomp):

super(Chomp1d, self).__init__()

self.chomp_size = chomp_size

self.symm_chomp = symm_chomp

if self.symm_chomp:

assert self.chomp_size % 2 == 0, "If symmetric chomp, chomp size needs to be even"

def forward(self, x):

if self.chomp_size == 0:

return x

if self.symm_chomp:# 从中间截取

return x[:, :, self.chomp_size // 2:-self.chomp_size // 2].contiguous()

else:# 从最左侧截取

return x[:, :, :-self.chomp_size].contiguous()

使用改代码消除因感受域不同造成的分支间长度不统一。具体操作为如得到的长度为29+2,则截取中间1到29,共29个元素来统一尺寸。

2.2 Prediction

在2.1中,MS-TCN输出为B×768×29的张量,之后,使用此函数

def _average_batch(x, lengths, B):

return torch.stack([torch.mean(x[index][:, 0:i], 1) for index, i in enumerate(lengths)], 0)

在长度维度取特征的平均值,得到B×768的张量。之后使用

self.tcn_output = nn.Linear(num_channels[-1], num_classes)

将输出调整为B×500的张量,即500分类的预测概率