Doris Series: Creating Tables

Doris Series


Today's post in the Doris series covers table creation.


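Table of Contents

- Doris Series

- Preface

- I. Doris Table Creation: Basic Concepts

- II. Usage Steps

- 1. Doris Table Creation: Single Partition, Multiple Buckets, SUM Pre-aggregation

- 2. Doris Table Creation: Single Partition, Multiple Buckets, REPLACE Pre-aggregation

- 3. Doris Table Creation: Multiple Partitions, Multiple Buckets, SUM Pre-aggregation

- 4. Doris Table Creation: Data Import with Broker Load

- Summary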

Preface

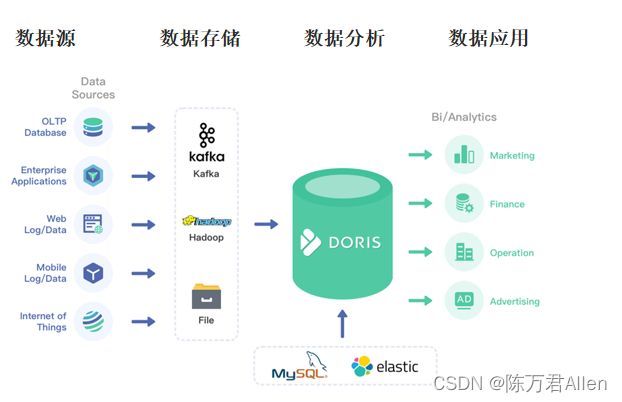

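- Apache Doris is a modern analytical database built on MPP (massively parallel processing). Put simply, MPP distributes a task in parallel across multiple servers and nodes; each node computes its share, and the partial results are then combined into the final result (similar to Hadoop). Queries return with sub-second response times, which effectively supports real-time data analysis.

- Apache Doris can serve a variety of analytics needs, such as fixed historical reports, real-time analysis, interactive analysis, and exploratory analysis, making your data analysis work simpler and more efficient.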

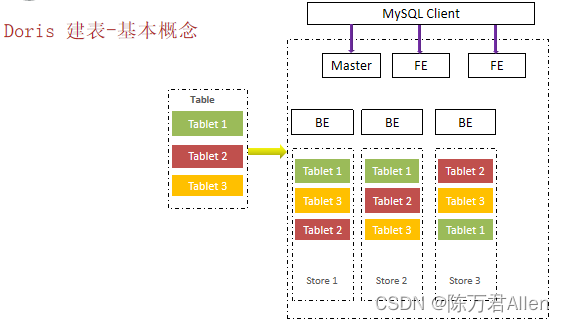

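I. Doris Table Creation: Basic Concepts

- From the table's point of view, a user's Table is split into multiple Tablets. Each Tablet is stored as multiple replicas on different BEs, which keeps the data highly available and reliable.

- Data is stored mainly on the BEs. The reliability of physical data on BE nodes comes from multiple replicas: the default is 3, and the replica count is configurable and can be adjusted dynamically at any time to meet different availability requirements. The FE schedules how replicas are distributed across BEs and repairs missing ones.

- If availability requirements are modest and resource consumption matters more, you can create the table with two replicas or a single replica.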
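To make the replica settings above concrete, here is a minimal sketch. The table `replica_demo` and its columns are hypothetical, and the property accepted by `ALTER TABLE ... SET` can vary between Doris releases, so treat this as an illustration and check it against the version you run.

```sql
-- Hypothetical table, used only to illustrate replica settings.
CREATE TABLE replica_demo
(
    id BIGINT,
    pv BIGINT SUM DEFAULT '0'
)
AGGREGATE KEY(id)
DISTRIBUTED BY HASH(id) BUCKETS 4
-- One replica: cheapest on storage, but no redundancy if a BE fails.
PROPERTIES("replication_num" = "1");

-- Later, raise the replica count of this single-partition table.
ALTER TABLE replica_demo SET ("replication_num" = "3");
```

Three replicas need at least three BE nodes, because the replicas of one Tablet are placed on different BEs.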

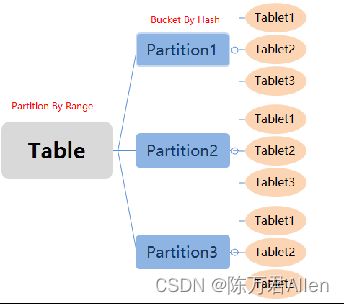

- Tablet & Partition

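1. In the Doris storage engine, user data is split horizontally into a number of data shards (Tablets, also called data buckets).

2. Each Tablet contains a number of rows. Data in different Tablets does not overlap, and each Tablet is stored physically independently.

3. Multiple Tablets logically belong to different partitions (Partitions). A Tablet belongs to exactly one Partition, while a Partition contains several Tablets. Because Tablets are stored physically independently, a Partition can be regarded as physically independent as well.

4. A Tablet is the smallest physical storage unit for operations such as data movement and replication.

5. Several Partitions make up a Table.

6. A Partition can be regarded as the smallest logical management unit. Data imports and deletions can, or can only, target a single Partition.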
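One way to see this Table / Partition / Tablet hierarchy on a running cluster is to ask Doris for it. The sketch below assumes the `table1` created in the next section already exists; statement spelling and output columns vary somewhat between Doris releases.

```sql
-- List the table's partitions with their bucket and replica counts.
-- A table created without PARTITION BY still has one partition.
SHOW PARTITIONS FROM table1;

-- List the tablets behind the table (older releases may spell this SHOW TABLET).
SHOW TABLETS FROM table1;
```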

II. Usage Steps

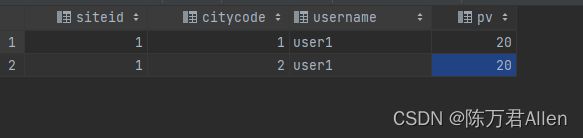

1. Doris Table Creation: Single Partition, Multiple Buckets, SUM Pre-aggregation

CREATE TABLE table1

(

siteid INT DEFAULT '10',

citycode SMALLINT,

username VARCHAR(32) DEFAULT '',

pv BIGINT SUM DEFAULT '0'

)

AGGREGATE KEY(siteid, citycode, username)

DISTRIBUTED BY HASH(siteid) BUCKETS 10

PROPERTIES("replication_num" = "1");

insert into table1 values(1,1,'user1',10);

insert into table1 values(1,1,'user1',10);

insert into table1 values(1,2,'user1',10);

insert into table1 values(1,2,'user1',10);

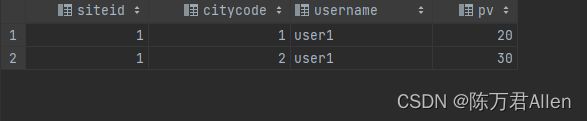
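A quick way to confirm the SUM pre-aggregation is to query the table after the four inserts. This sketch assumes `table1` holds only the rows inserted above.

```sql
SELECT siteid, citycode, username, pv
FROM table1
ORDER BY citycode;
-- Expected rows, since rows with the same key (siteid, citycode, username)
-- are merged and their pv values are summed:
-- 1, 1, 'user1', 20
-- 1, 2, 'user1', 20
```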

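2. Doris Table Creation: Single Partition, Multiple Buckets, REPLACE Pre-aggregation

This example reuses the name table1, so drop the table from step 1 first.

DROP TABLE IF EXISTS table1;

CREATE TABLE table1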

(

siteid INT DEFAULT '10',

citycode SMALLINT,

username VARCHAR(32) DEFAULT '',

pv BIGINT REPLACE DEFAULT '0'

)

AGGREGATE KEY(siteid, citycode, username)

DISTRIBUTED BY HASH(siteid) BUCKETS 10

PROPERTIES("replication_num" = "1");

insert into table1 values(1,1,'user1',10);

insert into table1 values(1,1,'user1',20);

insert into table1 values(1,2,'user1',10);

insert into table1 values(1,2,'user1',30);
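The same check works for REPLACE. This sketch assumes the REPLACE version of `table1` was created empty and the four inserts above ran one after another as separate statements.

```sql
SELECT siteid, citycode, username, pv
FROM table1
ORDER BY citycode;
-- Expected rows, since for each key the value from the later import
-- replaces the earlier one:
-- 1, 1, 'user1', 20
-- 1, 2, 'user1', 30
```

Note that the replacement order is only well defined across separate imports. Within a single import batch, which row wins for a given key is not guaranteed.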

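3. Doris Table Creation: Multiple Partitions, Multiple Buckets, SUM Pre-aggregation

Composite partitioning is recommended in the following scenarios:

- There is a time dimension, or a similar dimension with ordered values: use that column as the partition column. Choose the partition granularity based on import frequency, data volume per partition, and so on.

- Historical data needs to be deleted (for example, keeping only the most recent N days of data): with composite partitioning you can drop old partitions to achieve this. You can also delete data by sending a DELETE statement against a specific partition.

- Data skew needs to be addressed: each partition can specify its own bucket count. For example, when partitioning by day and the daily data volume varies widely, you can set the bucket count per partition so that each partition's data is divided sensibly. Choose a column with high cardinality as the bucketing column.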

CREATE TABLE table2

(

event_day DATE,

siteid INT DEFAULT '10',

citycode SMALLINT,

username VARCHAR(32) DEFAULT '',

pv BIGINT SUM DEFAULT '0'

)

AGGREGATE KEY(event_day, siteid, citycode, username)

PARTITION BY RANGE(event_day)

(

PARTITION p202106 VALUES LESS THAN ('2021-07-01'),

PARTITION p202107 VALUES LESS THAN ('2021-08-01'),

PARTITION p202108 VALUES LESS THAN ('2021-09-01')

)

DISTRIBUTED BY HASH(siteid) BUCKETS 10

PROPERTIES("replication_num" = "3");
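As an illustration of the per-partition bucket count mentioned in the scenarios above, a new partition can be added to `table2` with its own bucketing. The partition name `p202109`, its upper bound, and the bucket count 20 are illustrative values, not part of the original example.

```sql
-- Add the next month and give it more buckets than the default 10.
ALTER TABLE table2 ADD PARTITION p202109 VALUES LESS THAN ('2021-10-01')
DISTRIBUTED BY HASH(siteid) BUCKETS 20;

-- Check the resulting partitions and their bucket counts.
SHOW PARTITIONS FROM table2;
```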

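Load the data. The sample rows below are the contents of the file table2_data that the Stream Load command uploads: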

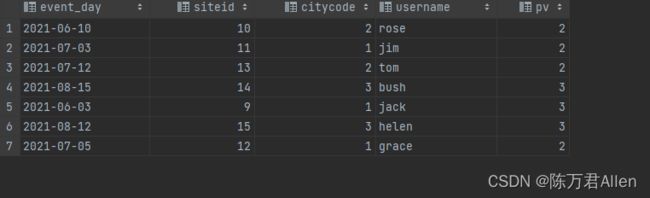

2021-06-03|9|1|jack|3

2021-06-10|10|2|rose|2

2021-07-03|11|1|jim|2

2021-07-05|12|1|grace|2

2021-07-12|13|2|tom|2

2021-08-15|14|3|bush|3

2021-08-12|15|3|helen|3

curl --location-trusted -u root:123456 -H "label:table2_20210707" -H "column_separator:|" -T table2_data http://node01:8030/api/test_db/table2/_stream_load
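Once the Stream Load returns successfully, the partitioned data can be checked and, later, cleaned up. This is a sketch that assumes all seven sample rows loaded without being filtered. Run the cleanup statements only when you no longer need the data.

```sql
-- Rows that landed in the July partition (p202107).
SELECT event_day, siteid, citycode, username, pv
FROM table2
WHERE event_day >= '2021-07-01' AND event_day < '2021-08-01'
ORDER BY event_day;
-- Expected: the three rows for jim, grace, and tom.

-- Delete one day inside a partition; the condition must use key columns.
DELETE FROM table2 PARTITION p202107 WHERE event_day = '2021-07-03';

-- Or drop a whole month of history at once.
ALTER TABLE table2 DROP PARTITION p202106;
```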

4. Doris Table Creation: Data Import with Broker Load

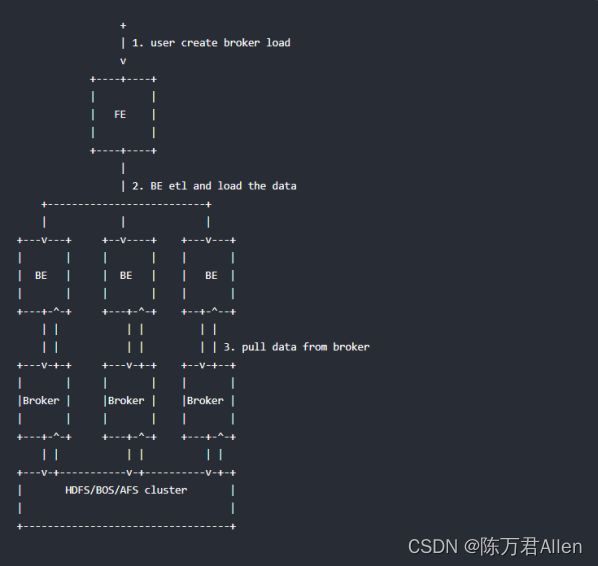

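Broker Load is an asynchronous import method. Different data sources require different broker processes to be deployed. You can list the brokers already deployed with the show broker command.

- The source data lives in a storage system the Broker can access, such as HDFS.

- The data volume is in the range of tens to hundreds of GB.

- After the user submits an import job, the FE (the metadata and scheduling node of Doris) generates the corresponding PLAN (the import execution plan, which the BEs execute to load the data into Doris). Based on the number of BEs (the compute and storage nodes of Doris) and the file sizes, it splits the PLAN across multiple BEs, and each BE imports part of the data.

- During execution, each BE pulls data from the Broker, transforms it, and loads it into the system. Once all BEs have finished, the FE makes the final decision on whether the import succeeded.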

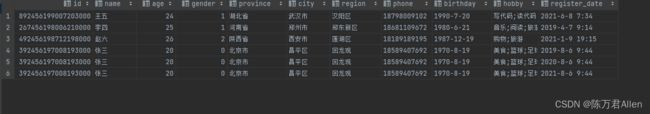

# Create the table

CREATE TABLE test_db.user_result(

id BIGINT,

name VARCHAR(50),

age INT,

gender INT,

province VARCHAR(50),

city VARCHAR(50),

region VARCHAR(50),

phone VARCHAR(50),

birthday VARCHAR(50),

hobby VARCHAR(50),

register_date VARCHAR(50)

)

DUPLICATE KEY(id)

DISTRIBUTED BY HASH(id) BUCKETS 10;

# Load data from HDFS

LOAD LABEL test_db.user_result

(

DATA INFILE("hdfs://node01:8020/datas/user.csv")

INTO TABLE `user_result`

COLUMNS TERMINATED BY ","

FORMAT AS "csv"

(id, name, age, gender, province,city,region,phone,birthday,hobby,register_date)

)

WITH BROKER broker_10_20_30

(

"dfs.nameservices" = "my_cluster",

"dfs.ha.namenodes.my_cluster" = "nn1,nn2,nn3",

"dfs.namenode.rpc-address.my_cluster.nn1" = "node01:8020",

"dfs.namenode.rpc-address.my_cluster.nn2" = "node02:8020",

"dfs.namenode.rpc-address.my_cluster.nn3" = "node03:8020",

"dfs.client.failover.proxy.provider" = "org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider"

)

PROPERTIES

(

"max_filter_ratio"="0.00002"

);
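Because Broker Load is asynchronous, the LOAD statement returning only means the job was submitted. A minimal sketch for checking the outcome, using the label from the statement above:

```sql
-- Look up the job by its label; State ends as FINISHED or CANCELLED,
-- and ErrorMsg explains a cancelled job.
SHOW LOAD FROM test_db WHERE LABEL = "user_result" ORDER BY CreateTime DESC LIMIT 1;
```

A label that has already been used by a successful import cannot be reused in the same database, so pick a new label (for example, one with a date suffix) when re-running the import.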

Summary

That's all for today. This post gave only a brief introduction to table creation in Doris; later posts in the series will cover other Doris features.