FCN源码解读之infer.py

转载自 https://blog.csdn.net/qq_21368481/article/details/80286809

infer.py是FCN中用于测试的python文件,每次可以单独测试一张图片在训练好的模型下的分割效果(直观上的以图片形式展示的分割效果)。

其源码如下:

-

import numpy

as np

-

from PIL

import Image

-

-

import caffe

-

import vis

-

-

# the demo image is "2007_000129" from PASCAL VOC

-

-

# load image, switch to BGR, subtract mean, and make dims C x H x W for Caffe

-

im = Image.open(

'demo/image.jpg')

-

in_ = np.array(im, dtype=np.float32)

-

in_ = in_[:,:,::

-1]

-

in_ -= np.array((

104.00698793,

116.66876762,

122.67891434))

-

in_ = in_.transpose((

2,

0,

1))

-

-

# load net

-

net = caffe.Net(

'voc-fcn8s/deploy.prototxt',

'voc-fcn8s/fcn8s-heavy-pascal.caffemodel', caffe.TEST)

-

# shape for input (data blob is N x C x H x W), set data

-

net.blobs[

'data'].reshape(

1, *in_.shape)

-

net.blobs[

'data'].data[...] = in_

-

# run net and take argmax for prediction

-

net.forward()

-

out = net.blobs[

'score'].data[

0].argmax(axis=

0)

-

-

# visualize segmentation in PASCAL VOC colors

-

voc_palette = vis.make_palette(

21)

-

out_im = Image.fromarray(vis.color_seg(out, voc_palette))

-

out_im.save(

'demo/output.png')

-

masked_im = Image.fromarray(vis.vis_seg(im, out, voc_palette))

-

masked_im.save(

'demo/visualization.jpg')

源码解读如下:

-

#coding=utf-8

-

import numpy

as np

-

from PIL

import Image

-

-

import caffe

-

import vis

#即vis.py文件

-

-

# the demo image is "2007_000129" from PASCAL VOC

-

-

# load image, switch to BGR, subtract mean, and make dims C x H x W for Caffe

-

im = Image.open(

'demo/image.jpg')

#可以自行替换相应的测试图片名

-

"""

-

以下部分和voc_layers.py中一样,也可参见https://blog.csdn.net/qq_21368481/article/details/80246028

-

- cast to float 转换为float型

-

- switch channels RGB -> BGR 交换通道位置,即R通道和B通道交换(感觉是用了opencv库的原因)

-

- subtract mean 减去均值

-

- transpose to channel x height x width order 将通道数放在前面(对应caffe数据存储的格式)

-

"""

-

in_ = np.array(im, dtype=np.float32)

-

in_ = in_[:,:,::

-1]

-

in_ -= np.array((

104.00698793,

116.66876762,

122.67891434))

-

in_ = in_.transpose((

2,

0,

1))

-

-

# load net 加载训练好的caffemodel

-

# deploy.prototxt为网络的构造文件;.caffemodel是训练好的模型文件(即参数信息);网络模式为test模式

-

net = caffe.Net(

'voc-fcn8s/deploy.prototxt',

'voc-fcn8s/fcn8s-heavy-pascal.caffemodel', caffe.TEST)

-

# shape for input (data blob is N x C x H x W), set data

-

net.blobs[

'data'].reshape(

1, *in_.shape)

#数字1表示测试图片为一张,即这里的第一维N=1

-

net.blobs[

'data'].data[...] = in_

-

# run net and take argmax for prediction

-

net.forward()

#前向计算

-

# 计算score层每一像素点的归属(像素归属于得分最高的那类)

-

out = net.blobs[

'score'].data[

0].argmax(axis=

0)

#

这里的axis=0表示第一维,而data[0]的大小表示C*H*W,也即argmax(axis=0)表示按C这一维进行取最大值,具体理解可看下面例子(注:caffe中的blob中的data是按N*C*H*W的数组存储的,即data[N][C][H][W],如果输入一张彩色图像大小为500*500,则data中的最后一个元素为data[0][2][499][499]):

-

import numpy

as np

-

a = np.array([[[

1,

2,

3],[

4,

5,

6]],[[

7,

8,

9],[

10,

11,

12]],[[

13,

14,

15],[

16,

17,

18]]])

-

b=np.max(a,axis=

0)

-

print(str(a))

-

print(str(b))

运行结果为:

-

[[[

1

2

3]

-

[

4

5

6]]

-

-

[[

7

8

9]

-

[

10

11

12]]

-

-

[[

13

14

15]

-

[

16

17

18]]]

-

[[

13

14

15]

-

[

16

17

18]]

从结果从可以看出b的维数为a的后两维,即2*3(a的维数为3*2*3),即np.max(a,axis=0)表示按a的第一维进行取最大值操作,如果修改为b=np.max(a,axis=1),则运行结果为:

-

[[[

1

2

3]

-

[

4

5

6]]

-

-

[[

7

8

9]

-

[

10

11

12]]

-

-

[[

13

14

15]

-

[

16

17

18]]]

-

[[

4

5

6]

-

[

10

11

12]

-

[

16

17

18]]

从结果中看出b的维数为3*3,即是按a的第二维进行取最大值操作,如果再修改为b=np.max(a,axis=2),则是按a的第三维进行取最大值操作,即b的维数应为3*2,结果如下:

-

[[[

1

2

3]

-

[

4

5

6]]

-

-

[[

7

8

9]

-

[

10

11

12]]

-

-

[[

13

14

15]

-

[

16

17

18]]]

-

[[

3

6]

-

[

9

12]

-

[

15

18]]

继续分析剩余代码如下

-

# visualize segmentation in PASCAL VOC colors 调用vis.py中的函数可视化分割结果

-

voc_palette = vis.make_palette(

21)

-

out_im = Image.fromarray(vis.color_seg(out, voc_palette))

-

out_im.save(

'demo/output.png')

#保存分割好的图像

-

masked_im = Image.fromarray(vis.vis_seg(im, out, voc_palette))

-

masked_im.save(

'demo/visualization.jpg')

#保存含有分割掩膜的原图

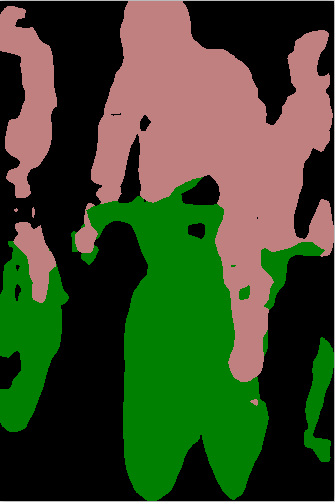

直观说明一下分割好的图像(左)与含有分割掩膜的原图(右)之间的区别,如下图所示: