视觉学习笔记7——ZED2安装SDK,并用于ORB-SLAM3

系列文章目录

ORB-SLAM3安装配置

文章目录

- 系列文章目录

- 前言

- 一、硬件选择

- 二、ZED SDK

-

- 1、依赖与安装

-

- 执行命令:

- 安装时选项:

- 验证测试:

- 2、报错问题

-

- 报错1:未安装PythonAPI

- 报错2:找不到 libturbojpeg.so.0文件

- 三、ROS调用ZED2

-

- 1、编译ZED的工作空间

-

- 报错1:多cuda问题

- 报错2:imu包缺失

- 报错3:源更新问题

- 报错4:USB3.0问题

- 四、调用orb slam3+zed2

-

- 1、完善配置

-

- 1. 创建相关.yaml和.cc文件

- 2. 生成类似Mono的可执行文件

- 3. 重新搭建软链接

- 4. 重新编译

- 2、测试

- 3、报错

- 五、跑图

前言

经过实践发现硬件这个坑太大了,普通相机和专业相机在视觉惯性导航的差距果然不是简单解决掉的,最终还是回到拼钞能力的地步。

系统版本与ROS版本:Ubuntu18.04、Melodic

也算是把网上所有相关博客都看了一遍,提炼出来了精华部分做此总结,再次感谢各位前辈的肩膀~~

至于可能编译过程会出现一些问题,基本上就是缺少依赖包,注意看error信息,还有可能是Cmakelist文件的问题,根据报错信息做相应修改就行。

学会自己百度,授人予鱼,不如授人予渔,要学会自己解决问题,我不可能教会所有和解决所有,别总跟我抱怨什么问题卡住解决不了,我已经把饭送到你面前了,不会还要我嚼碎喂你吧,别让我去守护你的家人好吧。。。

一、硬件选择

经前期调研及摄像头参数对比,最终选择STEREOLABS公司的ZED2双目立体摄像头作为slam平台

具体参数

推荐原因

1.空间目标检测

根据空间环境检测和跟踪对象。通过结合AI和3D,ZED 2可以定位空间中的对象,并提供创建下一代空间感知的工具。

2.全铝外壳

ZED 2配备了更坚固的全铝外壳,带有热控制,可补偿焦距和运动传感器偏差。

3.相机控制

ZED 2 是一个UVC视频相机,可以对其进行低级访问。它支持对所有相机参数的控制,如曝光、增益、锐度等。

4.神经深度感知

ZED2是款使用神经网络再现人类视觉的立体相机,将立体感知提升到了一个新的水平。

5.内置传感器堆栈

的传感器堆栈可在ZED2上使用。结合惯性数据,ZED 2还可以实时捕获高程和磁场。

6.云连接

远程监视和控制您的相机。使用云平台,在世界任何地方捕获和分析空间数据。远程管理您的应用程序,并一次更新您的相机。

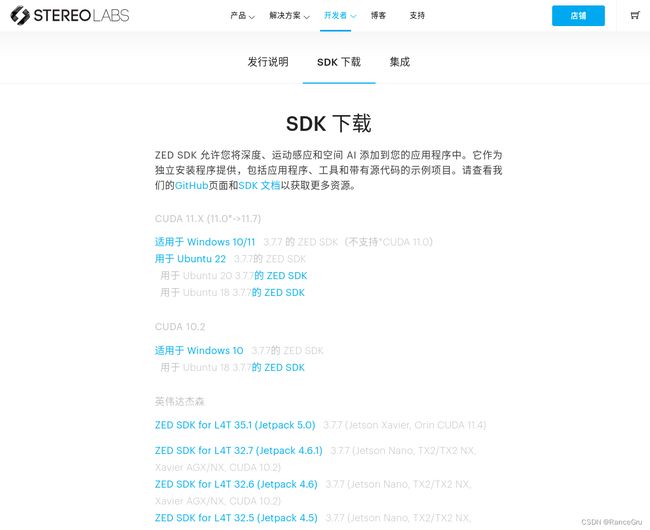

二、ZED SDK

1、依赖与安装

ZED SDK对CUDA是有要求的,本机是cuda10.1,可是没找到对应SDK版本,于是进行了多CUDA安装,可以参考我之前的博客去安装cuda10.2版本。

执行命令:

chmod +x ZED_SDK_Ubuntu18_cuda10.2_v3.7.7.run

./ZED_SDK_Ubuntu18_cuda10.2_v3.7.7.run

安装时选项:

If you are reading this file during the installation process, hit 'q' on your keyboard to close this view.

q

To continue you have to accept the EULA. Accept [Y/n] ?

y

Do you want to also install the static version of the ZED SDK (AI module will still require libsl_ai.so) [Y/n] ?

y

Do you want to install the AI module (required for Object detection and Neural Depth, recommended), cuDNN 8.2 and TensorRT 8.2 will be installed [Y/n] ?

n

Install samples (recommended) [Y/n] ?

y

Installation path: /usr/local/zed/samples/

回车

Do you want to auto-install dependencies (recommended) ? following packet will be installed via the package manager : libjpeg-turbo8 libturbojpeg libusb-1.0-0 libusb-1.0-0-dev libopenblas-dev libarchive-dev libv4l-0 curl unzip zlib1g libpng16-16 libpng-dev libturbojpeg0-dev python3-dev python3-pip python3-setuptools libglew-dev freeglut3-dev qtbase5-dev qtchooser qt5-qmake qtbase5-dev-tools libqt5opengl5 libqt5svg5 [Y/n] ?

y

WARNING! Dependencies installation failed, the installer requires an internet connection

Do you want to install the Python API (recommended) [Y/n] ?

y

Please specify your python executable: python3

回车

验证测试:

cd /usr/local/zed/tools

./ZED Depth Viewer

软件功能:

ZED Calibration——用于ZED校准;

ZED Sensor Viewer——ZED传感器,ZED2支持;

ZED Explorer——用于zed相机的实时预览和录制;

ZED Depth Viewer——捕获和显示深度图像和点云;

ZEDfu——ZED相机的实时3D映射和制图;

2、报错问题

报错1:未安装PythonAPI

WARNING! Python API failed to install

执行:

cd /usr/local/zed/

python get_python_api.py

python3 -m pip install pyzed-3.1-cp37-cp37m-linux_x86_64.whl

检查:

python3

import pyzed

成功安装

报错2:找不到 libturbojpeg.so.0文件

./ZED_Depth_Viewer: error while loading shared libraries: libturbojpeg.so.0: cannot open shared object file: No such file or directory

1、进入下载官网页面,下载2.0.x版本的libjpeg-turbo-2.0.2.tar.gz,网址可能要爬墙。

2、使用tar -zxvf libjpeg-turbo-2.0.2.tar.gz 将压缩包解压, 接着在解压文件下执行以下命令:

mkdir build

cmake -G"Unix Makefiles" ..(我用的是cmake-GUI)

make

sudo make install(被安装在opt下)

3、复制到到 /usr/local/lib库路径:

sudo cp /opt/libjpeg-turbo/lib64/libturbojpeg.so.0 /usr/local/lib

检查:

cd /usr/local/zed/tools

./ZED Depth Viewer

成功调用

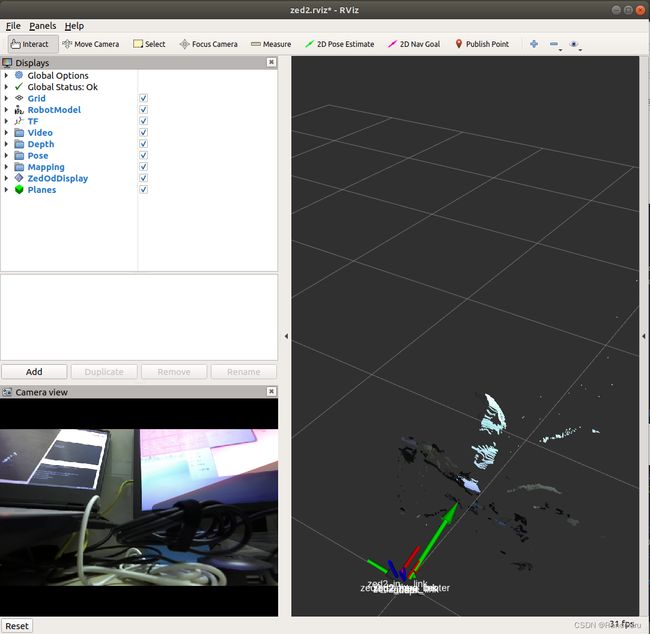

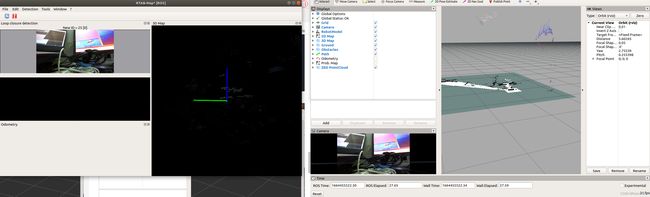

三、ROS调用ZED2

1、编译ZED的工作空间

安装zed ros wrapper

这里默认各位已经有自己的ros工作空间了

进入工作空间

cd ~/catkin_ws/src

下载文件

git clone --recursive https://github.com/stereolabs/zed-ros-wrapper.git

git clone --recursive https://github.com/stereolabs/zed-ros-examples.git

退出安装依赖与编译

cd ../

rosdep install --from-paths src --ignore-src -r -y

catkin_make -DCMAKE_BUILD_TYPE=Release

source ./devel/setup.bash

通过ros调用zed2

roslaunch zed_display_rviz display_zed2.launch

roslaunch zed_rtabmap_example zed_rtabmap.launch

报错1:多cuda问题

CMake Error at /usr/share/cmake-3.10/Modules/FindPackageHandleStandardArgs.cmake:137 (message):

Could NOT find CUDA: Found unsuitable version "10.2", but required is exact

version "10.1" (found /usr/local/cuda)

Call Stack (most recent call first):

/usr/share/cmake-3.10/Modules/FindPackageHandleStandardArgs.cmake:376 (_FPHSA_FAILURE_MESSAGE)

/usr/share/cmake-3.10/Modules/FindCUDA.cmake:1080 (find_package_handle_standard_args)

/usr/local/lib/cmake/opencv4/OpenCVConfig.cmake:86 (find_package)

/usr/local/lib/cmake/opencv4/OpenCVConfig.cmake:108 (find_host_package)

zed-ros-examples/tests/zed_sync_test/CMakeLists.txt:10 (find_package)

-- Configuring incomplete, errors occurred!

See also "/home/llw/Guide_blind/ROS/catkin_ws/build/CMakeFiles/CMakeOutput.log".

See also "/home/llw/Guide_blind/ROS/catkin_ws/build/CMakeFiles/CMakeError.log".

Invoking "cmake" failed

Please delete if you wish to re-initialize

大概指OpenCVConfig.cmake有误,因为之前是在cuda10.1下编译的CV,想彻底解决可能需要重新编译。

可是我用的是多cuda,于是尝试一个捷径,可能会存在一定后患。

sudo gedit /usr/local/lib/cmake/opencv4/OpenCVConfig.cmake

修改成10.2和8.0.5

成功编译上面的工作空间命令,之后还要多思考cuda问题。

报错2:imu包缺失

PluginlibFactory: The plugin for class 'rviz_imu_plugin/Imu' failed to load. Error: According to the loaded plugin descriptions the class rviz_imu_plugin/Imu with base class type rviz::Display does not exist. Declared types are rviz/Axes rviz/Camera rviz/DepthCloud rviz/Effort rviz/FluidPressure rviz/Grid rviz/GridCells rviz/Illuminance rviz/Image rviz/InteractiveMarkers rviz/LaserScan rviz/Map rviz/Marker rviz/MarkerArray rviz/Odometry rviz/Path rviz/PointCloud rviz/PointCloud2 rviz/PointStamped rviz/Polygon rviz/Pose rviz/PoseArray rviz/PoseWithCovariance rviz/Range rviz/RelativeHumidity rviz/RobotModel rviz/TF rviz/Temperature rviz/WrenchStamped rviz_plugin_tutorials/Imu rviz_plugin_zed_od/ZedOdDisplay

大概意思就是没有ros imu的包,用命令安装一下:

sudo apt-get install ros-melodic-imu-tools

报错3:源更新问题

安装 ros-melodic-imu-tools失败

错误:1 http://mirrors.tuna.tsinghua.edu.cn/ros/ubuntu bionic/main amd64 ros-melodic-imu-complementary-filter amd64 1.2.3-1bionic.20220127.155013

404 Not Found [IP: 2402:f000:1:400::2 80]

不知道为什么404,看链接感觉像自带软件源,可是我一直阿里云的源,试试去更新源

sudo apt-get update

更新后又可以安装ros-melodic-imu-tools,具体原因未知。。。

报错4:USB3.0问题

运行调用命令时,表示设置失败

[ INFO] [1664370769.746576278]: ZED connection -> CAMERA FAILED TO SETUP

[ INFO] [1664370777.009440351]: ZED connection -> CAMERA FAILED TO SETUP

[ INFO] [1664370784.697619171]: ZED connection -> CAMERA FAILED TO SETUP

刚开始百思不得其解,后来想起自己电脑接口不够用,把zed2接在usb扩展器上,但zed相机要求USB3.0的接口进行传输,更换后调用成功。

四、调用orb slam3+zed2

1、完善配置

目前搭建了环境,结合我之前的工作,默认orbslam3也搭建好了,如果没有可以参考我写的orbslam3博客。

因为ORB-SLAM3中并没有提供zed2的配置文件,在ORB-SLAM3的官方github上ros的示例文件中没有相关的操作。执行./build_ros.sh也无效,并没有生成ros下的可执行文件,因为作者ORB_SLAM3/下的CMakeLists.txt中并没有ros包可执行文件生成的操作,作者把ros的相关配置都放在了/Examples_old/ROS/ORB_SLAM3文件下了。

所以需要做好以下工作:

1. 创建相关.yaml和.cc文件

cd ORB_SLAM3-master/Examples_old/ROS/ORB_SLAM3/src

touch zed2_stereo_inertial.cc

touch zed2_stereo_inertial.yaml

相机在出厂的时候已经经过初步调试了,可以直接用这个.yaml参数

.yaml文件

%YAML:1.0

#--------------------------------------------------------------------------------------------

# Camera Parameters. Adjust them!

#--------------------------------------------------------------------------------------------

Camera.type: "PinHole"

# Camera calibration and distortion parameters (OpenCV) (equal for both cameras after stereo rectification)

Camera.fx: 528.3009033203125

Camera.fy: 528.3009033203125

Camera.cx: 632.7931518554688

Camera.cy: 372.5525817871094

# 用的是校正过的节点,所以畸变参数设置为0

Camera.k1: 0.0

Camera.k2: 0.0

Camera.p1: 0.0

Camera.p2: 0.0

Camera.width: 1280

Camera.height: 720

# Camera frames per second

Camera.fps: 15.0

# stereo baseline times fx

Camera.bf: 63.396108984375

# Color order of the images (0: BGR, 1: RGB. It is ignored if images are grayscale)

Camera.RGB: 1

# Close/Far threshold. Baseline times.

ThDepth: 40.0 # 35

# Transformation from camera 0 to body-frame (imu)

# 从左目转换到IMU坐标系

Tbc: !!opencv-matrix

rows: 4

cols: 4

dt: f

data: [ 0.0055827285742915, 0.0128040922714603, 0.9999024394223516, 0.0285440762197234,

-0.9999801332587812, 0.0029981004108222, 0.0055447706603969, -0.1038871459045697,

-0.0029268121592544, -0.9999135295689473, 0.0128205754767047, -0.0063514683297355,

0.0000000000000000, -0.0000000000000000, -0.0000000000000000, 1.0000000000000000]

# IMU noise

# get it from Project of **zed-examples/tutorials/tutorial 7 - sensor data/**.

IMU.NoiseGyro: 0.007 # 1.6968e-04

IMU.NoiseAcc: 0.0016 # 2.0000e-3

IMU.GyroWalk: 0.0019474

IMU.AccWalk: 0.0002509 # 3.0000e-3

IMU.Frequency: 400

#--------------------------------------------------------------------------------------------

# Stereo Rectification. Only if you need to pre-rectify the images.

# Camera.fx, .fy, etc must be the same as in LEFT.P

#--------------------------------------------------------------------------------------------

LEFT.height: 720

LEFT.width: 1280

LEFT.D: !!opencv-matrix

rows: 1

cols: 5

dt: d

data: [0, 0, 0, 0, 0]

LEFT.K: !!opencv-matrix

rows: 3

cols: 3

dt: d

data: [528.3009033203125, 0.0, 632.7931518554688, 0.0, 528.3009033203125, 372.5525817871094, 0.0, 0.0, 1.0]

LEFT.R: !!opencv-matrix

rows: 3

cols: 3

dt: d

data: [1.0, 0.0, 0.0, 0.0, 1.0, 0.0, 0.0, 0.0, 1.0]

LEFT.Rf: !!opencv-matrix

rows: 3

cols: 3

dt: f

data: [1.0, 0.0, 0.0, 0.0, 1.0, 0.0, 0.0, 0.0, 1.0]

LEFT.P: !!opencv-matrix

rows: 3

cols: 4

dt: d

data: [528.3009033203125, 0.0, 632.7931518554688, 0.0, 0.0, 528.3009033203125, 372.5525817871094, 0.0, 0.0, 0.0, 1.0, 0.0]

RIGHT.height: 720

RIGHT.width: 1280

RIGHT.D: !!opencv-matrix

rows: 1

cols: 5

dt: d

data: [0, 0, 0, 0, 0]

RIGHT.K: !!opencv-matrix

rows: 3

cols: 3

dt: d

data: [528.3009033203125, 0.0, 632.7931518554688, 0.0, 528.3009033203125, 372.5525817871094, 0.0, 0.0, 1.0]

RIGHT.R: !!opencv-matrix

rows: 3

cols: 3

dt: d

data: [1.0, 0.0, 0.0, 0.0, 1.0, 0.0, 0.0, 0.0, 1.0]

RIGHT.P: !!opencv-matrix

rows: 3

cols: 4

dt: d

data: [528.3009033203125, 0.0, 632.7931518554688, -63.47084045410156, 0.0, 528.3009033203125, 372.5525817871094, 0.0, 0.0, 0.0, 1.0, 0.0]

#--------------------------------------------------------------------------------------------

# ORB Parameters

#--------------------------------------------------------------------------------------------

# ORB Extractor: Number of features per image

ORBextractor.nFeatures: 1200

# ORB Extractor: Scale factor between levels in the scale pyramid

ORBextractor.scaleFactor: 1.2

# ORB Extractor: Number of levels in the scale pyramid

ORBextractor.nLevels: 8

# ORB Extractor: Fast threshold

# Image is divided in a grid. At each cell FAST are extracted imposing a minimum response.

# Firstly we impose iniThFAST. If no corners are detected we impose a lower value minThFAST

# You can lower these values if your images have low contrast

ORBextractor.iniThFAST: 20

ORBextractor.minThFAST: 7

#--------------------------------------------------------------------------------------------

# Viewer Parameters

#--------------------------------------------------------------------------------------------

Viewer.KeyFrameSize: 0.05

Viewer.KeyFrameLineWidth: 1

Viewer.GraphLineWidth: 0.9

Viewer.PointSize:2

Viewer.CameraSize: 0.08

Viewer.CameraLineWidth: 3

Viewer.ViewpointX: 0

Viewer.ViewpointY: -0.7

Viewer.ViewpointZ: -1.8

Viewer.ViewpointF: 500

.cc文件其实就是复制一份ros_stereo_inertial.cc文件,修改它的话题topic

.cc文件

/**

* This file is part of ORB-SLAM3

*

* Copyright (C) 2017-2021 Carlos Campos, Richard Elvira, Juan J. Gómez Rodríguez, José M.M. Montiel and Juan D. Tardós, University of Zaragoza.

* Copyright (C) 2014-2016 Raúl Mur-Artal, José M.M. Montiel and Juan D. Tardós, University of Zaragoza.

*

* ORB-SLAM3 is free software: you can redistribute it and/or modify it under the terms of the GNU General Public

* License as published by the Free Software Foundation, either version 3 of the License, or

* (at your option) any later version.

*

* ORB-SLAM3 is distributed in the hope that it will be useful, but WITHOUT ANY WARRANTY; without even

* the implied warranty of MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the

* GNU General Public License for more details.

*

* You should have received a copy of the GNU General Public License along with ORB-SLAM3.

* If not, see <http://www.gnu.org/licenses/>.

*/

#include第一个终端运行:

cd catkin_ws

source ./devel/setup.bash

roscore

第二个终端运行

cd catkin_ws

source ./devel/setup.bash

roslaunch zed_wrapper zed2.launch

第三个终端运行

rostopic list

在第三个终端下面查看对应的topic到底是什么,然后输入进去

2. 生成类似Mono的可执行文件

首先编辑ORB_SLAM3-1.0/Examples_old/ROS/ORB_SLAM3/CMakeLists.txt文件,在最下面添加

rosbuild_add_executable(zed2_stereo_inertial

src/zed2_stereo_inertial.cc

)

target_link_libraries(zed2_stereo_inertial

${LIBS}

)

然后编译,这个步骤类似build_ros.sh

cd ORB_SLAM3-master/Examples_old/ROS/ORB_SLAM3

mkdir build

cd build

cmake ..

make -j8

sudo make install

这时候会发现ORB_SLAM33-1.0/Examples_old/ROS/ORB_SLAM3下面多了一个可执行文件zed2_stereo_inertial,这个文件很重要不能出错。

3. 重新搭建软链接

更新ORB_SLAM3软连接,在/opt/ros/melodic/share目录下,删除原来的ORB_SLAM3软连接(如果有的话)

sudo rm -r ORB_SLAM3

在/opt/ros/melodic/share目录下建立软链接

sudo ln -s /home/xxx/Guide_blind/ORB_SLAM3-1.0/Examples_old/ROS/ORB_SLAM3 /opt/ros/melodic/share/ORB_SLAM3

修改后需要重新编译ros:

需要注意是.sh文件需要的是在Examples_old下的,建议打开来一步步执行保证不出错。

sudo ./build_ros.sh

4. 重新编译

因为orbslam3做的比较早,后来又更改了cuda版本,做成多cuda,在后续里出现了不少问题,所以就全盘编译一遍,有效解决问题。

把ORB-SLAM3重新编译:

sudo ./build.sh

sudo ./build_ros.sh

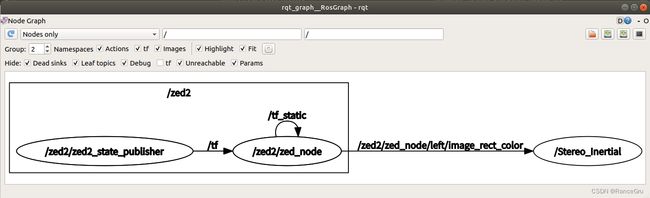

2、测试

打开第一个终端

cd ~/catkin_ws

source ./devel/setup.bash

roscore

打开第二个终端

cd ~/catkin_ws

source ./devel/setup.bash

roslaunch zed_wrapper zed2.launch

打开第三个终端

cd ~/catkin_ws

rosrun ORB_SLAM3 zed2_stereo_inertial /home/llw/Guide_blind/ORB_SLAM3-1.0/Vocabulary/ORBvoc.txt /home/llw/Guide_blind/ORB_SLAM3-1.0/Examples_old/ROS/ORB_SLAM3/src/zed2_stereo_inertial.yaml false

第4个终端:

rqt_graph

3、报错

没有图像,老问题了

经过查找,是命令问题

打开第3个终端使用命令时,注意用的是zed2_stereo_inertial 可执行文件。

五、跑图

未完待续。。。