Data Mining in Practice (6): Text Classification with Machine Learning (Toutiao TNEWS Dataset)

Contents

- 1 Data Preparation

- 2 Data Preprocessing

- 3 Cross-Validation & Feature Extraction

- 4 Model Training

- 5 Evaluation and Summary

1 Data Preparation

import numpy as np
import pandas as pd
import time
import jieba
import re
import string
import pickle
from tqdm import tqdm
from zhon.hanzi import punctuation
from collections import Counter
from sklearn.metrics import accuracy_score, precision_score, recall_score
from sklearn.linear_model import LogisticRegression, RidgeClassifier
from sklearn.naive_bayes import GaussianNB, MultinomialNB, BernoulliNB
from sklearn.feature_extraction.text import TfidfTransformer, CountVectorizer
from sklearn.model_selection import StratifiedKFold
from sklearn.preprocessing import LabelEncoder
from lightgbm import LGBMClassifier

# Pandas display settings
pd.set_option("display.max_columns", None)  # show all columns
pd.set_option("display.max_rows", None)  # show all rows
pd.set_option("display.expand_frame_repr", False)  # do not wrap wide frames
pd.set_option("display.max_colwidth", 100)  # maximum column width

# Chinese stop-word list
STOPWORDS_ZH = '../data/stopwords_zh.txt'

# Load the dataset

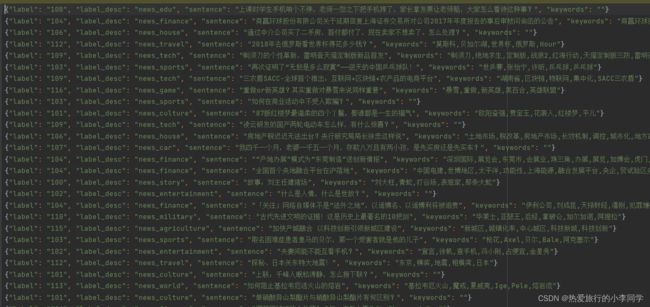

train_df = pd.read_json('../data/tnews/train.json', lines=True, nrows=20000)
train_df.info()
print(train_df.head(10))

# Drop the keywords column
del train_df['keywords']
print(train_df.tail())
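Before preprocessing, it can help to check how the labels are distributed, since the cross-validation used later is stratified by label. The sketch below uses only the standard library and three made-up records in the same JSON-lines layout. The `label`, `sentence`, and `keywords` fields follow the columns used above; the record contents and the helper name `label_counts` are hypothetical.

```python
import io
import json
from collections import Counter

# Three made-up records in JSON-lines form (one JSON object per line).
SAMPLE = io.StringIO(
    '{"label": 102, "sentence": "示例标题一", "keywords": ""}\n'
    '{"label": 102, "sentence": "示例标题二", "keywords": ""}\n'
    '{"label": 108, "sentence": "示例标题三", "keywords": ""}\n'
)


def label_counts(lines):
    """Count how many records carry each label."""
    counts = Counter()
    for line in lines:
        line = line.strip()
        if not line:  # skip blank lines
            continue
        record = json.loads(line)
        counts[record["label"]] += 1
    return counts


counts = label_counts(SAMPLE)
print(counts.most_common())
```

With the DataFrame already loaded, `train_df['label'].value_counts()` gives the same kind of summary.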

2 Data Preprocessing

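Approach:

- Remove invalid characters (Latin letters, digits, emoji, Chinese and English punctuation, whitespace)

- Segment the Chinese text into words (jieba)

- Remove stop words

- Remove low-frequency words (fewer than 20 occurrences)

- Serialize the token lists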
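The stop-word and low-frequency steps above can be sketched on a toy corpus. This is a minimal illustration under assumptions: the tokens, the stop-word set, and the threshold of 2 are made up, and `filter_tokens` is a hypothetical helper. It builds each filtered list with a comprehension rather than calling `list.remove` inside a loop over the same list, because removing during iteration skips the element that follows each removal.

```python
from collections import Counter


def filter_tokens(docs, stopwords, min_count):
    """Drop stop words, then drop tokens seen fewer than min_count times."""
    stopwords = set(stopwords)  # set membership checks are fast
    docs = [[tok for tok in doc if tok not in stopwords] for doc in docs]
    counts = Counter(tok for doc in docs for tok in doc)
    return [[tok for tok in doc if counts[tok] >= min_count] for doc in docs]


docs = [["的", "的", "股票", "上涨"], ["股票", "的", "下跌"]]
filtered = filter_tokens(docs, stopwords={"的"}, min_count=2)
print(filtered)  # [['股票'], ['股票']]

# The pitfall: remove() while iterating skips the item after each removal.
buggy = ["的", "的", "股票"]
for tok in buggy:
    if tok == "的":
        buggy.remove(tok)
print(buggy)  # ['的', '股票'] (one stop word survives)
```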

# Remove unwanted characters
def clear_character(sentence):
    pattern1 = re.compile(r'[a-zA-Z0-9]')  # Latin letters and digits
    # Emoji and other symbols: anything that is not whitespace, a digit, a colon, or a CJK character
    pattern2 = re.compile(r'[^\s1234567890::' + '\u4e00-\u9fa5]+')
    pattern3 = re.compile('[%s]+' % re.escape(punctuation + string.punctuation))  # Chinese and English punctuation
    line1 = re.sub(pattern1, '', sentence)
    line2 = re.sub(pattern2, '', line1)
    line3 = re.sub(pattern3, '', line2)
    new_sentence = ''.join(line3.split())  # remove whitespace
    return new_sentence

# Preprocessing
def preprocessing(df, col_name):
    t1 = time.time()

    print('Removing unwanted characters')
    df[col_name + '_processed'] = df[col_name].apply(clear_character)
    print(df[col_name + '_processed'].values)

    print('Segmenting Chinese text')
    cut_words = []
    for content in df[col_name + '_processed'].values:
        seg_list = jieba.lcut(content)
        cut_words.append(seg_list)
    print(cut_words[0])

    print('Removing stop words')
    with open(STOPWORDS_ZH, 'r', encoding='utf8') as f:
        stopwords = set(f.read().split(sep='\n'))  # set for fast lookup
    # Build new lists: calling remove() while iterating over a list skips elements
    cut_words = [[seg for seg in seg_list if seg not in stopwords] for seg_list in cut_words]
    print(cut_words[0])

    print('Removing low-frequency words')
    min_threshold = 20
    word_list = []
    for seg_list in cut_words:
        word_list.extend(seg_list)
    counter = Counter(word_list)
    delete_set = set()  # low-frequency words to remove
    for k, v in counter.items():
        if v < min_threshold:
            delete_set.add(k)
    print(f'Number of low-frequency words to remove: {len(delete_set)}')
    cut_words = [[seg for seg in seg_list if seg not in delete_set] for seg_list in tqdm(cut_words)]
    print(cut_words[0])

    print('Serializing the token lists')
    with open('../data/cut_words.pkl', 'wb') as f:
        pickle.dump(cut_words, f)

    t2 = time.time()
    print(f'Total time: {t2 - t1} s')

3 Cross-Validation & Feature Extraction

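Approach:

- Stratified sampling with StratifiedKFold (10 folds)

- Define accuracy_list and similar lists to store the evaluation metrics

- Feature extraction (CountVectorizer + TfidfTransformer)

- Fit the model, predict, and compute accuracy

- Print the model's accuracy and the other metrics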
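As an alternative way to organize the steps above, scikit-learn's `Pipeline` together with `cross_validate` refits the vectorizer inside each fold and keeps the TF-IDF matrix sparse. The following is a sketch under assumptions, not the code used in this article: it swaps in `TfidfVectorizer` (which combines `CountVectorizer` and `TfidfTransformer`), uses a made-up toy corpus of already-segmented, space-joined text, and uses 2 folds only because the toy data is tiny.

```python
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.model_selection import StratifiedKFold, cross_validate
from sklearn.naive_bayes import MultinomialNB
from sklearn.pipeline import make_pipeline

# Made-up, already-segmented documents joined by spaces (two classes).
texts = ["股票 上涨 市场", "股票 下跌 市场", "基金 股票 收益", "市场 基金 上涨",
         "球队 比赛 胜利", "球员 比赛 进球", "比赛 球队 冠军", "球员 球队 训练"]
labels = [0, 0, 0, 0, 1, 1, 1, 1]

# The vectorizer is fitted on the training part of each fold only.
pipeline = make_pipeline(TfidfVectorizer(analyzer="word"), MultinomialNB())
skf = StratifiedKFold(n_splits=2, shuffle=True, random_state=0)
scores = cross_validate(
    pipeline, texts, labels, cv=skf,
    scoring=["accuracy", "precision_micro", "recall_micro"],
)
print(scores["test_accuracy"].mean())
```

Models that accept sparse input can be dropped into the pipeline unchanged; a model that needs dense input, such as `GaussianNB`, would still require a densifying step.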

# Cross-validation (StratifiedKFold, 10 folds)
def cross_validate(model, X, y):
    t1 = time.time()
    skf = StratifiedKFold(n_splits=10)
    accuracy_list, precision_list, recall_list = [], [], []
    for i, (train_id, test_id) in enumerate(skf.split(X, y)):
        print(f'{i + 1}/10')
        X_train, X_val, y_train, y_val = X[train_id], X[test_id], y[train_id], y[test_id]
        X_train = X_train.ravel()
        X_val = X_val.ravel()
        # Join each token list into one space-separated string
        X_train = [" ".join(tokens) for tokens in X_train]
        X_val = [" ".join(tokens) for tokens in X_val]

        # Feature extraction (fitted on the training fold only)
        transformer = TfidfTransformer()
        # Note: the default token_pattern keeps only tokens of two or more characters
        vectorizer = CountVectorizer(analyzer='word')
        train_tfidf = transformer.fit_transform(vectorizer.fit_transform(X_train)).toarray()
        val_tfidf = transformer.transform(vectorizer.transform(X_val)).toarray()
        # train_tfidf = train_tfidf.astype(np.float32)
        # val_tfidf = val_tfidf.astype(np.float32)
        print(train_tfidf.shape)  # (48024, 26954) was recorded on a larger run than nrows=20000

        # Model training
        print('Training the model')
        model.fit(train_tfidf, y_train)
        y_predict = model.predict(val_tfidf)
        accuracy = accuracy_score(y_val, y_predict)
        precision = precision_score(y_val, y_predict, average='micro')
        recall = recall_score(y_val, y_predict, average='micro')
        # f1 = f1_score(y_val, y_predict, average='micro')
        accuracy_list.append(accuracy)
        precision_list.append(precision)
        recall_list.append(recall)
        print(accuracy)
        print(precision)
        print(recall)
    t2 = time.time()
    print(f"Acc:{np.mean(accuracy_list)} | Pre:{np.mean(precision_list)} | Rec:{np.mean(recall_list)}")
    print(f'Elapsed: {t2 - t1} s')

4 Model Training

Approach:

- Load the serialized token lists

- Build the list of models

- Cross-validate
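To make the label-encoding and splitting steps concrete, here is a small sketch on made-up data; the label values and variable names are hypothetical. It shows that `LabelEncoder` takes a one-dimensional label array, and that `StratifiedKFold.split` stratifies on `y` and uses `X` only for its length, so a placeholder array is enough to generate the folds.

```python
import numpy as np
from sklearn.model_selection import StratifiedKFold
from sklearn.preprocessing import LabelEncoder

# Made-up raw labels: 6 samples of one class, 3 of another.
raw_labels = np.array([102] * 6 + [108] * 3)
y = LabelEncoder().fit_transform(raw_labels)  # 1-D in, 1-D out: values 0 and 1

# Placeholder features: split() only needs one entry per sample.
X = np.zeros(len(y))

skf = StratifiedKFold(n_splits=3)
fold_class_counts = []
for train_idx, val_idx in skf.split(X, y):
    # Count how many samples of each class land in this validation fold.
    fold_class_counts.append(np.bincount(y[val_idx]).tolist())
print(fold_class_counts)
```

Each validation fold should keep roughly the 2:1 class ratio of the full label array, which is the point of stratification.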

def machine_learning():
    # Load the serialized token lists
    with open('../data/cut_words.pkl', 'rb') as f:
        cut_words = pickle.load(f)
    train_df['sentence_processed'] = pd.Series(cut_words)
    X = train_df['sentence_processed']
    y = train_df['label']
    X = np.array(X).reshape(-1, 1)
    y = LabelEncoder().fit_transform(np.array(y))  # LabelEncoder expects 1-D labels

    # Cross-validation
    # models = [XGBClassifier(gpu_id=0, tree_method='gpu_hist')]
    models = [LogisticRegression(max_iter=200), RidgeClassifier(), GaussianNB(), BernoulliNB(), MultinomialNB(), LGBMClassifier()]
    model_names = ['Logistic regression', 'Ridge classifier', 'Gaussian naive Bayes', 'Bernoulli naive Bayes', 'Multinomial naive Bayes', 'LightGBM']
    for model, model_name in zip(models, model_names):
        print(f'Start {model_name}')
        cross_validate(model, X, y)


if __name__ == '__main__':
    preprocessing(train_df, 'sentence')
    machine_learning()

5 Evaluation and Summary

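(1) StratifiedKFold's split() takes both X and y. It expects X with shape (n_samples, n_features) and y with shape (n_samples,), so both were converted to ndarrays beforehand, with X reshaped via reshape(-1, 1). The labels y can stay one-dimensional.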

(2) CountVectorizer's fit_transform() expects an iterable of strings such as [str1, str2, str3]; passing token lists instead raises an error.

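(3) model.fit() raised an error. A web search suggested that sparse matrices cannot be passed in, and converting the features to a dense ndarray with toarray() fixed it. Among the models used here, this restriction applies to GaussianNB, which requires dense input; the other five accept sparse matrices.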

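(4) With this many samples, TF-IDF also produces many features. Reducing dimensionality with PCA lowered accuracy and slowed training as well, probably because no feature selection was done.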

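(5) Below is the console output for logistic regression, the ridge classifier, Gaussian naive Bayes, Bernoulli naive Bayes, multinomial naive Bayes, and LightGBM, in that order. The dataset has 15 classes and more than 50,000 records, of which 20,000 were selected for training. No further feature engineering or hyperparameter tuning was done, and the most accurate model, logistic regression, reached an accuracy of only 43.7% (0.43665).

Acc:0.43665000000000004 | Pre:0.43665000000000004 | Rec:0.43665000000000004

Elapsed: 1418.719212770462 s

Acc:0.4325 | Pre:0.4325 | Rec:0.4325

Elapsed: 435.4280045032501 s

Acc:0.25575000000000003 | Pre:0.25575000000000003 | Rec:0.25575000000000003

Elapsed: 64.14511179924011 s

Acc:0.30455 | Pre:0.30455 | Rec:0.30455

Elapsed: 31.419057846069336 s

Acc:0.40785 | Pre:0.40785 | Rec:0.40785

Elapsed: 17.39114022254944 s

Acc:0.3839 | Pre:0.3839 | Rec:0.3839

Elapsed: 42.139591455459595 s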

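(6) An earlier test with 10,000 records gave logistic regression an accuracy of only 38%, so going to 20,000 records gained about 5 percentage points. Increasing the sample size further should improve accuracy.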

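(7) My laptop's performance is limited. Next steps under consideration: training on a Linux server, acceleration with pycuda or cupy, and neural network models such as RNN and LSTM.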
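A remark on note (5): the identical Acc, Pre, and Rec values follow from the choice of `average='micro'`. In single-label multiclass classification, every wrong prediction counts as one false positive (for the predicted class) and one false negative (for the true class), so micro-averaged precision and recall both reduce to accuracy. A small standard-library sketch with made-up labels illustrates this; `micro_scores` is a hypothetical helper, not part of scikit-learn.

```python
def micro_scores(y_true, y_pred):
    """Return (accuracy, micro precision, micro recall) for single-label data."""
    classes = set(y_true) | set(y_pred)
    tp = fp = fn = 0
    for c in classes:
        for t, p in zip(y_true, y_pred):
            tp += (p == c and t == c)  # predicted c, truly c
            fp += (p == c and t != c)  # predicted c, truly another class
            fn += (p != c and t == c)  # truly c, predicted another class
    accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
    return accuracy, tp / (tp + fp), tp / (tp + fn)


y_true = [0, 1, 2, 2, 1, 0]
y_pred = [0, 2, 2, 1, 1, 0]
acc, pre, rec = micro_scores(y_true, y_pred)
print(acc, pre, rec)
```

Reporting `average='macro'` alongside accuracy would add per-class information that the micro average hides.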