TensorboardX (PyTorch)

Visualizing a neural network with tensorboardX

```python
# Visualizing a neural network with tensorboardX
import torch
import torch.nn as nn
import torch.nn.functional as F
from tensorboardX import SummaryWriter


# Build the neural network (expects 1x28x28 inputs)
class Net(nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        self.conv1 = nn.Conv2d(1, 10, kernel_size=5)
        self.conv2 = nn.Conv2d(10, 20, kernel_size=5)
        self.conv2_drop = nn.Dropout2d()
        self.fc1 = nn.Linear(320, 50)
        self.fc2 = nn.Linear(50, 10)
        self.bn = nn.BatchNorm2d(20)

    def forward(self, x):
        x = F.max_pool2d(self.conv1(x), 2)
        x = F.relu(x) + F.relu(-x)  # equivalent to torch.abs(x)
        x = F.relu(F.max_pool2d(self.conv2_drop(self.conv2(x)), 2))
        x = self.bn(x)
        x = x.view(-1, 320)  # 20 channels * 4 * 4 after two conv + pool stages
        x = F.relu(self.fc1(x))
        x = F.dropout(x, training=self.training)
        x = self.fc2(x)
        x = F.softmax(x, dim=1)
        return x


# Save the model as a graph
# Define the input: a dummy batch of 32 single-channel 28x28 images
dummy_input = torch.rand(32, 1, 28, 28)

# Instantiate the network
model = Net()

# Trace the model with the dummy input and write its graph to ./logs
# (`comment` only takes effect when log_dir is not given)
with SummaryWriter(log_dir='logs', comment='Net') as w:
    w.add_graph(model, (dummy_input,))
```
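The hard-coded 320 in `x.view(-1, 320)` and `nn.Linear(320, 50)` follows from the 28x28 input size. The stdlib-only sketch below traces the spatial size through the two conv + pool stages, assuming the usual output-size formula for unpadded convolutions and `F.max_pool2d`'s default stride (equal to its kernel size). The helpers `conv2d_out`, `max_pool2d_out` and `flatten_size` are hypothetical names used only for illustration.

```python
def conv2d_out(size, kernel, stride=1, padding=0):
    """Output height/width of nn.Conv2d (dilation=1)."""
    return (size + 2 * padding - kernel) // stride + 1


def max_pool2d_out(size, kernel):
    """Output height/width of F.max_pool2d(x, kernel): stride defaults to kernel, no padding."""
    return (size - kernel) // kernel + 1


def flatten_size(h=28, w=28, channels=20):
    """Features per sample that reach x.view(-1, 320) in Net.forward."""
    # Two stages, each conv (kernel_size=5) followed by max_pool2d(2).
    # The abs/ReLU, dropout and BatchNorm layers keep the spatial size unchanged.
    for _ in range(2):
        h, w = conv2d_out(h, 5), conv2d_out(w, 5)
        h, w = max_pool2d_out(h, 2), max_pool2d_out(w, 2)
    return channels * h * w


print(flatten_size())        # 320  (28 -> 24 -> 12 -> 8 -> 4, then 20 * 4 * 4)
print(flatten_size(32, 32))  # 500  (32 -> 28 -> 14 -> 10 -> 5, then 20 * 5 * 5)
```

A 32x32 input would yield 500 features per sample instead of 320, so the dummy input passed to `add_graph` has to match the 28x28 size the layers were designed for.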

Visualizing the loss with tensorboardX

```python
import numpy as np
import torch
import torch.nn as nn
from tensorboardX import SummaryWriter

input_size = 1
output_size = 1
num_epochs = 60
learning_rate = 0.01

writer = SummaryWriter(log_dir='logs', comment='Linear')

# Toy data: 100 points of y = 3x^2 + 2 plus uniform noise in [0, 0.2)
np.random.seed(100)
x_train = np.linspace(-1, 1, 100).reshape(100, 1)
y_train = 3 * np.power(x_train, 2) + 2 + 0.2 * np.random.rand(x_train.size).reshape(100, 1)

# The data do not change between epochs, so convert them to float32 tensors once
inputs = torch.from_numpy(x_train).float()
targets = torch.from_numpy(y_train).float()

model = nn.Linear(input_size, output_size)
criterion = nn.MSELoss()
optimizer = torch.optim.SGD(model.parameters(), lr=learning_rate)

for epoch in range(num_epochs):
    output = model(inputs)
    loss = criterion(output, targets)

    optimizer.zero_grad()
    loss.backward()
    optimizer.step()

    # Record the loss value against the epoch number
    writer.add_scalar('train_loss', loss.item(), epoch)

# Flush pending events to disk and close the log file
writer.close()
```
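Because `nn.Linear(1, 1)` can only represent a straight line y = a·x + b while the data follow a parabola, the logged loss curve cannot approach zero. The pure-Python sketch below (not part of the original script) computes the ordinary least-squares line, which by definition has the lowest MSE that any weight and bias of the layer can reach. It regenerates data of the same shape using Python's `random` module seeded with 100, an assumption that makes the noise values, and therefore the exact numbers, differ slightly from the NumPy-generated data; `linear_fit_mse` is a hypothetical helper.

```python
import random


def linear_fit_mse(xs, ys):
    """Ordinary least squares for y ~ a*x + b; returns (a, b, mse)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    a = sxy / sxx
    b = my - a * mx
    mse = sum((a * x + b - y) ** 2 for x, y in zip(xs, ys)) / n
    return a, b, mse


# Same shape of data as the training script: 100 evenly spaced points on [-1, 1],
# y = 3x^2 + 2 + 0.2 * U[0, 1). Python's RNG stands in for np.random here.
rng = random.Random(100)
xs = [-1 + 2 * i / 99 for i in range(100)]
ys = [3 * x ** 2 + 2 + 0.2 * rng.random() for x in xs]

a, b, mse = linear_fit_mse(xs, ys)
print(f"best line: y = {a:.3f}x + {b:.3f}, lowest reachable MSE = {mse:.3f}")
```

For this data the best line is nearly flat and its MSE is roughly 0.8, so no number of epochs can push the training-loss curve in TensorBoard below that floor.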

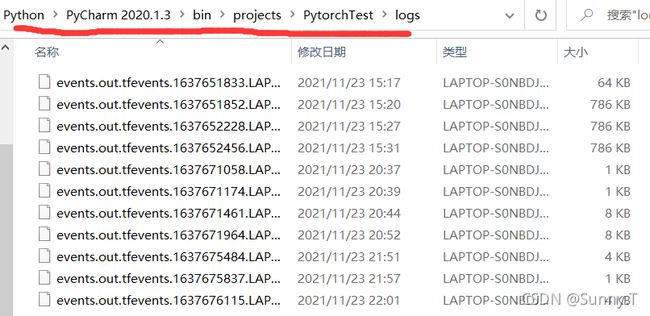

Launching the TensorBoard console

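The steps are as follows:

- Locate the directory where training saved the log files (`logs` in the scripts above);

- On the command line, `cd` to the parent directory of the log directory, or, on Windows, type `cmd` into the File Explorer address bar of that parent directory to open a command prompt there;

- Run `tensorboard --logdir=logs` to start TensorBoard;

- Copy the URL that TensorBoard prints in the terminal (port 6006 by default, e.g. `http://localhost:6006`) into Chrome to open the TensorBoard page.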
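Step 1 and the `--logdir` argument can also be checked from Python. This sketch assumes event files carry the `events.out.tfevents` file-name prefix (the convention tensorboardX and TensorBoard use) and that TensorBoard treats each directory containing such files as a separate run. `find_runs` and `tensorboard_command` are hypothetical helpers; the latter only builds a `tensorboard --logdir=... --port=...` string, with 6006 as TensorBoard's default port.

```python
import os

# File-name prefix of TensorBoard event files,
# e.g. events.out.tfevents.<timestamp>.<hostname>
EVENT_PREFIX = "events.out.tfevents"


def find_runs(root):
    """Return the directories under `root` (relative to it) that contain event files."""
    runs = set()
    for dirpath, _dirnames, filenames in os.walk(root):
        if any(name.startswith(EVENT_PREFIX) for name in filenames):
            runs.add(os.path.relpath(dirpath, root))
    return sorted(runs)


def tensorboard_command(logdir, port=6006):
    """Build the command line that starts TensorBoard on `logdir`."""
    return f"tensorboard --logdir={logdir} --port={port}"


if __name__ == "__main__":
    logdir = "logs"  # the log_dir passed to SummaryWriter in this post
    print("runs found:", find_runs(logdir))
    print("start TensorBoard with:", tensorboard_command(logdir))
```

Both scripts in this post write their event files directly into `logs`, so `find_runs('logs')` would report a single run, `'.'`.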

Result screenshots

The TensorBoard pages can then be viewed in Chrome, as shown in the figures below: