Building a CNN from Scratch with PyTorch: A Hands-On Walkthrough

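- Preface
- CNN basics
  - Convolution kernels
    - 1D convolution kernels
    - 2D convolution kernels
  - Pooling
  - Views
- Building a CNN from scratch
- Image recognition in practice with the MNIST dataset
  - Getting the data
  - Training function
  - Main program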

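Preface

This article is a simple hands-on exercise in building a CNN (Convolutional Neural Network) with PyTorch. It covers:

- The background knowledge needed to build a CNN

- Building a CNN from scratch

- Image recognition in practice with the MNIST dataset

Because the CNN is built on PyTorch, a detailed PyTorch installation guide is available in my earlier blog post: PyTorch installation.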

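CNN basics

Building a CNN is fairly straightforward, but you need to understand the principles behind a few terms:

- Convolution kernel (Kernel)

- Pooling (Pooling)

- View (View)

Only these three terms are treated as CNN-specific here; properties shared by neural networks in general are not repeated.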

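Convolution kernels

A convolution kernel can be viewed as a filter. Consider two examples, a 1D kernel and a 2D kernel.

1D convolution kernels

Suppose the input is a 1D tensor of length 7 and a Conv1d filter of size 3 is applied to it, as shown in the figure.

The input tensor passes through the size-3 kernel and produces an output of length 5 (7 - 3 + 1 = 5 with stride 1 and no padding). The connection weights between input and output are the parameters of Conv1d.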
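The length-7 example can be checked by hand. The following is a minimal pure-Python sketch of the sliding-window computation, assuming stride 1 and no padding. The function name `conv1d_valid` and the sample values are made up for illustration and are not part of PyTorch.

```python
def conv1d_valid(signal, kernel):
    """Slide `kernel` over `signal` with stride 1 and no padding."""
    k = len(kernel)
    # Each output element is the weighted sum of k neighbouring inputs.
    return [
        sum(signal[i + j] * kernel[j] for j in range(k))
        for i in range(len(signal) - k + 1)
    ]


signal = [1, 2, 3, 4, 5, 6, 7]  # hypothetical input of length 7
kernel = [1, 0, -1]             # hypothetical weights of a size-3 kernel
output = conv1d_valid(signal, kernel)
print(len(output))  # 5
```

Like `nn.Conv1d`, this sketch does not flip the kernel, so strictly speaking it computes a cross-correlation. In a real layer the three weights are learned rather than fixed.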

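2D convolution kernels

A 2D kernel works on exactly the same principle as a 1D one. Suppose the input is a 2D tensor of size 6×6 and a 3×3 convolution filter is applied to it, as shown in the figure.

As the figure shows, the 3×3 block of data inside the dashed box is combined with the kernel in a weighted sum. Because the kernel is 3×3 and the stride is 1, the output tensor is 4×4 (6 - 3 + 1 = 4 along each dimension).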
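The 6×6 example can be reproduced with `nn.Conv2d`. This is a small sketch that assumes a single input channel and a single output channel, with random input values; only the resulting shape and parameter count matter here.

```python
import torch
import torch.nn as nn

# One input channel, one output channel, 3x3 kernel, stride 1, no padding.
conv = nn.Conv2d(in_channels=1, out_channels=1, kernel_size=3, stride=1)

# Conv2d expects (batch, channels, height, width); values are random placeholders.
x = torch.randn(1, 1, 6, 6)
y = conv(x)
print(y.shape)  # torch.Size([1, 1, 4, 4])

# The learnable parameters are the 3*3 kernel weights plus one bias.
n_params = sum(p.numel() for p in conv.parameters())
print(n_params)  # 10
```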

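Pooling

Pooling resembles convolution in that it also slides a filter over the input, but the pooling filter has no weights; only the kernel size needs to be set. The two common variants are max pooling and average pooling, with max pooling used more often. A simple example illustrates both.

The example in the figure uses a 2×2 pooling window with stride (2, 2) and shows how max pooling and average pooling work.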
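A worked example makes the two variants concrete. This is a pure-Python sketch with a 2×2 window and stride 2; the helper names `pool2d` and `mean` and the 4×4 grid are hypothetical, chosen only for illustration.

```python
def pool2d(grid, size=2, stride=2, reducer=max):
    """Apply `reducer` to each size x size window, moving by `stride`."""
    rows = range(0, len(grid) - size + 1, stride)
    cols = range(0, len(grid[0]) - size + 1, stride)
    return [
        [
            reducer([grid[r + i][c + j] for i in range(size) for j in range(size)])
            for c in cols
        ]
        for r in rows
    ]


def mean(values):
    return sum(values) / len(values)


grid = [
    [1, 3, 2, 4],
    [5, 7, 6, 8],
    [4, 2, 0, 1],
    [3, 1, 2, 5],
]
max_pooled = pool2d(grid, reducer=max)
avg_pooled = pool2d(grid, reducer=mean)
print(max_pooled)  # [[7, 8], [4, 5]]
print(avg_pooled)  # [[4.0, 5.0], [2.5, 2.0]]
```

Both variants halve each spatial dimension here, and neither has any parameter to learn.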

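Views

A view matters when data is processed in batches. Before the convolutional output is passed on, the .view() method reshapes it so that each image's features are flattened into their own row. Data from different images is therefore not mixed together and stays clean for later layers or processing.

In fact, .view() is not limited to CNNs; networks that work on batched data commonly need it as well. It is easy to overlook, so it is emphasized here.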
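The effect of `.view()` on a batch can be seen on a small tensor. This sketch assumes a batch of 2 images, each with 20 channels of 4×4 features, and fills it with consecutive numbers so that rows are easy to compare.

```python
import torch

# Hypothetical batch: 2 images, 20 channels, 4x4 spatial size.
batch = torch.arange(2 * 20 * 4 * 4, dtype=torch.float32).reshape(2, 20, 4, 4)

# Flatten every image to one row of 20 * 4 * 4 = 320 features.
flat = batch.view(-1, 320)
print(flat.shape)  # torch.Size([2, 320])

# Row i of `flat` holds exactly the values of image i, nothing else.
rows_match = all(torch.equal(flat[i], batch[i].flatten()) for i in range(2))
print(rows_match)  # True
```

If the feature count passed to `.view(-1, n)` does not match the real per-image size, rows can end up spanning several images without raising an error. Writing `x.view(x.size(0), -1)` keeps the batch dimension explicit and avoids that.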

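Building a CNN from scratch

Building a CNN with PyTorch is actually simple: the key components are already packaged in PyTorch, so with the background above you can assemble a CNN quickly. The code is as follows: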

import torch
import torch.nn as nn
from torchvision import datasets, transforms
# numpy and matplotlib are only needed for the loss plot in the main program;
# they can be omitted if you only train the network without visualization.
import numpy as np
import matplotlib.pyplot as plt

class C_Net(nn.Module):
    """A convolutional network named C_Net."""

    def __init__(self):
        super().__init__()
        # Two convolutional layers, one dropout layer, two linear layers.
        self.conv1 = nn.Conv2d(1, 10, kernel_size=5)
        self.conv2 = nn.Conv2d(10, 20, kernel_size=5)
        self.conv2_drop = nn.Dropout2d()
        self.fc1 = nn.Linear(320, 50)
        self.fc2 = nn.Linear(50, 10)

    def forward(self, x):
        x = nn.functional.relu(nn.functional.max_pool2d(self.conv1(x), 2))
        x = nn.functional.relu(nn.functional.max_pool2d(self.conv2_drop(
            self.conv2(x)), 2))
        # Flatten each image's 20 x 4 x 4 feature maps into one row.
        x = x.view(-1, 320)
        x = nn.functional.relu(self.fc1(x))
        x = nn.functional.dropout(x, training=self.training)
        x = self.fc2(x)
        # The forward pass ends with log_softmax over the class dimension.
        return nn.functional.log_softmax(x, dim=1)

The comments should make each step clear.
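One detail worth tracing is why the first linear layer expects 320 inputs. The sketch below follows the spatial size of a 28×28 MNIST image through the layers, assuming no padding and a pooling stride equal to the window size (the `max_pool2d` default). The helper `conv_out` is a hypothetical name for the standard output-size formula.

```python
def conv_out(n, kernel, stride=1, padding=0):
    """Output length along one dimension: floor((n + 2p - k) / s) + 1."""
    return (n + 2 * padding - kernel) // stride + 1


size = 28                     # MNIST images are 28x28 with one channel
size = conv_out(size, 5)      # conv1, kernel 5: 28 -> 24
size = conv_out(size, 2, 2)   # max_pool2d(2):   24 -> 12
size = conv_out(size, 5)      # conv2, kernel 5: 12 -> 8
size = conv_out(size, 2, 2)   # max_pool2d(2):    8 -> 4
features = 20 * size * size   # conv2 outputs 20 channels
print(features)  # 320
```

Changing the input resolution or a kernel size changes this number, so the 320 in both `x.view(-1, 320)` and `nn.Linear(320, 50)` would have to be updated together.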

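Image recognition in practice with the MNIST dataset

Using the CNN above, we run a simple image recognition training task on the MNIST dataset.

Getting the data

First, download the MNIST training and test data and convert them to tensors: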

# Convert images to tensors, then normalize with a fixed mean and std.
transform = transforms.Compose([transforms.ToTensor(),
                                transforms.Normalize((0.1307,), (0.3081,))])
train_dataset = datasets.MNIST('data/', train=True, transform=transform,
                               download=True)
test_dataset = datasets.MNIST('data/', train=False, transform=transform,
                              download=True)
train_loader = torch.utils.data.DataLoader(train_dataset, batch_size=32,
                                           shuffle=True)
# Shuffling is not needed for evaluation.
test_loader = torch.utils.data.DataLoader(test_dataset, batch_size=32,
                                          shuffle=False)
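`transforms.Normalize(mean, std)` computes `(x - mean) / std` for each channel, after `ToTensor()` has scaled the pixels to the range [0, 1]. The values 0.1307 and 0.3081 are the commonly quoted mean and standard deviation of the MNIST training pixels. The sketch below applies the same arithmetic to two single pixel values in plain Python; the function name `normalize` is hypothetical.

```python
MEAN, STD = 0.1307, 0.3081


def normalize(pixel):
    """Same arithmetic as transforms.Normalize for one pixel value."""
    return (pixel - MEAN) / STD


black = normalize(0.0)  # darkest pixel after ToTensor()
white = normalize(1.0)  # brightest pixel after ToTensor()
print(black, white)  # roughly -0.424 and 2.821
```

The mostly black background therefore maps to a small negative value, and the digit strokes map to positive values.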

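Training function

With the training data and the network in place, we write a simple function that handles both the training and the validation pass: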

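# fit() runs one epoch in either the 'training' or the 'validation' phase,
# which makes switching between them easy. The loss function is nll_loss.
# It relies on the module-level `optimizer` defined in the main program.
def fit(epoch, model, data_loader, phase='training'):
    if phase == 'training':
        model.train()
    if phase == 'validation':
        model.eval()
    running_loss = 0
    # running_correct = 0
    for batch_idx, (data, target) in enumerate(data_loader):
        if phase == 'training':
            optimizer.zero_grad()
        # Track gradients only while training.
        with torch.set_grad_enabled(phase == 'training'):
            output = model(data)
            # print(output.shape)
            loss = nn.functional.nll_loss(output, target)
        # Accumulate the summed per-sample loss for the epoch average.
        running_loss += nn.functional.nll_loss(
            output, target, reduction='sum').detach()
        if phase == 'training':
            loss.backward()
            optimizer.step()
    # Average loss per sample over the whole dataset.
    loss = running_loss / len(data_loader.dataset)
    return loss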
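The function uses `nll_loss` twice: the default mean reduction for the gradient step, and a summed version for the per-epoch average. The sketch below checks how the two relate on a made-up batch of 4 samples and 10 classes; the logits and labels are random placeholders, not MNIST data.

```python
import torch
import torch.nn.functional as F

torch.manual_seed(0)
logits = torch.randn(4, 10)          # hypothetical raw network outputs
target = torch.tensor([3, 1, 7, 0])  # hypothetical class labels

log_probs = F.log_softmax(logits, dim=1)
mean_loss = F.nll_loss(log_probs, target)                  # mean over the batch
sum_loss = F.nll_loss(log_probs, target, reduction='sum')  # sum over the batch

# Dividing the summed loss by the sample count recovers the mean.
same_as_mean = torch.allclose(sum_loss / len(target), mean_loss)
# log_softmax followed by nll_loss is equivalent to cross_entropy on raw logits.
same_as_ce = torch.allclose(mean_loss, F.cross_entropy(logits, target))
print(same_as_mean, same_as_ce)  # True True
```

Summing per batch and dividing by the dataset size once, as `fit` does, gives a true per-sample average even when the last batch is smaller than the others.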

Main program

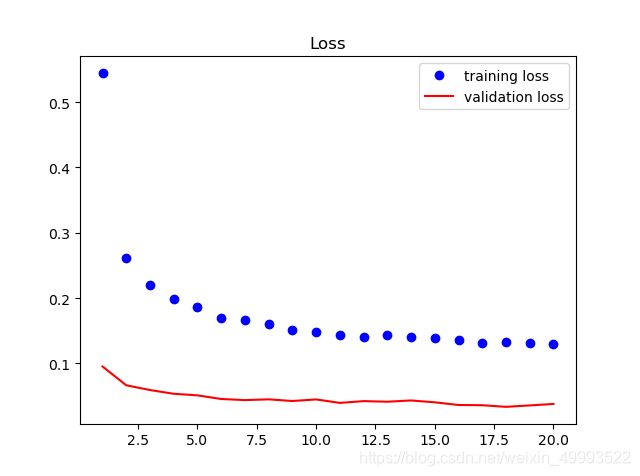

With the CNN module, the data-loading module, and the training function in place, the main program is:

model = C_Net()
# Use the SGD optimizer; fit() reads this module-level `optimizer`.
optimizer = torch.optim.SGD(model.parameters(), lr=0.05)
train_losses, val_losses = [], []
for epoch in range(20):
    epoch_loss = fit(epoch, model, train_loader, phase='training')
    var_epoch_loss = fit(epoch, model, test_loader, phase='validation')
    # Print the average training and validation loss for each epoch.
    print(epoch+1, '\tepoch loss: ', epoch_loss, '\n\tvar epoch loss: ', var_epoch_loss)
    train_losses.append(epoch_loss)
    val_losses.append(var_epoch_loss)
# Plot both loss curves against the epoch number (needs numpy and matplotlib).
plt.plot(np.linspace(1, len(train_losses), len(train_losses)), train_losses,
         'bo', label='training loss')
plt.plot(np.linspace(1, len(val_losses), len(val_losses)), val_losses, 'r',
         label='validation loss')
# Show the legend.
plt.legend()
plt.show()

Running the main program above produces the following results:

- Loss values as the number of training epochs increases

1 epoch loss: tensor(0.5447)

var epoch loss: tensor(0.0952)

2 epoch loss: tensor(0.2618)

var epoch loss: tensor(0.0665)

3 epoch loss: tensor(0.2206)

var epoch loss: tensor(0.0592)

4 epoch loss: tensor(0.1983)

var epoch loss: tensor(0.0535)

5 epoch loss: tensor(0.1863)

var epoch loss: tensor(0.0510)

6 epoch loss: tensor(0.1702)

var epoch loss: tensor(0.0455)

7 epoch loss: tensor(0.1662)

var epoch loss: tensor(0.0438)

8 epoch loss: tensor(0.1610)

var epoch loss: tensor(0.0449)

9 epoch loss: tensor(0.1506)

var epoch loss: tensor(0.0424)

10 epoch loss: tensor(0.1488)

var epoch loss: tensor(0.0448)

11 epoch loss: tensor(0.1430)

var epoch loss: tensor(0.0395)

12 epoch loss: tensor(0.1411)

var epoch loss: tensor(0.0422)

13 epoch loss: tensor(0.1438)

var epoch loss: tensor(0.0413)

14 epoch loss: tensor(0.1399)

var epoch loss: tensor(0.0432)

15 epoch loss: tensor(0.1388)

var epoch loss: tensor(0.0404)

16 epoch loss: tensor(0.1358)

var epoch loss: tensor(0.0363)

17 epoch loss: tensor(0.1315)

var epoch loss: tensor(0.0359)

18 epoch loss: tensor(0.1321)

var epoch loss: tensor(0.0335)

19 epoch loss: tensor(0.1316)

var epoch loss: tensor(0.0356)

20 epoch loss: tensor(0.1291)

var epoch loss: tensor(0.0379)