Epidemic Data Scraping, Visualization, and Forecasting

Epidemic data scraping and visualization

Data scraping and saving (provinceDataGet.py)

import json

import pandas as pd
import requests

# Regions to query. The Chinese names are sent to the API as-is and reused as sheet names.
areas = ['北京', '上海', '天津', '重庆', '澳门', '香港', '海南', '台湾', '河北', '山西',
         '山东', '江苏', '浙江', '安徽', '福建', '江西', '河南', '湖北', '湖南', '广东',
         '广西', '四川', '贵州', '云南', '陕西', '甘肃', '辽宁', '吉林', '黑龙江', '青海',
         '宁夏', '西藏', '新疆', '内蒙古']


# Extract all series for one province from the parsed response
def getProvinceData(results):
    provinceName = results["data"][0]["name"]
    trend = results["data"][0]["trend"]
    updateDate = trend["updateDate"]
    confirmAll = trend["list"][0]
    cure = trend["list"][1]
    die = trend["list"][2]
    confirmAdd = trend["list"][3]
    confirmAddNative = trend["list"][4]
    asymptomaticAdd = trend["list"][5]
    print(provinceName)

    # Index of the first day to keep: 3.14 for Macau, Hong Kong and Taiwan,
    # 3.1 (March 1) for every other region
    if provinceName in ("澳门", "香港", "台湾"):
        indexStart = updateDate.index('3.14')
    else:
        indexStart = updateDate.index('3.1')

    updateDate = updateDate[indexStart:]
    confirmAll = confirmAll["data"][indexStart:]
    cure = cure["data"][indexStart:]
    die = die["data"][indexStart:]
    confirmAdd = confirmAdd["data"][indexStart:]
    confirmAddNative = confirmAddNative["data"][indexStart:]
    asymptomaticAdd = asymptomaticAdd["data"][indexStart:]
    return provinceName, updateDate, confirmAll, cure, die, confirmAdd, confirmAddNative, asymptomaticAdd


# Build a DataFrame with one row per day. The series are passed in explicitly
# instead of being read from module-level variables.
def formatData(updateDate, confirmAll, cure, die, confirmAdd, confirmAddNative, asymptomaticAdd):
    dataList = []
    for i in range(len(updateDate)):
        # Current confirmed = cumulative confirmed - cumulative cured - cumulative deaths
        nowConfirm = confirmAll[i] - cure[i] - die[i]
        dataList.append([updateDate[i], confirmAdd[i], confirmAddNative[i], asymptomaticAdd[i],
                         confirmAll[i], cure[i], die[i], nowConfirm])
    # Columns: date, new confirmed, new local, new asymptomatic,
    # cumulative confirmed, cumulative cured, cumulative deaths, current confirmed
    return pd.DataFrame(dataList, columns=["日期", "新增确诊", "新增本土", "新增无症状",
                                           "累计确诊", "累计治愈", "累计死亡", "现有确诊"])


if __name__ == '__main__':
    writer = pd.ExcelWriter(r'D:\pycharmCode\BigData\result\provinceData.xlsx')
    for area in areas:
        url = 'https://voice.baidu.com/newpneumonia/getv2?' \
              'from=mola-virus&stage=publish&target=trend&isCaseIn=1&' \
              'area={}&callback=jsonp_1652526814976_84234'.format(area)
        # Example of the same URL with a percent-encoded area:
        # url = 'https://voice.baidu.com/newpneumonia/getv2?from=mola-virus&stage=publish&target=trend&isCaseIn=1&area=%E8%BE%BD%E5%AE%81&callback=jsonp_1652526814976_84234'
        response = requests.get(url).text
        # The response is JSONP: keep only the JSON between the outer parentheses
        results = json.loads(response[response.index('(') + 1:response.rindex(')')])
        provinceName, updateDate, confirmAll, cure, die, confirmAdd, confirmAddNative, asymptomaticAdd = getProvinceData(results)
        df = formatData(updateDate, confirmAll, cure, die, confirmAdd, confirmAddNative, asymptomaticAdd)
        print(df)
        # Write each province to its own sheet of the workbook
        df.to_excel(writer, index=False, sheet_name=provinceName)
    writer.close()

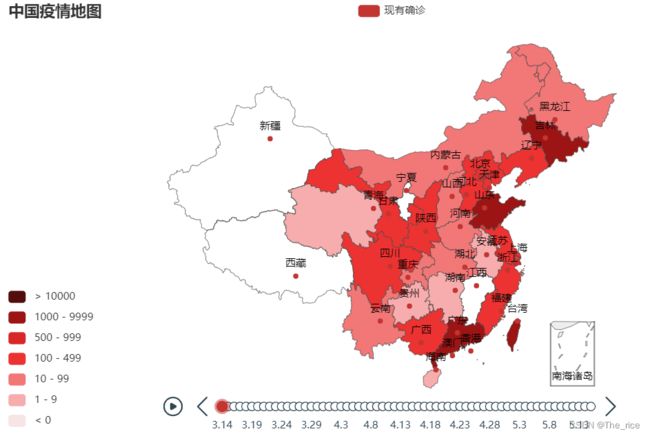
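The scraper above relies on the endpoint answering in JSONP, that is, JSON wrapped in a call to the function named by the `callback` parameter. As a minimal sketch of that unwrapping step, the helper below parses the wrapped text without depending on the length of the callback name. `strip_jsonp` is a hypothetical name, and the sample payload is made up to mimic the fields the script reads; it is not real API output.

```python
import json


def strip_jsonp(text):
    """Return the JSON payload wrapped in a JSONP call such as cb({...});"""
    start = text.index('(') + 1   # first character after the opening parenthesis
    end = text.rindex(')')        # position of the last closing parenthesis
    return json.loads(text[start:end])


# Made-up payload that only mimics the shape read by getProvinceData
sample = 'jsonp_1652526814976_84234({"data": [{"name": "demo", "trend": {"updateDate": ["3.1", "3.2"]}}]});'
result = strip_jsonp(sample)
print(result["data"][0]["trend"]["updateDate"])
```

Searching for the parentheses keeps the parsing correct if the callback name is ever changed in the URL.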

Map of current confirmed cases by province (dataVisualization_map.py)

import datetime

import pandas as pd
# Visualization is done with pyecharts
from pyecharts import options as opts
from pyecharts.charts import Map, Timeline

import BigData.provinceDataGet

areas = BigData.provinceDataGet.areas

# Colors used by the epidemic map, one per range of current confirmed cases
pieces = [
    {'min': 10000, 'color': '#540d0d'},
    {'max': 9999, 'min': 1000, 'color': '#9c1414'},
    {'max': 999, 'min': 500, 'color': '#d92727'},
    {'max': 499, 'min': 100, 'color': '#ed3232'},
    {'max': 99, 'min': 10, 'color': '#f27777'},
    {'max': 9, 'min': 1, 'color': '#f7adad'},
    {'max': 0, 'color': '#f7e4e4'},
]


def getDataFromExcel():
    confirmListAll = []
    dateList = []
    # Yesterday's date in the data's "month.day" format without zero padding, e.g. "5.14"
    yesterday = datetime.date.today() - datetime.timedelta(days=1)
    textY = '{}.{}'.format(yesterday.month, yesterday.day)
    for area in areas:
        data = pd.read_excel(r'D:\pycharmCode\BigData\result\provinceData.xlsx', sheet_name=area, usecols='A,H')
        datalist = list(data.现有确诊)  # current confirmed cases
        dateList = list(data.日期)  # dates
        # Sheets other than Taiwan, Hong Kong and Macau start at 3.1 instead of 3.14:
        # drop their first 13 days so that all series start on the same day
        if area not in ('台湾', '香港', '澳门'):
            datalist = datalist[13:]
        # Drop the last row when it is dated yesterday
        if str(dateList[-1]) == textY:
            dateList = dateList[:-1]
            datalist = datalist[:-1]
        confirmListAll.append(datalist)
    # dateList now comes from the last sheet read, which starts at 3.1: align it as well
    dateList = dateList[13:]
    # Transpose from one list per province to one list per day
    confirmList = [list(day) for day in zip(*confirmListAll)]
    return confirmList, dateList


if __name__ == '__main__':
    confirmList, dateList = getDataFromExcel()
    # Timeline holding one map per day
    t = Timeline()
    # Pair each province with its value for the day and draw the map
    for date, seq in zip(dateList, confirmList):
        ret = list(zip(areas, seq))
        print(ret)
        c = (
            Map()
            .add("Current confirmed", ret, "china")
            .set_global_opts(
                title_opts=opts.TitleOpts(title="China epidemic map"),
                visualmap_opts=opts.VisualMapOpts(pieces=pieces, is_piecewise=True)
            )
        )
        t.add(c, str(date))
    t.render(r'D:\pycharmCode\BigData\result\map.html')

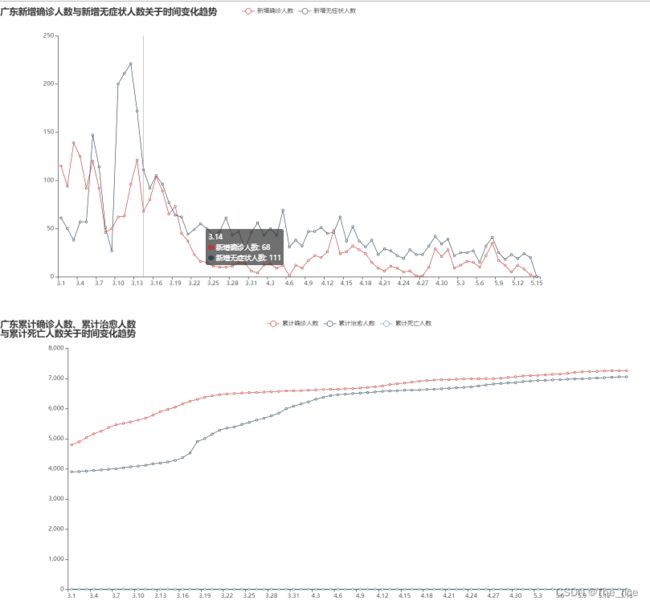
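The map needs the data grouped by day, while the workbook stores one series per province. A minimal sketch of that reshaping with `zip` follows. The three provinces, the dates, and all numbers are illustrative values, not scraped data.

```python
areas = ['北京', '上海', '天津']

# One list of current confirmed counts per province (illustrative numbers)
per_province = [
    [10, 12, 15],      # 北京 on day 1, 2, 3
    [200, 180, 150],   # 上海
    [0, 1, 1],         # 天津
]
dates = ['3.14', '3.15', '3.16']

# zip(*rows) transposes: one tuple per day holding every province's value
per_day = [list(day) for day in zip(*per_province)]

# Pair each province with its value: the (name, value) form passed to Map().add() above
frames = {date: list(zip(areas, values)) for date, values in zip(dates, per_day)}
print(frames['3.15'])
```

Note that `zip` stops at the shortest input, so a province with a shorter series silently shortens the timeline instead of raising an error.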

Line charts of epidemic data for each province (dataVisualization_line.py)

import pandas as pd
from pyecharts import options as opts
from pyecharts.charts import Line, Page

from utils import root_dir

province = ["北京", "上海", "天津", "重庆", "河北", "山西", "吉林", "辽宁", "黑龙江", "江苏",
            "浙江", "安徽", "福建", "江西", "山东", "河南", "湖北", "湖南", "广东", "海南",
            "四川", "贵州", "云南", "陕西", "甘肃", "青海", "台湾", "宁夏", "西藏", "新疆",
            "广西", "内蒙古", "香港", "澳门"]

for pro in province:
    # Read this province's sheet
    chinaDayData = pd.read_excel(r'{}\Big_Data_Work\provinceData.xlsx'.format(root_dir), sheet_name=pro)
    chinaDayData['日期'] = chinaDayData['日期'].map(str)
    days = list(chinaDayData.日期)

    # Restore the trailing zero of dates such as "3.10" that show up as "3.1":
    # a day of 1, 2 or 3 directly followed by a day ending in 1 was really 10, 20 or 30
    date = []
    for k, s in enumerate(days):
        if k <= len(days) - 2:
            day = s.split('.')[1]
            nextDay = days[k + 1].split('.')[1]
            if day in ('1', '2', '3') and nextDay[-1] == '1':
                s = s + '0'
        date.append(s)

    # Line chart of new confirmed and new asymptomatic cases
    my_line1 = (
        Line(init_opts=opts.InitOpts(width='1200px', height='600px'))
        # x axis
        .add_xaxis(date)
        # y axis
        .add_yaxis('New confirmed cases', list(chinaDayData.新增确诊))
        .add_yaxis('New asymptomatic cases', list(chinaDayData.新增无症状))
        # Hide the point labels and set the title and tooltip
        .set_series_opts(label_opts=opts.LabelOpts(is_show=False))
        .set_global_opts(
            title_opts=opts.TitleOpts(title=pro + ": new confirmed and new asymptomatic cases over time"),
            tooltip_opts=opts.TooltipOpts(is_show=True, trigger="axis")
        )
    )

    # Line chart of cumulative confirmed, cured and death counts
    my_line2 = (
        Line(init_opts=opts.InitOpts(width='1400px', height='600px'))
        # x axis
        .add_xaxis(date)
        # y axis
        .add_yaxis('Cumulative confirmed', list(chinaDayData.累计确诊))
        .add_yaxis('Cumulative cured', list(chinaDayData.累计治愈))
        .add_yaxis('Cumulative deaths', list(chinaDayData.累计死亡))
        # Hide the point labels and set the title and tooltip
        .set_series_opts(label_opts=opts.LabelOpts(is_show=False))
        .set_global_opts(
            title_opts=opts.TitleOpts(title=pro + ": cumulative confirmed, cured\nand death counts over time"),
            tooltip_opts=opts.TooltipOpts(is_show=True, trigger="axis")
        )
    )

    # Put both charts of this province on one page and save it as an HTML file
    page = Page(layout=Page.DraggablePageLayout)
    page.add(my_line1, my_line2)
    htmlPath = r"{}\Big_Data_Work\result\{}.html".format(root_dir, pro)
    page.render(htmlPath)
    # Apply the chart layout stored in chart_config.json and overwrite the same file
    page.save_resize_html(htmlPath, cfg_file=r"{}\Big_Data_Work\result\chart_config.json".format(root_dir),
                          dest=htmlPath)
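The date repair in the loop above can be isolated into a small function, which makes the rule easier to test on its own. This is a sketch under the assumption that the labels are strings in "month.day" form and in chronological order; `restore_trailing_zero` is a hypothetical helper name, not part of the project.

```python
def restore_trailing_zero(labels):
    """Turn labels such as "3.1" back into "3.10" when the next label shows
    that a trailing zero was lost (day 1, 2 or 3 followed by a day ending in 1)."""
    fixed = []
    for k, label in enumerate(labels):
        if k + 1 < len(labels):
            day = label.split('.')[1]
            next_day = labels[k + 1].split('.')[1]
            if day in ('1', '2', '3') and next_day.endswith('1'):
                label += '0'
        fixed.append(label)
    return fixed


print(restore_trailing_zero(['3.9', '3.1', '3.11', '3.19', '3.2', '3.21']))
```

The last label has no successor to compare against, so it is always left unchanged, which matches the behavior of the loop in the script.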

Forecasting the epidemic with a trained network model (LSTM_for_Acculative.py)

'''
A time series forecasting problem can be turned into a supervised learning
problem with the sliding window method.
'''
import math
import warnings

import matplotlib.pyplot as plt
import numpy
import pandas as pd
import tensorflow as tf
from sklearn.metrics import mean_squared_error
from sklearn.preprocessing import MinMaxScaler
from tensorflow.keras.layers import LSTM, Dense
from tensorflow.keras.models import Sequential

from utils import create_dataset, root_dir

warnings.filterwarnings('ignore')

if __name__ == '__main__':
    # Load the data
    dataset = pd.read_excel(r"{}\Big_Data_Work\Acculative.xlsx".format(root_dir))
    print("Dataset length:", len(dataset))
    # Use the "index" column as the pandas index
    dataset = dataset.set_index(['index'], drop=True)
    # Convert the integers to float
    dataset = dataset.astype('float32')
    plt.plot(dataset)
    plt.show()

    # Normalize the data to the range 0 to 1
    scaler = MinMaxScaler(feature_range=(0, 1))
    dataset = scaler.fit_transform(dataset)
    # The whole series is used for training; there is no separate test set
    train = dataset

    # Build supervised samples: the previous look_back values predict the next one
    look_back = 3
    trainX, trainY = create_dataset(train, look_back)
    print("Number of training samples after the conversion:", len(trainX))
    print(trainX, trainY)
    # Reshape the input to the 3D layout [samples, time steps, features]
    trainX = numpy.reshape(trainX, (trainX.shape[0], look_back, 1))  # (samples, look_back, 1)

    # Build the LSTM model: look_back time steps with 1 feature each, 4 LSTM units
    model = Sequential()
    model.add(LSTM(4, input_shape=(trainX.shape[1], trainX.shape[2])))
    model.add(Dense(1))
    model.compile(loss='mean_squared_error', optimizer='adam')
    model.fit(trainX, trainY, epochs=30, batch_size=1, verbose=2)
    # Print the model
    model.summary()
    #tf.keras.models.save_model(model,"Model_for_Acculative.ckpt")

    # Predict on the training inputs
    trainPredict = model.predict(trainX)
    # Undo the normalization; trainY is wrapped in a list to give it shape (1, samples)
    trainPredict = scaler.inverse_transform(trainPredict)
    trainY = scaler.inverse_transform([trainY])
    # RMSE (root mean squared error) measures the deviation between the predicted and
    # the observed values; the closer it is to 0, the better the model fits the data
    trainScore = math.sqrt(mean_squared_error(trainY[0], trainPredict[:, 0]))
    print('Train Score: %.2f RMSE' % (trainScore))

    # Plot the fitted values on top of the original series
    trainPredictPlot = numpy.empty_like(dataset)
    trainPredictPlot[:, :] = numpy.nan
    trainPredictPlot[look_back:len(trainPredict) + look_back, :] = trainPredict
    plt.plot(scaler.inverse_transform(dataset))
    plt.plot(trainPredictPlot)
    plt.show()

    # Observed vs. fitted values on the training set
    fig = plt.figure(figsize=(12, 10))
    plt.plot(trainY[0], label='observed')
    plt.plot(trainPredict[:, 0], label='fitted')
    plt.xlabel("date", fontsize=25)
    plt.ylabel("cumulative cases", fontsize=25)
    plt.legend(fontsize=25)
    plt.show()

    # Forecast the next day from the last look_back values of the series
    finalX = numpy.reshape(train[-look_back:], (1, look_back, 1))
    # The prediction is still normalized
    futurePredict = model.predict(finalX)
    # Convert it back to a real value
    futurePredict = scaler.inverse_transform(futurePredict)
    print('Predicted cumulative confirmed cases for the next day: ', futurePredict)

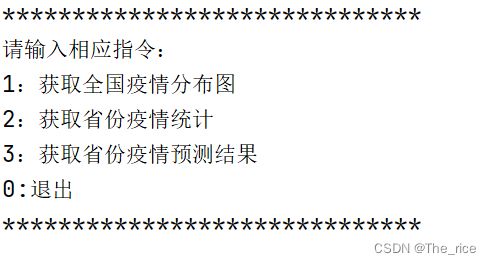
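The sliding window idea mentioned at the top of the script can be shown on a tiny series without any machine learning library. The sketch below uses plain lists and made-up numbers; `sliding_window` is a hypothetical illustration of the technique, not the project's `create_dataset`.

```python
def sliding_window(series, look_back):
    """Split a series into (inputs, target) pairs: look_back consecutive
    values are the inputs and the value right after them is the target."""
    samples = []
    for i in range(len(series) - look_back):
        samples.append((series[i:i + look_back], series[i + look_back]))
    return samples


series = [10, 20, 30, 40, 50, 60]
for inputs, target in sliding_window(series, look_back=3):
    print(inputs, '->', target)
```

A series of six values with a window of three yields three training pairs, and the last three values remain available as the input for forecasting the next, unseen value.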

Main program (predict.py)

import warnings

import pyautogui
import win32api

from utils import predict_Three_days, getData

warnings.filterwarnings('ignore')

if __name__ == '__main__':
    while True:
        print('*' * 30)
        print("Enter a command:")
        print('1: open the national epidemic map')
        print('2: open the epidemic statistics of a province')
        print('3: forecast the epidemic for a province')
        print('0: exit')
        print('*' * 30)
        try:
            income = int(input())
        except ValueError:
            income = -1  # not a number: handled by the final else branch
        # Clear the Run window output; requires an Alt+C shortcut to be configured in PyCharm
        pyautogui.hotkey("alt", "c")
        if income == 0:
            break
        elif income == 1:
            win32api.ShellExecute(0, 'open', '.\\result\\China_map.html', "", "", 1)
        elif income == 2:
            province = input("Province to look up (Chinese name, as used for the sheet names): ")
            try:
                win32api.ShellExecute(0, 'open', '.\\result\\{}.html'.format(province), "", "", 1)
            except Exception:
                print("No information found for that province!")
        elif income == 3:
            try:
                pro_vince = input("Province to forecast (Chinese name, as used for the sheet names): ")
                dataset = getData(r'.\provinceData.xlsx', sheet=pro_vince)
                # (label, column in the sheet, saved model used for that column)
                forecasts = [
                    ("new confirmed cases", '新增确诊', "Model_for_Additional.ckpt"),
                    ("new asymptomatic cases", '新增无症状', "Model_for_Additional.ckpt"),
                    ("cumulative confirmed cases", '累计确诊', "Model_for_Acculative.ckpt"),
                    ("cumulative cured cases", '累计治愈', "Model_for_Acculative.ckpt"),
                    ("cumulative deaths", '累计死亡', "Model_for_Acculative.ckpt"),
                ]
                for label, column, modelDir in forecasts:
                    print("{} -> predicted {} for the next three days:".format(pro_vince, label),
                          predict_Three_days(modelDir, dataset[['日期', column]]))
            except Exception:
                print("The prediction failed!")
        else:
            print("Invalid command, please enter a number from 0 to 3!")

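The program interacts with the user through simple numeric commands: 1 opens the national map, 2 opens the charts of a province, 3 prints the three-day forecast for a province, and 0 exits.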
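One way to keep such a numeric menu from crashing on unexpected input is to validate the command before dispatching on it. The following is a small sketch of that idea; `parse_command` and `VALID_COMMANDS` are illustrative names that mirror the menu above and are not part of the project.

```python
VALID_COMMANDS = {0, 1, 2, 3}


def parse_command(text):
    """Return the command number, or None when the input is not a valid command."""
    try:
        command = int(text.strip())
    except ValueError:
        return None
    return command if command in VALID_COMMANDS else None


for raw in ['1', ' 3 ', 'abc', '7']:
    print(repr(raw), '->', parse_command(raw))
```

Returning `None` for both non-numeric and out-of-range input lets the caller handle every invalid case in a single branch.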

Utilities (utils.py)

import warnings

import numpy
import pandas as pd
import tensorflow as tf
from sklearn.preprocessing import MinMaxScaler

warnings.filterwarnings('ignore')

# Base directory that contains the Big_Data_Work folder
root_dir = r'D:\gitdown'


# Turn an array of values into supervised samples; look_back is the window length
def create_dataset(dataset, look_back=1):
    dataX, dataY = [], []
    # Stop at len(dataset) - look_back so that the final value is also used as a target
    for i in range(len(dataset) - look_back):
        dataX.append(dataset[i:(i + look_back), 0])  # X: look_back consecutive values
        dataY.append(dataset[i + look_back, 0])  # Y: the value that follows them
    return numpy.array(dataX), numpy.array(dataY)


# Post-process a prediction: clamp negative values to 0 and round down
def process_num(num):
    if num <= 0:
        return 0
    return numpy.floor(num)


# Predict the next three days with a saved model
def predict_Three_days(model_dir, dataset, look_back=3):
    dataset = dataset.set_index(['日期'], drop=True)
    dataset = dataset.astype('float32')
    # Normalize the data to the range 0 to 1
    scaler = MinMaxScaler(feature_range=(0, 1))
    train = scaler.fit_transform(dataset)
    # Load the model
    model = tf.keras.models.load_model(model_dir)
    # Forecast recursively: each prediction is appended to the window used for the next one
    window = train[-look_back:, 0]
    predictions = []
    for _ in range(3):
        # Input layout is [samples, time steps, features]
        nextValue = model.predict(numpy.reshape(window, (1, look_back, 1)))
        predictions.append(nextValue)
        window = numpy.append(window[1:], nextValue)
    # Convert the normalized predictions back to real values
    return tuple(process_num(scaler.inverse_transform(p).squeeze()) for p in predictions)


# Read one worksheet into a DataFrame
def getData(path, sheet):
    return pd.read_excel(path, sheet_name=sheet)
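The three-day forecast is recursive: each predicted value is fed back as the newest input for the next step, and all values are scaled to the range 0 to 1 before prediction and scaled back afterwards. The sketch below reproduces that flow in plain Python. The `mean_model` function is a made-up stand-in for the trained LSTM (it only averages its inputs), the helper names are hypothetical, and the series is illustrative.

```python
def min_max_scale(values):
    """Scale values to [0, 1]; also return a function that undoes the scaling."""
    low, high = min(values), max(values)
    span = (high - low) or 1.0   # avoid division by zero for a constant series
    scaled = [(v - low) / span for v in values]
    return scaled, lambda s: s * span + low


def recursive_forecast(model, history, look_back=3, steps=3):
    """Predict `steps` values, feeding each prediction back into the window."""
    window = list(history[-look_back:])
    predictions = []
    for _ in range(steps):
        next_value = model(window)
        predictions.append(next_value)
        window = window[1:] + [next_value]   # slide the window forward by one
    return predictions


def mean_model(window):
    # Stand-in for model.predict: NOT an LSTM, only here to make the sketch runnable
    return sum(window) / len(window)


series = [100, 110, 130, 160, 200]
scaled, unscale = min_max_scale(series)
forecast = [unscale(p) for p in recursive_forecast(mean_model, scaled)]
print([round(v, 1) for v in forecast])
```

Because every later step consumes earlier predictions, errors accumulate with the horizon, which is one reason to keep the forecast as short as three days.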