Pytorch → ONNX → TensorRT

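Our lab's road-detection project with the Emergency and Disaster Reduction Center needed faster inference. I first tried manually fusing the model's Conv and BN layers, but that gave no noticeable speedup. My "mentor" zwf then asked me to finish the TensorRT attempt he had left unfinished half a year earlier, and what follows is the result.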

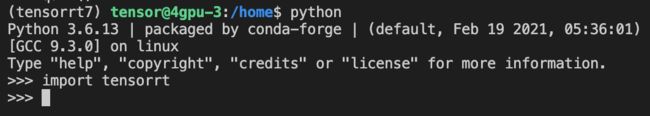

First, the versions in my environment:

- TensorRT version: TensorRT-7.2.3.4.Ubuntu-18.04.x86_64-gnu.cuda-11.1.cudnn8.1
- GPU type: NVIDIA RTX 2080 Ti
- CUDA version: 11.1.1
- cuDNN version: 8.1.0.77
- Operating system + version: Ubuntu 18.04
- Python version: 3.6.13
- PyCUDA version: 2020.1
- ONNX version: 1.10.1
- PyTorch version: 1.7.1+cu110
- Torchvision version: 0.8.2+cu110

Pytorch → ONNX

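This part mainly follows the PyTorch documentation, which describes two export modes:

- **Trace-based:** does not support dynamic networks, and the input dimensions are fixed.
- **Script-based:** supports dynamic networks and variable input dimensions.

The second is clearly the better design, but adapting a model to it takes considerably more work, so here I use trace-based export. Torchvision's models also support export to ONNX (see here). Note that torch2trt is a separate tool: it converts PyTorch models directly to TensorRT rather than to ONNX.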

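```python
import numpy as np
import torch
import onnxruntime as ort

from networks.dinknet import DinkNet34  # assumed module path (hypothetical); point this at your DinkNet34

net = DinkNet34()
# The checkpoint was presumably saved from a DataParallel model, so wrap before loading...
net = torch.nn.DataParallel(net, device_ids=range(torch.cuda.device_count()))
net.load_state_dict(torch.load("log01_dink34.th"))
# ...then unwrap: ONNX export does not accept DataParallel, so the model must sit on one GPU.
net = net.module
net = net.cuda()
model = net.eval()

# Static input: tracing fixes these dimensions in the exported graph.
x = torch.rand(1, 3, 1024, 1024).cuda()

with torch.no_grad():
    predictions = model(x)
    trace_backbone = torch.jit.trace(model, x, check_trace=False)
    # PyTorch 1.7 requires example_outputs when exporting a ScriptModule (the traced model).
    torch.onnx.export(trace_backbone, x, "Dinknet-1.onnx", verbose=False,
                      export_params=True, training=False, opset_version=10,
                      example_outputs=predictions)

# Run the exported model with ONNX Runtime.
ort_session = ort.InferenceSession("Dinknet-1.onnx")
input_name = ort_session.get_inputs()[0].name
onnx_outputs = ort_session.run(None, {input_name: x.cpu().numpy().astype(np.float32)})

# Check the result: element-wise difference, then its largest absolute value.
diff = predictions.cpu().numpy() - onnx_outputs[0]
print(diff)
print(np.abs(diff).max())
```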

Sample output is shown below. The differences are on the order of 1e-7 to 1e-6, so the conversion succeeded.

```
[[[-4.7683716e-07 3.5762787e-07 3.8743019e-07 1.1920929e-07
-9.5367432e-07 -1.0728836e-06 -1.4305115e-06 5.9604645e-08
-7.1525574e-07 3.5762787e-07 5.9604645e-08 7.7486038e-07
1.1920929e-07 -5.9604645e-07 4.1723251e-07 -3.5762787e-07
0.0000000e+00 -1.7881393e-07 -1.1920929e-07 1.7881393e-07
...
4.7683716e-07 -2.3841858e-07 5.6624413e-07 2.3841858e-07
-9.5367432e-07 -7.1525574e-07 2.3841858e-07 5.9604645e-07]]]
```
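An element-wise difference like the one printed above is easier to judge once it is reduced to a few scalar errors and a pass/fail verdict. The sketch below does that with numpy. The helper name `compare_outputs` and its default tolerances are assumptions chosen for illustration, not values used in this project.

```python
import numpy as np


def compare_outputs(reference, candidate, atol=1e-5, rtol=1e-3):
    """Compare two model outputs, e.g. PyTorch vs. ONNX Runtime.

    Returns (max_abs_err, max_rel_err, ok). The tolerances are illustrative
    defaults; `ok` uses the same criterion as np.allclose with `reference`
    as the second argument.
    """
    reference = np.asarray(reference, dtype=np.float64)
    candidate = np.asarray(candidate, dtype=np.float64)
    if reference.shape != candidate.shape:
        raise ValueError(f"shape mismatch: {reference.shape} vs {candidate.shape}")
    abs_err = np.abs(reference - candidate)
    # Relative error, guarded against division by (near-)zero reference values.
    rel_err = abs_err / np.maximum(np.abs(reference), 1e-12)
    ok = bool(np.all(abs_err <= atol + rtol * np.abs(reference)))
    return float(abs_err.max()), float(rel_err.max()), ok
```

With the variables from the export script, you would call `compare_outputs(predictions.cpu().numpy(), onnx_outputs[0])`. Unlike Python's built-in `max`, this always reduces over every element and returns plain numbers.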

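Notes:

- **Static shapes throughout.** The ONNX model was exported with a static input of fixed dimensions, so every TensorRT conversion below also builds a static-shape engine and runs static inference with fixed input dimensions.
- **Changing only the inference shape gives zeros.** If you change the input dimensions used at inference time without also changing the static input dimensions used for the ONNX export, the results come out as 0. For example, if static inference uses a batch size of 8 but the ONNX model was exported with a batch size of 1, only the first item in the batch gets a result and the remaining seven are all 0.
- **No DataParallel during export.** The net being exported to ONNX cannot be wrapped in DataParallel; it has to be placed on a single GPU.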
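The batch-size mismatch described in these notes (only the first item has a result) can be spotted automatically in the flat output buffer. The numpy sketch below uses a hypothetical helper, `find_empty_batch_items`, and assumes the output is laid out batch-first.

`cuda.pagelocked_empty` does not zero its memory, so unwritten items are not guaranteed to be exactly 0. Treat this check as a heuristic, not a proof.

```python
import numpy as np


def find_empty_batch_items(flat_output, batch_size):
    """Return indices of batch items whose outputs are all exactly zero.

    flat_output: 1-D host buffer as returned by do_inference (assumed batch-first).
    """
    per_item = np.asarray(flat_output).reshape(batch_size, -1)
    return [i for i in range(batch_size) if not np.any(per_item[i])]
```

A non-empty result for a batch of 8, such as `[1, 2, ..., 7]`, suggests the ONNX model was exported with a smaller static batch than the one being fed.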

ONNX → TensorRT

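TensorRT installation:

Installing TensorRT is straightforward as long as you respect the version dependencies. The official installation guide already lists the steps in detail, so here is only a short summary:

- Go to the download page and choose a TensorRT version, noting which CUDA and cuDNN versions it requires. Because I install TensorRT inside a virtual environment, CUDA and cuDNN can be installed into that environment myself. I chose the tar package, which can be unpacked wherever you like.
- In the virtual environment:
  - Install CUDA and cuDNN.
  - Install PyCUDA, which the TensorRT Python API needs.
  - Install PyTorch and torchvision. If this step reports a missing dependency, just install it with `conda install` or `pip install`.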

```shell
conda install cudatoolkit=11.1
conda install cudnn=8.1
pip install 'pycuda<2021.1'
pip install torch==1.7.1+cu110 torchvision==0.8.2+cu110 torchaudio==0.7.2 -f https://download.pytorch.org/whl/torch_stable.html
```

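The official documentation says TensorRT was tested with PyTorch 1.8.1 and is backward compatible. However, 1.8.1 did not work for me, so I installed 1.7.1. This may be because I installed TensorRT 7 rather than TensorRT 8.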


- Add the virtual environment's CUDA library path to `LD_LIBRARY_PATH`:

```shell
export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:/home/tensor/anaconda3/envs/lhc/lib
```

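Notes:

- In the step "Install the Python TensorRT wheel file.", I chose the `-cp36` wheel. Installing some versions may fail with an error such as:

```
ERROR: tensorrt-7.2.3.4-cp36-none-linux_x86_64.whl is not a supported wheel on this platform.
```

  This means your Python version does not match the wheel. Run `python -V` to check that the interpreter is the version you expect, and switch if it is not. Python 3.6 has relatively good compatibility.
- The uff module is only needed if you use TensorFlow.
- Importing tensorrt may fail with an error saying it does not match the installed CUDA or cuDNN version. If so, you have probably not added one of two paths to `LD_LIBRARY_PATH`: the absolute path of TensorRT's `lib` directory, or the virtual environment's CUDA library path:

```shell
export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:/home/lhc/TensorRT-7.2.3.4/lib
export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:/home/tensor/anaconda3/envs/lhc/lib
```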
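The installation notes above point to two setup checks: whether the wheel's Python tag matches the interpreter, and whether the library directories are on `LD_LIBRARY_PATH`. Both can be scripted with the standard library alone. The sketch below uses hypothetical helper names. It assumes the usual wheel file-name layout `name-version-pytag-abitag-platform.whl` and a Linux-style `:`-separated `LD_LIBRARY_PATH`.

```python
import os
import sys


def python_tag():
    """CPython tag of the running interpreter, e.g. 'cp36' for Python 3.6."""
    return "cp{}{}".format(sys.version_info.major, sys.version_info.minor)


def wheel_python_tag(wheel_name):
    """Python tag from a wheel file name (name-version-pytag-abitag-platform.whl)."""
    stem = os.path.basename(wheel_name)
    if not stem.endswith(".whl"):
        raise ValueError("not a wheel file name: " + wheel_name)
    # The python tag is always third from the end, even with an optional build tag.
    return stem[: -len(".whl")].split("-")[-3]


def missing_library_dirs(required_dirs, ld_library_path=None):
    """Directories from required_dirs that are not on LD_LIBRARY_PATH (':'-separated)."""
    if ld_library_path is None:
        ld_library_path = os.environ.get("LD_LIBRARY_PATH", "")
    present = {os.path.normpath(p) for p in ld_library_path.split(":") if p}
    return [d for d in required_dirs if os.path.normpath(d) not in present]
```

For example, `missing_library_dirs(["/home/lhc/TensorRT-7.2.3.4/lib", "/home/tensor/anaconda3/envs/lhc/lib"])` lists whichever of the two directories from the `export` lines is not yet on the path. Likewise, `python_tag() == wheel_python_tag("tensorrt-7.2.3.4-cp36-none-linux_x86_64.whl")` should be `True` before you run `pip install` on that wheel.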

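Using TensorRT:

The overall flow has four steps:

1. Build an engine from the ONNX file through TensorRT's Python API.
2. Create an execution context.
3. Allocate memory with `allocate_buffers()`.
4. Call `do_inference()` to run inference.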

```python
import numpy as np
import pycuda.autoinit  # creates a CUDA context on import
import pycuda.driver as cuda
import tensorrt as trt  # TensorRT 7

TRT_LOGGER = trt.Logger(trt.Logger.WARNING)


class HostDeviceMem(object):
    """Pairs a page-locked host buffer with its device buffer."""

    def __init__(self, host_mem, device_mem):
        self.host = host_mem
        self.device = device_mem

    def __str__(self):
        return "Host:\n" + str(self.host) + "\nDevice:\n" + str(self.device)

    def __repr__(self):
        return self.__str__()


# Allocates all buffers required for an engine, i.e. host/device inputs/outputs.
def allocate_buffers(engine):
    inputs, outputs, bindings = [], [], []
    stream = cuda.Stream()
    for binding in engine:
        # For an explicit-batch engine the binding shape already includes the batch
        # dimension, and engine.max_batch_size is 1.
        size = trt.volume(engine.get_binding_shape(binding)) * engine.max_batch_size
        dtype = trt.nptype(engine.get_binding_dtype(binding))
        # Allocate host and device buffers.
        host_mem = cuda.pagelocked_empty(size, dtype)
        device_mem = cuda.mem_alloc(host_mem.nbytes)
        # Append the device buffer address to the device bindings.
        bindings.append(int(device_mem))
        # Append to the appropriate list.
        if engine.binding_is_input(binding):
            inputs.append(HostDeviceMem(host_mem, device_mem))
        else:
            outputs.append(HostDeviceMem(host_mem, device_mem))
    return inputs, outputs, bindings, stream


# Builds a static-shape, explicit-batch engine from an ONNX file.
def build_engine_onnx(model_file):
    explicit_batch = 1 << int(trt.NetworkDefinitionCreationFlag.EXPLICIT_BATCH)
    with trt.Builder(TRT_LOGGER) as builder, \
            builder.create_network(flags=explicit_batch) as network, \
            trt.OnnxParser(network, TRT_LOGGER) as parser:
        builder.max_workspace_size = 1 << 30  # 1 GB
        # builder.max_batch_size has no effect on explicit-batch networks.
        # Load the ONNX model and parse it to populate the TensorRT network.
        with open(model_file, "rb") as model:
            if not parser.parse(model.read()):
                print("ERROR: Failed to parse the ONNX file.")
                for error in range(parser.num_errors):
                    print(parser.get_error(error))
                return None
        return builder.build_cuda_engine(network)


# Generalized for multiple inputs/outputs; inputs and outputs are lists of HostDeviceMem.
def do_inference(context, bindings, inputs, outputs, stream):
    # Transfer input data to the GPU.
    for inp in inputs:
        cuda.memcpy_htod_async(inp.device, inp.host, stream)
    # Run inference. Explicit-batch engines use execute_async_v2 (no batch_size argument).
    context.execute_async_v2(bindings=bindings, stream_handle=stream.handle)
    # Transfer predictions back from the GPU.
    for out in outputs:
        cuda.memcpy_dtoh_async(out.host, out.device, stream)
    # Synchronize the stream.
    stream.synchronize()
    # Return only the host outputs.
    return [out.host for out in outputs]


input_shape = [3, 1024, 1024]
x = np.ones([1] + input_shape, dtype=np.float32)
onnx_model_file = "/home/lhc/tensorrt-test/Dinknet-1.onnx"

with build_engine_onnx(onnx_model_file) as engine, engine.create_execution_context() as context:
    inputs, outputs, bindings, stream = allocate_buffers(engine)
    print("Running inference on image x...")
    # Copy into the page-locked buffer; x must be float32 with the engine's static shape.
    np.copyto(inputs[0].host, x.ravel())
    trt_outputs = do_inference(context, bindings=bindings, inputs=inputs, outputs=outputs, stream=stream)
    mask = trt_outputs[0].reshape((1024, 1024))
    print(mask.shape)
```
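`allocate_buffers` sizes each buffer as the element count of the binding shape times `engine.max_batch_size`. For an explicit-batch engine the batch dimension is already part of the binding shape, and `max_batch_size` reports 1. The sizes therefore reduce to simple arithmetic, sketched below in plain Python. The output shape `(1, 1, 1024, 1024)` is an assumption inferred from the `reshape((1024, 1024))` in the listing, and float32 (4 bytes) is assumed for both bindings.

```python
import operator
from functools import reduce

FLOAT32_BYTES = 4


def volume(shape):
    """Number of elements in a tensor of the given shape (what trt.volume computes)."""
    return reduce(operator.mul, shape, 1)


def buffer_bytes(shape, max_batch_size=1, itemsize=FLOAT32_BYTES):
    """Bytes allocate_buffers requests for one binding of this shape."""
    return volume(shape) * max_batch_size * itemsize


for name, shape in [("input", (1, 3, 1024, 1024)), ("output", (1, 1, 1024, 1024))]:
    # Prints 12.0 MiB for the input and 4.0 MiB for the output.
    print(f"{name} {shape}: {buffer_bytes(shape) / 2**20:.1f} MiB")
```

For the batch-8 engine used in the speed test below, the same formula gives 96 MiB for the input and 32 MiB for the output, still small compared with the 1 GB builder workspace.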

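Correctness:

I replicated one input image into a batch of 8 and ran all 8 copies through TensorRT at once. The results match those of the original model in PyTorch with the same preprocessing. In the comparison, the left image is the PyTorch result for the original model, and the middle and right images are TensorRT's results for the first and eighth copies.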

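Speed:

Input size (8, 3, 1024, 1024):

- TensorRT on a single 2080 Ti: 100 inference runs took 17.31880474090576 s
- For comparison, PyTorch on four GPUs: 100 inference runs took 24.691092491149902 s
- For comparison, PyTorch on a single GPU: 100 inference runs took 34.04168725013733 s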
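The timings above can be turned into per-run latency, throughput and relative speed. The sketch below does that arithmetic. It assumes each of the 100 runs processes one full batch of 8 images, which is how the input size above reads.

```python
BATCH = 8
RUNS = 100

timings_s = {
    "TensorRT, 1x 2080 Ti": 17.31880474090576,
    "PyTorch, 4 GPUs": 24.691092491149902,
    "PyTorch, 1 GPU": 34.04168725013733,
}


def summarize(total_s, runs=RUNS, batch=BATCH):
    """Return (milliseconds per run, images per second) for one timing."""
    return 1000.0 * total_s / runs, runs * batch / total_s


baseline = timings_s["TensorRT, 1x 2080 Ti"]
for name, total in timings_s.items():
    ms_per_run, img_per_s = summarize(total)
    print(f"{name}: {ms_per_run:.1f} ms/run, {img_per_s:.1f} img/s, "
          f"{total / baseline:.2f}x the TensorRT time")
```

Under that assumption, TensorRT on one GPU runs at about 173.2 ms per batch (about 46.2 img/s). That is roughly 1.97x faster than PyTorch on one GPU and roughly 1.43x faster than PyTorch on four GPUs.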

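Note: I ran into three problems.

- **Transposed convolution reported as unsupported.** TensorRT claimed it did not support transposed convolution, even though I had verified that the generated ONNX file produced correct output. Fix: I changed the engine-building code from dynamic shapes with dynamic inference to static shapes with static inference.
- **`Assertion failed: cublasStatus == CUBLAS_STATUS_SUCCESS`** (see here). CUDA 10.2 has a bug in cuBLASLt, and NVIDIA provides a patch package for CUDA 10.2. Fix: we used CUDA 11.1 with the matching TensorRT version instead.
- **`pycuda._driver.LogicError: cuMemcpyHtoDAsync failed: invalid argument`** during TensorRT inference. Cause: the input data fed to the TensorRT engine had the wrong shape or dtype. Fix: check that the input shape matches the engine's input binding and that the dtype is `np.float32`.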
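The third problem above can be caught earlier, with a readable error instead of the opaque `cuMemcpyHtoDAsync failed: invalid argument`, by validating the input on the host before copying it. The numpy sketch below uses a hypothetical helper, `prepare_trt_input`, and assumes a static-shape FP32 input binding.

```python
import numpy as np


def prepare_trt_input(x, expected_shape):
    """Validate and convert an array for a static-shape float32 TensorRT input.

    Raises ValueError on a shape mismatch; returns a C-contiguous float32 copy
    (or x itself if it already qualifies).
    """
    x = np.asarray(x)
    if tuple(x.shape) != tuple(expected_shape):
        raise ValueError(
            f"input shape {x.shape} != engine input shape {tuple(expected_shape)}")
    # FP32 bindings expect float32 data laid out contiguously in row-major order.
    return np.ascontiguousarray(x, dtype=np.float32)
```

In the inference script this would be used as `np.copyto(inputs[0].host, prepare_trt_input(x, (1, 3, 1024, 1024)).ravel())`. The expected shape could also be read from `engine.get_binding_shape(0)` rather than hard-coded.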

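Using TensorRT on multiple GPUs:

The TensorRT developer guide describes how engines relate to GPUs:

- Each `ICudaEngine` object is bound to a specific GPU when it is instantiated, either by the builder or on deserialization.
- To select the GPU, call `cudaSetDevice()` before building or deserializing the engine.
- Each `IExecutionContext` is bound to the same GPU as the engine it was created from.
- When calling `execute()` or `enqueue()`, make sure the calling thread is associated with the correct device, calling `cudaSetDevice()` if necessary.

Since every TensorRT engine is bound to one GPU, I used a process pool so that each GPU gets its own process, which builds an engine and runs inference in parallel with the others.

Python's built-in multiprocessing module raises an error once CUDA is involved, because CUDA cannot be re-initialized in a process created with the default `fork` start method on Linux. I followed this article for the fix, which is to use the `spawn` start method. The final code: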

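```python
import numpy as np
import torch

# Assumes build_engine_onnx, allocate_buffers, do_inference and onnx_model_file from the
# previous listing live in this same file. With the "spawn" start method each worker
# re-imports this module, so that listing's top-level demo code should be removed or
# moved under the __main__ guard.


def build2inference(x, gpu_id):
    print(torch.cuda.device_count())
    torch.cuda.set_device(gpu_id)  # select the GPU this worker builds its engine on
    with build_engine_onnx(onnx_model_file) as engine, engine.create_execution_context() as context:
        inputs, outputs, bindings, stream = allocate_buffers(engine)
        print("Running inference on image {}...".format(gpu_id))
        np.copyto(inputs[0].host, x.ravel())
        trt_outputs = do_inference(context, bindings=bindings, inputs=inputs, outputs=outputs, stream=stream)
        mask = trt_outputs[0].reshape((1024, 1024)).copy()
    return mask


if __name__ == "__main__":
    ctx = torch.multiprocessing.get_context("spawn")  # CUDA cannot be re-initialized after fork
    print(torch.multiprocessing.cpu_count())
    pool = ctx.Pool(3)  # choose the number of workers sensibly, e.g. one per GPU
    input_shape = [3, 1024, 1024]
    x = np.ones([1] + input_shape).astype(np.float32)
    pool_list = []
    for i in range(2, 4):  # GPUs 2 and 3
        res = pool.apply_async(build2inference, (x, i))
        pool_list.append(res)
    pool.close()  # close the pool: no new tasks are accepted
    pool.join()
    masks = [res.get() for res in pool_list]  # also re-raises any exception from a worker
```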
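The worker above receives the same `x` on every GPU. To actually share one batch between GPUs, the batch can be split along its first axis into one chunk per GPU before dispatching. The numpy sketch below shows this. `split_batch_for_gpus` is a hypothetical helper, and the ids 2 and 3 simply mirror `range(2, 4)` above.

Because the engines are static-shape, each chunk must match the batch size the ONNX model was exported with. For example, two GPUs sharing a batch of 8 would each need an engine built from a model exported with batch size 4, and the worker's final `reshape` would need to match that batch size.

```python
import numpy as np


def split_batch_for_gpus(x, gpu_ids):
    """Split a batch along axis 0 into one contiguous float32 chunk per GPU.

    Returns a list of (gpu_id, chunk) pairs. Requires an even split so that
    every static-shape engine receives exactly the batch size it was built for.
    """
    if len(x) % len(gpu_ids) != 0:
        raise ValueError("batch size must be divisible by the number of GPUs")
    chunks = np.split(np.asarray(x, dtype=np.float32), len(gpu_ids), axis=0)
    return [(gpu_id, np.ascontiguousarray(c)) for gpu_id, c in zip(gpu_ids, chunks)]
```

Each pair would then be dispatched as `pool.apply_async(build2inference, (chunk, gpu_id))`. One caveat worth checking: `pycuda.autoinit` creates its CUDA context on the default device when it is imported. In each worker it may therefore be more robust to restrict the visible device with the `CUDA_VISIBLE_DEVICES` environment variable before importing pycuda. That is an alternative to `torch.cuda.set_device`, not what was used here.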

Correctness:

Speed:

Input size (4, 3, 1024, 1024):

- TensorRT on two 2080 Ti GPUs: 100 inference runs took 9.2540 s

References:

https://zongxp.blog.csdn.net/article/details/86077553

https://zhuanlan.zhihu.com/p/88318324

https://github.com/RizhaoCai/PyTorch_ONNX_TensorRT/blob/master/dynamic_shape_example.py

https://blog.csdn.net/qq_36276587/article/details/113175314

https://blog.csdn.net/Mr_WHITE2/article/details/107841255

https://docs.nvidia.com/deeplearning/tensorrt/developer-guide/index.html