【论文笔记】无监督的视觉里程计、深度图估计、视差图估计方法:Robustness Meets Deep Learning: An End-to-EndHybrid Pipeline...

Robustness Meets Deep Learning: An End-to-EndHybrid Pipeline for Unsupervised Learning of Ego motion

宾夕法尼亚大学

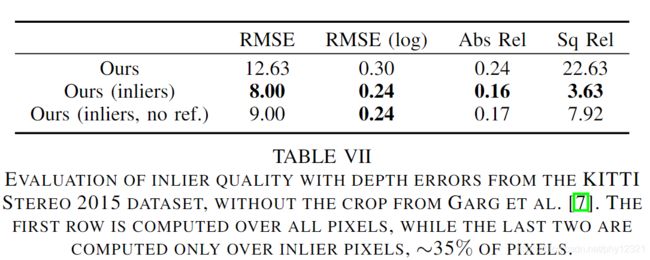

本文提出使用深度学习方法和几何RANSAC结合的无监督相对位姿回归pipeline, 分别对光流图和视差图进行预测,然后使用RANSAC求解内点集合以及相对位姿。

网络结构基于Flownet-S architecture,是其简化版本。

“In short, we remove the final three convolution layers from the encoder and the first two convolution layers from the decoder, and halve the number of output channels at each layer. In our experiments, our network reduces an input image with resolution 128x448 to activations with resolution 8x28 and 256 channels at the bottleneck”

光流和视差预测网络的损失函数:

由于光流图和视差图均表示一种图像放射变换,可以使用image warp函数进行建模,因此在损失函数中使用函数W(x_i)->x_j来描述二者。

1. Appearance Loss

- 光度一致损失:(基于光度一致假设)

L photo W i → j ( x ⃗ ) = ρ ( I i ( x ⃗ ) − I j ( W i → j ( x ⃗ ) ) ) ρ ( x ) = x 2 + ϵ 2 ρ ( x ) i s t h e r o b u s t C h a r b o n n i e r l o s s f u n c t i o n \begin{aligned} \mathcal{L}_{\text {photo }}^{W_{i \rightarrow j}}(\vec{x}) &=\rho\left(I_{i}(\vec{x})-I_{j}\left(W_{i \rightarrow j}(\vec{x})\right)\right) \\ \rho(x) &=\sqrt{x^{2}+\epsilon^{2}} \end{aligned} \\ ρ(x)\ is \ the \ robust \ Charbonnier\ loss \ function Lphoto Wi→j(x)ρ(x)=ρ(Ii(x)−Ij(Wi→j(x)))=x2+ϵ2ρ(x) is the robust Charbonnier loss function

光度一致假设:assumes that the correct warp should warp pixels from Ii to pixels at Ij with the same intensity

将光度一致假设与结构性损失结合就得到Appearance Loss:

L appearance W i → j ( x ⃗ ) = ( 1 − α ) SSIM ( x ⃗ ) + α L photo ( x ⃗ ) \mathcal{L}_{\text {appearance }}^{W_{i \rightarrow j}}(\vec{x})=(1-\alpha) \operatorname{SSIM}(\vec{x})+\alpha \mathcal{L}_{\text {photo }}(\vec{x}) Lappearance Wi→j(x)=(1−α)SSIM(x)+αLphoto (x)

2.几何一致性损失

前后向(或左右视图)的warp得到的结果应该是相反的,所以求和结果应该为0(个人理解,有错误欢迎指正),原文:

“the backward warp, warped to the previous image, should be equivalent to the negative of the forward warp. We apply this constraint to both the disparity and flow:”

L consistency W i → j ( x ⃗ ) = ρ ( W i → j ( x ⃗ ) + W j → i ( W i → j ( x ⃗ ) ) ) \mathcal{L}_{\text {consistency }}^{W_{i \rightarrow j}}(\vec{x})=\rho\left(W_{i \rightarrow j}(\vec{x})+W_{j \rightarrow i}\left(W_{i \rightarrow j}(\vec{x})\right)\right) Lconsistency Wi→j(x)=ρ(Wi→j(x)+Wj→i(Wi→j(x)))

3.Smoothness Regularization

图像边缘处权重更小,即要求梯度误差在平滑区域更小:

L smooth W i → j ( x ⃗ ) = ρ ( δ x W i → j ( x ⃗ ) e − ∣ δ x I ( x ⃗ ) ∣ ) + ρ ( δ y W i → j ( x ⃗ ) e − ∣ δ y I ( x ⃗ ) ∣ ) \begin{aligned} \mathcal{L}_{\text {smooth }}^{W_{i \rightarrow j}}(\vec{x})=& \rho\left(\delta_{x} W_{i \rightarrow j}(\vec{x}) e^{-\left|\delta_{x} I(\vec{x})\right|}\right)+\\ & \rho\left(\delta_{y} W_{i \rightarrow j}(\vec{x}) e^{-\left|\delta_{y} I(\vec{x})\right|}\right) \end{aligned} Lsmooth Wi→j(x)=ρ(δxWi→j(x)e−∣δxI(x)∣)+ρ(δyWi→j(x)e−∣δyI(x)∣)

4.Motion Occlusion Estimation

通过生成 01 mask 对于warp到图像外面的像素进行过滤。

然后将mask用于光度一致损失和几何一致损失。

mask是直接在预测的光流图和视差图上进行计算的,不需要任何学习参数。

总损失函数

对于一个warp:

L total W i → j = ∑ x ⃗ M o c c W i → j ( x ⃗ ) ( L photo W i → j ( x ⃗ ) + λ 1 L consistency W i → j ( x ⃗ ) ) + λ 2 L smoothness W i → j ( x ⃗ ) \begin{aligned} \mathcal{L}_{\text {total }}^{W_{i \rightarrow j}}=& \sum_{\vec{x}} M_{o c c}^{W_{i \rightarrow j}}(\vec{x})\left(\mathcal{L}_{\text {photo }}^{W_{i \rightarrow j}}(\vec{x})+\lambda_{1} \mathcal{L}_{\text {consistency }}^{W_{i \rightarrow j}}(\vec{x})\right) \\ &+\lambda_{2} \mathcal{L}_{\text {smoothness }}^{W_{i \rightarrow j}}(\vec{x}) \end{aligned} Ltotal Wi→j=x∑MoccWi→j(x)(Lphoto Wi→j(x)+λ1Lconsistency Wi→j(x))+λ2Lsmoothness Wi→j(x)

将每个光流和视差预测的误差进行求和:

L total = ∑ c ∈ { l , r } L total F 0 → 1 , c + L total F 1 → 0 , c + ∑ t ∈ { 0 , 1 } L total D l → r , t + L total D r → l , t \begin{aligned} \mathcal{L}_{\text {total }}=& \sum_{c \in\{l, r\}} \mathcal{L}_{\text {total }}^{F_{0 \rightarrow 1, c}}+\mathcal{L}_{\text {total }}^{F_{1 \rightarrow 0, c}}+\\ & \sum_{t \in\{0,1\}} \mathcal{L}_{\text {total }}^{D_{l \rightarrow r, t}}+\mathcal{L}_{\text {total }}^{D_{r \rightarrow l, t}} \end{aligned} Ltotal =c∈{l,r}∑Ltotal F0→1,c+Ltotal F1→0,c+t∈{0,1}∑Ltotal Dl→r,t+Ltotal Dr→l,t

根据光流F和视差图D使用RANSAC求解相对位姿

本质是用视差图和光流图的内点来预测相机的运动速度v w,乘以采样频率得到相机的运动位移。

首先由视差图D、相机baseline b和焦距f,可求得深度图Z:

Z ( x ⃗ ) = f b D ( x ⃗ ) Z(\vec{x})=\frac{f b}{D(\vec{x})} Z(x)=D(x)fb

由约束姿势,深度和光流的运动场方程:

F ( x ⃗ ) = 1 Z ( x ⃗ ) A v + B ω = 1 Z ( x ⃗ ) [ − 1 0 x 0 − 1 y ] v + [ x y − ( 1 + x 2 ) y 1 + y 2 − x y − x ] ω \begin{array}{l} F(\vec{x})=\frac{1}{Z(\vec{x})} A v+B \omega \\ =\frac{1}{Z(\vec{x})}\left[\begin{array}{ccc} -1 & 0 & x \\ 0 & -1 & y \end{array}\right] v+\left[\begin{array}{ccc} x y & -\left(1+x^{2}\right) & y \\ 1+y^{2} & -x y & -x \end{array}\right] \omega \end{array} F(x)=Z(x)1Av+Bω=Z(x)1[−100−1xy]v+[xy1+y2−(1+x2)−xyy−x]ω

“v and ω are the linear and angular velocity of the camera in the camera frame,Av and Bω are the 2-dimensional vectors corresponding to linear and angular velocity terms”

F、Z已知,因此可以将该方程看作一个v和w的最小二乘问题。论文使用3点RANSAC(在光流图和视差图中选择三个点),求解v和w.

refinement loss

根据位姿预测值和光流预测值,优化视差图:

最 小 化 误 差 : L ( x ⃗ ) = F ( x ⃗ ) − D ^ ( x ⃗ ) f b A v − B ω 最小化误差:L(\vec{x})=F(\vec{x})-\frac{\hat{D}(\vec{x})}{f b} A v-B \omega 最小化误差:L(x)=F(x)−fbD^(x)Av−Bω

可微RANSAC

DSAC中使用评分网络对预测进行评分和选择,因此需要额外的评分网络,本文为了网络的轻量性,使用了固定的mask来选择内点(类似于Motion Occlusion Estimation),在给定一组inlier的情况下,从inlier的光流和视差估计相机速度的函数可以通过简单的矩阵求逆实现,而求逆操作是可微的。