evaluation---sift,orb,surf,BRISK代码评估(opencv-contrib-python==3.4.1.15)--和matplotlib画画

参考:

重复率评估指标:code

OpenCV实践之特征点评估代码解析_AutoDeep的专栏-CSDN博客

特征点的匹配正确衡量标准与量化_Jack_Sarah的博客-CSDN博客

刚开始找了一个代码:

https://github.com/jckruz777/Computer_Vision/blob/master/Proyectos/Proyecto_01/keypointsStillImageTracking.py

但是这是自己算出H进行recall计算,其实和recall定义不一样,还有他对octaves的理解也不太对,我加了octaves的注释,简单做了修改,希望通过读取Mikolajczyk数据集,进行recall的计算,写了一半,因为后面发现了新大陆:

opencv-python如果想使用sift,surf

SIFT:AttributeError: module 'cv2.cv2' has no attribute 'xfeatures2d'

python -mpip install opencv-contrib-python==3.4.1.15

python -mpip install opencv-python==3.4.1.15

main_evaluation.pyimport matplotlib

matplotlib.use('tkagg')

from matplotlib import pyplot as plt

import numpy as np

import argparse

import os

import cv2

import time

def getDescriptor(descriptor, octaves=None):

if descriptor == "SIFT":

if octaves==None:

octaves = 3

#https://docs.opencv.org/3.4.1/d5/d3c/classcv_1_1xfeatures2d_1_1SIFT.html

#nOctaveLayers = 3

#The number of layers in each octave. 3 is the value used in D. Lowe paper.

#The number of octaves is computed automatically from the image resolution.

return (cv2.xfeatures2d.SIFT_create(nOctaveLayers=octaves), "STR")

elif descriptor == "SURF":

if octaves==None:

octaves = 4

#https://docs.opencv.org/3.4.1/d5/df7/classcv_1_1xfeatures2d_1_1SURF.html

#nOctaves = 4

#Number of pyramid octaves the keypoint detector will use.

#nOctaveLayers = 3

#Number of octave layers within each octave

return (cv2.xfeatures2d.SURF_create(nOctaves=octaves), "STR")

elif descriptor == "ORB":

if octaves==None:

octaves = 3

#https://docs.opencv.org/3.4.1/db/d95/classcv_1_1ORB.html

#nlevels = 8

#The number of pyramid levels. The smallest level will have linear size equal

#to input_image_linear_size/pow(scaleFactor, nlevels - firstLevel).

return (cv2.ORB_create(nlevels=octaves), "BIN")

elif descriptor == "BRISK":

if octaves==None:

octaves = 3

#https://docs.opencv.org/3.4.1/de/dbf/classcv_1_1BRISK.html

#octaves = 3

#detection octaves. Use 0 to do single scale

return (cv2.BRISK_create(octaves=octaves), "BIN")

def getKeypoints(gray1, gray2, detector):

# detect keypoints and extract local invariant descriptors from the img

start = time.time()

(kps1, descs1) = detector.detectAndCompute(gray1, None)

(kps2, descs2) = detector.detectAndCompute(gray2, None)

end = time.time()

print("keypoints detection time: {:0.2f} seconds".format(end - start))

img_ref = cv2.drawKeypoints(gray1, kps1, None)

img_eval = cv2.drawKeypoints(gray2, kps2, None)

return (img_ref, img_eval, kps1, descs1, kps2, descs2, end - start)

def getBFMatcher(ref_img, kp1, desc1, eval_img, kp2, desc2):

TRESHOLD = 70

# Match the features

bf = cv2.BFMatcher()

matches = bf.knnMatch(desc1, desc2, k=2)

# Apply ratio test

good = []

for m in matches:

if len(m) == 2:

if m[0].distance < TRESHOLD / 100 * m[1].distance:

good.append(m[0])

n_good_matches = len(good)

return (good, matches)

def getFLANNMatcher(ref_img, kp1, desc1, eval_img, kp2, desc2, alg_type):

TRESHOLD = 70

# Parameters for Binary Algorithms (BIN)

FLANN_INDEX_LSH = 6

flann_params_bin = dict(algorithm=FLANN_INDEX_LSH,

table_number=6, # 12

key_size=12, # 20

multi_probe_level=1) # 2

# Parameters for String Based Algorithms (STR)

FLANN_INDEX_KDTREE = 0

flann_params_str = dict(algorithm=FLANN_INDEX_KDTREE, trees=5)

search_params = dict(checks=50)

if alg_type == "BIN":

flann = cv2.FlannBasedMatcher(flann_params_bin, search_params)

else:

flann = cv2.FlannBasedMatcher(flann_params_str, search_params)

matches = flann.knnMatch(desc1, desc2, k=2)

good = []

for m in matches:

if len(m) == 2:

if m[0].distance < TRESHOLD / 100 * m[1].distance:

good.append(m[0])

n_good_matches = len(good)

return (good, matches)

def getHomography(good_matches, img1, img2, kp1, kp2, threshold):

MIN_MATCH_COUNT = 10

if len(good_matches) > MIN_MATCH_COUNT:

src_pts = np.float32([kp1[m.queryIdx].pt for m in good_matches]).reshape(-1, 1, 2)

dst_pts = np.float32([kp2[m.trainIdx].pt for m in good_matches]).reshape(-1, 1, 2)

M, mask = cv2.findHomography(src_pts, dst_pts, cv2.RANSAC, threshold)

matchesMask = mask.ravel().tolist()

h, w = img1.shape

pts = np.float32([[0, 0], [0, h - 1], [w - 1, h - 1], [w - 1, 0]]).reshape(-1, 1, 2)

dst = cv2.perspectiveTransform(pts, M)

img2 = cv2.polylines(img2, [np.int32(dst)], True, 255, 3, cv2.LINE_AA)

return (matchesMask, img2)

else:

print("Not enough matches are found: " + str(len(good_matches)) + "/" + str(MIN_MATCH_COUNT))

matchesMask = None

return (matchesMask, None)

def getFinalFrame(ref_img, kp1, eval_img, kp2, good_matches, matchesMask):

draw_params = dict(matchColor=(0, 255, 0), # draw matches in green color

singlePointColor=(255, 0, 0),

matchesMask=matchesMask, # draw only inliers

flags=2)

if matchesMask != None:

img_result = cv2.drawMatches(ref_img, kp1, eval_img, kp2, good_matches, None, **draw_params)

return img_result

else:

return None

def main(evaluation, reference, descriptor, matcher, octaves, mtreshold, testing):

# Setup the evaluation image, image reference and descriptor

imgRef = cv2.imread(reference)

imgEval = cv2.imread(evaluation)

imgRef = cv2.cvtColor(imgRef, cv2.COLOR_BGR2GRAY)

imgEval = cv2.cvtColor(imgEval, cv2.COLOR_BGR2GRAY)

(descriptor, descriptorType) = getDescriptor(descriptor, octaves)

# Get keypoint and desciption of the frame

(img_ref, img_eval, kps1, descs1, kps2, descs2, timeRes) = getKeypoints(imgRef, imgEval, descriptor)

# Get the match

if matcher == "BF":

(good, matches) = getBFMatcher(imgRef, kps1, descs1, imgEval, kps2, descs2)

elif matcher == "FLANN":

(good, matches) = getFLANNMatcher(imgRef, kps1, descs1, imgEval, kps2, descs2, descriptorType)

# Get the homography

(matchesMask, res_img) = getHomography(good, imgRef, imgEval, kps1, kps2, int(mtreshold))

# 可视化匹配结果

result = getFinalFrame(imgRef, kps1, imgEval, kps2, good, matchesMask)

# Metric评价指标

correspondencies = len(matches)

inliers = 0

if matchesMask != None:

inliers = matchesMask.count(1)

outliers = correspondencies - inliers

recall = 0

if correspondencies > 0:

recall = inliers / correspondencies

#

#precision = tp / (tp + fp)

#recall = tp / (tp + fn)

#f1_score = 2* ((precision * recall) / (precision + recall))

# ---相关--- ---不相关---

#---检索到--- A(TP) B(FP)

#---未被检索到--- C(FN) D(TN)

#Recall召回率 = 提取出的正确信息条数 / 样本中的信息条数

#Precision精确率 = 提取出的正确信息条数 / 提取出的信息条数

#Recall = A/(A+C)

#Precision=A/(A+B)

#在特征点匹配时:

#A指的是实际为匹配点对,且该匹配算法得到了这些匹配对;

#B指的是实际为匹配点对,但该匹配算法没有得到这些匹配对;

#C指的是实际为错误匹配对,但该算法得到了这些匹配对;

#即Precision为匹配结果中有多少是准确的,Recall就是所有正确的匹配结果有多少通过匹配算法得到了。

print("Recall = {:0.2f}%".format(recall * 100))

print("Correspondencies = {:0.2f}".format(correspondencies))

if matchesMask != None and not testing:

# Plot the result

graph = plt.figure()

plt.imshow(result)

graph.show()

if not testing:

plt.waitforbuttonpress(-1)

return (recall, timeRes)

def featurematching_evaluation(tar_img, ori_img, descriptor, matcher, octaves=None, mtreshold=5, testing=True):

#原始图像是参考图像;目标图像是评价图像,用于评价参考图像

imgRef = cv2.imread(ori_img, -1)

imgRef = cv2.cvtColor(imgRef, cv2.COLOR_BGR2GRAY)

imgEval = cv2.imread(tar_img, -1)

imgEval = cv2.cvtColor(imgEval, cv2.COLOR_BGR2GRAY)

(descriptor, descriptorType) = getDescriptor(descriptor, octaves)

# Get keypoint and desciption of the frame

(img_ref, img_eval, kps1, descs1, kps2, descs2, timeRes) = getKeypoints(imgRef, imgEval, descriptor)

# Get the match

if matcher == "BF":

(good, matches) = getBFMatcher(imgRef, kps1, descs1, imgEval, kps2, descs2)

elif matcher == "FLANN":

(good, matches) = getFLANNMatcher(imgRef, kps1, descs1, imgEval, kps2, descs2, descriptorType)

# Get the homography

(matchesMask, res_img) = getHomography(good, imgRef, imgEval, kps1, kps2, int(mtreshold))

# Get the resulting frame

result = getFinalFrame(imgRef, kps1, imgEval, kps2, good, matchesMask)

# Metric

correspondencies = len(matches)

inliers = 0

if matchesMask != None:

inliers = matchesMask.count(1)

outliers = correspondencies - inliers

recall = 0

if correspondencies > 0:

recall = inliers / correspondencies

#

#precision = tp / (tp + fp)

#recall = tp / (tp + fn)

#f1_score = 2* ((precision * recall) / (precision + recall))

# ---相关--- ---不相关---

#---检索到--- A(TP) B(FP)

#---未被检索到--- C(FN) D(TN)

#Recall召回率 = 提取出的正确信息条数 / 样本中的信息条数

#Precision精确率 = 提取出的正确信息条数 / 提取出的信息条数

#Recall = A/(A+C)

#Precision=A/(A+B)

#在特征点匹配时:

#A指的是实际为匹配点对,且该匹配算法得到了这些匹配对;

#B指的是实际为匹配点对,但该匹配算法没有得到这些匹配对;

#C指的是实际为错误匹配对,但该算法得到了这些匹配对;

#即Precision为匹配结果中有多少是准确的,Recall就是所有正确的匹配结果有多少通过匹配算法得到了。

print("Recall = {:0.2f}%".format(recall * 100))

print("Correspondencies = {:0.2f}".format(correspondencies))

if matchesMask != None and not testing:

# Plot the result

graph = plt.figure()

plt.imshow(result)

graph.show()

if not testing:

plt.waitforbuttonpress(-1)

return (recall, timeRes)

def plotResults(lines, title, xlabel, ylabel):

plt.legend(('SIFT', 'SURF', 'ORB', 'BRISK'),

loc='upper right')

plt.title(title)

plt.xlabel(xlabel)

plt.ylabel(ylabel)

plt.show()

def pipeline(args):

print("Image of evaluation path = " + args.evaluation)

print("Image of reference path = " + args.reference)

print("Descriptor = " + args.descriptor)

print("Matcher = " + args.matcher)

print("Octaves = " + str(args.octaves))

print("Matcher treshold = " + str(args.mtreshold))

descriptors = ['SIFT', 'SURF', 'ORB', 'BRISK']

#通过改变不同Octave,金字塔组数或金字塔每组层数,进行recall召回率和时间对比

if args.test == 'O':

print("Octave variation Test")

descriptorsResults = []

descriptorsTimes = []

for d in descriptors:

print("Testing for " + d)

recallResults = []

timeResults = []

for i in range(4, 9):

print("Testing " + str(i) + " octaves")

(recallRes, timeRes) = main(args.evaluation, args.reference, d, args.matcher, i, args.mtreshold, True)

recallResults.append(recallRes)

timeResults.append(timeRes)

descriptorsResults.append(recallResults)

descriptorsTimes.append(timeResults)

x = range(4, 9)

lines = plt.plot(x, descriptorsResults[0], x, descriptorsResults[1], x, descriptorsResults[2], x,

descriptorsResults[3])

plotResults(lines, 'Descriptors test with Octave variation', 'Octaves', 'Recall')

linesTime = plt.plot(x, descriptorsTimes[0], x, descriptorsTimes[1], x, descriptorsTimes[2], x,

descriptorsTimes[3])

plotResults(linesTime, 'Descriptors time test', 'Octaves', 'Time [s]')

elif args.test == 'T':

print("Match treshold Test")

descriptorsResults = []

descriptorsTimes = []

x = np.arange(0, 10, 0.25)

for d in descriptors:

print("Testing for " + d)

recallResults = []

timeResults = []

for i in x:

print("Testing " + str(i) + " treshold")

(recallRes, timeRes) = main(args.evaluation, args.reference, args.descriptor, args.matcher,

args.octaves,

i, True)

recallResults.append(recallRes)

timeResults.append(timeRes)

descriptorsResults.append(recallResults)

descriptorsTimes.append(timeResults)

lines = plt.plot(x, descriptorsResults[0], x, descriptorsResults[1], x, descriptorsResults[2], x,

descriptorsResults[3])

plotResults(lines, 'Descriptors test with inliers treshold variation', 'Match treshold', 'Recall')

linesTime = plt.plot(x, descriptorsTimes[0], x, descriptorsTimes[1], x, descriptorsTimes[2], x,

descriptorsTimes[3])

plotResults(linesTime, 'Descriptors time test', 'Match treshold', 'Time [s]')

elif args.test == 'yangninghua':

descriptors = ['SIFT', 'SURF', 'ORB', 'BRISK']

Mikolajczyk_dataset = ['bikes', 'trees', 'graf', 'wall',

'bark', 'boat', 'leuven', 'ubc']

theme_dataset = ['模糊程度', '模糊程度', '视角变化', '视角变化',

'缩放旋转变化', '缩放旋转变化', '光照变化', 'JPEG压缩程度']

dataset_path = './Mikolajczyk_dataset'

img_nums = 6

img_num_start = 1

descriptorsResults = []

descriptorsTimes = []

#可视化

mark = ['-kx', '-rv', '-gs', '-m+', '-bp']

for m_ind, scenes in enumerate(Mikolajczyk_dataset):

for n_ind, desc in descriptors:

if scenes== 'boat':

suffix = 'pgm'

else:

suffix = 'ppm'

original_img = os.path.join(dataset_path, scenes, 'img{}.{}'.format(str(1), suffix))

for i_img in range(img_num_start+1, img_nums+1, 1):

target_img = os.path.join(dataset_path, scenes, 'img{}.{}'.format(str(i_img), suffix))

GT_H = os.path.join(dataset_path, scenes, 'H{}to{}p'.format(str(1), str(i_img)))

featurematching_evaluation(target_img, original_img, GT_H, desc, 'BF', None, 5, True)

else:

main(args.evaluation, args.reference, args.descriptor, args.matcher, args.octaves, args.mtreshold, False)

if __name__ == "__main__":

parser = argparse.ArgumentParser(

description='Keypoints extraction and object tracking in a still image')

parser.add_argument('--evaluation',

help='Image of evaluation',

default='./IMG/deep_learning_book.jpg')

parser.add_argument('--reference',

help='Image of reference with the object to be detected',

default="./IMG/test_1.jpg")

parser.add_argument('--descriptor',

help='Descriptor algorithm: SIFT, SURF, ORB, BRISK',

default='SIFT')

parser.add_argument('--matcher',

help='Matcher method: Brute force (BF) or FLANN (FLANN)',

default='FLANN')

parser.add_argument('--octaves',

help='Number of octaves that the descriptor will use',

default=None)

parser.add_argument('--mtreshold',

help='Treshold for good matches.',

default=5)

parser.add_argument('--test',

help='Test cases: Octave variation (O), inliers treshold (T)',

default='yangninghua')

args = parser.parse_args()

if args.evaluation is None or args.reference is None:

parser.print_help()

exit()

pipeline(args)写着写着发现了opencv自带评估代码,还有评估代码的数据

参考opencv源代码:

opencv-2.4.10.4/samples/cpp/descriptor_extractor_matcher.cpp

opencv-2.4.10.4/samples/cpp/detector_descriptor_evaluation.cpp

opencv-2.4.10.4/samples/cpp/detector_descriptor_matcher_evaluation.cpp

调用了:

opencv-2.4.10.4/modules/features2d/src/evaluation.cpp

关于数据是在:

https://codeload.github.com/opencv/opencv_extra/zip/2.4.10

我把我想要的东西提取了出来,比如4个cpp,还有部分数据

clion工程设置:

cmake_minimum_required(VERSION 3.12)

project(opencv_evaluation)

set(CMAKE_CXX_STANDARD 14)

set(OpenCV_DIR /home/boyun/deepglint/install/opencv-2.4.10.4/cmake-build-debug)

find_package(OpenCV REQUIRED)

INCLUDE_DIRECTORIES(

${OpenCV_INCLUDE_DIRS}

)

#add_executable(opencv_evaluation detector_descriptor_matcher_evaluation.cpp)

add_executable(descriptor_extractor_matcher descriptor_extractor_matcher.cpp)

target_link_libraries(descriptor_extractor_matcher ${OpenCV_LIBS})

add_executable(detection_based_tracker_sample detection_based_tracker_sample.cpp)

target_link_libraries(detection_based_tracker_sample ${OpenCV_LIBS})

add_executable(detector_descriptor_evaluation detector_descriptor_evaluation.cpp)

target_link_libraries(detector_descriptor_evaluation ${OpenCV_LIBS})

add_executable(detector_descriptor_matcher_evaluation detector_descriptor_matcher_evaluation.cpp)

target_link_libraries(detector_descriptor_matcher_evaluation ${OpenCV_LIBS})

其中如果使用到了SIFT SURF

记得在代码中加入:

#include "opencv2/nonfree/nonfree.hpp"

//初始化sift surf

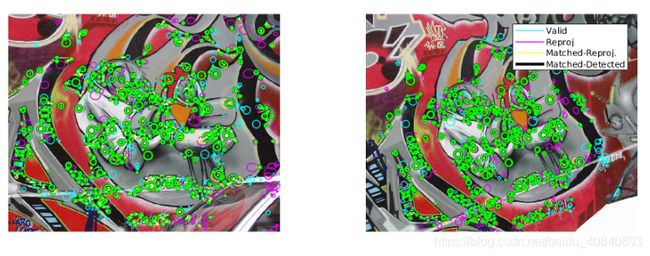

cv::initModule_nonfree();比如我们使用代码detector_descriptor_matcher_evaluation.cpp为例子:

运行后会生成:

关于怎么将文件绘图,参考opencv的代码,使用gnuplot:

//使用gnuplot画图 //sudo apt-get install libx11-dev //sudo apt-get install gnuplot-x11 //教程:https://ishare.iask.sina.com.cn/f/66489636.html //运行脚本模式 gnuplot createPlots.p

例如我们这里只有SURF和ORB跑出来的结果:

createPlots.p

set terminal png

set key box outside bottom

set size 1, 1

#set title "Detector-descriptor-matcher evaluation under scale changes (bark dataset)"

set title "Matcher evaluation under scale changes (bark dataset)"

set xlabel "dataset image index (increasing zoom+rotation)"

set ylabel "precision, %"

set output 'bark_precision.png'

set xr[2:6]

set yr[0:100]

plot "ORBX_BF_bark_precision.csv" title 'ORBX' with linespoints, "SURF_BF_bark_precision.csv" title 'SURF' with linespoints

set size 1, 1

#set title "Detector-descriptor-matcher evaluation under scale changes (bark dataset)"

set title "Matcher evaluation under scale changes (bark dataset)"

set xlabel "dataset image index (increasing zoom+rotation)"

set ylabel "recall, %"

set output 'bark_recall.png'

set xr[2:6]

set yr[0:100]

plot "ORBX_BF_bark_recall.csv" title 'ORBX' with linespoints, "SURF_BF_bark_recall.csv" title 'SURF' with linespoints运行:

gnuplot createPlots.p

经过反复确认,确实应该使用:

/*M///

//

// IMPORTANT: READ BEFORE DOWNLOADING, COPYING, INSTALLING OR USING.

//

// By downloading, copying, installing or using the software you agree to this license.

// If you do not agree to this license, do not download, install,

// copy or use the software.

//

//

// Intel License Agreement

// For Open Source Computer Vision Library

//

// Copyright (C) 2000, Intel Corporation, all rights reserved.

// Third party copyrights are property of their respective owners.

//

// Redistribution and use in source and binary forms, with or without modification,

// are permitted provided that the following conditions are met:

//

// * Redistribution's of source code must retain the above copyright notice,

// this list of conditions and the following disclaimer.

//

// * Redistribution's in binary form must reproduce the above copyright notice,

// this list of conditions and the following disclaimer in the documentation

// and/or other materials provided with the distribution.

//

// * The name of Intel Corporation may not be used to endorse or promote products

// derived from this software without specific prior written permission.

//

// This software is provided by the copyright holders and contributors "as is" and

// any express or implied warranties, including, but not limited to, the implied

// warranties of merchantability and fitness for a particular purpose are disclaimed.

// In no event shall the Intel Corporation or contributors be liable for any direct,

// indirect, incidental, special, exemplary, or consequential damages

// (including, but not limited to, procurement of substitute goods or services;

// loss of use, data, or profits; or business interruption) however caused

// and on any theory of liability, whether in contract, strict liability,

// or tort (including negligence or otherwise) arising in any way out of

// the use of this software, even if advised of the possibility of such damage.

//

//M*/

#include "opencv2/imgproc/imgproc.hpp"

#include "opencv2/highgui/highgui.hpp"

#include "opencv2/features2d/features2d.hpp"

#include "opencv2/nonfree/nonfree.hpp"

#include

#include

#include

#include

#include

#include

#include

using namespace std;

using namespace cv;

/*

The algorithm:

for each tested combination of detector+descriptor+matcher:

create detector, descriptor and matcher,

load their params if they are there, otherwise use the default ones and save them

for each dataset:

load reference image

detect keypoints in it, compute descriptors

for each transformed image:

load the image

load the transformation matrix

detect keypoints in it too, compute descriptors

find matches

transform keypoints from the first image using the ground-truth matrix

compute the number of matched keypoints, i.e. for each pair (i,j) found by a matcher compare

j-th keypoint from the second image with the transformed i-th keypoint. If they are close, +1.

so, we have:

N - number of keypoints in the first image that are also visible

(after transformation) on the second image

N1 - number of keypoints in the first image that have been matched.

n - number of the correct matches found by the matcher

n/N1 - precision

n/N - recall (?)

we store (N, n/N1, n/N) (where N is stored primarily for tuning the detector's thresholds,

in order to semi-equalize their keypoints counts)

*/

typedef Vec3f TVec; // (N, n/N1, n/N) - see above

static void saveloadDDM( const string& params_filename,

Ptr& detector,

Ptr& descriptor,

Ptr& matcher )

{

//python

//https://docs.opencv.org/3.4.10/da/d56/classcv_1_1FileStorage.html

FileStorage fs(params_filename, FileStorage::READ);

if( fs.isOpened() )

{

detector->read(fs["detector"]);

descriptor->read(fs["descriptor"]);

matcher->read(fs["matcher"]);

fs.release();

}

else

{

fs.open(params_filename, FileStorage::WRITE);

fs << "detector" << "{";

detector->write(fs);

fs << "}" << "descriptor" << "{";

descriptor->write(fs);

fs << "}" << "matcher" << "{";

matcher->write(fs);

//python

//https://docs.opencv.org/3.4.10/db/d39/classcv_1_1DescriptorMatcher.html#abcba89024601e88afe6e4cc2349798c3

fs << "}";

fs.release();

}

}

static Mat loadMat(const string& fsname)

{

FileStorage fs(fsname, FileStorage::READ);

Mat m;

fs.getFirstTopLevelNode() >> m;

return m;

}

static void transformKeypoints( const vector& kp,

vector >& contours,

const Mat& H )

{

const float scale = 256.f;

size_t i, n = kp.size();

contours.resize(n);

vector temp;

for( i = 0; i < n; i++ )

{

//python

//https://docs.opencv.org/3.4.10/d6/d6e/group__imgproc__draw.html#ga727a72a3f6a625a2ae035f957c61051f

ellipse2Poly(Point2f(kp[i].pt.x*scale, kp[i].pt.y*scale),

Size2f(kp[i].size*scale, kp[i].size*scale),

0, 0, 360, 12, temp);

Mat(temp).convertTo(contours[i], CV_32F, 1./scale);

perspectiveTransform(contours[i], contours[i], H);

}

}

static TVec proccessMatches( Size imgsize,

const vector& matches,

const vector >& kp1t_contours,

const vector >& kp_contours,

double overlapThreshold )

{

const double visibilityThreshold = 0.6;

// 1. [preprocessing] find bounding rect for each element of kp1t_contours and kp_contours.

// 2. [cross-check] for each DMatch (iK, i1)

// update best_match[i1] using DMatch::distance.

// 3. [compute overlapping] for each i1 (keypoint from the first image) do:

// if i1-th keypoint is outside of image, skip it

// increment N

// if best_match[i1] is initialized, increment N1

// if kp_contours[best_match[i1]] and kp1t_contours[i1] overlap by overlapThreshold*100%,

// increment n. Use bounding rects to speedup this step

int i, size1 = (int)kp1t_contours.size(), size = (int)kp_contours.size(), msize = (int)matches.size();

vector best_match(size1);

vector rects1(size1), rects(size);

// proprocess

for( i = 0; i < size1; i++ )

rects1[i] = boundingRect(kp1t_contours[i]);

for( i = 0; i < size; i++ )

rects[i] = boundingRect(kp_contours[i]);

// cross-check

for( i = 0; i < msize; i++ )

{

DMatch m = matches[i];

int i1 = m.trainIdx, iK = m.queryIdx;

CV_Assert( 0 <= i1 && i1 < size1 && 0 <= iK && iK < size );

if( best_match[i1].trainIdx < 0 || best_match[i1].distance > m.distance )

best_match[i1] = m;

}

int N = 0, N1 = 0, n = 0;

// overlapping

for( i = 0; i < size1; i++ )

{

int i1 = i, iK = best_match[i].queryIdx;

if( iK >= 0 )

N1++;

Rect r = rects1[i] & Rect(0, 0, imgsize.width, imgsize.height);

if( r.area() < visibilityThreshold*rects1[i].area() )

continue;

N++;

if( iK < 0 || (rects1[i1] & rects[iK]).area() == 0 )

continue;

//python

//https://docs.opencv.org/3.4.10/d3/dc0/group__imgproc__shape.html#ga8e840f3f3695613d32c052bec89e782c

double n_area = intersectConvexConvex(kp1t_contours[i1], kp_contours[iK], noArray(), true);

if( n_area == 0 )

continue;

//https://docs.opencv.org/3.4.10/d3/dc0/group__imgproc__shape.html#ga2c759ed9f497d4a618048a2f56dc97f1

double area1 = contourArea(kp1t_contours[i1], false);

double area = contourArea(kp_contours[iK], false);

double ratio = n_area/(area1 + area - n_area);

n += ratio >= overlapThreshold;

}

return TVec((float)N, (float)n/std::max(N1, 1), (float)n/std::max(N, 1));

}

static void saveResults(const string& dir, const string& name, const string& dsname,

const vector& results, const int* xvals)

{

string fname1 = format("%s%s_%s_precision.csv", dir.c_str(), name.c_str(), dsname.c_str());

string fname2 = format("%s%s_%s_recall.csv", dir.c_str(), name.c_str(), dsname.c_str());

FILE* f1 = fopen(fname1.c_str(), "wt");

FILE* f2 = fopen(fname2.c_str(), "wt");

for( size_t i = 0; i < results.size(); i++ )

{

fprintf(f1, "%d, %.1f\n", xvals[i], results[i][1]*100);

fprintf(f2, "%d, %.1f\n", xvals[i], results[i][2]*100);

}

fclose(f1);

fclose(f2);

}

//使用gnuplot画图

//sudo apt-get install libx11-dev

//sudo apt-get install gnuplot-x11

//教程:https://ishare.iask.sina.com.cn/f/66489636.html

//运行脚本模式 gnuplot createPlots.p

//evaluateGenericDescriptorMatcher

//

int main(int argc, char** argv)

{

static const char* ddms[] =

{

"ORBX_BF", "ORB", "ORB", "BruteForce-Hamming",

//"ORB_BF", "ORB", "ORB", "BruteForce-Hamming",

//"ORB3_BF", "ORB", "ORB", "BruteForce-Hamming(2)",

//"ORB4_BF", "ORB", "ORB", "BruteForce-Hamming(2)",

//"ORB_LSH", "ORB", "ORB", "LSH",

//"SURF_BF", "SURF", "SURF", "BruteForce",

//"SURF_BF", "SURF", "SURF", "FlannBased",

0

};

static const char* datasets[] =

{

"bark", "bikes", "boat", "graf", "leuven", "trees", "ubc", "wall", 0

};

static const int imgXVals[] = { 2, 3, 4, 5, 6 }; // if scale, blur or light changes

static const int viewpointXVals[] = { 20, 30, 40, 50, 60 }; // if viewpoint changes

static const int jpegXVals[] = { 60, 80, 90, 95, 98 }; // if jpeg compression

const double overlapThreshold = 0.6;

vector > > results; // indexed as results[ddm][dataset][testcase]

string dataset_dir = "/home/boyun/CLionProjects/opencv_evaluation/cv_data/detectors_descriptors_evaluation/images_datasets";

//string dir=argc > 1 ? argv[1] : ".";

string dir="/home/boyun/CLionProjects/opencv_evaluation/result";

if( dir[dir.size()-1] != '\\' && dir[dir.size()-1] != '/' )

dir += "/";

//int result = system(("mkdir " + dir).c_str());

//CV_Assert(result == 0);

//初始化sift surf

cv::initModule_nonfree();

for( int i = 0; ddms[i*4] != 0; i++ )

{

const char* name = ddms[i*4];

const char* detector_name = ddms[i*4+1];

const char* descriptor_name = ddms[i*4+2];

const char* matcher_name = ddms[i*4+3];

string params_filename = dir + string(name) + "_params.yml";

cout << "Testing " << name << endl;

Ptr detector = FeatureDetector::create(detector_name);

Ptr descriptor = DescriptorExtractor::create(descriptor_name);

//python

//https://docs.opencv.org/3.4.10/db/d39/classcv_1_1DescriptorMatcher.html#ab5dc5036569ecc8d47565007fa518257

/*

BruteForce (it uses L2 )

BruteForce-L1

BruteForce-Hamming

BruteForce-Hamming(2)

FlannBased

*/

Ptr matcher = DescriptorMatcher::create(matcher_name);

saveloadDDM( params_filename, detector, descriptor, matcher );

results.push_back(vector >());

for( int j = 0; datasets[j] != 0; j++ )

{

const char* dsname = datasets[j];

cout << "\ton " << dsname << " ";

cout.flush();

const int* xvals = strcmp(dsname, "ubc") == 0 ? jpegXVals :

strcmp(dsname, "graf") == 0 || strcmp(dsname, "wall") == 0 ? viewpointXVals : imgXVals;

vector kp1, kp;

vector matches;

vector > kp1t_contours, kp_contours;

Mat desc1, desc;

Mat img1 = imread(format("%s/%s/img1.png", dataset_dir.c_str(), dsname), 0);

CV_Assert( !img1.empty() );

detector->detect(img1, kp1);

descriptor->compute(img1, kp1, desc1);

results[i].push_back(vector());

for( int k = 2; ; k++ )

{

cout << ".";

cout.flush();

Mat imgK = imread(format("%s/%s/img%d.png", dataset_dir.c_str(), dsname, k), 0);

if( imgK.empty() )

break;

detector->detect(imgK, kp);

descriptor->compute(imgK, kp, desc);

matcher->match( desc, desc1, matches );

Mat H = loadMat(format("%s/%s/H1to%dp.xml", dataset_dir.c_str(), dsname, k));

transformKeypoints( kp1, kp1t_contours, H );

transformKeypoints( kp, kp_contours, Mat::eye(3, 3, CV_64F));

TVec r = proccessMatches( imgK.size(), matches, kp1t_contours, kp_contours, overlapThreshold );

results[i][j].push_back(r);

//vector > matches1to2;

//vector > correctMatchesMask;

//vector recallPrecisionCurve;

//Ptr descMatch = new VectorDescriptorMatcher(descriptor, matcher);

//evaluateGenericDescriptorMatcher(img1, imgK, H, kp1, kp, &matches1to2, &correctMatchesMask, recallPrecisionCurve, descMatch);

// vector recallPrecisionCurve;

// Ptr descMatch = new VectorDescriptorMatcher( descriptor, matcher);

// evaluateGenericDescriptorMatcher( img1, imgK, H, kp1, kp, 0, 0, recallPrecisionCurve, descMatch );

//

// Point2f firstPoint = *recallPrecisionCurve.begin();

// Point2f lastPoint = *recallPrecisionCurve.rbegin();

// int prevPointIndex = -1;

// cout << "1-precision = " << firstPoint.x << "; recall = " << firstPoint.y << endl;

// for( float l_p = 0; l_p <= 1 + FLT_EPSILON; l_p+=0.05f )

// {

// int nearest = getNearestPoint( recallPrecisionCurve, l_p );

// if( nearest >= 0 )

// {

// Point2f curPoint = recallPrecisionCurve[nearest];

// if( curPoint.x > firstPoint.x && curPoint.x < lastPoint.x && nearest != prevPointIndex )

// {

// //cout << "1-precision = " << curPoint.x << "; recall = " << curPoint.y << endl;

// prevPointIndex = nearest;

// }

// }

// }

// cout << "1-precision = " << lastPoint.x << "; recall = " << lastPoint.y << endl;

// cout << ">" << endl;

//

// int debug = 1;

}

saveResults(dir, name, dsname, results[i][j], xvals);

cout << endl;

}

}

cout << "代码已运行结束" << endl;

}

自己的复现的:

import matplotlib

matplotlib.use('tkagg')

from matplotlib import pyplot as plt

import numpy as np

import argparse

import os

import cv2

import time

import pandas as pd

def mkdir_os(path):

if not os.path.exists(path):

os.makedirs(path)

def getDetector(detector_name):

if detector_name == "SIFT":

return cv2.xfeatures2d.SIFT_create()

elif detector_name == "SURF":

return cv2.xfeatures2d.SURF_create()

elif detector_name == "HarrisLaplace":

return cv2.xfeatures2d.HarrisLaplaceFeatureDetector_create()

elif detector_name == "STAR":

return cv2.xfeatures2d.StarDetector_create()

elif detector_name == "ORB":

return cv2.ORB_create()

elif detector_name == "BRISK":

return cv2.BRISK_create()

elif detector_name == "FAST":

return cv2.FastFeatureDetector_create()

elif detector_name == "AKAZE":

return cv2.AKAZE_create()

elif detector_name == "MSER":

return cv2.MSER_create()

elif detector_name == "KAZE":

return cv2.KAZE_create()

#HarrisAffine opencv-xfeatures2d 没有python接口

def getDescriptor(descriptor_name):

if descriptor_name == "SIFT":

return cv2.xfeatures2d.SIFT_create()

elif descriptor_name == "SURF":

return cv2.xfeatures2d.SURF_create()

elif descriptor_name == "FREAK":

return cv2.xfeatures2d.FREAK_create()

elif descriptor_name == "DAISY":

return cv2.xfeatures2d.DAISY_create()

elif descriptor_name == "Brief" or descriptor_name == "BRIEF":

return cv2.xfeatures2d.BriefDescriptorExtractor_create()

elif descriptor_name == "BoostDesc" or descriptor_name == "BOOSTDESC":

return cv2.xfeatures2d.BoostDesc_create()

elif descriptor_name == "ORB":

return cv2.ORB_create()

elif descriptor_name == "BRISK":

return cv2.BRISK_create()

elif descriptor_name == "AKAZE":

return cv2.AKAZE_create()

elif descriptor_name == "KAZE":

return cv2.AKAZE_create()

def transformKeypoints(kp, H):

scale = 256

n = len(kp)

contours = [[] for _ in range(n)]

for i in range(n):

temp = cv2.ellipse2Poly( (int(kp[i].pt[0] * scale), int(kp[i].pt[1]* scale)),

(int(kp[i].size * scale), int(kp[i].size * scale)),

0, 0, 360, 12)

i_temp = [np.float32(temp) * (1.0/scale)]

contours[i] = cv2.perspectiveTransform(np.array(i_temp), H)

return contours

def RectOverlap(Rect1, Rect2):

(x11, y11, x12, y12) = Rect1[0], Rect1[1], Rect1[0]+Rect1[2], Rect1[1]+Rect1[3]

(x21, y21, x22, y22) = Rect2[0], Rect2[1], Rect2[0]+Rect2[2], Rect2[1]+Rect2[3]

StartX = min(x11, x21)

EndX = max(x12, x22)

StartY = min(y11, y21)

EndY = max(y12, y22)

CurWidth = (x12 - x11) + (x22 - x21) - (EndX - StartX) # (EndX-StartX)表示外包框的宽度

CurHeight = (y12 - y11) + (y22 - y21) - (EndY - StartY) # (Endy-Starty)表示外包框的高度

if CurWidth <= 0 or CurHeight <= 0:

return (0, 0, 0, 0, 0)

else:

X1 = max(x11, x21)

Y1 = max(y11, y21)

X2 = min(x12, x22)

Y2 = min(y12, y22)

Area = CurWidth * CurHeight

IntersectRect = (X1, Y1, X2, Y2, Area)

return IntersectRect

def getArea(rect):

return rect[2] * rect[3]

def proccessMatches(imgsize, matches, kp1t_contours, kp_contours, overlapThreshold):

height, width = imgsize

visibilityThreshold = 0.6

size1 = len(kp1t_contours)

size = len(kp_contours)

msize = len(matches)

best_match = [cv2.DMatch() for m_ind in range(size1)]

rects1 = [None for m_ind in range(size1)]

rects = [None for m_ind in range(size)]

for i in range(size1):

rects1[i] = cv2.boundingRect(kp1t_contours[i])

for i in range(size):

rects[i] = cv2.boundingRect(kp_contours[i])

#https://blog.csdn.net/cxc2333/article/details/108652488

#match(descriptor_for_keypoints1, descriptor_for_keypoints2, matches)

#queryIdx代表的特征点序列是keypoints1中的,trainIdx代表的特征点序列是keypoints2中的

for i in range(msize):

m = matches[i]

i1 = m.trainIdx

iK = m.queryIdx

assert ( 0 <= i1 and i1 < size1 and 0 <= iK and iK < size )

if( best_match[i1].trainIdx < 0 or best_match[i1].distance > m.distance ):

best_match[i1] = m

N = 0

N1 = 0

n = 0

for i in range(size1):

i1 = i

iK = best_match[i].queryIdx

if( iK >= 0 ):

N1 += 1

r = RectOverlap(rects1[i], (0, 0, width, height))

if( r[4] < visibilityThreshold*getArea(rects1[i])):

continue

N+=1

if( iK < 0 or RectOverlap(rects1[i1], rects[iK])[4] == 0 ):

continue

n_area = cv2.intersectConvexConvex(kp1t_contours[i1], kp_contours[iK])

if( n_area == 0 ):

continue

area1 = cv2.contourArea(kp1t_contours[i1], False)

area = cv2.contourArea(kp_contours[iK], False)

ratio = n_area[0] / (area1 + area - n_area[0])

n += int(1 if ratio >= overlapThreshold else 0)

#precision = tp / (tp + fp)

#recall = tp / (tp + fn)

#f1_score = 2* ((precision * recall) / (precision + recall))

# ---相关--- ---不相关---

#---检索到--- A(TP) B(FP)

#---未被检索到--- C(FN) D(TN)

#Recall召回率 = 提取出的正确信息条数 / 样本中的信息条数

#Precision精确率 = 提取出的正确信息条数 / 提取出的信息条数

#Recall = A/(A+C)

#Precision=A/(A+B)

#F-measure=(2*Precision*Recall)/(Precision+Recall)

#在特征点匹配时:

#A指的是实际为匹配点对,且该匹配算法得到了这些匹配对;

#B指的是实际为匹配点对,但该匹配算法没有得到这些匹配对;

#C指的是实际为错误匹配对,但该算法得到了这些匹配对;

#即Precision为匹配结果中有多少是准确的,Recall就是所有正确的匹配结果有多少通过匹配算法得到了。

return ( float(N), float(n/max(N1, 1)), float(n/max(N, 1)) )

def saveResults(dir, name, dsname, results, xvals):

fname1 = "{}/{}_{}_precision.csv".format(dir, name, dsname)

fname2 = "{}/{}_{}_recall.csv".format(dir, name, dsname)

precision_list = []

recall_list = []

for i in range(len(results)):

precision = round(results[i][1]*100, 2)

recall = round(results[i][2]*100, 2)

#precision = "{:.2f}".format(results[i][1]*100)

#recall = "{:.2f}".format(results[i][2]*100)

print("\timg-id:", xvals[i], " precision:", precision)

print("\timg-id:", xvals[i], " recall:", recall)

precision_list.append({"img-id":xvals[i], "precision":precision})

recall_list.append({"img-id":xvals[i], "recall":recall})

df1 = pd.DataFrame(precision_list)

df1.to_csv(fname1, index=False, header=None)

df2 = pd.DataFrame(recall_list)

df2.to_csv(fname2, index=False, header=None)

#datasets = ["bark", "bikes", "boat", "graf", "leuven", "trees", "ubc", "wall"]

title_list = [

"ImageMatch evaluation under scale changes (bark dataset)",

"ImageMatch evaluation under blur changes (bike dataset)",

"ImageMatch evaluation under size changes (boat dataset)",

"ImageMatch evaluation under viewpoint changes (graf dataset)",

"ImageMatch evaluation under light changes (leuven dataset)",

"ImageMatch evaluation under blur changes (trees)",

"ImageMatch evaluation under JPEG compression (ubc dataset)",

"ImageMatch evaluation under viewpoint changes (wall dataset)"

]

xlabel_list = [

"dataset image index (increasing zoom+rotation)",#[2:6]

"dataset image index (increasing blur)",#[2:6]

"dataset image index (increasing zoom+rotation)",#[2:6]

"viewpoint angle",#[20:60]

"dataset image index (decreasing light)",#[2:6]

"dataset image index (increasing blur)",#[2:6]

"JPEG compression", #[60:98]

"viewpoint angle"#[20:60]

]

xlabel_value_list = [

[2, 3, 4, 5, 6],

[2, 3, 4, 5, 6],

[2, 3, 4, 5, 6],

[20, 30, 40, 50, 60],

[2, 3, 4, 5, 6],

[2, 3, 4, 5, 6],

[60, 80, 90, 95, 98],

[20, 30, 40, 50, 60],

]

#主色系

import colorsys

import random

def get_n_hls_colors(num):

hls_colors = []

i = 0

step = 360.0 / num

while i < 360:

h = i

s = 90 + random.random() * 10

l = 50 + random.random() * 10

_hlsc = [h / 360.0, l / 100.0, s / 100.0]

hls_colors.append(_hlsc)

i += step

return hls_colors

def ncolors(num):

rgb_colors = []

if num < 1:

return rgb_colors

hls_colors = get_n_hls_colors(num)

for hlsc in hls_colors:

_r, _g, _b = colorsys.hls_to_rgb(hlsc[0], hlsc[1], hlsc[2])

r, g, b = [int(x * 255.0) for x in (_r, _g, _b)]

rgb_colors.append([r, g, b])

return rgb_colors

# RGB格式颜色转换为16进制颜色格式

def RGB_to_Hex(RGB):

color = '#'

for i in RGB:

num = int(i)

#将R、G、B分别转化为16进制拼接转换并大写

#hex()函数用于将10进制整数转换成16进制,以字符串形式表示

color += str(hex(num))[-2:].replace('x', '0').upper()

return color

plot_color_list = ncolors(10)#rgb

plot_marker_list = ["x", "*", "s", "o", "p", "D", "H", "v", "+", "2"]

def plot_result(ddms_t, datasets_t, results_t, nimg_t, dir_name):

precision_list = []

recall_list = []

for m_ind, m_val in enumerate(results_t):

for n_ind, n_val in enumerate(m_val):

for k_ind, k_val in enumerate(n_val):

precision = round(results_t[m_ind][n_ind][k_ind][1] * 100, 2)

recall = round(results_t[m_ind][n_ind][k_ind][2] * 100, 2)

precision_list.append(precision)

recall_list.append(recall)

precision_array = np.array(precision_list)

precision_matrix = precision_array.reshape((len(ddms_t), len(datasets_t), nimg_t))

for ind in range(len(datasets_t)):

fig = plt.figure()

for alg in range(len(ddms_t)):

y_val = ((precision_matrix[alg:alg+1, ind:ind+1, :]).flatten()).tolist()

x_val = xlabel_value_list[ind]

color_hex = RGB_to_Hex(plot_color_list[alg])

plt.plot(x_val, y_val, color=color_hex, marker=plot_marker_list[alg], ms=10, label=ddms_t[alg][0])

# for x_t, y_t in zip(x_val, y_val):

# plt.text(x_t, y_t+1, str(y_t), ha='center', va='bottom', fontsize=10, rotation=15)

plt.suptitle(title_list[ind])

#plt.title(title_list[ind])

plt.xlabel(xlabel_list[ind])

plt.ylabel("precision")

plt.legend(loc="upper right")

# some clean up

# 去掉上边框和有边框

for spine in plt.gca().spines.keys():

if spine == 'top' or spine == 'right':

plt.gca().spines[spine].set_color('none')

# x轴的刻度在下边框

plt.gca().xaxis.set_ticks_position('bottom')

# y轴的刻度在左边框

plt.gca().yaxis.set_ticks_position('left')

# 绘制x、y轴网格

plt.grid(True, ls=':', color='k')

plt.savefig(os.path.join(dir_name, "{}_precision.png".format(datasets_t[ind])))

recall_array = np.array(recall_list)

recall_matrix = recall_array.reshape((len(ddms_t), len(datasets_t), nimg_t))

for ind in range(len(datasets_t)):

fig = plt.figure()

for alg in range(len(ddms_t)):

y_val = ((recall_matrix[alg:alg+1, ind:ind+1, :]).flatten()).tolist()

x_val = xlabel_value_list[ind]

color_hex = RGB_to_Hex(plot_color_list[alg])

plt.plot(x_val, y_val, color=color_hex, marker=plot_marker_list[alg], ms=10, label=ddms_t[alg][0])

# for x_t, y_t in zip(x_val, y_val):

# plt.text(x_t, y_t+1, str(y_t), ha='center', va='bottom', fontsize=10, rotation=15)

plt.suptitle(title_list[ind])

#plt.title(title_list[ind])

plt.xlabel(xlabel_list[ind])

plt.ylabel("recall")

plt.legend(loc="upper right")

# some clean up

# 去掉上边框和有边框

for spine in plt.gca().spines.keys():

if spine == 'top' or spine == 'right':

plt.gca().spines[spine].set_color('none')

# x轴的刻度在下边框

plt.gca().xaxis.set_ticks_position('bottom')

# y轴的刻度在左边框

plt.gca().yaxis.set_ticks_position('left')

# 绘制x、y轴网格

plt.grid(True, ls=':', color='k')

plt.savefig(os.path.join(dir_name, "{}_recall.png".format(datasets_t[ind])))

def getHomography(good_matches, img1, img2, kp1, kp2, threshold):

MIN_MATCH_COUNT = 10

if len(good_matches) > MIN_MATCH_COUNT:

src_pts = np.float32([kp1[m.queryIdx].pt for m in good_matches]).reshape(-1, 1, 2)

dst_pts = np.float32([kp2[m.trainIdx].pt for m in good_matches]).reshape(-1, 1, 2)

M, mask = cv2.findHomography(src_pts, dst_pts, cv2.RANSAC, threshold)

matchesMask = mask.ravel().tolist()

h, w = img1.shape

pts = np.float32([[0, 0], [0, h - 1], [w - 1, h - 1], [w - 1, 0]]).reshape(-1, 1, 2)

dst = cv2.perspectiveTransform(pts, M)

img2 = cv2.polylines(img2, [np.int32(dst)], True, 255, 3, cv2.LINE_AA)

return (matchesMask, img2)

else:

print("Not enough matches are found: " + str(len(good_matches)) + "/" + str(MIN_MATCH_COUNT))

matchesMask = None

return (matchesMask, None)

def pipeline(args):

mkdir_os(args.output_vis)

mkdir_os(args.output_file)

ddms = [

["SIFT_FB", "SIFT", "SIFT", "BruteForce"],

["SURF_FB", "SURF", "SURF", "BruteForce"],

["ORB_BF", "ORB", "ORB", "BruteForce-Hamming"],

["BRISK_BF", "BRISK", "BRISK", "BruteForce-Hamming"],

["AKAZE_BF", "AKAZE", "AKAZE", "BruteForce-Hamming"],

# ["SIFT_FB", "SIFT", "SIFT", "FlannBased"],

# ["SURF_FB", "SURF", "SURF", "FlannBased"],

]

datasets = ["bark", "bikes", "boat", "graf", "leuven", "trees", "ubc", "wall"]

#if scale, blur or light changes

imgXVals = [2, 3, 4, 5, 6]

#if viewpoint changes

viewpointXVals = [20, 30, 40, 50, 60]

#if jpeg compression

jpegXVals = [60, 80, 90, 95, 98]

overlapThreshold = 0.6

#indexed as results[ddm][dataset][testcase]

results = []

dataset_dir = args.input_dataset

dir = args.output_file

for i,m_val in enumerate(ddms):

name = m_val[0]

detector_name = m_val[1]

descriptor_name = m_val[2]

matcher_name = m_val[3]

print("Testing ", name)

detector = getDetector(detector_name)

descriptor = getDescriptor(descriptor_name)

'''

BruteForce (it uses L2 )

BruteForce-L1

BruteForce-Hamming

BruteForce-Hamming(2)

FlannBased

'''

matcher = cv2.DescriptorMatcher_create(matcher_name)

results.append([])

for j,n_val in enumerate(datasets):

dsname = n_val

print("\ton ", dsname, end=' ')

xvals = jpegXVals if dsname == "ubc" else viewpointXVals if dsname == "ubc" else viewpointXVals if dsname == "wall" else imgXVals

# if dsname == 'boat':

# suffix = 'pgm'

# else:

# suffix = 'ppm'

suffix = 'png'

img1 = cv2.imread("{}/{}/img1.{}".format(dataset_dir, dsname, suffix), 0)

assert (img1 is not None)

kp1 = detector.detect(img1, None)

kp1, desc1 = descriptor.compute(img1, kp1)

results[i].append([])

k = 2

while True:

print(".", end=' ')

# if dsname == 'boat':

# suffix = 'pgm'

# else:

# suffix = 'ppm'

suffix = 'png'

imgK = cv2.imread("{}/{}/img{}.{}".format(dataset_dir, dsname, k, suffix), 0)

if imgK is None:

break

kp = detector.detect(imgK, None)

kp, desc = descriptor.compute(imgK, kp)

#未经过top2+RANSAC,直接对匹配对进行计算

matches = matcher.match(desc, desc1)

#经过top2+RANSAC后的recall和precision

# matches_knn = matcher.knnMatch(desc, desc1, k=2)

# good = []

# TRESHOLD = 70

# for m in matches_knn:

# if len(m) == 2:

# if m[0].distance < TRESHOLD / 100 * m[1].distance:

# good.append(m[0])

# (matchesMask, res_img) = getHomography(good, imgK, img1, kp, kp1, 5)

# matches = []

# for match_t, mask_t in zip(good, matchesMask):

# if mask_t == 1:

# matches.append(match_t)

#H = np.loadtxt("{}/{}/H1to{}p".format(dataset_dir, dsname, k))

fs = cv2.FileStorage("{}/{}/H1to{}p.xml".format(dataset_dir, dsname, k), cv2.FILE_STORAGE_READ)

H = fs.getNode("H"+"1"+str(k)).mat()

kp1t_contours = transformKeypoints(kp1, H)

kp_contours = transformKeypoints(kp, np.eye(3))

r = proccessMatches(imgK.shape[:2], matches, kp1t_contours, kp_contours, overlapThreshold)

results[i][j].append(r)

k += 1

print("\n")

saveResults(dir, name, dsname, results[i][j], xvals)

print("\n")

plot_result(ddms, datasets, results, 5, args.output_vis)

#readme

#python -mpip install opencv-python==3.4.1.15

#python -mpip install opencv-contrib-python==3.4.1.15

if __name__ == "__main__":

parser = argparse.ArgumentParser(

description='detector_descriptor_matcher_evaluation.cpp')

parser.add_argument('--input_dataset',

help='input_dataset',

default='./cv_data/detectors_descriptors_evaluation/images_datasets')

parser.add_argument('--output_file',

help='output_file',

default='./output_file')

parser.add_argument('--output_vis',

help='output_vis',

default='./output_vis')

args = parser.parse_args()

if args.input_dataset is None or args.output_vis is None:

parser.print_help()

exit()

pipeline(args)

还有关于mAP的计算

现在最新的论文都用的是这个指标:

参考:

VLBennchmarks - VLBenchmarks

顺便通过https://github.com/ducha-aiki发现了一个特征检测器评估的框架:

https://github.com/lenck/vlb-deteval

导出patches

extract-patches · PyPI

https://github.com/ducha-aiki/extract_patches

https://github.com/ducha-aiki/extract-patches-old

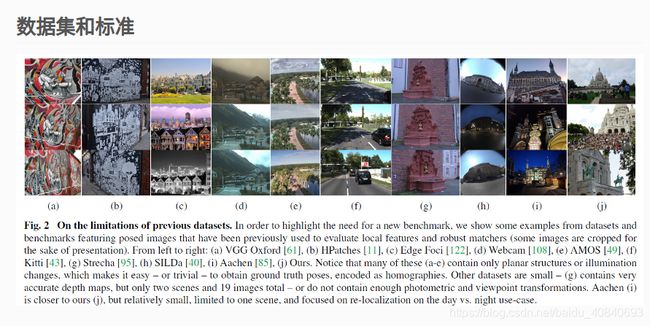

一些数据集:

http://xxx.itp.ac.cn/pdf/2003.01587v2

笔记:CVPR2020图像匹配挑战赛,新数据集+新评测方法,SOTA正瑟瑟发抖! | RealCat

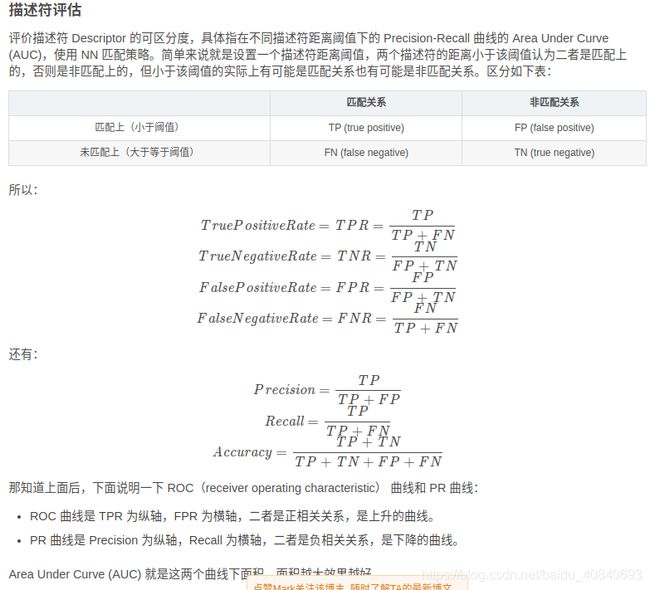

局部特征评价指标

局部特征评价指标_honyniu的专栏-CSDN博客_特征评价指标

还有参考:

https://www.robots.ox.ac.uk/~vgg/publications/2019/Lenc19/lenc19.pdf

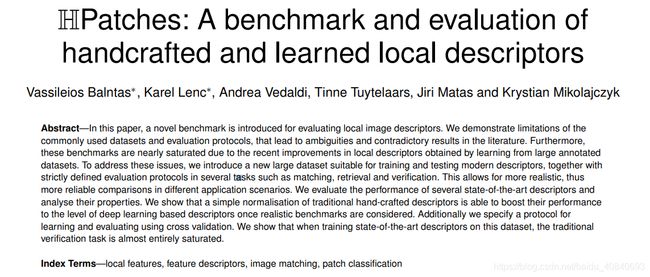

翻译:Image Processing and Computer Vision_Review:HPatches A benchmark and evaluation of handcrafted and learned local descriptors——2017.04 - Alliswell_WP - 博客园

翻译:HPatches A benchmark and evaluation of handcrafted and learned local descriptors——2017.04_Alliswell_WP-CSDN博客

指标:HPatches 数据集和评价指标_honyniu的专栏-CSDN博客

链接:

HPatches - A benchmark and evaluation of handcrafted and learned local descriptors - HPatches

https://github.com/hpatches/hpatches-benchmark

http://oa.upm.es/55889/1/TFM_GHESN_DANIEL_SFEIR_MALAVE.pdf

数据集地址

Learning Local Image Descriptors Data

Learning Local Image Descriptors Data

pytorch可以直接调用:

翻译:Liberty_NotreDame_Yosemite数据集_u013247002的博客-CSDN博客_phototour数据集

Multi-view Stereo Correspondence Dataset (MSC)

有人使用的评估代码:https://github.com/osdf/datasets/tree/master/patchdata