Reproducing ResNet in PyTorch for CIFAR-10 Classification

1. ResNet's Key Innovation

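Plain convolutional networks become hard to train when they get very deep. Gradients can vanish or explode, and even when that is controlled, training accuracy gets worse as more layers are stacked (the degradation problem). ResNet largely solves this problem.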
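A toy scalar illustration of why an identity path helps gradients flow. This is not a real network: `plain` and `residual` are hypothetical helpers that stack 20 sigmoid "layers", with and without adding the input back. In the plain chain every step multiplies the gradient by sigmoid'(x), which is at most 0.25. In the residual chain every step's local derivative is 1 + sigmoid'(x), which is at least 1.

```python
import torch


def plain(x, depth):
    # Toy "plain" network: a chain of sigmoids; each step multiplies the gradient by sigmoid'(x) <= 0.25
    for _ in range(depth):
        x = torch.sigmoid(x)
    return x


def residual(x, depth):
    # Toy "residual" network: each step adds the input back, so the local derivative is 1 + sigmoid'(x) >= 1
    for _ in range(depth):
        x = x + torch.sigmoid(x)
    return x


for f in (plain, residual):
    x = torch.tensor(0.5, requires_grad=True)
    f(x, depth=20).backward()
    # plain: at most 0.25**20 (about 9e-13); residual: at least 1
    print(f.__name__, x.grad.item())
```

Real networks also have weights and normalization layers, so this only illustrates the direction of the effect, not its size.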

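ResNet's most important innovation is the residual structure. It relies on a connection called a "shortcut connection". As the name suggests, the input takes a shortcut: it skips over one or more layers and is added directly to their output. The diagram is shown below.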

![]()

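Each block uses its input x as a reference and learns a residual function relative to it, instead of learning an unreferenced mapping. If the desired mapping is H(x), the stacked layers only have to fit F(x) = H(x) - x, and the block outputs F(x) + x. Residual functions like this are easier to optimize, which makes it possible to train much deeper networks.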
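In a residual unit the output is F(x) + x. One consequence is that if the residual branch learns to output zero, the unit is exactly the identity, so adding more units does not have to hurt. A minimal sketch, assuming a hypothetical `ToyResidual` class whose branch F is a single linear layer (the real blocks in the code below use convolutions):

```python
import torch
import torch.nn as nn


class ToyResidual(nn.Module):
    """Hypothetical toy residual unit computing F(x) + x, with F a single linear layer."""

    def __init__(self, dim):
        super().__init__()
        self.f = nn.Linear(dim, dim)  # residual branch F(x)

    def forward(self, x):
        return self.f(x) + x  # shortcut connection adds the input back


block = ToyResidual(4)
# If the residual branch outputs 0, the unit is exactly the identity mapping H(x) = x
nn.init.zeros_(block.f.weight)
nn.init.zeros_(block.f.bias)
x = torch.randn(2, 4)
print(torch.equal(block(x), x))  # True
```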

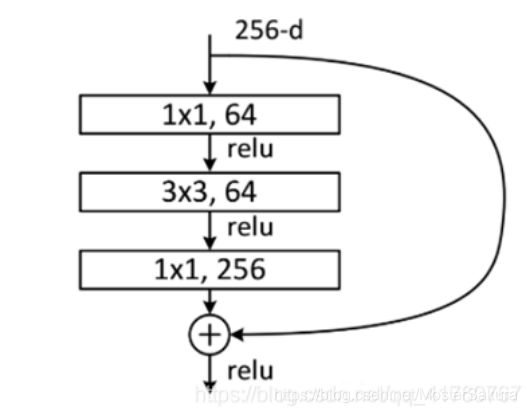

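ResNet also has a second block type, the bottleneck block, which is used in ResNet-50/101/152.

It greatly reduces the number of parameters by replacing the two 3x3 convolutions with a 1x1 + 3x3 + 1x1 stack. The first 1x1 convolution reduces the channels from 256 to 64, and the last 1x1 convolution restores them to 256. Counting only convolution weights, the bottleneck uses 1x1x256x64 + 3x3x64x64 + 1x1x64x256 = 69632 parameters. Without the bottleneck, two 3x3 convolutions on 256 channels would need 3x3x256x256x2 = 1179648 parameters, about 16.94 times as many.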
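The arithmetic can be double-checked by letting PyTorch count the weights. This sketch disables biases and leaves out BatchNorm and ReLU, so that only convolution weights are counted, matching the formula; `n_params` is a hypothetical helper.

```python
import torch.nn as nn


def n_params(module):
    # Total number of learnable values in a module
    return sum(p.numel() for p in module.parameters())


# Bottleneck: 1x1 reduce (256 -> 64), 3x3 (64 -> 64), 1x1 restore (64 -> 256)
bottleneck = nn.Sequential(
    nn.Conv2d(256, 64, kernel_size=1, bias=False),
    nn.Conv2d(64, 64, kernel_size=3, padding=1, bias=False),
    nn.Conv2d(64, 256, kernel_size=1, bias=False),
)
# Two plain 3x3 convolutions on 256 channels
plain = nn.Sequential(
    nn.Conv2d(256, 256, kernel_size=3, padding=1, bias=False),
    nn.Conv2d(256, 256, kernel_size=3, padding=1, bias=False),
)

print(n_params(bottleneck))  # 69632
print(n_params(plain))       # 1179648
print(round(n_params(plain) / n_params(bottleneck), 2))  # 16.94
```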

2. Code

(1) Data Preprocessing

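Import libraries

```python
import os
import argparse

import torch
import torch.nn as nn
import torch.optim as optim
import torchvision
import torchvision.transforms as transforms
from torch.autograd import Variable  # legacy wrapper; since PyTorch 0.4 plain tensors track gradients directly
```

Parameter settings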

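```python
parser = argparse.ArgumentParser(description='cifar10')
# type= is needed: without it, values passed on the command line arrive as strings
parser.add_argument('--lr', type=float, default=1e-2, help='learning rate')
parser.add_argument('--epoch', type=int, default=20, help='number of epochs to train')
parser.add_argument('--pre_epoch', type=int, default=0, help='epoch to start from')
# Output folder for saved results and checkpoints
parser.add_argument('--outf', default='./model/', help='folder to output images and model checkpoints')
# Whether to resume from a previously saved model (declared but not used below)
parser.add_argument('--pre_model', default=True, help='use pre-model')
args = parser.parse_args()

# Use the GPU if one is available
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
```

Image transforms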

```python
transform_train = transforms.Compose([
    # transforms.RandomCrop(32, padding=4),  # pad 4 zero pixels on each side, then randomly crop back to 32x32
    # transforms.Resize(256),
    # transforms.CenterCrop(224),  # crop from the center
    transforms.RandomHorizontalFlip(),  # flip horizontally with probability 0.5
    transforms.ToTensor(),
    # transforms.Normalize((0.4914, 0.4822, 0.4465), (0.2023, 0.1994, 0.2010)),  # CIFAR-10 statistics
    transforms.Normalize((0.485, 0.456, 0.406), (0.229, 0.224, 0.225)),  # ImageNet statistics
])

transform_test = transforms.Compose([
    transforms.ToTensor(),
    # transforms.Resize(256),
    # transforms.CenterCrop(224),  # crop from the center
    # transforms.Normalize((0.4914, 0.4822, 0.4465), (0.2023, 0.1994, 0.2010)),
    transforms.Normalize((0.485, 0.456, 0.406), (0.229, 0.224, 0.225)),
])
```

Load the data

```python
# download=True fetches CIFAR-10 into ./data/ on the first run and skips the download afterwards
trainset = torchvision.datasets.CIFAR10(root='./data/', train=True, download=True, transform=transform_train)
trainloader = torch.utils.data.DataLoader(trainset, batch_size=128, shuffle=True, num_workers=0)

testset = torchvision.datasets.CIFAR10(root='./data/', train=False, download=True, transform=transform_test)
testloader = torch.utils.data.DataLoader(testset, batch_size=128, shuffle=False, num_workers=0)

# CIFAR-10 class labels
classes = ('plane', 'car', 'bird', 'cat', 'deer', 'dog', 'frog', 'horse', 'ship', 'truck')
```

Define the model

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


# Residual block (the "basic block" used by ResNet-18/34)
class ResidualBlock(nn.Module):
    def __init__(self, inchannel, outchannel, stride):
        super(ResidualBlock, self).__init__()
        # Residual branch F(x): two 3x3 convolutions
        self.left = nn.Sequential(
            nn.Conv2d(inchannel, outchannel, kernel_size=3, stride=stride, padding=1, bias=False),
            nn.BatchNorm2d(outchannel),
            nn.ReLU(inplace=True),
            nn.Conv2d(outchannel, outchannel, kernel_size=3, stride=1, padding=1, bias=False),
            nn.BatchNorm2d(outchannel)
        )
        # Identity shortcut by default; a 1x1 convolution projects x when
        # the spatial size or the number of channels changes
        self.shortcut = nn.Sequential()
        if stride != 1 or inchannel != outchannel:
            self.shortcut = nn.Sequential(
                nn.Conv2d(inchannel, outchannel, kernel_size=1, stride=stride, bias=False),
                nn.BatchNorm2d(outchannel)
            )

    def forward(self, x):
        out = self.left(x)
        out += self.shortcut(x)  # F(x) + x
        out = F.relu(out)
        return out


# ResNet body
class ResNet(nn.Module):
    def __init__(self, ResidualBlock, num_classes=10):
        super(ResNet, self).__init__()
        self.inchannel = 64
        # 3x3 stem, suited to 32x32 CIFAR images
        self.conv1 = nn.Sequential(
            nn.Conv2d(3, 64, kernel_size=3, stride=1, padding=1, bias=False),
            nn.BatchNorm2d(64),
            nn.ReLU(),
        )
        self.layer1 = self.make_layer(ResidualBlock, 64, 2, stride=1)   # 32x32
        self.layer2 = self.make_layer(ResidualBlock, 128, 2, stride=2)  # 16x16
        self.layer3 = self.make_layer(ResidualBlock, 256, 2, stride=2)  # 8x8
        self.layer4 = self.make_layer(ResidualBlock, 512, 2, stride=2)  # 4x4
        self.fc = nn.Linear(512, num_classes)

    def make_layer(self, block, channels, num_blocks, stride):
        # Only the first block of a stage downsamples, e.g. stride=2 gives strides=[2, 1]
        strides = [stride] + [1] * (num_blocks - 1)
        layers = []
        for stride in strides:
            layers.append(block(self.inchannel, channels, stride))
            self.inchannel = channels
        return nn.Sequential(*layers)

    def forward(self, x):
        out = self.conv1(x)
        out = self.layer1(out)
        out = self.layer2(out)
        out = self.layer3(out)
        out = self.layer4(out)
        out = F.avg_pool2d(out, 4)  # 4x4 feature map -> 1x1
        out = out.view(out.size(0), -1)
        out = self.fc(out)
        return out


# ResNet-18: stem + 4 stages x 2 blocks x 2 convolutions + fc = 18 weighted layers
def ResNet18():
    return ResNet(ResidualBlock)


if __name__ == '__main__':
    # Quick shape check
    model = ResNet18()
    print(model)
    input = torch.randn(1, 3, 32, 32)
    out = model(input)
    print(out.shape)  # torch.Size([1, 10])
```

Define the loss function and optimizer

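```python
# Build the network and move it to the device chosen above
net = ResNet18().to(device)

criterion = nn.CrossEntropyLoss()
optimizer = optim.SGD(net.parameters(), lr=args.lr, momentum=0.9, weight_decay=5e-4)
```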
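Each training step in the loop that follows calls `optimizer.zero_grad()` before `loss.backward()`. The reason is that PyTorch accumulates gradients into each parameter's `.grad` across `backward()` calls instead of overwriting them. A tiny standalone check; the tensors `w` and `x` are purely illustrative:

```python
import torch

# Illustrative tensors only: loss = w . x, so d(loss)/dw = x
w = torch.tensor([1.0, 2.0], requires_grad=True)
x = torch.tensor([3.0, 4.0])

(w * x).sum().backward()
print(w.grad)  # tensor([3., 4.])

(w * x).sum().backward()  # no reset: the new gradient is added to the old one
print(w.grad)  # tensor([6., 8.])

# Manual reset; optimizer.zero_grad() does this for every parameter
# (recent PyTorch versions set .grad to None instead of zeroing it)
w.grad.zero_()
(w * x).sum().backward()
print(w.grad)  # tensor([3., 4.])
```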

Training

```python
best_test_acc = 0
pre_epoch = args.pre_epoch

if __name__ == "__main__":
    os.makedirs(args.outf, exist_ok=True)
    # writer = SummaryWriter(log_dir='./log')
    print("Start Training, ResNet-18...")
    with open("acc.txt", "w") as acc_f:
        with open("log.txt", "w") as log_f:
            for epoch in range(pre_epoch, args.epoch):
                print('\nEpoch: %d' % (epoch + 1))
                net.train()  # training mode: BatchNorm uses batch statistics
                sum_loss = 0.0  # running loss
                correct = 0     # correctly classified samples
                total = 0
                length = len(trainloader)  # batches per epoch
                for i, data in enumerate(trainloader):
                    # Prepare the data and move it to the same device as the model
                    inputs, labels = data
                    inputs, labels = inputs.to(device), labels.to(device)
                    # PyTorch accumulates gradients across backward() calls, so clear them first
                    optimizer.zero_grad()

                    # forward + backward + optimize
                    outputs = net(inputs)              # forward pass: class scores
                    loss = criterion(outputs, labels)  # compute the loss
                    loss.backward()                    # backpropagate to get gradients
                    optimizer.step()                   # update the parameters

                    # Report running loss and accuracy after every batch
                    sum_loss += loss.item()
                    _, predicted = torch.max(outputs, 1)  # index of the highest score in each row
                    total += labels.size(0)
                    correct += predicted.eq(labels).sum().item()
                    msg = '[epoch:%d, iter:%d] Loss: %.03f | Acc: %.3f%% ' % (
                        epoch + 1, i + 1 + epoch * length, sum_loss / (i + 1), 100. * correct / total)
                    print(msg)
                    # Write to the log
                    log_f.write(msg + '\n')
                    log_f.flush()

                # Measure test accuracy after each training epoch
                print("Waiting for test...")
                net.eval()  # evaluation mode: BatchNorm uses running statistics
                # Disable gradient tracking: results have requires_grad=False even if inputs require grad
                with torch.no_grad():
                    correct = 0
                    total = 0
                    for images, labels in testloader:
                        images, labels = images.to(device), labels.to(device)
                        outputs = net(images)
                        _, predicted = torch.max(outputs, 1)  # highest-scoring class
                        total += labels.size(0)
                        correct += (predicted == labels).sum().item()
                acc = 100. * correct / total
                print('Test accuracy: %.3f%%' % acc)
                # writer.add_scalar('accuracy/test', acc, epoch)

                # Save this epoch's model and write the test result to a file
                print('Saving model...')
                torch.save(net.state_dict(), os.path.join(args.outf, 'net_%03d.pth' % (epoch + 1)))
                acc_f.write("epoch = %03d, accuracy = %.3f%%\n" % (epoch + 1, acc))
                acc_f.flush()

                # Keep the checkpoint with the best test accuracy
                if acc > best_test_acc:
                    print('Saving Best Model...')
                    state = {
                        'state_dict': net.state_dict(),
                        'acc': acc,
                        'epoch': epoch + 1,
                    }
                    os.makedirs('checkpoint', exist_ok=True)  # create the folder if missing
                    torch.save(state, './checkpoint/ckpt.t7')
                    best_test_acc = acc

            # Training finished
            print("Training Finished, Total Epoch = %d" % args.epoch)
```

3. Training Results

After training, ResNet-18 reached an accuracy of 0.76 on the test set, and ResNet-50 reached 0.77.
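The post reports a ResNet-50 result but does not show the code for it. The sketch below is a minimal, hedged version built from the bottleneck block described in section 1. It assumes the same CIFAR-style 3x3 stem as the ResNet-18 above and the (3, 4, 6, 3) block counts from the ResNet paper. The class names `Bottleneck` and `ResNetBottleneck` are hypothetical, and this is not necessarily what produced the 0.77 figure.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class Bottleneck(nn.Module):
    """Hypothetical bottleneck block: 1x1 (reduce) -> 3x3 -> 1x1 (expand)."""
    expansion = 4  # output channels = channels * 4

    def __init__(self, inchannel, channels, stride):
        super().__init__()
        outchannel = channels * self.expansion
        self.left = nn.Sequential(
            nn.Conv2d(inchannel, channels, kernel_size=1, bias=False),  # reduce channels
            nn.BatchNorm2d(channels),
            nn.ReLU(inplace=True),
            nn.Conv2d(channels, channels, kernel_size=3, stride=stride, padding=1, bias=False),
            nn.BatchNorm2d(channels),
            nn.ReLU(inplace=True),
            nn.Conv2d(channels, outchannel, kernel_size=1, bias=False),  # restore channels
            nn.BatchNorm2d(outchannel),
        )
        self.shortcut = nn.Sequential()  # identity
        if stride != 1 or inchannel != outchannel:
            # 1x1 projection when the shape changes
            self.shortcut = nn.Sequential(
                nn.Conv2d(inchannel, outchannel, kernel_size=1, stride=stride, bias=False),
                nn.BatchNorm2d(outchannel),
            )

    def forward(self, x):
        return F.relu(self.left(x) + self.shortcut(x))


class ResNetBottleneck(nn.Module):
    """Hypothetical CIFAR-style ResNet of Bottleneck blocks; (3, 4, 6, 3) gives ResNet-50."""

    def __init__(self, num_blocks=(3, 4, 6, 3), num_classes=10):
        super().__init__()
        self.inchannel = 64
        self.conv1 = nn.Sequential(  # same 3x3 stem as the ResNet-18 above
            nn.Conv2d(3, 64, kernel_size=3, stride=1, padding=1, bias=False),
            nn.BatchNorm2d(64),
            nn.ReLU(inplace=True),
        )
        self.layer1 = self.make_layer(64, num_blocks[0], stride=1)
        self.layer2 = self.make_layer(128, num_blocks[1], stride=2)
        self.layer3 = self.make_layer(256, num_blocks[2], stride=2)
        self.layer4 = self.make_layer(512, num_blocks[3], stride=2)
        self.fc = nn.Linear(512 * Bottleneck.expansion, num_classes)

    def make_layer(self, channels, num_blocks, stride):
        layers = []
        for s in [stride] + [1] * (num_blocks - 1):  # only the first block downsamples
            layers.append(Bottleneck(self.inchannel, channels, s))
            self.inchannel = channels * Bottleneck.expansion
        return nn.Sequential(*layers)

    def forward(self, x):
        out = self.conv1(x)
        out = self.layer4(self.layer3(self.layer2(self.layer1(out))))
        out = F.avg_pool2d(out, 4)  # 4x4 -> 1x1 for 32x32 inputs
        out = out.view(out.size(0), -1)
        return self.fc(out)


net50 = ResNetBottleneck()
print(net50(torch.randn(2, 3, 32, 32)).shape)  # torch.Size([2, 10])
```

Counting weighted layers: 1 stem convolution + 16 blocks x 3 convolutions + 1 fully connected layer = 50. To train it with the script above, `net = ResNetBottleneck().to(device)` would replace the ResNet-18 construction.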