One-shot learning: Siamese Networks

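Problem description

Some classes have only a single image.

(A neural network predicts a class poorly when it has seen only one image of that class.)

(A training set like this cannot produce a robust neural network.)

(Every time a new class arrives, the whole network has to be retrained.)

Solution:

Siamese Network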

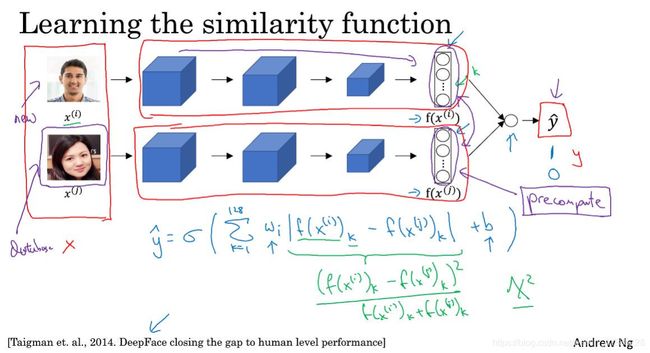

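Andrew Ng's deep learning videos present two kinds of Siamese Network, one from FaceNet and one from DeepFace.

The basic Siamese structure is a single trained neural network that takes two images as input and produces two n-dimensional vectors.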

FaceNet:

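FaceNet roughly works as follows: choose a triplet of Anchor, Positive, and Negative images and pass all three through the same neural network (whose last layer is a Dense layer producing a 128-dimensional vector), which yields three 128-dimensional vectors. Compute the Euclidean distance between the Anchor and Positive outputs and between the Anchor and Negative outputs, then train the network until the two distances satisfy the triplet inequality: the Anchor-Positive distance plus a margin should be no larger than the Anchor-Negative distance. The loss used is the Triplet Loss.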
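The objective described above can be made concrete with a small sketch. It assumes the usual formulation of the triplet loss, max(d(A, P) - d(A, N) + margin, 0) with squared Euclidean distances; the margin of 0.2, the toy 3-dimensional vectors, and the function names are illustrative assumptions rather than anything taken from this post.

```python
def squared_distance(u, v):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((a - b) ** 2 for a, b in zip(u, v))


def triplet_loss(anchor, positive, negative, margin=0.2):
    """max(d(A, P) - d(A, N) + margin, 0) for one triplet of embeddings."""
    d_ap = squared_distance(anchor, positive)
    d_an = squared_distance(anchor, negative)
    return max(d_ap - d_an + margin, 0.0)


# Toy 3-dimensional embeddings (the post uses 128-dimensional ones).
anchor = [0.0, 0.0, 0.0]
positive = [0.1, 0.0, 0.0]
easy_negative = [1.0, 1.0, 0.0]  # far from the anchor: inequality already holds
hard_negative = [0.2, 0.0, 0.0]  # close to the anchor: inequality is violated

easy_loss = triplet_loss(anchor, positive, easy_negative)
hard_loss = triplet_loss(anchor, positive, hard_negative)
```

A triplet that already satisfies the inequality contributes zero loss, so only violating triplets produce a gradient.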

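When choosing Anchor, Positive, and Negative, prefer Negatives that are close to the Positive, so that the network learns faster. If triplets are drawn at random, most of them already satisfy the inequality and the network learns nothing from them.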
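One simple way to realise this selection is to pick, for each Anchor, the closest sample from a different class. The sketch below illustrates that idea only; the helper name `hardest_negative` and the toy embeddings are hypothetical, and this is not claimed to be the sampling scheme FaceNet itself uses.

```python
def squared_distance(u, v):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((a - b) ** 2 for a, b in zip(u, v))


def hardest_negative(anchor_idx, embeddings, labels):
    """Index of the closest sample whose label differs from the anchor's."""
    candidates = [i for i, lab in enumerate(labels) if lab != labels[anchor_idx]]
    return min(
        candidates,
        key=lambda i: squared_distance(embeddings[anchor_idx], embeddings[i]),
    )


# Toy 2-dimensional embeddings for two classes, "a" and "b".
embeddings = [[0.0, 0.0], [0.1, 0.0], [0.3, 0.0], [5.0, 5.0]]
labels = ["a", "a", "b", "b"]

# For anchor 0 (class "a"), sample 2 is a much harder negative than sample 3.
neg = hardest_negative(0, embeddings, labels)
```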

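PS: The Keras Siamese implementations I found on GitHub all follow the DeepFace approach. In my view DeepFace lends itself better to a simple end-to-end training setup, so only DeepFace is implemented here.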

DeepFace:

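DeepFace differs from FaceNet in its final stage: a Concatenate layer joins the two images' final n-dimensional vectors, and one more Dense layer maps the result to a 0 or 1 label that indicates whether the two images show the same person.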
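The final stage described above can be sketched in a few lines of plain Python. This is only an illustration of "concatenate, then one Dense unit with a sigmoid"; the 2-dimensional embeddings, the hand-picked weights, and the function names are assumptions made for the example, not trained values or a real library API.

```python
import math


def dense_sigmoid(features, weights, bias):
    """Single-unit dense layer followed by a sigmoid."""
    z = sum(w * x for w, x in zip(weights, features)) + bias
    return 1.0 / (1.0 + math.exp(-z))


def same_person_probability(emb_left, emb_right, weights, bias):
    """Concatenate two embeddings and map them to a probability in (0, 1)."""
    combined = list(emb_left) + list(emb_right)  # the Concatenate step
    return dense_sigmoid(combined, weights, bias)


# Hypothetical embeddings and weights, purely for illustration.
emb_a, emb_b = [0.5, -0.2], [0.4, -0.1]
weights, bias = [1.0, 0.0, 1.0, 0.0], 0.0

prob = same_person_probability(emb_a, emb_b, weights, bias)
is_same = prob >= 0.5  # threshold the probability to get the 0/1 decision
```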

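Implementation:

This mainly follows https://github.com/sorenbouma/keras-oneshot, but the way that repository chooses Anchor, Positive, and Negative is rather odd; it appears to sample them randomly in some fashion. My code uses the simplest approach, exhaustive enumeration. The referenced code is actually meant to implement the Koch et al. paper on Siamese networks for one-shot learning, which differs somewhat from DeepFace: it only needs pairs (any combination of two images) and the corresponding 0 or 1 labels, which are then fed to the network for training.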

from keras.layers import Input, Conv2D, Lambda, Dense, Flatten, MaxPooling2D
from keras.models import Model, Sequential
from keras.regularizers import l2
from keras import backend as K
from keras.optimizers import SGD, Adam
from keras.losses import binary_crossentropy
import numpy.random as rng
import numpy as np
import os
import pickle
import matplotlib.pyplot as plt
import seaborn as sns
from sklearn.utils import shuffle
%matplotlib inline

Load the data (there are 5 classes with 20 images each; the class sizes could differ, but equal sizes are used here for convenience)

import cv2

# Root folder with one sub-folder per class; each sub-folder holds 20 images
data_root = 'C:\\Users\\18367\\Desktop\\siamese_test_0522\\'
class_names = ['blur', 'good', 'other', 'over', 'under']

def load_class(class_dir, num_images=20):
    """Load every image in class_dir as a 224x224 RGB array."""
    data = np.zeros((1, num_images, 224, 224, 3))
    for ind, fn in enumerate(os.listdir(class_dir)):
        img = cv2.imread(os.path.join(class_dir, fn))
        img = cv2.resize(img, (224, 224), interpolation=cv2.INTER_LINEAR)
        # OpenCV reads images as BGR; convert to RGB
        data[0, ind, :, :, :] = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)
    return data

# X has shape (num_classes, num_classmembers, 224, 224, 3)
X = np.concatenate([load_class(os.path.join(data_root, name)) for name in class_names], axis=0)

Build the network inputs and labels, [support_set_left, support_set_right] and labels, by exhaustive enumeration

num_classes, num_classmembers, _, _, _ = X.shape
num_samples = num_classes * num_classmembers
# Pairs (i, j) with i <= j, self-pairs included: n(n+1)/2 = 5050 for n = 100
total_number = num_samples * (num_samples + 1) // 2

# Flatten X to (num_samples, 224, 224, 3); sample k belongs to class k // num_classmembers
expanded_X = X.reshape(num_samples, 224, 224, 3)

support_set_left = np.zeros((total_number, 224, 224, 3))
support_set_right = np.zeros((total_number, 224, 224, 3))
labels = np.zeros((total_number,))

ind = 0
for i in range(num_samples):
    for j in range(i, num_samples):
        support_set_left[ind, :, :, :] = expanded_X[i, :, :, :]
        support_set_right[ind, :, :, :] = expanded_X[j, :, :, :]
        # Label 1 when both images come from the same class, otherwise 0
        if i // num_classmembers == j // num_classmembers:
            labels[ind] = 1
        ind += 1
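The enumeration above can be checked independently of the image data by working on indices alone. The following sketch uses `itertools.combinations_with_replacement` to list every pair (i, j) with i <= j and derives the label from the class block each flat index falls in; the variable names `pairs` and `pair_labels` are introduced here only for illustration.

```python
from itertools import combinations_with_replacement

num_classes, num_classmembers = 5, 20
num_samples = num_classes * num_classmembers

# All index pairs (i, j) with i <= j, self-pairs included, in row-major order.
pairs = list(combinations_with_replacement(range(num_samples), 2))

# A pair is positive when both flat indices fall in the same class block.
pair_labels = [int(i // num_classmembers == j // num_classmembers) for i, j in pairs]

num_pairs = len(pairs)          # n(n+1)/2 pairs in total
num_positive = sum(pair_labels)  # same-class pairs
```

For 5 classes of 20 images this gives 5050 pairs, of which 1050 are positive, so roughly four out of five training pairs carry the label 0.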

The network code from https://github.com/sorenbouma/keras-oneshot

# Keras calls a custom initializer as fn(shape, dtype=...), so accept dtype instead of name
def W_init(shape, dtype=None):
    """Initialize weights as in paper"""
    values = rng.normal(loc=0, scale=1e-2, size=shape)
    return K.variable(values, dtype=dtype)

#//TODO: figure out how to initialize layer biases in keras.
def b_init(shape, dtype=None):
    """Initialize bias as in paper"""
    values = rng.normal(loc=0.5, scale=1e-2, size=shape)
    return K.variable(values, dtype=dtype)

input_shape = (224, 224, 3)
left_input = Input(input_shape)
right_input = Input(input_shape)

# Shared encoder: both inputs go through the same convnet and therefore the same weights
convnet = Sequential()
convnet.add(Conv2D(64, (10, 10), activation='relu', input_shape=input_shape, kernel_initializer=W_init, kernel_regularizer=l2(2e-4)))
convnet.add(MaxPooling2D())
convnet.add(Conv2D(128, (7, 7), activation='relu', kernel_initializer=W_init, kernel_regularizer=l2(2e-4), bias_initializer=b_init))
convnet.add(MaxPooling2D())
convnet.add(Conv2D(128, (4, 4), activation='relu', kernel_initializer=W_init, kernel_regularizer=l2(2e-4), bias_initializer=b_init))
convnet.add(MaxPooling2D())
convnet.add(Conv2D(256, (4, 4), activation='relu', kernel_initializer=W_init, kernel_regularizer=l2(2e-4), bias_initializer=b_init))
convnet.add(Flatten())
convnet.add(Dense(128, activation="sigmoid", kernel_initializer=W_init, kernel_regularizer=l2(1e-3), bias_initializer=b_init, name='output_layer'))

# Encode both images into 128-dimensional vectors
encoded_l = convnet(left_input)
encoded_r = convnet(right_input)

# Element-wise absolute difference between the two encodings
L1_layer = Lambda(lambda tensors: K.abs(tensors[0] - tensors[1]))
L1_distance = L1_layer([encoded_l, encoded_r])

# A single sigmoid unit turns the difference vector into a same/different score
prediction = Dense(1, activation='sigmoid', bias_initializer=b_init)(L1_distance)
siamese_net = Model(inputs=[left_input, right_input], outputs=prediction)

optimizer = Adam(0.00006)
siamese_net.compile(loss="binary_crossentropy", optimizer=optimizer)

Train the network

siamese_net.fit(x=[support_set_left,support_set_right], y=labels, batch_size=16, epochs=100, shuffle=True)

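Test results. Every image to be predicted has to be paired with each stored image and fed through the network, which is quite redundant unless someone hand-picks the harder-to-judge stored images to pair with the query. FaceNet and DeepFace as described earlier can simplify this, because the n-dimensional vectors of the stored images can be saved and only the final Dense layer or the inequality needs to be evaluated. With the Keras setup used here, I could not extract that layer from the https://github.com/sorenbouma/keras-oneshot network code: it is invoked as convnet(left_input), which has no name, so I could not retrieve it with Keras's get_layer.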

def siamese_test(test_dir):
    # Load the query image once and repeat it so it can be paired with every stored image
    img = cv2.cvtColor(cv2.resize(cv2.imread(test_dir), (224, 224), interpolation=cv2.INTER_LINEAR), cv2.COLOR_BGR2RGB)
    data_test = np.zeros((100, 224, 224, 3))
    data_test[:] = img
    output_array = siamese_net.predict([data_test, expanded_X], batch_size=16)
    # result[i] counts how many of the 20 stored images of class i are predicted to match
    result = np.zeros((5,))
    for i in np.arange(5):
        count = 0
        for j in np.arange(20):
            # Image j of class i sits at index i*20+j in expanded_X
            if output_array[i*20+j] >= 0.5:
                count += 1
        result[i] = count
    return result, output_array

At test time, the pair with the highest prediction determines which class the query image belongs to.
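The decision rule above, combined with the idea of storing the vectors of the known images, can be sketched without any framework. The sketch assumes the embeddings have already been computed once per stored image; in Keras, one possible way to get them is to call the shared `convnet` on its own, since a Sequential model can be used directly for prediction. The names `classify` and `toy_score` and all numbers below are hypothetical stand-ins, with `toy_score` playing the role of the final Dense layer.

```python
def l1_features(u, v):
    """Element-wise absolute difference, the input to the final dense layer."""
    return [abs(a - b) for a, b in zip(u, v)]


def classify(query_emb, gallery_embs, gallery_labels, score_fn):
    """Return the label of the stored item whose pair score is highest."""
    scores = [score_fn(l1_features(query_emb, g)) for g in gallery_embs]
    best = max(range(len(scores)), key=scores.__getitem__)
    return gallery_labels[best], scores


# Hypothetical cached embeddings (computed once per stored image).
gallery_embs = [[0.0, 0.0], [1.0, 1.0], [0.9, 1.1]]
gallery_labels = ["blur", "good", "good"]


def toy_score(feats):
    """Stand-in scorer: a smaller L1 difference gives a higher similarity."""
    return 1.0 / (1.0 + sum(feats))


label, scores = classify([0.95, 1.0], gallery_embs, gallery_labels, toy_score)
```

With cached embeddings, each query needs one pass through the encoder plus a cheap comparison per stored image, instead of a full forward pass for every pair.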