python-新闻文本分类详细案例-(数据集见文末链接)

文章目录

- 分析思路

- 所用数据集

- 一、导入相关包

- 二、数据分析

-

- 1.读取数据

- 2. jieba分词并去除停用词

- 3. TF-IDF

- 4. 网格搜索寻最优模型及最优参数

- 5. 预测并评估预测效果

- 总结

分析思路

新闻文本数据包含四类新闻,分别用1,2,3,4 表示。

(1)读取数据;

(2)利用 jieba 对文本进行分词并去除停用词;

(3)运用 TF-IDF 将文本转换为机器学习分类算法能够识别的数字特征,

(4)通过网格搜索 在 LogisticRegression, MultinomialNB,RandomForestRegressor 三种分类算法 中寻找最优算法以及最优参数;

(5)训练最优模型进行预测并评估预测效果。

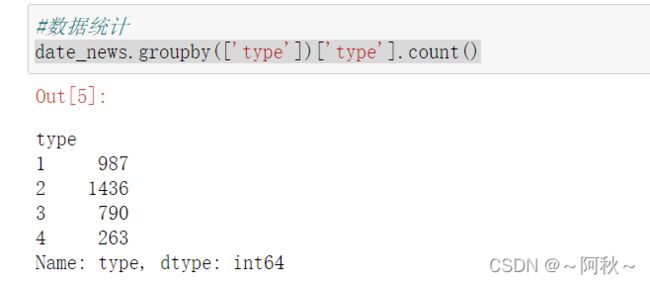

所用数据集

所用新闻数据集包含 train 训练集, test 测试集 数据两个文件夹,两个文件夹下各包含 四个子文件夹,代表1,2,3,4 四类新闻的文件,每条新闻的txt 文件存放在此四类文件夹中。如下图所示:

train 和 test 各类新闻总数量:

一、导入相关包

import pandas as pd

import numpy as np

import jieba #jieba分词

import os

from sklearn.feature_extraction.text import TfidfVectorizer #TF-IDF

from sklearn.model_selection import GridSearchCV #网格搜索

from sklearn.linear_model import LogisticRegression

from sklearn.naive_bayes import MultinomialNB

from sklearn.ensemble import RandomForestRegressor

from sklearn.metrics import confusion_matrix,classification_report #预测效果的评估

import matplotlib.pyplot as plt

plt.rcParams['font.sans-serif']=['SimHei'] #这两行用于plt图像显示中文,否则plt无法显示中文

plt.rcParams['axes.unicode_minus']=False

二、数据分析

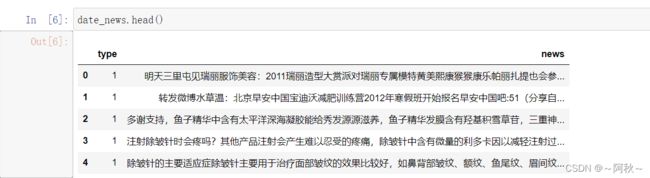

1.读取数据

代码如下:

# 数据读取函数

def read_file(train_test,news_type): #train_test:指定是trian或test文件夹,news_type:新闻类型 1,2,3,4

files_path = f'text//{train_test}//{news_type}'

files = os.listdir(files_path) #获取某一类新闻的文件名列表:['1.txt','2.txt',...]

file_news = []

for file in files:

file_path = files_path + '//'+ file

with open(file_path,'r') as f: #读取新闻

news = f.readlines()

file_news.append(news[0].strip())

df = pd.DataFrame({'type':[news_type for i in range(len(file_news))],'news':file_news})

return df #返回 新闻类型 、新闻内容构成的Dataframe

#获取训练集数据量

train = read_file('train',1).append(read_file('train',2),ignore_index = True).append(read_file('train',3),ignore_index = True).append(read_file('train',4),ignore_index = True)

train_size = len(train)

#数据读取,去重,重置索引 date_news: 所有新闻数据

date_news = read_file('train',1).append(read_file('train',2),ignore_index = True).append(read_file('train',3),ignore_index = True).append(read_file('train',4),ignore_index = True).append(read_file('test',1)).append(read_file('test',2),ignore_index = True).append(read_file('test',3),ignore_index = True).append(read_file('test',4),ignore_index = True)

date_news = date_news.drop_duplicates()

date_news = date_news.reset_index(drop = True) #去重之后,重置索引,否则后面检索数据时易出错

#数据统计

date_news.groupby(['type'])['type'].count()

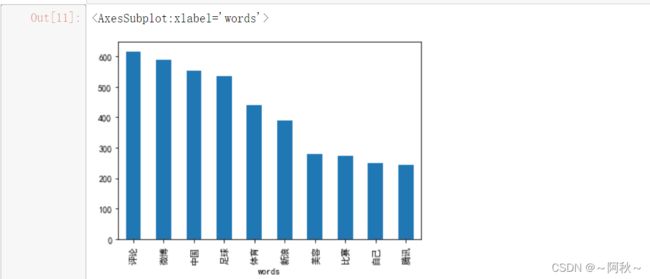

2. jieba分词并去除停用词

代码如下:

#停用词读取

with open('stopwords.txt','r',encoding='utf-8') as f:

stopwords = f.readlines()

stopwords = [i.strip() for i in stopwords]

#结巴分词并去除停用词

def jieba_stop(each):

result = []

jieba_line = jieba.lcut(each) #jieb.cut()返回迭代器,节省空间;jieba.lcut() 直接返回分词后的列表。三种分词模式:默认精确模式,用于文本分析

for l in jieba_line:

if (l not in stopwords) and (l != ''): # 去除停用词以及空格

result.append(l.strip())

return result

date_news.news = date_news.news.apply(jieba_stop) #注意 apply 的用法

date_news.news = date_news.news.apply(lambda x: ','.join([i for i in x if i !=''])) #反复去除空字符

all_words = [i.strip() for line in date_news.news for i in line.split(',')]

all_df = pd.DataFrame({'words':all_words})

all_df.groupby(['words'])['words'].count().sort_values(ascending = False)[:10].plot.bar() #表示停用词去除完全,分词效果尚可

3. TF-IDF

#TF-IDF

tfidf = TfidfVectorizer()

news_tfidf = tfidf.fit_transform(date_news.news)

4. 网格搜索寻最优模型及最优参数

在这里只用了 LogisticRegression,MultinomialNB。

#数据集划分

y = date_news.type

X_train = news_tfidf[:train_size,:] #训练集X

X_test = news_tfidf[train_size:,:] #测试集X

y_train = y.iloc[:train_size,]

y_test = y.iloc[train_size:,]

lr = LogisticRegression() #模型拟合

MNB = MultinomialNB()

lr_params = {'C':[0.1,0.5,1,10],'random_state':[0]} #参数

MNB_params = {'alpha':[0.1,0.5,1,10]}

#rf = RandomForestRegressor()

#rf_params = {'n_estimators':[500,1000],'max_depth':[5,10],'random_state':[0]}

#网格搜索

lr_grid = GridSearchCV(lr,lr_params,n_jobs=-1,cv=10,verbose=1,scoring='neg_mean_squared_error')

lr_grid.fit(X_train,y_train)

MNB_grid = GridSearchCV(MNB,MNB_params,n_jobs=-1,cv=10,verbose=1,scoring='neg_mean_squared_error')

MNB_grid.fit(X_train,y_train)

# rf_grid = GridSearchCV(rf,rf_params,n_jobs=-1,cv=10,verbose=1,scoring='neg_mean_squared_error')

# rf_grid.fit(X_train,y_train)

print('LR best params: '+ str(lr_grid.best_params_)) #最优参数

print('LR best score: '+ str(lr_grid.best_score_)) #最优分数

#print('RF best params: '+ str(rf_grid.best_params_))

#print('RF best score: '+ str(rf_grid.best_score_))

print('MNB best params: '+ str(MNB_grid.best_params_))

print('MNB best score: '+ str(MNB_grid.best_score_))

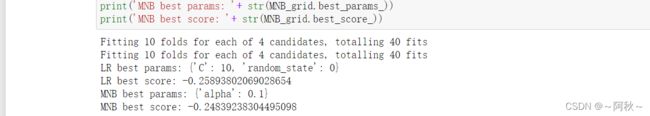

结果显示 MultinomialNB(alpha = 0.1) 最优 :

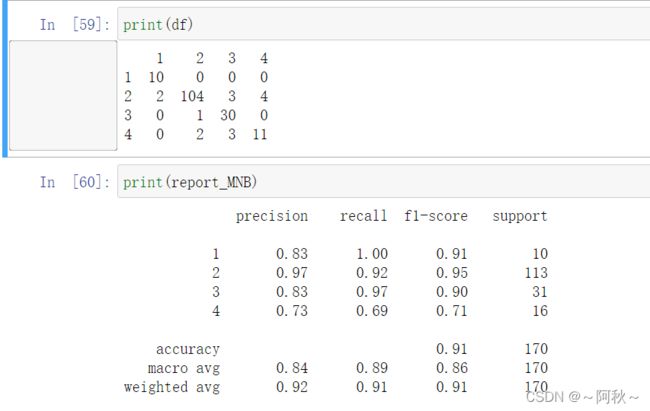

5. 预测并评估预测效果

y_predict = MNB_grid.predict(X_test) #预测值

mat = confusion_matrix(y_test,y_predict) #混淆矩阵

df = pd.DataFrame(mat,columns=[1,2,3,4],index=[1,2,3,4])

report_MNB = classification_report(y_test,y_predict)

总结

预测结果显示:模型对 1、2、3 类的 预测效果较好而对第四类预测效果不佳,通过分析发现,各类的样本量存在较大差异,这可能导致预测效果的偏差。因此,在做文本分类任务,应尽量保证各类型文本数量相近(采用下采样或者过采样),并且考虑利用模型融合提高预测效果。

数据集链接:https://pan.baidu.com/s/1580VyM0LqxsAjxYsXlhLuA

提取码:sky5